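Preface

As AI technology keeps advancing, AI Agents have become a hot topic in recent years. An AI Agent is an intelligent entity that can complete tasks autonomously, typically by interacting with the user.

AI Agents now appear in more and more scenarios, such as intelligent customer service, intelligent assistants, and recommendation. However, there has been no unified standard for how agents communicate. As a result, major technology companies have started publishing their own specifications: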

In November 2024, Anthropic, the company behind Claude, open-sourced MCP (Model Context Protocol), which defines how an Agent/LLM calls external tools.

In April 2025, Google released the A2A (Agent2Agent) protocol, which defines how agents communicate with each other.

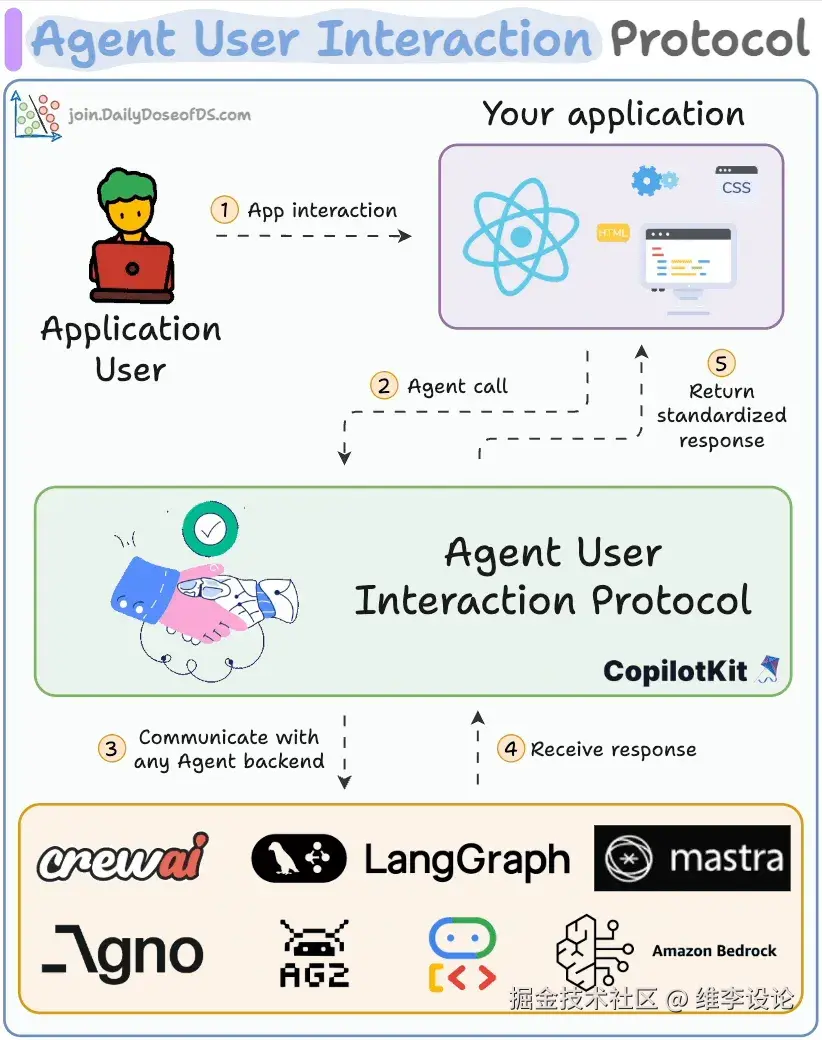

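In May 2025, the CopilotKit team released the AG-UI (Agent-User Interaction) protocol to fill a remaining gap among agent protocols: it defines how an agent communicates with a front-end application.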

Architecture

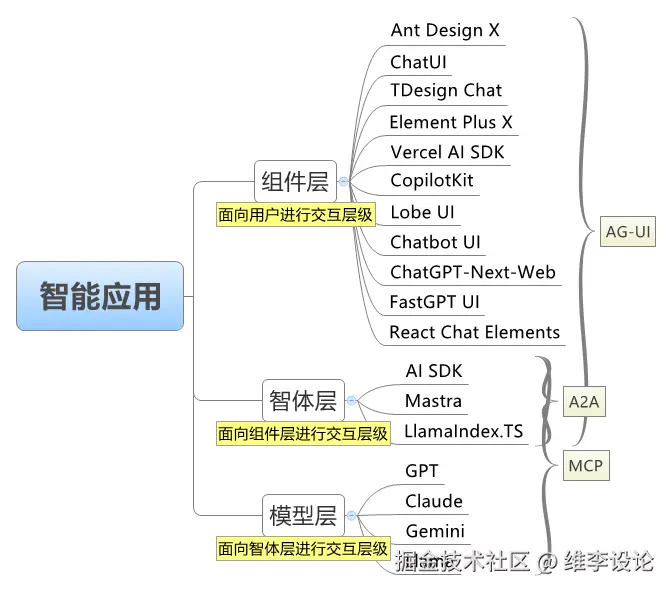

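From a front-end point of view, an intelligent application can be divided into three layers: the component layer, the agent layer, and the model layer.

The component layer provides the basic UI components, such as buttons, input boxes, and dialogs. Common AI component libraries in the industry include Ant Design X, Vercel AI SDK, and CopilotKit.

The agent layer handles user requests, for example by calling external tools or performing reasoning. Common frameworks include AI SDK, Mastra, and LlamaIndex TS.

The model layer provides model services, from large models to small ones. Common models include the GPT, Claude, Gemini, and Llama families.

The component layer and the agent layer talk to each other through AG-UI, agents inside the agent layer talk to each other through A2A, and the agent layer can talk to the model layer through MCP.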

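Practice

As the layered model above shows, AG-UI targets the interaction between the component layer and the agent layer. When the front-end application consists of a single component, this can equally be seen as the interaction between the application and the agent layer.

Front-end applications today use many rendering modes (CSR, SSR, ISR, SSG, ESR, and so on), so an AG-UI implementation is not confined to a server-side project. This article only covers the common case of a purely client-side rendered (CSR) application calling a back-end service directly. For hybrid rendering setups, see the Next.js-based example in the official AG-UI documentation.

Note: every AG-UI implementation is a wrapper around an API endpoint. It cannot be classified by whether the code sits in the front-end or the back-end project; whether it needs to live in the front-end project depends mainly on the application's rendering mode.

We start a back-end service with Express on Node.js. It can act as a BFF layer or as the actual service layer. The code is as follows: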

```typescript
// https://gitee.com/vleedesigntheory-ai/veeai-ag-ui/blob/master/app.ts
import express, { Request, Response } from 'express'
import {
  RunAgentInputSchema,
  RunAgentInput,
  EventType,
  Message,
} from '@ag-ui/core'
import { EventEncoder } from '@ag-ui/encoder'
import { OpenAI } from 'openai'
import { v4 as uuidv4 } from 'uuid'

const app = express()
app.use(express.json())

// Read the key from the environment instead of hard-coding it.
// When it is empty, the route only emits the run lifecycle events.
const OPENAI_API_KEY = process.env.OPENAI_API_KEY ?? ''

app.post('/awp', async (req: Request, res: Response) => {
  // Create the event encoder
  const encoder = new EventEncoder()
  try {
    // Parse and validate the request body
    const input: RunAgentInput = RunAgentInputSchema.parse(req.body)
    console.log('input', input)

    // Set SSE headers
    res.setHeader('Content-Type', 'text/event-stream')
    res.setHeader('Cache-Control', 'no-cache')
    res.setHeader('Connection', 'keep-alive')

    // Send the run started event
    const runStarted = {
      type: EventType.RUN_STARTED,
      threadId: input.threadId,
      runId: input.runId,
    }
    res.write(encoder.encode(runStarted))

    if (OPENAI_API_KEY) {
      // Initialize the OpenAI client
      const client = new OpenAI({ apiKey: OPENAI_API_KEY })

      // Convert AG-UI messages to the OpenAI message format
      const openaiMessages = input.messages
        .filter((msg: Message) =>
          ['user', 'system', 'assistant'].includes(msg.role),
        )
        .map((msg: Message) => ({
          role: msg.role as 'user' | 'system' | 'assistant',
          content: msg.content || '',
        }))

      // Generate a message ID
      const messageId = uuidv4()

      // Send the text message start event
      const textMessageStart = {
        type: EventType.TEXT_MESSAGE_START,
        messageId,
        role: 'assistant',
      }
      res.write(encoder.encode(textMessageStart))

      // Create a streaming completion request
      const stream = await client.chat.completions.create({
        model: 'gpt-3.5-turbo',
        messages: openaiMessages,
        stream: true,
      })

      // Consume the stream and send text message content events
      for await (const chunk of stream) {
        if (chunk.choices[0]?.delta?.content) {
          const content = chunk.choices[0].delta.content
          const textMessageContent = {
            type: EventType.TEXT_MESSAGE_CONTENT,
            messageId,
            delta: content,
          }
          res.write(encoder.encode(textMessageContent))
        }
      }

      // Send the text message end event
      const textMessageEnd = {
        type: EventType.TEXT_MESSAGE_END,
        messageId,
      }
      res.write(encoder.encode(textMessageEnd))
    }

    // Send the run finished event
    const runFinished = {
      type: EventType.RUN_FINISHED,
      threadId: input.threadId,
      runId: input.runId,
    }
    res.write(encoder.encode(runFinished))

    // End the response
    res.end()
  } catch (error) {
    console.error('error', error)
    if (!res.headersSent) {
      // Nothing has been streamed yet (e.g. validation failed): reply with JSON
      res.status(422).json({ error: (error as Error).message })
      return
    }
    // The SSE stream is already open, so report the failure as an event
    const runError = {
      type: EventType.RUN_ERROR,
      message: (error as Error).message,
    }
    res.write(encoder.encode(runError))
    res.end()
  }
})

app.use('/', express.static('public'))

app.listen(8000, () => {
  console.log('Server running on http://localhost:8000')
})
```

On the front end, the endpoint is called through a small wrapper that issues a POST request with fetch and reads the SSE response. The code is as follows:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>AG-UI Express Service</title>
</head>
<body>
  <p>AG-UI Express service is running</p>
  <div id="awp"></div>
  <script>
    async function fetchSSE(url, onMessage) {
      const response = await fetch(url, {
        method: 'POST',
        headers: {
          'Content-Type': 'application/json',
          token: '11111',
        },
        // Body shaped like AG-UI's RunAgentInput; messages must be an array
        body: JSON.stringify({
          threadId: '1',
          runId: '1',
          state: {},
          messages: [
            { id: '1', role: 'user', content: 'Who is the father of JavaScript?' },
          ],
          tools: [],
          context: [],
          forwardedProps: {},
        }),
      });
      const reader = response.body.getReader();
      const decoder = new TextDecoder();
      let buffer = '';

      // Forward the payload of a single `data:` line and ignore anything else
      const handleLine = (line) => {
        if (!line.startsWith('data:')) return;
        const data = line.slice(5).trim();
        if (data) onMessage({ data });
      };

      while (true) {
        const { done, value } = await reader.read();
        if (done) break;
        buffer += decoder.decode(value, { stream: true });
        const lines = buffer.split('\n');
        buffer = lines.pop(); // keep the trailing incomplete line
        lines.forEach(handleLine);
      }

      // Flush whatever is left once the stream closes
      if (buffer.length > 0) handleLine(buffer);
    }

    fetchSSE('/awp', (event) => {
      console.log('Received SSE event:', event);
    });
  </script>
</body>
</html>
```

How It Works

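AG-UI exists mainly to standardize the interaction between agents and front-end applications. Its workflow is as follows:

- The client starts an AI Agent session with a POST request;

- A streaming channel is established, for example SSE over HTTP or a WebSocket, so that events can be listened for and delivered in real time;

- Every event carries a type and metadata that identify and describe its content;

- The AI Agent keeps pushing events to the UI as a stream;

- The UI updates the interface dynamically as each event arrives;

- The UI can also send events or context back, for the AI Agent to process and respond to in real time.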
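The receiving side of this workflow can be sketched as a small incremental parser that turns raw SSE text into typed event objects. This is only an illustration under stated assumptions: `SseEventParser` and `AgUiEvent` are hypothetical names, not part of the AG-UI packages, and the sketch assumes each event arrives as a `data: <json>` frame terminated by a blank line with `\n` line endings.

```typescript
// Hypothetical minimal event shape; the real event types live in @ag-ui/core.
interface AgUiEvent {
  type: string;
  [key: string]: unknown;
}

/**
 * Incremental parser for SSE text (assumes "\n" line endings).
 * Feed it decoded chunks as they arrive; it returns every complete
 * event and keeps the unfinished tail buffered for the next call.
 */
class SseEventParser {
  private buffer = "";

  push(chunk: string): AgUiEvent[] {
    this.buffer += chunk;
    // SSE frames are separated by a blank line.
    const frames = this.buffer.split("\n\n");
    // The last piece is either empty or an incomplete frame.
    this.buffer = frames.pop() ?? "";

    const events: AgUiEvent[] = [];
    for (const frame of frames) {
      // Join all `data:` lines of the frame; other SSE fields are ignored.
      const payload = frame
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).trim())
        .join("\n");
      if (payload) events.push(JSON.parse(payload) as AgUiEvent);
    }
    return events;
  }
}
```

In a browser, each chunk read from `response.body` would be decoded with `TextDecoder` and passed to `push`, and the returned events would drive the UI update. Buffering by frame rather than by line means a JSON payload split across network chunks is never parsed half-way.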

The core of the AG-UI protocol is its event definitions. The code is as follows:

```typescript
export enum EventType {
  TEXT_MESSAGE_START = "TEXT_MESSAGE_START",
  TEXT_MESSAGE_CONTENT = "TEXT_MESSAGE_CONTENT",
  TEXT_MESSAGE_END = "TEXT_MESSAGE_END",
  TEXT_MESSAGE_CHUNK = "TEXT_MESSAGE_CHUNK",
  THINKING_TEXT_MESSAGE_START = "THINKING_TEXT_MESSAGE_START",
  THINKING_TEXT_MESSAGE_CONTENT = "THINKING_TEXT_MESSAGE_CONTENT",
  THINKING_TEXT_MESSAGE_END = "THINKING_TEXT_MESSAGE_END",
  TOOL_CALL_START = "TOOL_CALL_START",
  TOOL_CALL_ARGS = "TOOL_CALL_ARGS",
  TOOL_CALL_END = "TOOL_CALL_END",
  TOOL_CALL_CHUNK = "TOOL_CALL_CHUNK",
  TOOL_CALL_RESULT = "TOOL_CALL_RESULT",
  THINKING_START = "THINKING_START",
  THINKING_END = "THINKING_END",
  STATE_SNAPSHOT = "STATE_SNAPSHOT",
  STATE_DELTA = "STATE_DELTA",
  MESSAGES_SNAPSHOT = "MESSAGES_SNAPSHOT",
  RAW = "RAW",
  CUSTOM = "CUSTOM",
  RUN_STARTED = "RUN_STARTED",
  RUN_FINISHED = "RUN_FINISHED",
  RUN_ERROR = "RUN_ERROR",
  STEP_STARTED = "STEP_STARTED",
  STEP_FINISHED = "STEP_FINISHED",
}
```

Text message events (`TEXT_MESSAGE_*`) stream generated text in real time, producing the typing effect familiar from AI copilots. Tool call events (`TOOL_CALL_*`) cover the full lifecycle of a tool call. State management events (`STATE_*`) keep client and server state in sync. Lifecycle events (`RUN_*` / `STEP_*`) control execution and manage the lifecycle of the whole agent run.
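To make the event families concrete, here is a minimal sketch of a reducer that folds a stream of events into UI state. It is not AG-UI's own implementation: the event shapes are simplified stand-ins for the types in `@ag-ui/core`, only a subset of event types is handled, and `UiState`, `initialUiState`, and `reduceEvent` are hypothetical names introduced for illustration.

```typescript
// Simplified event shapes for illustration; @ag-ui/core defines the real ones.
type AgentEvent =
  | { type: "RUN_STARTED"; threadId: string; runId: string }
  | { type: "RUN_FINISHED"; threadId: string; runId: string }
  | { type: "TEXT_MESSAGE_START"; messageId: string; role: "assistant" }
  | { type: "TEXT_MESSAGE_CONTENT"; messageId: string; delta: string }
  | { type: "TEXT_MESSAGE_END"; messageId: string }
  | { type: "TOOL_CALL_START"; toolCallId: string; toolCallName: string }
  | { type: "TOOL_CALL_ARGS"; toolCallId: string; delta: string }
  | { type: "TOOL_CALL_END"; toolCallId: string }
  | { type: "STATE_SNAPSHOT"; snapshot: Record<string, unknown> };

interface ToolCallView {
  name: string;
  args: string; // JSON text accumulated from TOOL_CALL_ARGS deltas
  done: boolean;
}

interface UiState {
  running: boolean;
  messages: Record<string, string>; // messageId -> accumulated text
  toolCalls: Record<string, ToolCallView>;
  agentState: Record<string, unknown>;
}

const initialUiState: UiState = {
  running: false,
  messages: {},
  toolCalls: {},
  agentState: {},
};

// Pure reducer: returns a new state object for every event it handles.
function reduceEvent(state: UiState, event: AgentEvent): UiState {
  switch (event.type) {
    case "RUN_STARTED":
      return { ...state, running: true };
    case "RUN_FINISHED":
      return { ...state, running: false };
    case "TEXT_MESSAGE_START":
      return { ...state, messages: { ...state.messages, [event.messageId]: "" } };
    case "TEXT_MESSAGE_CONTENT": {
      const previous = state.messages[event.messageId] ?? "";
      return {
        ...state,
        messages: { ...state.messages, [event.messageId]: previous + event.delta },
      };
    }
    case "TEXT_MESSAGE_END":
      return state; // the text is already complete
    case "TOOL_CALL_START":
      return {
        ...state,
        toolCalls: {
          ...state.toolCalls,
          [event.toolCallId]: { name: event.toolCallName, args: "", done: false },
        },
      };
    case "TOOL_CALL_ARGS": {
      const call = state.toolCalls[event.toolCallId];
      if (!call) return state; // args for an unknown call are ignored
      return {
        ...state,
        toolCalls: {
          ...state.toolCalls,
          [event.toolCallId]: { ...call, args: call.args + event.delta },
        },
      };
    }
    case "TOOL_CALL_END": {
      const call = state.toolCalls[event.toolCallId];
      if (!call) return state;
      return {
        ...state,
        toolCalls: { ...state.toolCalls, [event.toolCallId]: { ...call, done: true } },
      };
    }
    case "STATE_SNAPSHOT":
      return { ...state, agentState: event.snapshot };
  }
}
```

A UI would call `reduceEvent` once per received event and re-render from the result. Keeping the reducer pure makes each event family easy to test in isolation.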

To drive this event flow, AG-UI uses rxjs for publishing and subscribing to events. The code is as follows:

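```typescript
export const defaultApplyEvents = (
  input: RunAgentInput,
  events$: Observable<BaseEvent>,
  agent: AbstractAgent,
  subscribers: AgentSubscriber[],
) => {} // body elided
```

Here, `input` is the run input, `events$` is the event stream, `agent` is the agent, and `subscribers` is the list of subscribers.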
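The publish/subscribe idea can be illustrated without rxjs. The sketch below is a dependency-free stand-in, not the library's `defaultApplyEvents`: `EventProducer`, `MiniSubscriber`, and `applyEventsSketch` are hypothetical names, a plain callback plays the role of the Observable, and the buffer passed to subscribers includes the current delta, which may differ from the library's exact semantics.

```typescript
// Simplified event shape for illustration only.
interface MiniEvent {
  type: string;
  delta?: string;
}

// Hypothetical, trimmed-down subscriber with two optional hooks.
interface MiniSubscriber {
  onEvent?(params: { event: MiniEvent }): void;
  onTextMessageContentEvent?(params: {
    event: MiniEvent;
    textMessageBuffer: string;
  }): void;
}

// A push-based producer standing in for an rxjs Observable:
// it is handed an `emit` callback and calls it once per event.
type EventProducer = (emit: (event: MiniEvent) => void) => void;

// Fans each event out to every subscriber and tracks the text buffer.
// Returns the text accumulated for the last message.
function applyEventsSketch(
  producer: EventProducer,
  subscribers: MiniSubscriber[],
): string {
  let textMessageBuffer = "";

  producer((event) => {
    // A new message resets the buffer.
    if (event.type === "TEXT_MESSAGE_START") textMessageBuffer = "";

    // The generic hook fires for every event.
    for (const subscriber of subscribers) {
      subscriber.onEvent?.({ event });
    }

    // The specific hook fires only for content events.
    if (event.type === "TEXT_MESSAGE_CONTENT") {
      textMessageBuffer += event.delta ?? "";
      for (const subscriber of subscribers) {
        subscriber.onTextMessageContentEvent?.({ event, textMessageBuffer });
      }
    }
  });

  return textMessageBuffer;
}
```

Because every hook is optional, a subscriber only implements the events it cares about, for example a logger that implements `onEvent` alone next to a renderer that implements `onTextMessageContentEvent`.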

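AbstractAgent is the abstract base class for agents. It holds the agent's messages, state, and subscribers, and declares the methods that run the agent and apply its events. The code is as follows: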

```typescript
// Outline only: method bodies are elided.
export abstract class AbstractAgent {
  public agentId?: string;
  public description: string;
  public threadId: string;
  public messages: Message[];
  public state: State;
  public debug: boolean = false;
  public subscribers: AgentSubscriber[] = [];

  constructor({
    agentId,
    description,
    threadId,
    initialMessages,
    initialState,
    debug,
  }: AgentConfig = {}) {
    this.agentId = agentId;
    this.description = description ?? "";
    this.threadId = threadId ?? uuidv4();
    this.messages = structuredClone_(initialMessages ?? []);
    this.state = structuredClone_(initialState ?? {});
    this.debug = debug ?? false;
  }

  public subscribe(subscriber: AgentSubscriber) {}

  protected abstract run(input: RunAgentInput): Observable<BaseEvent>;

  public async runAgent(
    parameters?: RunAgentParameters,
    subscriber?: AgentSubscriber,
  ): Promise<RunAgentResult> {}

  public abortRun() {}

  protected apply(
    input: RunAgentInput,
    events$: Observable<BaseEvent>,
    subscribers: AgentSubscriber[],
  ): Observable<AgentStateMutation> {}

  protected processApplyEvents(
    input: RunAgentInput,
    events$: Observable<AgentStateMutation>,
    subscribers: AgentSubscriber[],
  ): Observable<AgentStateMutation> {}

  protected prepareRunAgentInput(parameters?: RunAgentParameters): RunAgentInput {}

  protected async onInitialize(input: RunAgentInput, subscribers: AgentSubscriber[]) {}

  protected onError(input: RunAgentInput, error: Error, subscribers: AgentSubscriber[]) {}

  protected async onFinalize(input: RunAgentInput, subscribers: AgentSubscriber[]) {}

  public clone() {}

  public addMessage(message: Message) {}

  public addMessages(messages: Message[]) {}

  public setMessages(messages: Message[]) {}

  public setState(state: State) {}
}
```

The AgentSubscriber interface is as follows:

```typescript
export interface AgentSubscriber {
  // Request lifecycle
  onRunInitialized?(
    params: AgentSubscriberParams,
  ): MaybePromise<Omit<AgentStateMutation, "stopPropagation"> | void>;
  onRunFailed?(
    params: { error: Error } & AgentSubscriberParams,
  ): MaybePromise<Omit<AgentStateMutation, "stopPropagation"> | void>;
  onRunFinalized?(
    params: AgentSubscriberParams,
  ): MaybePromise<Omit<AgentStateMutation, "stopPropagation"> | void>;

  // Events
  onEvent?(
    params: { event: BaseEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onRunStartedEvent?(
    params: { event: RunStartedEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onRunFinishedEvent?(
    params: { event: RunFinishedEvent; result?: any } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onRunErrorEvent?(
    params: { event: RunErrorEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onStepStartedEvent?(
    params: { event: StepStartedEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onStepFinishedEvent?(
    params: { event: StepFinishedEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onTextMessageStartEvent?(
    params: { event: TextMessageStartEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onTextMessageContentEvent?(
    params: {
      event: TextMessageContentEvent;
      textMessageBuffer: string;
    } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onTextMessageEndEvent?(
    params: { event: TextMessageEndEvent; textMessageBuffer: string } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onToolCallStartEvent?(
    params: { event: ToolCallStartEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onToolCallArgsEvent?(
    params: {
      event: ToolCallArgsEvent;
      toolCallBuffer: string;
      toolCallName: string;
      partialToolCallArgs: Record<string, any>;
    } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onToolCallEndEvent?(
    params: {
      event: ToolCallEndEvent;
      toolCallName: string;
      toolCallArgs: Record<string, any>;
    } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onToolCallResultEvent?(
    params: { event: ToolCallResultEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onStateSnapshotEvent?(
    params: { event: StateSnapshotEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onStateDeltaEvent?(
    params: { event: StateDeltaEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onMessagesSnapshotEvent?(
    params: { event: MessagesSnapshotEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onRawEvent?(
    params: { event: RawEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;
  onCustomEvent?(
    params: { event: CustomEvent } & AgentSubscriberParams,
  ): MaybePromise<AgentStateMutation | void>;

  // State changes
  onMessagesChanged?(
    params: Omit<AgentSubscriberParams, "input"> & { input?: RunAgentInput },
  ): MaybePromise<void>;
  onStateChanged?(
    params: Omit<AgentSubscriberParams, "input"> & { input?: RunAgentInput },
  ): MaybePromise<void>;
  onNewMessage?(
    params: { message: Message } & Omit<AgentSubscriberParams, "input"> & {
        input?: RunAgentInput;
      },
  ): MaybePromise<void>;
  onNewToolCall?(
    params: { toolCall: ToolCall } & Omit<AgentSubscriberParams, "input"> & {
        input?: RunAgentInput;
      },
  ): MaybePromise<void>;
}
```

Summary

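In summary, the way AG-UI is defined today may not be the final form of the contract between the agent layer and the component layer. Still, it turns the traditional "call" style of interaction into a two-way "collaboration", which is an upgrade of the development paradigm. It also opens up more possibilities for how human-machine collaboration can evolve.

As the CopilotKit team puts it: what REST is to APIs, AG-UI is to agents and user interfaces, a streaming interaction protocol. AG-UI gives AI Agent systems a new way to interact with their users and offers a new line of thinking for how such systems can develop.

PS: veeai-ag-ui implements a simple version of AG-UI. Stars are welcome.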