1. Prepare an infrared calibration board

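To capture calibration-board data with the infrared and visible-light cameras at the same time, the board must show clear visual features in both the infrared image and the visible-light image. Buying a professional infrared calibration board is recommended.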

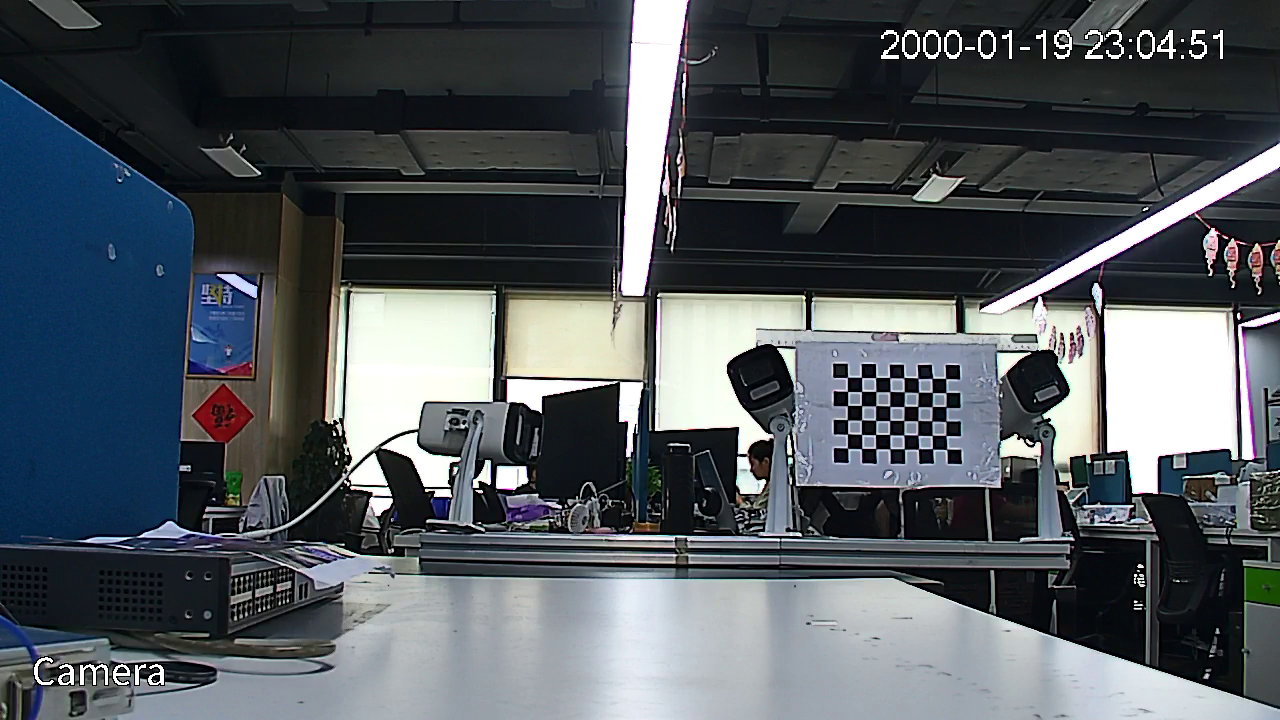

I previously made a few infrared calibration boards myself, but the results were poor:

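- Homemade circle-dot board: I fixed coins onto white paper, cooled the board in a refrigerator, and then captured data. I computed the circle centers and tried to estimate the Homography from them, but the accuracy was poor and the attempt failed.

  (Figure: computing the circle centers)

- Homemade chessboard infrared board: I fixed 20 × 20 mm square aluminum plates onto white paper, cooled the board in a refrigerator, and then captured data. This does produce a homography matrix, but the board must be cooled in a refrigerator first: at room temperature, the square edges blur in the infrared image within a few minutes, so the board features in the captured infrared images are unstable.

  If resources are limited, this approach is worth a try; it is good enough to compute a Homography.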

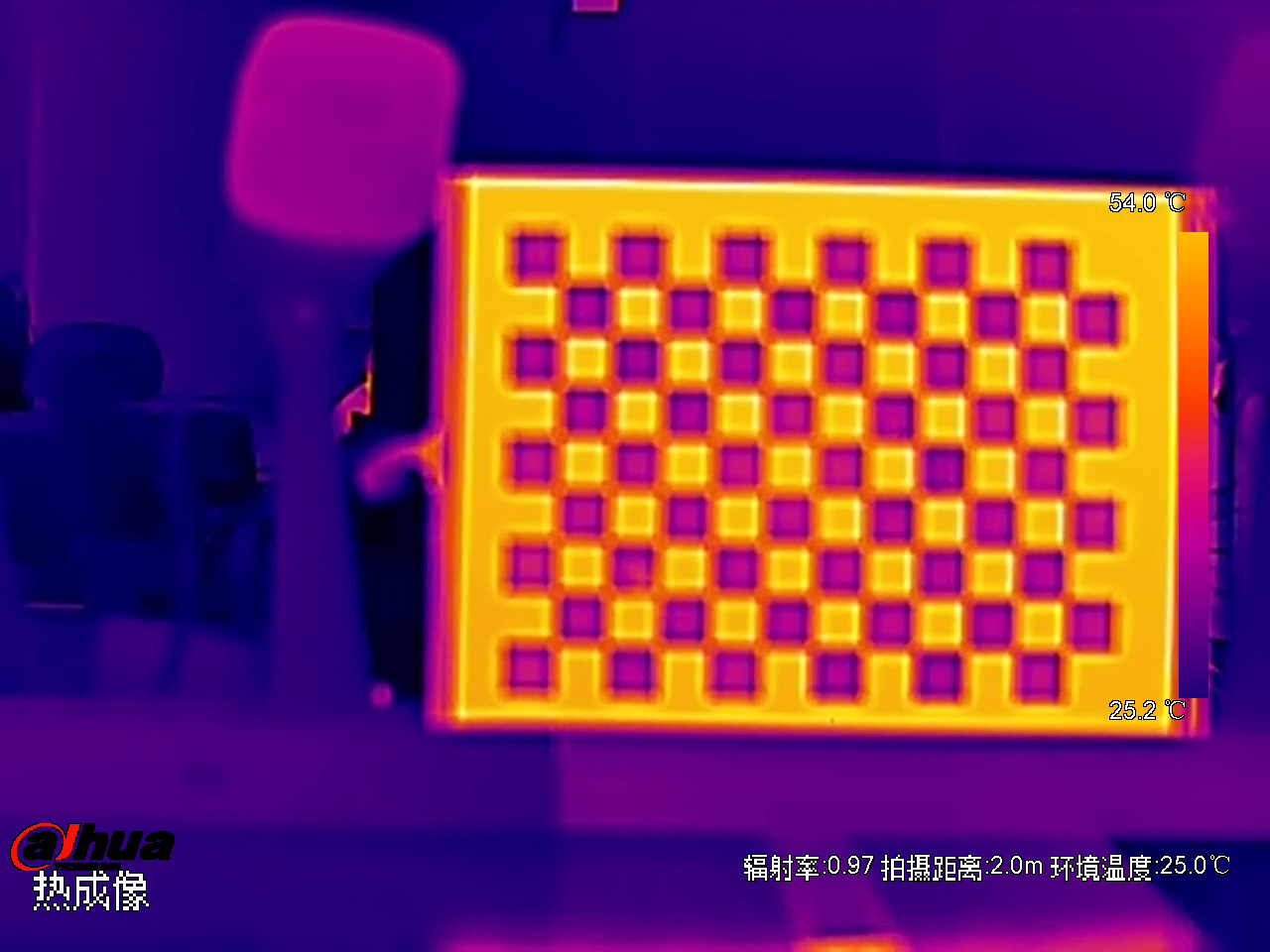

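I then bought a professional infrared calibration board with built-in heating, which is considerably more accurate. The captured images look like this: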

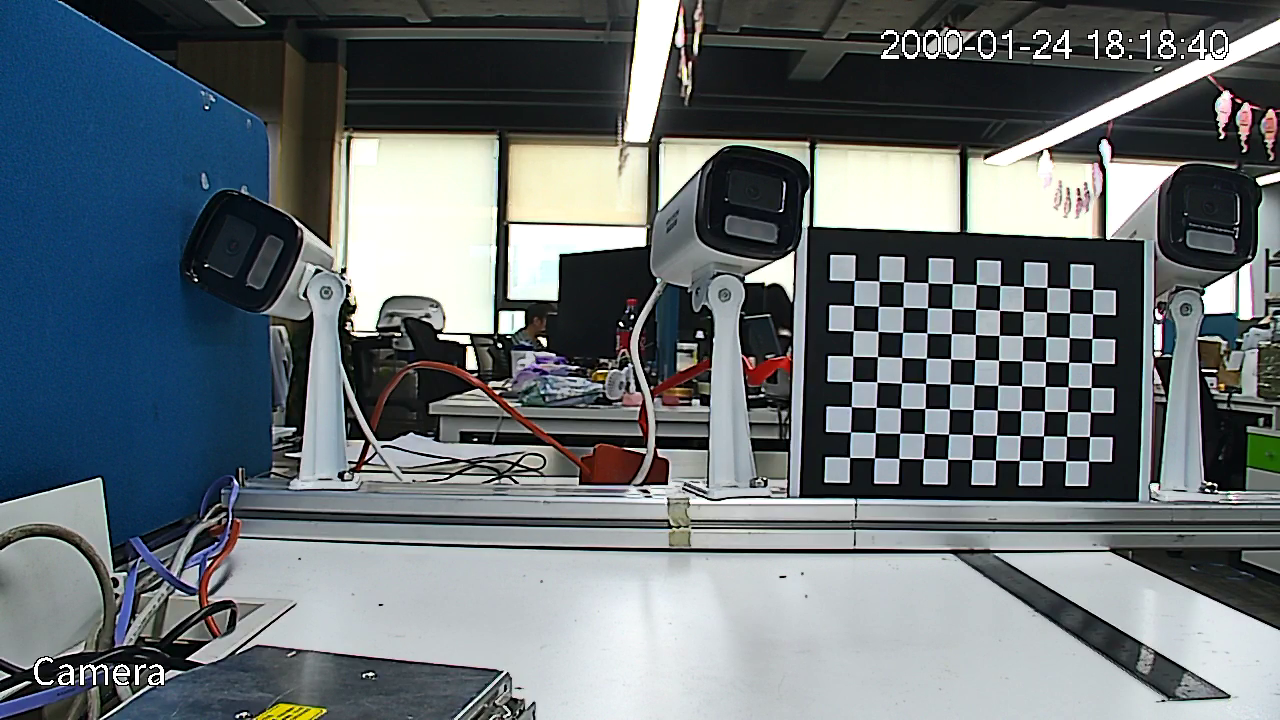

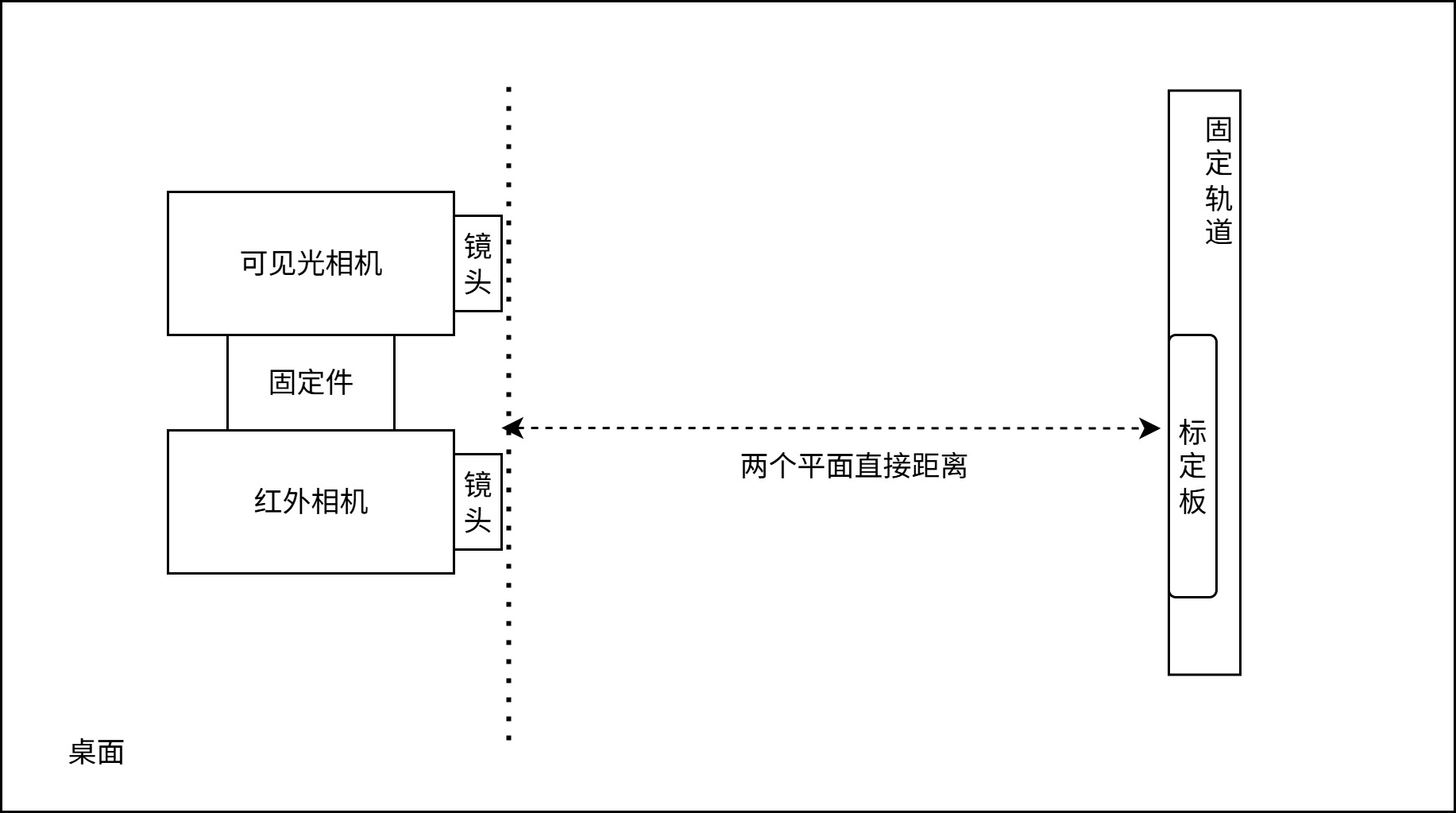

2. Capture images with both cameras simultaneously

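Mount the visible-light and infrared cameras rigidly to each other, both looking straight ahead (level), and place them steadily on a table;

Keep the calibration board perpendicular to the table;

The overall setup is shown in the figure:

Then measure the distances precisely and define several capture positions at known distances (e.g. 90 cm, 100 cm, 110 cm).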

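At each capture position, capture several images with the infrared and visible-light cameras simultaneously. To enrich the features, the board may be moved within its own plane (e.g. left/right or up/down), but it must not be moved forward or backward, and its tilt angle must not be changed: a homography only relates the two images correctly for points on one fixed plane, so all images taken at one position must keep the board in that same plane.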
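A short justification for the "same plane" rule, using the standard plane-induced homography from multi-view geometry (a textbook result, not something this tutorial derives; the symbols below are introduced only for this explanation). Let $K_{vis}$ and $K_{ir}$ be the two cameras' intrinsic matrices, let $(R, \mathbf{t})$ map points from the visible-camera frame to the IR-camera frame ($\mathbf{X}_{ir} = R\,\mathbf{X}_{vis} + \mathbf{t}$), and let the board lie in the plane $\mathbf{n}^{\top}\mathbf{X}_{vis} = d$. Pixels of points on that plane are then related by:

$$
\mathbf{x}_{ir} \simeq H\,\mathbf{x}_{vis},
\qquad
H = K_{ir}\left(R + \frac{\mathbf{t}\,\mathbf{n}^{\top}}{d}\right)K_{vis}^{-1}
$$

Shifting the board within its plane leaves $\mathbf{n}$ and $d$ unchanged, so every image taken at one position constrains the same $H$. Moving the board forward or backward changes $d$, and tilting it changes $\mathbf{n}$; either one changes $H$. The $d$ dependence is also why a separate matrix is computed for each distance in step 3.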

Then gather the data into a calibration dataset; the file structure can follow this example:

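```
|--dataset
   |--90
   |  |--vis
   |  |  |--90_1.png
   |  |  |--90_2.png
   |  |  ·······
   |  |--ir
   |     |--90_1.png
   |     |--90_2.png
   |     ·······
   |--100
   |  |--vis
   |  |  |--100_1.png
   |  |  ·······
   |  |--ir
   |     |--100_1.png
   |     ·······
   ·······
```

Here, each numerically named directory directly under `dataset` gives the distance (in cm) between the board plane and the camera lens plane;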

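The `vis` directory holds the images captured by the visible-light camera;

The `ir` directory holds the images captured by the infrared camera;

Images with the same file name in `vis` and `ir` form a pair captured at the same moment by the visible-light and infrared cameras.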
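Because pairs are matched purely by identical file names, it can help to check the dataset before calibrating. The sketch below walks the layout above, reports files that exist in only one of `vis`/`ir`, and returns the paired file names for each distance. The function name `list_distance_pairs` is hypothetical (not part of the original code), and, like the script in step 3, it assumes PNG images.

```python
import os
from glob import glob


def list_distance_pairs(dataset_root):
    """Hypothetical helper: map each distance (int, cm) to the file names
    present in both <dataset_root>/<distance>/vis and <dataset_root>/<distance>/ir."""
    result = {}
    for entry in os.listdir(dataset_root):
        dist_dir = os.path.join(dataset_root, entry)
        # Only numerically named subdirectories are capture distances
        if not (entry.isdigit() and os.path.isdir(dist_dir)):
            continue
        vis = {os.path.basename(p) for p in glob(os.path.join(dist_dir, "vis", "*.png"))}
        ir = {os.path.basename(p) for p in glob(os.path.join(dist_dir, "ir", "*.png"))}
        unpaired = sorted(vis ^ ir)  # present in only one of the two directories
        if unpaired:
            print(f"[{entry}] unpaired files: {unpaired}")
        result[int(entry)] = sorted(vis & ir)
    # Sort numerically so that 90 comes before 100
    return dict(sorted(result.items()))
```

For the layout above, `list_distance_pairs("dataset")` returns a dict keyed by 90, 100, and so on; those keys are the values to use for `distance` when running the script once per distance.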

3. Compute the Homography for each distance

Run the code below once for each distance; each run appends its result to the homography.yaml file.
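One thing worth guarding against: `cv2.findHomography` pairs the i-th visible-light corner with the i-th IR corner, so if the chessboard detector starts from opposite ends of the grid in the two images (which may happen, for example, when the IR corners come from the inverted image), RANSAC either rejects most points or fits a wrong matrix. The hypothetical helper below is a heuristic check, assuming both cameras are mounted upright side by side so the board appears with roughly the same orientation in both images; it is not part of the original script.

```python
import numpy as np


def align_corner_order(corners_vis, corners_ir):
    """Heuristically make the IR corner order match the visible-light corner order.

    Assumes the board appears with roughly the same orientation in both images,
    so the vector from the first to the last detected corner should point the
    same way in both. If it points the opposite way, the IR detection most
    likely started from the opposite corner of the grid (a 180-degree
    relabeling), and reversing the flattened corner array undoes that.
    Mirrored or 90-degree orderings are not handled.
    """
    ir = np.asarray(corners_ir)
    v = np.asarray(corners_vis, dtype=np.float64).reshape(-1, 2)
    r = ir.reshape(-1, 2).astype(np.float64)
    if v.shape != r.shape:
        raise ValueError("visible and IR corner arrays must have the same length")
    # Compare the first-to-last corner directions in both images
    if np.dot(v[-1] - v[0], r[-1] - r[0]) < 0:
        # Reverse the point order and restore the original shape, e.g. (N, 1, 2)
        return ir.reshape(-1, 2)[::-1].reshape(ir.shape).copy()
    return ir
```

In the script, it could be applied inside `collect_pairs`, e.g. `ir_pts = align_corner_order(cv_pts, ir_pts)` just before `imgpoints_ir.append(ir_pts)`.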

Runnable code:

```python
import os
from glob import glob

import cv2
import numpy as np

# Computes the visible-to-IR homography H from image pairs of an 11x8 (inner corners)
# chessboard and reports the reprojection error of H.

# ========== User configuration ==========
dataset_root = "250922"  # dataset root directory
distance = 170           # board-to-camera distance in cm (a multiple of 10); also the subdirectory name
vis_dir = os.path.join(dataset_root, str(distance), "vis")  # visible-light images
ir_dir = os.path.join(dataset_root, str(distance), "ir")    # IR images (same file names as vis)
pattern_size = (11, 8)   # number of inner corners (cols, rows)
square_size = 20.0       # physical size of one square; only used for objp, not for H
# ========================================

USE_SB = hasattr(cv2, "findChessboardCornersSB")
print("Use findChessboardCornersSB:", USE_SB)


def preprocess_ir(img):
    """Preprocess an IR image; return (enhanced, inverted enhanced)."""
    if len(img.shape) == 3:
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    else:
        gray = img.copy()
    # Stretch to the full 0-255 range, then boost local contrast with CLAHE
    g = cv2.normalize(gray, None, 0, 255, cv2.NORM_MINMAX).astype(np.uint8)
    clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))
    g = clahe.apply(g)
    # Also return the inverted image; detection is retried on it if the original fails
    return g, 255 - g


def detect_corners(gray, pattern_size):
    """Detect chessboard corners, trying the SB detector first when available."""
    if USE_SB:
        ret, corners = cv2.findChessboardCornersSB(gray, pattern_size, flags=cv2.CALIB_CB_NORMALIZE_IMAGE)
        if not ret:
            ret, corners = cv2.findChessboardCorners(gray, pattern_size, None)
    else:
        ret, corners = cv2.findChessboardCorners(
            gray, pattern_size, cv2.CALIB_CB_ADAPTIVE_THRESH + cv2.CALIB_CB_NORMALIZE_IMAGE)
    return ret, corners


def find_corners_in_pair(vis_img, ir_img, pattern_size):
    """Detect corners in one visible/IR image pair."""
    vis_gray = cv2.cvtColor(vis_img, cv2.COLOR_BGR2GRAY) if len(vis_img.shape) == 3 else vis_img
    ret_v, corners_v = detect_corners(vis_gray, pattern_size)

    g, g_inv = preprocess_ir(ir_img)
    ret_i, corners_i = detect_corners(g, pattern_size)
    if not ret_i:
        # Retry on the inverted IR image
        ret_i, corners_i = detect_corners(g_inv, pattern_size)

    return (ret_v, corners_v if ret_v else None,
            ret_i, corners_i if ret_i else None)


def collect_pairs(vis_dir, ir_dir, pattern_size):
    """Collect corners from every pair whose file name exists in both directories."""
    vis_files = {os.path.basename(p) for p in glob(os.path.join(vis_dir, "*.png"))}
    ir_files = {os.path.basename(p) for p in glob(os.path.join(ir_dir, "*.png"))}
    common = sorted(vis_files & ir_files)
    print("Found {} matching pairs".format(len(common)))

    # Board coordinates of the corners (collected for reference; not needed for H)
    objp = np.zeros((pattern_size[1] * pattern_size[0], 3), np.float32)
    objp[:, :2] = np.mgrid[0:pattern_size[0], 0:pattern_size[1]].T.reshape(-1, 2) * square_size

    objpoints, imgpoints_vis, imgpoints_ir, used_pairs = [], [], [], []
    max_corners_vis, max_corners_ir = 0, 0
    for fn in common:
        vis = cv2.imread(os.path.join(vis_dir, fn))
        ir = cv2.imread(os.path.join(ir_dir, fn))
        if vis is None or ir is None:
            print(f"Skip pair (unreadable image): {fn}")
            continue
        rv, cv_pts, ri, ir_pts = find_corners_in_pair(vis, ir, pattern_size)
        if rv:
            max_corners_vis = max(max_corners_vis, len(cv_pts))
        if ri:
            max_corners_ir = max(max_corners_ir, len(ir_pts))
        if rv and ri:
            objpoints.append(objp)
            imgpoints_vis.append(cv_pts)
            imgpoints_ir.append(ir_pts)
            used_pairs.append(fn)
        else:
            print(f"Skip pair (no corners): {fn} vis:{rv} ir:{ri}")

    print("Used pairs:", len(used_pairs))
    print("Max corners detected - vis:", max_corners_vis, "ir:", max_corners_ir)
    return objpoints, imgpoints_vis, imgpoints_ir, used_pairs


def compute_homography_for_pairs(vis_dir, ir_dir, pattern_size):
    objpoints, imgpoints_vis, imgpoints_ir, used_pairs = collect_pairs(vis_dir, ir_dir, pattern_size)
    if len(imgpoints_vis) == 0:
        raise RuntimeError("No valid pairs with detected corners found.")

    # Stack the corners of all pairs: visible points are the source, IR points the target
    src_pts = np.vstack([p.reshape(-1, 2) for p in imgpoints_vis])
    dst_pts = np.vstack([p.reshape(-1, 2) for p in imgpoints_ir])

    # Estimate H (visible -> IR) with RANSAC and a 5 px reprojection threshold
    H, mask = cv2.findHomography(src_pts, dst_pts, cv2.RANSAC, 5.0)
    if H is None:
        raise RuntimeError("cv2.findHomography failed.")
    print("Homography H:\n", H)
    print("RANSAC inliers: {}/{}".format(int(mask.sum()), len(mask)))

    # ========== Reprojection error ==========
    src_pts_h = np.hstack([src_pts, np.ones((src_pts.shape[0], 1))])  # Nx3 homogeneous
    dst_proj = (H @ src_pts_h.T).T
    dst_proj = dst_proj[:, :2] / dst_proj[:, 2:3]
    errors = np.linalg.norm(dst_pts - dst_proj, axis=1)
    print("Overall mean reprojection error: {:.3f} px".format(errors.mean()))

    # Per-pair error: the stacked points follow the order of used_pairs
    print("\nPer-image reprojection errors:")
    idx = 0
    for fn, ir_pts in zip(used_pairs, imgpoints_ir):
        n = ir_pts.shape[0]
        err = errors[idx:idx + n]
        print(f"{fn}: mean={err.mean():.3f} px, max={err.max():.3f} px")
        idx += n
    return H


def map_point_with_homography(pt, H):
    """Map one (x, y) pixel from the visible image into the IR image."""
    src = np.array([[pt]], dtype=np.float64)  # shape (1, 1, 2), as perspectiveTransform expects
    return tuple(cv2.perspectiveTransform(src, H)[0, 0])


if __name__ == "__main__":
    H = compute_homography_for_pairs(vis_dir, ir_dir, pattern_size)
    print("\nUse map_point_with_homography(pt, H) to map points.")

    # Store H under a key such as "D170" (distance in cm)
    key = "D" + str(distance)
    fs = cv2.FileStorage("homography.yaml", cv2.FILE_STORAGE_APPEND)  # APPEND keeps existing entries
    fs.startWriteStruct(key, cv2.FileNode_MAP)  # open a map node named after the distance
    fs.write("H", H)                             # write H inside that node
    fs.endWriteStruct()                          # close the map node
    fs.release()
    print(f"Homography saved under key '{key}' in homography.yaml")
```

The saved homography.yaml looks roughly like this:

```yaml
%YAML:1.0
---
D90:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.7505547963346215, 0.023093222201774798,
          -1005.7988140079869, -0.083884560976669895, 1.8066956354122292,
          -185.34136073705577, -0.00014410247880878996,
          2.9408854846017554e-06, 1. ]
...
---
D100:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.868168239702898, 0.022399324469085005,
          -1035.0148286141678, -0.061566766871658858, 1.8925217293905943,
          -214.30490708482179, -9.6328418452955476e-05,
          1.564063723674256e-05, 1. ]
...
---
D110:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.8408660703996047, 0.017141105193817001,
          -975.16228114276885, -0.061934846207833884, 1.8767835424024828,
          -210.65410703157605, -9.8069621188215096e-05,
          2.6711579326946303e-06, 1. ]
...
---
D120:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.8328933252101294, 0.024564642191984416,
          -938.09857209010909, -0.062640786299579337, 1.8840657084681294,
          -213.00717084871943, -0.00010130284390731729,
          1.2897500667397806e-05, 1. ]
...
---
D130:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.9152483930801767, 0.015826600732674858,
          -955.03363923000359, -0.045507960809097601, 1.9419551479116461,
          -234.15698134882834, -6.2356828247003843e-05,
          9.0866646050568969e-06, 1. ]
...
---
D140:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.8669029101443313, 0.024225503821909331,
          -906.16616218328113, -0.056619591042881418, 1.9055402383166042,
          -220.4182901074731, -8.3178063722362926e-05,
          6.7155057310306864e-06, 1. ]
...
---
D150:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.9110810377280794, 0.046155366291742814,
          -918.22913787338996, -0.052461292672370499, 1.9573099387545476,
          -234.76129303562087, -7.106748349136362e-05,
          2.9258475293066501e-05, 1. ]
...
---
D160:
   H: !!opencv-matrix
      rows: 3
      cols: 3
      dt: d
      data: [ 1.8494264669160794, 0.039595504578104873,
          -858.40583622348072, -0.060992361759845334, 1.9055206881067623,
          -217.18880427387955, -9.0795590179023328e-05,
          2.4264766836270281e-05, 1. ]
```
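At run time you usually need one matrix for the current working distance. As a minimal sketch, assuming the matrices have already been read from homography.yaml into a Python dict keyed by distance in cm (for example `{90: H90, 100: H100, ...}`; the loading step is not shown), the hypothetical helpers below pick the calibrated distance closest to a given distance and map visible-light pixels into the IR image. Nearest-distance selection is an assumption of this sketch; the original text does not say how the per-distance matrices are used.

```python
import numpy as np


def select_homography(homographies, distance_cm):
    """Return (calibrated_distance, H) for the calibrated distance nearest to distance_cm.

    homographies: dict mapping distance in cm (e.g. 90, 100, ...) to a 3x3 matrix.
    """
    if not homographies:
        raise ValueError("no homographies available")
    nearest = min(homographies, key=lambda d: abs(d - distance_cm))
    return nearest, np.asarray(homographies[nearest], dtype=np.float64)


def map_points(H, pts):
    """Map an (N, 2) array of visible-light pixels into the IR image using H."""
    pts = np.asarray(pts, dtype=np.float64).reshape(-1, 2)
    pts_h = np.hstack([pts, np.ones((pts.shape[0], 1))])  # homogeneous (N, 3)
    proj = pts_h @ np.asarray(H, dtype=np.float64).T      # apply H to every point
    return proj[:, :2] / proj[:, 2:3]                    # divide by the third coordinate
```

For instance, with the matrices above loaded as `Hs`, `select_homography(Hs, 104)` would pick the `D100` matrix. Nearest-neighbour selection is the simplest choice; it means the mapping is only as fine-grained as the 10 cm spacing of the capture positions.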