K8s Cluster Deployment: Environment Preparation

Environment setup

Disable the firewall and SELinux

[root@localhost ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld.service

[root@localhost ~]# setenforce 0
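`setenforce 0` only switches the running system to permissive mode until the next reboot; the persistent setting is the `SELINUX=` line in `/etc/selinux/config`, which the `sed` command in this step edits. That `sed` only matches the literal value `enforcing`, so a host already set to `permissive` is left as it is. A minimal sketch, assuming you want `disabled` whatever the current value is; `set_selinux_disabled` is a hypothetical helper name, and it takes the file path as an argument so it can be tried on a copy first:

```shell
#!/usr/bin/env bash
# Hypothetical helper: force SELINUX=disabled in an SELinux config file,
# whatever the current value is (enforcing, permissive, ...).
set_selinux_disabled() {
  local file=$1
  # Anchor on "^SELINUX=" so the SELINUXTYPE= line is not touched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$file"
}

# Usage (try on a copy first):
#   cp /etc/selinux/config /tmp/selinux.test && set_selinux_disabled /tmp/selinux.test
```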

[root@master ~]# sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

Edit the host mappings
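The `cat >>` command in this step appends, so running it a second time leaves duplicate entries in `/etc/hosts`. A minimal sketch of an idempotent alternative, assuming the same three addresses; `add_host_entry` is a hypothetical helper name, and the file path is a parameter so it can be tested on a scratch file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-format file only if
# no existing line already maps that IP to that name.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 when some line has the IP in column 1 and the name in a later column.
  if ! awk -v ip="$ip" -v n="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on the real file (as root):
#   add_host_entry /etc/hosts 192.168.10.100 master
#   add_host_entry /etc/hosts 192.168.10.150 node1
#   add_host_entry /etc/hosts 192.168.10.160 node2
```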

# Configure host mappings

cat >>/etc/hosts <<EOF
192.168.10.100 master
192.168.10.150 node1
192.168.10.160 node2
EOF

Disable the swap partition

[root@master ~]# swapoff -a
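`swapoff -a` only lasts until the next reboot; the `sed` line in this step comments out the swap entry in `/etc/fstab` so the change persists. As written, `/swap/s/^/#/g` prefixes every line that merely contains the word "swap", and it adds another `#` each time it is rerun. A minimal sketch that only comments out active (uncommented) entries whose filesystem type field is `swap`; `comment_out_swap` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-format file.
comment_out_swap() {
  local file=$1 tmp
  tmp=$(mktemp) || return 1
  # Field 3 of an fstab entry is the filesystem type; lines already starting
  # with "#" are skipped, so rerunning the function changes nothing.
  awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$file" > "$tmp" \
    && cat "$tmp" > "$file"   # write back in place, keeping the file's permissions
  rm -f "$tmp"
}

# Usage (as root):
#   comment_out_swap /etc/fstab
```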

[root@master ~]# sed -i '/swap/s/^/#/g' /etc/fstab

Configure kernel parameters

cat >/etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
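Each `key = value` pair in a `sysctl.d` file must sit on its own line; two pairs on one line are parsed as a single key whose value is the rest of the line, so the second setting is never applied. Before running `sysctl --system`, the file can be checked with a small sketch like the following. `check_k8s_sysctl_conf` is a hypothetical helper name, and it only inspects the file, not the live kernel values (for those, `sysctl net.ipv4.ip_forward` and similar commands print the current setting):

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm a sysctl.d file sets each Kubernetes-related
# key to 1 on its own "key = value" line. Checks the file only, not the kernel.
check_k8s_sysctl_conf() {
  local file=$1 key rc=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Split on "=", strip whitespace from key and value, require exactly one "=".
    if ! awk -F'=' -v k="$key" '
        { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
        $1 == k && $2 == "1" && NF == 2 { found = 1 }
        END { exit !found }' "$file"; then
      echo "missing or not set to 1: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_k8s_sysctl_conf /etc/sysctl.d/k8s.conf && sysctl --system
```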

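sysctl --system

Note: the net.bridge.* keys only exist once the br_netfilter kernel module is loaded (done in the containerd section below). If sysctl --system reports errors for them, run it again after modprobe br_netfilter.

Install ipset and ipvsadm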

yum -y install conntrack ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git

# IPVS module list, loaded at boot
cat >/etc/modules-load.d/ipvs.conf <<EOF # Load IPVS at boot
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack
nf_conntrack_ipv4
EOF

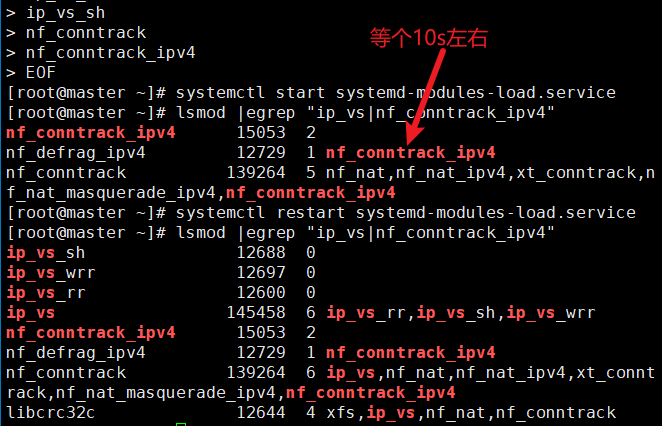

# Note: wait a moment for the service to finish starting before moving on to the next step

[root@master ~]# systemctl start systemd-modules-load.service
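The `lsmod | egrep` check in this step relies on reading the output by eye. A minimal sketch that prints each required module missing from `lsmod` output, so an empty result means everything is loaded; `missing_ipvs_modules` is a hypothetical helper name. `nf_conntrack_ipv4` is deliberately left out of the list because on kernels 4.19 and later it was merged into `nf_conntrack` and no longer exists as a separate module:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `lsmod` output on stdin and print every
# required IPVS/conntrack module that is not in the loaded list.
missing_ipvs_modules() {
  local loaded m
  # Column 1 of lsmod is the module name; line 1 is the header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    grep -qxF "$m" <<< "$loaded" || echo "$m"
  done
}

# Usage:
#   lsmod | missing_ipvs_modules    # no output means all required modules are loaded
```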

[root@master ~]# lsmod |egrep "ip_vs|nf_conntrack_ipv4"

Install containerd

Install the dependency packages

yum -y install yum-utils device-mapper-persistent-data lvm2

Add the Alibaba Cloud Docker CE repository (it provides the containerd.io package)

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Add the overlay and br_netfilter modules

cat >>/etc/modules-load.d/containerd.conf <<EOF
overlay
br_netfilter
EOF

[root@master ~]# modprobe overlay

[root@master ~]# modprobe br_netfilter

Install containerd (the latest version is installed here)

yum -y install containerd.io

Create the containerd configuration file

mkdir -p /etc/containerd

containerd config default > /etc/containerd/config.toml

sed -i '/SystemdCgroup/s/false/true/g' /etc/containerd/config.toml

sed -i '/sandbox_image/s/registry.k8s.io/registry.aliyuncs.com\/google_containers/g' /etc/containerd/config.toml

Start containerd
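Before starting containerd, it is worth confirming that both `sed` edits in the previous step actually matched. A minimal sketch that checks the resulting `config.toml` for `SystemdCgroup = true` and a `sandbox_image` that points at the Aliyun mirror; `check_containerd_config` is a hypothetical helper name, and the patterns assume the layout produced by `containerd config default`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the two sed edits made to containerd's config.toml.
check_containerd_config() {
  local file=$1 rc=0
  # runc must use the systemd cgroup driver, matching the kubelet (kubeadm's default).
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$file" \
    || { echo "SystemdCgroup is not true in $file" >&2; rc=1; }
  # The pause (sandbox) image must come from the Aliyun mirror.
  grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"registry\.aliyuncs\.com/google_containers/' "$file" \
    || { echo "sandbox_image does not use registry.aliyuncs.com/google_containers" >&2; rc=1; }
  return "$rc"
}

# Usage:
#   check_containerd_config /etc/containerd/config.toml && systemctl enable containerd && systemctl start containerd
```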

systemctl enable containerd

systemctl start containerd

Install kubectl, kubelet, and kubeadm

Add the Alibaba Cloud Kubernetes repository

cat >/etc/yum.repos.d/kubernetes.repo <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

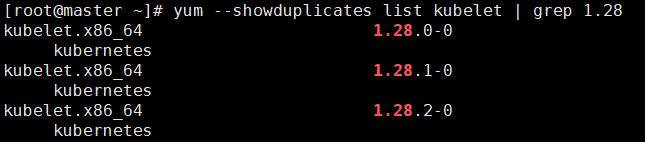

[root@master ~]# yum --showduplicates list kubelet | grep 1.28
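To pick the newest 1.28 patch release from that listing instead of reading it by eye, a sketch like the following could be used. `latest_kubelet_patch` is a hypothetical helper name, and it assumes the usual three-column `yum list` layout (`name.arch  version-release  repo`):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `yum --showduplicates list kubelet` output on
# stdin and print the highest version in the given minor series (e.g. 1.28).
latest_kubelet_patch() {
  local minor=$1
  # Keep kubelet rows whose version starts with "<minor>.", drop the "-release"
  # suffix, then let `sort -V` order versions numerically (1.28.10 after 1.28.9).
  awk -v m="$minor." '$1 ~ /^kubelet\./ && index($2, m) == 1 { split($2, v, "-"); print v[1] }' \
    | sort -uV | tail -n 1
}

# Usage:
#   ver=$(yum --showduplicates list kubelet | latest_kubelet_patch 1.28)
#   yum -y install kubectl-"$ver" kubelet-"$ver" kubeadm-"$ver"
```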

Install the latest version shown in the list (1.28.2 here)

yum -y install kubectl-1.28.2 kubelet-1.28.2 kubeadm-1.28.2

Initialize the Kubernetes cluster (on the master node only)

Bootstrap the cluster

# List the images the cluster needs

kubeadm config images list --kubernetes-version=v1.28.2

# kubeadm configuration file
cat > /tmp/kubeadm-init.yaml <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.28.2
controlPlaneEndpoint: 192.168.10.100:6443
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF

# Run kubeadm init with the configuration file

kubeadm init --config=/tmp/kubeadm-init.yaml --ignore-preflight-errors=all

Configure kubectl

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

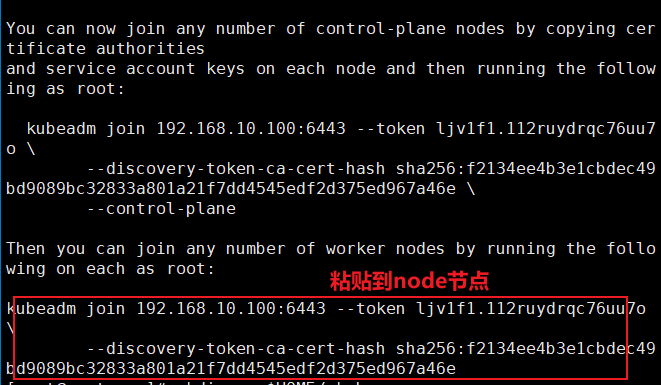

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker nodes (only this step is run on the worker nodes)
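The token and CA certificate hash in the `kubeadm join` command that follows are the values printed at the end of `kubeadm init` on the author's master, and they will differ on every cluster. Bootstrap tokens have the form `[a-z0-9]{6}.[a-z0-9]{16}` and expire after 24 hours by default; a fresh join command can be printed on the master with `kubeadm token create --print-join-command`. A small sanity check for a copied token and hash, as a sketch (`check_join_args` is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Hypothetical helper: sanity-check the --token and
# --discovery-token-ca-cert-hash values before running `kubeadm join`.
check_join_args() {
  local token=$1 hash=$2
  # Bootstrap token: 6 chars, a dot, 16 chars, all lowercase letters or digits.
  [[ $token =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]] || { echo "bad token: $token" >&2; return 1; }
  # CA hash: "sha256:" followed by 64 lowercase hex digits.
  [[ $hash =~ ^sha256:[0-9a-f]{64}$ ]] || { echo "bad hash: $hash" >&2; return 1; }
}

# Usage:
#   check_join_args "$TOKEN" "$HASH" && kubeadm join 192.168.10.100:6443 --token "$TOKEN" --discovery-token-ca-cert-hash "$HASH"
```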

kubeadm join 192.168.10.100:6443 --token ljv1f1.112ruydrqc76uu7o \
    --discovery-token-ca-cert-hash sha256:f2134ee4b3e1cbdec49bd9089bc32833a801a21f7dd4545edf2d375ed967a46e

Start kubelet (on all 3 nodes)

# Enable and start kubelet

systemctl enable kubelet

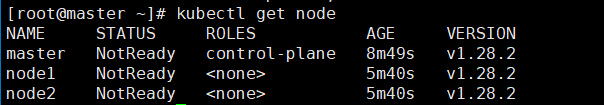

systemctl start kubelet

Check the nodes

kubectl get node

kubectl get pod -A
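Until a CNI plugin such as Calico is applied, nodes normally report `NotReady`. To list just the nodes that are not yet `Ready`, a sketch that filters `kubectl get node` output; `not_ready_nodes` is a hypothetical helper name, and it assumes the default column order (`NAME STATUS ROLES AGE VERSION`):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node` output on stdin and print
# "NAME STATUS" for every node whose status is not Ready.
not_ready_nodes() {
  # STATUS is column 2; it may carry extra flags such as "Ready,SchedulingDisabled".
  awk 'NR > 1 { split($2, s, ","); if (s[1] != "Ready") print $1 " " $2 }'
}

# Usage:
#   kubectl get node | not_ready_nodes
```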

Import the required files

Prepare the following files:

calico-cni-v3.24.5.tar

calico-controllers-v3.24.5.tar

calico-node-v3.24.5.tar

calico.yaml

nerdctl-1.7.0-linux-amd64.tar.gz

Extract nerdctl-1.7.0-linux-amd64.tar.gz into /usr/local/bin:

tar -C /usr/local/bin -xzf nerdctl-1.7.0-linux-amd64.tar.gz

Once the files are downloaded, use nerdctl to import the Calico images

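Here the Calico images are loaded on the master node only; loading them on the worker nodes as well does no harm. Because Calico pods also run on the worker nodes (calico-node is a DaemonSet), a node that cannot pull the images itself needs them loaded locally; otherwise its pods end up in ImagePullBackOff, as happens with calico-kube-controllers on node2 below.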
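Since each node's containerd needs the images locally when they are not pulled from a registry, a quick per-node check helps catch a missing image before a pod lands there. A minimal sketch that reads `ctr -n k8s.io images list -q` output on stdin and prints every required reference that is absent; `missing_images` is a hypothetical helper name, and the `docker.io/calico/cni` and `docker.io/calico/node` references are assumed from the tarball names (only `docker.io/calico/kube-controllers:v3.24.5` appears in the load output in this guide):

```shell
#!/usr/bin/env bash
# Hypothetical helper: stdin = image references known to containerd
# (one per line, e.g. from `ctr -n k8s.io images list -q`);
# arguments = required references. Prints each required one that is absent.
missing_images() {
  local have ref
  have=$(cat)
  for ref in "$@"; do
    grep -qxF "$ref" <<< "$have" || echo "$ref"
  done
}

# Usage on each node (image references partly assumed, see above):
#   ctr -n k8s.io images list -q | missing_images \
#     docker.io/calico/cni:v3.24.5 \
#     docker.io/calico/node:v3.24.5 \
#     docker.io/calico/kube-controllers:v3.24.5
```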

[root@master opt]# nerdctl -n k8s.io load -i calico-cni-v3.24.5.tar

[root@master opt]# nerdctl -n k8s.io load -i calico-node-v3.24.5.tar

Apply the YAML file

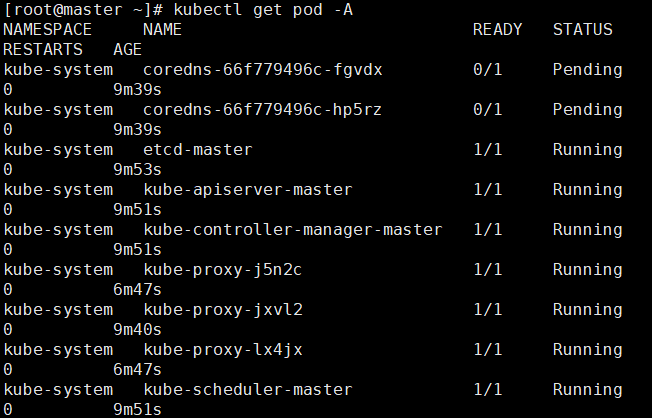

[root@master opt]# kubectl apply -f calico.yaml

Check the nodes and pods

[root@master opt]# kubectl get nodes

[root@master opt]# kubectl get pod -A

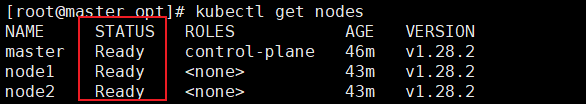

If this happens, inspect the failing pod in its namespace:

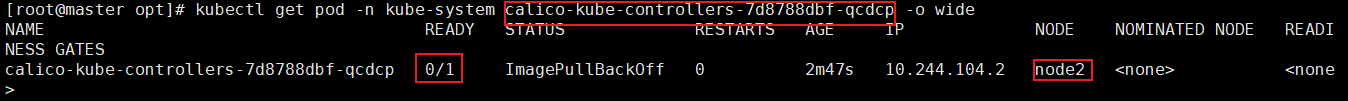

[root@master opt]# kubectl get pod -n kube-system calico-kube-controllers-7d8788dbf-qcdcp -o wide

NAME                                      READY   STATUS             RESTARTS   AGE     IP             NODE    NOMINATED NODE   READINESS GATES
calico-kube-controllers-7d8788dbf-qcdcp   0/1     ImagePullBackOff   0          2m47s   10.244.104.2   node2   <none>           <none>
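On a busy cluster the full `kubectl get pod -A` listing is long. A minimal sketch that prints only pods that are neither `Running` nor `Completed`, or that are `Running` with an incomplete READY count, so a pod like the one above stands out; `unhealthy_pods` is a hypothetical helper name, and it assumes the default `-A` column order (`NAMESPACE NAME READY STATUS ...`):

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod -A` output on stdin and print
# "namespace/name READY STATUS" for pods that look unhealthy.
unhealthy_pods() {
  awk 'NR > 1 {
    split($3, r, "/")   # READY is "ready/total"
    if (($4 != "Running" && $4 != "Completed") || ($4 == "Running" && r[1] != r[2]))
      print $1 "/" $2 " " $3 " " $4
  }'
}

# Usage:
#   kubectl get pod -A | unhealthy_pods
```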

Load the missing image on node2, where the pod was scheduled:

[root@node2 opt]# nerdctl -n k8s.io load -i calico-controllers-v3.24.5.tar

unpacking docker.io/calico/kube-controllers:v3.24.5 (sha256:2b6acd7f677f76ffe12ecf3ea7df92eb9b1bdb07336d1ac2a54c7631fb753f7e)...

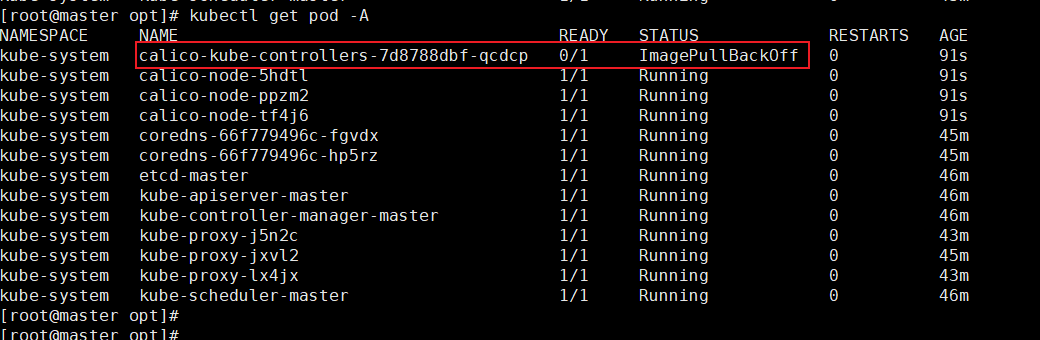

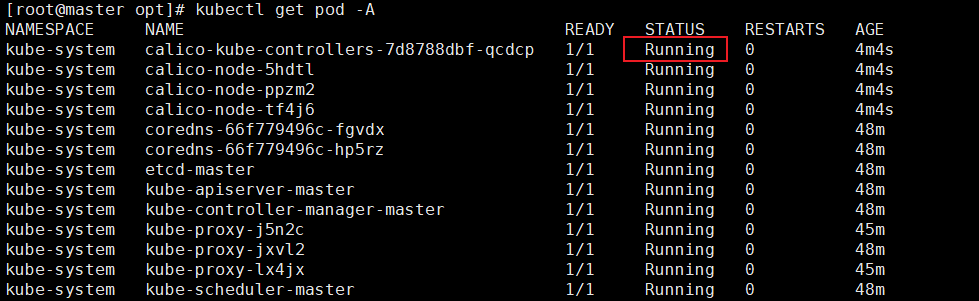

Loaded image: calico/kube-controllers:v3.24.5

Check the pods again

[root@master opt]# kubectl get pod -A

Install kubernetes-dashboard

These three can be installed together here, which reduces the chance of errors:

dashboard-v2.7.0.tar

metrics-scraper-v1.0.8.tar

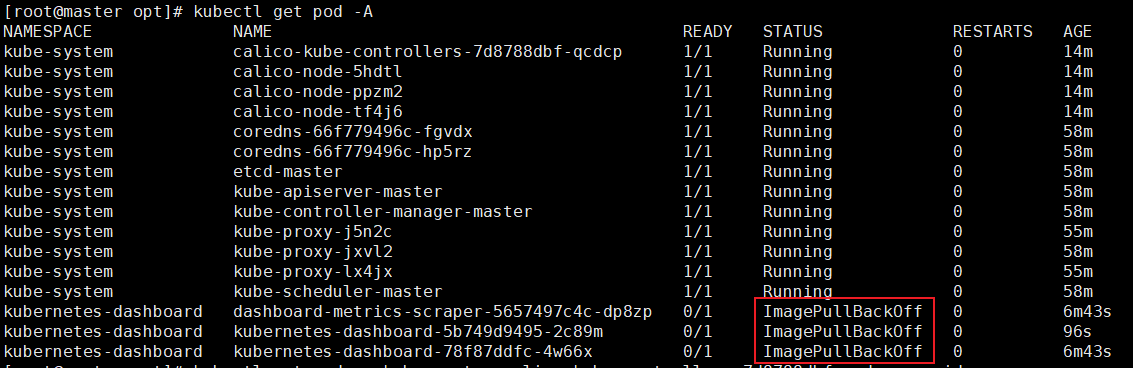

[root@master opt]# kubectl get pod,svc -n kubernetes-dashboardrecommended.yaml

[root@master opt]# nerdctl -n k8s.io load -i dashboard-v2.7.0.tar

[root@master opt]# nerdctl -n k8s.io load -i metrics-scraper-v1.0.8.tar 仅仅在master里面启动ymal文件

[root@master opt]# kubectl apply -f recommended.yaml报错的解决办法

These four dashboard pods did not start:

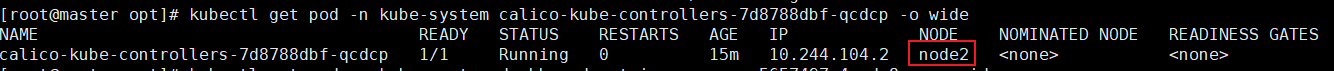

[root@master opt]# kubectl get pod -n kube-system calico-kube-controllers-7d8788dbf-qcdcp -o wide

This shows that the pod runs on node2 but not on node1.

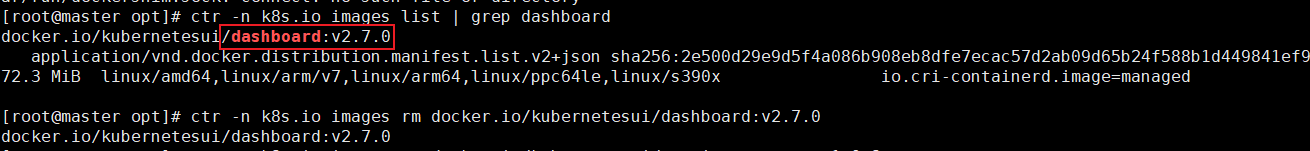

ctr -n k8s.io images list | grep dashboard

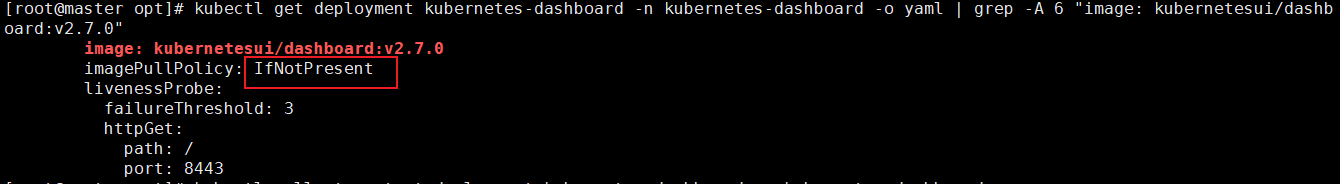


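Delete the specific image on node1:

[root@node1 opt]# ctr -n k8s.io images rm docker.io/kubernetesui/dashboard:v2.7.0

docker.io/kubernetesui/dashboard:v2.7.0

Restart the deployment so it is reloaded:

kubectl rollout restart deployment kubernetes-dashboard -n kubernetes-dashboard

Check which image the deployment uses: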

[root@master opt]# kubectl get deployment kubernetes-dashboard -n kubernetes-dashboard -o yaml | grep -A 6 "image: kubernetesui/dashboard:v2.7.0"
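`grep -A 6` on the full YAML works but depends on the surrounding layout. A sketch that extracts every `image:` value from `kubectl get deployment ... -o yaml` output; `deployment_images` is a hypothetical helper name. kubectl can also print the same field directly with `-o jsonpath='{.spec.template.spec.containers[*].image}'`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read deployment YAML on stdin and print each container image.
deployment_images() {
  # Matches "image: X" and the list-item form "- image: X"; imagePullPolicy is
  # skipped because its key is "imagePullPolicy:", not "image:".
  awk '$1 == "image:" { print $2 } $1 == "-" && $2 == "image:" { print $3 }'
}

# Usage:
#   kubectl get deployment kubernetes-dashboard -n kubernetes-dashboard -o yaml | deployment_images
```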