cpp

#GPU训练

import torch

from torch import nn

import torchvision

from torch.utils.tensorboard import SummaryWriter

from torch.utils.data import DataLoader

#1.准备训练数据 测试数据

train_data = torchvision.datasets.CIFAR10(

root="./",

train=True,

transform=torchvision.transforms.ToTensor(),

download=True

)

test_data = torchvision.datasets.CIFAR10(

root="./",

train=False,

transform=torchvision.transforms.ToTensor(),

download=True

)

train_size = len(train_data)

test_size = len(test_data)

#加载数据集

train_dataloader = DataLoader(train_data, batch_size=64)

test_dataloader = DataLoader(test_data, batch_size=64)

#2.创建神经网络

class Tudui(nn.Module):

def __init__(self):

super(Tudui, self).__init__()

self.model = nn.Sequential(

nn.Conv2d(3, 32, 5, 1, 2),

nn.MaxPool2d(2),

nn.Conv2d(32, 32, 5, 1, 2),

nn.MaxPool2d(2),

nn.Conv2d(32, 64, 5, 1, 2),

nn.MaxPool2d(2),

nn.Flatten(),

nn.Linear(64*4*4, 64),

nn.Linear(64, 10)

)

def forward(self, x):

x = self.model(x)

return x

#3.实例化模型

tudui = Tudui()

if torch.cuda.is_available():

tudui = tudui.cuda()

print("GPU训练")

else:

print("CPU训练")

#4.损失函数与优化器

loss_fn = nn.CrossEntropyLoss()

if torch.cuda.is_available():

loss_fn = loss_fn.cuda()

learning_rate = 1e-2

optimizer = torch.optim.SGD(tudui.parameters(), lr= learning_rate)

#5.训练与测试

epoch = 10

for i in range(epoch):

acy = 0

total_loss = 0

tudui.train()

for data in train_dataloader:

imgs, targets = data

if torch.cuda.is_available():

imgs, targets = imgs.cuda(), targets.cuda()

outputs = tudui(imgs)

acy_t = (outputs.argmax(1) == targets).sum()

acy += acy_t

loss = loss_fn(outputs, targets)

optimizer.zero_grad()

loss.backward()

optimizer.step()

total_loss+=loss.item()

print("训练epoch:{}, loss:{} acy:{}".format(i+1, total_loss, acy/train_size))

#6.测试

accuracy = 0

tudui.eval()

with torch.no_grad():

for data in test_dataloader:

imgs, targets = data

if torch.cuda.is_available():

imgs, targets = imgs.cuda(), targets.cuda()

outputs = tudui(imgs)

accuracy_t = (outputs.argmax(1) == targets).sum()

accuracy += accuracy_t

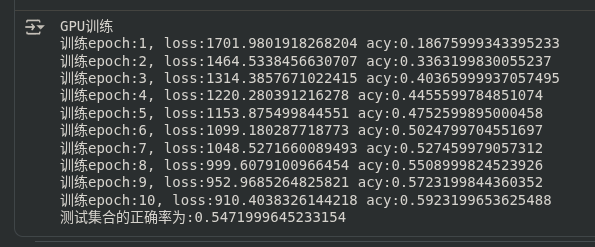

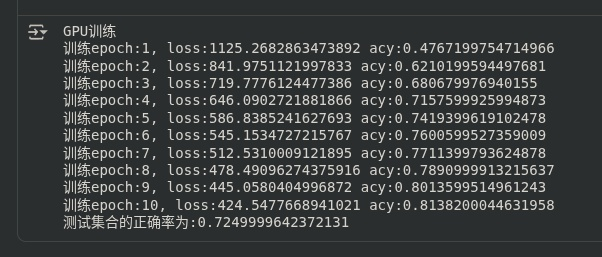

print("测试集合的正确率为:{}".format(accuracy/test_size))以上代码,训练结果如下:

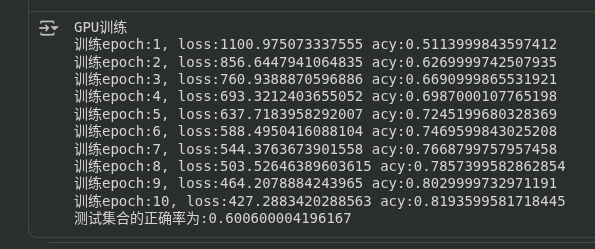

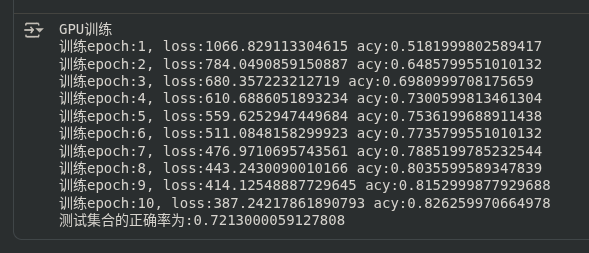
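The input size of `nn.Linear(64*4*4, 64)` comes from the following arithmetic. Each `Conv2d(..., 5, 1, 2)` keeps the spatial size, because padding 2 on each side offsets the 5×5 kernel. Each `MaxPool2d(2)` halves it. A 32×32 CIFAR-10 image therefore shrinks to 16, then 8, and finally 4 pixels per side, with 64 channels.

The plain-Python sketch below walks through that calculation using the usual output-size formula (dilation 1, no ceil mode). `conv_out` is a hypothetical helper, not a PyTorch function.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output height/width of a Conv2d or MaxPool2d layer (dilation 1, floor rounding).
    Hypothetical helper for illustration only."""
    return (size + 2 * padding - kernel) // stride + 1

size, channels = 32, 3  # CIFAR-10 images are 3x32x32
for out_channels in (32, 32, 64):
    size = conv_out(size, kernel=5, stride=1, padding=2)  # Conv2d(_, _, 5, 1, 2) keeps the size
    size = conv_out(size, kernel=2, stride=2)             # MaxPool2d(2): stride defaults to kernel_size
    channels = out_channels
    print(channels, size, size)

flat = channels * size * size
print(flat)  # 1024, i.e. 64*4*4
```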

After adding normalization (BatchNorm):
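During training, `nn.BatchNorm2d` normalizes each channel with the mean and variance of the current batch. It then applies a learnable scale and shift, initialized to 1 and 0. It also keeps a running average of those statistics, and in eval mode it normalizes with the running values instead. This is one reason the loop calls `tudui.train()` and `tudui.eval()`.

Below is a simplified single-channel sketch in plain Python. It assumes PyTorch's defaults of `eps=1e-5` and `momentum=0.1`. The helper names are made up for illustration, and this is not PyTorch's implementation.

```python
import math

def batch_norm_train(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Training mode: normalize one channel with the batch's own statistics (illustrative helper)."""
    n = len(values)
    mean = sum(values) / n
    # biased (population) variance is used for the normalization itself
    var = sum((v - mean) ** 2 for v in values) / n
    out = [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]
    return out, mean, var

def update_running_mean(running_mean, batch_mean, momentum=0.1):
    """Exponential moving average that eval mode relies on (illustrative helper)."""
    return (1 - momentum) * running_mean + momentum * batch_mean

def batch_norm_eval(values, running_mean, running_var, gamma=1.0, beta=0.0, eps=1e-5):
    """Eval mode: use the stored running statistics instead of the batch's (illustrative helper)."""
    return [gamma * (v - running_mean) / math.sqrt(running_var + eps) + beta for v in values]

channel = [2.0, 4.0, 6.0, 8.0]
out, mean, var = batch_norm_train(channel)
print(mean, var)                    # 5.0 5.0
print([round(x, 3) for x in out])   # [-1.342, -0.447, 0.447, 1.342]
running = update_running_mean(0.0, mean)
print(running)                      # 0.5
```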

After adding activation functions (ReLU):

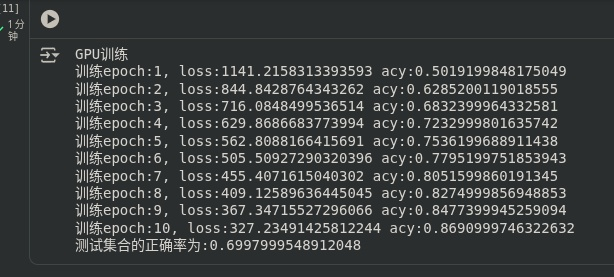

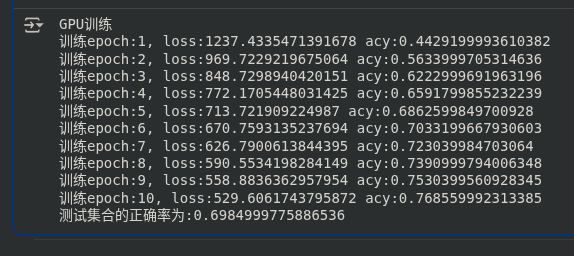

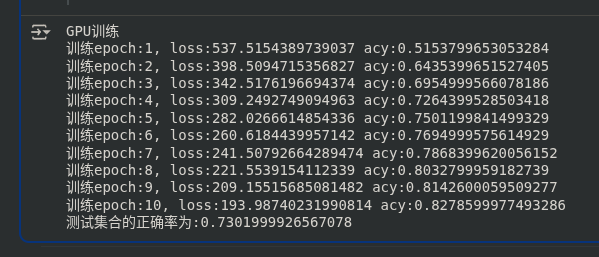
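In the first model, `nn.Linear(64*4*4, 64)` is followed directly by `nn.Linear(64, 10)`. Two affine layers with nothing between them collapse into a single one, with W = W2·W1 and b = W2·b1 + b2, so the extra layer adds no expressive power.

The plain-Python check below confirms that identity on toy 2×2 weights, chosen for illustration and not taken from the model. It then runs the same layers with a ReLU in between, where the identity no longer holds.

```python
def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrices A (n x k) and B (k x m)."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def vadd(a, b):
    return [x + y for x, y in zip(a, b)]

def relu(v):
    return [max(0.0, x) for x in v]

# Toy weights for two stacked linear layers (illustrative values only)
W1, b1 = [[1.0, -1.0], [2.0, 0.0]], [0.0, -1.0]
W2, b2 = [[1.0, 1.0]], [0.5]

def two_layers(x, activation=None):
    """y = W2 * act(W1 x + b1) + b2; with no activation this is purely affine."""
    h = vadd(matvec(W1, x), b1)
    if activation is not None:
        h = activation(h)
    return vadd(matvec(W2, h), b2)

# The equivalent single layer when there is no activation
W = matmul(W2, W1)
b = vadd(matvec(W2, b1), b2)

x = [1.0, 2.0]
print(two_layers(x))            # [0.5]
print(vadd(matvec(W, x), b))    # [0.5], identical: the two layers collapse
print(two_layers(x, relu))      # [1.5], the ReLU breaks the collapse
```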

Results with `Dropout()` at various drop rates:

| Dropout rate | Train loss at epoch 10 (sum over batches) | Train accuracy | Test accuracy |
|---|---|---|---|
| 0.1 | 391.02 | 0.8290 | 0.7028 |
| 0.2 | 439.34 | 0.8060 | 0.6719 |
| 0.3 | 478.89 | 0.7884 | 0.6530 |
| 0.4 | 537.80 | 0.7645 | 0.7123 |
| 0.5 | 594.50 | 0.7396 | 0.6776 |
| 0.8 | 904.86 | 0.5777 | 0.5386 |

The following experiments also keep the dropout rate at 0.4:

Switching the optimizer from `SGD()` to `Adam()`:
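SGD steps by `lr * gradient`. Adam instead divides a running average of the gradient by the square root of a running average of its square, with bias correction for the first steps. On the very first step this makes the move roughly `lr` in size regardless of the gradient's scale, so learning rates do not transfer directly between the two optimizers. PyTorch's default for `torch.optim.Adam` is `lr=1e-3`.

Below is a scalar sketch of the standard Adam update with PyTorch's default betas and eps. It ignores weight decay and AMSGrad. `ScalarAdam` and `sgd_step` are hypothetical names.

```python
import math

def sgd_step(p, grad, lr):
    """Plain SGD (hypothetical helper): the step is proportional to the gradient."""
    return p - lr * grad

class ScalarAdam:
    """Adam for a single scalar parameter (illustrative, not torch.optim.Adam itself).
    Defaults mirror PyTorch's: lr=1e-3, betas=(0.9, 0.999), eps=1e-8."""

    def __init__(self, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = 0.0  # running mean of gradients (first moment)
        self.v = 0.0  # running mean of squared gradients (second moment)
        self.t = 0    # step counter used for bias correction

    def step(self, p, grad):
        self.t += 1
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad * grad
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        return p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps)

# One step from p = 0 with a large and a small gradient
for grad in (100.0, 0.01):
    print("grad", grad,
          "| SGD lr=1e-2:", sgd_step(0.0, grad, 1e-2),      # scales with the gradient
          "| Adam lr=1e-3:", ScalarAdam().step(0.0, grad))  # about -0.001 either way
```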

Changing the learning rate from 0.01 to 0.001 made little difference.

Increasing `batch_size` from 64 to 128.
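Changing `batch_size` changes the number of iterations per epoch. Because the training loop adds `loss.item()` once per batch, the printed epoch loss is a sum that shrinks with the batch count even if the per-batch loss stays the same. Summed losses from runs with different batch sizes are therefore not directly comparable.

The sketch below shows the arithmetic. It assumes the standard CIFAR-10 split (50,000 training and 10,000 test images) and the `DataLoader` default `drop_last=False`. `num_batches` is a hypothetical helper, and the last lines assume the dropout sweep ran with `batch_size=64`, as in the first listing.

```python
import math

def num_batches(num_samples, batch_size, drop_last=False):
    """Iterations per epoch for a DataLoader-style batcher (hypothetical helper)."""
    if drop_last:
        return num_samples // batch_size
    return math.ceil(num_samples / batch_size)  # the last, smaller batch is kept

TRAIN, TEST = 50_000, 10_000  # CIFAR-10 split sizes
for bs in (64, 128):
    print(bs, num_batches(TRAIN, bs), num_batches(TEST, bs))
# 64 782 157
# 128 391 79

# The logged loss is a sum of per-batch mean losses, so divide by the batch count.
# Assumption: the dropout-0.1 run used batch_size=64.
summed_loss = 391.0225857049227
print(summed_loss / num_batches(TRAIN, 64))  # roughly 0.50 per batch
```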

The code with all of the above changes applied:

```python
# GPU training
import torch
import torchvision
from torch import nn
from torch.utils.data import DataLoader

# 1. Prepare the training and test data
train_data = torchvision.datasets.CIFAR10(
    root="./",
    train=True,
    transform=torchvision.transforms.ToTensor(),
    download=True
)
test_data = torchvision.datasets.CIFAR10(
    root="./",
    train=False,
    transform=torchvision.transforms.ToTensor(),
    download=True
)
train_size = len(train_data)
test_size = len(test_data)

# Load the datasets (the training set is reshuffled every epoch)
train_dataloader = DataLoader(train_data, batch_size=128, shuffle=True)
test_dataloader = DataLoader(test_data, batch_size=128, shuffle=False)

# 2. Build the network: Conv -> BatchNorm -> ReLU -> MaxPool blocks, Dropout before the classifier
class Tudui(nn.Module):
    def __init__(self):
        super(Tudui, self).__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, 1, 2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, 1, 2),
            nn.BatchNorm2d(32),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, 5, 1, 2),
            nn.BatchNorm2d(64),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Flatten(),
            nn.Linear(64*4*4, 64),
            nn.BatchNorm1d(64),
            nn.ReLU(),
            nn.Dropout(0.4),
            nn.Linear(64, 10)
        )

    def forward(self, x):
        x = self.model(x)
        return x

# 3. Instantiate the model
tudui = Tudui()
if torch.cuda.is_available():
    tudui = tudui.cuda()
    print("GPU training")
else:
    print("CPU training")

# 4. Loss function and optimizer
loss_fn = nn.CrossEntropyLoss()
if torch.cuda.is_available():
    loss_fn = loss_fn.cuda()
learning_rate = 1e-3
optimizer = torch.optim.Adam(tudui.parameters(), lr=learning_rate)

# 5. Training and testing
epoch = 10
for i in range(epoch):
    acy = 0
    total_loss = 0
    tudui.train()  # BatchNorm uses batch statistics, Dropout is active
    for data in train_dataloader:
        imgs, targets = data
        if torch.cuda.is_available():
            imgs, targets = imgs.cuda(), targets.cuda()
        outputs = tudui(imgs)
        acy_t = (outputs.argmax(1) == targets).sum().item()
        acy += acy_t
        loss = loss_fn(outputs, targets)
        print("Batch loss:{}".format(loss.item()))  # per-batch log (verbose)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_loss += loss.item()
    print("Train epoch:{}, loss:{} acy:{}".format(i+1, total_loss, acy/train_size))

    # 6. Test
    accuracy = 0
    tudui.eval()  # BatchNorm uses running statistics, Dropout is disabled
    with torch.no_grad():
        for data in test_dataloader:
            imgs, targets = data
            if torch.cuda.is_available():
                imgs, targets = imgs.cuda(), targets.cuda()
            outputs = tudui(imgs)
            accuracy_t = (outputs.argmax(1) == targets).sum().item()
            accuracy += accuracy_t
    print("Test set accuracy:{}".format(accuracy/test_size))
```
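The final model places `nn.Dropout(0.4)` after the hidden linear layer. PyTorch implements inverted dropout. In training mode, each element is zeroed with probability p and the survivors are scaled by 1/(1-p), so the expected activation is unchanged. In eval mode, the layer passes its input through unchanged. The training accuracy in this loop is measured with dropout active, so it falls as p grows, which matches the trend in the dropout sweep.

Below is a plain-Python sketch of that behavior. The `dropout` function here is illustrative, not PyTorch's code.

```python
import random

def dropout(values, p, training, rng=random):
    """Inverted dropout (illustrative): in training, zero each value with probability p
    and scale survivors by 1/(1-p); in eval mode return the input unchanged."""
    if not training or p == 0.0:
        return list(values)
    if p == 1.0:
        return [0.0] * len(values)  # everything is dropped
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]

rng = random.Random(0)  # seeded for reproducibility
x = [1.0] * 100_000
train_out = dropout(x, p=0.4, training=True, rng=rng)
eval_out = dropout(x, p=0.4, training=False)

print(sum(v == 0.0 for v in train_out) / len(x))  # fraction dropped, close to 0.4
print(sum(train_out) / len(x))                    # mean stays close to 1.0
print(eval_out == x)                              # True
```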