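Author: Jonathan Buttner, Elastic

Learn how to use the Elasticsearch open inference API with Google's Gemini models for content generation, question answering, and summarization.

Elasticsearch has native integrations with industry-leading generative AI tools and providers. Check out our webinars on going beyond RAG basics, or on building production-ready applications with the Elastic vector database.

To build the best search solution for your use case, start a free cloud trial or try Elastic on your local machine now.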

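We're excited to announce the latest extension to the Elasticsearch open inference API: integration with Google's Gemini models through Google Cloud's Vertex AI platform!

The Elasticsearch open inference chat completion API provides a standardized, feature-rich, and familiar way to access large language models. With it, Elasticsearch developers can use large language models such as Gemini 2.5 Pro to build generative AI applications for use cases including question answering, summarization, and text generation.

They can now use their own Google Cloud account to run chat completion tasks, store summaries, use Google's Gemini models in ES|QL or directly through the inference API, and take advantage of Elasticsearch's comprehensive AI search tooling and proven vector database capabilities.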

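Using Google's Gemini models to answer questions and generate tool calls

In this blog, we'll demonstrate how to use Google's Gemini models with ES|QL to answer questions about data stored in Elasticsearch. Before interacting with Elasticsearch, make sure you have a Google Cloud Vertex AI account and the necessary keys. We'll use them when configuring the inference endpoint.

Creating a Vertex AI service account and retrieving the key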

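- Click the "Create service account" button.
  - Enter a name that works for you.
  - Click "Create and continue".
  - Grant it the Vertex AI User role.
  - Click "Add another role", then grant the "Service account token creator" role. This role allows the service account to generate the access tokens it needs.
  - Click "Done".
- Go to console.cloud.google.com/iam-admin/s... and click the service account you just created.
- Open the "keys" tab and click "Add key -> Create new key -> JSON -> Create".
  - If you receive the error message "Service account key creation is disabled", an administrator needs to modify the organization policy iam.disableServiceAccountKeyCreation or grant an exception.
- The service account key should download automatically.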

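Now that the service account key has been downloaded, it needs to be formatted before we can use it to create an inference endpoint. The following sed command escapes the quotes and removes the newlines.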
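Before running it, it can help to see the same transformation spelled out. The following is a minimal Python sketch, not part of the original walkthrough; the function name `escape_service_account_json` is hypothetical. Unlike the sed approach, it re-serializes the key file and also escapes backslashes, so the string decodes back to the original JSON exactly.

```python
import json


def escape_service_account_json(raw_text: str) -> str:
    """Return the key file as a single-line string that is safe to paste
    between double quotes in a JSON request body."""
    # Parse and re-serialize to drop newlines and indentation.
    compact = json.dumps(json.loads(raw_text), separators=(",", ":"))
    # Encode the compact JSON as a JSON string, then strip the outer quotes.
    return json.dumps(compact)[1:-1]


if __name__ == "__main__":
    # Stand-in for the contents of a downloaded key file (values are fake).
    sample = '{\n  "type": "service_account",\n  "project_id": "demo"\n}'
    print(escape_service_account_json(sample))
```

The output can be pasted as the value of `service_account_json` in the endpoint configuration.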

```bash
sed 's/"/\\"/g' <path to your service account file> | tr -d "\n"
```

Setting up an inference endpoint and interacting with Google's Gemini models

We'll use the Kibana Console to run the following steps in Elasticsearch, without needing to set up an IDE.

First, we configure an inference endpoint for interacting with Gemini:

```
PUT _inference/chat_completion/gemini_chat_completion
{
  "service": "googlevertexai",
  "service_settings": {
    "service_account_json": "<output string from the sed command>",
    "model_id": "gemini-2.5-pro",
    "location": "us-central1",
    "project_id": "<project id>"
  }
}
```

After the inference endpoint is created successfully, we receive a response similar to the following, with a 200 OK status code:

```json
{
  "inference_id": "gemini_chat_completion",
  "task_type": "chat_completion",
  "service": "googlevertexai",
  "service_settings": {
    "project_id": "<project id>",
    "location": "us-central1",
    "model_id": "gemini-2.5-pro",
    "rate_limit": {
      "requests_per_minute": 1000
    }
  }
}
```

Now we can call the configured endpoint to perform chat completion on any text input and receive the response in real time. Let's ask Gemini for a brief description of ES|QL:

```
POST _inference/chat_completion/gemini_chat_completion/_stream
{
  "messages": [
    {
      "role": "user",
      "content": "What is a short one line description of Elastic ES|QL?"
    }
  ]
}
```

We should receive a streaming response with a 200 OK status code that contains a short description of ES|QL:

```
event: message
data: {"id":"VvZ3aOSwMpu3nvgPoLfSgQU","choices":[{"delta":{"content":"ES|QL is a powerful, piped query language used","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"VvZ3aOSwMpu3nvgPoLfSgQU","choices":[{"delta":{"content":" to search, transform, and aggregate data in Elasticsearch in a single, sequential query.","role":"model"},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":28,"prompt_tokens":13,"total_tokens":1469}}

event: message
data: [DONE]
```
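The answer arrives split across several `data:` chunks, so a client has to stitch the `delta.content` fragments back together. The following is a minimal Python sketch of that step, assuming the whole stream has already been read into a string; the function name `collect_content` is hypothetical and no HTTP client is shown.

```python
import json


def collect_content(stream_text: str) -> str:
    """Concatenate the delta.content fragments of a streamed chat completion."""
    parts = []
    for line in stream_text.splitlines():
        if not line.startswith("data:"):
            continue  # skip "event:" lines and blank separators
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            content = choice.get("delta", {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)
```

Applied to the stream above, this would yield the one-line ES|QL description as a single string.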

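The inference chat completion API supports tool calling, which gives us a reliable way to interact with Google's Gemini models hosted on the Vertex AI platform and obtain information about our data.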

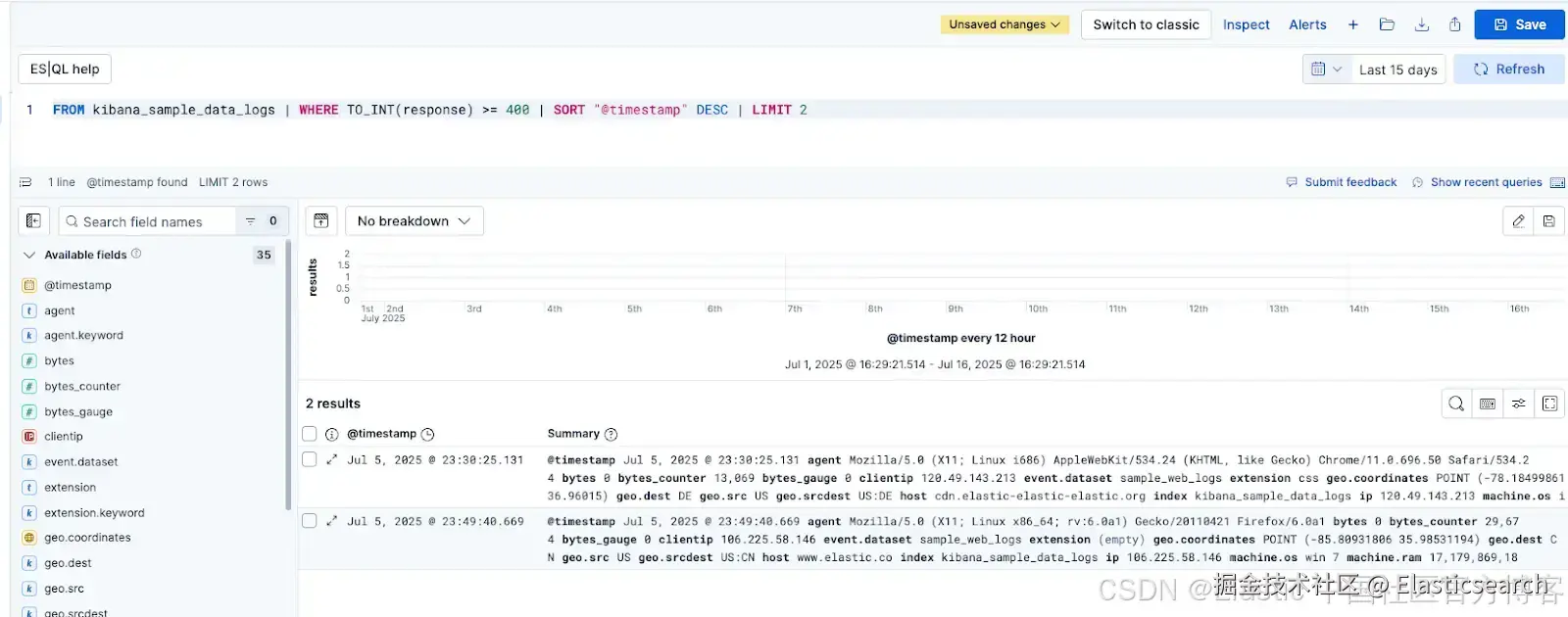

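We'll ask Gemini to write an ES|QL query that retrieves documents with a failure status code in the response field, and to summarize the results. Because the response field may not be mapped as an integer type, we need Gemini to retrieve the mapping and adjust the query accordingly.

We'll assume that a tool Gemini can interact with has already been implemented. For the demonstration, we'll execute the tool calls ourselves in the Dev Console.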
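To make the assumed tool a little more concrete, here is a minimal Python sketch of how an application might route a tool call to a handler. Everything in it is hypothetical: the two handlers are stubs that return canned data rather than talking to Elasticsearch, and `dispatch_tool_call` is an illustrative name, not part of any Elastic API.

```python
import json


def retrieve_index_mapping(index_name: str) -> str:
    # Hypothetical stub: a real tool would call GET <index_name>/_mapping.
    return json.dumps({index_name: {"mappings": {"properties": {}}}})


def execute_esql_query(query: str) -> str:
    # Hypothetical stub: a real tool would send the query to Elasticsearch.
    return json.dumps({"query": query, "rows": []})


# Tool names match the "name" fields declared in the request's "tools" array.
TOOLS = {
    "retrieve_index_mapping": retrieve_index_mapping,
    "execute_esql_query": execute_esql_query,
}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the named tool with the JSON-encoded arguments the model supplied."""
    handler = TOOLS.get(name)
    if handler is None:
        return f"Unknown tool: {name}"
    return handler(**json.loads(arguments_json))
```

The string returned by `dispatch_tool_call` is what would be sent back to the model as the content of a `tool` message.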

Initial request:

```
POST _inference/chat_completion/gemini_chat_completion/_stream
{
  "messages": [
    {
      "role": "user",
      "content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "retrieve_index_mapping",
        "description": "Retrieves the mapping for an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "index_name": {
              "type": "string",
              "description": "The name of the index to retrieve the mapping for."
            }
          },
          "required": [
            "index_name"
          ]
        }
      }
    },
    {
      "type": "function",
      "function": {
        "name": "execute_esql_query",
        "description": "Executes an ES|QL query against an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "query": {
              "type": "string",
              "description": "The ES|QL query to execute."
            }
          },
          "required": [
            "query"
          ]
        }
      }
    }
  ],
  "tool_choice": "auto",
  "temperature": 0.7
}
```

Gemini responds with a tool call to retrieve the mapping of the kibana_sample_data_logs index:

```
event: message
data: {"id":"agZ4aP6OGZapnvgPtbCBkQU","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"retrieve_index_mapping","function":{"arguments":"{\"index_name\":\"kibana_sample_data_logs\"}","name":"retrieve_index_mapping"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":16,"prompt_tokens":182,"total_tokens":663}}

event: message
data: [DONE]
```
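A client would need to pull the requested function name and arguments out of a chunk like this one. The following is a minimal Python sketch, assuming (as in the response above) that each tool call's arguments arrive whole in a single chunk; `extract_tool_calls` is a hypothetical helper name.

```python
import json


def extract_tool_calls(stream_text: str) -> list:
    """Return (name, arguments) pairs for every tool call in the stream."""
    calls = []
    for line in stream_text.splitlines():
        if not line.startswith("data:"):
            continue  # skip "event:" lines and blank separators
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break
        for choice in json.loads(payload).get("choices", []):
            for call in choice.get("delta", {}).get("tool_calls", []):
                function = call["function"]
                # "arguments" is itself a JSON-encoded string, so decode it.
                calls.append((function["name"], json.loads(function["arguments"])))
    return calls
```

Note that `arguments` is decoded twice in effect: once as part of the chunk, and once more because the model returns it as a JSON string.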

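To simulate the tool call, we'll retrieve the mapping with the following command:

```
GET kibana_sample_data_logs/_mapping
```

To complete the simulated tool call, we'll include that response in our next request to Gemini.

Request including the mapping result: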

```
POST _inference/chat_completion/gemini_chat_completion/_stream
{
  "messages": [
    {
      "role": "user",
      "content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "retrieve_index_mapping",
            "arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"
          },
          "id": "1",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",
      "tool_call_id": "1"
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "retrieve_index_mapping",
        "description": "Retrieves the mapping for an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "index_name": {
              "type": "string",
              "description": "The name of the index to retrieve the mapping for."
            }
          },
          "required": [
            "index_name"
          ]
        }
      }
    },
    {
      "type": "function",
      "function": {
        "name": "execute_esql_query",
        "description": "Executes an ES|QL query against an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "query": {
              "type": "string",
              "description": "The ES|QL query to execute."
            }
          },
          "required": [
            "query"
          ]
        }
      }
    }
  ],
  "tool_choice": "auto",
  "temperature": 0.7
}
```
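The request above follows a pattern: the original user message is resent, followed by an `assistant` message that repeats the tool call and a `tool` message that carries the result under the same ID. A minimal Python sketch of building that history might look like this; `append_tool_exchange` is a hypothetical helper, and the field names simply mirror the request shown above.

```python
import json


def append_tool_exchange(messages, call_id, name, arguments, result):
    """Return a new message list with one tool call and its result appended."""
    assistant_message = {
        "role": "assistant",
        "content": "",
        "tool_calls": [
            {
                "function": {
                    "name": name,
                    # The API expects the arguments as a JSON-encoded string.
                    "arguments": json.dumps(arguments, separators=(",", ":")),
                },
                "id": call_id,
                "type": "function",
            }
        ],
    }
    # The tool message is tied to the call through the shared ID.
    tool_message = {"role": "tool", "content": result, "tool_call_id": call_id}
    return messages + [assistant_message, tool_message]
```

Returning a new list rather than mutating the input keeps each round of the conversation easy to inspect or replay.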

Now that Gemini has the mapping, it responds with a tool call to execute a query that retrieves the two most recent web logs:

```
event: message
data: {"id":"3AZ4aMfXLJapnvgPtbCBkQU","choices":[{"delta":{"content":"\n","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"3AZ4aMfXLJapnvgPtbCBkQU","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"execute_esql_query","function":{"arguments":"{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 ORDER BY \\\"@timestamp\\\" DESC LIMIT 2\"}","name":"execute_esql_query"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":38,"prompt_tokens":665,"total_tokens":1652}}

event: message
data: [DONE]
```

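If you look closely, you'll notice that the pipe characters before the different clauses are missing. Our hypothetical tool will mimic Kibana and return an error, so we can see how Gemini handles the problem. Kibana's ES|QL query console returns the following error:

```
[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}
```

Let's include it in the response for execute_esql_query.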
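Passing the error text back as the tool result, instead of aborting, is what gives the model a chance to correct itself. A minimal Python sketch of that idea follows; `run_tool` and the `Error:` prefix are illustrative choices, not something the inference API prescribes.

```python
def run_tool(handler, **kwargs) -> str:
    """Call a tool handler and turn any failure into text for the model."""
    try:
        return handler(**kwargs)
    except Exception as error:
        # The message becomes the "content" of the tool response,
        # so the model can read it and retry with a fixed query.
        return f"Error: {error}"
```

In a real application you would likely catch narrower exception types and avoid leaking internal details into the prompt.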

Request including the error:

```
POST _inference/chat_completion/gemini_chat_completion/_stream
{
  "messages": [
    {
      "role": "user",
      "content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "retrieve_index_mapping",
            "arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"
          },
          "id": "1",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",
      "tool_call_id": "1"
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "execute_esql_query",
            "arguments": "{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 ORDER BY \\\"@timestamp\\\" DESC LIMIT 2\"}"
          },
          "id": "2",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}",
      "tool_call_id": "2"
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "retrieve_index_mapping",
        "description": "Retrieves the mapping for an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "index_name": {
              "type": "string",
              "description": "The name of the index to retrieve the mapping for."
            }
          },
          "required": [
            "index_name"
          ]
        }
      }
    },
    {
      "type": "function",
      "function": {
        "name": "execute_esql_query",
        "description": "Executes an ES|QL query against an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "query": {
              "type": "string",
              "description": "The ES|QL query to execute."
            }
          },
          "required": [
            "query"
          ]
        }
      }
    }
  ],
  "tool_choice": "auto",
  "temperature": 0.7
}
```

Gemini is able to recognize the error and generate a corrected query:

```
event: message
data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"\n","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"I","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"content":"'m sorry, it seems I made a mistake in the previous ES|QL query. Let me correct it and try again. The different parts of the query should be separated by a pipe `|` character.","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"7gh4aPLAMvzcnvgP1q6EgQw","choices":[{"delta":{"role":"model","tool_calls":[{"index":0,"id":"execute_esql_query","function":{"arguments":"{\"query\":\"FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | ORDER BY \\\"@timestamp\\\" DESC | LIMIT 2\"}","name":"execute_esql_query"},"type":"function"}]},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":85,"prompt_tokens":750,"total_tokens":1620}}

event: message
data: [DONE]
```

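Our next message will include the result of executing the correctly formatted query in Kibana. To retrieve the documents, we can either run the query directly in Discover and copy the document contents, or run it in Dev Tools. Let's assume our tool implementation used Discover and retrieved the full source of the documents.

Request including the document results: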

```
POST _inference/chat_completion/gemini_chat_completion/_stream
{
  "messages": [
    {
      "role": "user",
      "content": "You are an expert in ES|QL, the query language for Elasticsearch. I have added the sample data from https://www.elastic.co/docs/manage-data/ingest/sample-data. The index is called kibana_sample_data_logs. Can you retrieve the mapping, generate and execute an ES|QL query to retrieve the most recent two web logs with a failure status code for the response field? To retrieve the most recent logs please use SORT on the @timestamp field. After retrieving the logs, please summarize the results in a concise manner. The response field may not be mapped as an integer, so be sure to handle it correctly."
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "retrieve_index_mapping",
            "arguments": "{\"index_name\":\"kibana_sample_data_logs\"}"
          },
          "id": "1",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "{\".ds-kibana_sample_data_logs-2025.07.16-000001\":{\"mappings\":{\"_data_stream_timestamp\":{\"enabled\":true},\"properties\":{\"@timestamp\":{\"type\":\"date\"},\"agent\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"bytes\":{\"type\":\"long\"},\"bytes_counter\":{\"type\":\"long\",\"time_series_metric\":\"counter\"},\"bytes_gauge\":{\"type\":\"long\",\"time_series_metric\":\"gauge\"},\"clientip\":{\"type\":\"ip\"},\"event\":{\"properties\":{\"dataset\":{\"type\":\"keyword\"}}},\"extension\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"geo\":{\"properties\":{\"coordinates\":{\"type\":\"geo_point\"},\"dest\":{\"type\":\"keyword\"},\"src\":{\"type\":\"keyword\"},\"srcdest\":{\"type\":\"keyword\"}}},\"host\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"index\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ip\":{\"type\":\"ip\"},\"ip_range\":{\"type\":\"ip_range\"},\"machine\":{\"properties\":{\"os\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"ram\":{\"type\":\"long\"}}},\"memory\":{\"type\":\"double\"},\"message\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"phpmemory\":{\"type\":\"long\"},\"referer\":{\"type\":\"keyword\"},\"request\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"time_series_dimension\":true}}},\"response\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"tags\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"timestamp\":{\"type\":\"alias\",\"path\":\"@timestamp\"},\"timestamp_range\":{\"type\":\"date_range\"},\"url\":{\"type\":\"text\",\"fields\":{\"keyword\":{\"type\":\"keyword\",\"ignore_above\":256}}},\"utc_time\":{\"type\":\"date\"}}}}}",
      "tool_call_id": "1"
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "execute_esql_query",
            "arguments": "{\"query\":\"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \\\"@timestamp\\\" DESC LIMIT 2\"}"
          },
          "id": "2",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "[esql] > Couldn't parse Elasticsearch ES|QL query. Check your query and try again. Error: line 1:30: mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}",
      "tool_call_id": "2"
    },
    {
      "role": "assistant",
      "content": "",
      "tool_calls": [
        {
          "function": {
            "name": "execute_esql_query",
            "arguments": "{\"query\":\"FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | SORT \\\"@timestamp\\\" DESC | LIMIT 2\"}"
          },
          "id": "3",
          "type": "function"
        }
      ]
    },
    {
      "role": "tool",
      "content": "[{\"@timestamp\":\"2025-07-06T03:30:25.131Z\",\"agent\":\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\",\"agent.keyword\":\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\",\"bytes\":0,\"bytes_counter\":13069,\"bytes_gauge\":0,\"clientip\":\"120.49.143.213\",\"event.dataset\":\"sample_web_logs\",\"extension\":\"css\",\"extension.keyword\":\"css\",\"geo.coordinates\":\"POINT (-78.18499861 36.96015)\",\"geo.dest\":\"DE\",\"geo.src\":\"US\",\"geo.srcdest\":\"US:DE\",\"host\":\"cdn.elastic-elastic-elastic.org\",\"host.keyword\":\"cdn.elastic-elastic-elastic.org\",\"index\":\"kibana_sample_data_logs\",\"index.keyword\":\"kibana_sample_data_logs\",\"ip\":\"120.49.143.213\",\"machine.os\":\"ios\",\"machine.os.keyword\":\"ios\",\"machine.ram\":20401094656,\"message\":\"120.49.143.213 - - [2018-07-22T03:30:25.131Z] \\\"GET /styles/main.css_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\\\"\",\"message.keyword\":\"120.49.143.213 - - [2018-07-22T03:30:25.131Z] \\\"GET /styles/main.css_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux i686) AppleWebKit/534.24 (KHTML, like Gecko) Chrome/11.0.696.50 Safari/534.24\\\"\",\"referer\":\"http://twitter.com/success/konstantin-feoktistov\",\"request\":\"/styles/main.css\",\"request.keyword\":\"/styles/main.css\",\"response\":\"503\",\"response.keyword\":\"503\",\"tags\":[\"success\",\"login\"],\"tags.keyword\":[\"login\",\"success\"],\"timestamp\":\"2025-07-06T03:30:25.131Z\",\"url\":\"https://cdn.elastic-elastic-elastic.org/styles/main.css_1\",\"url.keyword\":\"https://cdn.elastic-elastic-elastic.org/styles/main.css_1\",\"utc_time\":\"2025-07-06T03:30:25.131Z\"},{\"@timestamp\":\"2025-07-06T03:49:40.669Z\",\"agent\":\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\",\"agent.keyword\":\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\",\"bytes\":0,\"bytes_counter\":29674,\"bytes_gauge\":0,\"clientip\":\"106.225.58.146\",\"event.dataset\":\"sample_web_logs\",\"extension\":\"\",\"extension.keyword\":\"\",\"geo.coordinates\":\"POINT (-85.80931806 35.98531194)\",\"geo.dest\":\"CN\",\"geo.src\":\"US\",\"geo.srcdest\":\"US:CN\",\"host\":\"www.elastic.co\",\"host.keyword\":\"www.elastic.co\",\"index\":\"kibana_sample_data_logs\",\"index.keyword\":\"kibana_sample_data_logs\",\"ip\":\"106.225.58.146\",\"machine.os\":\"win 7\",\"machine.os.keyword\":\"win 7\",\"machine.ram\":17179869184,\"message\":\"106.225.58.146 - - [2018-07-22T03:49:40.669Z] \\\"GET /apm_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\\\"\",\"message.keyword\":\"106.225.58.146 - - [2018-07-22T03:49:40.669Z] \\\"GET /apm_1 HTTP/1.1\\\" 503 0 \\\"-\\\" \\\"Mozilla/5.0 (X11; Linux x86_64; rv:6.0a1) Gecko/20110421 Firefox/6.0a1\\\"\",\"referer\":\"http://www.elastic-elastic-elastic.com/success/richard-o-covey\",\"request\":\"/apm\",\"request.keyword\":\"/apm\",\"response\":\"503\",\"response.keyword\":\"503\",\"tags\":[\"success\",\"security\"],\"tags.keyword\":[\"security\",\"success\"],\"timestamp\":\"2025-07-06T03:49:40.669Z\",\"url\":\"https://www.elastic.co/downloads/apm_1\",\"url.keyword\":\"https://www.elastic.co/downloads/apm_1\",\"utc_time\":\"2025-07-06T03:49:40.669Z\"}]",
      "tool_call_id": "3"
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "retrieve_index_mapping",
        "description": "Retrieves the mapping for an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "index_name": {
              "type": "string",
              "description": "The name of the index to retrieve the mapping for."
            }
          },
          "required": [
            "index_name"
          ]
        }
      }
    },
    {
      "type": "function",
      "function": {
        "name": "execute_esql_query",
        "description": "Executes an ES|QL query against an Elasticsearch index.",
        "parameters": {
          "type": "object",
          "properties": {
            "query": {
              "type": "string",
              "description": "The ES|QL query to execute."
            }
          },
          "required": [
            "query"
          ]
        }
      }
    }
  ],
  "tool_choice": "auto",
  "temperature": 0.7
}
```

Gemini's final response recaps the conversation so far and summarizes the two retrieved documents, just as we asked.

Here is the response summarizing the results:

```
event: message
data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"\nthought\n1. **Search**: The user wants to query Elasticsearch using ES|QL. The available tool `execute_esql_query` is a perfect match for this.\n\n2. **Parameters**: The `execute_esql_query` tool requires a `query` string.\n\n3. **Query Generation**: The user wants to \"retrieve the most recent two web logs with a failure status code for the response field\". They also mention that the `response` field might not be an integer and that we should use `SORT` on the `@timestamp` field.\n\n - **FROM clause**: The index is specified as `kibana_sample_data_logs`. So, `FROM kibana_sample_data_logs`.\n - **WHERE clause**: A \"failure status code\" is typically a 4xx or 5xx code. The user also mentions the `response` field might not be an integer. The `TO_INT()` function in ES|QL can be used to cast the `response` field to an integer for numerical comparison. So, the condition will be `TO_INT(response) >= 400`.\n - **SORT clause**: The user wants the \"most recent\" logs and explicitly asks to \"use SORT on the @timestamp field\". To get the most recent, we need to sort in descending order. So, `SORT \"@timestamp\" DESC`.\n - **LIMIT clause**: The user wants the \"most recent two\" logs. So, `LIMIT 2`.\n\n4. **Initial Query Construction**: Putting it all together, the initial query is `FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \"@timestamp\" DESC LIMIT 2`.\n\n5. **Tool Call**: Call the `execute_esql_query` tool with the generated query.\n\n6. **Execution Analysis**: The first tool call `print(default_api.execute_esql_query(query = \"FROM kibana_sample_data_logs WHERE TO_INT(response) >= 400 SORT \\\"@timestamp\\\" DESC LIMIT 2\"))` fails with a parsing error: `mismatched input 'WHERE' expecting {<EOF>, '|', ',', 'metadata'}`.\n\n7. **Correction**: The error message indicates that the `WHERE` keyword is not expected after the `FROM` clause. In ES|QL, pipes (`|`) are used to separate different stages of the query. The correct syntax is to pipe the output of the `FROM` clause to the `WHERE` clause.\n\n8. **Corrected Query Construction**: The corrected query is `FROM kibana_sample_data_logs | WHERE TO_INT(response) >= 400 | SORT \"@timestamp\" DESC | LIMIT 2`.\n\n9. **Second Tool Call**: Call the `execute_esql_query` tool with the corrected query.\n\n10. **Execution Analysis**: The second tool call is successful and returns a JSON object with two log entries.\n\n11. **Summarization**: The user wants the results to be summarized \"in a concise manner\". I need to extract the key information from the returned JSON and present it to the user.\n\n - The first log has a timestamp of `2025-07-06T03:30:25.131Z`, a response code of `503`, a client IP of `120.49.143.213`, and the request was for `/styles/main.css`.\n - The second log has a timestamp of `2025-07-06T03:49:40.669Z`, a response code of `503`, a client IP of `106.225.58.146`, and the request was for `/apm`.\n\n12. **Final Response**: Combine the corrected action and the summary into a final response for the user. Acknowledge the initial error and explain the correction. Then, present the summarized results.","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"I have retrieved the mapping for the `kibana_sample_data_logs` index and executed an ES|QL query to retrieve the two most recent web logs with a failure status code.\n\nI initially made a mistake in the ES|QL syntax,","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":" but I have corrected it and successfully retrieved the data.\n\nHere is a summary of the results:\n\nThe most recent web log with a failure status code has a timestamp of **2025-07-06T03:49:40.669Z**, a response code of","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":" **503**, a client IP of **106.225.58.146** and the request was for **/apm**.\n\nThe second most recent web log with a failure status code has a timestamp of **2025-07-06T03:30:","role":"model"},"index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk"}

event: message
data: {"id":"5Qx4aKerCZj3ld8PnuzrqAQ","choices":[{"delta":{"content":"25.131Z**, a response code of **503**, a client IP of **120.49.143.213** and the request was for **/styles/main.css**.","role":"model"},"finish_reason":"STOP","index":0}],"model":"gemini-2.5-pro","object":"chat.completion.chunk","usage":{"completion_tokens":1099,"prompt_tokens":2287,"total_tokens":3386}}

event: message
data: [DONE]
```

Summarizing results with the ES|QL COMPLETION command

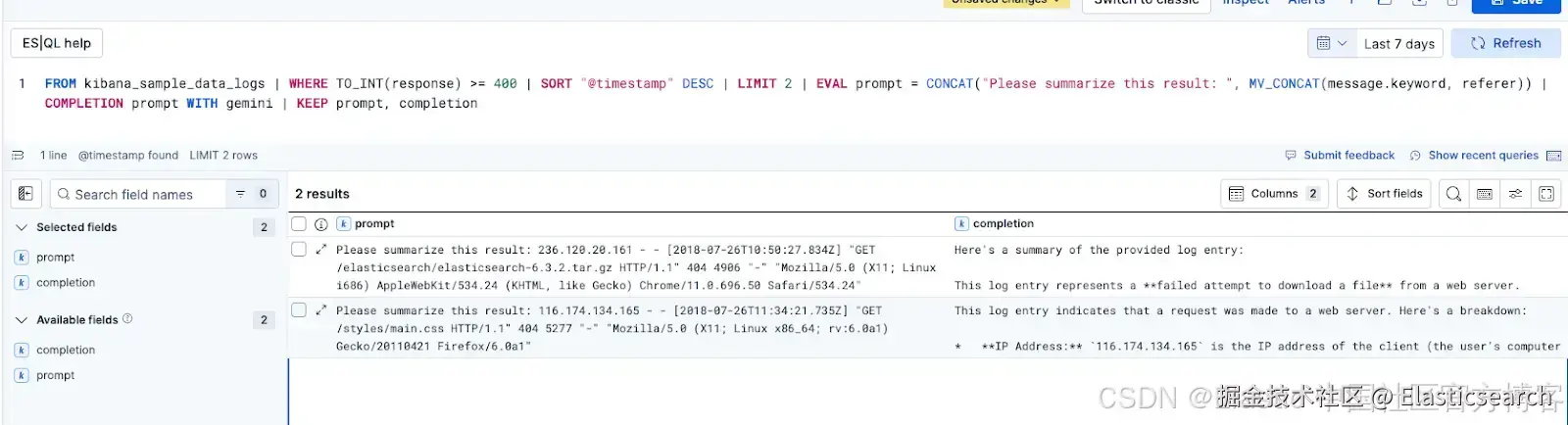

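In the previous workflow, we used Google's Gemini model to summarize two failed web logs. We can also use the ES|QL COMPLETION command to summarize results directly in Kibana. First, we need to create a new inference endpoint that uses the completion task type.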

```
PUT _inference/completion/gemini_completion
{
  "service": "googlevertexai",
  "service_settings": {
    "service_account_json": "<output string from the sed command>",
    "model_id": "gemini-2.5-flash-lite",
    "location": "us-central1",
    "project_id": "<project id>"
  }
}
```

As before, after the inference endpoint is created successfully, we receive a response similar to the following, with a 200 OK status code:

```json
{
  "inference_id": "gemini_completion",
  "task_type": "completion",
  "service": "googlevertexai",
  "service_settings": {
    "project_id": "<project id>",
    "location": "us-central1",
    "model_id": "gemini-2.5-flash-lite",
    "rate_limit": {
      "requests_per_minute": 1000
    }
  }
}
```

Now we can run a modified version of the ES|QL query that Gemini generated for us.

```
FROM kibana_sample_data_logs
| WHERE TO_INT(response) >= 400
| SORT "@timestamp" DESC
| LIMIT 2
| EVAL prompt = CONCAT("Please summarize this result: ", MV_CONCAT(message.keyword, referer))
| COMPLETION prompt WITH gemini_completion
| KEEP prompt, completion
```

The modifications to the query are as follows:

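- EVAL prompt: this command creates a field that is sent to Gemini together with some text, supplying specific fields and instructions on what to do with them. In our example, it asks Gemini to summarize the contents of the message and referer fields.
- COMPLETION: this command passes each result to the LLM to perform the task we instructed it to do.
- WITH gemini_completion: this is the ID of the inference endpoint we created earlier.
- KEEP prompt, completion: this tells ES|QL to produce a table containing the prompt we sent to Gemini and the result we received.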
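Since a missing pipe was exactly what tripped up the generated query earlier, an application that assembles ES|QL programmatically might join the stages explicitly. The following is a minimal Python sketch; `build_summary_query` and its parameters are hypothetical, and the stages are copied from the query above.

```python
def build_summary_query(index: str, inference_id: str, limit: int = 2) -> str:
    """Assemble the summarization query, one pipeline stage per list entry."""
    stages = [
        f"FROM {index}",
        "WHERE TO_INT(response) >= 400",
        'SORT "@timestamp" DESC',
        f"LIMIT {limit}",
        'EVAL prompt = CONCAT("Please summarize this result: ", '
        "MV_CONCAT(message.keyword, referer))",
        f"COMPLETION prompt WITH {inference_id}",
        "KEEP prompt, completion",
    ]
    # Joining with " | " guarantees a pipe between every pair of stages.
    return " | ".join(stages)


if __name__ == "__main__":
    print(build_summary_query("kibana_sample_data_logs", "gemini_completion"))
```

The sketch does no escaping of the index or endpoint names, so it should only be used with trusted values.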

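The results should look similar to the following image.

Conclusion

We continue to bring state-of-the-art AI tools and providers to Elasticsearch, and we hope you're as excited as we are about the integration with Google Cloud's Vertex AI platform and Google's Gemini models! Combine Google Cloud's generative AI capabilities with the Elasticsearch vector database and AI search tools to deliver highly relevant, production-ready search and analytics experiences.

Visit the Google Cloud Vertex AI page on Elasticsearch Labs, or try other sample notebooks on the Search Labs GitHub.

To test-drive Elasticsearch locally, use Docker to install and start a local Elasticsearch and Kibana with the following command: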

```bash
curl -fsSL https://elastic.co/start-local | sh
```

Get started with a free Elastic Cloud trial or by subscribing through Google Cloud Marketplace.