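1. Pull the Docker images

```bash
docker pull elasticsearch:8.17.4
docker pull kibana:8.17.4
docker pull logstash:8.17.4
docker pull docker.elastic.co/beats/filebeat:8.17.4
docker pull zookeeper:latest
docker pull confluentinc/cp-kafka:latest
```

Note: recent `confluentinc/cp-kafka` releases (Confluent Platform 8.x, built on Apache Kafka 4.x) run in KRaft mode only and no longer support ZooKeeper. For the ZooKeeper-based setup in this guide, pin a 7.x tag instead of `latest`.

2. Install Elasticsearch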

```bash
mkdir -p /data/elasticsearch/{data,logs}
chmod 777 /data/elasticsearch/data
chmod 777 /data/elasticsearch/logs

# Elasticsearch needs vm.max_map_count of at least 262144
cat >> /etc/sysctl.conf << EOF
vm.max_map_count=262144
EOF
sysctl -p
```

Start Elasticsearch:
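Elasticsearch documents 262144 as the minimum `vm.max_map_count`, so it is worth confirming that the setting took effect before you start the container. Below is a minimal sketch. `check_max_map_count` is a hypothetical helper and not part of Elasticsearch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed when vm.max_map_count meets Elasticsearch's
# documented minimum (262144). Pass a value to check it, or call it with no
# argument to read the live kernel value from /proc/sys/vm/max_map_count.
check_max_map_count() {
  local required=262144
  local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  if [ "$current" -ge "$required" ]; then
    echo "ok: vm.max_map_count=$current"
    return 0
  fi
  echo "too low: vm.max_map_count=$current (need >= $required)" >&2
  return 1
}

# Example: check_max_map_count || sysctl -w vm.max_map_count=262144
```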

```bash
docker run -d \
  --restart unless-stopped \
  --name elasticsearch \
  --hostname elasticsearch \
  -e "ES_JAVA_OPTS=-Xms1024m -Xmx1024m" \
  -e "discovery.type=single-node" \
  -p 9200:9200 \
  -v /data/elasticsearch/logs:/usr/share/elasticsearch/logs \
  -v /data/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /etc/localtime:/etc/localtime \
  elasticsearch:8.17.4
```

Reset the built-in user passwords (this guide sets them all to `GuL0ZfYeMX7fAMqK`):
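The password reset tool can only reach Elasticsearch after the node has finished starting, which may take a minute. Below is a minimal sketch that waits until the HTTPS port answers. `wait_for_http` is a hypothetical helper, and the default of 60 attempts is an arbitrary choice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a URL once per second until the server answers,
# or give up after max_attempts tries.
# Any HTTP status counts as "up" (Elasticsearch 8 answers 401 to requests
# without credentials); curl writes 000 when it cannot connect at all.
# -k skips certificate verification because the container uses a self-signed certificate.
wait_for_http() {
  local url="$1" max_attempts="${2:-60}" attempt=1 code
  while [ "$attempt" -le "$max_attempts" ]; do
    code=$(curl -sk -o /dev/null -w '%{http_code}' --max-time 2 "$url" 2>/dev/null || true)
    if [ -n "$code" ] && [ "$code" != "000" ]; then
      echo "$url is up (HTTP $code)"
      return 0
    fi
    attempt=$((attempt + 1))
    sleep 1
  done
  echo "gave up on $url after $max_attempts attempts" >&2
  return 1
}

# Example: wait_for_http https://172.16.52.115:9200 120
```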

bash

docker exec -it elasticsearch bash

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u elastic -i

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u kibana_system -i

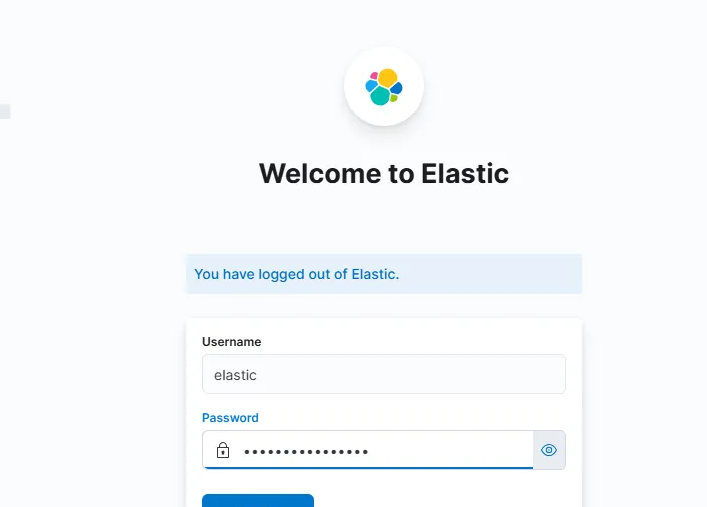

/usr/share/elasticsearch/bin/elasticsearch-reset-password -u logstash_system -iElasticsearch 登录信息

Accounts

| Service | Username | Password |
|---|---|---|
| Elasticsearch | elastic | GuL0ZfYeMX7fAMqK |
| Kibana | kibana_system | GuL0ZfYeMX7fAMqK |

Access URLs

| Service | URL |
|---|---|
| Elasticsearch | https://172.16.52.115:9200/ |
| Kibana | http://172.16.52.115:5601/ |

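Notes

- Elasticsearch account: `elastic` is the superuser.
- Kibana system account: `kibana_system` is used for communication between Kibana and Elasticsearch.
- Both accounts use the same password.
- Elasticsearch must be accessed over HTTPS.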
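You can query the `_cluster/health` API to confirm that Elasticsearch answers over HTTPS with the `elastic` account. Below is a minimal sketch. `es_cluster_status` and the `ES_URL`, `ES_USER` and `ES_PASS` variables are hypothetical and default to the values used in this guide. On a single node, `green` or `yellow` is normal. `red` means at least one primary shard is unassigned.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the cluster health status (green, yellow or red).
# Prints nothing if the response has no "status" field (for example a 401 error).
# -k skips verification of the self-signed certificate.
ES_URL="${ES_URL:-https://172.16.52.115:9200}"
ES_USER="${ES_USER:-elastic}"
ES_PASS="${ES_PASS:-GuL0ZfYeMX7fAMqK}"

es_cluster_status() {
  curl -sk -u "$ES_USER:$ES_PASS" "$ES_URL/_cluster/health" \
    | sed -n 's/.*"status":"\([a-z]*\)".*/\1/p'
}

# Example: es_cluster_status
```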

3. Install Kibana

```bash
mkdir -p /data/kibana/{conf,logs}
chmod 777 /data/kibana/conf
chmod 777 /data/kibana/logs

cat > /data/kibana/conf/kibana.yml << 'EOF'
server.port: 5601
server.host: "0.0.0.0"
server.publicBaseUrl: "http://172.16.52.115:5601"
elasticsearch.hosts: ["https://172.16.52.115:9200"]
elasticsearch.username: "kibana_system"
elasticsearch.password: "GuL0ZfYeMX7fAMqK"
# Elasticsearch uses a self-signed certificate, so skip verification
elasticsearch.ssl.verificationMode: "none"
xpack.reporting.csv.maxSizeBytes: 20971520
# Placeholder: replace with your own random string of at least 32 characters
xpack.security.encryptionKey: "something_at_least_32_characters"
logging:
  appenders:
    file:
      type: file
      fileName: /usr/share/kibana/logs/kibana.log
      layout:
        type: json
  root:
    appenders:
      - default
      - file
# Kibana UI language (Simplified Chinese)
i18n.locale: "zh-CN"
EOF
```

Start Kibana:
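Before you start Kibana, replace the placeholder `xpack.security.encryptionKey` in kibana.yml with a random value of at least 32 characters. Kibana also ships `bin/kibana-encryption-keys generate` for this purpose. Below is a minimal shell sketch. `gen_encryption_key` is a hypothetical helper and not part of Kibana.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a random alphanumeric string (default 32 characters)
# suitable for xpack.security.encryptionKey.
gen_encryption_key() {
  local length="${1:-32}"
  # LC_ALL=C makes tr treat the bytes from /dev/urandom as plain bytes
  LC_ALL=C tr -dc 'A-Za-z0-9' < /dev/urandom | head -c "$length"
  echo
}

# Example (the key is alphanumeric, so it is safe inside the sed expression):
# sed -i "s/something_at_least_32_characters/$(gen_encryption_key)/" /data/kibana/conf/kibana.yml
```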

```bash
docker run -d \
  --restart unless-stopped \
  --name kibana \
  --hostname kibana \
  -p 5601:5601 \
  -v /etc/localtime:/etc/localtime \
  -v /data/kibana/logs:/usr/share/kibana/logs \
  -v /data/kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml \
  kibana:8.17.4
```

4. Install ZooKeeper

```bash
mkdir -p /data/zookeeper/{conf,logs,data}

docker run -d \
  --restart unless-stopped \
  --name zookeeper \
  -p 2181:2181 \
  -v /data/zookeeper/data:/data \
  -v /data/zookeeper/logs:/datalog \
  -v /etc/localtime:/etc/localtime \
  zookeeper:latest
```

5. Install Kafka

```bash
mkdir -p /data/kafka/data
chmod 777 /data/kafka/data

# Single broker: KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 lets the internal
# offsets topic be created with only one replica
docker run -d \
  --restart unless-stopped \
  --name kafka \
  -p 9092:9092 \
  -e KAFKA_ZOOKEEPER_CONNECT=172.16.52.115:2181 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://172.16.52.115:9092 \
  -e KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR=1 \
  -v /etc/localtime:/etc/localtime \
  -v /data/kafka/data:/var/lib/kafka/data \
  confluentinc/cp-kafka:latest
```
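By default, Kafka creates a topic automatically the first time Filebeat writes to it and uses the broker's default partition count. To control partitioning, you can create the topics that the Logstash pipeline reads (`nginx-access`, `syslog`) up front. Below is a minimal sketch. `ensure_topics` is a hypothetical helper, and it assumes the broker container is named `kafka` as above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create each named topic unless it already exists.
# --if-not-exists makes the call idempotent, so it is safe to re-run.
BOOTSTRAP="${BOOTSTRAP:-172.16.52.115:9092}"

ensure_topics() {
  local partitions="$1"
  shift
  local topic
  for topic in "$@"; do
    docker exec kafka kafka-topics --create --if-not-exists \
      --bootstrap-server "$BOOTSTRAP" \
      --replication-factor 1 \
      --partitions "$partitions" \
      --topic "$topic"
  done
}

# Example: ensure_topics 6 nginx-access syslog
```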

Common Kafka commands:

```bash
docker exec -it kafka bash

# List all topics
kafka-topics --list --bootstrap-server 172.16.52.115:9092

# Create a topic
kafka-topics --create --bootstrap-server 172.16.52.115:9092 --replication-factor 1 --partitions 6 --topic nginx

# Start a console producer; each line typed at the ">" prompt is sent as one message (Ctrl+C to exit)
kafka-console-producer --bootstrap-server localhost:9092 --topic nginx

# Example message to type at the prompt:
{"datas":[{"channel":"","metric":"temperature","producer":"ijinus","sn":"IJA0101-00002245","time":"1543208156100","value":"80"}],"ver":"1.0"}

# Delete a topic
kafka-topics --bootstrap-server 172.16.52.115:9092 --delete --topic nginx
```

6. Install Logstash

```bash
mkdir -p /data/logstash/{conf,logs,data}
chmod 777 /data/logstash/data
chmod 777 /data/logstash/logs

cat > /data/logstash/conf/logstash.yml << 'EOF'
node.name: node-1
http.host: 0.0.0.0
http.port: 9600
path.data: /usr/share/logstash/data
path.logs: /usr/share/logstash/logs
pipeline.ordered: false
EOF
```

Pipeline definition (Kafka to Elasticsearch):

```bash
cat > /data/logstash/conf/logstash.conf << 'EOF'
input {
  kafka {
    bootstrap_servers => "172.16.52.115:9092"
    topics => ["nginx-access", "syslog"]
    consumer_threads => 5
    # Adds Kafka metadata such as [@metadata][kafka][topic], used in the index name below
    decorate_events => true
    auto_offset_reset => "latest"
    codec => "json"
  }
}

filter {
  # Only useful if the log line itself is JSON; plain nginx lines get a _jsonparsefailure tag
  json {
    source => "message"
  }
  mutate {
    remove_field => ["host","prospector","fields","input","log"]
  }
  grok {
    match => { "message" => "%{IPORHOST:client_ip} (%{USER:ident}|- ) (%{USER:auth}|-) \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} (%{NOTSPACE:request}|-)(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:status} (?:%{NUMBER:bytes}|-) \"(?:%{URI:referrer}|-)\" \"%{GREEDYDATA:agent}\"" }
  }
}

output {
  elasticsearch {
    hosts => "https://172.16.52.115:9200"
    # Elasticsearch 8 uses a self-signed certificate; skip verification
    ssl => true
    ssl_certificate_verification => false
    index => "%{[@metadata][kafka][topic]}-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "GuL0ZfYeMX7fAMqK"
  }
}
EOF
```

Start Logstash:
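Before you start the long-running container, you can syntax-check the pipeline in a throwaway container. `--config.test_and_exit` makes Logstash parse the configuration, report the result and exit without processing events. Below is a minimal sketch. `validate_logstash_pipeline` is a hypothetical helper, and it assumes that the image's entrypoint passes arguments beginning with `-` through to `logstash`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: validate a Logstash pipeline file without starting a pipeline.
# Arguments: pipeline file on the host (default: this guide's path), image tag.
validate_logstash_pipeline() {
  local conf="${1:-/data/logstash/conf/logstash.conf}"
  local image="${2:-logstash:8.17.4}"
  docker run --rm \
    -v "$conf":/usr/share/logstash/pipeline/logstash.conf:ro \
    "$image" \
    --config.test_and_exit -f /usr/share/logstash/pipeline/logstash.conf
}

# Example: validate_logstash_pipeline && echo "pipeline syntax OK"
```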

```bash
docker run -d \
  --restart unless-stopped \
  --name logstash \
  -v /etc/localtime:/etc/localtime \
  -v /data/logstash/data:/usr/share/logstash/data \
  -v /data/logstash/logs:/usr/share/logstash/logs \
  -v /data/logstash/conf/logstash.conf:/usr/share/logstash/pipeline/logstash.conf \
  -v /data/logstash/conf/logstash.yml:/usr/share/logstash/config/logstash.yml \
  logstash:8.17.4
```

7. Deploy Filebeat

7.1 Install nginx

```bash
yum install -y yum-utils epel-release
```

```bash
# Install nginx with yum
yum install -y nginx
rm -rf /etc/nginx/conf.d/default.conf
systemctl enable nginx

# Edit the main nginx configuration file
vi /etc/nginx/nginx.conf
```

Contents of nginx.conf:

```nginx
user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;

events {
    worker_connections 1024;
}

http {
    include /etc/nginx/mime.types;
    default_type application/octet-stream;
    sendfile on;
    server_tokens off;
    tcp_nopush on;
    tcp_nodelay on;

    # nginx log format
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';
    access_log /var/log/nginx/access.log main;

    keepalive_timeout 1d;

    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header x-client-ip $remote_addr;

    include /etc/nginx/conf.d/*.conf;
}
```

```bash
# Configure a virtual host
vi /etc/nginx/conf.d/test.conf
```

Contents of test.conf:

```nginx
server {
    listen 80;
    server_name localhost;
    access_log /var/log/nginx/access.log main;

    location / {
        root /var/www/html;
        index index.html index.htm;
    }
}
```

```bash
# Start nginx
systemctl start nginx
systemctl status nginx

# Test nginx: create a test page
mkdir -p /var/www/html
echo "welcome to my site..." > /var/www/html/index.html
```

7.2 Install Filebeat

Filebeat collects the logs and ships them to Kafka.
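Filebeat forwards each access-log line unchanged in the `message` field. The grok pattern in the Logstash pipeline expects the nginx `main` log format configured above. Below is a minimal sketch for spot-checking a line. The regular expression is a rough approximation written for this guide, not the grok pattern itself, and `is_main_format` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Rough approximation of the nginx "main" log_format:
#   $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent
#   "$http_referer" "$http_user_agent" "$http_x_forwarded_for"
MAIN_FORMAT_RE='^[^ ]+ - [^ ]+ \[[^]]+] "[^"]*" [0-9]{3} [0-9]+ "[^"]*" "[^"]*" "[^"]*"$'

# Hypothetical helper: succeed if the given line looks like a "main"-format entry
is_main_format() {
  printf '%s\n' "$1" | grep -Eq "$MAIN_FORMAT_RE"
}

# Example: is_main_format "$(tail -n 1 /var/log/nginx/access.log)" && echo "format OK"
```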

1. Pull the image

```bash
docker pull docker.elastic.co/beats/filebeat:8.17.4
```

2. Create the log directory

```bash
mkdir -p /data/filebeat/log
```

3. Create filebeat.yml with the following content:

```bash
vi /data/filebeat/filebeat.yml
```

```yaml
filebeat.inputs:
  - type: log
    ignore_older: 10h
    enabled: true
    paths:
      - /var/log/nginx/access.log
    fields:
      filetype: nginx-access
    fields_under_root: true
    scan_frequency: 10s

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 1

name: "172.16.52.115"

setup.kibana:

output.kafka:
  hosts: ["172.16.52.115:9092"]
  topic: nginx-access
  enabled: true
  worker: 6
  compression_level: 3
  loadbalance: true

processors:
  - drop_fields:
      fields: ["input", "host", "agent.type", "agent.ephemeral_id", "agent.id", "agent.version", "ecs"]

logging.level: info
```

4. Start Filebeat

```bash
# --user=root lets Filebeat read the host's nginx logs mounted from /var/log/nginx
docker run -d --name filebeat \
  --user=root \
  -v /data/filebeat/log:/var/log/filebeat \
  -v /data/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml \
  -v /var/log/nginx:/var/log/nginx \
  -v /etc/localtime:/etc/localtime \
  docker.elastic.co/beats/filebeat:8.17.4
```

8. Verification
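Before you look at Kafka, run Filebeat's built-in `test config` subcommand to confirm that filebeat.yml parses. The container log then shows whether Filebeat could reach the Kafka broker. Below is a minimal sketch. `filebeat_check` is a hypothetical helper, and it assumes the container name `filebeat` used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: sanity-check a running Filebeat container.
filebeat_check() {
  local container="${1:-filebeat}"
  # Parses filebeat.yml inside the container and reports whether it is valid
  docker exec "$container" filebeat test config
  # Show the most recent log lines; Kafka connection errors would appear here
  docker logs --tail 20 "$container"
}

# Example: filebeat_check
```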

1. Run a consumer on the Kafka side; the collected nginx log events should appear:

```bash
docker exec -it kafka bash
kafka-console-consumer --bootstrap-server 172.16.52.115:9092 --topic nginx-access --from-beginning
```

2. Check the indices in Elasticsearch

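```bash
curl -k -u elastic:GuL0ZfYeMX7fAMqK "https://172.16.52.115:9200/_cat/indices?v"
```

You can also open the same URL in a browser and log in as `elastic`.

3. Delete an Elasticsearch index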
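The Logstash output writes one index per topic per day (`%{[@metadata][kafka][topic]}-%{+YYYY.MM.dd}`), so you can prune old data by the date suffix instead of deleting indices one at a time. Below is a minimal sketch. It assumes GNU `date` and index names that end in `-YYYY.MM.DD`, and `index_is_expired` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if a daily index such as nginx-access-2025.01.11
# is older than KEEP_DAYS, counted back from TODAY (YYYY-MM-DD, default: current date).
# Names without a numeric date suffix make the comparison fail, so they are never
# reported as expired.
index_is_expired() {
  local index="$1" keep_days="$2" today="${3:-$(date +%F)}"
  local suffix cutoff
  suffix="$(printf '%s' "${index##*-}" | tr -d .)"            # 2025.01.11 -> 20250111
  cutoff="$(date -d "$today $keep_days days ago" +%Y%m%d)"   # GNU date syntax
  [ "$suffix" -lt "$cutoff" ] 2>/dev/null
}

# Example: delete nginx-access indices older than 7 days
# for idx in $(curl -sk -u elastic:GuL0ZfYeMX7fAMqK "https://172.16.52.115:9200/_cat/indices/nginx-access-*?h=index"); do
#   index_is_expired "$idx" 7 && curl -sk -XDELETE -u elastic:GuL0ZfYeMX7fAMqK "https://172.16.52.115:9200/$idx"
# done
```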

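```bash
curl -XDELETE "https://172.16.52.115:9200/nginx-access-2025.01.11" -u elastic:GuL0ZfYeMX7fAMqK -k
```

Deployment with docker-compose

This variant uses 7.17.4 images. Unlike 8.x, Elasticsearch 7.17 serves plain HTTP unless HTTP TLS is explicitly configured, which is why the configuration below uses `http://` URLs.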

```bash
mkdir -p /data/docker/log_elk/elasticsearch2/{config,data}
mkdir -p /data/docker/log_elk/logstash2/{data,config,pipeline}
mkdir -p /data/docker/log_elk/kibana2/config
mkdir -p /data/docker/log_elk/filebeat
chmod -R 777 /data/docker/log_elk
```

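4. Create the configuration files

4.1 Elasticsearch configuration

```bash
vim /data/docker/log_elk/elasticsearch2/config/elasticsearch.yml
```

```yaml
cluster.name: "docker-cluster"
network.host: 0.0.0.0
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-headers: Authorization
xpack.security.enabled: true
# Transport TLS also needs a key and certificate (e.g. xpack.security.transport.ssl.keystore.path);
# without them Elasticsearch refuses to start, so it stays disabled for this single-node setup.
# xpack.security.transport.ssl.enabled: true
```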

4.2 Logstash configuration

```bash
vim /data/docker/log_elk/logstash2/config/logstash.yml
```

```yaml
http.host: "0.0.0.0"
xpack.monitoring.elasticsearch.hosts: ["http://172.16.52.115:9200"]
xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch.username: "elastic"
xpack.monitoring.elasticsearch.password: "GuL0ZfYeMX7fAMqK"
```

```bash
vim /data/docker/log_elk/logstash2/pipeline/logstash.conf
```

```conf
input {
  kafka {
    bootstrap_servers => "172.16.52.115:9092"
    topics => ["nginx","syslog"]
    consumer_threads => 5
    # Adds Kafka metadata such as [@metadata][kafka][topic], used in the index name below
    decorate_events => true
    auto_offset_reset => "latest"
    codec => "json"
  }
}

filter {
  # Only useful if the log line itself is JSON; plain log lines get a _jsonparsefailure tag
  json {
    source => "message"
  }
  mutate {
    remove_field => ["host","prospector","fields","input","log"]
  }
  grok {
    match => { "message" => "%{IPORHOST:client_ip} (%{USER:ident}|- ) (%{USER:auth}|-) \[%{HTTPDATE:timestamp}\] \"(?:%{WORD:verb} (%{NOTSPACE:request}|-)(?: HTTP/%{NUMBER:http_version})?|-)\" %{NUMBER:status} (?:%{NUMBER:bytes}|-) \"(?:%{URI:referrer}|-)\" \"%{GREEDYDATA:agent}\"" }
  }
}

output {
  elasticsearch {
    # Elasticsearch 7.17 here serves plain HTTP, so no SSL options are set
    hosts => "http://172.16.52.115:9200"
    index => "%{[@metadata][kafka][topic]}-%{+YYYY.MM.dd}"
    user => "elastic"
    password => "GuL0ZfYeMX7fAMqK"
  }
}
```

4.3 Kibana configuration

```bash
vim /data/docker/log_elk/kibana2/config/kibana.yml
```

```yaml
server.port: 5601
server.name: kibana
server.host: 0.0.0.0
elasticsearch.hosts: ["http://172.16.52.115:9200"]
elasticsearch.username: "elastic"
elasticsearch.password: "GuL0ZfYeMX7fAMqK"
```

4.4 Filebeat configuration

```bash
vim /data/docker/log_elk/filebeat/filebeat.yml
```

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/nginx/access.log
    tags: ["nginx-accesslog"]
    fields:
      document_type: nginxaccess
      topic: nginx
  - type: log
    paths:
      - /opt/var/log/messages
    tags: ["syslog-accesslog"]
    fields:
      document_type: syslogaccess
      topic: syslog

output.kafka:
  hosts: ["172.16.52.115:9092"]
  # Route each event to the topic named in its fields.topic
  topic: '%{[fields.topic]}'
  partition.hash:
    reachable_only: true
  compression: gzip
  max_message_bytes: 1000000
  required_acks: 1

logging.to_files: true
```

5. Configure docker-compose.yaml

```bash
vim /data/docker/log_elk/docker-compose.yaml
```

```yaml
version: '3.3'
services:
  elasticsearch:
    image: elasticsearch:7.17.4
    hostname: elasticsearch
    restart: always
    container_name: elk-es
    deploy:
      mode: replicated
      replicas: 1
    ports:
      - "9200:9200"
      - "9300:9300"
    volumes:
      - /data/docker/log_elk/elasticsearch2/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /data/docker/log_elk/elasticsearch2/data:/usr/share/elasticsearch/data
    environment:
      TZ: Asia/Shanghai
      ES_JAVA_OPTS: "-Xmx256m -Xms256m"
      ELASTIC_PASSWORD: "GuL0ZfYeMX7fAMqK"
      # Use single node discovery in order to disable production mode and avoid bootstrap checks.
      # see: https://www.elastic.co/guide/en/elasticsearch/reference/7.11/bootstrap-checks.html
      discovery.type: single-node
      # Force publishing on the 'elk' overlay.
      network.publish_host: _eth0_
    networks:
      - elk

  logstash:
    image: logstash:7.17.4
    command: "/usr/local/bin/docker-entrypoint"
    restart: always
    container_name: elk-logs
    ports:
      - "5044:5044"
      - "5000:5000"
      - "9600:9600"
    volumes:
      - /data/docker/log_elk/logstash2/data:/usr/share/logstash/data
      - /data/docker/log_elk/logstash2/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /data/docker/log_elk/logstash2/pipeline/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
    environment:
      TZ: Asia/Shanghai
      LS_JAVA_OPTS: "-Xmx256m -Xms256m"
    hostname: logstash
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

  filebeat:
    image: docker.elastic.co/beats/filebeat:7.17.4
    container_name: elk-filebeat
    restart: always
    # Run as root so Filebeat can read the host's /var/log/messages and nginx logs
    user: root
    volumes:
      - /data/docker/log_elk/filebeat/filebeat.yml:/usr/share/filebeat/filebeat.yml
      - /var/log/nginx:/var/log/nginx:ro
      - /var/log/messages:/opt/var/log/messages:ro
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

  kibana:
    image: kibana:7.17.4
    restart: always
    container_name: elk-kibana
    environment:
      TZ: Asia/Shanghai
    ports:
      - "5601:5601"
    volumes:
      - /data/docker/log_elk/kibana2/config/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - elk
    deploy:
      mode: replicated
      replicas: 1

  zookeeper:
    restart: always
    container_name: elk-zk
    image: zookeeper:latest
    environment:
      TZ: Asia/Shanghai
    ports:
      - 2181:2181

  kafka:
    image: confluentinc/cp-kafka:latest
    container_name: elk-kaf
    environment:
      TZ: Asia/Shanghai
      KAFKA_LISTENERS: PLAINTEXT://0.0.0.0:9092
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://172.16.52.115:9092
      KAFKA_ZOOKEEPER_CONNECT: 172.16.52.115:2181
      # Single broker: the internal offsets topic can only have one replica
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      # KAFKA_CREATE_TOPICS comes from the wurstmeister/kafka image; cp-kafka does not act on it
      KAFKA_CREATE_TOPICS: "logdev:1:1"
    restart: always
    ports:
      - 9092:9092

networks:
  elk:
```

6. Start the ELK services

```bash
cd /data/docker/log_elk
docker-compose up -d
```

7. Verify the ELK stack
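Before you run the Kafka checks, it helps to confirm that each published port accepts connections: Elasticsearch 9200, Kibana 5601, the Logstash API 9600, ZooKeeper 2181 and Kafka 9092. Below is a minimal sketch that uses bash's built-in `/dev/tcp` redirection, so it needs neither `nc` nor `telnet`. `check_ports` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print open/closed for each TCP port on HOST and return
# non-zero if any port is closed. `timeout` (GNU coreutils) caps each attempt at 2s.
check_ports() {
  local host="$1"
  shift
  local port failed=0
  for port in "$@"; do
    if timeout 2 bash -c '</dev/tcp/"$1"/"$2"' _ "$host" "$port" 2>/dev/null; then
      echo "open   $host:$port"
    else
      echo "closed $host:$port"
      failed=1
    fi
  done
  return "$failed"
}

# Example: check_ports 172.16.52.115 9200 5601 9600 2181 9092
```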

7.1 Verify Kafka: check the topics

```bash
docker exec -it elk-kaf kafka-topics --list --bootstrap-server 172.16.52.115:9092
```

7.2 Check that Kafka produces and consumes messages

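```bash
docker exec -it elk-kaf bash
# Terminal 1: start a producer and type a few test messages
kafka-console-producer --topic test01 --bootstrap-server kafka:9092
# Terminal 2 (a second docker exec session): the messages should appear here
kafka-console-consumer --topic test01 --from-beginning --bootstrap-server kafka:9092 --group group01
```

List topics: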

```bash
kafka-topics --bootstrap-server kafka:9092 --list
```

List consumer groups:

```bash
kafka-consumer-groups --bootstrap-server localhost:9092 --list
```
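To see whether Logstash keeps up with Filebeat, describe its consumer group. The kafka input's default `group_id` is `logstash`, and `--describe` prints a per-partition `LAG` column. Below is a minimal sketch that totals that column. `sum_consumer_lag` is a hypothetical helper. It locates the column by its header name, and it counts `-` entries (no committed offset yet) as zero.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `kafka-consumer-groups --describe` output on stdin
# and print the sum of the LAG column (0 if nothing can be summed).
sum_consumer_lag() {
  awk '
    {
      if (lag_col == 0) {                       # still looking for the header row
        for (i = 1; i <= NF; i++) if ($i == "LAG") lag_col = i
        next
      }
      if ($lag_col ~ /^[0-9]+$/) total += $lag_col
    }
    END { print total + 0 }
  '
}

# Example:
# docker exec elk-kaf kafka-consumer-groups --bootstrap-server localhost:9092 --describe --group logstash | sum_consumer_lag
```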