Deploying Kubernetes from binaries (2)

Add the kernel parameters (sysctl) for k8s

Run on all nodes

bash

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

EOF

bash

cat <<EOF | tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

Then reload the configuration:

bash

sysctl --system

What each entry does:

bash

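# Core networking

net.ipv4.ip_forward = 1 # Enable IPv4 forwarding, so the node can route packets between interfaces (for example between Pods and the host network)

net.bridge.bridge-nf-call-iptables = 1 # Pass bridged traffic through iptables. By default, traffic crossing a Linux bridge bypasses iptables. Kubernetes Services and network policies depend on iptables rules, so CNI plugins need this to work correctly

net.bridge.bridge-nf-call-ip6tables = 1 # Same as above, for IPv6 and ip6tables

fs.may_detach_mounts = 1 # Allow mount points to be detached safely. The container runtime (e.g. containerd) must be able to unmount container filesystems without hitting "device or resource busy" errors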
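Several keys in this list only exist on some kernels. The net.bridge.* keys appear only after the br_netfilter module is loaded (`modprobe br_netfilter`). fs.may_detach_mounts is specific to RHEL/CentOS 7 kernels. On recent kernels, net.ipv4.ip_conntrack_max is usually absent. On other systems `sysctl --system` may therefore print errors for these keys.

The sketch below compares a sysctl file with the values the running kernel reports. It assumes a Linux host that exposes the parameters under /proc/sys. `check_sysctl_file` and the `PROC_SYS` override (used only for testing) are hypothetical helpers, not part of any tool.

```bash
# Sketch: compare a sysctl .conf file with the values the running kernel reports.
# Assumption: parameters are read from /proc/sys; PROC_SYS may point elsewhere (used for testing).
PROC_SYS=${PROC_SYS:-/proc/sys}

check_sysctl_file() {
    local conf=$1 status=0 key value path actual
    [ -r "$conf" ] || { echo "cannot read $conf" >&2; return 2; }
    while IFS='=' read -r key value; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')    # drop spaces around the key
        [ -z "$key" ] && continue                         # skip blank lines
        value=$(printf '%s' "$value" | xargs)             # trim spaces around the value
        # net.ipv4.ip_forward -> $PROC_SYS/net/ipv4/ip_forward
        path="$PROC_SYS/$(printf '%s' "$key" | tr '.' '/')"
        if [ ! -r "$path" ]; then
            printf '%-8s %s\n' MISSING "$key"             # key unknown to this kernel
            status=1
            continue
        fi
        actual=$(xargs < "$path")                         # collapse tabs/newlines to single spaces
        if [ "$actual" = "$value" ]; then
            printf '%-8s %s = %s\n' OK "$key" "$actual"
        else
            printf '%-8s %s expected=%s actual=%s\n' MISMATCH "$key" "$value" "$actual"
            status=1
        fi
    done <<EOF
$(sed -e 's/#.*//' "$conf")
EOF
    return "$status"
}
```

For example, `check_sysctl_file /etc/sysctl.d/k8s.conf` prints one OK, MISMATCH or MISSING line per key. It returns non-zero if any key differs or is missing.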

bash

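# Memory management

vm.overcommit_memory=1 # Memory overcommit policy; 1 means always overcommit (allocations are never refused up front)

vm.panic_on_oom=0 # Do not panic when memory runs out: instead of crashing and rebooting, the kernel invokes the OOM killer to kill processes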

bash

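# File system limits

fs.inotify.max_user_watches=89100 # Raise the per-user number of files/directories inotify can watch

fs.file-max=52706963 # System-wide limit on open file descriptors

fs.nr_open=52706963 # Maximum number of files a single process can open (the ceiling for its nofile limit)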

bash

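# Connection tracking

net.netfilter.nf_conntrack_max=2310720 # Maximum number of entries in the connection-tracking table. The kernel tracks every TCP/UDP connection, and a Kubernetes cluster carries a lot of cross-node Pod traffic, so it needs a large table

net.ipv4.ip_conntrack_max = 65536 # Legacy conntrack table size, kept for compatibility with older kernels; newer kernels use nf_conntrack_max above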
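To see how close a node is to nf_conntrack_max, compare it with the number of connections currently tracked. When the table fills up, the kernel drops new connections and logs a "table full, dropping packet" message.

A sketch, assuming the nf_conntrack module is loaded (the files below only exist then). `conntrack_usage` and `PROC_SYS` are hypothetical names.

```bash
# Sketch: show how full the connection-tracking table is.
# nf_conntrack_count / nf_conntrack_max only exist once the nf_conntrack module is loaded.
# PROC_SYS is a hypothetical override for testing; it defaults to /proc/sys.
conntrack_usage() {
    local base="${PROC_SYS:-/proc/sys}/net/netfilter" count max
    if [ ! -r "$base/nf_conntrack_count" ] || [ ! -r "$base/nf_conntrack_max" ]; then
        echo "nf_conntrack is not loaded" >&2
        return 1
    fi
    count=$(cat "$base/nf_conntrack_count")
    max=$(cat "$base/nf_conntrack_max")
    # integer percentage, rounded down
    echo "conntrack: $count / $max ($((count * 100 / max))%)"
}
```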

bash

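# TCP stack tuning

net.ipv4.tcp_keepalive_time = 600 # Idle time in seconds before keepalive probing starts: after 10 minutes without traffic, keepalive probes are sent to detect dead connections

net.ipv4.tcp_keepalive_probes = 3 # Number of unanswered keepalive probes before the connection is dropped

net.ipv4.tcp_keepalive_intvl =15 # Interval between keepalive probes, in seconds

net.ipv4.tcp_max_tw_buckets = 36000 # Maximum number of sockets in TIME_WAIT, so bursts of short-lived connections cannot exhaust resources

net.ipv4.tcp_tw_reuse = 1 # Let new outgoing connections reuse sockets in TIME_WAIT, reducing local port exhaustion

net.ipv4.tcp_max_orphans = 327680 # Maximum number of orphaned sockets (no longer attached to any process); beyond this they are reset, which caps the memory they can use

net.ipv4.tcp_orphan_retries = 3 # Number of retries before an orphaned connection is killed

net.ipv4.tcp_syncookies = 1 # Enable SYN cookies to protect against SYN flood attacks

net.ipv4.tcp_max_syn_backlog = 16384 # Size of the SYN (half-open connection) queue, to absorb connection bursts

net.ipv4.tcp_timestamps = 0 # Disable TCP timestamps. Note that tcp_tw_reuse only reuses a TIME_WAIT socket when timestamps were in use, so with this set to 0 the tcp_tw_reuse setting above has little effect

net.core.somaxconn = 16384 # Maximum accept-queue length of a listening socket; affects how many concurrent connections load balancers and servers can queue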
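The three keepalive values combine. A peer that has silently gone away is detected after the idle time plus all probe intervals: 600 + 3 × 15 = 645 seconds (10 min 45 s). A small sketch of the arithmetic (`keepalive_timeout` is a hypothetical helper name):

```bash
# Sketch: how long after the last traffic the kernel drops a TCP connection
# whose peer has silently gone away:
#   tcp_keepalive_time + tcp_keepalive_probes * tcp_keepalive_intvl
keepalive_timeout() {
    local idle=$1 probes=$2 intvl=$3
    echo $((idle + probes * intvl))
}

keepalive_timeout 600 3 15     # prints 645 (10 min 45 s) with the values above
```

For comparison, the usual kernel defaults (7200, 9, 75) give 7875 seconds, a little over two hours.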

bash

# IPv6

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0 # These three lines keep IPv6 enabled, which dual-stack clusters need

net.ipv6.conf.all.forwarding = 1 # Enable IPv6 forwarding

Configure hosts

Run on all nodes

bash

vim /etc/hosts

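# Append the following entries at the end

192.168.153.161 master1 # node IP + hostname

192.168.153.162 worker1

...

List every node of the cluster here. The etcd steps below run etcd on three master nodes (192.168.153.161 to 192.168.153.163) and copy files to them using the hostnames master2 and master3. Those names must therefore also resolve through this file, so adjust the entries to your own node layout.

Installing the cfssl toolset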

CFSSL (CloudFlare's PKI/TLS toolkit) is a PKI/TLS toolset developed by CloudFlare for issuing, managing and verifying certificates. Here it is used to create the certificates.

This only needs to be run on master1

Download the tools (from GitHub)

bash

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.3/cfssl_1.6.3_linux_amd64 -O cfssl

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.3/cfssljson_1.6.3_linux_amd64 -O cfssljson

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.3/cfssl-certinfo_1.6.3_linux_amd64 -O cfssl-certinfo

Move them to /usr/local/bin/ and make them executable

bash

mv cfssl* /usr/local/bin

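chmod u+x /usr/local/bin/cfssl*

etcd cluster deployment

etcd is the distributed, highly available key-value store of a K8s cluster. It stores all of the cluster's state and keeps the whole system consistent and reliable.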

Installing etcd

Download the release archive (from GitHub)

Run only on master1

bash

wget https://github.com/etcd-io/etcd/releases/download/v3.5.7/etcd-v3.5.7-linux-amd64.tar.gz

Extract it and enter the directory

bash

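tar -xvf etcd-v3.5.7-linux-amd64.tar.gz && cd etcd-v3.5.7-linux-amd64

Move the binaries (etcd, etcdctl and etcdutl) to /usr/local/bin

bash

mv etcd* /usr/local/bin

Create the etcd certificate directory

Run on all master nodes

Create the directory for the certificates

bash

mkdir -p /opt/etcd/ssl && cd /opt/etcd/ssl

Configure the etcd CA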

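The CA signs the certificates issued in the following steps.

Run only on master1

Create the CA configuration file. Think of it as the CA's rulebook for issuing certificates.

It defines how the CA signs certificates for others (validity period, usages, etc.). It is consulted every time a certificate is signed and can be reused.

It is the equivalent of a company's "rules for issuing staff credentials".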

json

cat > ca-config.json << "EOF"

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"etcd": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

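EOF

Structure explained (annotated for reading only: JSON does not allow // comments, so the file itself must use the version above)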

json

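{

"signing": { // certificate signing settings

"default": { // default settings

"expiry": "876000h" // default validity = 100 years (in hours); adjust as needed

},

"profiles": { // signing profiles for different certificate purposes

"etcd": { // a profile named "etcd"

"usages": [ // what the certificate may be used for (X.509 key usage and extended key usage)

"signing", // digital signatures

"key encipherment", // key encipherment

"server auth", // TLS server authentication

"client auth" // TLS client authentication

],

"expiry": "876000h" // certificate validity = 100 years (in hours); adjust as needed

}

}

}

}

Create the CA certificate signing request (CSR) file. Think of it as the CA's declaration of identity.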

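It defines who the CA itself is (name, location, key algorithm, etc.). It is used only once, when this CA (here, the CA dedicated to etcd) is created, and its contents are written into the CA certificate.

It is the equivalent of a company's "business license application".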

json

cat > ca-csr.json << "EOF"

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

Structure explained (annotated for reading only; the actual file must not contain the // comments)

json

{

// Common Name - the main identifier of the certificate holder; appears in the certificate as CN=etcd

"CN": "etcd",

// key settings - algorithm and key strength

"key": {

"algo": "rsa", // algorithm: RSA

"size": 2048 // key length: 2048 bits

},

// subject details; these appear in the certificate's Subject field

"names": [

{

"C": "CN", // Country - two-letter country code

"ST": "Beijing", // State or Province

"L": "Beijing", // Locality - city

"O": "etcd", // Organization - company or institution

"OU": "Etcd Security" // Organizational Unit - department or team

}

],

// CA-specific options, applied when the CA is created with cfssl gencert -initca

"ca": {

"expiry": "876000h" // validity of the CA certificate itself: 100 years

}

}

Generate the CA certificate and private key

bash

cfssl gencert -initca ca-csr.json | cfssljson -bare ca && ls *pem
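Before signing anything with the new CA, it is worth checking what cfssljson wrote out (ca.pem, ca-key.pem and ca.csr). A sketch, assuming the openssl CLI is installed. `show_cert` is a hypothetical helper; `cfssl-certinfo -cert ca.pem` shows similar information as JSON.

```bash
# Sketch: inspect a certificate such as the CA produced by cfssljson (ca.pem).
# Assumes the openssl CLI is installed.
show_cert() {
    local cert=$1
    # subject, issuer and validity period, one per line
    openssl x509 -in "$cert" -noout -subject -issuer -startdate -enddate || return 1
    # a CA certificate carries "CA:TRUE" in its Basic Constraints extension
    openssl x509 -in "$cert" -noout -text | grep -A1 'Basic Constraints' | tail -n 1 | xargs
}
```

Run it as `show_cert /opt/etcd/ssl/ca.pem`. Subject and issuer should be identical because the CA is self-signed. notAfter should lie about 100 years ahead, and the last line should read CA:TRUE.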

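Generate the etcd certificate

Create the etcd CSR file etcd-csr.json. The hosts list must contain the IP address of every etcd cluster member. etcd runs on the master nodes here, so these are the master IPs. Do not put comments in this file, because cfssl expects plain JSON.

Run on master1

json

cat > etcd-csr.json << "EOF"

{

"CN": "etcd",

"hosts": [

"192.168.153.161",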

"192.168.153.162",

"192.168.153.163"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "BeiJing",

"L": "BeiJing",

"O": "etcd",

"OU": "System"

}

]

}

EOF

Generate the etcd certificate and private key

bash

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd etcd-csr.json | cfssljson -bare etcd && ls etcd*.pem
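The etcd certificate must chain to the etcd CA and list every member IP. Otherwise, clients and peers that connect by IP will typically fail TLS verification. A sketch that checks both, assuming the openssl CLI; `check_etcd_cert` is a hypothetical helper.

```bash
# Sketch: check that a certificate chains to the given CA and that every expected
# IP address appears in its Subject Alternative Name (SAN) list. Assumes the openssl CLI.
check_etcd_cert() {
    local ca=$1 cert=$2 ip sans
    shift 2
    # "openssl verify" prints "<file>: OK" when the chain is valid
    if ! openssl verify -CAfile "$ca" "$cert" 2>&1 | grep -q ': OK$'; then
        echo "chain: verification against $ca failed"
        return 1
    fi
    # collect SAN entries such as "IP Address:192.168.153.161", one per line
    sans=$(openssl x509 -in "$cert" -noout -text | grep -oE 'IP Address:[0-9A-Fa-f:.]+' || true)
    for ip in "$@"; do
        if ! printf '%s\n' "$sans" | grep -qxF "IP Address:$ip"; then
            echo "SAN missing: $ip"
            return 1
        fi
    done
    echo "chain OK, all SANs present"
}
```

For this cluster, run `check_etcd_cert ca.pem etcd.pem 192.168.153.161 192.168.153.162 192.168.153.163` inside /opt/etcd/ssl.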

Copy the certificates to the other master nodes

Run on master1

bash

NODES='master2 master3'; \

for NODE in $NODES; \

do \

ssh $NODE "mkdir -p /opt/etcd/ssl"; \

for FILE in ca.pem etcd-key.pem etcd.pem; \

do \

scp /opt/etcd/ssl/${FILE} $NODE:/opt/etcd/ssl/${FILE};\

done \

done

If ssh asks whether you want to continue connecting, type yes.

Cluster configuration generation script

Run only on master1

bash

cd /tmp && vim etcd_config_gen.sh # save the following content into this file

etcd1=192.168.153.161

etcd2=192.168.153.162

etcd3=192.168.153.163

TOKEN=etcd-cluster

ETCDHOSTS=($etcd1 $etcd2 $etcd3)

NAMES=("etcd1" "etcd2" "etcd3")

for i in "${!ETCDHOSTS[@]}"; do

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/$NAME.conf

# [member]

ETCD_NAME="$NAME"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://$HOST:2380"

ETCD_LISTEN_CLIENT_URLS="https://$HOST:2379"

# [cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$HOST:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://$HOST:2379"

ETCD_INITIAL_CLUSTER="${NAMES[0]}=https://${ETCDHOSTS[0]}:2380,${NAMES[1]}=https://${ETCDHOSTS[1]}:2380,${NAMES[2]}=https://${ETCDHOSTS[2]}:2380"

ETCD_INITIAL_CLUSTER_TOKEN="$TOKEN"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

done

ls /tmp/etcd*

scp /tmp/etcd2.conf $etcd2:/opt/etcd/etcd.conf

scp /tmp/etcd3.conf $etcd3:/opt/etcd/etcd.conf

cp /tmp/etcd1.conf /opt/etcd/etcd.conf

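rm -f /tmp/etcd*.conf

Script explained (annotated copy for reading only: the comments inside the heredoc would end up in the generated files, so run the version above)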

bash

etcd1=192.168.153.161

etcd2=192.168.153.162

etcd3=192.168.153.163 # IP addresses of the etcd nodes

TOKEN=etcd-cluster # cluster token: a unique identifier for this cluster

ETCDHOSTS=($etcd1 $etcd2 $etcd3)

NAMES=("etcd1" "etcd2" "etcd3")

for i in "${!ETCDHOSTS[@]}"; do # loop over the array indexes and generate a config file for each node

HOST=${ETCDHOSTS[$i]}

NAME=${NAMES[$i]}

cat << EOF > /tmp/$NAME.conf # write the config file content

# [member] - settings for this node

ETCD_NAME="$NAME" # member name: etcd1/etcd2/etcd3

ETCD_DATA_DIR="/var/lib/etcd/default.etcd" # data directory

ETCD_LISTEN_PEER_URLS="https://$HOST:2380" # address to listen on for traffic from the other members

ETCD_LISTEN_CLIENT_URLS="https://$HOST:2379" # address to listen on for client traffic

# [cluster] - cluster settings

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$HOST:2380" # peer URL advertised to the other members

ETCD_ADVERTISE_CLIENT_URLS="https://$HOST:2379" # client URL advertised to clients

ETCD_INITIAL_CLUSTER="${NAMES[0]}=https://${ETCDHOSTS[0]}:2380,${NAMES[1]}=https://${ETCDHOSTS[1]}:2380,${NAMES[2]}=https://${ETCDHOSTS[2]}:2380" # all members of the initial cluster

ETCD_INITIAL_CLUSTER_TOKEN="$TOKEN" # cluster token (keeps members from joining the wrong cluster)

ETCD_INITIAL_CLUSTER_STATE="new" # new = bootstrap a new cluster; existing = join an existing one

EOF

done

ls /tmp/etcd*

scp /tmp/etcd2.conf $etcd2:/opt/etcd/etcd.conf # copy each config file to its node

scp /tmp/etcd3.conf $etcd3:/opt/etcd/etcd.conf

cp /tmp/etcd1.conf /opt/etcd/etcd.conf # local node (etcd1): copy directly

rm -f /tmp/etcd*.conf # remove the temporary files

Run the script

bash

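bash etcd_config_gen.sh # the script uses bash arrays, so run it with bash rather than sh (sh is dash on Debian/Ubuntu)

Starting the etcd service

Set up the systemd service

Run only on master1

bash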

cat > /usr/lib/systemd/system/etcd.service <<"EOF"

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

EnvironmentFile=/opt/etcd/etcd.conf

ExecStart=/usr/local/bin/etcd \

--cert-file=/opt/etcd/ssl/etcd.pem \

--key-file=/opt/etcd/ssl/etcd-key.pem \

--peer-cert-file=/opt/etcd/ssl/etcd.pem \

--peer-key-file=/opt/etcd/ssl/etcd-key.pem \

--trusted-ca-file=/opt/etcd/ssl/ca.pem \

--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

Copy the etcd binaries and the service file to the other etcd nodes

bash

NODES='master2 master3'; \

for NODE in $NODES; \

do \

scp /usr/local/bin/etcd* $NODE:/usr/local/bin/;\

scp /usr/lib/systemd/system/etcd.service $NODE:/usr/lib/systemd/system/;\

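done

Start the cluster. Run the following on all three master nodes at roughly the same time, because a member cannot finish bootstrapping until a majority of the members are up.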

bash

systemctl daemon-reload && systemctl enable --now etcd

If etcd fails to start, check the logs with tail -f /var/log/messages or journalctl -u etcd.

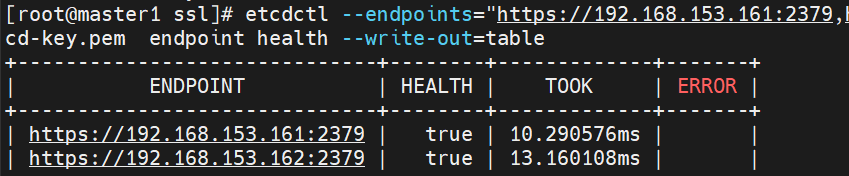

Verifying the cluster

bash

etcdctl --endpoints="https://192.168.153.161:2379,https://192.168.153.162:2379,https://192.168.153.163:2379" --cacert=/opt/etcd/ssl/ca.pem --cert=/opt/etcd/ssl/etcd.pem --key=/opt/etcd/ssl/etcd-key.pem endpoint health --write-out=table

HEALTH should be true for every endpoint.
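The long etcdctl command line above can be wrapped once and reused. A sketch, assuming the certificate paths from this article and the three member IPs. `build_endpoints`, `ETCD_ENDPOINTS` and `etcdctl_tls` are hypothetical names.

```bash
# Sketch: build the --endpoints value from member IPs (client port 2379) and wrap
# etcdctl with the TLS flags used in this article. Paths assume /opt/etcd/ssl as above.
build_endpoints() {
    local ip out=
    for ip in "$@"; do
        out="${out:+$out,}https://$ip:2379"   # add a comma separator after the first entry
    done
    printf '%s\n' "$out"
}

ETCD_ENDPOINTS=$(build_endpoints 192.168.153.161 192.168.153.162 192.168.153.163)

etcdctl_tls() {
    etcdctl --endpoints="$ETCD_ENDPOINTS" \
        --cacert=/opt/etcd/ssl/ca.pem \
        --cert=/opt/etcd/ssl/etcd.pem \
        --key=/opt/etcd/ssl/etcd-key.pem \
        "$@"
}
```

The wrapper is used like this:

- `etcdctl_tls endpoint health --write-out=table` repeats the check above.
- `etcdctl_tls endpoint status --write-out=table` also shows which member is the leader.
- `etcdctl_tls member list --write-out=table` lists the members.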