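Preface

This article covers only the deployment process for LiteLLM; it is not a LiteLLM usage tutorial.

Deployment environment: Alibaba Cloud ACK

Deployment stack: PostgreSQL + Redis + LiteLLM

Reference: official documentation

LiteLLM Deployment Process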

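Redis Deployment

Redis lets multiple LiteLLM containers share routing state, which enables load balancing across them.

This deployment uses Bitnami's Redis image. The environment variables used in the YAML can be added or changed according to the corresponding Bitnami documentation.

Note that Bitnami stopped providing free images in the second half of 2025. The YAML below therefore omits a concrete image address; sync the image yourself through the 渡渡鸟 mirror site or another image mirror.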
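The `litellm-redis` Secret at the end of the YAML below stores `redis-password` under `data`, and Kubernetes expects those values to be base64-encoded. The same applies to `postgres-password` in the PostgreSQL Secret later on.

A common mistake is `echo 'pw' | base64`, which also encodes the trailing newline into the password. Below is a minimal bash sketch. The helper name `b64_secret` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: base64-encode a value for a Kubernetes Secret's `data` field.
# printf '%s' avoids the trailing newline that `echo` would add to the password,
# and tr removes the line wrapping that GNU base64 inserts every 76 characters.
b64_secret() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Usage (placeholder password, replace with your own):
#   b64_secret 'my-redis-password'
# Paste the output after `redis-password:` in the litellm-redis Secret.
```

As an alternative, a Secret also accepts plain-text values under `stringData` instead of `data`, and Kubernetes performs the encoding itself.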

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
  labels:
    app.kubernetes.io/component: master
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-master
  namespace: litellm
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: master
      app.kubernetes.io/instance: litellm
      app.kubernetes.io/name: redis
  serviceName: litellm-redis-headless
  template:
    metadata:
      annotations:
      labels:
        app.kubernetes.io/component: master
        app.kubernetes.io/instance: litellm
        app.kubernetes.io/name: redis
    spec:
      containers:
        - args:
            - -c
            - /opt/bitnami/scripts/start-scripts/start-master.sh
          command:
            - /bin/bash
          env:
            - name: BITNAMI_DEBUG
              value: "false"
            - name: REDIS_REPLICATION_MODE
              value: master
            - name: ALLOW_EMPTY_PASSWORD
              value: "no"
            - name: REDIS_PASSWORD
              valueFrom:
                secretKeyRef:
                  key: redis-password
                  name: litellm-redis
            - name: REDIS_TLS_ENABLED
              value: "no"
            - name: REDIS_PORT
              value: "6379"
          image: ## FIXME: this deployment uses Bitnami's redis:7.0.12
          imagePullPolicy: IfNotPresent
          livenessProbe:
            exec:
              command:
                - sh
                - -c
                - /health/ping_liveness_local.sh 5
            failureThreshold: 5
            initialDelaySeconds: 20
            periodSeconds: 5
            successThreshold: 1
            timeoutSeconds: 6
          name: redis
          ports:
            - containerPort: 6379
              name: redis
              protocol: TCP
          readinessProbe:
            exec:
              command:
                - sh
                - -c
                - /health/ping_readiness_local.sh 1
            failureThreshold: 5
            initialDelaySeconds: 20
            periodSeconds: 5
            successThreshold: 1
            timeoutSeconds: 2
          securityContext:
            runAsUser: 1001
          volumeMounts:
            - mountPath: /opt/bitnami/scripts/start-scripts
              name: start-scripts
            - mountPath: /health
              name: health
            - mountPath: /data
              name: redis-data
            - mountPath: /opt/bitnami/redis/mounted-etc
              name: config
            - mountPath: /opt/bitnami/redis/etc/
              name: redis-tmp-conf
            - mountPath: /tmp
              name: tmp
      dnsPolicy: ClusterFirst
      imagePullSecrets:
        - name: deepflow ## FIXME: environment-specific pull secret; replace with your own or remove
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext:
        fsGroup: 1001
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 493
            name: litellm-redis-scripts
          name: start-scripts
        - configMap:
            defaultMode: 493
            name: litellm-redis-health
          name: health
        - configMap:
            defaultMode: 420
            name: litellm-redis-configuration
          name: config
        - emptyDir: {}
          name: redis-tmp-conf
        - emptyDir: {}
          name: tmp
        ## This deployment stores data in a temporary emptyDir (lost when the Pod is deleted)
        - emptyDir: {}
          name: redis-data
  ## Choose either a PVC or emptyDir for data storage here
  # volumeClaimTemplates:
  #   - apiVersion: v1
  #     kind: PersistentVolumeClaim
  #     metadata:
  #       labels:
  #         app.kubernetes.io/component: master
  #         app.kubernetes.io/instance: litellm
  #         app.kubernetes.io/name: redis
  #       name: redis-data
  #       namespace: litellm
  #     spec:
  #       accessModes:
  #         - ReadWriteOnce
  #       resources:
  #         requests:
  #           storage: 20Gi
  #       ## Note: a StorageClass is not universal (it may not be usable in other regions or on other nodes)
  #       storageClassName: "alicloud-disk-essd"
  #       volumeMode: Filesystem
---
apiVersion: v1
data:
  master.conf: |-
    dir /data
    # User-supplied master configuration:
    rename-command FLUSHDB ""
    rename-command FLUSHALL ""
    # End of master configuration
  redis.conf: |-
    # User-supplied common configuration:
    # Enable AOF https://redis.io/topics/persistence#append-only-file
    appendonly yes
    # Disable RDB persistence, AOF persistence already enabled.
    save ""
    # End of common configuration
  replica.conf: |-
    dir /data
    # User-supplied replica configuration:
    rename-command FLUSHDB ""
    rename-command FLUSHALL ""
    # End of replica configuration
kind: ConfigMap
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-configuration
  namespace: litellm
---
apiVersion: v1
data:
  ping_liveness_local.sh: |-
    #!/bin/bash
    [[ -f $REDIS_PASSWORD_FILE ]] && export REDIS_PASSWORD="$(< "${REDIS_PASSWORD_FILE}")"
    [[ -n "$REDIS_PASSWORD" ]] && export REDISCLI_AUTH="$REDIS_PASSWORD"
    response=$(
      timeout -s 3 $1 \
      redis-cli \
        -h localhost \
        -p $REDIS_PORT \
        ping
    )
    if [ "$?" -eq "124" ]; then
      echo "Timed out"
      exit 1
    fi
    responseFirstWord=$(echo $response | head -n1 | awk '{print $1;}')
    if [ "$response" != "PONG" ] && [ "$responseFirstWord" != "LOADING" ] && [ "$responseFirstWord" != "MASTERDOWN" ]; then
      echo "$response"
      exit 1
    fi
  ping_liveness_local_and_master.sh: |-
    script_dir="$(dirname "$0")"
    exit_status=0
    "$script_dir/ping_liveness_local.sh" $1 || exit_status=$?
    "$script_dir/ping_liveness_master.sh" $1 || exit_status=$?
    exit $exit_status
  ping_liveness_master.sh: |-
    #!/bin/bash
    [[ -f $REDIS_MASTER_PASSWORD_FILE ]] && export REDIS_MASTER_PASSWORD="$(< "${REDIS_MASTER_PASSWORD_FILE}")"
    [[ -n "$REDIS_MASTER_PASSWORD" ]] && export REDISCLI_AUTH="$REDIS_MASTER_PASSWORD"
    response=$(
      timeout -s 3 $1 \
      redis-cli \
        -h $REDIS_MASTER_HOST \
        -p $REDIS_MASTER_PORT_NUMBER \
        ping
    )
    if [ "$?" -eq "124" ]; then
      echo "Timed out"
      exit 1
    fi
    responseFirstWord=$(echo $response | head -n1 | awk '{print $1;}')
    if [ "$response" != "PONG" ] && [ "$responseFirstWord" != "LOADING" ]; then
      echo "$response"
      exit 1
    fi
  ping_readiness_local.sh: |-
    #!/bin/bash
    [[ -f $REDIS_PASSWORD_FILE ]] && export REDIS_PASSWORD="$(< "${REDIS_PASSWORD_FILE}")"
    [[ -n "$REDIS_PASSWORD" ]] && export REDISCLI_AUTH="$REDIS_PASSWORD"
    response=$(
      timeout -s 3 $1 \
      redis-cli \
        -h localhost \
        -p $REDIS_PORT \
        ping
    )
    if [ "$?" -eq "124" ]; then
      echo "Timed out"
      exit 1
    fi
    if [ "$response" != "PONG" ]; then
      echo "$response"
      exit 1
    fi
  ping_readiness_local_and_master.sh: |-
    script_dir="$(dirname "$0")"
    exit_status=0
    "$script_dir/ping_readiness_local.sh" $1 || exit_status=$?
    "$script_dir/ping_readiness_master.sh" $1 || exit_status=$?
    exit $exit_status
  ping_readiness_master.sh: |-
    #!/bin/bash
    [[ -f $REDIS_MASTER_PASSWORD_FILE ]] && export REDIS_MASTER_PASSWORD="$(< "${REDIS_MASTER_PASSWORD_FILE}")"
    [[ -n "$REDIS_MASTER_PASSWORD" ]] && export REDISCLI_AUTH="$REDIS_MASTER_PASSWORD"
    response=$(
      timeout -s 3 $1 \
      redis-cli \
        -h $REDIS_MASTER_HOST \
        -p $REDIS_MASTER_PORT_NUMBER \
        ping
    )
    if [ "$?" -eq "124" ]; then
      echo "Timed out"
      exit 1
    fi
    if [ "$response" != "PONG" ]; then
      echo "$response"
      exit 1
    fi
kind: ConfigMap
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-health
  namespace: litellm
---
apiVersion: v1
data:
  start-master.sh: |
    #!/bin/bash
    [[ -f $REDIS_PASSWORD_FILE ]] && export REDIS_PASSWORD="$(< "${REDIS_PASSWORD_FILE}")"
    if [[ ! -f /opt/bitnami/redis/etc/master.conf ]];then
        cp /opt/bitnami/redis/mounted-etc/master.conf /opt/bitnami/redis/etc/master.conf
    fi
    if [[ ! -f /opt/bitnami/redis/etc/redis.conf ]];then
        cp /opt/bitnami/redis/mounted-etc/redis.conf /opt/bitnami/redis/etc/redis.conf
    fi
    ARGS=("--port" "${REDIS_PORT}")
    ARGS+=("--requirepass" "${REDIS_PASSWORD}")
    ARGS+=("--masterauth" "${REDIS_PASSWORD}")
    ARGS+=("--include" "/opt/bitnami/redis/etc/redis.conf")
    ARGS+=("--include" "/opt/bitnami/redis/etc/master.conf")
    exec redis-server "${ARGS[@]}"
kind: ConfigMap
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-scripts
  namespace: litellm
---
apiVersion: v1
data:
  redis-password: ## FIXME: set the Redis password yourself, base64-encoded
kind: Secret
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis
  namespace: litellm
type: Opaque
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-headless
  namespace: litellm
spec:
  clusterIP: None
  clusterIPs:
    - None
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: tcp-redis
      port: 6379
      protocol: TCP
      targetPort: redis
  publishNotReadyAddresses: true
  selector:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    app.kubernetes.io/component: master
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  name: litellm-redis-master
  namespace: litellm
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: tcp-redis
      port: 6379
      protocol: TCP
      targetPort: redis
  selector:
    app.kubernetes.io/component: master
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: redis
  type: ClusterIP
```

PostgreSQL Deployment

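Using Postgres as the backend database enables the virtual key and spend tracking features:

- Virtual keys: instead of static keys written into the config file, these are logical keys stored in the database. Without a database, these keys cannot be managed reliably.

- Spend tracking: every model call is persisted to the database, which makes billing, quotas and similar features possible.

This deployment uses Bitnami's PostgreSQL image. The environment variables used in the YAML can be added or changed according to the corresponding Bitnami documentation.

Note that Bitnami stopped providing free images in the second half of 2025. The YAML below therefore omits a concrete image address; sync the image yourself through the 渡渡鸟 mirror site or another image mirror.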
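Both features above depend on the `litellm` database, which the manifests below create through the `POSTGRES_DB` variable. After applying them, you can confirm the database exists by listing databases with `psql -lqt` inside the Pod and looking for the name in the first column.

Below is a minimal bash sketch. The function name `has_database` is hypothetical. The Pod name `litellm-postgresql-0` assumes the StatefulSet's default naming for replica 0.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read `psql -lqt` output on stdin and succeed if a
# database with the given name appears in the first ('|'-separated) column.
has_database() {
  cut -d '|' -f 1 | tr -d ' \t' | grep -qx -- "$1"
}

# Usage against the cluster (requires kubectl access); the password comes from
# the POSTGRES_PASSWORD variable already set inside the container:
#   kubectl -n litellm exec litellm-postgresql-0 -- \
#     sh -c 'PGPASSWORD="$POSTGRES_PASSWORD" psql -U postgres -h 127.0.0.1 -lqt' \
#     | has_database litellm && echo "litellm database found"
```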

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  annotations:
  labels:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
  name: litellm-postgresql
  namespace: litellm
spec:
  podManagementPolicy: OrderedReady
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: primary
      app.kubernetes.io/instance: litellm
      app.kubernetes.io/name: postgresql
  serviceName: litellm-postgresql-hl
  template:
    metadata:
      labels:
        app.kubernetes.io/component: primary
        app.kubernetes.io/instance: litellm
        app.kubernetes.io/name: postgresql
      name: litellm-postgresql
    spec:
      containers:
        - env:
            - name: BITNAMI_DEBUG
              value: "false"
            - name: POSTGRESQL_PORT_NUMBER
              value: "5432"
            - name: POSTGRESQL_VOLUME_DIR
              value: /bitnami/postgresql
            - name: PGDATA
              value: /bitnami/postgresql/data
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  key: postgres-password
                  name: litellm-postgresql
            - name: POSTGRES_DB
              value: litellm
            - name: POSTGRESQL_ENABLE_LDAP
              value: "no"
            - name: POSTGRESQL_ENABLE_TLS
              value: "no"
            - name: POSTGRESQL_LOG_HOSTNAME
              value: "false"
            - name: POSTGRESQL_LOG_CONNECTIONS
              value: "false"
            - name: POSTGRESQL_LOG_DISCONNECTIONS
              value: "false"
            - name: POSTGRESQL_PGAUDIT_LOG_CATALOG
              value: "off"
            - name: POSTGRESQL_CLIENT_MIN_MESSAGES
              value: error
            - name: POSTGRESQL_SHARED_PRELOAD_LIBRARIES
              value: pgaudit
          image: ## FIXME: this deployment uses Bitnami's postgresql:15.3.0-debian-11-r7
          imagePullPolicy: IfNotPresent
          livenessProbe:
            exec:
              command:
                - /bin/sh
                - -c
                - exec pg_isready -U "postgres" -d "dbname=litellm" -h 127.0.0.1 -p 5432
            failureThreshold: 6
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          name: postgresql
          ports:
            - containerPort: 5432
              name: tcp-postgresql
              protocol: TCP
          readinessProbe:
            exec:
              ## Note: this command was edited by hand to target the litellm database as the postgres user
              ## (the database itself is created via the POSTGRES_DB variable above), so the LiteLLM
              ## deployment can reference user postgres and database litellm directly
              command:
                - /bin/sh
                - -c
                - -e
                - |
                  exec pg_isready -U "postgres" -d "dbname=litellm" -h 127.0.0.1 -p 5432
                  [ -f /opt/bitnami/postgresql/tmp/.initialized ] || [ -f /bitnami/postgresql/.initialized ]
            failureThreshold: 6
            initialDelaySeconds: 5
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          resources:
            requests:
              cpu: 250m
              memory: 256Mi
          securityContext:
            runAsUser: 1001
          volumeMounts:
            - mountPath: /dev/shm
              name: dshm
            - mountPath: /bitnami/postgresql
              name: litellm-postgresql
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      securityContext:
        fsGroup: 1001
      volumes:
        - emptyDir:
            medium: Memory
          name: dshm
        ## This deployment uses a temporary emptyDir for storage (lost when the Pod is deleted)
        - emptyDir: {}
          name: litellm-postgresql
  ## Choose either a PVC or emptyDir for data storage here
  # volumeClaimTemplates:
  #   - apiVersion: v1
  #     kind: PersistentVolumeClaim
  #     metadata:
  #       name: litellm-postgresql
  #       namespace: litellm
  #     spec:
  #       accessModes:
  #         - ReadWriteOnce
  #       resources:
  #         requests:
  #           storage: 20Gi
  #       storageClassName: "alicloud-disk-topology-alltype"
  #       volumeMode: Filesystem
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
  name: litellm-postgresql
  namespace: litellm
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: tcp-postgresql
      port: 5432
      protocol: TCP
      targetPort: tcp-postgresql
  selector:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
  type: ClusterIP
---
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
    service.alpha.kubernetes.io/tolerate-unready-endpoints: "true"
  name: litellm-postgresql-hl
  namespace: litellm
spec:
  internalTrafficPolicy: Cluster
  ipFamilies:
    - IPv4
  ipFamilyPolicy: SingleStack
  ports:
    - name: tcp-postgresql
      port: 5432
      protocol: TCP
      targetPort: tcp-postgresql
  publishNotReadyAddresses: true
  selector:
    app.kubernetes.io/component: primary
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
  type: ClusterIP
---
apiVersion: v1
data:
  postgres-password: ## FIXME: set the postgres password yourself, base64-encoded
kind: Secret
metadata:
  annotations:
  labels:
    app.kubernetes.io/instance: litellm
    app.kubernetes.io/name: postgresql
  name: litellm-postgresql
  namespace: litellm
type: Opaque
```

LiteLLM Deployment

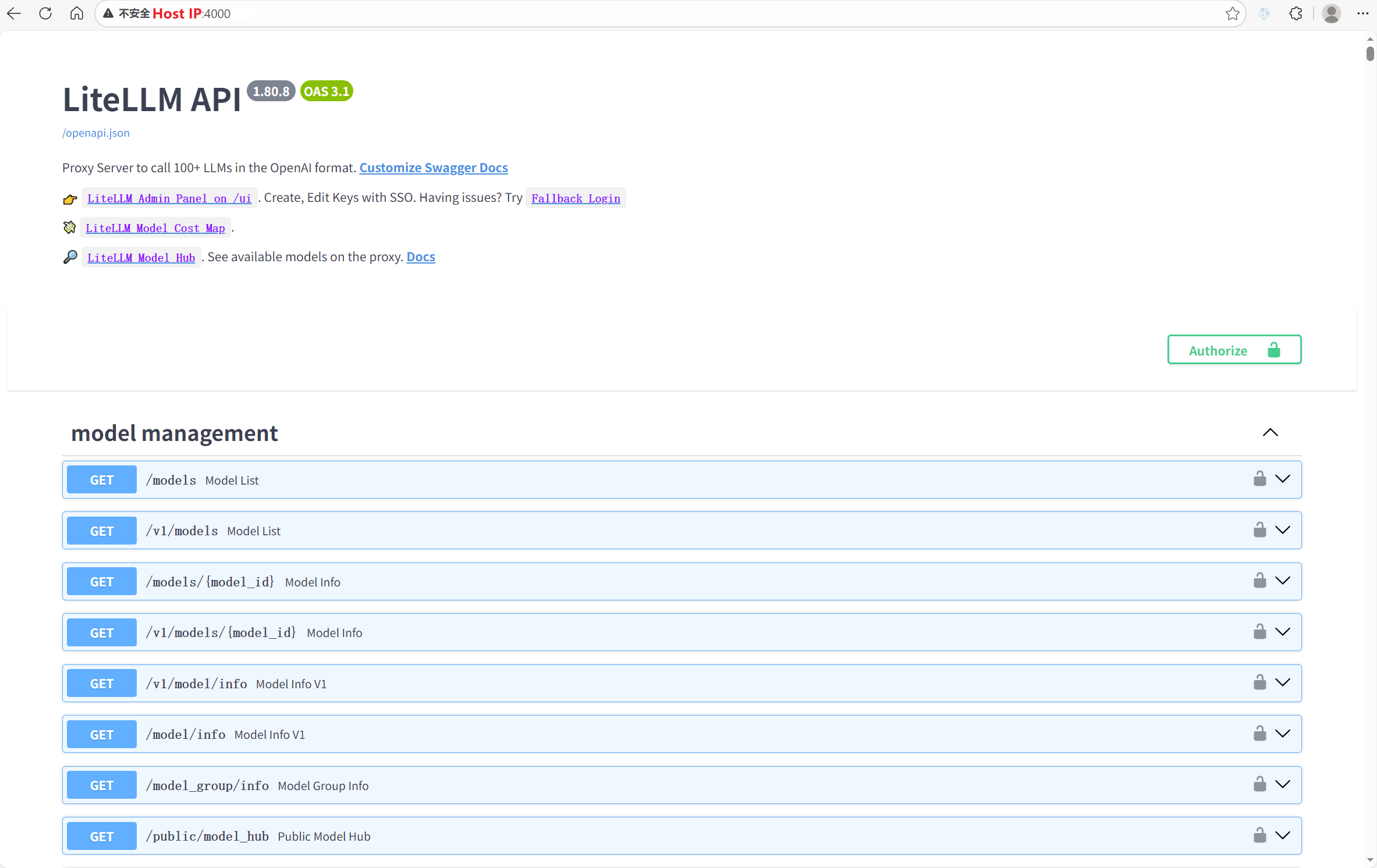

LiteLLM is a unified AI API proxy. Clients connect to a single LiteLLM address and do not need to care whether the backend is OpenAI, Azure, or another provider.
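The Deployment below leaves `LITELLM_MASTER_KEY` and `DATABASE_URL` empty. The bash sketch that follows fills them under these assumptions:

- The master key is a random value prefixed with `sk-`, as in the examples in LiteLLM's documentation.
- The database user is `postgres` and the database is `litellm`, as in the PostgreSQL manifests.
- The host is the `litellm-postgresql` Service, resolved through cluster DNS. This works from the host network because of `dnsPolicy: ClusterFirstWithHostNet`, assuming the default `cluster.local` domain.

A password containing characters such as `@`, `:` or `/` must be percent-encoded, or the URL is parsed incorrectly. All function names here are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for the empty env values in the LiteLLM Deployment.

# Percent-encode every byte outside the RFC 3986 "unreserved" set
# (A-Z a-z 0-9 - . _ ~) so the value is safe inside a URL.
urlencode() {
  local LC_ALL=C
  local s="$1" out="" c i
  for (( i = 0; i < ${#s}; i++ )); do
    c="${s:i:1}"
    case "$c" in
      [a-zA-Z0-9._~-]) out+="$c" ;;
      *) printf -v c '%%%02X' "'$c"; out+="$c" ;;
    esac
  done
  printf '%s\n' "$out"
}

# Assemble postgresql://<user>:<password>@<host>:<port>/<dbname>.
build_database_url() {
  local user="$1" password="$2" host="$3" port="$4" db="$5"
  printf 'postgresql://%s:%s@%s:%s/%s\n' \
    "$(urlencode "$user")" "$(urlencode "$password")" "$host" "$port" "$db"
}

# Generate a random master key: "sk-" followed by 48 hex characters
# (24 random bytes read from /dev/urandom).
gen_master_key() {
  printf 'sk-%s\n' "$(head -c 24 /dev/urandom | od -An -tx1 | tr -d ' \n')"
}

# Usage (placeholder password; the host assumes the default cluster domain):
#   build_database_url postgres 'p@ss:w/rd' \
#     litellm-postgresql.litellm.svc.cluster.local 5432 litellm
#   -> postgresql://postgres:p%40ss%3Aw%2Frd@litellm-postgresql.litellm.svc.cluster.local:5432/litellm
```

Put the printed values into the two `value:` fields. Alternatively, store them in the `litellm-secrets` Secret, which the Deployment already loads through `envFrom`. In that case, remove the explicit `env` entries, because `env` takes precedence over `envFrom`.

The Redis values for `router_settings` follow the same pattern:

- host `litellm-redis-master.litellm.svc.cluster.local` (again assuming the default cluster domain)
- port `6379`
- the password stored in the `litellm-redis` Secret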

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: litellm-config-file
  namespace: litellm
data:
  config.yaml: |
    ## Redis connection; fill in the values from the Redis deployment above
    router_settings:
      redis_host: <redis host>
      redis_password: <redis password>
      redis_port: <redis port>
---
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: litellm-secrets
  namespace: litellm
data:
  ## FIXME: see the official docs for what this variable does. This deployment does not configure
  ## model_list by hand in the ConfigMap, so it has no effect here:
  ## https://docs.litellm.ai/docs/proxy/deploy#kubernetes
  CA_AZURE_OPENAI_API_KEY:
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: litellm-deployment
  namespace: litellm
  labels:
    app: litellm
spec:
  selector:
    matchLabels:
      app: litellm
  template:
    metadata:
      labels:
        app: litellm
    spec:
      ## Note: because of network constraints at deployment time, this deployment uses the host network.
      ## Port 4000 is then bound on the node itself, so at most one LiteLLM Pod can run per node.
      hostNetwork: true
      dnsPolicy: ClusterFirstWithHostNet
      containers:
        - name: litellm
          image: "docker.litellm.ai/berriai/litellm:main-stable"
          args:
            - "--config"
            - "/app/proxy_server_config.yaml"
          ports:
            - containerPort: 4000
          volumeMounts:
            - name: config-volume
              mountPath: /app/proxy_server_config.yaml
              subPath: config.yaml
          env:
            ## Admin (master) key. FIXME: fill in
            - name: LITELLM_MASTER_KEY
              value:
            ## Postgres connection string. FIXME: fill in
            ## The user (postgres) and database (litellm) are described in the comment on the
            ## readiness probe command in the PostgreSQL YAML
            ## postgresql://<user>:<password>@<host>:<port>/<dbname>
            - name: DATABASE_URL
              value:
          envFrom:
            - secretRef:
                name: litellm-secrets
      volumes:
        - name: config-volume
          configMap:
            name: litellm-config-file
---
apiVersion: v1
kind: Service
metadata:
  name: litellm-service
  namespace: litellm
spec:
  selector:
    app: litellm
  ports:
    - protocol: TCP
      port: 4000
  type: ClusterIP
```

LiteLLM UI Preview