Introduction

A sample project that uses LangGraph to build a simple graph whose model loads MCP tools, with LangSmith used to inspect the results.

1. Code example

File name: main_demo_mcp.py

python

import main_utils

llm = main_utils.llm_ali # 加载一个llm

from langgraph.graph import StateGraph, START, END

from typing import TypedDict, Literal

import asyncio

from langchain.messages import HumanMessage

from langchain_mcp_adapters.client import MultiServerMCPClient

# modelscope 的 MCP广场

mcp_config_gaode = {"transport": "streamable_http",

"url": "https://mcp.api-inference.modelscope.net/******/mcp"}

mcp_config_12306 = {"transport": "streamable_http",

"url": "https://mcp.api-inference.modelscope.net/*****/mcp"

}

# mcp client

mcp_client_ = MultiServerMCPClient({"mcp_config_gaode": mcp_config_gaode,

"mcp_config_12306": mcp_config_12306})

class State(TypedDict):

query: str

answer: str | None

intent: Literal["chat", "qa", "code"] | None

def detect_intent(state: State) -> State:

query = state["query"]

prompt = f"""根据用户的问题, 判断用户的意图是聊天、问答还是代码

用户问题:{query}, 回答"chat", "qa", "code"中的一个"""

response = llm.invoke(prompt).content

response = response.strip()

if response not in ["chat", "qa", "code"]:

response = "qa"

return {"intent": response.strip()}

def route_func(state: State) -> str | None:

intent = state["intent"]

if intent == "chat":

return "chat_"

elif intent == "qa":

return "qa_"

elif intent == "code":

return "code_"

else:

return None

def chat_(state: State) -> State:

query = state["query"]

response = llm.invoke(query).content

return {"answer": response.strip()}

async def qa_(state: State) -> State:

query = state["query"]

from langchain.agents import create_agent

mcp_tools_list = await mcp_client_.get_tools() #获取mcp工具列表

# 打印 观看tool说明

for itemid, item in enumerate(mcp_tools_list):

print(itemid, item)

# 定义带mcp工具的智能体

agent_ = create_agent(model=llm, tools=mcp_tools_list)

response = await agent_.ainvoke({"messages": HumanMessage(content=query)})

response_1 = response["messages"]

response_2 = response_1[-1].content #最终的结果

return {"answer": response_2}

def code_(state: State) -> State:

query = state["query"]

prompt = f"""根据用户的问题, 生成对应的代码

用户问题:{query}, 只输出代码,不输出解释"""

response = llm.invoke(prompt).content

return {"answer": response.strip()}

builder = StateGraph(State)

builder.add_node("detect_intent", detect_intent)

builder.add_node("chat_", chat_)

builder.add_node("code_", code_)

builder.add_node("qa_", qa_)

builder.add_edge(START, "detect_intent")

builder.add_edge("chat_", END)

builder.add_edge("qa_", END)

builder.add_edge("code_", END)

builder.add_conditional_edges("detect_intent",

route_func,

{

"chat_": "chat_",

"qa_": "qa_",

"code_": "code_"}

)

graph = builder.compile()

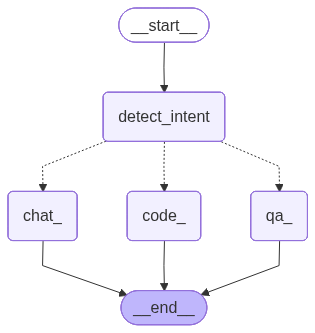

# 画结构图

pic = graph.get_graph().draw_mermaid_png()

with open("graph.png", "wb") as f:

f.write(pic)

# 如果只用langsmith的话,下面的代码都可以注释掉

async def main():

query_ = "明天济南到郑州的火车"

query_ = "郑州市的天气"

#加载mcp为异步方式,graph也只能使用这种

r = await graph.ainvoke({"query": query_}) 方式了

for key in r:

print("----------- output key&value: ", key, r[key])

if __name__ == "__main__":

asyncio.run(main())graph结构如下
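To make the routing and the async requirement concrete, here is a dependency-free sketch of the same control flow. It is not LangGraph. `normalize_intent`, `run_graph`, and the keyword-based stand-in for the LLM are hypothetical. The sketch mimics two behaviors of the graph above. First, each node returns a partial update that is merged into the state. Second, the router's return value selects the next node. Because `qa_` is a coroutine, the runner itself has to be async, which matches the note that the graph must be called with `ainvoke`. The regex-based `normalize_intent` is an optional, more lenient alternative to the exact-match check in `detect_intent`.

```python
import asyncio
import inspect
import re


def normalize_intent(raw: str) -> str:
    """Hypothetical helper: return the first valid label in the reply, else "qa"."""
    # Lookarounds instead of \b so a label glued to Chinese text (e.g. "意图是qa") still matches
    match = re.search(r"(?<![a-z])(chat|qa|code)(?![a-z])", raw.lower())
    return match.group(1) if match else "qa"


def detect_intent(state: dict) -> dict:
    # Stand-in for llm.invoke(prompt).content: a fixed keyword rule that
    # produces messy raw replies (assumption, for illustration only)
    q = state["query"].lower()
    raw = ' "code" ' if "function" in q else ("Answer: qa" if "weather" in q else "chat")
    return {"intent": normalize_intent(raw)}


def route_func(state: dict) -> str:
    # Conditional edge: intent -> next node name
    return {"chat": "chat_", "qa": "qa_", "code": "code_"}[state["intent"]]


def chat_(state: dict) -> dict:
    return {"answer": f"chat reply to: {state['query']}"}


async def qa_(state: dict) -> dict:
    await asyncio.sleep(0)  # stands in for awaiting get_tools() / agent_.ainvoke(...)
    return {"answer": f"tool-backed answer to: {state['query']}"}


def code_(state: dict) -> dict:
    return {"answer": "print('hello')"}


NODES = {"chat_": chat_, "qa_": qa_, "code_": code_}


async def run_graph(query: str) -> dict:
    """Hypothetical mini runner: START -> detect_intent -> routed node -> END."""
    state = {"query": query, "answer": None, "intent": None}
    state.update(detect_intent(state))  # merge the partial update
    update = NODES[route_func(state)](state)
    if inspect.isawaitable(update):  # an async node has to be awaited
        update = await update
    state.update(update)
    return state


if __name__ == "__main__":
    result = asyncio.run(run_graph("What is the weather in Zhengzhou?"))
    print(result["intent"], "->", result["answer"])
```

With only synchronous nodes, `run_graph` could be a plain function. A single async node is enough to force the whole call chain to be awaited.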

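2. Configuring langgraph.json

File name: langgraph.json

In main_demo_mcp.py above, the compiled graph variable is named `graph`, and the entry under `graphs` must point to that name. For this local demo, `dependencies` can be filled with almost any value (the official templates typically use `["."]`).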
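Each value under `graphs` has the form `<module or file>:<variable>`. The config below uses the module form `main_demo_mcp:graph`, and the part after the colon must name the compiled graph object. A minimal sketch of that consistency check follows. `check_graph_specs` and its helpers are hypothetical and not part of the LangGraph CLI. The check parses the module with `ast` instead of importing it, so it does not run the module-level code such as drawing `graph.png`.

```python
import ast
import json
from pathlib import Path


def split_graph_spec(spec: str) -> tuple[str, str]:
    """Split "main_demo_mcp:graph" into ("main_demo_mcp", "graph")."""
    target, sep, var = spec.rpartition(":")
    if not sep or not target or not var:
        raise ValueError(f"expected '<module>:<variable>', got {spec!r}")
    return target, var


def module_file(target: str, base: Path) -> Path:
    # "main_demo_mcp" -> base/main_demo_mcp.py; "./main_demo_mcp.py" is used as a path
    if target.endswith(".py"):
        return base / target
    return base / (target.replace(".", "/") + ".py")


def assigned_names(source: str) -> set[str]:
    """Names bound by simple top-level assignments, found without executing the code."""
    names = set()
    for node in ast.parse(source).body:
        if isinstance(node, ast.Assign):
            names.update(t.id for t in node.targets if isinstance(t, ast.Name))
        elif isinstance(node, ast.AnnAssign) and isinstance(node.target, ast.Name):
            names.add(node.target.id)
    return names


def check_graph_specs(config_text: str, base: Path) -> dict[str, bool]:
    """Map each graph ID to whether its module file exists and assigns the named variable."""
    results = {}
    for graph_id, spec in json.loads(config_text)["graphs"].items():
        target, var = split_graph_spec(spec)
        path = module_file(target, base)
        results[graph_id] = path.is_file() and var in assigned_names(
            path.read_text(encoding="utf-8"))
    return results


if __name__ == "__main__":
    cfg = Path("langgraph.json")
    if cfg.is_file():
        print(check_graph_specs(cfg.read_text(encoding="utf-8"), Path(".")))
```

Run from the project directory, this would print `{'eval_graph': True}` when the names line up, and `False` if, for example, the variable were renamed to `app` in the code but not in the config.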

```json
{
  "dependencies": [
    "langgraph"
  ],
  "graphs": {
    "eval_graph": "main_demo_mcp:graph"
  }
}
```

3. Debugging with LangSmith

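Run `langgraph dev` in a terminal (this requires the `langgraph-cli[inmem]` package). A page opens in the browser automatically; log in to continue.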

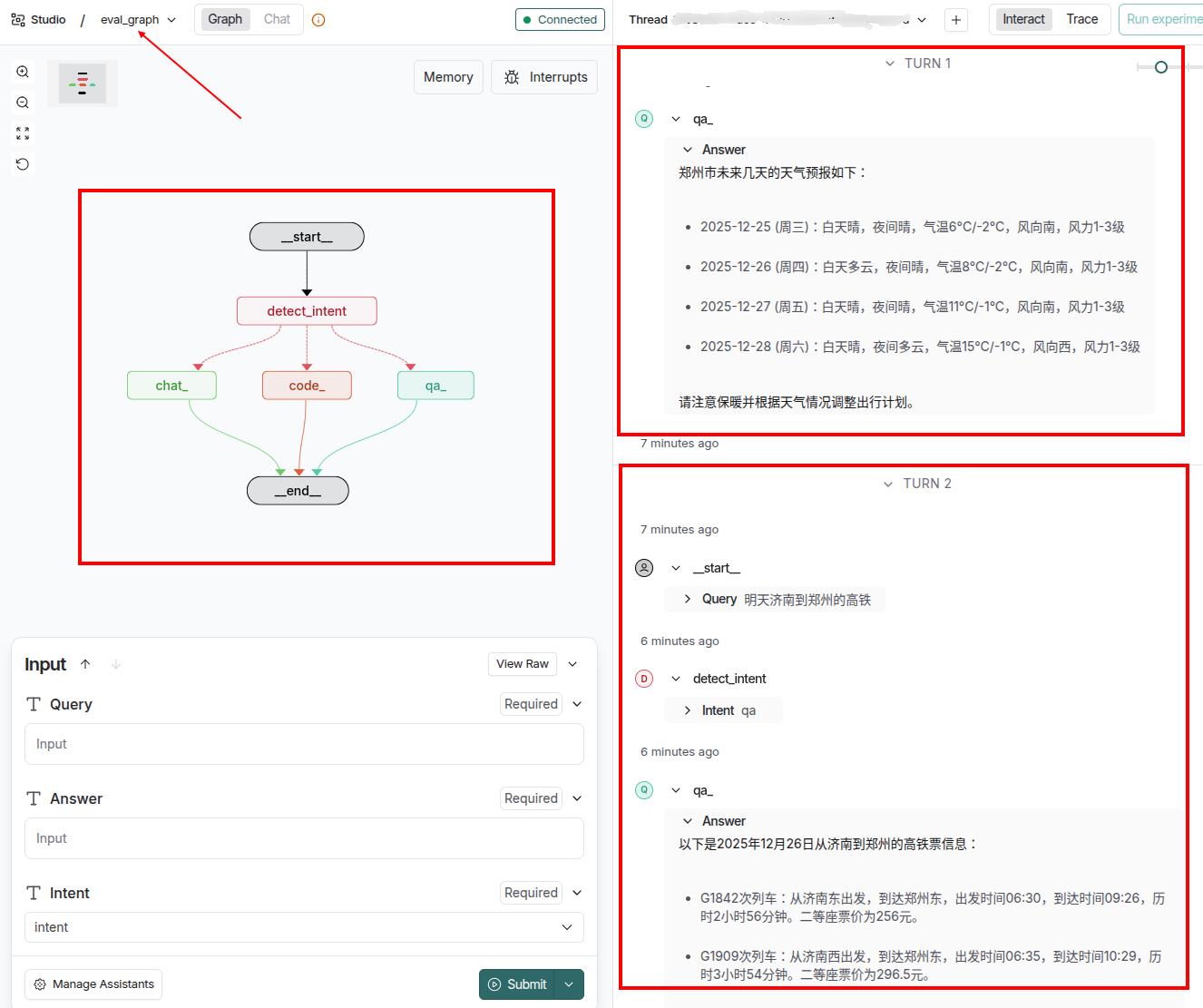

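As shown in the figure, `eval_graph` is the key defined in langgraph.json. If several graphs are defined, you can switch between them here.

The box on the left shows the graph structure.

The top right shows the first conversation turn, which returns weather information. Without the Gaode (高德, Amap) MCP tools loaded, the weather could not be retrieved.

The bottom right shows the second turn, which returns train information. Without the 12306 MCP tools loaded, the train information could not be retrieved.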