
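The API called on the app side is:

Kotlin

private val audioTrack: AudioTrack = AudioTrack.Builder().build()

base/media/java/android/media/AudioTrack.java

First, look at how streamType is carried over from the legacy API to the new one. The legacy constructor calls setLegacyStreamType.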

java

public AudioTrack(int streamType, int sampleRateInHz, int channelConfig, int audioFormat,

int bufferSizeInBytes, int mode, int sessionId)

throws IllegalArgumentException {

// mState already == STATE_UNINITIALIZED

this((new AudioAttributes.Builder())

.setLegacyStreamType(streamType)

.build(),

(new AudioFormat.Builder())

.setChannelMask(channelConfig)

.setEncoding(audioFormat)

.setSampleRate(sampleRateInHz)

.build(),

bufferSizeInBytes,

mode, sessionId);

deprecateStreamTypeForPlayback(streamType, "AudioTrack", "AudioTrack()");

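}

base/media/java/android/media/AudioAttributes.java setLegacyStreamType

Here the legacy streamType is converted into the new attribute fields, both of which start out as unknown:

mContentType = CONTENT_TYPE_UNKNOWN;

mUsage = USAGE_UNKNOWN;

Next, getAudioAttributesForStrategyWithLegacyStreamType is called.

This method takes the legacy stream type and looks up mUsage according to the product strategies.

The strategies can be inspected with dumpsys media.audio_policy.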

java

public Builder setLegacyStreamType(int streamType) {

//...

setInternalLegacyStreamType(streamType);

return this;

}

@UnsupportedAppUsage

public Builder setInternalLegacyStreamType(int streamType) {

mContentType = CONTENT_TYPE_UNKNOWN;

mUsage = USAGE_UNKNOWN;

if (AudioProductStrategy.getAudioProductStrategies().size() > 0) {

AudioAttributes attributes =

AudioProductStrategy.getAudioAttributesForStrategyWithLegacyStreamType(

streamType);

if (attributes != null) {

mUsage = attributes.mUsage;

//...

}

}

switch (streamType) {

case AudioSystem.STREAM_VOICE_CALL:

mContentType = CONTENT_TYPE_SPEECH;

break;

//...

default:

Log.e(TAG, "Invalid stream type " + streamType + " for AudioAttributes");

}

if (mUsage == USAGE_UNKNOWN) {

mUsage = usageForStreamType(streamType);

}

return this;

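}

dumpsys media.audio_policy

Look at the Attributes entries.

The stream field of each group (for example, stream: AUDIO_STREAM_VOICE_CALL) corresponds to streamType.

The value returned is the Usage of the matching entry.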
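The lookup that this dump illustrates can be sketched in a few lines. This is a minimal, self-contained sketch and not the framework code: StrategyEntry, kDumpRows, and usageForStream are hypothetical names, the rows are a subset of the (stream, usage) pairs from the dump in this section, and the sketch assumes that the first matching entry wins. The fallbackUsage argument stands in for usageForStreamType, which setInternalLegacyStreamType uses when the strategy lookup leaves mUsage at USAGE_UNKNOWN.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical row type: one "stream" / "Usage" pair taken from the dump.
struct StrategyEntry {
    std::string stream;
    std::string usage;
};

// A subset of the rows printed by dumpsys media.audio_policy, in dump order.
static const std::vector<StrategyEntry> kDumpRows = {
    {"AUDIO_STREAM_VOICE_CALL", "AUDIO_USAGE_VOICE_COMMUNICATION"},
    {"AUDIO_STREAM_BLUETOOTH_SCO", "AUDIO_USAGE_UNKNOWN"},
    {"AUDIO_STREAM_ASSISTANT", "AUDIO_USAGE_ASSISTANT"},
    {"AUDIO_STREAM_MUSIC", "AUDIO_USAGE_MEDIA"},
    {"AUDIO_STREAM_MUSIC", "AUDIO_USAGE_GAME"},
    {"AUDIO_STREAM_SYSTEM", "AUDIO_USAGE_ASSISTANCE_SONIFICATION"},
};

// Returns the usage of the first row whose stream matches (assumption),
// or fallbackUsage when the table yields nothing better than UNKNOWN.
std::string usageForStream(const std::vector<StrategyEntry>& rows,
                           const std::string& stream,
                           const std::string& fallbackUsage) {
    assert(!stream.empty());
    std::string usage = "AUDIO_USAGE_UNKNOWN";
    for (const StrategyEntry& row : rows) {
        if (row.stream == stream) {
            usage = row.usage;
            break;
        }
    }
    if (usage == "AUDIO_USAGE_UNKNOWN") {
        usage = fallbackUsage;  // mirrors the usageForStreamType fallback
    }
    return usage;
}
```

With these rows, AUDIO_STREAM_MUSIC resolves to AUDIO_USAGE_MEDIA because that row comes first, while AUDIO_STREAM_BLUETOOTH_SCO falls through to whatever fallback the caller supplies.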

text

Policy Engine dump:

Product Strategies dump:

-STRATEGY_PHONE (id: 0)

Selected Device: {AUDIO_DEVICE_OUT_EARPIECE, @:}

Group: 13 stream: AUDIO_STREAM_VOICE_CALL

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_VOICE_COMMUNICATION Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 3 stream: AUDIO_STREAM_BLUETOOTH_SCO

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_UNKNOWN Source: AUDIO_SOURCE_INVALID Flags: 0x4 Tags: }

//...

-STRATEGY_MEDIA (id: 5)

Selected Device: {AUDIO_DEVICE_OUT_SPEAKER, @:}

Group: 14 stream: AUDIO_STREAM_ASSISTANT

Attributes: { Content type: AUDIO_CONTENT_TYPE_SPEECH Usage: AUDIO_USAGE_ASSISTANT Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 6 stream: AUDIO_STREAM_MUSIC

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_MEDIA Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 6 stream: AUDIO_STREAM_MUSIC

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_GAME Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 6 stream: AUDIO_STREAM_MUSIC

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_ASSISTANT Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 6 stream: AUDIO_STREAM_MUSIC

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_ASSISTANCE_NAVIGATION_GUIDANCE Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

Group: 6 stream: AUDIO_STREAM_MUSIC

Attributes: { Any }

Group: 11 stream: AUDIO_STREAM_SYSTEM

Attributes: { Content type: AUDIO_CONTENT_TYPE_UNKNOWN Usage: AUDIO_USAGE_ASSISTANCE_SONIFICATION Source: AUDIO_SOURCE_INVALID Flags: 0x0 Tags: }

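//...

base/media/java/android/media/AudioTrack.java

In this constructor, mStreamType ends up being assigned STREAM_DEFAULT.

An AttributionSource is created to record the uid, pid, packageName, and related information.

Finally, native_setup is called to enter the native layer.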

java

private AudioTrack(@Nullable Context context, AudioAttributes attributes, AudioFormat format,

int bufferSizeInBytes, int mode, int sessionId, boolean offload, int encapsulationMode,

@Nullable TunerConfiguration tunerConfiguration)

throws IllegalArgumentException {

super(attributes, AudioPlaybackConfiguration.PLAYER_TYPE_JAM_AUDIOTRACK);

//...

mStreamType = AudioSystem.STREAM_DEFAULT;

//...

AttributionSource attributionSource = context == null

? AttributionSource.myAttributionSource() : context.getAttributionSource();

// native initialization

try (ScopedParcelState attributionSourceState = attributionSource.asScopedParcelState()) {

int initResult = native_setup(new WeakReference<AudioTrack>(this), mAttributes,

sampleRate, mChannelMask, mChannelIndexMask, mAudioFormat,

mNativeBufferSizeInBytes, mDataLoadMode, session,

attributionSourceState.getParcel(), 0 /*nativeTrackInJavaObj*/, offload,

encapsulationMode, tunerConfiguration, getCurrentOpPackageName());

if (initResult != SUCCESS) {

loge("Error code " + initResult + " when initializing AudioTrack.");

return; // with mState == STATE_UNINITIALIZED

}

}

//...

native_setPlayerIId(mPlayerIId); // mPlayerIId now ready to send to native AudioTrack.

}

base/core/java/android/content/AttributionSource.java

java

public AttributionSource(int uid, int pid, @Nullable String packageName,

@Nullable String attributionTag, @NonNull IBinder token) {

this(uid, pid, packageName, attributionTag, token, /*renouncedPermissions*/ null,

Context.DEVICE_ID_DEFAULT, /*next*/ null);

}

native

base/core/jni/android_media_AudioTrack.cpp native_setup (android_media_AudioTrack_setup)

The jaa parameter is the mAttributes object passed from the Java layer.

A native-layer AudioTrack named lpTrack is constructed:

lpTrack = sp<AudioTrack>::make(attributionSource);

paa, the native counterpart of mAttributes, is built from jaa:

auto paa = JNIAudioAttributeHelper::makeUnique();

jint jStatus = JNIAudioAttributeHelper::nativeFromJava(env, jaa, paa.get());

The parameters passed from the Java layer are then applied through lpTrack->set.

cpp

// ----------------------------------------------------------------------------

static jint android_media_AudioTrack_setup(JNIEnv *env, jobject thiz, jobject weak_this,

jobject jaa, jintArray jSampleRate,

jint channelPositionMask, jint channelIndexMask,

jint audioFormat, jint buffSizeInBytes, jint memoryMode,

jintArray jSession, jobject jAttributionSource,

jlong nativeAudioTrack, jboolean offload,

jint encapsulationMode, jobject tunerConfiguration,

jstring opPackageName) {

//...

sp<AudioTrack> lpTrack;

const auto lpJniStorage = sp<AudioTrackJniStorage>::make(clazz, weak_this, offload);

if (nativeAudioTrack == 0) {

//...

android::content::AttributionSourceState attributionSource;

attributionSource.readFromParcel(parcelForJavaObject(env, jAttributionSource));

lpTrack = sp<AudioTrack>::make(attributionSource);

// read the AudioAttributes values

auto paa = JNIAudioAttributeHelper::makeUnique();

jint jStatus = JNIAudioAttributeHelper::nativeFromJava(env, jaa, paa.get());

if (jStatus != (jint)AUDIO_JAVA_SUCCESS) {

return jStatus;

}

ALOGV("AudioTrack_setup for usage=%d content=%d flags=0x%#x tags=%s",

paa->usage, paa->content_type, paa->flags, paa->tags);

//...

status_t status = NO_ERROR;

switch (memoryMode) {

case MODE_STREAM:

status = lpTrack->set(AUDIO_STREAM_DEFAULT, // stream type, but more info conveyed

// in paa (last argument)

sampleRateInHertz,

format, // word length, PCM

nativeChannelMask, offload ? 0 : frameCount,

offload ? AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD

: AUDIO_OUTPUT_FLAG_NONE,

lpJniStorage,

0, // notificationFrames == 0 since not using EVENT_MORE_DATA

// to feed the AudioTrack

0, // shared mem

true, // thread can call Java

sessionId, // audio session ID

offload ? AudioTrack::TRANSFER_SYNC_NOTIF_CALLBACK

: AudioTrack::TRANSFER_SYNC,

(offload || encapsulationMode) ? &offloadInfo : NULL,

attributionSource, // Passed from Java

paa.get());

break;

//...

}

//...

return (jint) AUDIOTRACK_ERROR_SETUP_NATIVEINITFAILED;

}

libaudioclient

av/media/libaudioclient/AudioTrack.cpp lpTrack->set

This function mainly calls createTrack_l.

cpp

status_t AudioTrack::set(

audio_stream_type_t streamType,

uint32_t sampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t frameCount,

audio_output_flags_t flags,

const wp<IAudioTrackCallback>& callback,

int32_t notificationFrames,

const sp<IMemory>& sharedBuffer,

bool threadCanCallJava,

audio_session_t sessionId,

transfer_type transferType,

const audio_offload_info_t *offloadInfo,

const AttributionSourceState& attributionSource,

const audio_attributes_t* pAttributes,

bool doNotReconnect,

float maxRequiredSpeed,

audio_port_handle_t selectedDeviceId)

{

//...

// create the IAudioTrack

{

AutoMutex lock(mLock);

status = createTrack_l();

}

//...

return logIfErrorAndReturnStatus(status, "");

}

status_t AudioTrack::createTrack_l()

{

//...

const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();

//... the rest is analyzed later; get_audio_flinger is examined first

}

av/media/libaudioclient/AudioSystem.cpp get_audio_flinger

cpp

sp<IAudioFlinger> AudioSystem::get_audio_flinger() {

return AudioFlingerServiceTraits::getService();

}

static sp<IAudioFlinger> getService(

std::chrono::milliseconds waitMs = std::chrono::milliseconds{-1}) {

//...

// mediautils::getService() installs a persistent new service notification.

auto service = mediautils::getService<

media::IAudioFlingerService>(waitMs);

ALOGD("%s: checking for service %s: %p", __func__, getServiceName(), service.get());

ul.lock();

// return the IAudioFlinger interface which is adapted

// from the media::IAudioFlingerService.

return mService;

}

av/media/utils/include/mediautils/ServiceSingleton.h getService

getService calls serviceHandler->template get<Service>, which in turn calls checkServicePassThrough.
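The get<Service> listing in this section returns the cached service while it is still valid and otherwise performs a fresh lookup through checkServicePassThrough. A minimal sketch of that cache-then-lookup pattern is shown here. FakeService, CachedServiceHandle, and the Lookup callback are hypothetical names, and the sketch deliberately leaves out the locking, the skip modes, and the service notification callback of the real handler.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for a binder service interface.
struct FakeService {
    std::string name;
};

class CachedServiceHandle {
public:
    // The lookup plays the role of a non-blocking check: null when absent.
    using Lookup = std::function<std::shared_ptr<FakeService>(const std::string&)>;

    CachedServiceHandle(std::string name, Lookup lookup)
        : mName(std::move(name)), mLookup(std::move(lookup)) {
        assert(mLookup);  // a lookup function is required
    }

    std::shared_ptr<FakeService> get() {
        // Fast path: reuse the cached service while it is valid.
        if (mService && mValid) return mService;
        // Slow path: query the registry and cache a successful result.
        std::shared_ptr<FakeService> fresh = mLookup(mName);
        if (fresh) {
            mValid = true;
            mService = std::move(fresh);
        }
        return mService;
    }

    // Drops the cached service, for example after the service has died.
    void invalidate() {
        mValid = false;
        mService.reset();
    }

private:
    std::string mName;
    Lookup mLookup;
    std::shared_ptr<FakeService> mService;
    bool mValid = false;
};
```

The design choice worth noting is that a failed lookup is not cached, so a later call can still succeed once the service has been registered.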

cpp

template<typename Service>

auto getService(std::chrono::nanoseconds waitNs = {}) {

const auto serviceHandler = details::ServiceHandler::getInstance(Service::descriptor);

return interfaceFromBase<Service>(serviceHandler->template get<Service>(

waitNs, true /* useCallback */));

}

template <typename Service>

auto get(std::chrono::nanoseconds waitNs, bool useCallback) {

//...

for (bool first = true; true; first = false) {

// we may have released mMutex, so see if service has been obtained.

if (mSkipMode == SkipMode::kImmediate || (service && mValid)) return service;

int options = 0;

if (mSkipMode == SkipMode::kNone) {

const auto traits = getTraits_l<Service>();

// first time or not using callback, check the service.

if (first || !useCallback) {

auto service_new = checkServicePassThrough<Service>(

traits->getServiceName());

if (service_new) {

mValid = true;

service = std::move(service_new);

const auto service_fixed = service;

//...

return service_fixed;

}

}

// install service callback if needed.

if (useCallback && !mServiceNotificationHandle) {

setServiceNotifier_l<Service>();

}

options = static_cast<int>(traits->options());

}

//...

}

}

av/media/utils/include/mediautils/BinderGenericUtils.h checkServicePassThrough

For a non-NDK service, this clearly calls defaultServiceManager()->checkService(serviceName); the NDK branch calls AServiceManager_checkService instead.

cpp

template<typename Service>

auto checkServicePassThrough(const char *const name = "") {

if constexpr(is_ndk<Service>)

{

const auto serviceName = fullyQualifiedServiceName<Service>(name);

return Service::fromBinder(

::ndk::SpAIBinder(AServiceManager_checkService(serviceName.c_str())));

} else /* constexpr */ {

const auto serviceName = fullyQualifiedServiceName<Service>(name);

auto binder = defaultServiceManager()->checkService(serviceName);

return interface_cast<Service>(binder);

}

}

With the audioFlinger service obtained, the analysis continues in createTrack_l.

av/media/libaudioclient/include/media/AudioTrack.h

media::IAudioTrack

av/media/libaudioclient/aidl/android/media/IAudioTrack.aidl

cpp

sp<media::IAudioTrack> mAudioTrack;

av/media/libaudioclient/AudioTrack.cpp

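A CreateTrackInput is created and filled with the values passed from the Java layer.

audioFlinger's createTrack is then called with two AIDL parcelables: a media::CreateTrackRequest carrying the input and a media::CreateTrackResponse that is filled in with the result.

mAudioTrack is assigned from output.audioTrack, which is taken from that response.

Passed in:

media::CreateTrackRequest

av/media/libaudioclient/aidl/android/media/CreateTrackRequest.aidl

media::CreateTrackResponse

av/media/libaudioclient/aidl/android/media/CreateTrackResponse.aidl

Obtained from the response:

media::IAudioTrack

av/media/libaudioclient/aidl/android/media/IAudioTrack.aidl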

cpp

status_t AudioTrack::createTrack_l()

{

//...

const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();

//...

IAudioFlinger::CreateTrackInput input;

//...

input.config.sample_rate = mSampleRate;

input.config.channel_mask = mChannelMask;

//...

media::CreateTrackResponse response;

auto aidlInput = input.toAidl();

//...

status = audioFlinger->createTrack(aidlInput.value(), response);

//...

IAudioFlinger::CreateTrackOutput output{};

if (status == NO_ERROR) {

auto trackOutput = IAudioFlinger::CreateTrackOutput::fromAidl(response);

//...

output = trackOutput.value();

}

//...

mSessionId = output.sessionId;

mStreamType = output.streamType;

mSampleRate = output.sampleRate;

//...

mAudioTrack = output.audioTrack;

}

av/media/libaudioclient/IAudioFlinger.cpp input.toAidl();

This mainly wraps the CreateTrackInput into a media::CreateTrackRequest.

av/media/libaudioclient/aidl/android/media/CreateTrackRequest.aidl
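The toAidl listing in this section converts field by field and returns early if any single conversion fails. The sketch here shows that idea in isolation, assuming that an integral conversion is rejected when the value does not fit the target type. checkedConvert, TrackInput, TrackRequest, and toRequest are hypothetical names; checkedConvert only stands in for convertIntegral and does not reproduce its real signature or its ConversionResult return type.

```cpp
#include <cassert>
#include <cstdint>

// Value-preserving integral conversion: fails instead of silently truncating.
template <typename To, typename From>
bool checkedConvert(From from, To* to) {
    assert(to != nullptr);
    const To converted = static_cast<To>(from);
    // The value must survive a round trip and keep its sign.
    const bool roundTrips = static_cast<From>(converted) == from;
    const bool sameSign = (converted < To(0)) == (from < From(0));
    if (!roundTrips || !sameSign) return false;
    *to = converted;
    return true;
}

// Hypothetical "legacy" struct with unsigned fields.
struct TrackInput {
    std::uint64_t frameCount;
    std::uint32_t notificationsPerBuffer;
    float speed;
};

// Hypothetical wire struct with signed fields, as AIDL has no unsigned types.
struct TrackRequest {
    std::int64_t frameCount;
    std::int32_t notificationsPerBuffer;
    float speed;
};

// Converts every field; the output is written only if all of them succeed.
bool toRequest(const TrackInput& in, TrackRequest* out) {
    assert(out != nullptr);
    TrackRequest req{};
    if (!checkedConvert(in.frameCount, &req.frameCount)) return false;
    if (!checkedConvert(in.notificationsPerBuffer, &req.notificationsPerBuffer)) {
        return false;
    }
    req.speed = in.speed;  // same type on both sides, copied as-is
    *out = req;
    return true;
}
```

Building the result in a local variable and copying it out at the end keeps the caller's struct untouched when a conversion fails halfway through.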

cpp

ConversionResult<media::CreateTrackRequest> IAudioFlinger::CreateTrackInput::toAidl() const {

media::CreateTrackRequest aidl;

aidl.attr = VALUE_OR_RETURN(legacy2aidl_audio_attributes_t_AudioAttributes(attr));

// Do not be mislead by 'Input'--this is an input to 'createTrack', which creates output tracks.

aidl.config = VALUE_OR_RETURN(legacy2aidl_audio_config_t_AudioConfig(

config, false /*isInput*/));

aidl.clientInfo = VALUE_OR_RETURN(legacy2aidl_AudioClient_AudioClient(clientInfo));

aidl.sharedBuffer = VALUE_OR_RETURN(legacy2aidl_NullableIMemory_SharedFileRegion(sharedBuffer));

aidl.notificationsPerBuffer = VALUE_OR_RETURN(convertIntegral<int32_t>(notificationsPerBuffer));

aidl.speed = speed;

aidl.audioTrackCallback = audioTrackCallback;

aidl.flags = VALUE_OR_RETURN(legacy2aidl_audio_output_flags_t_int32_t_mask(flags));

aidl.frameCount = VALUE_OR_RETURN(convertIntegral<int64_t>(frameCount));

aidl.notificationFrameCount = VALUE_OR_RETURN(convertIntegral<int64_t>(notificationFrameCount));

aidl.selectedDeviceId = VALUE_OR_RETURN(

legacy2aidl_audio_port_handle_t_int32_t(selectedDeviceId));

aidl.sessionId = VALUE_OR_RETURN(legacy2aidl_audio_session_t_int32_t(sessionId));

return aidl;

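}

audio server

The call arrives at createTrack in the AudioFlinger service.

av/services/audioflinger/AudioFlinger.cpp createTrack

getOutputForAttr obtains the ID of the output, output.outputId.

output.outputId is used to find the matching PlaybackThread.

The PlaybackThread creates the track through createTrack_l.

Finally, the track created by createTrack_l is wrapped and assigned to output.audioTrack. This is what AudioTrack.cpp in libaudioclient ultimately receives as mAudioTrack, as described earlier.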
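The routing described in these steps (an output ID selects a playback thread, and that thread creates and keeps the track) can be sketched without any audio code. Flinger, PlaybackThread, Track, and openOutput here are simplified hypothetical stand-ins; the real implementation also involves the policy query, locking, and effect handling, none of which is modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <vector>

// Hypothetical stand-in for a server-side track.
struct Track {
    int sessionId;
};

class PlaybackThread {
public:
    // Creates a track and records it in this thread's track list.
    std::shared_ptr<Track> createTrack(int sessionId) {
        auto track = std::make_shared<Track>(Track{sessionId});
        mTracks.push_back(track);
        return track;
    }
    std::size_t trackCount() const { return mTracks.size(); }

private:
    std::vector<std::shared_ptr<Track>> mTracks;
};

class Flinger {
public:
    // Registers a playback thread under an I/O handle (hypothetical helper).
    void openOutput(int ioHandle) {
        assert(mPlaybackThreads.count(ioHandle) == 0);
        mPlaybackThreads[ioHandle] = std::make_shared<PlaybackThread>();
    }

    // Returns the thread for this handle, or nullptr when there is none.
    PlaybackThread* checkPlaybackThread(int ioHandle) const {
        auto it = mPlaybackThreads.find(ioHandle);
        return it == mPlaybackThreads.end() ? nullptr : it->second.get();
    }

    // Routes the request to the thread selected by outputId.
    std::shared_ptr<Track> createTrack(int outputId, int sessionId) {
        PlaybackThread* thread = checkPlaybackThread(outputId);
        if (thread == nullptr) return nullptr;
        return thread->createTrack(sessionId);
    }

private:
    std::map<int, std::shared_ptr<PlaybackThread>> mPlaybackThreads;
};
```

In this sketch an unknown output ID simply yields a null track, which is the point at which a real caller would report an error.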

cpp

status_t AudioFlinger::createTrack(const media::CreateTrackRequest& _input,

media::CreateTrackResponse& _output)

{

//... converted back to CreateTrackInput

CreateTrackInput input = VALUE_OR_RETURN_STATUS(CreateTrackInput::fromAidl(_input));

//...

sp<IAfTrack> track;

//...

output.sessionId = sessionId;

output.outputId = AUDIO_IO_HANDLE_NONE;

//...

lStatus = AudioSystem::getOutputForAttr(&localAttr, &output.outputId, sessionId, &streamType,

adjAttributionSource, &input.config, input.flags,

&selectedDeviceIds, &portId, &secondaryOutputs,

&isSpatialized, &isBitPerfect, &volume, &muted);

//...

{

audio_utils::lock_guard _l(mutex());

IAfPlaybackThread* thread = checkPlaybackThread_l(output.outputId);

//...

track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,

input.config.format, input.config.channel_mask,

&output.frameCount, &output.notificationFrameCount,

input.notificationsPerBuffer, input.speed,

input.sharedBuffer, sessionId, &output.flags,

callingPid, adjAttributionSource, input.clientInfo.clientTid,

&lStatus, portId, input.audioTrackCallback, isSpatialized,

isBitPerfect, &output.afTrackFlags, volume, muted);

//... fill in the output fields

output.afFrameCount = thread->frameCount();

output.afSampleRate = thread->sampleRate();

output.afChannelMask = static_cast<audio_channel_mask_t>(thread->channelMask() |

thread->hapticChannelMask());

output.afFormat = thread->format();

output.afLatencyMs = thread->latency();

output.portId = portId;

//... audio effect handling

if (lStatus == NO_ERROR) {

audio_utils::lock_guard _dl(thread->mutex());

updateSecondaryOutputsForTrack_l(track.get(), thread, secondaryOutputs);

if (effectThread != nullptr) {

// No thread safety analysis: double lock on a thread capability.

audio_utils::lock_guard_no_thread_safety_analysis _sl(effectThread->mutex());

if (moveEffectChain_ll(sessionId, effectThread, thread) == NO_ERROR) {

effectThreadId = thread->id();

effectIds = thread->getEffectIds_l(sessionId);

}

}

if (effectChain != nullptr) {

if (moveEffectChain_ll(sessionId, nullptr, thread, effectChain.get())

== NO_ERROR) {

effectThreadId = thread->id();

effectIds = thread->getEffectIds_l(sessionId);

}

}

}

}

//...

output.audioTrack = IAfTrack::createIAudioTrackAdapter(track);

}

// checkPlaybackThread_l() must be called with AudioFlinger::mutex() held

IAfPlaybackThread* AudioFlinger::checkPlaybackThread_l(audio_io_handle_t output) const

{

return mPlaybackThreads.valueFor(output).get();

}

output.outputId can be checked with dumpsys media.audio_flinger.

The I/O handle: 53 entry is the outputId.

text

Output thread 0x79895a7760, name AudioOut_35, tid 2177, type 0 (MIXER):

I/O handle: 53

Standby: yes

Sample rate: 48000 Hz

HAL frame count: 768

//...

av/services/audioflinger/Tracks.cpp createIAudioTrackAdapter

TrackHandle is the Bn (server) side; what the client receives is the Bp (proxy) side.

class TrackHandle : public android::media::BnAudioTrack

**sp<TrackHandle>::make(track);** is what is ultimately returned.
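The adapter idea can be shown without Binder: a handle implements the interface that clients see and forwards every call to the internal track it wraps. This is only a sketch under that assumption. ITrackControl, InternalTrack, TrackHandleSketch, and createAdapter are hypothetical names, and no IPC transport is modeled.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical client-facing interface (the role IAudioTrack plays).
class ITrackControl {
public:
    virtual ~ITrackControl() = default;
    virtual bool start() = 0;
    virtual void stop() = 0;
};

// Hypothetical server-internal track with the real state.
class InternalTrack {
public:
    bool start() {
        mActive = true;
        return true;
    }
    void stop() { mActive = false; }
    bool isActive() const { return mActive; }

private:
    bool mActive = false;
};

// The adapter: owns a reference to the track and forwards each call to it.
class TrackHandleSketch : public ITrackControl {
public:
    explicit TrackHandleSketch(std::shared_ptr<InternalTrack> track)
        : mTrack(std::move(track)) {
        assert(mTrack != nullptr);
    }
    bool start() override { return mTrack->start(); }
    void stop() override { mTrack->stop(); }

private:
    std::shared_ptr<InternalTrack> mTrack;
};

// Returns the adapter typed as the interface, hiding the internal track.
std::shared_ptr<ITrackControl> createAdapter(
        const std::shared_ptr<InternalTrack>& track) {
    return std::make_shared<TrackHandleSketch>(track);
}
```

Returning the interface type rather than the concrete handle keeps callers from depending on anything beyond the calls the interface exposes.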

cpp

sp<media::IAudioTrack> IAfTrack::createIAudioTrackAdapter(const sp<IAfTrack>& track) {

return sp<TrackHandle>::make(track);

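}

av/services/audioflinger/Threads.cpp createTrack_l

This calls IAfTrack::create, which actually creates a Track object that corresponds one-to-one with the AudioTrack on the app side.

The created track is added to the thread's mTracks.

Control then returns to AudioFlinger's createTrack, covered above.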

cpp

// PlaybackThread::createTrack_l() must be called with AudioFlinger::mutex() held

sp<IAfTrack> PlaybackThread::createTrack_l(

const sp<Client>& client,

audio_stream_type_t streamType,

const audio_attributes_t& attr,

uint32_t *pSampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t *pFrameCount,

size_t *pNotificationFrameCount,

uint32_t notificationsPerBuffer,

float speed,

const sp<IMemory>& sharedBuffer,

audio_session_t sessionId,

audio_output_flags_t *flags,

pid_t creatorPid,

const AttributionSourceState& attributionSource,

pid_t tid,

status_t *status,

audio_port_handle_t portId,

const sp<media::IAudioTrackCallback>& callback,

bool isSpatialized,

bool isBitPerfect,

audio_output_flags_t *afTrackFlags,

float volume,

bool muted)

{

//...

sp<IAfTrack> track;

//...

{ // scope for mutex()

audio_utils::lock_guard _l(mutex());

//...

track = IAfTrack::create(this, client, streamType, attr, sampleRate, format,

channelMask, frameCount,

nullptr /* buffer */, (size_t)0 /* bufferSize */, sharedBuffer,

sessionId, creatorPid, attributionSource, trackFlags,

IAfTrackBase::TYPE_DEFAULT, portId, SIZE_MAX /*frameCountToBeReady*/,

speed, isSpatialized, isBitPerfect, volume, muted);

//...

mTracks.add(track);

//... audio effect handling

sp<IAfEffectChain> chain = getEffectChain_l(sessionId);

//...

}

//...

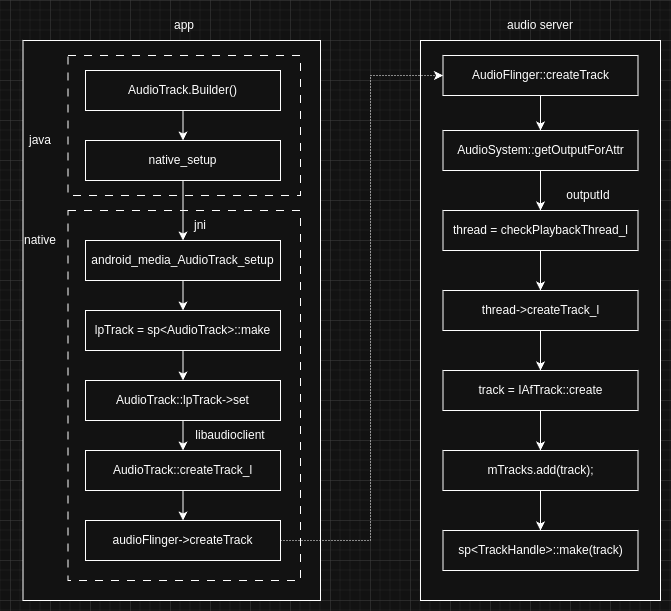

}流程图