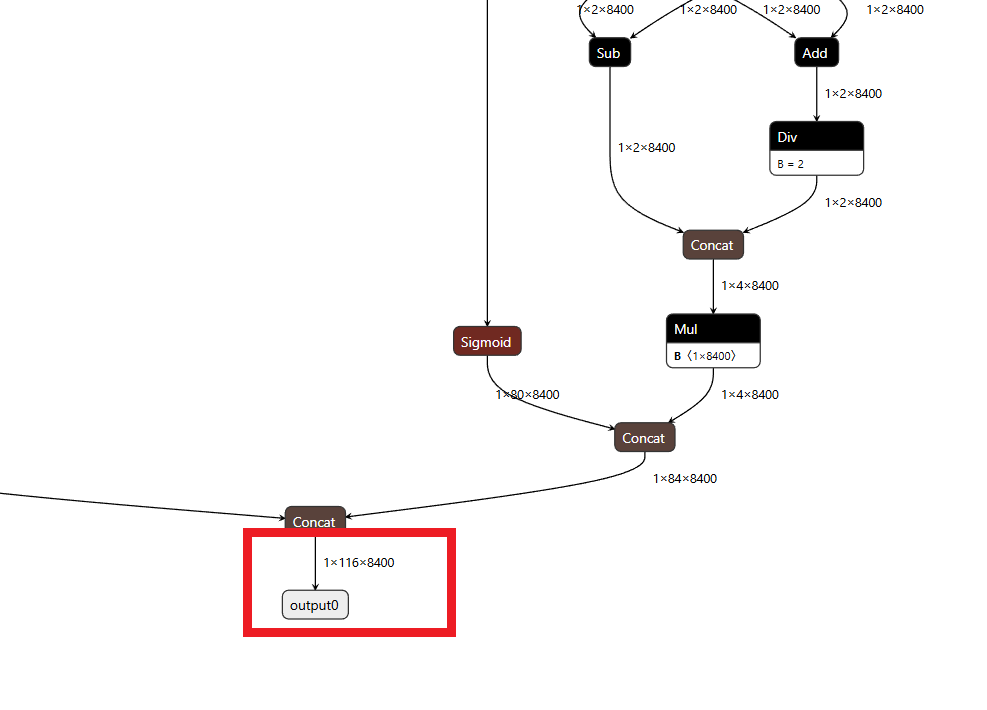

YOLO26 has been officially released, so this post deploys YOLO26-seg instance segmentation in C++. Start with the YOLO11-seg network structure: its output shape is 1x116x8400.
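For a 640x640 input and the standard 80-class COCO model, the 116 channels break down as 4 box values (cx, cy, w, h) + 80 class scores + 32 mask coefficients. The 8400 columns are the candidate points of the three strides 8/16/32 (80x80 + 40x40 + 20x20). The tensor is channel-major, so value `c` of candidate `i` sits at `data[c * 8400 + i]`. The stdlib-only sketch below checks that arithmetic and shows the indexing. It assumes the 80-class head, and `at` and `best_class` are hypothetical helper names.

```cpp
#include <cstddef>
#include <vector>

// Assumed YOLO11-seg head layout for a 640x640 input and 80 classes.
constexpr int kInput = 640;
constexpr int kNumClasses = 80;
constexpr int kMaskCoeffs = 32;
constexpr int kChannels = 4 + kNumClasses + kMaskCoeffs; // cx, cy, w, h + class scores + mask coeffs
constexpr int kAnchors = (kInput / 8) * (kInput / 8) + (kInput / 16) * (kInput / 16) + (kInput / 32) * (kInput / 32);

static_assert(kChannels == 116, "116 = 4 + 80 + 32");
static_assert(kAnchors == 8400, "8400 = 80*80 + 40*40 + 20*20");

// The 1x116x8400 tensor is channel-major: channel c of candidate i is at c * kAnchors + i.
inline float at(const std::vector<float> &out, int channel, int anchor)
{
    return out[static_cast<std::size_t>(channel) * kAnchors + anchor];
}

// Best class and its score for one candidate (class scores start at channel 4).
inline void best_class(const std::vector<float> &out, int anchor, int &cls, float &score)
{
    cls = 0;
    score = at(out, 4, anchor);
    for (int c = 1; c < kNumClasses; ++c)
    {
        const float s = at(out, 4 + c, anchor);
        if (s > score)
        {
            score = s;
            cls = c;
        }
    }
}
```

With this layout, every one of the 8400 candidates still has to go through confidence filtering and NMS before it becomes a detection.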

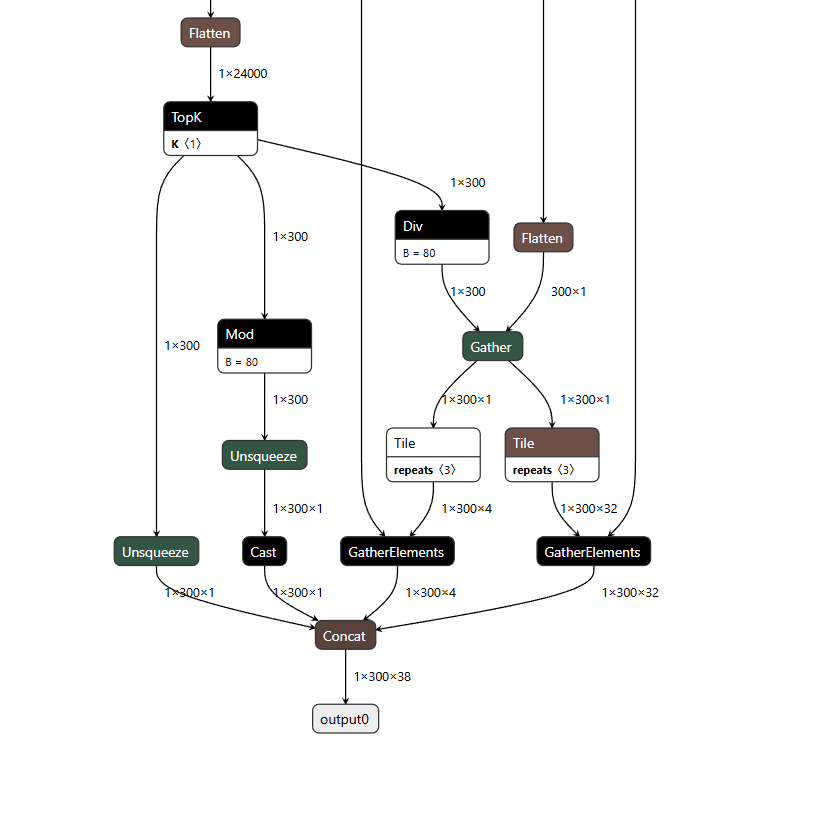

Now look at the YOLO26-seg network output: its shape is 1x300x38.
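YOLO26 is an end-to-end (NMS-free) model, so each of the 300 rows is already a final candidate. This sketch assumes the 38 values per row are 4 box corners (x1, y1, x2, y2, in model-input pixels), 1 confidence, 1 class id and 32 mask coefficients. Verify this against your own export (for example in Netron) before relying on it. Under that assumption, decoding reduces to a confidence filter over a row-major buffer. `Detection` and `decode_yolo26` are hypothetical names and are not part of `YOLO26Seg.hpp`.

```cpp
#include <array>
#include <cstddef>
#include <vector>

// Assumed per-row layout of the 1x300x38 output: x1, y1, x2, y2, score, class_id, 32 mask coeffs.
constexpr int kRows = 300;
constexpr int kRowSize = 38;
constexpr int kNumMaskCoeffs = kRowSize - 6; // 32

struct Detection
{
    float x1, y1, x2, y2; // box corners in model-input pixels
    float score;
    int class_id;
    std::array<float, kNumMaskCoeffs> coeffs;
};

// Keep rows whose score reaches the threshold. No NMS step: the model's
// one-to-one head already produces final detections.
std::vector<Detection> decode_yolo26(const float *out, float conf_threshold)
{
    std::vector<Detection> dets;
    for (int r = 0; r < kRows; ++r)
    {
        const float *row = out + static_cast<std::size_t>(r) * kRowSize; // row-major: row r starts at r * 38
        if (row[4] < conf_threshold)
            continue;
        Detection d{};
        d.x1 = row[0];
        d.y1 = row[1];
        d.x2 = row[2];
        d.y2 = row[3];
        d.score = row[4];
        d.class_id = static_cast<int>(row[5]);
        for (int k = 0; k < kNumMaskCoeffs; ++k)
            d.coeffs[k] = row[6 + k];
        dets.push_back(d);
    }
    return dets;
}
```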

Because YOLO11 and YOLO26 produce differently shaped outputs, they cannot share the same post-processing code.
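The difference lies in how detections are laid out. Assuming YOLO26-seg keeps the usual prototype-based mask branch (the 32 trailing values per row suggest it does), a detection's 32 coefficients are combined with a prototype tensor. For a 640 input this tensor is commonly 1x32x160x160, but read the actual shape from your model's second output. The mask value at each prototype pixel is the sigmoid of the dot product between the coefficients and the 32 prototype values there, thresholded at 0.5. The stdlib-only sketch below uses the hypothetical name `assemble_mask` and leaves out cropping to the box and resizing to the original image.

```cpp
#include <cstddef>
#include <vector>

// Build a binary mask for one detection from its mask coefficients and the prototypes.
// protos: num_protos x height x width, stored contiguously (channel-major), e.g. 32 x 160 x 160.
// Returns height*width bytes: 1 where sigmoid(coeffs . protos) > 0.5, else 0.
std::vector<unsigned char> assemble_mask(const std::vector<float> &coeffs,
                                         const std::vector<float> &protos,
                                         int height, int width)
{
    const std::size_t plane = static_cast<std::size_t>(height) * width;
    std::vector<unsigned char> mask(plane, 0);
    for (std::size_t p = 0; p < plane; ++p)
    {
        float logit = 0.0f;
        for (std::size_t k = 0; k < coeffs.size(); ++k)
            logit += coeffs[k] * protos[k * plane + p];
        // sigmoid(x) > 0.5 exactly when x > 0, so the binary mask can test the logit directly.
        mask[p] = logit > 0.0f ? 1 : 0;
    }
    return mask;
}
```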

Set up a YOLO26 environment (ultralytics==8.4.0 is required), then export the model to ONNX:

```
yolo export model=yolo26n-seg.pt format=onnx opset=12
```
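`yolo export` uses a 640x640 input by default (change it with `imgsz=`). Frames of other sizes therefore have to be letterboxed before inference, and the boxes mapped back afterwards. The sketch below does only that arithmetic: the scale factor, the padding, and the inverse mapping. It assumes the common centered padding. Whether `YOLO26Seg.hpp` pads the same way is not shown in this post. `Letterbox`, `make_letterbox` and `to_original` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>

// Parameters of a centered letterbox resize from (src_w, src_h) into a dst x dst input.
struct Letterbox
{
    float scale; // uniform resize factor
    int pad_x;   // left padding in pixels
    int pad_y;   // top padding in pixels
};

Letterbox make_letterbox(int src_w, int src_h, int dst = 640)
{
    const float scale = std::min(static_cast<float>(dst) / src_w, static_cast<float>(dst) / src_h);
    const int new_w = static_cast<int>(std::round(src_w * scale));
    const int new_h = static_cast<int>(std::round(src_h * scale));
    return {scale, (dst - new_w) / 2, (dst - new_h) / 2};
}

// Map one coordinate from model-input space back to the original image.
inline float to_original(float v, int pad, float scale)
{
    return (v - pad) / scale;
}
```

For a 1280x720 frame this gives a scale of 0.5 and 140 pixels of padding at the top and bottom. A box edge at y = 190 in the model input therefore maps back to y = 100 in the original frame.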

Test environment:

- VS2019
- CMake 3.30.1
- onnxruntime-win-x64-gpu-1.20.1
- OpenCV 4.9.0

Build steps:

1. Delete the build folder.
2. Open CMakeLists.txt and set the OpenCV and ONNX Runtime paths.
3. Re-run CMake and build the project to produce the exe.

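Test commands (run them from the directory that contains the exe):

Test an image:

```
yolo26_ort --input=test.jpg
```

Test a camera:

```
yolo26_ort --input=0 [--gpu]
```

Running on the GPU requires the CUDA and cuDNN versions that match onnxruntime-win-x64-gpu-1.20.1 (listed in the official ONNX Runtime documentation). CUDA 12.4 + cuDNN 9.4.1 was tested and works. Other versions that meet the requirements should also work.

Test a video:

```
yolo26_ort --input=test_video.mp4 --output=result.mp4 --conf=0.3
```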

Calling code:

```cpp
#include <algorithm>
#include <cctype>
#include <chrono>
#include <iostream>
#include <string>

#include <opencv2/opencv.hpp>

#include "YOLO26Seg.hpp"

// Note: if ONNX inference returns no results, the ONNX export is the most likely cause;
// re-export with opset=12.

struct Args
{
    std::string model_path = "./yolo26n-seg.onnx";
    std::string classes_path = "./labels.txt";
    std::string input_path = "./input.mov";
    std::string output_path = "./output.mp4";
    bool use_gpu = false;
    float conf_threshold = 0.25f;
    float iou_threshold = 0.45f;
    bool help = false;
};

void print_help()
{
    std::cout << "YOLO26 C++ Instance Segmentation\n\n";
    std::cout << "Usage: yolo26_ort [options]\n\n";
    std::cout << "Options:\n";
    std::cout << "  --model=<path>    Path to ONNX model file (default: ./yolo26n-seg.onnx)\n";
    std::cout << "  --classes=<path>  Path to class names file (default: ./labels.txt)\n";
    std::cout << "  --input=<path>    Image/video file or camera device index (default: ./input.mov)\n";
    std::cout << "  --output=<path>   Path to output file (default: ./output.mp4)\n";
    std::cout << "  --gpu             Use GPU acceleration if available (default: false)\n";
    std::cout << "  --conf=<value>    Confidence threshold (default: 0.25)\n";
    std::cout << "  --iou=<value>     IoU threshold for NMS (default: 0.45)\n";
    std::cout << "  --help            Show this help message\n\n";
    std::cout << "Examples:\n";
    std::cout << "  yolo26_ort --input=test_video.mp4 --output=result.mp4 --conf=0.3\n";
    std::cout << "  yolo26_ort --input=0 --gpu    # Webcam segmentation on GPU\n";
    std::cout << "  yolo26_ort --input=test.jpg   # Image segmentation\n";
}

// Options use the --name=value form, e.g. --conf=0.3.
Args parse_args(int argc, char *argv[])
{
    Args args;
    for (int i = 1; i < argc; ++i)
    {
        const std::string arg(argv[i]);
        if (arg == "--help" || arg == "-h")
            args.help = true;
        else if (arg.find("--model=") == 0)
            args.model_path = arg.substr(8);
        else if (arg.find("--classes=") == 0)
            args.classes_path = arg.substr(10);
        else if (arg.find("--input=") == 0)
            args.input_path = arg.substr(8);
        else if (arg.find("--output=") == 0)
            args.output_path = arg.substr(9);
        else if (arg == "--gpu")
            args.use_gpu = true;
        else if (arg.find("--conf=") == 0)
            args.conf_threshold = std::stof(arg.substr(7));
        else if (arg.find("--iou=") == 0)
            args.iou_threshold = std::stof(arg.substr(6));
        else
            std::cerr << "Unknown argument: " << arg << std::endl;
    }
    return args;
}

// A camera index is a non-empty string of digits such as "0" or "1".
// (std::stoi alone would also accept "123.mp4" and treat it as camera 123.)
bool is_camera_input(const std::string &input)
{
    return !input.empty() &&
           std::all_of(input.begin(), input.end(),
                       [](unsigned char c) { return std::isdigit(c) != 0; });
}

// Check the file extension (case-insensitive), not just any occurrence of ".jpg" in the path.
bool is_image_file(const std::string &input)
{
    std::string lower = input;
    std::transform(lower.begin(), lower.end(), lower.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    const size_t dot = lower.find_last_of('.');
    if (dot == std::string::npos)
        return false;
    const std::string ext = lower.substr(dot);
    return ext == ".jpg" || ext == ".jpeg" || ext == ".png" || ext == ".bmp";
}

void draw_fps(cv::Mat &frame, double fps)
{
    std::string fps_text = "FPS: " + std::to_string(static_cast<int>(fps));
    cv::putText(frame, fps_text, cv::Point(10, 30), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 255, 0), 2);
}

int main(int argc, char *argv[])
{
    Args args = parse_args(argc, argv);
    if (args.help)
    {
        print_help();
        return 0;
    }

    std::cout << "YOLO26 C++ Object Detection & Segmentation\n";
    std::cout << "============================================\n";
    std::cout << "Model: " << args.model_path << "\n";
    std::cout << "Classes: " << args.classes_path << "\n";
    std::cout << "Input: " << args.input_path << "\n";
    std::cout << "Output: " << args.output_path << "\n";
    std::cout << "GPU: " << (args.use_gpu ? "enabled" : "disabled") << "\n";
    std::cout << "Confidence threshold: " << args.conf_threshold << "\n";
    std::cout << "IoU threshold: " << args.iou_threshold << "\n\n";

    YOLO26SegDetector detector(args.model_path, args.classes_path, args.use_gpu);

    if (is_image_file(args.input_path))
    {
        std::cout << "Processing single image: " << args.input_path << std::endl;
        cv::Mat image = cv::imread(args.input_path);
        if (image.empty())
        {
            std::cerr << "Error: Cannot read image file: " << args.input_path << std::endl;
            return -1;
        }

        cv::Mat result = image.clone();
        auto start_time = std::chrono::steady_clock::now();
        auto detections = detector.segment(image, args.conf_threshold, args.iou_threshold);
        auto end_time = std::chrono::steady_clock::now();
        auto duration = std::chrono::duration_cast<std::chrono::milliseconds>(end_time - start_time);
        std::cout << "Detection completed in " << duration.count() << "ms" << std::endl;
        std::cout << "Detected " << detections.size() << " objects" << std::endl;

        detector.drawSegmentationsAndBoxes(result, detections);

        // If --output was left at its video default, save as <input name>_result.jpg instead.
        std::string output_path = args.output_path;
        if (output_path == "./output.mp4")
        {
            size_t dot_pos = args.input_path.find_last_of('.');
            std::string base_name = args.input_path.substr(0, dot_pos);
            output_path = base_name + "_result.jpg";
        }
        cv::imwrite(output_path, result);
        std::cout << "Result saved to: " << output_path << std::endl;

        // Fit the preview into 1280x720 while keeping the aspect ratio.
        cv::Mat display_result;
        double scale = std::min(1280.0 / result.cols, 720.0 / result.rows);
        cv::Size display_size(static_cast<int>(result.cols * scale), static_cast<int>(result.rows * scale));
        cv::resize(result, display_result, display_size);
        cv::imshow("YOLO26 Result", display_result);
        cv::waitKey(0);
        return 0;
    }

    const bool camera = is_camera_input(args.input_path);
    cv::VideoCapture cap;
    if (camera)
    {
        int camera_id = std::stoi(args.input_path);
        cap.open(camera_id);
        std::cout << "Opening camera " << camera_id << std::endl;
    }
    else
    {
        cap.open(args.input_path);
        std::cout << "Opening video file: " << args.input_path << std::endl;
    }

    if (!cap.isOpened())
    {
        std::cerr << "Error: Cannot open input source: " << args.input_path << std::endl;
        return -1;
    }

    int frame_width = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_WIDTH));
    int frame_height = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_HEIGHT));
    double fps = cap.get(cv::CAP_PROP_FPS);
    if (fps <= 0)
        fps = 30.0; // some cameras and containers report 0
    std::cout << "Video properties: " << frame_width << "x" << frame_height << " @ " << fps << " FPS\n\n";

    // Only video-file input is written to disk; camera input is shown live instead.
    cv::VideoWriter writer;
    if (!camera)
    {
        int fourcc = cv::VideoWriter::fourcc('m', 'p', '4', 'v');
        writer.open(args.output_path, fourcc, fps, cv::Size(frame_width, frame_height));
        if (!writer.isOpened())
        {
            std::cerr << "Error: Cannot open output video file: " << args.output_path << std::endl;
            return -1;
        }
        std::cout << "Output will be saved to: " << args.output_path << std::endl;
    }

    auto start_time = std::chrono::steady_clock::now();
    int frame_count = 0;
    double avg_fps = 0.0;
    cv::Mat frame;
    std::cout << "\nProcessing... Press 'q' or Esc in the preview window to quit (camera mode).\n\n";

    while (true)
    {
        auto frame_start = std::chrono::steady_clock::now();
        if (!cap.read(frame))
        {
            if (camera)
                std::cerr << "Error reading from camera" << std::endl;
            else
                std::cout << "End of video file reached" << std::endl;
            break;
        }

        cv::Mat result = frame.clone();
        auto detections = detector.segment(frame, args.conf_threshold, args.iou_threshold);
        detector.drawSegmentationsAndBoxes(result, detections);

        // Fractional milliseconds avoid a division by zero when a frame takes under 1 ms.
        auto frame_end = std::chrono::steady_clock::now();
        double frame_ms = std::chrono::duration<double, std::milli>(frame_end - frame_start).count();
        double current_fps = frame_ms > 0.0 ? 1000.0 / frame_ms : 0.0;
        frame_count++;
        avg_fps = (avg_fps * (frame_count - 1) + current_fps) / frame_count; // running mean
        draw_fps(result, current_fps);

        if (camera)
        {
            cv::imshow("YOLO26 seg", result);
            char key = static_cast<char>(cv::waitKey(1) & 0xFF);
            if (key == 'q' || key == 27)
                break;
        }

        if (writer.isOpened())
            writer.write(result);

        if (!camera && frame_count % 30 == 0)
        {
            std::cout << "Processed " << frame_count << " frames, Average FPS: "
                      << static_cast<int>(avg_fps) << std::endl;
        }
    }

    cap.release();
    if (writer.isOpened())
        writer.release();
    cv::destroyAllWindows();

    auto end_time = std::chrono::steady_clock::now();
    auto total_duration = std::chrono::duration_cast<std::chrono::seconds>(end_time - start_time);
    std::cout << "\nProcessing completed!\n";
    std::cout << "Total frames processed: " << frame_count << std::endl;
    std::cout << "Total time: " << total_duration.count() << " seconds\n";
    std::cout << "Average FPS: " << static_cast<int>(avg_fps) << std::endl;
    return 0;
}
```

Final test results: