Today we'll try fine-tuning an LLM with the LLaMA-Factory tool.

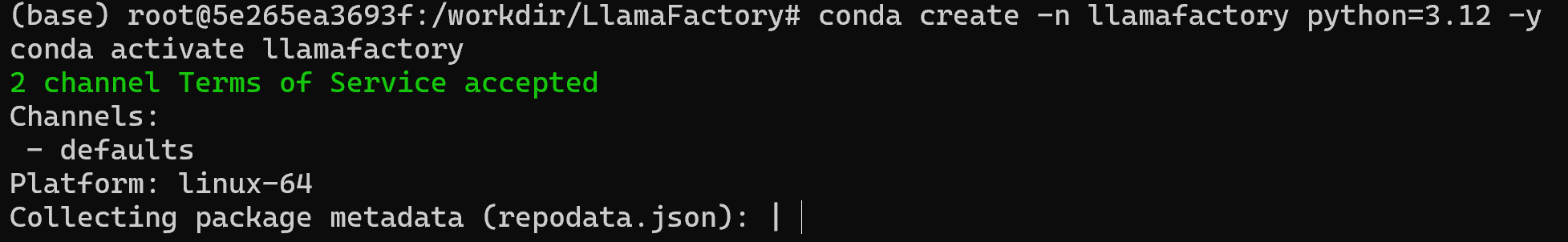

First, create and activate a conda environment:

```bash
conda create -n llamafactory python=3.12 -y
conda activate llamafactory
```

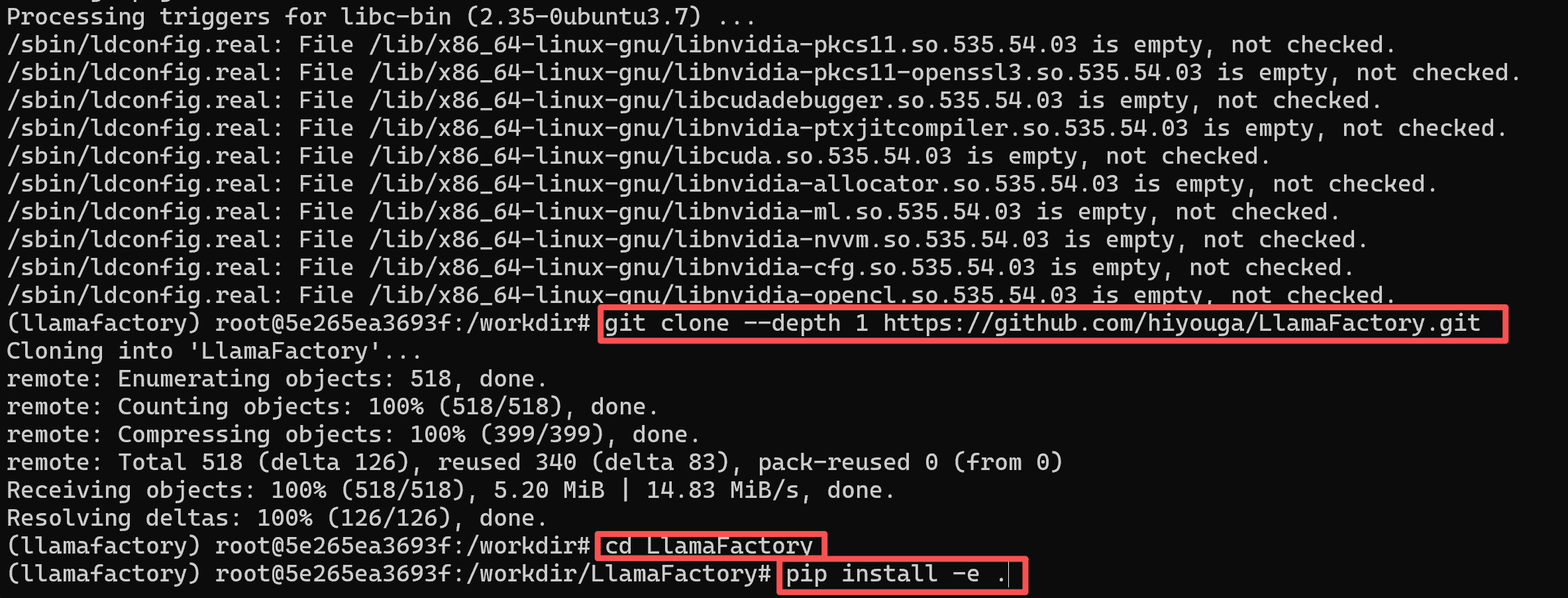
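Before installing anything, it can help to confirm that the new environment really is the active one. The following is a minimal sketch, assuming conda has set the `CONDA_DEFAULT_ENV` variable on activation; the `check_env` helper is a hypothetical name used only for illustration.

```bash
# Hypothetical helper (not part of conda or LLaMA-Factory):
# succeed only if the active conda environment has the expected name.
check_env() {
    expected="$1"
    # conda exports CONDA_DEFAULT_ENV when an environment is activated
    if [ "${CONDA_DEFAULT_ENV:-}" = "$expected" ]; then
        echo "ok: conda environment '$expected' is active"
    else
        echo "warning: expected '$expected', got '${CONDA_DEFAULT_ENV:-none}'" >&2
        return 1
    fi
}
```

Calling `check_env llamafactory` right after `conda activate llamafactory` should print the "ok" line; a warning suggests the activation did not take effect in the current shell.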

Next, following the official instructions, install git with the commands below, then clone the repository and install it from source:

```bash
apt update
apt install git -y
```

```bash
git clone --depth 1 https://github.com/hiyouga/LlamaFactory.git
cd LlamaFactory
pip install -e .
```

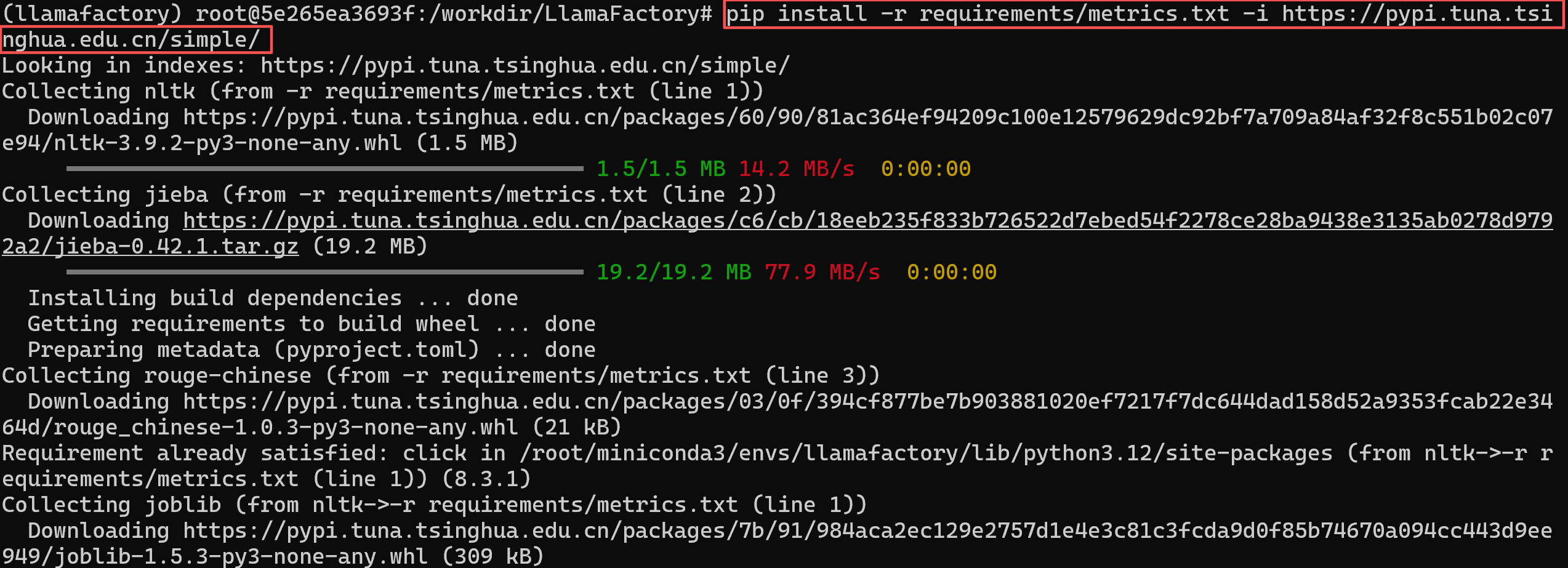

```bash
pip install -r requirements/metrics.txt -i https://pypi.tuna.tsinghua.edu.cn/simple/
```

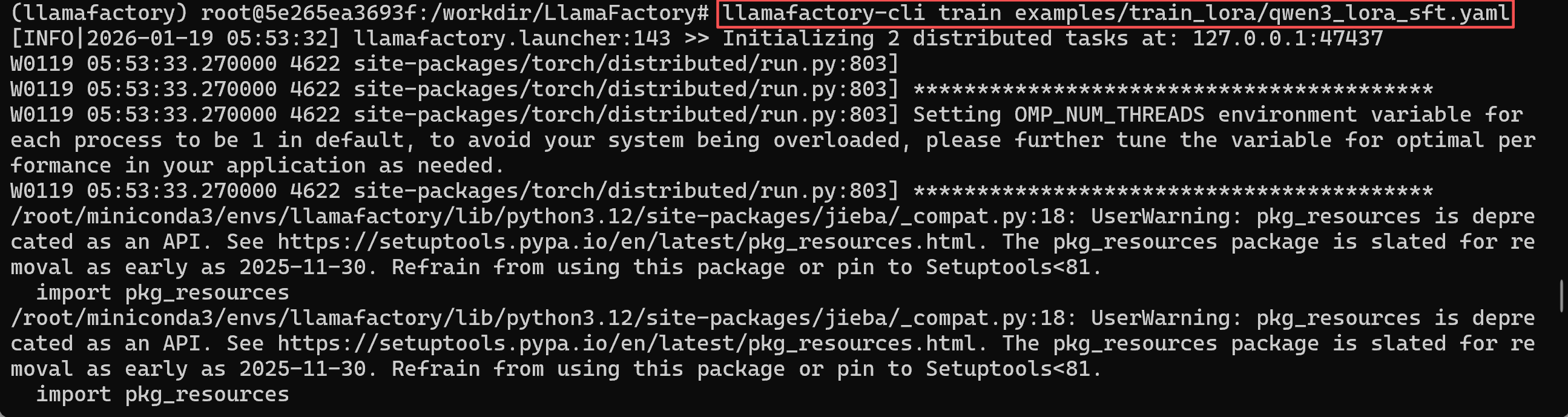

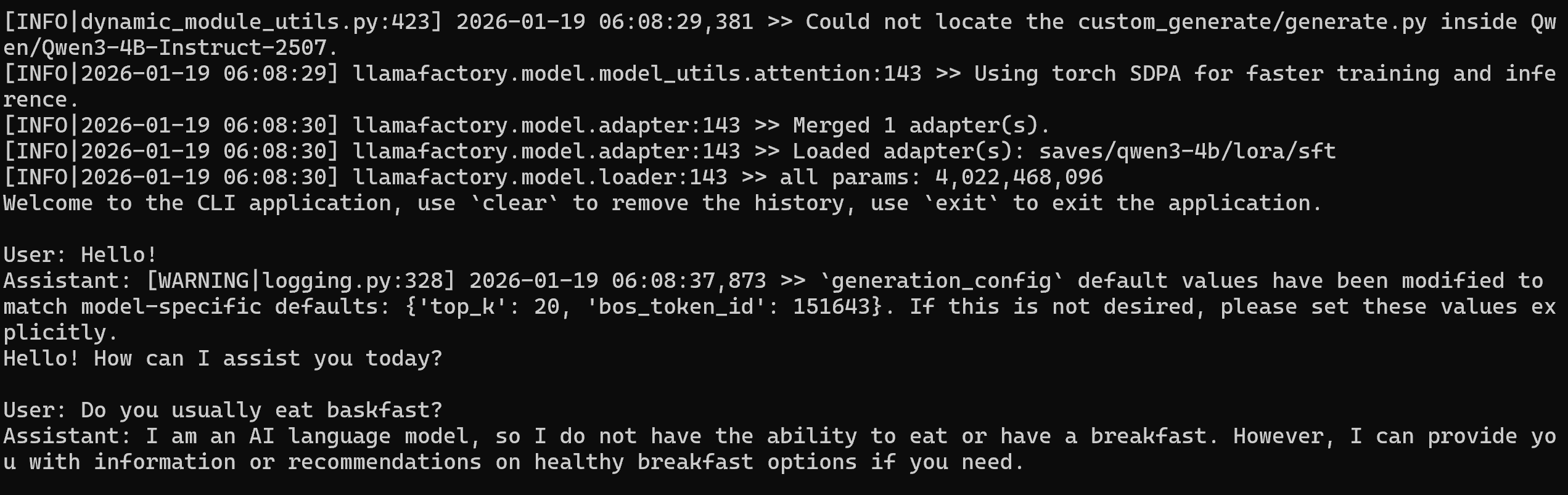

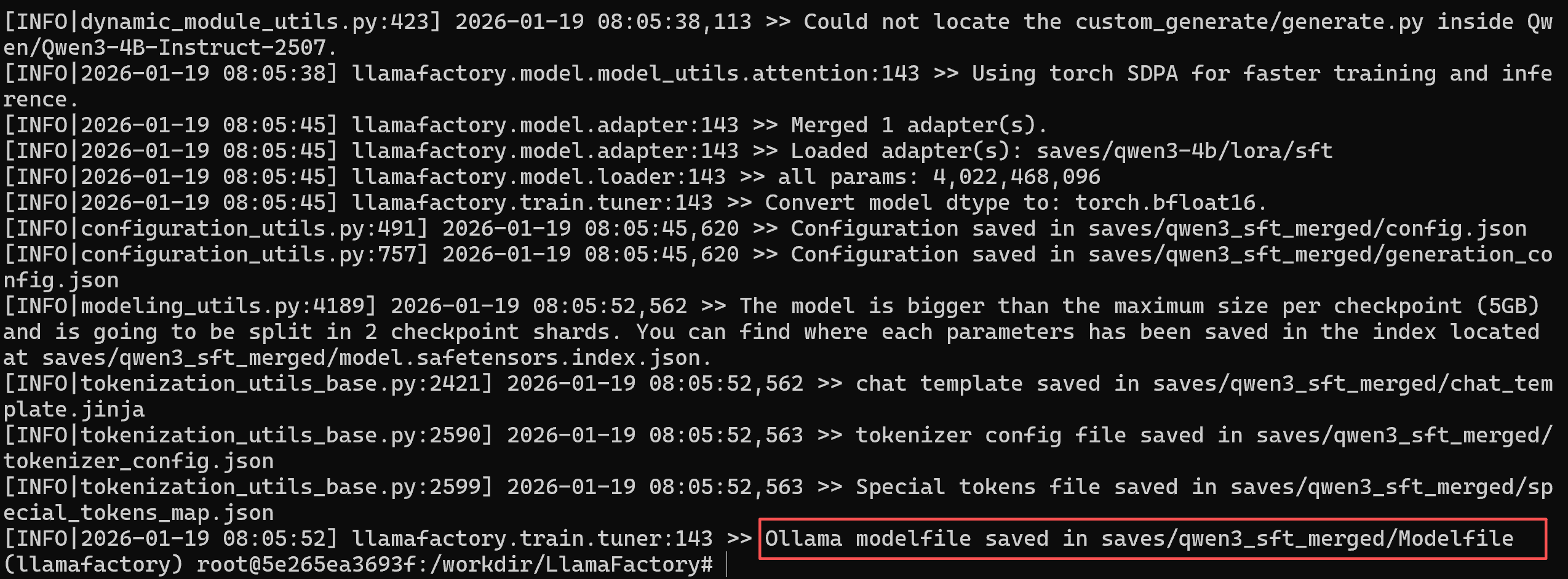
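Once the installation finishes, a quick sanity check can confirm that the tools used in this walkthrough are on `PATH`. This is only a sketch: `have_cmd` is a hypothetical helper, and it assumes `llamafactory-cli version` is available to print the installed version.

```bash
# Hypothetical helper: report whether a command is available on PATH.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Check each tool that the steps in this post rely on.
for tool in git pip llamafactory-cli; do
    if have_cmd "$tool"; then
        echo "found: $tool"
    else
        echo "not found: $tool"
    fi
done

# Print the installed version only when the CLI is actually present.
if have_cmd llamafactory-cli; then
    llamafactory-cli version
fi
```

If `llamafactory-cli` is reported as not found, the most likely cause is that `pip install -e .` ran outside the activated conda environment.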

Then use the following commands to run LoRA fine-tuning, inference, and merging for Qwen3-4B:

```bash
llamafactory-cli train examples/train_lora/qwen3_lora_sft.yaml
llamafactory-cli chat examples/inference/qwen3_lora_sft.yaml
llamafactory-cli export examples/merge_lora/qwen3_lora_sft.yaml
```
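These commands use paths relative to the repository root, so they fail if run from another directory. One way to guard against that is a small wrapper that checks the config file exists before calling the CLI. The sketch below is an illustration only: `run_step` and the `DRY_RUN` variable are hypothetical names, not part of LLaMA-Factory.

```bash
# Hypothetical wrapper: run a llamafactory-cli step only if its YAML config exists.
# With DRY_RUN=1 the command is printed instead of executed.
run_step() {
    local action="$1" config="$2"
    if [ ! -f "$config" ]; then
        echo "missing config: $config (are you in the LlamaFactory directory?)" >&2
        return 1
    fi
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "llamafactory-cli $action $config"
    else
        llamafactory-cli "$action" "$config"
    fi
}
```

For example, `run_step train examples/train_lora/qwen3_lora_sft.yaml && run_step export examples/merge_lora/qwen3_lora_sft.yaml` would train and then merge, stopping at the first failure. The interactive `chat` step is left out of such a chain because it waits for user input.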