1. Cluster Planning

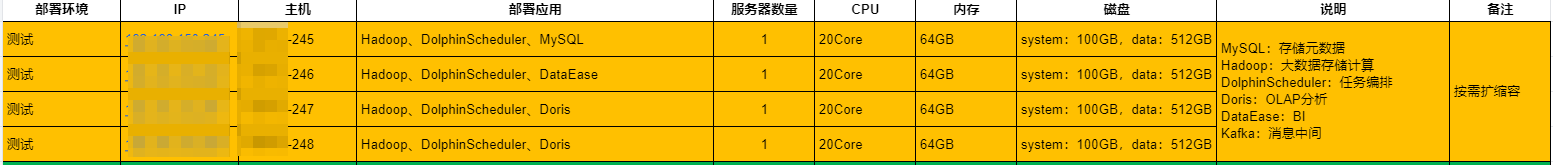

1.1 Cluster Resource Planning

1.2 Hostnames
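
Writing `/etc/hosts` with a heredoc either replaces the whole file (`>`) or appends duplicate entries when the step is repeated (`>>`). A minimal sketch of an idempotent alternative to run on each node; `add_host` is a hypothetical helper, and its optional third argument lets you try it on a scratch copy first:

```bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not
# already mapped there. Usage: add_host IP NAME [FILE]  (FILE defaults to /etc/hosts)
add_host() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Exit status 0 if NAME appears as a hostname field on any non-comment line.
  if awk -v n="$name" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, `add_host 192.168.xxx.xxx xxx-246` adds the line only if `xxx-246` is not mapped yet, so the step can be re-run safely.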

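```bash
# Run on every node. ">>" appends, so the existing localhost entries are kept
cat >> /etc/hosts << EOF
192.168.xxx.xxx xxx-245
192.168.xxx.xxx xxx-246
192.168.xxx.xxx xxx-247
192.168.xxx.xxx xxx-248
EOF
```

1.3 Create the hadoop User and Set Up Passwordless SSH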

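```bash
# Create the hadoop user on all nodes
ansible cluster -m user -a "name=hadoop password={{'Hadoop.2026'|password_hash('sha512')}}"
# Grant passwordless sudo (appends to /etc/sudoers; re-running adds a duplicate line)
ansible cluster -m shell -a "echo 'hadoop ALL=(ALL) NOPASSWD: ALL' >> /etc/sudoers"
# Switch to hadoop, generate a key pair and copy the public key to every node.
# Repeat on each NameNode host too: sshfence logs in from one NameNode to another as hadoop
sudo -u hadoop -i
ssh-keygen -t rsa -b 2048 -P '' -f ~/.ssh/id_rsa
ssh-copy-id 192.168.xxx.xxx
ssh-copy-id 192.168.xxx.xxx
ssh-copy-id 192.168.xxx.xxx
ssh-copy-id 192.168.xxx.xxx
```

1.4 JDK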
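
Both `/etc/profile` and `hadoop-env.sh` in this guide point at `/usr/java/jdk-17`, and the `--add-opens` option set in `hadoop-env.sh` is rejected by JDK 8, so it is worth confirming which Java each node actually runs. A small sketch; `java_major` is a hypothetical helper that reads `java -version` output on stdin and prints the major version:

```bash
# Hypothetical helper: print the major Java version from `java -version` output.
# Handles both the legacy "1.8.0_x" scheme and the modern "17.0.x" scheme.
java_major() {
  local ver
  # The first line looks like: openjdk version "17.0.9" 2023-10-17
  ver=$(head -n 1 | sed -E 's/.*version "([^"]+)".*/\1/')
  case "$ver" in
    1.*) echo "$ver" | cut -d. -f2 ;;   # 1.8.0_392 -> 8
    *)   echo "${ver%%[.+-]*}" ;;       # 17.0.9 -> 17
  esac
}
```

`java -version` writes to stderr, so on a node run `/usr/java/jdk-17/bin/java -version 2>&1 | java_major` and expect `17`.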

```bash
vi /etc/profile
# Append:
# jdk
export JAVA_HOME=/usr/java/jdk-17
export PATH=$JAVA_HOME/bin:$PATH
```

2. ZooKeeper Cluster

2.1 Install and Extract

```bash
# Distribute the tarball
ansible cluster -m copy -a 'src=/opt/software/apache-zookeeper-3.9.4-bin.tar.gz dest=/opt/software/'
# Extract
ansible cluster -m shell -a "tar -xvf /opt/software/apache-zookeeper-3.9.4-bin.tar.gz -C /opt/apps"
# Rename
ansible cluster -m shell -a "mv /opt/apps/apache-zookeeper-3.9.4-bin /opt/apps/zookeeper-3.9.4"
```

2.2 ZooKeeper Core Configuration (zoo.cfg)
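
`initLimit` and `syncLimit` are counted in ticks of `tickTime`, not in seconds. With the values below, followers get 10 × 2000 ms = 20 s to connect to and sync with the leader, and may fall at most 5 × 2000 ms = 10 s behind before being dropped. A hypothetical helper, `zk_timeouts`, that does this arithmetic for any zoo.cfg:

```bash
# Hypothetical helper: read tickTime, initLimit and syncLimit from a zoo.cfg
# and print the resulting timeouts in milliseconds.
zk_timeouts() {
  awk -F= '
    /^[[:space:]]*#/ { next }              # skip comment lines
    $1 == "tickTime"  { tk = $2 }
    $1 == "initLimit" { il = $2 }
    $1 == "syncLimit" { sl = $2 }
    END {
      printf "initLimit timeout: %d ms\n", il * tk
      printf "syncLimit timeout: %d ms\n", sl * tk
    }' "$1"
}
```

Run against `/opt/apps/zookeeper-3.9.4/conf/zoo.cfg` with this configuration, it prints 20000 ms and 10000 ms.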

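```properties
# /opt/apps/zookeeper-3.9.4/conf/zoo.cfg
# Basic time unit (ms); initLimit and syncLimit are multiples of it
tickTime=2000
# Ticks a follower may take to connect and sync to the leader at startup
initLimit=10
# Ticks a follower may lag behind the leader before it is dropped
syncLimit=5
# Client connection port
clientPort=2181
# Data directory (snapshots and the myid file)
dataDir=/data/zookeeper/data
# Ensemble members (format: server.ID=hostname:quorumPort:electionPort)
server.1=xxx-246:2888:3888
server.2=xxx-247:2888:3888
server.3=xxx-248:2888:3888
# Auto-purge interval (hours)
autopurge.purgeInterval=24
# Number of snapshots to retain
autopurge.snapRetainCount=3
```

2.3 Node ID (myid)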

Note: each node's myid must match its server.ID entry in zoo.cfg (xxx-246 is 1, xxx-247 is 2, xxx-248 is 3).
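
Rather than writing `1` everywhere and correcting it by hand, each node can look up its own ID in zoo.cfg. A sketch under the assumption that `hostname` on each node prints exactly the name used in the `server.N` lines (`xxx-246`, `xxx-247`, `xxx-248`); `zk_myid_for` is a hypothetical helper:

```bash
# Hypothetical helper: print the server ID N for HOST from the
# "server.N=HOST:2888:3888" lines of a zoo.cfg. Prints nothing and
# returns 1 if HOST is not listed.
zk_myid_for() {
  local host="$1" cfg="$2"
  awk -v h="$host" '
    /^server\.[0-9]+=/ {
      split($0, kv, "=")        # kv[1] = "server.N", kv[2] = "HOST:2888:3888"
      split(kv[2], addr, ":")   # addr[1] = "HOST"
      if (addr[1] == h) {
        id = kv[1]
        sub(/^server\./, "", id)
        print id
        found = 1
        exit
      }
    }
    END { exit !found }' "$cfg"
}
```

On each ZooKeeper node: `id=$(zk_myid_for "$(hostname)" /opt/apps/zookeeper-3.9.4/conf/zoo.cfg) && echo "$id" > /data/zookeeper/data/myid`. The `&&` leaves myid untouched when the host is not in the ensemble.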

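```bash
ansible cluster -m shell -a "mkdir -p /data/zookeeper/data/"
# Writes 1 on every node as a starting point
ansible cluster -m shell -a "echo '1' > /data/zookeeper/data/myid"
# Correct the ID on the other two nodes (assumes the Ansible inventory uses these hostnames)
ansible xxx-247 -m shell -a "echo '2' > /data/zookeeper/data/myid"
ansible xxx-248 -m shell -a "echo '3' > /data/zookeeper/data/myid"
```

2.4 Environment Variables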

```bash
# Append to /etc/profile on every node:
# zookeeper
export ZK_HOME=/opt/apps/zookeeper-3.9.4
export PATH=$PATH:$ZK_HOME/bin
# Reload the profile
source /etc/profile
```

2.5 Distribute the Configuration

```bash
ansible cluster -m copy -a 'src=/opt/apps/zookeeper-3.9.4/conf/zoo.cfg dest=/opt/apps/zookeeper-3.9.4/conf/'
```

2.6 Change the Log Directory

```bash
vi $ZK_HOME/bin/zkEnv.sh
# Change the default to:
ZOO_LOG_DIR=/data/zookeeper/log
# Distribute
ansible cluster -m copy -a 'src=/opt/apps/zookeeper-3.9.4/bin/zkEnv.sh dest=/opt/apps/zookeeper-3.9.4/bin/'
```

2.7 Create Directories and Set Ownership

```bash
ansible cluster -m shell -a "mkdir -p /data/zookeeper/{data,log}"
ansible cluster -m shell -a "chown -R hadoop:hadoop /opt/apps/zookeeper-3.9.4"
ansible cluster -m shell -a "chown -R hadoop:hadoop /data/zookeeper/"
```

2.8 Start and Verify
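
A healthy three-node ensemble reports exactly one `Mode: leader` and the rest `Mode: follower` from `zkServer.sh status`. A hypothetical helper, `zk_quorum_ok`, that checks this over the combined output of all nodes (for example, the Ansible status command in this step), assuming each `Mode:` line starts at the beginning of a line:

```bash
# Hypothetical helper: read combined `zkServer.sh status` output on stdin,
# print the leader/follower counts, and succeed only if there is exactly
# one leader and at least one follower.
zk_quorum_ok() {
  awk '
    /^Mode: leader/   { leaders++ }
    /^Mode: follower/ { followers++ }
    END {
      printf "leaders=%d followers=%d\n", leaders, followers
      exit !(leaders == 1 && followers >= 1)
    }'
}
```

Pipe the Ansible status output into it, e.g. `ansible cluster -m shell -a '... zkServer.sh status' | zk_quorum_ok`; a non-zero exit means the ensemble has not formed a quorum.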

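```bash
# Start and check a single node (as hadoop)
zkServer.sh start
zkServer.sh status
# Or restart and check all nodes as hadoop; Ansible's shell is non-login,
# so load /etc/profile first to get JAVA_HOME
ansible cluster -b --become-user hadoop -m shell -a '. /etc/profile && /opt/apps/zookeeper-3.9.4/bin/zkServer.sh restart'
ansible cluster -b --become-user hadoop -m shell -a '. /etc/profile && /opt/apps/zookeeper-3.9.4/bin/zkServer.sh status'
```

3. Hadoop Cluster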

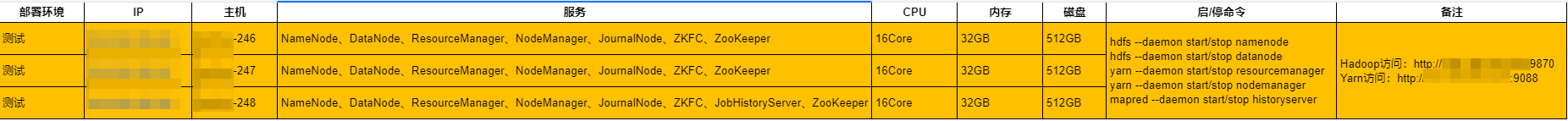

3.1 Cluster Planning

3.2 Install and Extract

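```bash
# Extract locally
tar -xzf /opt/software/hadoop-3.4.2.tar.gz -C /opt/apps/
# Distribute the tarball (same pattern as ZooKeeper in 2.1), then extract on all nodes
ansible cluster -m copy -a 'src=/opt/software/hadoop-3.4.2.tar.gz dest=/opt/software/'
ansible cluster -m shell -a "tar -xzf /opt/software/hadoop-3.4.2.tar.gz -C /opt/apps"
```

3.3 Edit the Configuration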

1. hadoop-env.sh

```bash
export JAVA_HOME=/usr/java/jdk-17
# Open java.lang to reflective access (needed when running on JDK 17)
export HADOOP_OPTS="--add-opens java.base/java.lang=ALL-UNNAMED"
export HADOOP_LOG_DIR=/data/hadoop/log
export HADOOP_CLASSPATH=$HADOOP_HOME/lib/*:$HADOOP_HOME/etc/hadoop/*
export HADOOP_CONF_DIR=/opt/apps/hadoop-3.4.2/etc/hadoop
```

2. yarn-env.sh

```bash
export JAVA_HOME=/usr/java/jdk-17
```

3. workers

```text
xxx-246
xxx-247
xxx-248
```

4. capacity-scheduler.xml

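```xml
<!-- Change the existing property: maximum share of resources ApplicationMasters may use (default 0.1) -->
<property>
  <name>yarn.scheduler.capacity.maximum-am-resource-percent</name>
  <value>0.5</value>
</property>
```

5. core-site.xml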

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- Default file system: the HA nameservice defined in hdfs-site.xml -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hdfs-ha</value>
  </property>
  <!-- Base directory for files Hadoop generates at runtime -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/data/hadoop/data/tmp</value>
  </property>
  <!-- ZooKeeper servers used by the ZKFC for automatic failover -->
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>xxx-246:2181,xxx-247:2181,xxx-248:2181</value>
  </property>
</configuration>
```

6. hdfs-site.xml
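
The `dfs.namenode.shared.edits.dir` value below follows the pattern `qjournal://host1:port;host2:port;host3:port/journalId`: JournalNode addresses separated by semicolons, then a journal ID (here the nameservice name `hdfs-ha` is reused). A hypothetical helper, `qjournal_uri`, that builds the value from a host list, handy if JournalNodes are added or moved:

```bash
# Hypothetical helper: build a qjournal:// URI.
# Usage: qjournal_uri PORT JOURNAL_ID HOST...
qjournal_uri() {
  local port="$1" journal_id="$2" uri="" h
  shift 2
  for h in "$@"; do
    uri="${uri:+$uri;}$h:$port"   # join host:port pairs with ';'
  done
  printf 'qjournal://%s/%s\n' "$uri" "$journal_id"
}
```

`qjournal_uri 8485 hdfs-ha xxx-246 xxx-247 xxx-248` reproduces the value used in this file.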

```xml
<?xml version="1.0" encoding="UTF-8"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- NameNode metadata directory -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/data/hadoop/data/namenode</value>
  </property>
  <!-- DataNode block storage directory -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/data/hadoop/data/datanode</value>
  </property>
  <!-- JournalNode edits directory -->
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/data/hadoop/data/journal</value>
  </property>
  <!-- Logical name of the HA nameservice -->
  <property>
    <name>dfs.nameservices</name>
    <value>hdfs-ha</value>
  </property>
  <!-- NameNode IDs in the nameservice -->
  <property>
    <name>dfs.ha.namenodes.hdfs-ha</name>
    <value>nn-246,nn-247,nn-248</value>
  </property>
  <!-- NameNode RPC addresses -->
  <property>
    <name>dfs.namenode.rpc-address.hdfs-ha.nn-246</name>
    <value>xxx-246:9000</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.hdfs-ha.nn-247</name>
    <value>xxx-247:9000</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.hdfs-ha.nn-248</name>
    <value>xxx-248:9000</value>
  </property>
  <!-- NameNode HTTP (web UI) addresses -->
  <property>
    <name>dfs.namenode.http-address.hdfs-ha.nn-246</name>
    <value>xxx-246:9870</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.hdfs-ha.nn-247</name>
    <value>xxx-247:9870</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.hdfs-ha.nn-248</name>
    <value>xxx-248:9870</value>
  </property>
  <!-- Where the NameNodes share edits on the JournalNodes; the JournalNodes keep the edit log in sync between the active and standby NameNodes -->
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://xxx-246:8485;xxx-247:8485;xxx-248:8485/hdfs-ha</value>
  </property>
  <!-- Proxy provider that clients use to find the active NameNode -->
  <property>
    <name>dfs.client.failover.proxy.provider.hdfs-ha</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <!-- Fencing methods, tried in order, so that only one NameNode serves requests at a time; shell(/bin/true) lets failover proceed if sshfence fails -->
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>
      sshfence
      shell(/bin/true)
    </value>
  </property>
  <!-- Private key that sshfence uses to log in -->
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/home/hadoop/.ssh/id_rsa</value>
  </property>
  <!-- Enable automatic NameNode failover -->
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <!-- Block replication factor (default: 3) -->
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>
```

7. mapred-site.xml

```xml
<?xml version="1.0"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- Container memory per map task (MB) -->
  <property>
    <name>mapreduce.map.memory.mb</name>
    <value>2048</value>
  </property>
  <!-- Container memory per reduce task (MB) -->
  <property>
    <name>mapreduce.reduce.memory.mb</name>
    <value>2048</value>
  </property>
  <!-- Sort buffer size per task (MB) -->
  <property>
    <name>mapreduce.task.io.sort.mb</name>
    <value>128</value>
  </property>
  <!-- MapReduce installation used by the ApplicationMaster, map and reduce tasks -->
  <property>
    <name>yarn.app.mapreduce.am.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/apps/hadoop-3.4.2</value>
  </property>
  <property>
    <name>mapreduce.map.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/apps/hadoop-3.4.2</value>
  </property>
  <property>
    <name>mapreduce.reduce.env</name>
    <value>HADOOP_MAPRED_HOME=/opt/apps/hadoop-3.4.2</value>
  </property>
  <!-- JobHistory server RPC address -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>xxx-248:10020</value>
  </property>
  <!-- JobHistory server web UI address -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>xxx-248:19888</value>
  </property>
</configuration>
```

8. yarn-site.xml
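
With the yarn-site.xml values below, the number of containers a NodeManager can run at once is bounded by its memory and, if the scheduler counts CPU, by its vcores. The CapacityScheduler's default `DefaultResourceCalculator` considers memory only; with `DominantResourceCalculator` the smaller of the two bounds applies. A sketch of that arithmetic, assuming requests are rounded up to a multiple of `yarn.scheduler.minimum-allocation-mb`; `containers_per_node` is a hypothetical helper:

```bash
# Hypothetical helper: how many containers of one size fit on a NodeManager.
# Usage: containers_per_node NM_MEM_MB NM_VCORES CONTAINER_MEM_MB CONTAINER_VCORES MIN_ALLOC_MB
containers_per_node() {
  local nm_mem="$1" nm_vc="$2" c_mem="$3" c_vc="$4" min_mb="$5"
  # Assumption: the request is rounded up to a multiple of the minimum allocation.
  c_mem=$(( (c_mem + min_mb - 1) / min_mb * min_mb ))
  echo "memory-bound: $(( nm_mem / c_mem )), vcore-bound: $(( nm_vc / c_vc ))"
}
```

`containers_per_node 32768 16 2048 1 2048` prints `memory-bound: 16, vcore-bound: 16`, i.e. up to 16 map or reduce containers of 2048 MB per node, 48 across the three NodeManagers.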

```xml
<?xml version="1.0"?>
<configuration>
  <!-- Auxiliary service that serves MapReduce shuffle data -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Enable ResourceManager HA -->
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <!-- Cluster ID shared by the HA ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yarn-ha</value>
  </property>
  <!-- Logical IDs of the ResourceManagers -->
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm-246,rm-247,rm-248</value>
  </property>
  <!-- rm-246: hostname and web UI address -->
  <property>
    <name>yarn.resourcemanager.hostname.rm-246</name>
    <value>xxx-246</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm-246</name>
    <value>xxx-246:9088</value>
  </property>
  <!-- rm-247: hostname and web UI address -->
  <property>
    <name>yarn.resourcemanager.hostname.rm-247</name>
    <value>xxx-247</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm-247</name>
    <value>xxx-247:9088</value>
  </property>
  <!-- rm-248: hostname and web UI address -->
  <property>
    <name>yarn.resourcemanager.hostname.rm-248</name>
    <value>xxx-248</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm-248</name>
    <value>xxx-248:9088</value>
  </property>
  <!-- ZooKeeper quorum (RM leader election and state store) -->
  <property>
    <name>hadoop.zk.address</name>
    <value>xxx-246:2181,xxx-247:2181,xxx-248:2181</value>
  </property>
  <!-- Recover ResourceManager state after a restart or failover -->
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <!-- Store ResourceManager state in ZooKeeper -->
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <!-- Disable the virtual and physical memory checks -->
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.pmem-check-enabled</name>
    <value>false</value>
  </property>
  <!-- Memory available to containers on each NodeManager (MB) -->
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>32768</value>
  </property>
  <!-- Minimum/maximum memory a single container may request (MB) -->
  <property>
    <name>yarn.scheduler.minimum-allocation-mb</name>
    <value>2048</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>32768</value>
  </property>
  <!-- Virtual CPUs available on each NodeManager (usually set to the number of physical cores) -->
  <property>
    <name>yarn.nodemanager.resource.cpu-vcores</name>
    <value>16</value>
  </property>
  <!-- Minimum/maximum virtual CPUs a single container may request -->
  <property>
    <name>yarn.scheduler.minimum-allocation-vcores</name>
    <value>1</value>
  </property>
  <property>
    <name>yarn.scheduler.maximum-allocation-vcores</name>
    <value>48</value>
  </property>
  <!-- Enable log aggregation -->
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <!-- Log server URL (JobHistory server) -->
  <property>
    <name>yarn.log.server.url</name>
    <value>http://xxx-248:19888/jobhistory/logs</value>
  </property>
  <!-- Keep aggregated logs for 3 days (259200 s) -->
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>259200</value>
  </property>
  <!-- Check for expired logs once a day (86400 s) -->
  <property>
    <name>yarn.log-aggregation.retain-check-interval-seconds</name>
    <value>86400</value>
  </property>
  <!-- Seconds the NodeManager keeps an application's local files and logs after it finishes -->
  <property>
    <name>yarn.nodemanager.delete.debug-delay-sec</name>
    <value>600</value>
  </property>
  <!-- Local directory for container logs -->
  <property>
    <name>yarn.nodemanager.log-dirs</name>
    <value>/data/hadoop/log</value>
  </property>
</configuration>
```

9. Environment Variables

```bash
vi /etc/profile
# Append:
# hadoop
export HADOOP_HOME=/opt/apps/hadoop-3.4.2
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
```

3.4 Distribute the Configuration
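
The eight copy commands in this step differ only in the file name. A sketch of the same distribution as a loop; `distribute_hadoop_conf` is a hypothetical wrapper that calls `ansible` exactly as the individual commands do and stops at the first failure:

```bash
# Hypothetical wrapper: push each edited Hadoop config file to every host
# in the Ansible "cluster" group, one `ansible ... -m copy` call per file.
distribute_hadoop_conf() {
  local conf=/opt/apps/hadoop-3.4.2/etc/hadoop f
  for f in capacity-scheduler.xml workers mapred-site.xml yarn-site.xml \
           core-site.xml hdfs-site.xml hadoop-env.sh yarn-env.sh; do
    ansible cluster -m copy -a "src=$conf/$f dest=$conf/" || return 1
  done
}
```

Adding a file to the list in the `for` line is then the only change needed when another config file is edited.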

```bash
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/capacity-scheduler.xml dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/workers dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/mapred-site.xml dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/yarn-site.xml dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/core-site.xml dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/hdfs-site.xml dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/hadoop-env.sh dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
ansible cluster -m copy -a "src=/opt/apps/hadoop-3.4.2/etc/hadoop/yarn-env.sh dest=/opt/apps/hadoop-3.4.2/etc/hadoop/"
```

3.5 Create Directories and Set Ownership

```bash
# Create directories
ansible cluster -m shell -a "mkdir -p /data/hadoop/data/{namenode,journal,datanode,tmp}"
# Log directory (matches HADOOP_LOG_DIR and yarn.nodemanager.log-dirs)
ansible cluster -m shell -a "mkdir -p /data/hadoop/log"
# Set ownership
ansible cluster -m shell -a "chown -R hadoop:hadoop /data/hadoop"
ansible cluster -m shell -a "chown -R hadoop:hadoop /opt/apps/hadoop-3.4.2"
```

3.6 Start the Services

1. Start all JournalNodes

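```bash
# Run on every JournalNode host (xxx-246, xxx-247, xxx-248)
hdfs --daemon start journalnode
# To stop it later:
# hdfs --daemon stop journalnode
# Check the JournalNode (HTTP port 8480)
curl http://xxx-246:8480/jmx
```

2. Format the NameNode on the primary node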

```bash
# Run once only, on the primary NameNode host (xxx-246)
hdfs namenode -format
```

3. Start the NameNode on the primary node

```bash
hdfs --daemon start namenode
```

4. Sync metadata to all standby NameNodes

```bash
# Run on every standby NameNode host (xxx-247, xxx-248)
hdfs namenode -bootstrapStandby
```

5. Start all standby NameNodes

```bash
# Run on every standby NameNode host
hdfs --daemon start namenode
```

6. Transition a NameNode to Active
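
`hdfs haadmin -getAllServiceState` prints one line per NameNode with its address and state. A hypothetical check, `hdfs_one_active`, that succeeds only when exactly one NameNode is `active`; it assumes the state is the second whitespace-separated column, as in `xxx-246:9000  active`:

```bash
# Hypothetical helper: read `hdfs haadmin -getAllServiceState` output on stdin,
# print the active/standby counts, and succeed only if exactly one is active.
hdfs_one_active() {
  awk '
    $2 == "active"  { active++ }
    $2 == "standby" { standby++ }
    END {
      printf "active=%d standby=%d\n", active, standby
      exit !(active == 1)
    }'
}
```

Usage: `hdfs haadmin -getAllServiceState | hdfs_one_active`. Two active NameNodes would indicate a fencing problem; zero means no transition has happened yet.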

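```bash
# Show the state of every NameNode
hdfs haadmin -getAllServiceState
# Because dfs.ha.automatic-failover.enabled is true, the plain form
# "hdfs haadmin -transitionToActive nn-246" is refused; --forcemanual is
# required and asks for confirmation
hdfs haadmin -transitionToActive --forcemanual nn-246
```

7. Initialize the ZooKeeper Failover Controller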

```bash
# Stop all HDFS services
stop-dfs.sh
# Initialize the HA state in ZooKeeper (ZooKeeper must be running; run on one NameNode host)
hdfs zkfc -formatZK
```

8. Start HDFS

```bash
# Starts the NameNodes, DataNodes, JournalNodes and ZKFCs
start-dfs.sh
```

9. Start YARN

```bash
# Starts the ResourceManagers and NodeManagers
start-yarn.sh
# Check the state of a ResourceManager
yarn rmadmin -getServiceState rm-246
```

10. Start the JobHistory Server

```bash
# Run on xxx-248, the host set in mapreduce.jobhistory.address
mapred --daemon start historyserver
```

4. Hadoop Operations

4.1 Web UIs

HDFS NameNode UI: http://192.168.xxx.xxx:9870

YARN ResourceManager UI: http://192.168.xxx.xxx:9088

4.2 Create HDFS Directories and Set Permissions

```bash
hadoop fs -mkdir /tmp
hadoop fs -mkdir /spark-jars
hadoop fs -mkdir /spark-history
hadoop fs -mkdir /tmp/hadoop-yarn
hadoop fs -mkdir -p /user/hive
hadoop fs -mkdir -p /user/hive/tmp
hadoop fs -mkdir -p /user/hive/warehouse
hadoop fs -chmod g+w /tmp
hadoop fs -chmod g+w /spark-jars
hadoop fs -chmod g+w /spark-history
hadoop fs -chmod g+w /user/hive
hadoop fs -chmod g+w /user/hive/tmp
hadoop fs -chmod -R o+w /user/hive/tmp
hadoop fs -chmod -R g+w /user/hive/warehouse
hdfs dfs -chmod -R o+w /tmp/hadoop-yarn
hdfs dfs -chown -R hadoop:hive /user/hive
```

4.3 Run the Pi Example

```bash
# 10 map tasks, 100 samples per map
hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.4.2.jar pi 10 100
```
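
The `pi 10 100` arguments mean 10 map tasks with 100 samples each, 1000 points in total, so the result is only a rough estimate. A hypothetical helper, `pi_estimate_ok`, that extracts the estimate from the job output, assuming the final line reads `Estimated value of Pi is ...`, and checks it against a tolerance (default 0.01):

```bash
# Hypothetical helper: find the "Estimated value of Pi is X" line on stdin,
# print the estimate and its distance from pi, and succeed if the distance
# is within TOL (first argument, default 0.01).
pi_estimate_ok() {
  local tol="${1:-0.01}"
  awk -v tol="$tol" '
    /^Estimated value of Pi is/ {
      est = $NF + 0
      diff = est - 3.14159265
      if (diff < 0) diff = -diff
      printf "estimate=%s diff=%.5f\n", $NF, diff
      found = 1
      ok = (diff <= tol)
    }
    END { exit !(found && ok) }'
}
```

Usage: `hadoop jar ... pi 10 100 2>&1 | pi_estimate_ok 0.01`. A non-zero exit means either the job did not print an estimate or the estimate is further from pi than the tolerance.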