Our k8s cluster is deployed from binaries and runs version 1.20.4. The kube-prometheus release we chose is kube-prometheus-0.8.0.

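I. Adding custom monitoring and alerting to Prometheus (etcd)

1. Steps and caveats (prerequisite: kube-prometheus is already deployed, see the deployment article)

1.1 etcd clusters usually have HTTPS authentication enabled, so accessing etcd requires the corresponding certificates.

1.2 Create a secret for etcd from those certificates.

1.3 Mount the etcd secret into Prometheus.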

1.4 Create a ServiceMonitor object for etcd. It matches the Service labeled k8s-app=etcd1 in the monitoring namespace.

1.5 Create a Service that points at the monitored etcd members.

2. Deployment

2.1 Create the etcd secret

Our self-signed etcd certificates are in /opt/etcd/ssl. Create the secret from that directory:

```shell
cd /opt/etcd/ssl
kubectl create secret generic etcd-certs --from-file=server.pem --from-file=server-key.pem --from-file=ca.pem -n monitoring
```
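You may prefer to keep the secret as a manifest (for example in Git) rather than create it imperatively. In that case, the command above corresponds roughly to the Secret below. `kubectl create secret generic` uses each file name as a key, and each key later becomes a file under the mount path. This is a sketch only: the PEM bodies are placeholders that you fill with the contents of the files in /opt/etcd/ssl. Appending `--dry-run=client -o yaml` to the same command prints the equivalent manifest, with base64-encoded `data` instead of `stringData`.

```yaml
# Declarative sketch of the etcd-certs Secret; the file contents are placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: etcd-certs
  namespace: monitoring
type: Opaque
stringData:            # plain text here; the API server stores it base64-encoded under data
  ca.pem: |
    <paste the full contents of /opt/etcd/ssl/ca.pem>
  server.pem: |
    <paste the full contents of /opt/etcd/ssl/server.pem>
  server-key.pem: |
    <paste the full contents of /opt/etcd/ssl/server-key.pem>
```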

Use the command below to check that etcd returns metrics. If it prints output, the certificates work:

```shell
curl --cert /opt/etcd/ssl/server.pem --key /opt/etcd/ssl/server-key.pem https://192.168.7.108:2379/metrics -k | more
```

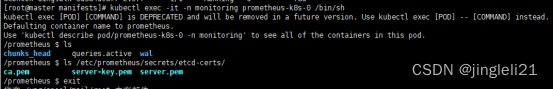
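The `curl` check passes `-k`, which skips verification of the etcd server certificate. The etcd ServiceMonitor created below does verify that certificate against `ca.pem`. Scraping will therefore only succeed if the server certificate's SANs include the addresses Prometheus connects to (192.168.7.106/108/109 here).

If the etcd targets later show an x509 error in Prometheus, one option is the `tlsConfig` variant below. It still presents the client certificate but skips server verification. Reissuing the server certificate with the right SANs is the cleaner fix. This is a fragment of the ServiceMonitor endpoint, not a complete object.

```yaml
# Fragment of the etcd ServiceMonitor endpoint: TLS fallback for a server
# certificate whose SANs do not cover the scraped IPs.
tlsConfig:
  caFile: /etc/prometheus/secrets/etcd-certs/ca.pem
  certFile: /etc/prometheus/secrets/etcd-certs/server.pem     # client cert still presented to etcd
  keyFile: /etc/prometheus/secrets/etcd-certs/server-key.pem
  insecureSkipVerify: true   # like curl -k: do not verify the etcd server certificate
```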

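You can exec into the Prometheus container to confirm that the certificates are mounted. The secret only appears there after it has been added to the Prometheus object in step 2.2: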

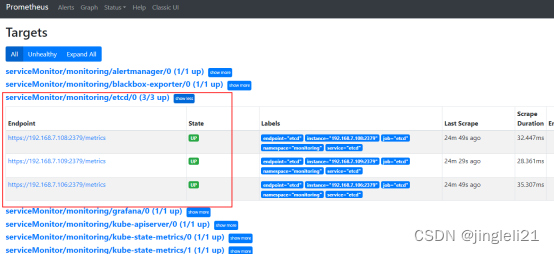

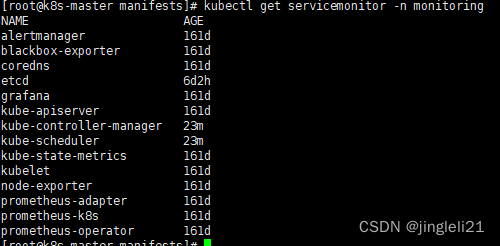

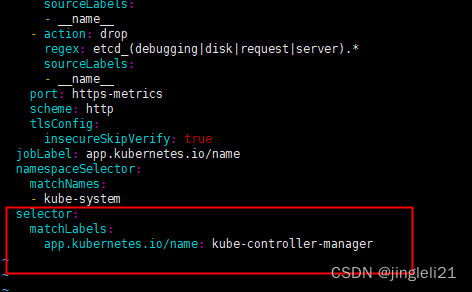

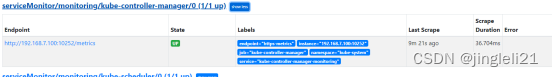

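```shell
[root@master manifests]# kubectl exec -it -n monitoring prometheus-k8s-0 -- /bin/sh
/prometheus $ ls /etc/prometheus/secrets/etcd-certs/
ca.pem          server-key.pem  server.pem
```

2.2 Add the secret to the Prometheus object named k8s

Use `kubectl edit prometheus k8s -n monitoring`, or edit the YAML file and update the resource. Only the `secrets` field needs to be added. The Prometheus Operator mounts each listed secret at /etc/prometheus/secrets/<secret-name>/ in the Prometheus pods. Keep the other fields as they are in your own manifest.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    prometheus: k8s
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  baseImage: quay.io/prometheus/prometheus
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  replicas: 2
  secrets:            # added: mounted at /etc/prometheus/secrets/etcd-certs/
  - etcd-certs
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: v2.11.0
```

Alternatively, edit the manifest file directly and replace the resource:

```shell
vim prometheus-prometheus.yaml
kubectl replace -f prometheus-prometheus.yaml
```

2.3 Create the ServiceMonitor object

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: etcd1        # label of this ServiceMonitor itself
  name: etcd
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: etcd            # the port name, i.e. spec.ports[].name in the Service
    scheme: https         # scrape over HTTPS
    tlsConfig:
      # certificates are under /etc/prometheus/secrets, the default mount path for spec.secrets
      caFile: /etc/prometheus/secrets/etcd-certs/ca.pem
      certFile: /etc/prometheus/secrets/etcd-certs/server.pem
      keyFile: /etc/prometheus/secrets/etcd-certs/server-key.pem
  selector:
    matchLabels:
      k8s-app: etcd1      # selects Services carrying this label
  namespaceSelector:
    matchNames:
    - monitoring          # namespace in which to look for the Service
```

2.4 Create the Service and a custom Endpoints object

The Service has no `selector`, so Kubernetes does not populate its endpoints. Instead, the Endpoints object with the same name (`etcd`) in the same namespace supplies the three etcd member addresses. The port name `etcd` must match the `port` field of the ServiceMonitor.

```yaml
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: etcd1
  name: etcd
  namespace: monitoring
subsets:
- addresses:
  - ip: 192.168.7.108
  - ip: 192.168.7.109
  - ip: 192.168.7.106
  ports:
  - name: etcd            # must match the Service port name
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: etcd1
  name: etcd
  namespace: monitoring
spec:
  ports:
  - name: etcd
    port: 2379
    protocol: TCP
    targetPort: 2379
  sessionAffinity: None
  type: ClusterIP
```

Apply everything:

```shell
kubectl replace -f prometheus-prometheus.yaml
kubectl apply -f servicemonitor.yaml
kubectl apply -f service.yaml
```

At this point the etcd metrics are visible in Prometheus.

2.5 Add alert rules

The labels `prometheus: k8s` and `role: alert-rules` match the `ruleSelector` of the Prometheus object above, which is how Prometheus picks up this rule. The `job="etcd"` value comes from the Service name, because the ServiceMonitor does not set a `jobLabel`.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: etcd-rules
  namespace: monitoring
spec:
  groups:
  - name: etcd-exporter.rules
    rules:
    - alert: EtcdClusterUnavailable
      annotations:
        summary: etcd cluster small
        description: If one more etcd peer goes down the cluster will be unavailable
      expr: |
        count(up{job="etcd"} == 0) > (count(up{job="etcd"}) / 2 - 1)
      for: 3m
      labels:
        severity: critical
```

With three members, `count(up{job="etcd"}) / 2 - 1` evaluates to 0.5. The alert therefore fires once at least one member has been down for 3 minutes. At that point, losing one more member would break quorum.

II. Adding custom monitoring and alerting to Prometheus (kube-controller-manager)

kube-prometheus configures a ServiceMonitor for kube-controller-manager by default. Because our cluster is a binary deployment, however, there is no kube-controller-manager Service or Endpoints for it to match, so we have to create them by hand.

List the ServiceMonitors, then open the controller-manager one to see which Service labels it selects:

```shell
kubectl get servicemonitor -n monitoring
vim kubernetes-serviceMonitorKubeControllerManager.yaml
```

It matches the controller-manager by the label `app.kubernetes.io/name=kube-controller-manager`. When we checked, no Service carried this label, so Prometheus could not find the controller-manager's address.

Now create the Service and Endpoints. First create an Endpoints object pointing at the host IP on port 10252. Then create a Service with the same name that carries the label found above:

```yaml
---
apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    app.kubernetes.io/name: kube-controller-manager
  name: kube-controller-manager-monitoring
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.7.100
  ports:
  - name: https-metrics
    port: 10252
    protocol: TCP
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-controller-manager
  name: kube-controller-manager-monitoring
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: 10252
    protocol: TCP
    targetPort: 10252
  sessionAffinity: None
  type: ClusterIP
```

Note: the kube-prometheus ServiceMonitor scrapes over HTTPS, but the port exposed here (10252) serves plain HTTP, so change `https` to `http`. The port keeps the name `https-metrics` because that is the name the ServiceMonitor refers to.

```shell
kubectl edit servicemonitor -n monitoring kube-controller-manager
# change (line 60 in our case)
#   scheme: https
# to
#   scheme: http
```

Once this is configured, the controller-manager metrics appear in the Prometheus UI, which means the setup worked.

III. Adding custom monitoring and alerting to Prometheus (kube-scheduler)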
kube-scheduler is configured much like kube-controller-manager. First change the ServiceMonitor's scheme:

```shell
kubectl edit servicemonitor -n monitoring kube-scheduler
# change scheme: https to scheme: http
```

Then create the Service and Endpoints, this time for port 10251:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app.kubernetes.io/name: kube-scheduler
  name: scheduler
  namespace: kube-system
spec:
  ports:
  - name: https-metrics
    port: 10251
    protocol: TCP
    targetPort: 10251
  sessionAffinity: None
  type: ClusterIP
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    app.kubernetes.io/name: kube-scheduler
  name: scheduler
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.7.100
  ports:
  - name: https-metrics
    port: 10251
    protocol: TCP
```

```shell
kubectl apply -f svc-kube-scheduler.yaml
```

Once this is configured, the scheduler metrics appear in the Prometheus UI, which means the setup worked.

With that, both controller-manager and scheduler are being monitored.

Note: many write-ups say the listen address of kube-controller-manager and kube-scheduler has to be changed from 127.0.0.1 to 0.0.0.0. In my setup, the kube-controller-manager config file already used 0.0.0.0, so I left it alone. The kube-scheduler config file listened on 127.0.0.1 and I did not change that either, yet scraping still worked. This still needs to be verified.
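For reference, here is a sketch of the kube-scheduler ServiceMonitor after the edit. It shows which fields the hand-made Service and Endpoints have to line up with. It is reconstructed from our reading of the kube-prometheus 0.8.0 manifest `kubernetes-serviceMonitorKubeScheduler.yaml`, so treat the exact values (for example `interval`) as assumptions. Compare it with your own copy rather than applying it as is. The controller-manager ServiceMonitor follows the same pattern, with `kube-controller-manager` in place of `kube-scheduler`.

```yaml
# Sketch of the kube-scheduler ServiceMonitor after the scheme edit
# (assumed to match kube-prometheus 0.8.0; verify against your manifest).
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: kube-scheduler
  name: kube-scheduler
  namespace: monitoring
spec:
  endpoints:
  - bearerTokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token   # left as shipped
    interval: 30s
    port: https-metrics          # Service port *name*; kept even though 10251 serves HTTP
    scheme: http                 # the only field changed by the edit
    tlsConfig:
      insecureSkipVerify: true   # left as shipped; TLS settings have no effect with scheme: http
  jobLabel: app.kubernetes.io/name   # job label = value of this Service label
  namespaceSelector:
    matchNames:
    - kube-system                # the hand-made Service must live in this namespace
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-scheduler   # the hand-made Service must carry this label
```

Because `jobLabel` takes the `job` value from the Service's `app.kubernetes.io/name` label, that label should be exactly `kube-scheduler` (or `kube-controller-manager`). As far as we can tell, kube-prometheus's built-in rules and dashboards select on those `job` values.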