1. Install the MySQL database

MySQL is deployed with Docker here. If Docker is not installed yet, install it first:

bash

# Install the yum utilities

yum install -y yum-utils device-mapper-persistent-data lvm2 --skip-broken

# Configure the Docker package repository

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's/download.docker.com/mirrors.aliyun.com\/docker-ce/g' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

# Install Docker

yum install -y docker-ce

# Check the Docker version

docker --version

# Start Docker

systemctl start docker

# Enable Docker at boot

systemctl enable docker

# Configure a registry mirror

vi /etc/docker/daemon.json

{

"registry-mirrors":["https://aa25jngu.mirror.aliyuncs.com"]

}

# Restart Docker

systemctl daemon-reload

systemctl restart docker

Install MySQL

bash

# Create the MySQL data directory

mkdir -p /docker/mysql/data

# Start the MySQL container

docker run -d --name mysql -p 3306:3306 -v /docker/mysql/data:/var/lib/mysql -e MYSQL_ROOT_PASSWORD=123456 mysql:5.7.44

2. Configure Hadoop

Add the following properties to core-site.xml:

bash

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

3. Download Hive

29 March 2024: release 4.0.0 available

- This release works with Hadoop 3.3.6, Tez 0.10.3

https://dlcdn.apache.org/hive/hive-4.0.0/apache-hive-4.0.0-bin.tar.gz

MySQL JDBC driver jar

https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.49/mysql-connector-java-5.1.49.jar
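
Both files can also be fetched from the command line. The sketch below assumes `wget` is installed and that the files are saved into the current directory. The helper names `file_name` and `fetch` are made up for illustration; the URLs are the two listed above. Older releases are sometimes moved off the main download host, so the Hive URL may need adjusting.

```shell
# URLs taken from the article.
HIVE_URL="https://dlcdn.apache.org/hive/hive-4.0.0/apache-hive-4.0.0-bin.tar.gz"
JDBC_URL="https://repo1.maven.org/maven2/mysql/mysql-connector-java/5.1.49/mysql-connector-java-5.1.49.jar"

# Hypothetical helper: the local file name a URL is saved under.
file_name() {
  basename "$1"
}

# Hypothetical helper: download a URL unless the file already exists locally.
fetch() {
  if [ -f "$(file_name "$1")" ]; then
    echo "already present: $(file_name "$1")"
  else
    wget "$1"
  fi
}
```

Running `fetch "$HIVE_URL"` and `fetch "$JDBC_URL"` in the working directory leaves both files ready for the unpack and move commands that follow.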

bash

[root@hadoop1 hadoop]# tar -zxvf apache-hive-4.0.0-bin.tar.gz -C /export/server/

[root@hadoop1 server]# ln -s /export/server/apache-hive-4.0.0-bin/ /export/server/hive

[root@hadoop1 export]# mv mysql-connector-java-5.1.49.jar /export/server/hive/lib/

[root@hadoop1 export]# chown -R hadoop:hadoop /export

4. Configure Hive

bash

[root@hadoop1 export]# vi /export/server/hive/conf/hive-env.sh

export HADOOP_HOME=/export/server/hadoop

export HIVE_CONF_DIR=/export/server/hive/conf

export HIVE_AUX_JARS_PATH=/export/server/hive/lib

hive-site.xml

bash

[root@hadoop1 conf]# vi /export/server/hive/conf/hive-site.xml

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop1:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false&amp;useUnicode=true&amp;characterEncoding=UTF-8</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>hadoop1</value>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://hadoop1:9083</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

</configuration>

Create the hive database in MySQL:

create database hive charset utf8;
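
Because MySQL runs in the container started in step 1, the `create database` statement above can be issued through `docker exec` without installing a MySQL client on the host. This is only a sketch: the container name `mysql` and the root password `123456` come from the `docker run` command earlier, while the function names are hypothetical. `IF NOT EXISTS` is added so the command can be repeated safely.

```shell
# Hypothetical helper: build the CREATE DATABASE statement for a given name.
hive_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS %s CHARACTER SET utf8;' "$1"
}

# Hypothetical helper: run the statement with the mysql client inside the container.
# Container name and password are the values used in the docker run command.
create_hive_db() {
  docker exec mysql mysql -uroot -p123456 -e "$(hive_db_sql hive)"
}
```

Calling `create_hive_db` once MySQL has finished starting should create the database. `docker exec mysql mysql -uroot -p123456 -e 'SHOW DATABASES;'` can be used to check the result.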

bash

[root@hadoop1 bin]# /export/server/hive/bin/schematool -initSchema -dbType mysql -verbose

[hadoop@hadoop1 data]$ mkdir -p hive/logs

[hadoop@hadoop1 bin]$ cd /export/server/hive/bin

[hadoop@hadoop1 bin]$ nohup ./hive --service metastore >> /data/hive/logs/metastore.log 2>&1 &

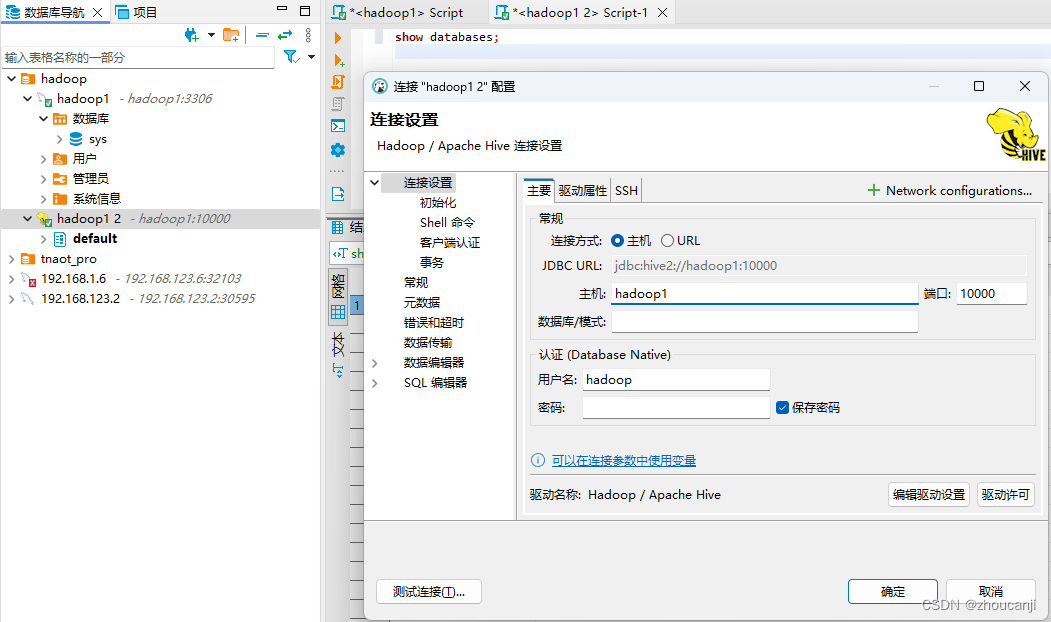

[hadoop@hadoop1 bin]$ nohup ./hive --service hiveserver2 >> /data/hive/logs/hiveserver2.log 2>&1 &

5. Use the built-in beeline client or the third-party DBeaver
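
HiveServer2 may need some time after launch before it accepts connections, so connecting too early can fail. A small wait loop is one way to handle that. The sketch below assumes bash (it relies on bash's `/dev/tcp` redirection) and port 10000, the port used in the connection URL in this article. `wait_for_port` is a hypothetical helper.

```shell
# Hypothetical helper: succeed once host:port accepts a TCP connection.
# Arguments: host, port, number of attempts (default 30), seconds between attempts (default 2).
wait_for_port() {
  local host="$1" port="$2" tries="${3:-30}" delay="${4:-2}"
  local i=0
  while [ "$i" -lt "$tries" ]; do
    # Opening /dev/tcp/HOST/PORT in a subshell attempts a TCP connection (bash feature).
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

`wait_for_port hadoop1 10000 && beeline` would then start the client only once the port is open. The metastore port 9083 can be checked the same way.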

bash

[hadoop@hadoop1 bin]$ beeline

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/apache-hive-4.0.0-bin/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/export/server/apache-hive-4.0.0-bin/lib/log4j-slf4j-impl-2.18.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/export/server/hadoop-3.3.6/share/hadoop/common/lib/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Beeline version 4.0.0 by Apache Hive

beeline> !connect jdbc:hive2://hadoop1:10000

Connecting to jdbc:hive2://hadoop1:10000

Enter username for jdbc:hive2://hadoop1:10000: hadoop

Enter password for jdbc:hive2://hadoop1:10000:

Connected to: Apache Hive (version 4.0.0)

Driver: Hive JDBC (version 4.0.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://hadoop1:10000> show databases;

INFO : Compiling command(queryId=hadoop_20240604004120_6c11b3b6-ce13-4c04-a1d2-6bc799a040a0): show databases

INFO : Semantic Analysis Completed (retrial = false)

INFO : Created Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hadoop_20240604004120_6c11b3b6-ce13-4c04-a1d2-6bc799a040a0); Time taken: 0.014 seconds

INFO : Concurrency mode is disabled, not creating a lock manager

INFO : Executing command(queryId=hadoop_20240604004120_6c11b3b6-ce13-4c04-a1d2-6bc799a040a0): show databases

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hadoop_20240604004120_6c11b3b6-ce13-4c04-a1d2-6bc799a040a0); Time taken: 0.009 seconds

+----------------+

| database_name  |

+----------------+

| default        |

+----------------+

1 row selected (0.201 seconds)

0: jdbc:hive2://hadoop1:10000>
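
For scripts, the same query can be run without the interactive prompt. The sketch below uses Beeline's `-u` (JDBC URL), `-n` (user name) and `-e` (query) options with the host, port and user from the session above. `hs2_url` and `run_hql` are hypothetical helper names, and `beeline` is assumed to be on the `PATH`.

```shell
# Hypothetical helper: build the HiveServer2 JDBC URL (port defaults to 10000).
hs2_url() {
  printf 'jdbc:hive2://%s:%s' "$1" "${2:-10000}"
}

# Hypothetical helper: run one HiveQL statement as user hadoop on host hadoop1.
run_hql() {
  beeline -u "$(hs2_url hadoop1)" -n hadoop -e "$1"
}
```

`run_hql 'show databases;'` should list the same `default` database as the interactive session.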