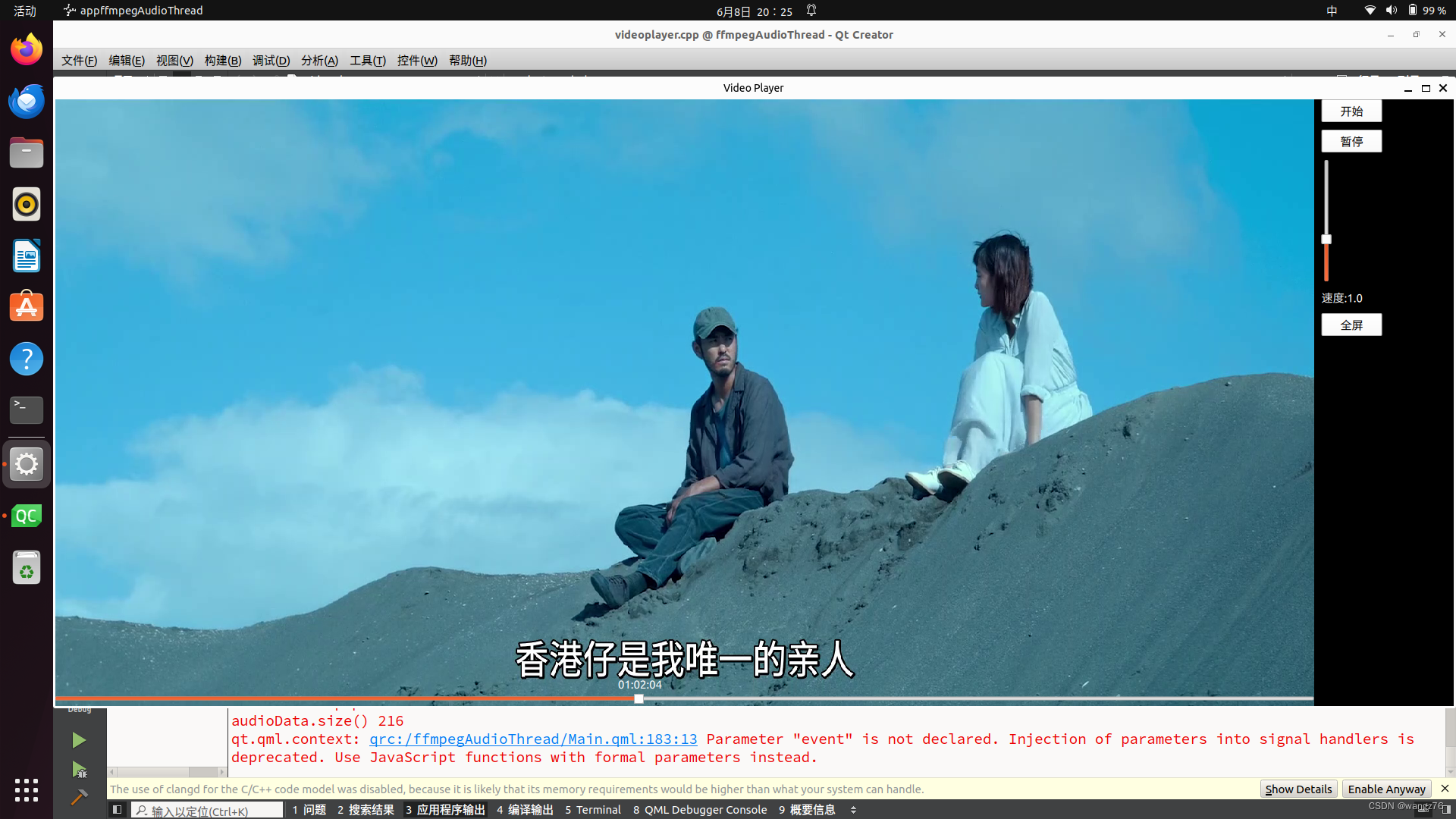

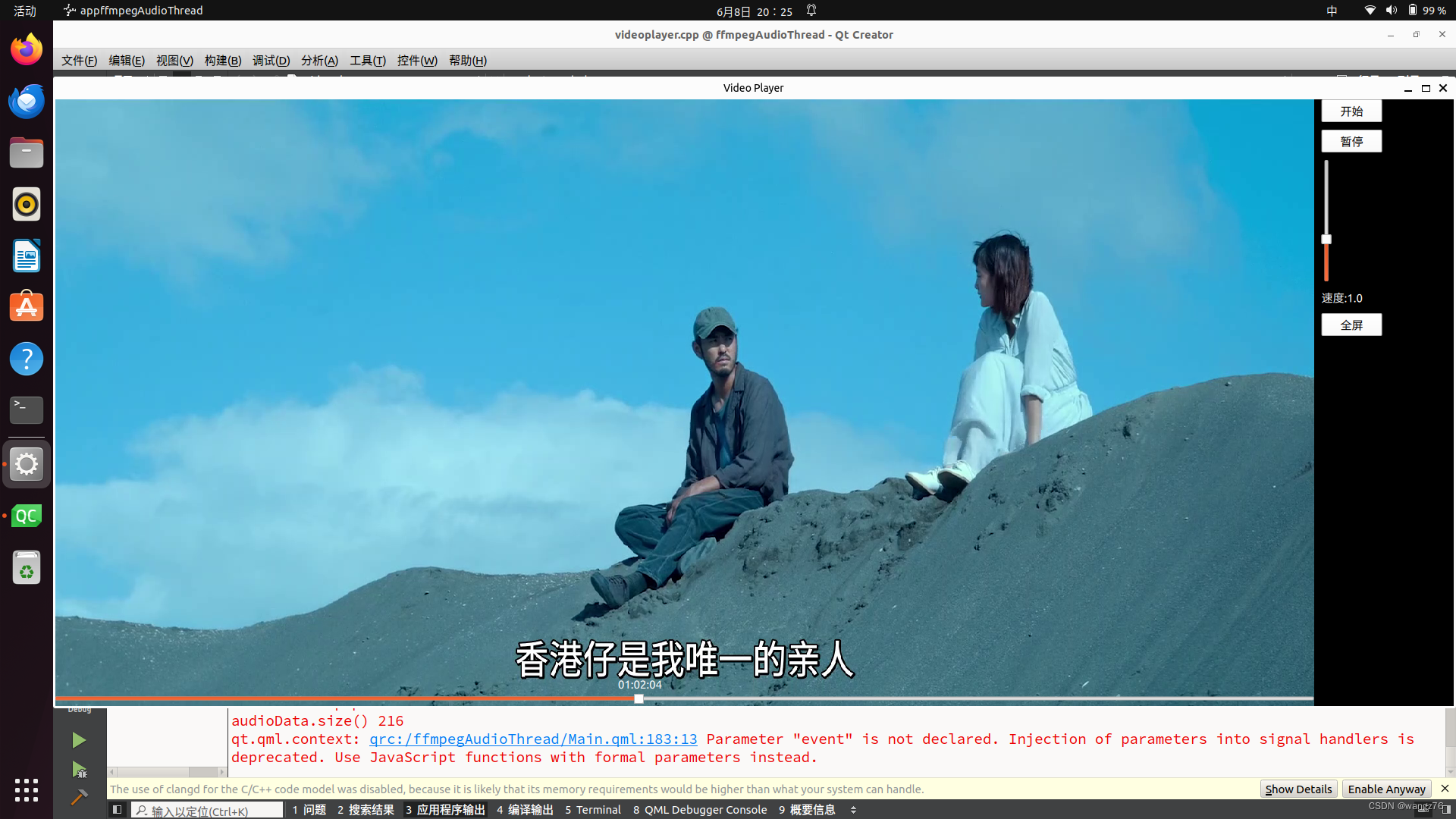

The screenshots above show the program running.

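The program was developed on Ubuntu. To build it on Windows, you only need to adjust CMakeLists.txt. Setting up FFmpeg on Windows was covered in an earlier article, so it is not repeated here.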

Source code:

GitHub - wangz1155/ffmpegAudioThread: an audio/video player with fast-forward, written with Qt 6, QML and FFmpeg

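If the code is hard to follow, you can paste it into an AI assistant and ask it to explain. It does not have to be ChatGPT or Copilot; Chinese assistants such as Tongyi, Tiangong, Doubao and Kimi work as well.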

Main files:

- CMakeLists.txt
- Main.qml
- main.cpp
- videoplayer.h
- videoplayer.cpp

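What you can learn from it:

1. CMake configuration.
2. Combined Qt/QML/C++ programming. The code uses a lot of raw pointers, so be especially careful with them. The program has been tested and basically works, but some experimental code is still in place and has not been fully cleaned up.
3. FFmpeg and its filters.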

## 1. Main contents of CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.16)

project(ffmpegAudioThread VERSION 0.1 LANGUAGES CXX)

set(CMAKE_CXX_STANDARD 17)
set(CMAKE_CXX_STANDARD_REQUIRED ON)
set(CMAKE_AUTOMOC ON)
set(CMAKE_AUTORCC ON)
set(CMAKE_AUTOUIC ON)

find_package(Qt6 6.4 REQUIRED COMPONENTS Quick Multimedia)

# CMake itself does not ship a FindFFmpeg module: this call needs a
# FindFFmpeg.cmake on CMAKE_MODULE_PATH (or an FFmpeg package config file)
# that defines FFMPEG_INCLUDE_DIRS.
find_package(FFmpeg REQUIRED)
include_directories(${FFMPEG_INCLUDE_DIRS})
# Ubuntu x86_64 library directory; the .so files are linked by full path below
set(FFMPEG_LIBRARIES /usr/lib/x86_64-linux-gnu)

qt_standard_project_setup()

qt_add_executable(appffmpegAudioThread
    main.cpp
)

qt_add_qml_module(appffmpegAudioThread
    URI ffmpegAudioThread
    VERSION 1.0
    QML_FILES
        Main.qml
    SOURCES videoplayer.h videoplayer.cpp
)

# Qt for iOS sets MACOSX_BUNDLE_GUI_IDENTIFIER automatically since Qt 6.1.
# If you are developing for iOS or macOS you should consider setting an
# explicit, fixed bundle identifier manually though.
set_target_properties(appffmpegAudioThread PROPERTIES
    MACOSX_BUNDLE_GUI_IDENTIFIER com.example.appffmpeg01
    MACOSX_BUNDLE_BUNDLE_VERSION ${PROJECT_VERSION}
    MACOSX_BUNDLE_SHORT_VERSION_STRING ${PROJECT_VERSION_MAJOR}.${PROJECT_VERSION_MINOR}
    MACOSX_BUNDLE TRUE
    WIN32_EXECUTABLE TRUE
)

target_link_libraries(appffmpegAudioThread
    PRIVATE Qt6::Quick Qt6::Multimedia
    ${FFMPEG_LIBRARIES}/libavformat.so
    ${FFMPEG_LIBRARIES}/libavcodec.so
    ${FFMPEG_LIBRARIES}/libavutil.so
    ${FFMPEG_LIBRARIES}/libswscale.so
    ${FFMPEG_LIBRARIES}/libswresample.so
    ${FFMPEG_LIBRARIES}/libavfilter.so
)

include(GNUInstallDirs)
install(TARGETS appffmpegAudioThread
    BUNDLE DESTINATION .
    LIBRARY DESTINATION ${CMAKE_INSTALL_LIBDIR}
    RUNTIME DESTINATION ${CMAKE_INSTALL_BINDIR}
)
```

## 2. Main contents of Main.qml

```qml
import QtQuick
import QtQuick.Window
import QtQuick.Controls
import QtQuick.Dialogs
import ffmpegAudioThread

Window {
    id: window001
    visible: true
    width: 900
    height: 600
    title: "Video Player"
    color: "black"

    // Milliseconds -> "HH:MM:SS"
    function formatTime(milliseconds) {
        var seconds = Math.floor(milliseconds / 1000);
        var minutes = Math.floor(seconds / 60);
        var hours = Math.floor(minutes / 60);
        seconds = seconds % 60;
        minutes = minutes % 60;
        // padStart makes sure every field always has two digits
        var formattedTime = hours.toString().padStart(2, '0') + ":" +
                minutes.toString().padStart(2, '0') + ":" +
                seconds.toString().padStart(2, '0');
        return formattedTime;
    }

    FileDialog {
        id: fileDialog
        onAccepted: {
            console.log(fileDialog.selectedFile)
            if (videoPlayer.loadFile(fileDialog.selectedFile)) {
                videoPlayer.play();
            }
        }
    }

    Row {
        anchors.fill: parent
        spacing: 10

        VideoPlayer {
            id: videoPlayer
            width: parent.width * 0.90
            height: parent.height

            onVideoWidthChanged: {
                window001.width = videoPlayer.videoWidth
            }
            onVideoHeightChanged: {
                window001.height = videoPlayer.videoHeight
            }
            onDurationChanged: {
                slider.to = videoPlayer.duration
            }
            onPositionChanged: {
                // Don't move the handle while the user is dragging it
                if (!slider.pressed) {
                    slider.value = videoPlayer.position
                }
            }

            // Progress bar, invisible (opacity 0) until the mouse hovers over it
            Slider {
                id: slider
                width: videoPlayer.width
                height: 20
                anchors.bottom: parent.bottom
                from: 0
                to: videoPlayer.duration
                value: videoPlayer.position
                visible: true
                opacity: 0

                onValueChanged: {
                    if (slider.pressed) {
                        var intValue = Math.floor(slider.value)
                        videoPlayer.setPosi(intValue)
                    }
                }

                // Qt 6: declare the handler parameter instead of relying on an injected 'event'
                Keys.onPressed: (event) => {
                    if (event.key === Qt.Key_Escape) {
                        window001.visibility = Window.Windowed
                        window001.width = videoPlayer.videoWidth
                        window001.height = videoPlayer.videoHeight
                        videoPlayer.width = window001.width * 0.90
                        videoPlayer.height = window001.height
                    }
                }

                MouseArea {
                    id: mouseArea
                    anchors.fill: parent
                    hoverEnabled: true
                    onEntered: {
                        slider.opacity = 1
                    }
                    onExited: {
                        slider.opacity = 0
                    }
                    // Click to seek: map mouse.x to a position between from and to
                    onClicked: (mouse) => {
                        var newPosition = mouse.x / width;
                        slider.value = slider.from + newPosition * (slider.to - slider.from);
                        var intValue = Math.floor(slider.value)
                        videoPlayer.setPosi(intValue)
                    }
                }

                // Current time, shown above the slider handle
                Label {
                    id: valueLabel
                    text: formatTime(slider.value)
                    color: "white"
                    x: slider.leftPadding + slider.visualPosition * (slider.width - width)
                    y: slider.topPadding - height
                }
            }
        }

        Column {
            id: column
            width: parent.width * 0.1
            height: parent.height
            spacing: 10
            anchors.verticalCenter: parent.verticalCenter

            Button {
                text: "Start"
                onClicked: {
                    fileDialog.open()
                }
            }

            Button {
                text: "Pause"
                onClicked: videoPlayer.pause()
            }

            Slider {
                id: playbackSpeedSlider
                from: 0.5
                to: 2.0
                value: 1.0
                stepSize: 0.1
                orientation: Qt.Vertical
                onValueChanged: {
                    playbackSpeedLabel.text = "Speed: " + playbackSpeedSlider.value.toFixed(1)
                    // toFixed() returns a string; pass a number to the C++ qreal parameter
                    videoPlayer.audioSpeed(Number(playbackSpeedSlider.value.toFixed(1)))
                }
            }

            Label {
                id: playbackSpeedLabel
                color: "white"
                text: "Speed: 1.0"
            }

            Button {
                text: "Full screen"
                onClicked: {
                    if (window001.visibility === Window.FullScreen) {
                        window001.visibility = Window.Windowed
                        window001.width = videoPlayer.videoWidth
                        window001.height = videoPlayer.videoHeight
                        videoPlayer.width = window001.width * 0.90
                        videoPlayer.height = window001.height
                    } else {
                        window001.visibility = Window.FullScreen
                        var v_width = videoPlayer.videoWidth
                        var v_height = videoPlayer.videoHeight
                        // Hard-coded for a 1920-pixel-wide screen
                        videoPlayer.width = 1920
                        videoPlayer.height = 1920 * (v_height / v_width)
                    }
                }
            }

            Keys.onPressed: (event) => {
                if (event.key === Qt.Key_Escape) {
                    window001.visibility = Window.Windowed
                    window001.width = videoPlayer.videoWidth
                    window001.height = videoPlayer.videoHeight
                    videoPlayer.width = window001.width * 0.90
                    videoPlayer.height = window001.height
                }
            }
        }
    }
}
```
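The QML `formatTime()` function and the MouseArea's click-to-seek handler are plain arithmetic. If you would rather keep helpers like these in C++ (for example, to unit-test them), a minimal sketch could look like the following. `formatTime` and `clickToPosition` are hypothetical free functions here and are not part of the project.

```cpp
#include <cstdint>
#include <cstdio>
#include <string>

// Same result as the QML formatTime(): milliseconds -> "HH:MM:SS"
std::string formatTime(std::int64_t milliseconds) {
    std::int64_t seconds = milliseconds / 1000;
    std::int64_t minutes = seconds / 60;
    std::int64_t hours = minutes / 60;
    seconds %= 60;
    minutes %= 60;
    char buf[32];
    // %02lld pads each field to two digits, like padStart(2, '0')
    std::snprintf(buf, sizeof(buf), "%02lld:%02lld:%02lld",
                  static_cast<long long>(hours),
                  static_cast<long long>(minutes),
                  static_cast<long long>(seconds));
    return buf;
}

// Same mapping as the MouseArea onClicked handler: x in [0, width]
// becomes a position in [from, to], truncated to whole milliseconds
std::int64_t clickToPosition(double x, double width, double from, double to) {
    if (width <= 0) return static_cast<std::int64_t>(from);  // avoid dividing by zero
    double ratio = x / width;
    return static_cast<std::int64_t>(from + ratio * (to - from));
}
```

For instance, `formatTime(3723000)` gives `"01:02:03"`, and a click at x = 450 on a 900-pixel bar that spans 0 to 60000 ms gives 30000.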

## 3. Main contents of main.cpp

```cpp
#include <QGuiApplication>
#include <QQmlApplicationEngine>

int main(int argc, char *argv[])
{
    QGuiApplication app(argc, argv);
    QQmlApplicationEngine engine;
    const QUrl url(QStringLiteral("qrc:/ffmpegAudioThread/Main.qml"));
    // Quit with an error code if Main.qml cannot be loaded
    QObject::connect(
        &engine,
        &QQmlApplicationEngine::objectCreationFailed,
        &app,
        []() { QCoreApplication::exit(-1); },
        Qt::QueuedConnection);
    engine.load(url);
    return app.exec();
}
```

## 4. videoplayer.h and videoplayer.cpp: the core of the program

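Two classes are defined. `class AudioThread : public QThread` inherits from QThread and is meant to run audio playback on its own thread. `class VideoPlayer : public QQuickPaintedItem` inherits from QQuickPaintedItem. Its main job is to draw a QImage inside QML, which is how the video is displayed.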
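AudioThread declares a `QMutex`, a `QWaitCondition` and a packet queue, and `processAudio()` contains a commented-out `condition.wait()`. That is the classic producer/consumer arrangement: the demuxing side enqueues packets and the audio side waits for them. Below is a minimal sketch of the pattern in standard C++ (`std::mutex`, `std::condition_variable`), with any payload type standing in for `AVPacket*`. `BlockingQueue` is a hypothetical name and is not part of the project.

```cpp
#include <condition_variable>
#include <mutex>
#include <optional>
#include <queue>
#include <utility>

// Producer/consumer queue: push() from the demuxer, pop() from the audio worker
template <typename T>
class BlockingQueue {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(value));
        }
        cond_.notify_one();  // wake one waiting consumer
    }

    // Blocks until an item is available or stop() has been called.
    // Returns std::nullopt only once the queue is stopped and empty.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return stopped_ || !queue_.empty(); });
        if (queue_.empty()) return std::nullopt;
        T value = std::move(queue_.front());
        queue_.pop();
        return value;
    }

    // Counterpart of shouldStop = true + condition.wakeAll()
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        cond_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::queue<T> queue_;
    bool stopped_ = false;
};
```

With this layout the consumer blocks in `pop()` on its own thread instead of polling on a timer. `stop()` still lets it drain packets that are already queued before it exits.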

### (1) videoplayer.h

```cpp
#ifndef VIDEOPLAYER_H
#define VIDEOPLAYER_H

#include <QQuickPaintedItem>
#include <QImage>
#include <QPainter>
#include <QTimer>
#include <QThread>
#include <QMutex>
#include <QWaitCondition>
#include <QQueue>
#include <QAudioSink>
#include <QAudioFormat>
#include <QAudioDevice>
#include <QMediaDevices>
#include <QDebug>

extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libavutil/imgutils.h>
#include <libavutil/opt.h>
#include <libswscale/swscale.h>
#include <libswresample/swresample.h>
#include <libavfilter/avfilter.h>
#include <libavfilter/buffersrc.h>
#include <libavfilter/buffersink.h>
}

// One chunk of decoded, filtered PCM audio
struct AudioData {
    QByteArray buffer;
    qint64 pts;        // ms
    qint64 duration;   // ms
};

class AudioThread : public QThread
{
    Q_OBJECT
    QML_ELEMENT
public:
    AudioThread(QObject *parent = nullptr);
    ~AudioThread();
    void run() override;
    void cleanQueue();
    void setCustomTimebase(qint64 *timebase);
    qint64 audioTimeLine = 0;
    void conditionWakeAll();
    void pause();
    void resume();
    int init_filters(const char *filters_descr);
    void stop();
    void deleteAudioSink();
    void initAudioThread();
    QAudioFormat::SampleFormat ffmpegToQtSampleFormat(AVSampleFormat ffmpegFormat);

signals:
    void audioFrameReady(qint64 pts);
    void audioProcessed();
    void sendAudioTimeLine(qint64 timeLine);

private slots:
    void processAudio();

public slots:
    void handleAudioPacket(AVPacket *packet);
    void receiveAudioParameter(AVFormatContext *format_Ctx, AVCodecContext *audioCodec_Ctx, int *audioStream_Index);
    void setPlaybackSpeed(double speed);

private:
    AVFormatContext *formatCtx = nullptr;
    int *audioStreamIndex = nullptr;
    AVCodecContext *audioCodecCtx;     // audio decoder context
    SwrContext *swrCtx;                // audio resampling context
    QAudioSink *audioSink;             // audio output device
    QIODevice *audioIODevice;          // I/O interface of the audio device
    QMutex mutex;
    QWaitCondition condition;
    bool shouldStop = false;
    qint64 audioClock = 0;             /**< audio clock */
    qint64 *audioTimebase = nullptr;
    bool pauseFlag = false;
    QQueue<AudioData> audioData;       // filtered PCM chunks waiting for the sink
    QQueue<AVPacket *> packetQueue;    // audio packets waiting to be decoded
    AVFilterContext *buffersink_ctx = nullptr;
    AVFilterContext *buffersrc_ctx = nullptr;
    AVFilterGraph *filter_graph = nullptr;
    qint64 originalPts = 0;
    double playbackSpeed = 2.0;
    char filters_descr[64] = {0};
    int data_size = 0;
    QMediaDevices *outputDevices = nullptr;
    QAudioDevice outputDevice;
    QAudioFormat format;
    QTimer *timer = nullptr;
    bool timerFlag = false;
};

class VideoPlayer : public QQuickPaintedItem
{
    Q_OBJECT
    QML_ELEMENT
    Q_PROPERTY(int videoWidth READ videoWidth NOTIFY videoWidthChanged)
    Q_PROPERTY(int videoHeight READ videoHeight NOTIFY videoHeightChanged)
    Q_PROPERTY(qint64 duration READ duration NOTIFY durationChanged)
    Q_PROPERTY(qint64 position READ position WRITE setPosition NOTIFY positionChanged)

public:
    VideoPlayer(QQuickItem *parent = nullptr);
    ~VideoPlayer();
    Q_INVOKABLE bool loadFile(const QString &fileName);
    Q_INVOKABLE void play();
    Q_INVOKABLE void pause();
    Q_INVOKABLE void stop();
    Q_INVOKABLE void setPosi(qint64 position);   // seek; position in ms
    Q_INVOKABLE void audioSpeed(qreal speed);
    int videoWidth() const { return m_videoWidth; }
    int videoHeight() const { return m_videoHeight; }
    qint64 duration() const { return m_duration; }
    qint64 position() const { return m_position; }
    void setPosition(int p);
    void cleanVideoPacketQueue();
    qint64 turnPoint = 0;
    void delay(int milliseconds);

signals:
    void videoWidthChanged();
    void videoHeightChanged();
    void durationChanged(qint64 duration);
    void positionChanged(qint64 position);
    void deliverPacketToAudio(AVPacket *deliverPacket);
    void sendAudioParameter(AVFormatContext *formatCtx, AVCodecContext *audioCodecCtx, int *audioStreamIndex);
    void sendSpeed(double speed);

protected:
    void paint(QPainter *painter) override;

public slots:
    void receiveAudioTimeLine(qint64 timeLine);

private slots:
    void onTimeout();

private:
    void cleanup();
    void decodeVideo();
    AVFormatContext *formatCtx = nullptr;
    AVCodecContext *videoCodecCtx = nullptr;
    SwsContext *swsCtx = nullptr;
    AVCodecContext *audioCodecCtx = nullptr;
    SwrContext *swrCtx = nullptr;
    QImage currentImage;
    QTimer *timer = nullptr;
    QTimer *syncTimer = nullptr;
    int videoStreamIndex = -1;
    int audioStreamIndex = -1;
    AudioThread *audioThread = nullptr;
    AVPacket *audioPacket = nullptr;
    qint64 audioClock = 0;   /**< audio clock */
    qint64 videoClock = 0;   /**< video clock */
    QMutex mutex;
    double audioPts = 0;
    QQueue<AVFrame *> videoQueue;          // decoded frames; emptied in cleanup()
    QQueue<AVPacket *> videoPacketQueue;   // video packets waiting until they are due
    int m_videoWidth = 0;
    int m_videoHeight = 0;
    qint64 m_duration = 0;
    qint64 m_position = 0;
    qint64 customTimebase = 0;   // video clock in ms, driven by the audio timeline
};

#endif // VIDEOPLAYER_H
```

### (2) videoplayer.cpp

```cpp
#include "videoplayer.h"
#include <QCoreApplication>
#include <QTime>

AudioThread::AudioThread(QObject *parent)
    : QThread(parent),
      audioCodecCtx(nullptr),
      swrCtx(nullptr),
      audioSink(nullptr),
      audioIODevice(nullptr),
      shouldStop(false),
      pauseFlag(false),
      buffersink_ctx(nullptr),
      buffersrc_ctx(nullptr),
      filter_graph(nullptr),
      playbackSpeed(1.0),
      data_size(0) {
}

AudioThread::~AudioThread() {
    shouldStop = true;
    condition.wakeAll();
    quit();   // leave exec() so that wait() can return
    wait();
}

// Set the playback speed
void AudioThread::setPlaybackSpeed(double speed)
{
    playbackSpeed = speed;
    qDebug() << "playbackSpeed" /* … */;
    // … (the rest of this function, the other AudioThread members and the
    //    beginning of init_filters() are omitted here; see the GitHub repository)

    // channels / channel_layout: FFmpeg 4.x API (deprecated in 5.1, removed in 7.0)
    if (!audioCodecCtx->channel_layout)
        audioCodecCtx->channel_layout =
            av_get_default_channel_layout(audioCodecCtx->channels);
    // C++11 requires a space between the string literal and the PRIx64 macro
    snprintf(args, sizeof(args),
             "time_base=%d/%d:sample_rate=%d:sample_fmt=%s:channel_layout=0x%" PRIx64,
             audioCodecCtx->time_base.num, audioCodecCtx->time_base.den, audioCodecCtx->sample_rate,
             av_get_sample_fmt_name(audioCodecCtx->sample_fmt), audioCodecCtx->channel_layout);
    ret = avfilter_graph_create_filter(&buffersrc_ctx, buffersrc, "in",
                                       args, nullptr, filter_graph);
    if (ret < 0) {
        av_log(nullptr, AV_LOG_ERROR, "Cannot create buffer source\n");
        goto end;
    }
    ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",
                                       nullptr, nullptr, filter_graph);
    if (ret < 0) {
        av_log(nullptr, AV_LOG_ERROR, "Cannot create buffer sink\n");
        goto end;
    }
    // The buffer source feeds the default input label "in" of filters_descr ...
    outputs->name       = av_strdup("in");
    outputs->filter_ctx = buffersrc_ctx;
    outputs->pad_idx    = 0;
    outputs->next       = nullptr;
    // ... and the default output label "out" of filters_descr feeds the buffer sink
    inputs->name       = av_strdup("out");
    inputs->filter_ctx = buffersink_ctx;
    inputs->pad_idx    = 0;
    inputs->next       = nullptr;
    if ((ret = avfilter_graph_parse_ptr(filter_graph, filters_descr,
                                        &inputs, &outputs, nullptr)) < 0)
        goto end;
    if ((ret = avfilter_graph_config(filter_graph, nullptr)) < 0)
        goto end;
end:
    avfilter_inout_free(&inputs);
    avfilter_inout_free(&outputs);
    if (ret < 0 && filter_graph) {
        avfilter_graph_free(&filter_graph);
        filter_graph = nullptr;
    }
    return ret;
}
```
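The filter description is built with `snprintf(filters_descr, ..., "atempo=%.1f", playbackSpeed)`. That is enough for the QML speed slider, whose range is 0.5 to 2.0. Older FFmpeg releases, however, only accept [0.5, 2.0] for a single `atempo` instance, and the FFmpeg filter documentation describes daisy-chaining several `atempo` instances to reach other factors. The sketch below shows one way to build such a chain. `buildAtempoChain` is a hypothetical helper and is not part of the project.

```cpp
#include <cstdio>
#include <stdexcept>
#include <string>

// Build an atempo filter description for any positive speed by chaining
// instances whose factors each stay within [0.5, 2.0]
std::string buildAtempoChain(double speed) {
    if (!(speed > 0.0)) throw std::invalid_argument("speed must be positive");
    std::string chain;
    auto append = [&chain](double factor) {
        char buf[32];
        std::snprintf(buf, sizeof(buf), "atempo=%.1f", factor);  // same format as init code
        if (!chain.empty()) chain += ',';
        chain += buf;
    };
    while (speed > 2.0) { append(2.0); speed /= 2.0; }
    while (speed < 0.5) { append(0.5); speed /= 0.5; }
    append(speed);  // the remaining factor now lies within [0.5, 2.0]
    return chain;
}
```

For example, 4.0 becomes `"atempo=2.0,atempo=2.0"`. Like the original code, the result prints each factor with one decimal. Keep in mind that `filters_descr` is a 64-byte buffer.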

```cpp
// Audio playback: driven by the 10 ms timer started in run()
void AudioThread::processAudio()
{
    if (shouldStop) {
        quit();
        return;
    }
    //QMutexLocker locker(&mutex);
    if (pauseFlag) {
        return;
    }

    // 1) Write the next PCM chunk if the sink has room for all of it
    int bytesFree = audioSink->bytesFree();
    if (!audioData.isEmpty() && bytesFree >= audioData.head().buffer.size()) {
        AudioData dataTemp = audioData.dequeue();
        // Audio clock (ms): chunk pts + chunk duration + estimated delay of the sink buffer
        audioTimeLine = dataTemp.pts + dataTemp.duration
                      + audioSink->bufferSize() / data_size * dataTemp.duration;
        emit sendAudioTimeLine(audioTimeLine);
        qDebug() << "audioTimeLine" << audioTimeLine;
        audioIODevice->write(dataTemp.buffer);
    } else {
        qDebug() << "duration_error";
    }

    // 2) Decode one packet and run its frames through the atempo filter graph
    if (packetQueue.isEmpty()) {
        qDebug() << "packetQueue.isEmpty()";
        return;
    }
    AVPacket *packet = packetQueue.dequeue();
    AVFrame *frame = av_frame_alloc();
    AVFrame *filt_frame = av_frame_alloc();
    if (!frame || !filt_frame) {
        qWarning() << "Cannot allocate audio frame";
        av_frame_free(&frame);
        av_frame_free(&filt_frame);
        av_packet_free(&packet);
        return;
    }
    int ret = avcodec_send_packet(audioCodecCtx, packet);
    av_packet_free(&packet);   // unref + free; the decoder references or copies what it needs
    if (ret < 0) {
        qWarning() << "Cannot send audio packet to the decoder";
        av_frame_free(&frame);
        av_frame_free(&filt_frame);
        return;
    }
    // One packet can decode to several frames, so drain the decoder
    while ((ret = avcodec_receive_frame(audioCodecCtx, frame)) >= 0) {
        originalPts = frame->pts * av_q2d(formatCtx->streams[*audioStreamIndex]->time_base) * 1000;
        // av_buffersrc_add_frame() takes over the frame's data and resets 'frame'
        if (av_buffersrc_add_frame(buffersrc_ctx, frame) < 0) {
            qWarning() << "Cannot feed the audio frame into the filter graph";
            break;
        }
        // Pull every frame the filter graph has ready (EAGAIN/EOF ends the loop)
        while (av_buffersink_get_frame(buffersink_ctx, filt_frame) >= 0) {
            int size = av_samples_get_buffer_size(nullptr, filt_frame->channels,
                                                  filt_frame->nb_samples,
                                                  (AVSampleFormat)filt_frame->format, 1);
            if (filt_frame->nb_samples > 0 && filt_frame->data[0] && size > 0) {
                data_size = size;
                AudioData chunk;
                chunk.duration = filt_frame->nb_samples * 1000 / filt_frame->sample_rate;
                chunk.pts = originalPts;
                // data[0] holds every channel only for packed (interleaved) formats
                chunk.buffer = QByteArray((const char *)filt_frame->data[0], data_size);
                audioData.enqueue(chunk);
                qDebug() << "audioData.size()" << audioData.size();
            } else {
                qWarning() << "Empty or invalid filtered audio frame";
            }
            av_frame_unref(filt_frame);   // reuse the same AVFrame for the next pull
        }
    }
    if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
        qWarning() << "Cannot receive decoded audio frame";
    av_frame_free(&frame);
    av_frame_free(&filt_frame);
    emit audioProcessed();
}
```
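`processAudio()` estimates the audio clock as the chunk's pts, plus its duration, plus the data still queued in the sink: `pts + duration + bufferSize() / data_size * duration`. The arithmetic is easier to check on its own. The sketch below reproduces it with plain integers; the example figures (1024-sample chunks of stereo 32-bit float at 44.1 kHz and a 32768-byte sink buffer) are assumptions chosen for illustration.

```cpp
#include <cstdint>

// Duration of one chunk in ms, as in processAudio(): nb_samples * 1000 / sample_rate
std::int64_t chunkDurationMs(int nbSamples, int sampleRate) {
    return static_cast<std::int64_t>(nbSamples) * 1000 / sampleRate;
}

// Bytes in one chunk: nb_samples * channels * bytes_per_sample, which is what
// av_samples_get_buffer_size(..., align = 1) returns
int chunkBytes(int nbSamples, int channels, int bytesPerSample) {
    return nbSamples * channels * bytesPerSample;
}

// Audio clock estimate used for A/V sync (ms)
std::int64_t estimateAudioClockMs(std::int64_t chunkPtsMs, std::int64_t durationMs,
                                  int sinkBufferBytes, int bytesPerChunk) {
    // Integer division, like bufferSize() / data_size in processAudio()
    std::int64_t chunksInSink = sinkBufferBytes / bytesPerChunk;
    return chunkPtsMs + durationMs + chunksInSink * durationMs;
}
```

With these numbers a chunk lasts 23 ms and holds 8192 bytes, so a chunk stamped 1000 ms yields a clock of 1000 + 23 + 4 × 23 = 1115 ms. `QAudioSink::bufferSize()` is the total buffer size rather than the filled part, so the formula effectively assumes a full buffer. `bufferSize() - bytesFree()` would probably be a closer measure of the data still waiting to be played.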

```cpp
// Map an FFmpeg sample format to the matching Qt sample format
QAudioFormat::SampleFormat AudioThread::ffmpegToQtSampleFormat(AVSampleFormat ffmpegFormat) {
    switch (ffmpegFormat) {
    case AV_SAMPLE_FMT_U8:  return QAudioFormat::UInt8;
    case AV_SAMPLE_FMT_S16: return QAudioFormat::Int16;
    case AV_SAMPLE_FMT_S32: return QAudioFormat::Int32;
    case AV_SAMPLE_FMT_FLT: return QAudioFormat::Float;
    case AV_SAMPLE_FMT_DBL:  // Qt has no 64-bit float sample format
    case AV_SAMPLE_FMT_U8P:  // planar formats
    case AV_SAMPLE_FMT_S16P: // planar formats
    case AV_SAMPLE_FMT_S32P: // planar formats
    case AV_SAMPLE_FMT_FLTP: // planar formats
    case AV_SAMPLE_FMT_DBLP: // planar formats
    // Fallback: only correct if the samples written to the sink really are
    // packed 32-bit float (e.g. FLTP input that the filter graph turned into FLT)
    default: return QAudioFormat::Float;
    }
}
```
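`ffmpegToQtSampleFormat()` maps every planar format to `QAudioFormat::Float`, while `processAudio()` copies only `filt_frame->data[0]`. For planar audio, `data[0]` holds just the first channel, but QAudioSink expects interleaved samples (L R L R ...). The mapping therefore works only when the filter graph hands back packed samples. If planar data ever had to reach the sink, it would first need interleaving, roughly as in this standard-C++ sketch. `interleave` is a hypothetical helper; in an FFmpeg pipeline you would normally let libswresample or an `aformat` filter do the conversion.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Planar: one buffer per channel. Interleaved: frames of [ch0, ch1, ...].
std::vector<float> interleave(const std::vector<std::vector<float>> &planes) {
    if (planes.empty()) return {};
    const std::size_t samples = planes[0].size();
    for (const auto &p : planes)
        if (p.size() != samples) throw std::invalid_argument("channel lengths differ");
    std::vector<float> out;
    out.reserve(samples * planes.size());
    for (std::size_t i = 0; i < samples; ++i)   // one output frame per sample index
        for (const auto &p : planes)
            out.push_back(p[i]);
    return out;
}
```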

```cpp
// (Re)initialise audio output and the filter graph for a newly loaded file
void AudioThread::initAudioThread(){
    if (filter_graph != nullptr) {
        avfilter_graph_free(&filter_graph);
    }
    timerFlag = true;
    snprintf(filters_descr, sizeof(filters_descr), "atempo=%.1f", playbackSpeed);
    // defaultAudioOutput() is static, so no QMediaDevices object has to be allocated
    outputDevice = QMediaDevices::defaultAudioOutput();
    //format=outputDevice.preferredFormat();
    format.setSampleRate(audioCodecCtx->sample_rate);
    format.setChannelCount(audioCodecCtx->channels);
    //format.setSampleFormat(QAudioFormat::Float);
    format.setSampleFormat(ffmpegToQtSampleFormat(audioCodecCtx->sample_fmt));
    audioSink = new QAudioSink(outputDevice, format);
    audioIODevice = audioSink->start();
    if (init_filters(filters_descr) < 0) {
        qWarning() << "Cannot initialise the filter graph";
        return;
    }
}

// Thread entry point: initialise audio, then start the timer
void AudioThread::run() {
    timerFlag = true;
    snprintf(filters_descr, sizeof(filters_descr), "atempo=%.1f", playbackSpeed);
    outputDevice = QMediaDevices::defaultAudioOutput();
    //format=outputDevice.preferredFormat();
    format.setSampleRate(audioCodecCtx->sample_rate);
    format.setChannelCount(audioCodecCtx->channels);
    //format.setSampleFormat(QAudioFormat::Float);
    format.setSampleFormat(ffmpegToQtSampleFormat(audioCodecCtx->sample_fmt));
    audioSink = new QAudioSink(outputDevice, format);
    audioIODevice = audioSink->start();
    if (init_filters(filters_descr) < 0) {
        qWarning() << "Cannot initialise the filter graph";
        return;
    }
    // The timer is created in this worker thread. It gets no parent because
    // 'this' (the AudioThread object) lives in the GUI thread, and Qt does not
    // allow parenting an object to one that lives in a different thread.
    timer = new QTimer;
    // Note: because 'this' lives in the GUI thread, the default AutoConnection
    // queues timeout() to the GUI thread, so processAudio() actually runs there.
    connect(timer, &QTimer::timeout, this, &AudioThread::processAudio);
    timer->start(10); // fires every 10 ms
    exec();
    delete timer;
    timer = nullptr;
    avfilter_graph_free(&filter_graph);
}
```
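In `run()`, the timer is created on the worker thread, but the AudioThread object itself was constructed in VideoPlayer's constructor and therefore belongs to the GUI thread. With Qt's default AutoConnection the timeout is queued to the GUI thread, so `processAudio()` executes there rather than on the worker. For comparison, here is a minimal standard-C++ sketch of a worker whose periodic job really runs on its own thread and stops on a flag, the way `shouldStop` is meant to work. `PeriodicWorker` is hypothetical. In Qt, the usual equivalent is a worker QObject moved to the thread with `moveToThread()`.

```cpp
#include <atomic>
#include <chrono>
#include <functional>
#include <thread>
#include <utility>

// Calls 'tick' every 'period' on its own std::thread until stop() is called
class PeriodicWorker {
public:
    PeriodicWorker(std::chrono::milliseconds period, std::function<void()> tick)
        : period_(period), tick_(std::move(tick)), thread_([this] { loop(); }) {}

    ~PeriodicWorker() { stop(); }

    void stop() {
        stop_ = true;                              // like shouldStop = true
        if (thread_.joinable()) thread_.join();    // like QThread::wait()
    }

private:
    void loop() {
        auto next = std::chrono::steady_clock::now();
        while (!stop_) {
            tick_();                               // e.g. the body of processAudio()
            next += period_;
            std::this_thread::sleep_until(next);   // fixed-rate schedule
        }
    }

    std::chrono::milliseconds period_;
    std::function<void()> tick_;
    std::atomic<bool> stop_{false};
    std::thread thread_;   // declared last, so it starts after the members above exist
};
```

Because the tick runs on the worker, any queue it shares with the GUI thread must then be protected, for example with a queue like the producer/consumer one described earlier.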

```cpp
VideoPlayer::VideoPlayer(QQuickItem *parent)
    : QQuickPaintedItem(parent),
      formatCtx(nullptr),
      videoCodecCtx(nullptr),
      swsCtx(nullptr),
      audioCodecCtx(nullptr),
      swrCtx(nullptr),
      timer(new QTimer(this)),
      audioThread(new AudioThread(this)),
      customTimebase(0) {
    connect(timer, &QTimer::timeout, this, &VideoPlayer::onTimeout);
    connect(this, &VideoPlayer::deliverPacketToAudio, audioThread, &AudioThread::handleAudioPacket);
    connect(audioThread, &AudioThread::sendAudioTimeLine, this, &VideoPlayer::receiveAudioTimeLine);
    connect(this, &VideoPlayer::sendAudioParameter, audioThread, &AudioThread::receiveAudioParameter);
    connect(this, &VideoPlayer::sendSpeed, audioThread, &AudioThread::setPlaybackSpeed);
    avformat_network_init();
    // Explicit registration is only needed before FFmpeg 4.0;
    // both functions were removed in FFmpeg 5.0.
#if LIBAVFORMAT_VERSION_MAJOR < 58
    av_register_all();        // register all formats and codecs
#endif
#if LIBAVFILTER_VERSION_MAJOR < 7
    avfilter_register_all();
#endif
}

VideoPlayer::~VideoPlayer() {
    stop();
    audioThread->quit();
    audioThread->wait();
    delete audioThread;
}

// Open a video file. If this succeeds, the QML side calls play().
// The file itself is chosen with the QML FileDialog.
bool VideoPlayer::loadFile(const QString &fileName) {
    stop();
    // Forget the stream indices of a previously opened file
    videoStreamIndex = -1;
    audioStreamIndex = -1;
    formatCtx = avformat_alloc_context();
    if (avformat_open_input(&formatCtx, fileName.toStdString().c_str(), nullptr, nullptr) != 0) {
        qWarning() << "Cannot open file";
        return false;
    }
    if (avformat_find_stream_info(formatCtx, nullptr) < 0) {
        qWarning() << "Cannot read stream information";
        return false;
    }
    // Pick the video and audio streams (the last stream of each type wins)
    for (unsigned int i = 0; i < formatCtx->nb_streams; ++i) {
        if (formatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStreamIndex = i;
        } else if (formatCtx->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {
            audioStreamIndex = i;
        }
    }
    if (videoStreamIndex == -1) {
        qWarning() << "No video stream found";
        return false;
    }
    if (audioStreamIndex == -1) {
        qWarning() << "No audio stream found";
        return false;
    }
    // const AVCodec *: avcodec_find_decoder() returns a const pointer since FFmpeg 5.0
    const AVCodec *videoCodec = avcodec_find_decoder(formatCtx->streams[videoStreamIndex]->codecpar->codec_id);
    if (!videoCodec) {
        qWarning() << "No video decoder found";
        return false;
    }
    videoCodecCtx = avcodec_alloc_context3(videoCodec);
    avcodec_parameters_to_context(videoCodecCtx, formatCtx->streams[videoStreamIndex]->codecpar);
    if (avcodec_open2(videoCodecCtx, videoCodec, nullptr) < 0) {
        qWarning() << "Cannot open video decoder";
        return false;
    }
    // Converts decoded frames to RGB24 for QImage
    swsCtx = sws_getContext(videoCodecCtx->width, videoCodecCtx->height, videoCodecCtx->pix_fmt,
                            videoCodecCtx->width, videoCodecCtx->height, AV_PIX_FMT_RGB24,
                            SWS_BILINEAR, nullptr, nullptr, nullptr);
    const AVCodec *audioCodec = avcodec_find_decoder(formatCtx->streams[audioStreamIndex]->codecpar->codec_id);
    if (!audioCodec) {
        qWarning() << "No audio decoder found";
        return false;
    }
    audioCodecCtx = avcodec_alloc_context3(audioCodec);
    if (avcodec_parameters_to_context(audioCodecCtx, formatCtx->streams[audioStreamIndex]->codecpar) < 0) {
        qWarning() << "Cannot copy the audio codec parameters";
        return false;
    }
    if (avcodec_open2(audioCodecCtx, audioCodec, nullptr) < 0) {
        qWarning() << "Cannot open audio decoder";
        return false;
    }
    emit sendAudioParameter(formatCtx, audioCodecCtx, &audioStreamIndex);
    if (!audioThread->isRunning()) {
        audioThread->start();
    } else {
        audioThread->initAudioThread();
    }
    audioThread->resume();
    // duration is in AV_TIME_BASE units (µs); multiply before dividing to keep the milliseconds
    m_duration = formatCtx->duration != AV_NOPTS_VALUE
                 ? formatCtx->duration * 1000 / AV_TIME_BASE : 0;
    emit durationChanged(m_duration);
    customTimebase = 0;
    return true;
}
```

```cpp
void VideoPlayer::play() {
    if (!timer->isActive()) {
        // 1000 / 150 = 6 ms (integer division). Reading packets this often keeps
        // enough data flowing at 2x speed and avoids stutter.
        timer->start(1000 / 150);
    }
}

// Toggle pause/resume for both video and audio
void VideoPlayer::pause() {
    if (timer->isActive()) {
        timer->stop();
        audioThread->pause();
    } else {
        timer->start();   // restarts with the previous interval
        audioThread->resume();
    }
}

void VideoPlayer::stop() {
    if (timer->isActive()) {
        timer->stop();
    }
    cleanup();
}

// Draw the current video frame
void VideoPlayer::paint(QPainter *painter) {
    if (!currentImage.isNull()) {
        painter->drawImage(boundingRect(), currentImage);
    }
}
```
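The comment in `play()` says that 150 reads per second leave enough headroom at 2x speed. `onTimeout()` reads one packet per tick, so a quick back-of-the-envelope check is possible. The sketch assumes 25 fps video and AAC audio at 44.1 kHz with 1024 samples per packet; these are example figures, not properties of any particular file. Note also that `1000 / 150` is integer division, so the timer actually fires every 6 ms.

```cpp
// Timer ticks per second for an integer interval in ms
double ticksPerSecond(int intervalMs) {
    return 1000.0 / intervalMs;
}

// Packets the demuxer must read per second at a given playback speed:
// one per video frame plus one per audio packet, scaled by the speed
double packetsPerSecond(double fps, int sampleRate, int samplesPerPacket, double speed) {
    double audioPackets = static_cast<double>(sampleRate) / samplesPerPacket;
    return (fps + audioPackets) * speed;
}
```

With these assumptions, 2x playback needs about (25 + 43.07) × 2 ≈ 136 packets per second against roughly 167 timer ticks, which is consistent with the author's choice. A 60 fps file at 2x would need about 206, more than the timer delivers.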

```cpp
// Receive the audio timeline and use it to drive the video timeline
void VideoPlayer::receiveAudioTimeLine(qint64 timeLine)
{
    customTimebase = timeLine + 15;   // fixed 15 ms offset
}

// Empty the video packet queue
void VideoPlayer::cleanVideoPacketQueue(){
    //QMutexLocker locker(&mutex);
    while (!videoPacketQueue.isEmpty()) {
        AVPacket *packet = videoPacketQueue.dequeue();
        av_packet_free(&packet);   // also unreferences the packet
    }
}

// Required because of Q_PROPERTY(qint64 position READ position WRITE setPosition NOTIFY positionChanged).
// Seeking is actually done by setPosi() below, so the body is commented out.
void VideoPlayer::setPosition(int p){
    Q_UNUSED(p);
    /*
    qint64 position=p;
    QMutexLocker locker(&mutex);
    if(av_seek_frame(formatCtx,-1,position*AV_TIME_BASE/1000,AVSEEK_FLAG_ANY)<0){
        qWarning()<<"Cannot seek to the requested position";
        return;
    }
    avcodec_flush_buffers(videoCodecCtx);
    avcodec_flush_buffers(audioCodecCtx);
    m_position=position;
    emit positionChanged(m_position);*/
}

// Seek, used when the progress bar is dragged or clicked; position is in ms
void VideoPlayer::setPosi(qint64 position){
    if (!formatCtx) {
        return;   // no file loaded yet
    }
    if (timer->isActive()) {
        timer->stop();
    }
    audioThread->deleteAudioSink();
    // With stream_index = -1 the target is in AV_TIME_BASE units (µs)
    qint64 target_ts = position * 1000;
    avcodec_flush_buffers(videoCodecCtx);
    avcodec_flush_buffers(audioCodecCtx);
    cleanVideoPacketQueue();
    //if(av_seek_frame(formatCtx,-1,target_ts,AVSEEK_FLAG_BACKWARD)<0){
    // This call seeks more accurately; it returns >= 0 on success
    if (avformat_seek_file(formatCtx, -1, INT64_MIN, target_ts, INT64_MAX, AVSEEK_FLAG_BACKWARD) < 0) {
        qWarning() << "Cannot seek to the requested position";
        return;
    }
    if (!timer->isActive()) {
        timer->start();
    }
    audioThread->resume();
    m_position = position;
    customTimebase = position;
    //turnPoint=position;
    emit positionChanged(m_position);
}
```
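Two time units meet in `setPosi()` and `loadFile()`. The QML side works in milliseconds, while `avformat_seek_file()` with `stream_index = -1` and `AVFormatContext::duration` use `AV_TIME_BASE` units (microseconds). The sketch below spells out the conversions. `kAvTimeBase` is a local stand-in for FFmpeg's `AV_TIME_BASE` (1000000) so the code builds without FFmpeg. It also shows why dividing before multiplying, as in `duration / AV_TIME_BASE * 1000`, drops the sub-second part.

```cpp
#include <cstdint>

constexpr std::int64_t kAvTimeBase = 1000000;  // same value as FFmpeg's AV_TIME_BASE

// ms (QML slider) -> AV_TIME_BASE units, as in setPosi(): position * 1000
std::int64_t msToAvTime(std::int64_t ms) {
    return ms * (kAvTimeBase / 1000);
}

// AV_TIME_BASE units -> ms, multiplying first to keep sub-second precision
std::int64_t avTimeToMs(std::int64_t t) {
    return t * 1000 / kAvTimeBase;
}

// Divide-first variant: truncates to whole seconds before scaling to ms
std::int64_t avTimeToMsTruncated(std::int64_t t) {
    return t / kAvTimeBase * 1000;
}
```

For a 5.5-second file (5500000 µs), `avTimeToMs` gives 5500 ms while the divide-first variant gives 5000 ms.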

```cpp
// Send the speed to the audio filter
void VideoPlayer::audioSpeed(qreal speed)
{
    double s = speed;
    emit sendSpeed(s);
}

// Standard helper: wait in the main thread while still processing events
void VideoPlayer::delay(int milliseconds) {
    QTime dieTime = QTime::currentTime().addMSecs(milliseconds);
    while (QTime::currentTime() < dieTime) {
        QCoreApplication::processEvents(QEventLoop::AllEvents, 100);
    }
}

// Timer slot: read one packet per tick and dispatch it by stream
void VideoPlayer::onTimeout() {
    if (!formatCtx) {
        return;   // nothing loaded yet
    }
    AVPacket *packet = av_packet_alloc();
    if (!packet) return;
    if (av_read_frame(formatCtx, packet) >= 0) {
        if (packet->stream_index == videoStreamIndex) {
            // Ownership moves to the queue; freed in decodeVideo() or cleanVideoPacketQueue()
            videoPacketQueue.enqueue(packet);
            decodeVideo();
            return;
        }
        if (packet->stream_index == audioStreamIndex) {
            AVPacket *audioPacket = av_packet_alloc();
            if (audioPacket) {
                av_packet_ref(audioPacket, packet);
                emit deliverPacketToAudio(audioPacket);   // handed over to AudioThread
            }
        }
    } else {
        // End of file or read error: keep draining the queued video packets
        decodeVideo();
    }
    // Audio, other streams and failed reads: release this packet
    av_packet_free(&packet);
}
```

```cpp
// Decode one video packet (once it is due) and refresh the display
void VideoPlayer::decodeVideo() {
    if (videoPacketQueue.isEmpty()) {
        return;
    }
    AVPacket *packet = videoPacketQueue.first();
    // Convert pts to milliseconds
    qint64 videoPts = packet->pts * av_q2d(formatCtx->streams[videoStreamIndex]->time_base) * 1000;
    // A/V sync: leave the packet queued until the audio clock reaches it
    if (videoPts > customTimebase) {
        return;
    }
    packet = videoPacketQueue.dequeue();

    AVFrame *frame = av_frame_alloc();
    if (!frame) {
        qWarning() << "Cannot allocate video frame";
        av_packet_free(&packet);
        return;
    }
    int ret = avcodec_send_packet(videoCodecCtx, packet);
    av_packet_free(&packet);   // unref + free; the decoder references or copies what it needs
    if (ret < 0) {
        qWarning() << "Cannot send video packet to the decoder";
        av_frame_free(&frame);
        return;
    }
    ret = avcodec_receive_frame(videoCodecCtx, frame);
    if (ret < 0) {
        // EAGAIN: the decoder needs more packets before it can output a frame
        if (ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
            qWarning() << "Cannot receive decoded video frame";
        av_frame_free(&frame);
        return;
    }

    m_position = customTimebase;   // the video position follows the audio clock
    emit positionChanged(m_position);

    if (m_videoWidth != frame->width || m_videoHeight != frame->height) {
        m_videoWidth = frame->width;
        m_videoHeight = frame->height;
        emit videoWidthChanged();
        emit videoHeightChanged();
    }

    // Convert (scale) the frame to RGB24
    AVFrame *rgbFrame = av_frame_alloc();
    if (!rgbFrame) {
        qWarning() << "Cannot allocate RGB video frame";
        av_frame_free(&frame);
        return;
    }
    rgbFrame->format = AV_PIX_FMT_RGB24;
    rgbFrame->width = videoCodecCtx->width;
    rgbFrame->height = videoCodecCtx->height;
    ret = av_frame_get_buffer(rgbFrame, 0);
    if (ret < 0) {
        qWarning() << "Cannot allocate the RGB frame buffer";
        av_frame_free(&frame);
        av_frame_free(&rgbFrame);
        return;
    }
    sws_scale(swsCtx, frame->data, frame->linesize, 0, videoCodecCtx->height,
              rgbFrame->data, rgbFrame->linesize);

    // Wrap the RGB data in a QImage; copy() detaches it from the AVFrame buffer
    currentImage = QImage(rgbFrame->data[0], rgbFrame->width, rgbFrame->height,
                          rgbFrame->linesize[0], QImage::Format_RGB888).copy();

    // Free the frames
    av_frame_free(&frame);
    av_frame_free(&rgbFrame);
    update();   // schedule a call to paint()
}
```
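`decodeVideo()` converts each packet's pts to milliseconds with `pts * av_q2d(time_base) * 1000`. It decodes the head of the queue only once that time is no later than `customTimebase`, the audio clock (plus 15 ms) reported by the audio side. The sketch below models that rule with a plain `std::deque` of timestamps. `Rational`, `ptsToMs` and `popIfDue` are hypothetical names, and the 1/25 time base is just an example. The conversion is done in integers here, which avoids a floating-point product such as 999.9999… being truncated to 999; FFmpeg's own `av_rescale_q()` performs this kind of rescaling with rounding.

```cpp
#include <cstdint>
#include <deque>

struct Rational { std::int64_t num; std::int64_t den; };  // stand-in for AVRational

// Integer version of pts * av_q2d(time_base) * 1000 (truncates toward zero)
std::int64_t ptsToMs(std::int64_t pts, Rational tb) {
    return pts * 1000 * tb.num / tb.den;
}

// Pop the head of the queue only if it is due according to the audio clock,
// mirroring the check at the top of decodeVideo(): at most one frame per call
bool popIfDue(std::deque<std::int64_t> &queueMs, std::int64_t audioClockMs, std::int64_t &outMs) {
    if (queueMs.empty() || queueMs.front() > audioClockMs) return false;  // wait for audio
    outMs = queueMs.front();
    queueMs.pop_front();
    return true;
}
```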

```cpp
// Release everything so that the next file can be opened
void VideoPlayer::cleanup() {
    if (swsCtx) {
        sws_freeContext(swsCtx);
        swsCtx = nullptr;
    }
    if (videoCodecCtx) {
        avcodec_free_context(&videoCodecCtx);
        videoCodecCtx = nullptr;
    }
    if (swrCtx) {
        swr_free(&swrCtx);
        swrCtx = nullptr;
    }
    if (audioCodecCtx) {
        avcodec_free_context(&audioCodecCtx);
        audioCodecCtx = nullptr;
    }
    if (formatCtx) {
        avformat_close_input(&formatCtx);
        formatCtx = nullptr;
    }
    while (!videoQueue.isEmpty()) {
        AVFrame *frame = videoQueue.dequeue();
        av_frame_free(&frame);
    }
    audioThread->deleteAudioSink();
    cleanVideoPacketQueue();
    m_position = 0;
    m_duration = 0;
    emit positionChanged(m_position);
    emit durationChanged(m_duration);
}
```
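Almost every exit path in `decodeVideo()`, `processAudio()` and `cleanup()` frees FFmpeg objects by hand, which is where the warning about the many pointers comes from. A common C++ remedy is to wrap each `xxx_free(&ptr)`-style function in a `std::unique_ptr` deleter, so the object is released on every return path automatically. The sketch below shows the pattern against a tiny mock C API so that it compiles without FFmpeg. `MockFrame`, `mock_frame_alloc` and `mock_frame_free` are hypothetical stand-ins for `AVFrame`, `av_frame_alloc` and `av_frame_free`.

```cpp
#include <memory>

// --- hypothetical C-style API shaped like av_frame_alloc / av_frame_free ---
struct MockFrame { int width = 0; };
int g_liveFrames = 0;   // number of frames allocated and not yet freed
MockFrame *mock_frame_alloc() { ++g_liveFrames; return new MockFrame; }
void mock_frame_free(MockFrame **frame) {   // frees and nulls, like av_frame_free
    if (frame && *frame) { delete *frame; *frame = nullptr; --g_liveFrames; }
}

// Deleter that adapts the "free(&ptr)" signature to std::unique_ptr
struct MockFrameDeleter {
    void operator()(MockFrame *f) const { mock_frame_free(&f); }
};
using FramePtr = std::unique_ptr<MockFrame, MockFrameDeleter>;

// Early returns no longer need a matching free call
bool decodeSomething(bool failEarly) {
    FramePtr frame(mock_frame_alloc());
    if (!frame) return false;
    if (failEarly) return false;   // frame is released automatically here
    frame->width = 1920;
    return true;                   // ...and here
}
```

With FFmpeg, the deleter would call `av_frame_free(&f)`. The same idea applies to `AVPacket` (`av_packet_free`) and `AVCodecContext` (`avcodec_free_context`).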