sqoop MySQL导入数据到hive报错

ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: Hive exited with status 64

报错解释:

这个错误表明Sqoop在尝试导入数据到Hive时遇到了问题,导致Hive进程异常退出。状态码64是一个特殊的退出代码,它表明有一些基本的配置问题或者环境问题导致Hive无法正常启动。

bash

24/06/25 13:07:56 INFO hive.HiveImport: OK

24/06/25 13:07:56 INFO hive.HiveImport: Time taken: 6.621 seconds

24/06/25 13:07:57 INFO hive.HiveImport: FAILED: NullPointerException null

24/06/25 13:07:58 ERROR tool.ImportTool: Encountered IOException running import job: java.io.IOException: Hive exited with status 64

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:389)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:339)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:240)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:514)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:605)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)可见报错是因为hive.HiveImport: FAILED: NullPointerException null

查看hive的日志

cat $HIVE_HOME/logs/hive.log

bash

2024-06-25T13:07:57,385 INFO [bfdcbd34-f72f-47c8-8abd-ec287b0cc884 main] ql.Driver: Returning Hive schema: Schema(fieldSchemas:null, properties:null)

2024-06-25T13:07:57,558 ERROR [bfdcbd34-f72f-47c8-8abd-ec287b0cc884 main] ql.Driver: FAILED: NullPointerException null

java.lang.NullPointerException

at org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAccessController.getCurrentRoleNames(SQLStdHiveAccessController.java:194)

at org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAccessControllerWrapper.getCurrentRoleNames(SQLStdHiveAccessControllerWrapper.java:155)

at org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizationValidator.checkPrivileges(SQLStdHiveAuthorizationValidator.java:132)

at org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizationValidator.checkPrivileges(SQLStdHiveAuthorizationValidator.java:84)

at org.apache.hadoop.hive.ql.security.authorization.plugin.HiveAuthorizerImpl.checkPrivileges(HiveAuthorizerImpl.java:87)

at org.apache.hadoop.hive.ql.Driver.doAuthorizationV2(Driver.java:974)

at org.apache.hadoop.hive.ql.Driver.doAuthorization(Driver.java:由堆栈跟踪,可见是授权类错误

查看conf/hive-site.xml

bash

<property>

<name>hive.security.authorization.enabled</name>

<value>true</value>

</property>

<property>

<name>hive.server2.enable.doAs</name>

<value>false</value>

</property>

<!-- 指定超级管理员 -->

<property>

<name>hive.users.in.admin.role</name>

<value>ljr</value>

</property>

<!-- 默认字符集 -->

<property>

<name>default.character.set</name>

<value>UTF-8</value>

</property>

<!-- 默认分隔符-->

<property>

<name>hive.default.fileformat.magic.code</name>

<value>\t</value>

</property>

<!-- 授权类-->

<property>

<name>hive.security.authorization.manager</name>

<value>org.apache.hadoop.hive.ql.security.authorization.plugin.sqlstd.SQLStdHiveAuthorizerFactory</value>

</property>

<property>

<name>hive.security.authenticator.manager</name>

<value>org.apache.hadoop.hive.ql.security.SessionStateUserAuthenticator</value>

</property>发现配置了hive.security.authorization.manage和hive.security.authenticator.manager

hive.security.authorization.manager 和 hive.security.authenticator.manager 是 Hive 配置中与安全相关的两个重要参数。

1.hive.security.authorization.manager:

这个参数用于指定 Hive 的授权管理器。

授权管理器负责处理 Hive 中的权限检查,例如检查用户是否有权限执行某个查询或访问某个表1。

++Hive 默认可能不提供具体的授权管理器实现,但你可以通过配置此参数来指定自定义的授权管理器类,该类需要实现 Hive 提供的授权接口++。配置时,你可以hive.security.authorization.manager 设置为自定义的类名,并将相关的 JAR 包放入 Hive 的类路径下(如 $HIVE_HOME/lib/)。

2.hive.security.authenticator.manager:

这个参数用于指定 Hive 的身份验证管理器。身份验证管理器负责验证连接到 Hive 的用户的身份。

Hive 默认可能使用 Hadoop 的默认身份验证器(如 HadoopDefaultAuthenticator),但你也可以通过配置此参数来指定自定义的身份验证管理器类。当你需要在 Hive 中跟踪用户操作或实现更复杂的身份验证逻辑时,可能需要重写身份验证管理器

由于配置以上两个参数但是实际上并没有把自定义的hive.security.authorization.manager类放到lib目录下所以导致了NullPointerException null;

非必要指定授权管理器的话,注释掉以上两个参数,重启hive的相关服务;

重试导入

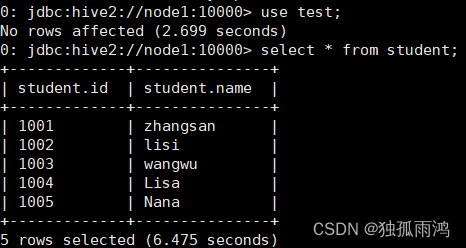

ljr@node1 sqoop\]$ sqoop import --connect jdbc:mysql://node1:3306/test?zeroDateTimeBehavior=CONVERT_TO_NULL --username root --password 1234 --table student2 --split-by id --num-mappers 1 --hive-import --fields-terminated-by "," --hive-overwrite --hive-table test.student --null-non-string '\\\\N' --null-string '\\\\N' ```bash 24/06/25 14:20:08 INFO hive.HiveImport: Loading uploaded data into Hive 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: Class path contains multiple SLF4J bindings. 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/export/server/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class] 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/export/server/hbase/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class] 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: Found binding in [jar:file:/export/server/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class] 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation. 24/06/25 14:20:23 INFO hive.HiveImport: SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory] 24/06/25 14:20:29 INFO hive.HiveImport: 24/06/25 14:20:29 INFO hive.HiveImport: Logging initialized using configuration in file:/export/server/hive/conf/hive-log4j2.properties Async: true 24/06/25 14:20:37 INFO hive.HiveImport: OK 24/06/25 14:20:37 INFO hive.HiveImport: Time taken: 5.598 seconds 24/06/25 14:20:39 INFO hive.HiveImport: Loading data to table test.student 24/06/25 14:20:39 INFO hive.HiveImport: Moved: 'hdfs://node1:8020/user/hive/warehouse/test.db/student/part-m-00000' to trash at: hdfs://node1:8020/user/ljr/.Trash/Current 24/06/25 14:20:45 INFO hive.HiveImport: OK 24/06/25 14:20:45 INFO hive.HiveImport: Time taken: 7.687 seconds 24/06/25 14:20:45 INFO hive.HiveImport: Hive import complete. ``` 可见import complete 查看目标表student  数据导入成功。 以上案例可说明,正确的文件配置对环境的正常运行起着至关重要的作用,在写入配置参数时要充分了解参数的作用。