1 Camera intrinsic calibration

[Camera calibration procedure]

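Using the camera to be calibrated, capture the chessboard from a range of different angles, making sure the complete chessboard is visible in every image. About 15 images are enough.

Taking an 11*8 chessboard as an example, the procedure is as follows: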

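- step 1. Set up the 3D chessboard points; obtain the 2D point coordinates via OpenCV corner detection
  - Generating the 3D points: each image contains 11*8 corners. Each image has its own world coordinate system, whose origin is the top-right corner (with the long edge as the base), so the code needs to generate a numpy array of shape=(11*8, 3) that holds the chessboard points in world coordinates, e.g. (0,0,0), (1,0,0), (2,0,0) ..., (8,5,0) .... Key API: `np.mgrid`
  - Detecting the 2D corners in the image: OpenCV provides an API for this that can be called directly, `cv2.findChessboardCorners`. The corners can optionally be refined to sub-pixel accuracy with `cv2.cornerSubPix`
- step 2. Estimate the camera intrinsics, which yields the intrinsic matrix and the distortion coefficients
  - API: `cv2.calibrateCamera`
- step 3. Reproject the points and compute the error. If the error exceeds a chosen threshold, capture new images and calibrate again
  - API: `cv2.projectPoints`
- step 4. Undistort using the camera intrinsics and display the result
  - API: `cv2.undistort`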
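As a minimal sketch of the 3D point generation in step 1, the snippet below builds the (11*8, 3) array with `np.mgrid` and optionally scales it by the physical square size. The helper name `make_object_points` and the `square_size` parameter are illustrative assumptions and do not come from the original script.

```python
import numpy as np

def make_object_points(cols, rows, square_size=1.0):
    """Return a (cols*rows, 3) float32 array of planar chessboard corners (z = 0).

    `square_size` is a hypothetical parameter: 1.0 keeps the points in units of
    squares, while e.g. 0.03 would express them in metres for 3 cm squares.
    """
    points = np.zeros((cols * rows, 3), np.float32)
    # np.mgrid gives an array of shape (2, cols, rows); after .T.reshape(-1, 2)
    # each row is an (x, y) pair, with x varying fastest.
    points[:, :2] = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)
    return points * square_size

obj = make_object_points(11, 8)
print(obj.shape)   # number of corners x 3
print(obj[:3])     # first corners along the x direction
print(obj[-1])     # last corner of the grid
```

The same array can be appended once per image in which the chessboard was found, because every image uses its own board-fixed world coordinate system.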

Images captured by the camera for calibration

Visualization of the detected corners

The full implementation is as follows:

```python
'''
step 1. Set up the 3D chessboard points; obtain the 2D points via OpenCV corner detection
step 2. Estimate the camera intrinsics
step 3. Reproject the points and compute the error
step 4. Undistort using the camera intrinsics and display the result
'''
import os
import cv2
import glob
import numpy as np

path_image = "L:/WORKFILE/calibration-master/data_own/calib_image_1"
image_namelist = glob.glob(os.path.join(path_image, "*.png"))

criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)
chess_board_w = 11
chess_board_h = 8

### step 1. Set up the 3D points; obtain the 2D points via corner detection ==========
# Chessboard points in the world coordinate system, e.g. (0,0,0), (1,0,0), (2,0,0) ..., (10,7,0)
chess_points = np.zeros((chess_board_w * chess_board_h, 3), np.float32)
chess_points[:, :2] = np.mgrid[0:chess_board_w, 0:chess_board_h].T.reshape(-1, 2)
# chess_points = chess_points * 0.03  # each square is 3 cm

world_points = []  # 3D points in the world coordinate system
image_points = []  # 2D points in the image plane
for image_name in image_namelist:
    image = cv2.imread(image_name)
    # Convert to grayscale; fall back to the image itself if it is already single-channel
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) if len(image.shape) == 3 else image

    # Find the chessboard corners
    # Arguments: chessboard image (8-bit grayscale or color) and pattern size; the corners are returned
    finded, corners = cv2.findChessboardCorners(gray, (chess_board_w, chess_board_h))
    if finded:
        world_points.append(chess_points)
        image_points.append(corners)

    # if finded:
    #     # Sub-pixel corner refinement, optional
    #     # Arguments: input image, initial corner coordinates, search window of 2*winSize+1,
    #     # zero zone, termination criteria for the corner iteration
    #     corners2 = cv2.cornerSubPix(gray, corners, (11,11), (-1,-1), criteria)
    #     # Draw the corners on the image
    #     cv2.drawChessboardCorners(image, (chess_board_w, chess_board_h), corners2, finded)
    #     image_copy = cv2.resize(image, (500,500))
    #     cv2.imshow('image', image_copy)
    #     cv2.waitKey(10)
    #     cv2.destroyWindow('image')

### step 2. Estimate the camera intrinsics (writing them to a yaml file is not shown here) ==========
image_size = cv2.cvtColor(cv2.imread(image_namelist[0]), cv2.COLOR_BGR2GRAY).shape[::-1]  # (W, H)
_, inter_matrix, dist_coeffs, rvecs, tvecs = cv2.calibrateCamera(world_points, image_points, image_size, None, None)
# inter_matrix: camera intrinsics; dist_coeffs: distortion coefficients;
# rvecs: rotation vectors (extrinsics); tvecs: translation vectors (extrinsics)
print("Camera intrinsic matrix:\n", inter_matrix, "\nDistortion coefficients:\n", dist_coeffs)

### step 3. Reproject the points and compute the error ==========
# The reprojection error tells us how good the result is. The closer to 0, the better.
# Using the intrinsic matrix, distortion coefficients, rotation vectors and translation vectors
# computed above, cv2.projectPoints() projects the 3D points onto the 2D image. We then compute
# the error between the reprojected points and the detected points, and finally average it over
# all calibration images. That average is the reprojection error.
mean_error = 0
for i in range(len(world_points)):
    imgpoints2, _ = cv2.projectPoints(world_points[i], rvecs[i], tvecs[i], inter_matrix, dist_coeffs)
    error = cv2.norm(image_points[i], imgpoints2, cv2.NORM_L2) / len(imgpoints2)
    mean_error += error
print("total error: {}".format(mean_error / len(world_points)))

### step 4. Undistort using the camera intrinsics and display the result ==========
# cap = cv2.VideoCapture(0)
while True:
    frame = cv2.imread("L:/WORKFILE/calibration-master/detect1.jpg")
    # _, frame = cap.read()  # capture a frame from the camera instead

    # With the intrinsics and distortion coefficients known, we can refine the camera matrix
    # with cv2.getOptimalNewCameraMatrix() before undistorting, by setting the free scaling
    # parameter alpha. With alpha=0 the returned camera matrix crops away the unwanted pixels
    # of the undistorted image. With alpha=1 all source pixels are kept, which adds black
    # pixels, and the returned ROI can be used to crop them.
    #
    # Given the camera matrix and distortion coefficients, cv2.undistort works out where each
    # pixel of the distorted image belongs in the undistorted image and corrects the image.
    h, w = frame.shape[:2]
    newcameramtx, roi = cv2.getOptimalNewCameraMatrix(inter_matrix, dist_coeffs, (w,h), 1, (w,h))
    undistorted = cv2.undistort(frame, inter_matrix, dist_coeffs, None, newcameramtx)
    x, y, w, h = roi
    cv2.rectangle(undistorted, (x,y), (x+w,y+h), (255,0,255), 2)

    mat = np.concatenate((frame, undistorted), axis=1)
    image_copy = cv2.resize(mat, (500*2,500))
    windows_name = "Left: Origin | Right: Undistorted"
    cv2.imshow(windows_name, image_copy)
    key = cv2.waitKey(1)
    if key == 27 or key & 0xFF == ord("q"):
        cv2.destroyWindow(windows_name)
        break
```

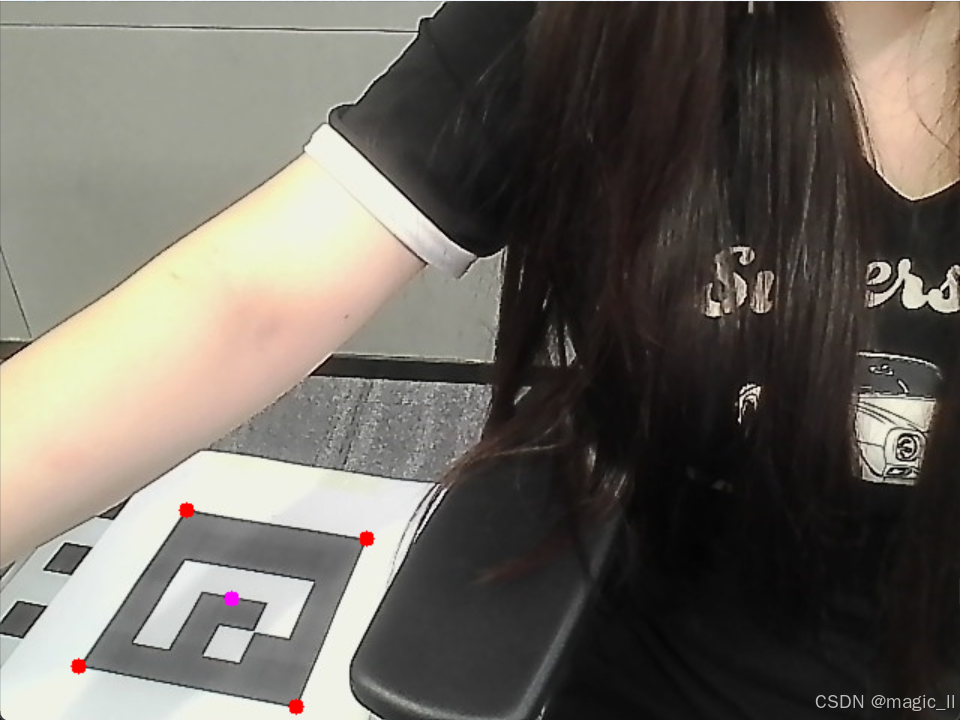
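To make the roles of the intrinsic matrix and the five distortion coefficients more concrete, here is a minimal NumPy sketch of the projection that `cv2.projectPoints` performs for points already expressed in the camera frame, assuming the standard (k1, k2, p1, p2, k3) model with zero skew. The function names `project_points` and `rms_error` and all numeric values are made up for illustration. Note that `rms_error` is one common error definition; the script above divides the L2 norm by the number of points instead, so the two numbers are not directly comparable.

```python
import numpy as np

def project_points(points_cam, K, dist):
    """Project 3D points given in the camera frame to pixel coordinates.

    Assumes dist = (k1, k2, p1, p2, k3) and a camera matrix K without skew.
    """
    k1, k2, p1, p2, k3 = dist
    # Normalized image coordinates (perspective division)
    x = points_cam[:, 0] / points_cam[:, 2]
    y = points_cam[:, 1] / points_cam[:, 2]
    r2 = x * x + y * y
    # Radial and tangential distortion
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    # Apply focal lengths and principal point
    u = K[0, 0] * xd + K[0, 2]
    v = K[1, 1] * yd + K[1, 2]
    return np.stack([u, v], axis=1)

def rms_error(detected, projected):
    """Root-mean-square pixel distance between two (N, 2) point sets."""
    return float(np.sqrt(np.mean(np.sum((detected - projected) ** 2, axis=1))))

# Illustrative values only, not calibration results
K = np.array([[700.0, 0.0, 320.0],
              [0.0, 700.0, 240.0],
              [0.0, 0.0, 1.0]])
pts = np.array([[0.0, 0.0, 2.0],
                [0.2, -0.1, 2.0]])

ideal = project_points(pts, K, np.zeros(5))
distorted = project_points(pts, K, np.array([0.1, 0.0, 0.0, 0.0, 0.0]))
print(ideal)
print(rms_error(ideal, distorted))
```

A point on the optical axis lands on the principal point regardless of the distortion coefficients, which is why distortion is mostly visible towards the image borders.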

2 Camera extrinsic calibration

The extrinsics can be estimated with `cv2.solvePnP`, which OpenCV provides.

```python
import cv2
import numpy as np

##==step1. Given: the calibrated intrinsics, the 2D coordinates in the image,
##         and the corresponding 3D coordinates in the world coordinate system
dist_coeffs = np.array([[ 1.26656599e-01, -8.62714566e-01, 1.95000458e-03, -6.37704248e-04, 1.11050691e+00]])
inter_matrix = np.array([[713.85537736, 0.          , 329.50142968],
                         [0.          , 714.28046903, 235.29954597],
                         [0.          , 0.          , 1.          ]])
## Coordinates in the world coordinate system
objPoints = np.array([[-0.25, -0.25, 0.],
                      [-0.25,  0.25, 0.],
                      [ 0.25,  0.25, 0.],
                      [ 0.25, -0.25, 0.]])
## Coordinates in the pixel coordinate system
marker_corner = np.array([[[52, 443],  # [x, y]
                           [124, 339],
                           [244, 358],
                           [197, 470]]], dtype=np.float32)

##==step2. Use cv2.solvePnP to compute the corresponding extrinsics
valid, rvec_obj, tvec_obj = cv2.solvePnP(objPoints, marker_corner, cameraMatrix=inter_matrix, distCoeffs=dist_coeffs)
print(rvec_obj)
print(tvec_obj)

##==step3. Use the intrinsics and extrinsics to convert 3D points to 2D coordinates
points_3d = np.float32([[0, 0, 0]])
imgpts, jac = cv2.projectPoints(points_3d, rvec_obj, tvec_obj, inter_matrix, dist_coeffs)

##==step4. Visualize the 2D coordinates
frame = cv2.imread("L:/WORKFILE/calibration-master/detect1.jpg")
for pt in imgpts[:,0,:].astype(int):
    cv2.circle(frame, (pt[0], pt[1]), 5, (255, 0, 255), -1)
for pt in marker_corner[0].astype(int):
    cv2.circle(frame, (pt[0], pt[1]), 5, (0, 0, 255), -1)
cv2.imshow('result', frame)
cv2.waitKey(0)

## undistort, not needed here
# height_img, width_img = frame.shape[:2]
# newcameramtx, roi = cv2.getOptimalNewCameraMatrix(inter_matrix, dist_coeffs, (width_img, height_img), 1, (width_img, height_img))
# newcam_mtx = newcameramtx
# dst = cv2.undistort(frame, inter_matrix, dist_coeffs, None, newcameramtx)
# x, y, w, h = roi
# frame = dst[y:y+h, x:x+w]
```
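The rotation vector and translation vector returned by `cv2.solvePnP` map world coordinates into the camera frame, i.e. X_cam = R · X_world + t. As a hedged sketch of how they might be turned into a camera pose, the snippet below converts a rotation vector to a rotation matrix with the Rodrigues formula and recovers the camera position in world coordinates as -Rᵀt. The function names and the example values are hypothetical; in practice `cv2.Rodrigues(rvec)` performs the same conversion.

```python
import numpy as np

def rodrigues(rvec):
    """Convert a rotation vector (axis * angle, in radians) to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=np.float64).reshape(3)
    theta = np.linalg.norm(rvec)
    if theta < 1e-12:
        return np.eye(3)  # no rotation
    k = rvec / theta
    # Skew-symmetric cross-product matrix of the unit axis
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(theta) * K + (1 - np.cos(theta)) * (K @ K)

def camera_pose_in_world(rvec, tvec):
    """Invert the world-to-camera transform: return (R_world_from_cam, camera_position)."""
    R = rodrigues(rvec)
    t = np.asarray(tvec, dtype=np.float64).reshape(3)
    return R.T, -R.T @ t

# Made-up example: 90 degree rotation about the z axis plus a translation
rvec = np.array([0.0, 0.0, np.pi / 2])
tvec = np.array([1.0, 0.0, 2.0])
R_wc, cam_pos = camera_pose_in_world(rvec, tvec)
print(R_wc)
print(cam_pos)
```

With the values from the script above, `camera_pose_in_world(rvec_obj, tvec_obj)` would give the camera position in the same units as `objPoints`.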