之前做过一次Kaggle的时间序列竞赛数据集练习:CSDN链接效果并不理想,之后在Kaggle的评论中又找到了各式各样的模型方法,其中我还手动还原过第三名的Entity Embedding:CSDN链接。这个参赛方法中,使用了除了比赛给出的数据以外的外部数据(天气数据等)。而这次,我准备还原一个没有使用外部数据且方法较为简单,但是效果较好的策略。也就是第66名的策略。

详细的策略可以看这里 R语言版的源代码在这里 Kaggle页面可以看这里

我复现出来的NoteBook可以在Kaggle上看到。

特征工程 Feature Engineering

原策略中的特征工程制造了以下几个特征:

1、傅里叶级数展开的非常数项(包括正弦、余弦函数各自的前5项),可以在CSDN链接与R语言源码中查看原理。

2、"日期趋势"项以及它的对数值(DateTrend和DateTrendLog)

3、对于假期以及促销事件,加入这些事件发生前后的衰减项

4、对于这些特殊事件,将这些事件发生的前后n天做0-1变量(stair-step变量)

5、加入了"是否明天闭店"、"是否昨日闭店"、"上周日是否闭店"等0-1变量

6、将日期分解为年月日等维度,并加入对应的二分类变量特征。

python

import warnings

warnings.filterwarnings("ignore")

import pandas as pd

from datetime import datetime

import numpy as np

df_train = pd.read_csv("./input/train.csv")

df_test = pd.read_csv("./input/test.csv")

df_store = pd.read_csv("./input/store.csv")

train_range = pd.date_range(df_train["Date"].min(),df_train["Date"].max(), freq='D')

test_range = pd.date_range(df_test["Date"].min(),df_test["Date"].max(), freq='D')

full_range = pd.date_range(df_train["Date"].min(),df_test["Date"].max(), freq='D')

# 根据full_range构建df_full

df_full = pd.concat([df_train,df_test],axis=0).drop(columns=["Id"])

weekday_dict = df_full[["Date","DayOfWeek"]].drop_duplicates()

# 构建全零的表格

df_all_zero = pd.DataFrame(full_range,columns=["Date"])

for col in ["Sales","Customers","Open","Promo","StateHoliday","SchoolHoliday"]:

df_all_zero[col]=0

df_all_zero = df_all_zero.merge(weekday_dict,on="Date",how="left")

df_all_stores = pd.DataFrame(df_full["Store"].unique(),columns=["Store"])

df_all_zero = df_all_zero.merge(df_all_stores,how="cross")

# 将全零表格填充到full表

df_all_zero = df_all_zero.set_index(["Store","Date"])

df_full = df_full.set_index(["Store","Date"])

df_full = df_full.combine_first(df_all_zero)

df_full = df_full.reset_index()

del df_all_stores,df_all_zero

# df_full = df_full.merge(df_store,on="Store")

# df_full = df_full.sort_values(["Store","Date"]).reset_index(drop=True)

def logger(func):

"""

装饰器,用于测量函数运行时间并打印函数名

"""

def log(*args, **kwargs):

# 获取当前时间作为开始时间

start_time = datetime.now().strftime("%Y-%m-%d %H:%M:%S")

print(f"Function {func.__name__} started at {start_time}")

# 调用原始函数

result = func(*args, **kwargs)

# 获取当前时间作为结束时间

end_time = datetime.now().strftime("%Y-%m-%d %H:%M:%S")

print(f"Function {func.__name__} ended at {end_time}")

return result

return log

class DataFrameClass():

@logger

def __init__(self,df:pd.DataFrame,df_store:pd.DataFrame,full_range,train_range,test_range):

self.data = df

self.full_range = full_range

self.train_range = train_range

self.test_range = test_range

self.store = df_store

self.store["Competition_OpenDate"] = pd.Series(map(self.build_Date,self.store["CompetitionOpenSinceYear"],self.store["CompetitionOpenSinceMonth"]))

# 如果一家店Sales为0,则也视为Close

idx = self.data[(self.data["Sales"]==0) & (self.data["Date"]<=self.train_range[-1])].index

self.data.loc[idx,"Open"] = 0

self.data["Close"] = 1-self.data["Open"]

date_trend_dict = {i:j for i,j in zip(full_range,np.linspace(1,0,len(full_range),endpoint=False).tolist()[::-1])}

self.data["DateTrend"] = self.data["Date"].map(lambda x:date_trend_dict[x])

self.data["CloseOnSunday"] = 0

self.data.loc[self.data[(self.data["DayOfWeek"] == 7) & (self.data["Close"]==1)].index,"CloseOnSunday"]=1

def pre_process_data(self):

"""

数据预处理

"""

self.add_fourier_series()

self.get_dummy_col("DayOfWeek",drop_col="DayOfWeek_7",is_store=False)

self.get_dummy_col("StateHoliday",drop_col="StateHoliday_0",is_store=False)

unique_values = sorted(list(set([str(i) for i in self.data["StateHoliday"].unique()])))

value_dict = {j:i+1 for i,j in enumerate(unique_values)}

self.get_continous_value("StateHoliday",value_dict,is_store=False)

for col in ["StoreType","Assortment","PromoInterval"]:

if col == "PromoInterval":

value_dict={'Feb,May,Aug,Nov': 2, 'Jan,Apr,Jul,Oct': 3, 'Mar,Jun,Sept,Dec': 4, 'nan': 1}

else:

unique_values = sorted(list(set([str(i) for i in self.store[col].unique()])))

value_dict = {j:i+1 for i,j in enumerate(unique_values)}

self.get_continous_value(col,value_dict,is_store=True)

self.data = self.data.merge(self.store,on="Store")

self.get_CompetitionOpen()

self.get_isPromo2()

self.get_Date_decompose()

self.get_dummy_col("month")

self.get_dummy_col("day")

for col in ["Promo","StateHoliday_b","StateHoliday_a","isPromo2"]:

self.get_is_Event_first_day(col)

self.get_Event_Started_Last_Date(col)

self.get_is_Event_first_day("StateHoliday_c")

idxs = self.data[(self.data["month"]==12) & (self.data["day"]==26)].index#12月26号需要特别处理为当天

self.data.loc[idxs,"StateHoliday_c_first_day"] = 1

self.get_Event_Started_Last_Date("StateHoliday_c")

self.get_is_Event_first_day("Open")

self.get_is_Event_first_day("Close")#Close_first_day相当于Closed,Open_first_day相当于Opened

# 是否第二天歇业

idxs = self.data[(self.data.shift(-1)["Close_first_day"]==1) & (self.data["Date"]!=full_range[-1])].index

self.data["TomorrowClosed"] = 0

self.data.loc[idxs,"TomorrowClosed"]=1

self.get_Event_Started_Last_Date("Close")

self.get_Last_Event_distance("Close")

self.get_Event_Started_Last_Date("Open")

self.get_Last_Event_distance("Open")

self.get_is_Event_first_day("CloseOnSunday")

self.get_Event_Started_Last_Date("CloseOnSunday")

self.data["WasClosedOnSunday"] = ((self.data["Date"]-self.data["CloseOnSunday_last_started"]).dt.days<7).astype(int)

self.get_Event_Start_NextDay("StateHoliday_c")

self.get_Event_Start_NextDay("Open")

self.get_Event_Start_NextDay("Close")

self.get_Next_Event_distance("Open") #Will be Closed for days

# LongClosedNextDate

self.data["LongClosed_first_day"]=0

idxs = self.data[(self.data["Close_first_day"]==1) & (self.data["Open_next_distance"]>5) & (self.data["Open_next_distance"]<180)].index

self.data.loc[idxs,"LongClosed_first_day"]=1

self.get_Event_Start_NextDay("LongClosed")

self.get_Next_Event_distance("LongClosed")

self.get_Event_Start_NextDay("StateHoliday_b")

self.get_Event_Start_NextDay("StateHoliday_a")

# LongOpenLastDate

self.data["LongOpen_first_day"]=0

idxs = self.data[(self.data["Open_first_day"]==1) & (self.data["Close_last_distance"]>5) & (self.data["Close_last_distance"]<180)].index

self.data.loc[idxs,"LongOpen_first_day"]=1

self.get_Event_Started_Last_Date("LongOpen")

self.get_Last_Event_distance("LongOpen")

self.get_Event_After_Decay("Promo",[1,0.75,0.5,0.25])

self.get_Event_After_Decay("isPromo2",[1,0.5])

self.get_Event_After_Decay("StateHoliday_c",[1,0.5],event_active_confidence=0) #相当于StateHolidayCLastDecay

self.get_Event_After_Decay("StateHoliday_a",[1,0.5],event_active_confidence=0)

self.get_Event_After_Decay("StateHoliday_b",[1,0.5],event_active_confidence=0)

self.get_Event_Before_Decay("StateHoliday_b",[-1,1,0.5])

self.get_Event_Before_Decay("StateHoliday_a",[-1,1,0.5])

self.get_Event_Before_Decay("StateHoliday_c",[1/14 * (i+1) for i in range(14)])

# get_scale_log_features

for col in self.data.columns:

if "_Decay_" in col or col == "DateTrend":

self.get_scale_log_features(col)

self.get_before_stairs("StateHoliday_a",steps=list(range(1,16))+[21,28])

self.get_before_stairs("StateHoliday_b",steps=list(range(1,16))+[21,28])

self.get_before_stairs("StateHoliday_c",steps=list(range(1,16))+[21,28])

self.get_before_stairs("LongClosed",steps=list(range(1,16))+[21,28])

self.get_after_stairs("Promo",[2,3,4])

self.get_after_stairs("StateHoliday_a",steps=list(range(1,16))+[21,28])

self.get_after_stairs("StateHoliday_b",steps=list(range(1,16))+[21,28])

self.get_after_stairs("StateHoliday_c",steps=list(range(1,16))+[21,28])

self.get_after_stairs("LongOpen",steps=list(range(1,16))+[21,28])

self.get_after_stairs("isPromo2",steps=list(range(1,16))+[21,28])

def build_Date(self,year,month):

"""

根据年月构造时间

"""

if pd.isna(year) or pd.isna(month):

return np.nan

year=str(int(year))

month = str(int(month))

day = "1"

return pd.to_datetime("-".join([year,month,day]))

@logger

def add_fourier_series(self,terms=5,T=365):

"""

将time_range里面的每一个日期都mapping到terms个sin与terms个cos

参考:https://blog.csdn.net/qq_36810398/article/details/103312408

R源码:https://rdrr.io/cran/forecast/man/fourier.html

"""

if "Fourier1" in self.data.columns:

return

n=len(self.full_range)

series = pd.Series(range(1,n+1))

res_df = pd.DataFrame()

for t in range(terms):

res_df["Fourier"+str(t+1)] = np.sin(2*np.pi*series*(t+1)/T)

res_df["Fourier"+str(terms+t+1)] = np.cos(2*np.pi*series*(t+1)/T)

res_df["Date"] = self.full_range

self.data = self.data.merge(res_df,on="Date",how="left")

def update_col(self,is_store,func):

"""

将对应的表格使用传入的func函数处理

"""

if is_store:

self.store = func(self.store)

else:

self.data = func(self.data)

@logger

def get_dummy_col(self,col_name,drop_col=None,is_store=False):

# 获取哑变量/独立热编码

df = self.data if not is_store else self.store

if ","+col_name+"_" in ",".join(self.data.columns):

return

res = pd.get_dummies(df[col_name].astype(str),dtype=int,prefix=col_name)

if drop_col in res.columns:

res = res.drop(drop_col,axis=1)

self.update_col(is_store,lambda x:pd.concat([x,res],axis=1))

@logger

def get_continous_value(self,colname,value_dict,is_store=False):

"""

分类变量根据Value_dict改连续型变量

"""

df = self.data if not is_store else self.store

if ","+colname+"_continous" in ",".join(df.columns):

return

col = df[colname]

new_col = col.map(lambda x:value_dict[str(x)])

new_col.name = colname+"_continous"

self.update_col(is_store,lambda x:pd.concat([x,new_col],axis=1))

@logger

def get_CompetitionOpen(self):

"""

竞争对手是否开店

"""

if "CompetitionOpen" in self.data.columns:

return

self.data["CompetitionOpen"] = (self.data["Date"]>=self.data["Competition_OpenDate"]).astype(int)

@logger

def get_isPromo2(self):

"""

当天是否有进行Promo2促销

"""

if "isPromo2" in self.data.columns:

return

idx = [i for i in self.data.index if self.data.loc[i,"Date"].strftime('%b') in str(self.data.loc[i,"PromoInterval"]) \

and ((self.data.loc[i,"Date"].weekofyear > self.data.loc[i,"Promo2SinceWeek"] and self.data.loc[i,"Date"].year == self.data.loc[i,"Promo2SinceYear"])\

or (self.data.loc[i,"Date"].year > self.data.loc[i,"Promo2SinceYear"]))]

self.data["isPromo2"] = 0

self.data.loc[idx,"isPromo2"] = 1

@logger

def get_Date_decompose(self,prefix=""):

"""

日期分解

"""

col = self.data["Date"]

year = col.map(lambda x:x.year)

month = col.map(lambda x:x.month)

day = col.map(lambda x:x.day)

weekofyear = col.map(lambda x:x.weekofyear)

year.name = prefix+"year"

month.name = prefix+"month"

day.name = prefix+"day"

self.update_col(False,lambda x:pd.concat([x,year],axis=1))

self.update_col(False,lambda x:pd.concat([x,month],axis=1))

self.update_col(False,lambda x:pd.concat([x,day],axis=1))

@logger

def get_is_Event_first_day(self,event_col):

"""

是否为事件的第一天

"""

if ","+event_col+"_first_day" in ",".join(self.data):

return

tmp = self.data[event_col].shift(1).fillna(0)

res = (self.data[event_col]-tmp).map(lambda x:x>0).astype(int)

res.name = event_col+"_first_day"

if event_col in ("Close","Open"):

first_day_idx = self.data[(self.data[event_col]==1) & (self.data["Date"]==full_range[0])].index

res[first_day_idx]=0

self.data = pd.concat([self.data,res],axis=1)

@logger

def get_Event_Started_Last_Date(self,event_col,default = pd.to_datetime("2000-01-01")):

"""

上次事件发生的日期,没有就填充default值

"""

if ","+event_col+"_last_started" in ",".join(self.data):

return

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

self.data[event_col+"_last_started"] = np.nan

for i in self.data[self.data["Date"]==pd.to_datetime("2013-01-01")].index:

self.data.loc[i,event_col+"_last_started"] = default

for i in self.data[self.data[event_col+"_first_day"]==1].index:

self.data.loc[i,event_col+"_last_started"] = self.data.loc[i,"Date"]

self.data[event_col+"_last_started"] = self.data[event_col+"_last_started"].fillna(method='ffill')

@logger

def get_Event_Start_NextDay(self,event_col,default=pd.to_datetime("2099-01-01")):

"""

事件下次开始发生的时间,没有就填充default值

"""

if ","+event_col+"_next_start" in ",".join(self.data):

return

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

self.data[event_col+"_next_start"] = np.nan

for i in self.data[self.data["Date"]==pd.to_datetime("2015-09-17")].index:

self.data.loc[i,event_col+"_next_start"] = default

for i in self.data[self.data[event_col+"_first_day"]==1].index:

self.data.loc[i,event_col+"_next_start"] = self.data.loc[i,"Date"]

self.data[event_col+"_next_start"] = self.data[event_col+"_next_start"].fillna(method='bfill')

@logger

def get_Last_Event_distance(self,event_col):

"""

事件离上次开始的距离

"""

if ","+event_col+"_last_started" not in ",".join(self.data):

raise ValueError("run get_Event_Started_Last_Date first")

self.data[event_col+"_last_distance"] = (self.data["Date"] - self.data[event_col+"_last_started"]).dt.days

@logger

def get_Next_Event_distance(self,event_col):

"""

事件离下次开始的距离

"""

if ","+event_col+"_next_start" not in ",".join(self.data):

raise ValueError("run get_Event_Start_NextDay first")

self.data[event_col+"_next_distance"] = (self.data[event_col+"_next_start"]-self.data["Date"]).dt.days

@logger

def get_Event_After_Decay(self,event_col,decay_values,event_active_confidence=1):

"""

事件结束之后,事件的后续效应以及其衰减

"""

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

ones = self.data[self.data[event_col+"_first_day"]==1].index

max_date = full_range[-1]

self.data[event_col + "_Decay_after"] = self.data[event_col+"_first_day"]

for idx in ones:

for j,decay_value in enumerate(decay_values):

if self.data.loc[idx+j,"Date"]>max_date:

break

self.data.loc[idx+j,event_col + "_Decay_after"]=decay_value*abs(self.data.loc[idx+j,event_col]-(1-event_active_confidence))

@logger

def get_Event_Before_Decay(self,event_col,decay_values,event_active_confidence=0):

"""

事件开始前,对事件预期的效应以及它的衰减

"""

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

ones = self.data[self.data[event_col+"_first_day"]==1].index

min_date = full_range[0]

self.data[event_col + "_Decay_before"] = self.data[event_col+"_first_day"]

for idx in ones:

for j,decay_value in enumerate(decay_values):

if self.data.loc[idx-j,"Date"]==min_date: #这里写2个是为了避免"第一天就是事件开始"的情况

break

self.data.loc[idx-j,event_col + "_Decay_before"]=decay_value*abs(self.data.loc[idx+j,event_col]-(1-event_active_confidence))

if self.data.loc[idx-j,"Date"]==min_date:

break

@logger

def get_scale_log_features(self,event_col):

"""

进行以下操作:1、缩放(除以最大值)2、计算log1p(log(1+x),|x|<=1)3、将计算后的值再进行一次缩放

"""

self.data[event_col+"_scaled"] = np.log1p(self.data[event_col]/self.data[event_col].max())

self.data[event_col+"_scaled"] = self.data[event_col+"_scaled"]/self.data[event_col+"_scaled"].max()

@logger

def get_before_stairs(self,event_col,steps=[]):

"""

开始前的第n天填写1,剩下的日期为0;以此类推;n为steps中的元素

"""

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

steps.sort(reverse=True)

min_date = full_range[0]

for i in steps:

self.data[event_col+"_NextDate_"+str(i)+"_before"] = self.data[event_col+"_first_day"]

if i>1:

ones = self.data[self.data[event_col+"_first_day"]==1].index

for idx in ones:

for j in range(idx,idx-i,-1):

if self.data.loc[j,"Date"] == min_date:

break

self.data.loc[j,event_col+"_NextDate_"+str(i)+"_before"] = 1

if self.data.loc[j,"Date"] == min_date:

break

@logger

def get_after_stairs(self,event_col,steps=[]):

"""

结束后的第n天填写1,剩下的日期为0;以此类推;n为steps中的元素

"""

if ","+event_col+"_first_day" not in ",".join(self.data):

raise ValueError("run get_is_Event_first_day first")

steps.sort(reverse=True)

max_date = full_range[-1]

for i in steps:

self.data[event_col+"_NextDate_"+str(i)+"_after"] = self.data[event_col+"_first_day"]

if i>1:

ones = self.data[self.data[event_col+"_first_day"]==1].index

for idx in ones:

for j in range(idx,idx+i):

if self.data.loc[j,"Date"] == max_date:

break

self.data.loc[j,event_col+"_NextDate_"+str(i)+"_after"] = 1

def save_csv(self,path):

self.data.to_csv(path,index=None)

print(f"saved in {path}")

data = DataFrameClass(df_full,df_store,full_range,train_range,test_range)

data.pre_process_data()

data.save_csv("data_full_range.csv")广义线性模型 GLM

原作者使用R语言中的glmnet来对每一家店铺进行建模,而在python中我只找到了glmnet_python这个包,其他的包在我的本地环境下运行不起来。

官方文档

需要注意的是,这个包似乎只能在Linux系统下运行,而且需要安装libgfortran.so.3。可以参考CSDN上的安装教程。如果Ubuntu用户遇到公钥问题,还可以参考CSDN上的报错信息。

同时,在我本地环境跑这个包的时候,会有报错,需要修改它的源代码,将某一行的"any()"改为".any()"。因此这个包可能无法在Kaggle上正常运行。

原策略的大致思路是,首先找到最佳的 α \alpha α,也就是惩罚项中L1惩罚项的比率。原本策略中使用的是 α = 1 \alpha=1 α=1,也就是Lasso回归。

接着,单独拿出每家店的数据,在100个 λ \lambda λ中使用15折交叉验证找到最好的那个 λ \lambda λ,也就是惩罚项的系数,进行最终建模预测。

在建模前,首先会筛选只在测试集中的店铺,同时对每一家店铺做线性回归,将每个店铺差异过大(Z_score>=2.5)的部分排除。原作者还使用了一个remove_before_changepoint函数筛去了部分数据,这一部分我并没有理解,因此没有做在预处理中。

除此以外,我还是用了sklearn中的ElasticNet方法模仿glmnet,但是效果并不好。我将这个方法以及贝叶斯调参的过程放在了代码中。

python

import warnings

warnings.filterwarnings("ignore")

import pandas as pd

from sklearn.linear_model import enet_path,ElasticNet,LinearRegression,Lasso

import numpy as np

import optuna

from xgboost import XGBRegressor,DMatrix

from datetime import datetime

import re

from matplotlib import pyplot as plt

import matplotlib as mpl

import glmnet_python

from glmnet import glmnet

from glmnetPredict import glmnetPredict

import scipy

df = pd.read_csv("data_full_range.csv")

df_train = pd.read_csv("./input/train.csv")

df_test = pd.read_csv("./input/test.csv")

df_store = pd.read_csv("./input/store.csv")

df_train["Date"] = pd.to_datetime(df_train["Date"])

df_test["Date"] = pd.to_datetime(df_test["Date"])

df["Date"] = pd.to_datetime(df["Date"])

df["Store"] = df["Store"].astype(int)

train_range = pd.date_range(df_train["Date"].min(),df_train["Date"].max(), freq='D')

test_range = pd.date_range(df_test["Date"].min(),df_test["Date"].max(), freq='D')

full_range = pd.date_range(df_train["Date"].min(),df_test["Date"].max(), freq='D')

base_linear_features =["Promo", "isPromo2", "SchoolHoliday",

"DayOfWeek_1", "DayOfWeek_2", "DayOfWeek_3",

"DayOfWeek_4", "DayOfWeek_5", "DayOfWeek_6",

"StateHoliday_a", "StateHoliday_b", "StateHoliday_c",

"CompetitionOpen", "Open"]

trend_features = ["DateTrend", "DateTrend_scaled"]

decay_features = [i for i in df.columns if "Decay_" in i and "_scaled" not in i] # 多了StateHoliday_a_Decay_after、StateHoliday_a_Decay_before、StateHoliday_b_Decay_after

decay_scaled_features = [i for i in df.columns if "Decay_" in i and "_scaled" in i]

stair_step_features = [i for i in df.columns if bool(re.search(r"\d",i)) and (i.endswith("_before") or i.endswith("_after")) and "Decay" not in i]

stair_step_features += ["Open_first_day","Promo_first_day","isPromo2_first_day",

"TomorrowClosed","WasClosedOnSunday"]

month_features = [f"month_{i}" for i in range(1,13)]

month_day_features = [f"day_{i}"for i in range(1,32)]

fourier_features = [f"Fourier{i}" for i in range(1,11)]

linear_features = base_linear_features+trend_features+decay_features+decay_scaled_features+stair_step_features+month_day_features+month_features+fourier_features

XGB_Features = ["Store", "DayOfWeek", "Open", "Promo", "SchoolHoliday",

"StoreType_continous", "Assortment_continous", "CompetitionDistance",

"CompetitionOpenSinceMonth", "CompetitionOpenSinceYear",

"Promo2", "Promo2SinceWeek", "Promo2SinceYear",

"PromoInterval_continous", "month", "year", "day"]+decay_features+stair_step_features+fourier_features

def make_fold(train,train_range=train_range,step_by=3,step=1,predict_interval=9*7):

total = len(train_range)-1

last_train_date = train_range[total-predict_interval-(step-1)*step_by]

last_predict_date = train_range[total-(step-1)*step_by]

train_set = train[train["Date"]<=last_train_date]

test_set = train[(train["Date"]>last_train_date) & (train["Date"]<=last_predict_date)]

return (train_set.drop(columns="Date"),test_set.drop(columns="Date"))

def RMSPE(y_pred,y_real):

return np.sqrt(np.mean(((y_pred-y_real)/y_real)**2))

def exp_RMSPE(y_pred,y_real):

return RMSPE(np.exp(y_pred),np.exp(y_real))

def find_best_l1_ratio(trial: optuna.trial.Trial,steps:int,train: pd.DataFrame):

l1_ratio = trial.suggest_float("l1",0,1)

res = []

for step in range(1,steps+1):

model = ElasticNet(l1_ratio=l1_ratio)

train_set,valid_set = make_fold(train,step=step)

train_X = train_set.drop(columns=["Store","Sales"])

train_Y = train_set["Sales"]

valid_X = valid_set.drop(columns=["Store","Sales"])

valid_Y = valid_set["Sales"]

model.fit(train_X,train_Y)

y_pred = model.predict(valid_X)

res.append(exp_RMSPE(y_pred,valid_Y))

return np.median(res)

def find_best_alpha(l1_ratio,steps,train: pd.DataFrame):

#对应best_glmnet_lambda

alphas = enet_path(train.drop(columns=["Sales","Date"]),train["Sales"],l1_ratio=l1_ratio)[0]

alpha_dict = {i:[] for i in alphas}

for step in range(1,steps+1):

train_set,valid_set = make_fold(train,step=step,predict_interval=6*7)

train_X = train_set.drop(columns="Sales")

train_Y = train_set["Sales"]

valid_X = valid_set.drop(columns="Sales")

valid_Y = valid_set["Sales"]

for alpha in alphas:

model = ElasticNet(alpha=alpha,l1_ratio=l1_ratio)

model.fit(train_X,train_Y)

y_pred = model.predict(valid_X)

alpha_dict[alpha].append(exp_RMSPE(y_pred,valid_Y))

#return alpha_dict

alpha_median = [np.median(alpha_dict[i]) for i in alphas]

best_alpha = alphas[np.argmin(alpha_median)]

return best_alpha

def predict_with_best_alpha(alpha:float,l1_ratio:float,train:pd.DataFrame,test:pd.DataFrame):

model = ElasticNet(alpha=alpha,l1_ratio=l1_ratio)

model.fit(train.drop(columns="Sales"),train["Sales"])

res = model.predict(test)

return res

def ElasticNetPredict_per_store(l1_ratio,train,test):

stores = list(train["Store"].unique())

res = pd.DataFrame(columns=["Store","Date","Sales"])

for i,store in enumerate(stores):

train_store = train[train["Store"] == store].reset_index(drop=True)

test_store = test[test["Store"] == store].reset_index(drop=True)

best_alpha = find_best_alpha(l1_ratio=l1_ratio,steps=15,train=train_store.drop(columns=["Store"]))

store_res = predict_with_best_alpha(best_alpha,l1_ratio,train_store.drop(columns=["Store","Date"]),test_store.drop(columns=["Store","Date"]))

test_store["Sales"] = np.exp(store_res)

res = pd.concat([res,test_store[["Store","Date","Sales"]]],axis=0)

print((i+1)/len(stores),"Compelete")

return res

def Linear_Model_Predict_per_store_zscrore(train):

stores = list(train["Store"].unique())

res = pd.DataFrame(columns=["Store","Date","Z_Score"])

for i,store in enumerate(stores):

train_store = train[train["Store"] == store].reset_index(drop=True)

model = LinearRegression()

X = train_store.drop(columns=["Sales","Date","Store"])

Y = train_store["Sales"]

model.fit(X,Y)

store_res = model.predict(X)

train_store["predict_Sales"] = store_res

Error = (train_store["predict_Sales"]-train_store["Sales"]).abs()

Z_Score = Error/Error.median()

train_store["Z_Score"] = Z_Score

res = pd.concat([res,train_store[["Store","Date","Z_Score"]]],axis=0)

return res

def predict_with_best_lambda_glmNet(alpha:float,lambdau:float,train:pd.DataFrame,test:pd.DataFrame):

model = glmnet(x=train.drop(columns=["Sales"]).values,y=train["Sales"].values,alpha=alpha

,family="gaussian",lambdau=scipy.float64([lambdau]))

res = glmnetPredict(model, test.values, ptype = 'response')[:,-0]

return res

def GLM_per_store(alpha,train,test):

stores = list(train["Store"].unique())

res = pd.DataFrame(columns=["Store","Date","Sales"])

for i,store in enumerate(stores):

train_store = train[train["Store"] == store].reset_index(drop=True)

test_store = test[test["Store"] == store].reset_index(drop=True)

best_lambda = find_best_lambda(alpha=alpha,steps=15,train=train_store.drop(columns=["Store"]))

store_res = predict_with_best_lambda_glmNet(alpha=alpha,lambdau=best_lambda

,train=train_store.drop(columns=["Store","Date"])

,test=test_store.drop(columns=["Store","Date"]))

test_store["Sales"] = np.exp(store_res)

res = pd.concat([res,test_store[["Store","Date","Sales"]]],axis=0)

print((i+1)/len(stores),"Compelete")

return res

def find_best_lambda(alpha,steps,train: pd.DataFrame):

lambdas = glmnet(x=train.drop(columns=["Sales","Date"]).values,y=train["Sales"].values,alpha=alpha,nlambda=100,family="gaussian")["lambdau"]

lambda_dict = {i:[] for i in lambdas}

for step in range(1,steps+1):

train_set,valid_set = make_fold(train,step=step,predict_interval=6*7)

train_X = train_set.drop(columns="Sales").values

train_Y = train_set["Sales"].values

valid_X = valid_set.drop(columns="Sales").values

valid_Y = valid_set["Sales"].values

model = glmnet(x=train_X,y=train_Y,alpha=1

,family="gaussian",lambdau=scipy.float64([lambdas]))

y_pred = glmnetPredict(model, valid_X, ptype = 'response')

for i,x in enumerate(lambdas):

lambda_dict[x].append(exp_RMSPE(y_pred[:,i],valid_Y))

lambda_median = [np.median(lambda_dict[i]) for i in lambdas]

best_lambda = lambdas[np.argmin(lambda_median)]

return best_lambda

# filter trainset

test_store = set(df_test["Store"].unique())

df_full = df[df["Store"].isin(test_store)]

df_full = df_full[["Date","Sales","Store"]+linear_features]

df_full_train = df_full[df_full["Date"].isin(train_range) & (df["Open"]==1)]

df_full_train["Sales"] = np.log(df_full_train["Sales"])

df_full_test = df_full[df_full["Date"].isin(test_range)]

train_range = df_full_train[["Store","Date"]]

del df_full

z_scores = Linear_Model_Predict_per_store_zscrore(df_full_train)

df_full_train = df_full_train.merge(z_scores,how="left",on=["Store","Date"])

df_full_train = df_full_train[df_full_train["Z_Score"]<2.5]

del z_scores

del df_full_train["Z_Score"]

best_alpha = 1

GLM_res = GLM_per_store(best_alpha,df_full_train,df_full_test.drop(columns="Sales"))

GLM_res.to_csv("GLM_result.csv",index=None)

GLM_res = pd.read_csv("./GLM_result.csv")

GLM_res["Date"] = pd.to_datetime(GLM_res["Date"])

GLM_res = df_test.merge(GLM_res,how="left")

GLM_res[["Id","Sales"]].to_csv("submission_GLM.csv",index=None)

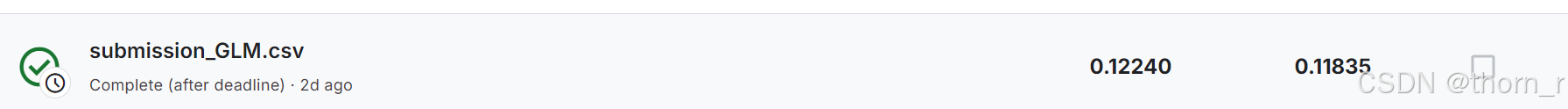

GLM为单个店铺分别建模的效果看上去并不理想

XGBoost

XGBoost相较于GLM来说,将各个分类变量也加入了特征中,没有进行"使用线性模型剔除异常值"的操作,也并非对所有店铺单独进行建模,而是总体上进行建模。

python

# filter trainset

test_store = set(df_test["Store"].unique())

df_full = df[(df["Store"].isin(test_store))]

df_full = df_full[["Date","Sales"]+XGB_Features]

df_full_train = df_full.merge(train_range)

df_full_train["Sales"] = np.log(df_full_train["Sales"])

df_full_train["Store"] = df_full_train["Store"].astype(int)

df_full_test = df_full[df_full["Date"].isin(test_range)]

del df_full

def find_best_XGBoostParameters(trial:optuna.trial.Trial,train: pd.DataFrame):

booster = trial.suggest_categorical("booster",["gbtree","gblinear","dart"])

learning_rate = trial.suggest_float("learning_rate",0.001,0.1)

max_depth = trial.suggest_int("max_depth",2,10)

subsample = trial.suggest_float("subsample",0.7,1.0)

colsample_bytree = trial.suggest_float("colsample_bytree",0.5,1)

model = XGBRegressor(booster=booster,

learning_rate=learning_rate,

max_depth=max_depth,

subsample=subsample,

colsample_bytree=colsample_bytree)

train_set,valid_set = make_fold(train,step=1)

train_X = train_set.drop(columns="Sales")

train_Y = train_set["Sales"]

valid_X = valid_set.drop(columns="Sales")

valid_Y = valid_set["Sales"]

model.fit(train_X,train_Y)

y_pred = model.predict(valid_X)

return exp_RMSPE(y_pred,valid_Y)

# study = optuna.create_study()

# study.optimize(lambda trial:find_best_XGBoostParameters(trial,df_full_train),n_trials=100,show_progress_bar=True)

# study.best_params

model = XGBRegressor(

booster = 'gbtree',

learning_rate = 0.02,

max_depth=10,

subsample=0.9,

colsample_bytree=0.7,

eval_metric = exp_RMSPE,

n_estimators=3000,

#early_stopping_rounds=100,

device="cuda"

)

X = df_full_train.drop(columns=["Sales","Date"])

Y = df_full_train["Sales"]

_,valid_set = make_fold(df_full_train,step=1)

valid_X = valid_set.drop(columns="Sales")

valid_Y = valid_set["Sales"]

model.fit(X,Y)

X_test = df_full_test.drop(columns=["Date","Sales"])

Y_test = model.predict(X_test)

df_full_test["Sales"] = np.exp(Y_test)

XGBoost_res = df_test.merge(df_full_test[["Store","Date","Sales"]],how="left")

XGBoost_res[['Id',"Sales"]].to_csv("XGBoost_res.csv",index=None)

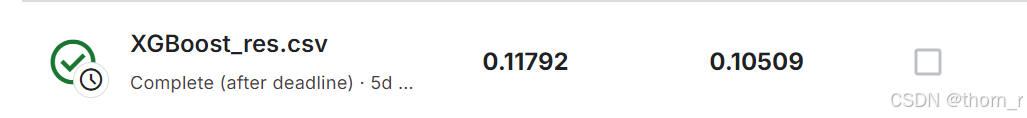

XGBoost的效果看上去还挺好的。

最后将这2个结果合并在一起并乘以一个系数(测试集时的数据似乎偏小,这个系数是讨论区得出来的)

python

XGBoost_res = pd.read_csv("XGBoost_res.csv")

GLM_res = pd.read_csv("submission_GLM.csv")

XGBoost_res = XGBoost_res.rename(columns={"Sales":"XGBoost_Sales"})

GLM_res = GLM_res.rename(columns={"Sales":"GLM_Sales"})

res = XGBoost_res.merge(GLM_res)

res["Sales"] =0.985*(res["GLM_Sales"]+res["XGBoost_Sales"])/2

res[["Id","Sales"]].to_csv("mixed_submission_GLM.csv",index=None)

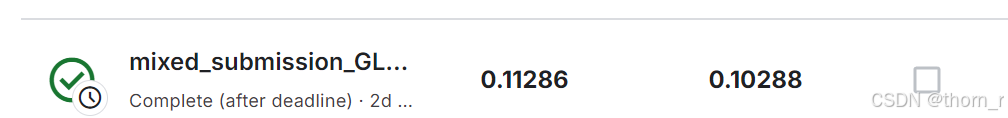

可以看到,混合后的效果很好,和原本66名的差距很小,大约79~80名的样子。

反思

原本我想使用ElasticNet参数调优代替GLM的,但是效果很差,后续有必要看一下线性模型和family设置为Gaussian的广义线性模型有何差异。

还有就是我使用Optuna的自动调参和给出的手动调参结果有着不晓得差距,后续也有必要对这一部分知识进行补完。