Installation

See the CSDN blog post "Kafka on Windows for absolute beginners: download and installation, environment configuration, and writing a first program".

Usage

Hard-coded configuration

Note: close the producer object once you are done with it. You can also use try-with-resources, for example try (producer) { ... }.
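To make the note about closing concrete, here is a minimal sketch of the try-with-resources ordering. It does not talk to Kafka: `FakeProducer` is a hypothetical stand-in introduced only for illustration, used here because `KafkaProducer` is also `Closeable`. The short form `try (producer) { ... }` on an existing variable needs Java 9 or later; the sketch declares the resource inside the `try` header, which works on older versions too.

```java
import java.io.Closeable;
import java.util.ArrayList;
import java.util.List;

class TryWithResourcesSketch {

    // Hypothetical stand-in for KafkaProducer; it only records what happens to it.
    static final class FakeProducer implements Closeable {
        private final List<String> events;

        FakeProducer(List<String> events) {
            this.events = events;
        }

        void send(String value) {
            if (value == null) {
                throw new IllegalArgumentException("value must not be null");
            }
            events.add("send:" + value);
        }

        @Override
        public void close() {
            events.add("close");
        }
    }

    // Sends every value and returns the ordered log of what happened.
    static List<String> run(String... values) {
        List<String> events = new ArrayList<>();
        try (FakeProducer producer = new FakeProducer(events)) {
            for (String value : values) {
                producer.send(value);
            }
        } catch (IllegalArgumentException e) {
            // The resource has already been closed when this block runs.
            events.add("error");
        }
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run("hello kafka"));
        System.out.println(run("a", null));
    }
}
```

In both calls `close` is recorded, including the one where `send` throws, which is the guarantee an explicit `producer.close()` at the end of `main` does not give.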

Create a producer

java

package kafkademo.kafka;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;

import java.util.Properties;
import java.util.concurrent.ExecutionException;

public class Producer {
    public static void main(String[] args) throws ExecutionException, InterruptedException {
        // 1. Kafka connection settings
        Properties properties = new Properties();
        // Kafka broker address
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        // Serializers for the key and the value
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
        // 2. Create the producer object
        KafkaProducer<String, String> producer = new KafkaProducer<>(properties);
        try {
            // 3. Send a message
            /*
             * First argument: topic
             * Second argument: message key
             * Third argument: message value
             */
            ProducerRecord<String, String> record = new ProducerRecord<>("topic-first", "key-001", "hello kafka");
            // Send synchronously: get() blocks until the broker acknowledges the record
            RecordMetadata recordMetadata = producer.send(record).get();
            System.out.println(recordMetadata.hasOffset());
        } finally {
            // Close the producer even if the send fails
            producer.close();
        }
    }
}

Create a consumer

java

package kafkademo.kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

public class Consumer {
    public static void main(String[] args) {
        // 1. Kafka configuration
        Properties properties = new Properties();
        // Kafka broker address
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        // Deserializers for the key and the value
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        // Consumer group
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "group1");
        // 2. Create the consumer object
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
        // 3. Subscribe to the topic
        consumer.subscribe(Arrays.asList("topic-first"));
        // 4. Poll for messages
        while (true) {
            ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord.key() + ": " + consumerRecord.value());
            }
        }
    }
}

Create consumer 2

java

package kafkademo.kafka;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

import java.time.Duration;
import java.util.Arrays;
import java.util.Properties;

public class Consumer2 {
    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringDeserializer");
        /*
         * Multiple consumers in the same group:
         * each message is delivered to only one consumer in the group.
         * If the topic has a single partition, only one of the consumers receives
         * messages, even with 10 consumers in the group.
         */
        // properties.put(ConsumerConfig.GROUP_ID_CONFIG, "group1");
        /*
         * Multiple consumers in different groups:
         * every consumer receives the message.
         * With 10 consumers in 10 groups, all 10 consumers receive it.
         * In other words, messages are consumed per group.
         */
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "group2");
        KafkaConsumer<String, String> consumer = new KafkaConsumer<>(properties);
        consumer.subscribe(Arrays.asList("topic-first"));
        while (true) {
            ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofMillis(1000));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord.key() + ": " + consumerRecord.value());
            }
        }
    }
}

Result when Consumer and Consumer2 are in the same group

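Result when they are in different groups

Use cases

One-to-one

When several consumers are in the same group, each message is received by only one of them.

One-to-many

When several consumers are in different groups, every consumer receives the message.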
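The two cases above can be sketched without a broker. The model below is a deliberate simplification and not Kafka code: it assumes a single-partition topic and treats the first member of each group as the owner of that partition, whereas in a real cluster the group coordinator decides the owner. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class GroupFanOutSketch {

    // groups: group id -> consumer names. Returns consumer name -> received messages.
    // Assumption: one partition, owned by the first member of each group.
    static Map<String, List<String>> deliver(Map<String, List<String>> groups, List<String> messages) {
        Map<String, List<String>> received = new LinkedHashMap<>();
        for (List<String> members : groups.values()) {
            for (String member : members) {
                received.put(member, new ArrayList<>());
            }
        }
        for (String message : messages) {
            // Every group gets its own copy of the message ...
            for (List<String> members : groups.values()) {
                if (!members.isEmpty()) {
                    // ... but only one consumer inside the group receives it.
                    received.get(members.get(0)).add(message);
                }
            }
        }
        return received;
    }

    public static void main(String[] args) {
        Map<String, List<String>> sameGroup = new LinkedHashMap<>();
        sameGroup.put("group1", List.of("Consumer", "Consumer2"));
        System.out.println(deliver(sameGroup, List.of("hello kafka")));

        Map<String, List<String>> differentGroups = new LinkedHashMap<>();
        differentGroups.put("group1", List.of("Consumer"));
        differentGroups.put("group2", List.of("Consumer2"));
        System.out.println(deliver(differentGroups, List.of("hello kafka")));
    }
}
```

With both consumers in group1 only Consumer ends up with the message; with one consumer per group both do.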

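Summary

With 10 consumers in one group, each message is consumed by only 1 of them (and if the topic has a single partition, only 1 consumer receives anything at all). With 10 consumers in 10 groups, all 10 consumers receive every message. In other words, consumption happens per group.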

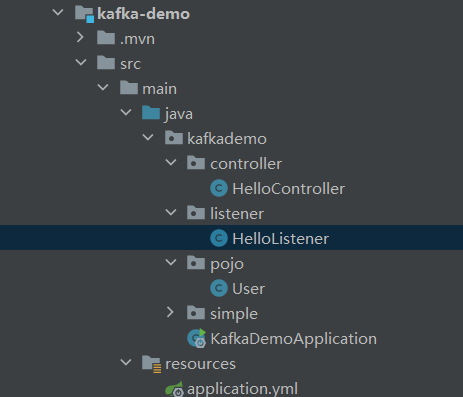
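Why only one consumer in a group sees messages depends on how many partitions the topic has: within a group, each partition is read by exactly one consumer. The sketch below spreads partitions over the consumers of one group in simple round-robin order. It is an illustrative stand-in, not Kafka's actual assignor implementation, and all names in it are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class PartitionAssignmentSketch {

    // Returns consumer name -> partitions it owns, for one consumer group.
    static Map<String, List<Integer>> assign(List<String> consumers, int partitionCount) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        if (consumers.isEmpty()) {
            return assignment;
        }
        for (int partition = 0; partition < partitionCount; partition++) {
            // Each partition goes to exactly one consumer of the group.
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // One partition: only the first consumer owns anything, the others stay idle.
        System.out.println(assign(List.of("c1", "c2", "c3"), 1));
        // Three partitions, two consumers: the work is shared.
        System.out.println(assign(List.of("c1", "c2"), 3));
    }
}
```

Under this model, a group with more consumers than partitions always has idle members, which matches the single-consumer behaviour described in the summary.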

Integration with Spring Boot (using the KafkaTemplate class)

The project structure is as follows

1. Add the spring-kafka and JSON dependencies

XML

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <artifactId>water-test</artifactId>
        <groupId>com.ape</groupId>
        <version>1.0-SNAPSHOT</version>
    </parent>
    <groupId>com.ape</groupId>
    <artifactId>kafka-demo</artifactId>
    <version>1.0-SNAPSHOT</version>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.kafka</groupId>
            <artifactId>spring-kafka</artifactId>
            <exclusions>
                <exclusion>
                    <groupId>org.apache.kafka</groupId>
                    <artifactId>kafka-clients</artifactId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.kafka</groupId>
            <artifactId>kafka-clients</artifactId>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
</project>

2. Configuration file

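spring:
  application:
    name: kafka-demo
  kafka:
    bootstrap-servers: localhost:9092
    producer: # producer: sends messages
      retries: 10 # number of retries
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
    # consumer: receives messages
    # In this project the consumer and the producer live in the same service.
    # Normally a consumer service only needs the consumer settings,
    # and a producer service only needs the producer settings.
    consumer:
      group-id: ${spring.application.name}-test
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
server:
  port: 9991

3. In this project the producer and the consumer live in the same application: the controller receives a request and acts as the producer, and the listener is the consumer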

java

package kafkademo.controller;

import com.alibaba.fastjson.JSON;
import kafkademo.pojo.User;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HelloController {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @GetMapping("/hello")
    public String hello() {
        User user = new User("啊啊啊", 19);
        kafkaTemplate.send("topic-test", JSON.toJSONString(user));
        return "ok";
    }
}

java

package kafkademo.listener;

import com.alibaba.fastjson.JSON;

import kafkademo.pojo.User;

import lombok.extern.slf4j.Slf4j;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

@Component

@Slf4j

public class HelloListener {

@KafkaListener(topics = "topic-test") // 消费者订阅的主题

public void onMessage(String message){

User user = JSON.parseObject(message, User.class);

System.out.println(user);

}

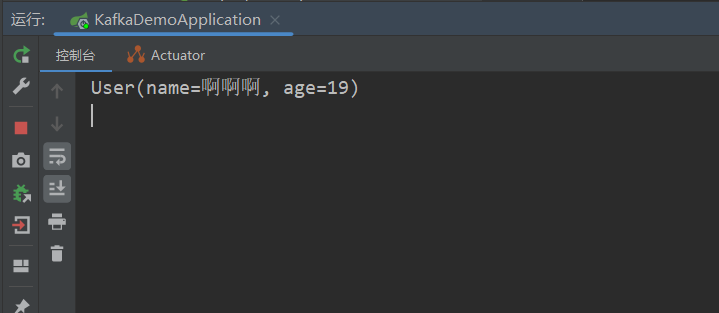

}运行结果

- Send the request

- Result
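One way to send the request from code is the JDK's built-in HTTP client (Java 11 or later). This is only a sketch: it assumes the application is running locally on port 9991 as configured above, and `HelloRequestSketch` is a hypothetical helper class, not part of the project.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

class HelloRequestSketch {

    // Builds a GET request for the /hello endpoint on the given local port.
    static HttpRequest buildRequest(int port) {
        return HttpRequest.newBuilder(URI.create("http://localhost:" + port + "/hello"))
                .GET()
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        // Requires the Spring Boot application to be running; otherwise send() throws.
        HttpResponse<String> response =
                client.send(buildRequest(9991), HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Each request makes the controller publish one JSON message to topic-test, which the listener then parses and prints on the application console.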