Acting Under Uncertainty

1 Reasoning Under Uncertainty

• Real-world problems contain uncertainties due to:

--- partial observability,

--- nondeterminism, or

--- adversaries.

• Example: dental diagnosis using propositional logic

Toothache ⇒ Cavity

• However, this rule is inaccurate: not all patients with toothaches have cavities

Toothache ⇒ Cavity ∨ GumProblem ∨ Abscess ∨ . . .

• To make the rule true, we would have to add an almost unlimited list of possible problems.

• The only way to fix the rule is to make it logically exhaustive.

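In the real world, problems often involve uncertainty because of partial observability, nondeterminism, or adversaries. For example, in dental diagnosis we can use propositional logic to state a rule such as "Toothache ⇒ Cavity". This rule is inaccurate, however, because not every patient with a toothache has a cavity.

To make the rule logically correct, we would need to add an almost unlimited list of possible conditions, e.g. "Toothache ⇒ Cavity ∨ GumProblem ∨ Abscess ...". This tries to fix the rule by making it logically exhaustive.

To handle uncertainty, we usually turn to techniques such as probabilistic reasoning or fuzzy logic, which cope better with the uncertainty and vagueness of the real world. Fuzzy logic, for instance, lets us represent and reason about vague notions such as "some" or "most", rather than a plain "yes" or "no".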

• Rational agents must choose the right thing, which depends on:

--- relative importance of the various goals

--- the likelihood that, and degree to which, goals will be achieved.

• Using logic in large domains such as medical diagnosis fails for three main reasons:

--- Laziness: it is too much work to list the complete set of logical rules

--- Theoretical ignorance: medical science has no complete theory for the domain

--- Practical ignorance: even if we know all the rules, we may still be uncertain about a particular patient because of partial observability (not all tests have been or can be run)

• Hence an agent only has a degree of belief in the relevant sentences; this is the source of uncertainty.

• Probability Theory

--- tool to deal with degrees of belief of relevant sentences.

--- summarises the uncertainty that comes from our laziness and ignorance.

• Uncertainty and rational decisions

--- An agent requires preferences among the different possible outcomes of its various plans

--- Utility Theory: quantifies how useful (desirable) each outcome is

◦ Every state has a degree of usefulness/utility

◦ Agent prefers higher utility

--- Decision Theory: Preferences (Utility Theory) combined with probabilities

◦ Decision theory = probability theory + utility theory

◦ An agent is rational if and only if it chooses the action that yields the highest expected utility, averaged over all the possible outcomes of the action.

◦ This is the principle of maximum expected utility (MEU).

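A rational agent needs to rank, by preference, the possible outcomes of its different plans. Utility Theory defines the quality of an outcome, i.e. its utility.

- Utility: every state has a degree of utility, which indicates how useful that state is to the agent.

- Preference: the agent prefers states with higher utility.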
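The MEU principle can be sketched in a few lines of Python. This is a minimal sketch under assumptions: the actions (`treat`, `wait`), the outcomes, and all probabilities and utilities are hypothetical numbers chosen for illustration, not values from these notes. Expected utility is computed as EU(a) = Σ_s P(s | a) U(s), and the agent picks the action with the largest EU.

```python
from typing import Dict, Tuple

# Hypothetical decision problem (illustrative numbers only, not from the notes):
# P(outcome | action) for each action the agent could take.
OUTCOME_PROBS: Dict[str, Dict[str, float]] = {
    "treat": {"cured": 0.8, "not_cured": 0.2},
    "wait": {"cured": 0.3, "not_cured": 0.7},
}

# Hypothetical utility of each outcome state (higher is better).
UTILITY: Dict[str, float] = {"cured": 100.0, "not_cured": 0.0}


def expected_utility(action: str) -> float:
    """EU(a) = sum over outcomes s of P(s | a) * U(s)."""
    return sum(p * UTILITY[s] for s, p in OUTCOME_PROBS[action].items())


def meu_action() -> Tuple[str, float]:
    """Return the action with maximum expected utility, together with its EU."""
    best = max(OUTCOME_PROBS, key=expected_utility)
    return best, expected_utility(best)


if __name__ == "__main__":
    for a in OUTCOME_PROBS:
        print(a, expected_utility(a))
    print("MEU choice:", meu_action())
```

With these made-up numbers, EU(treat) = 80 and EU(wait) = 30, so an MEU agent would choose treat; different utilities or probabilities could flip the choice.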

Probability Theory

• How can we deal with complex rules which are not always true?

• We extend propositional logic to deal with probabilities.

• We associate a degree of belief with each proposition.

--- P(h) = 0.5

• Here, h is a random variable.

• It has a domain of values it can take on (e.g., {true, false})

• Random variables can be

--- Boolean: as above, with domain {true, false}

--- Discrete: taking on values from some countable domain. E.g. Weather can be sunny, rainy, windy, or cloudy.

--- Continuous: taking on values from the set of real numbers.

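We can assign the proposition h a probability, P(h) = 0.5. Here h is a random variable with a domain of values it can take (e.g. {true, false}). Random variables can be Boolean, discrete, or continuous.

- Boolean random variables: as above, with domain {true, false}.

- Discrete random variables: take values from some countable domain. For example, the weather can be sunny, rainy, windy, or cloudy.

- Continuous random variables: take values from the set of real numbers.

In this way we can express uncertainty about a proposition. For example, if we are unsure whether h is true, we can set P(h) = 0.5, meaning we consider h equally likely to be true or false.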

• In logic, we had a number of possible worlds

--- one had to be true;

--- all others had to be false

• Probability theory talks about how probable each possible world is:

--- Ω (uppercase omega) refers to the sample space (the set of all possible worlds)

--- ω (lowercase omega) refers to one such world

• A fully specified probability model associates a probability P(ω) ∈ [0, 1] with each possible world

--- the probabilities of all possible worlds sum to 1: Σ_{ω ∈ Ω} P(ω) = 1

--- We can use logical formulas to specify sets of possible worlds; the probability of a formula is the sum of the probabilities of all the worlds in which it holds, so for any proposition φ,

P(φ) = Σ_{ω ∈ φ} P(ω)

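That is, we can use logical formulas to specify possible worlds and compute the probability of the worlds in which a formula holds. For any proposition φ, its probability P(φ) equals the sum of the probabilities of all worlds ω in which φ is satisfied.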
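The sum over possible worlds can be sketched for two fair dice, where Ω contains 36 equally likely worlds. This is a minimal sketch assuming exact `Fraction` arithmetic; `OMEGA` and `prob` are illustrative names, and propositions are represented as Python predicates on a world.

```python
from fractions import Fraction
from itertools import product
from typing import Callable, Dict, Tuple

World = Tuple[int, int]  # (Die1, Die2)

# Sample space Ω for two fair dice: 36 equally likely worlds, each with P(ω) = 1/36.
OMEGA: Dict[World, Fraction] = {
    (d1, d2): Fraction(1, 36) for d1, d2 in product(range(1, 7), repeat=2)
}


def prob(phi: Callable[[World], bool]) -> Fraction:
    """P(φ) = sum of P(ω) over all worlds ω in which proposition φ holds."""
    return sum((p for w, p in OMEGA.items() if phi(w)), Fraction(0))


# A fully specified model: the probabilities of all worlds sum to 1.
assert sum(OMEGA.values()) == 1

print(prob(lambda w: w[0] + w[1] == 11))  # 1/18  (worlds (5, 6) and (6, 5))
print(prob(lambda w: w[0] == w[1]))       # 1/6   (doubles: 6 of the 36 worlds)
```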

Basic Notation

• Prior, or unconditional, probabilities measure the degree of belief associated with some proposition in the absence of any other information.

• For example: P(coin = heads) = 0.5 (abbreviated P(heads) = 0.5)

• A probability distribution captures the probability of each possible value of a random variable

E.g. a fair coin, P(coin):

--- P(heads) = 0.5

--- P(tails) = 0.5

--- We write this P(coin): P(coin = h) = 0.5, P(coin = t) = 0.5

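- Prior or unconditional probabilities: these measure the degree of belief in a proposition in the absence of any other information.

- Example: P(coin = heads) = 0.5 (abbreviated P(heads) = 0.5), meaning a fair coin lands heads with probability 0.5.

- Probability distribution: a probability distribution captures the probability of each possible value of a proposition.

- Fair coin example: P(heads) = 0.5, P(tails) = 0.5.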
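A prior distribution such as P(coin) can be represented as a mapping from each value of the variable to its probability. This is a minimal sketch assuming exact `Fraction` arithmetic; `make_distribution` is a hypothetical helper that checks the two conditions a distribution must satisfy (each probability lies in [0, 1], and all of them sum to 1).

```python
from fractions import Fraction
from typing import Dict, Hashable


def make_distribution(probs: Dict[Hashable, Fraction]) -> Dict[Hashable, Fraction]:
    """Validate a prior distribution P(X): every value in [0, 1], all values summing to 1."""
    if any(p < 0 or p > 1 for p in probs.values()):
        raise ValueError("each probability must lie in [0, 1]")
    if sum(probs.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    return dict(probs)


# P(coin) for a fair coin: P(coin = h) = 0.5, P(coin = t) = 0.5
P_coin = make_distribution({"h": Fraction(1, 2), "t": Fraction(1, 2)})

# P(die) for a fair six-sided die
P_die = make_distribution({face: Fraction(1, 6) for face in range(1, 7)})

print(P_coin["h"])  # 1/2
```

Passing a table such as {"h": 1/2, "t": 1/3} raises `ValueError`, since it does not describe a complete distribution.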

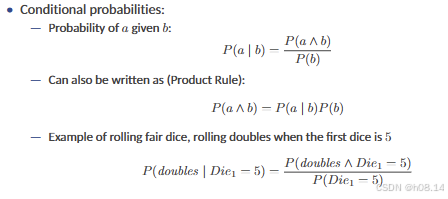

Conditional or posterior probability: the degree of belief in a new event, given evidence that has already been observed.

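Conditional or posterior probability is the degree of belief in a new event given that some evidence has already been observed.

Notation for conditional probability:

- The probability of event a given b: P(a | b) = P(a ∧ b) / P(b), defined when P(b) > 0

- This can also be written as the product rule: P(a ∧ b) = P(a | b)P(b)

For example, when rolling two fair dice, if the first die shows 5, the probability of doubles (both dice showing 5) is P(doubles | Die1 = 5) = P(doubles ∧ Die1 = 5) / P(Die1 = 5) = (1/36) / (1/6) = 1/6.

Conditional probability is a central concept for real problems because it lets us reason probabilistically about some events once other information is known. In decision making and data analysis, conditional probabilities are often used to estimate the likelihood of future events.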
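The dice example can be checked by brute-force enumeration. This is a minimal sketch assuming two fair six-sided dice with all 36 worlds equally likely; `prob`, `cond_prob`, `doubles` and `die1_is_5` are hypothetical helper names.

```python
from fractions import Fraction
from itertools import product
from typing import Callable, Tuple

World = Tuple[int, int]  # (Die1, Die2)
Proposition = Callable[[World], bool]

# Sample space for two fair dice: 36 equally likely worlds.
WORLDS = list(product(range(1, 7), repeat=2))


def prob(phi: Proposition) -> Fraction:
    """P(φ) for equally likely worlds: (# worlds where φ holds) / (# worlds)."""
    return Fraction(sum(1 for w in WORLDS if phi(w)), len(WORLDS))


def cond_prob(a: Proposition, b: Proposition) -> Fraction:
    """P(a | b) = P(a ∧ b) / P(b); only defined when P(b) > 0."""
    p_b = prob(b)
    if p_b == 0:
        raise ValueError("P(b) must be positive to condition on b")
    return prob(lambda w: a(w) and b(w)) / p_b


def doubles(w: World) -> bool:
    return w[0] == w[1]


def die1_is_5(w: World) -> bool:
    return w[0] == 5


print(cond_prob(doubles, die1_is_5))  # 1/6
```

The same helpers also confirm the product rule: P(doubles ∧ Die1 = 5) = 1/36 equals cond_prob(doubles, die1_is_5) · prob(die1_is_5) = 1/6 · 1/6.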

Joint Probability Distribution

• A joint probability distribution captures the probability distribution of a set of variables. E.g. P(coin, die)

• In the above examples, we have seen that P(a ∧ b) = P(a)P(b)

Warning: not always true!

• This only holds when a and b are independent; formally, when P(b | a) = P(b)

• E.g. a ≡ it rains today, b ≡ it rains tomorrow:

P(b | a) > P(b)

• Thus, in general: P(a ∧ b) = P(b | a)P(a) (the product rule, from the definition of conditional probability)

Symmetrically, P(a ∧ b) = P(a | b)P(b)

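A joint probability distribution captures the probability distribution of a set of variables. For example, P(coin, die) is the distribution for tossing a coin and rolling a die together.

In the earlier examples we saw the rule P(a ∧ b) = P(a)P(b), which applies to independent events. It is not always true! It holds only when a and b are independent, which mathematically means P(b | a) = P(b).

For example, consider two events: a ≡ it rains today, b ≡ it rains tomorrow. Here P(b | a), the probability that it rains tomorrow given that it rains today, is usually greater than P(b), the probability that it rains tomorrow, because rain today can make rain tomorrow more likely.

Therefore, in general, P(a ∧ b) = P(b | a)P(a) (the product rule). This also means P(a ∧ b) = P(a | b)P(b), which is the same product rule with the roles of a and b swapped.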
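Independence can be tested directly on a joint distribution table by comparing each entry with the product of its marginals. This is a minimal sketch using exact fractions; `marginals` and `is_independent` are hypothetical helpers, and the rain table is a made-up joint distribution chosen only so that P(b | a) > P(b), not real weather data.

```python
from fractions import Fraction
from itertools import product
from typing import Dict, Hashable, Tuple

Joint = Dict[Tuple[Hashable, Hashable], Fraction]


def marginals(joint: Joint):
    """Sum out each variable to get P(X) and P(Y) from the joint P(X, Y)."""
    px: Dict[Hashable, Fraction] = {}
    py: Dict[Hashable, Fraction] = {}
    for (x, y), p in joint.items():
        px[x] = px.get(x, Fraction(0)) + p
        py[y] = py.get(y, Fraction(0)) + p
    return px, py


def is_independent(joint: Joint) -> bool:
    """True iff P(x ∧ y) = P(x) P(y) for every pair of values (x, y)."""
    px, py = marginals(joint)
    return all(joint.get((x, y), Fraction(0)) == px[x] * py[y] for x in px for y in py)


# P(coin, die) for a fair coin and a fair die, built as a product (independent by construction).
coin_die: Joint = {(c, d): Fraction(1, 2) * Fraction(1, 6) for c, d in product("ht", range(1, 7))}

# Hypothetical joint for (rains today, rains tomorrow); the numbers are illustrative only.
rain: Joint = {
    (True, True): Fraction(2, 10),
    (True, False): Fraction(1, 10),
    (False, True): Fraction(1, 10),
    (False, False): Fraction(6, 10),
}

print(is_independent(coin_die))  # True
print(is_independent(rain))      # False

p_today, p_tomorrow = marginals(rain)
p_b_given_a = rain[(True, True)] / p_today[True]  # P(b | a) = P(a ∧ b) / P(a)
print(p_b_given_a, p_tomorrow[True])  # 2/3 3/10
```

With this made-up table, P(b | a) = 2/3 exceeds P(b) = 3/10, so rain today raises the belief in rain tomorrow, whereas P(coin, die) factorises exactly into P(coin)P(die).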