部署资源

- AUTODL 使用最小3080Ti 资源,cuda > 12.0

- 使用云服务器,部署fastGPT oneAPI,M3E 模型

操作步骤

-

配置代理

export HF_ENDPOINT=https://hf-mirror.com -

下载qwen2模型 - 如何下载huggingface

huggingface-cli download Qwen/Qwen2-7B-Instruct-GGUF qwen2-7b-instruct-q5_k_m.gguf --local-dir . --local-dir-use-symlinks False -

创建模型文件

FROM qwen2-7b-instruct-q5_k_m.gguf # set the temperature to 1 [higher is more creative, lower is more coherent] PARAMETER temperature 0.7 PARAMETER top_p 0.8 PARAMETER repeat_penalty 1.05 TEMPLATE """{{ if and .First .System }}<|im_start|>system {{ .System }}<|im_end|> {{ end }}<|im_start|>user {{ .Prompt }}<|im_end|> <|im_start|>assistant {{ .Response }}""" # set the system message SYSTEM """ You are a helpful assistant. """ -

导入模型

ollama create qwen2:7b -f Modelfile -

运行qwen2客户端

ollama run qwen2-7b -

运行m3e RAG模型

version: '3' services: m3e_api: container_name: m3e_api environment: TZ: Asia/Shanghai image: registry.cn-hangzhou.aliyuncs.com/fastgpt_docker/m3e-large-api:latest restart: always ports: - "6200:6008" -

运行fastAPI + oneAPI

version: '3.3' services: # db pg: image: pgvector/pgvector:0.7.0-pg15 # docker hub # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/pgvector:v0.7.0 # 阿里云 container_name: pg restart: always ports: # 生产环境建议不要暴露 - 5432:5432 networks: - fastgpt environment: # 这里的配置只有首次运行生效。修改后,重启镜像是不会生效的。需要把持久化数据删除再重启,才有效果 - POSTGRES_USER=username - POSTGRES_PASSWORD=password - POSTGRES_DB=postgres volumes: - ./pg/data:/var/lib/postgresql/data mongo: image: mongo:5.0.18 # dockerhub # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/mongo:5.0.18 # 阿里云 # image: mongo:4.4.29 # cpu不支持AVX时候使用 container_name: mongo restart: always ports: - 27017:27017 networks: - fastgpt command: mongod --keyFile /data/mongodb.key --replSet rs0 environment: - MONGO_INITDB_ROOT_USERNAME=myusername - MONGO_INITDB_ROOT_PASSWORD=mypassword volumes: - ./mongo/data:/data/db entrypoint: - bash - -c - | openssl rand -base64 128 > /data/mongodb.key chmod 400 /data/mongodb.key chown 999:999 /data/mongodb.key echo 'const isInited = rs.status().ok === 1 if(!isInited){ rs.initiate({ _id: "rs0", members: [ { _id: 0, host: "mongo:27017" } ] }) }' > /data/initReplicaSet.js # 启动MongoDB服务 exec docker-entrypoint.sh "$$@" & # 等待MongoDB服务启动 until mongo -u myusername -p mypassword --authenticationDatabase admin --eval "print('waited for connection')" > /dev/null 2>&1; do echo "Waiting for MongoDB to start..." sleep 2 done # 执行初始化副本集的脚本 mongo -u myusername -p mypassword --authenticationDatabase admin /data/initReplicaSet.js # 等待docker-entrypoint.sh脚本执行的MongoDB服务进程 wait $$! # fastgpt sandbox: container_name: sandbox image: ghcr.io/labring/fastgpt-sandbox:latest # git # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt-sandbox:latest # 阿里云 networks: - fastgpt restart: always fastgpt: container_name: fastgpt image: ghcr.io/labring/fastgpt:v4.8.9 # git # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/fastgpt:v4.8.9 # 阿里云 ports: - 3200:3000 networks: - fastgpt depends_on: - mongo - pg - sandbox restart: always environment: # root 密码,用户名为: root。如果需要修改 root 密码,直接修改这个环境变量,并重启即可。 - DEFAULT_ROOT_PSW=1234 # AI模型的API地址哦。务必加 /v1。这里默认填写了OneApi的访问地址。 - OPENAI_BASE_URL=http://oneapi:3000/v1 # AI模型的API Key。(这里默认填写了OneAPI的快速默认key,测试通后,务必及时修改) - CHAT_API_KEY=sk-fastgpt # 数据库最大连接数 - DB_MAX_LINK=30 # 登录凭证密钥 - TOKEN_KEY=any # root的密钥,常用于升级时候的初始化请求 - ROOT_KEY=root_key # 文件阅读加密 - FILE_TOKEN_KEY=filetoken # MongoDB 连接参数. 用户名myusername,密码mypassword。 - MONGODB_URI=mongodb://myusername:mypassword@mongo:27017/fastgpt?authSource=admin # pg 连接参数 - PG_URL=postgresql://username:password@pg:5432/postgres # sandbox 地址 - SANDBOX_URL=http://sandbox:3000 # 日志等级: debug, info, warn, error - LOG_LEVEL=info - STORE_LOG_LEVEL=warn volumes: - ./config.json:/app/data/config.json # oneapi mysql: # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/mysql:8.0.36 # 阿里云 image: mysql:8.0.36 container_name: mysql restart: always ports: - 3306:3306 networks: - fastgpt command: --default-authentication-plugin=mysql_native_password environment: # 默认root密码,仅首次运行有效 MYSQL_ROOT_PASSWORD: oneapimmysql MYSQL_DATABASE: oneapi volumes: - ./mysql:/var/lib/mysql oneapi: container_name: oneapi image: ghcr.io/songquanpeng/one-api:v0.6.7 # image: registry.cn-hangzhou.aliyuncs.com/fastgpt/one-api:v0.6.6 # 阿里云 ports: - 3001:3000 depends_on: - mysql networks: - fastgpt restart: always environment: # mysql 连接参数 - SQL_DSN=root:oneapimmysql@tcp(mysql:3306)/oneapi # 登录凭证加密密钥 - SESSION_SECRET=oneapikey # 内存缓存 - MEMORY_CACHE_ENABLED=true # 启动聚合更新,减少数据交互频率 - BATCH_UPDATE_ENABLED=true # 聚合更新时长 - BATCH_UPDATE_INTERVAL=10 # 初始化的 root 密钥(建议部署完后更改,否则容易泄露) - INITIAL_ROOT_TOKEN=fastgpt volumes: - ./oneapi:/data networks: fastgpt: -

编辑fastGPT 的模型配置

{

"feConfigs": {

"lafEnv": "https://laf.dev"

},

"systemEnv": {

"vectorMaxProcess": 15,

"qaMaxProcess": 15,

"pgHNSWEfSearch": 100

},

"llmModels":[

{

"model": "qwen2:7b",

"name": "qwen2",

"avatar": "/imgs/model/openai.svg",

"maxContext": 125000,

"maxResponse": 4000,

"quoteMaxToken": 120000,

"maxTemperature": 1.2,

"charsPointsPrice": 0,

"censor": false,

"vision": true,

"datasetProcess": false,

"usedInClassify": true,

"usedInExtractFields": true,

"usedInToolCall": true,

"usedInQueryExtension": true,

"toolChoice": true,

"functionCall": false,

"customCQPrompt": "",

"customExtractPrompt": "",

"defaultSystemChatPrompt": "",

"defaultConfig": {}

}

],

"vectorModels": [

{

"model": "mxbai-embed-large",

"name": "mxbai",

"avatar": "/imgs/model/openai.svg",

"charsPointsPrice": 0,

"defaultToken": 512,

"maxToken": 3000,

"weight": 100

},

{

"model": "m3e",

"name": "M3E",

"price": 0.1,

"defaultToken": 500,

"maxToken": 1800

}

],

"reRankModels": [],

"audioSpeechModels": [

{

"model": "tts-1",

"name": "OpenAI TTS1",

"charsPointsPrice": 0,

"voices": [

{ "label": "Alloy", "value": "alloy", "bufferId": "openai-Alloy" },

{ "label": "Echo", "value": "echo", "bufferId": "openai-Echo" },

{ "label": "Fable", "value": "fable", "bufferId": "openai-Fable" },

{ "label": "Onyx", "value": "onyx", "bufferId": "openai-Onyx" },

{ "label": "Nova", "value": "nova", "bufferId": "openai-Nova" },

{ "label": "Shimmer", "value": "shimmer", "bufferId": "openai-Shimmer" }

]

}

],

"whisperModel": {

"model": "whisper-1",

"name": "Whisper1",

"charsPointsPrice": 0

}

} -

打开oneapi http://ip:3001, 初始密码 root 1234, 配置qwen2 模型以及M3E模型

-

点击测试

- 注:M3E 点击测试后提示404是正常的

- 注:M3E 点击测试后提示404是正常的

-

重启fastgpt 和 oneapi

docker-compose restart fastgpt oneapi -

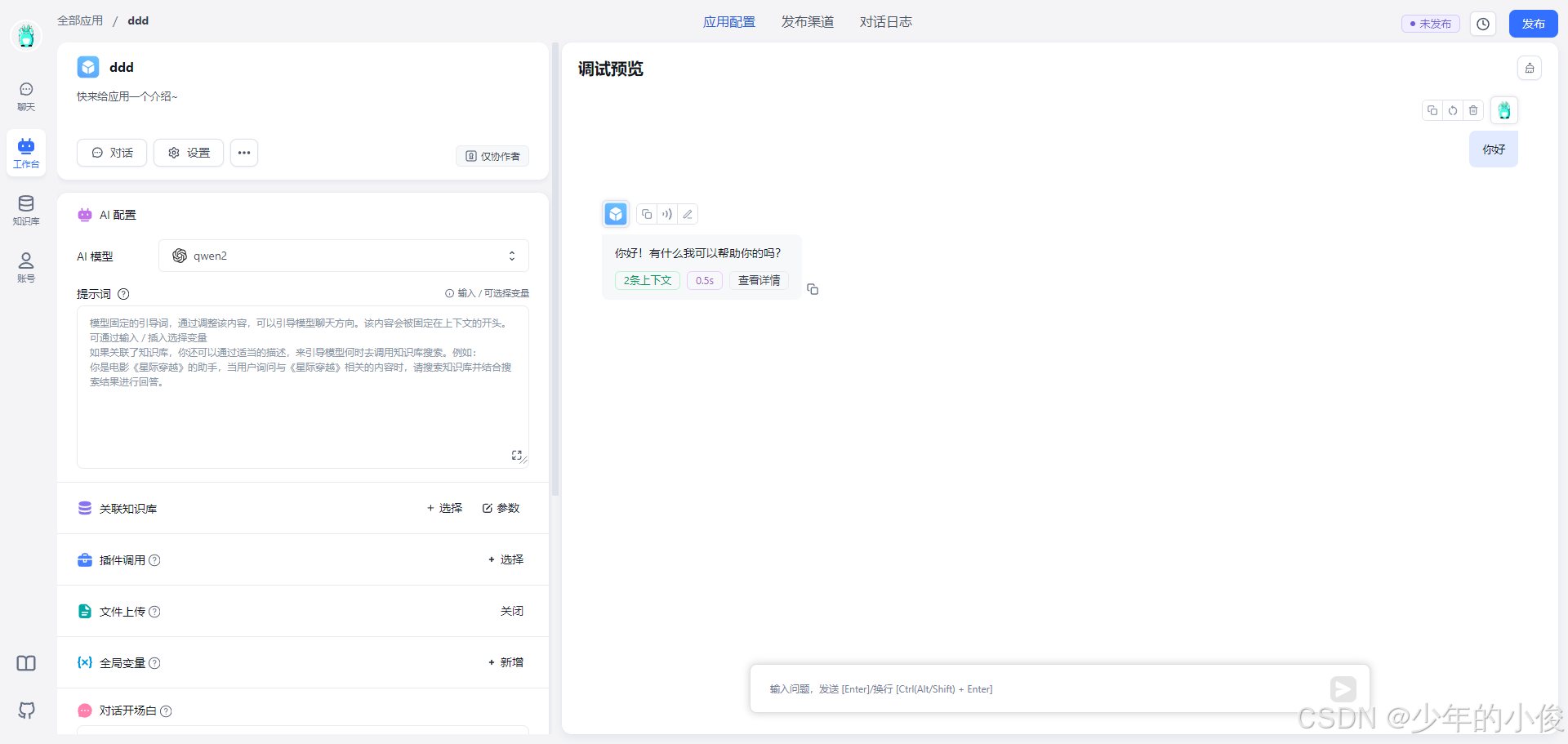

在fastgpt 中创建一个应用进行测试

-

大功告成!!!