I. Network Overview

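ResNet adds a residual learning mechanism to the network. It follows the principle that adding layers should "not make the result worse": each block only has to learn a correction on top of its own input. This mitigates vanishing gradients and the degradation in accuracy that plain networks show when they are made very deep.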

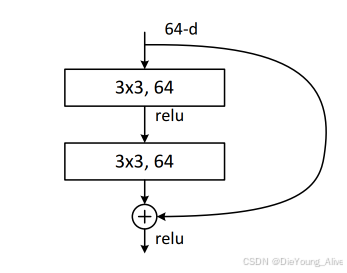

II. Residual Principle
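
As a brief sketch of the idea, here is the standard residual formulation. The notation is introduced only for illustration: $x$ is the block input, $\mathcal{F}$ is the stack of layers inside one block with weights $W_i$, and $y$ is the block output before the final ReLU.

```latex
y = \mathcal{F}(x, \{W_i\}) + x
\qquad\Longrightarrow\qquad
\frac{\partial y}{\partial x} = \frac{\partial \mathcal{F}}{\partial x} + I
```

If the best a block can do is nothing, driving $\mathcal{F}$ toward zero leaves $y = x$, so stacking one more block need not make the result worse. The identity term $I$ also gives the gradient a direct path backward through the shortcut, which is why very deep stacks remain trainable.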

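III. Source Code Walkthrough

Taking ResNet18 as an example.

1. `BasicBlock` is the basic building block of ResNet; `[2, 2, 2, 2]` is the number of times the block is repeated in each of the four stages of the network.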

```python
# When the model is built with weights != None, the pretrained checkpoint is looked up
# in the local cache and downloaded if it is not there yet (this happens inside _resnet).
def resnet18(*, weights: Optional[ResNet18_Weights] = None, progress: bool = True, **kwargs: Any) -> ResNet:
    # Validate the `weights` argument (it must be None or a ResNet18_Weights entry)
    weights = ResNet18_Weights.verify(weights)

    # Then call _resnet with (BasicBlock, [2, 2, 2, 2], weights, progress, **kwargs)
    return _resnet(BasicBlock, [2, 2, 2, 2], weights, progress, **kwargs)
```
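
To make the `[2, 2, 2, 2]` argument concrete, the following small sketch counts the weighted layers it implies. The helper `count_weighted_layers` is a hypothetical name used only for this illustration, not part of torchvision. It follows the usual counting convention: the stem convolution, the two 3x3 convolutions of every `BasicBlock`, and the final fully connected layer are counted, while the 1x1 convolutions on the shortcut are not.

```python
# Sketch: count the weighted layers implied by a stage configuration.
CONVS_PER_BASIC_BLOCK = 2  # a BasicBlock contains conv1 and conv2


def count_weighted_layers(layers, convs_per_block=CONVS_PER_BASIC_BLOCK):
    """Stem conv + convs in all residual blocks + final fully connected layer."""
    stem_conv = 1
    fc = 1
    return stem_conv + convs_per_block * sum(layers) + fc


resnet18_depth = count_weighted_layers([2, 2, 2, 2])
resnet34_depth = count_weighted_layers([3, 4, 6, 3])  # ResNet34 also uses BasicBlock
print(resnet18_depth, resnet34_depth)
```

The same block type with a different repeat list gives a different depth, which is why `resnet18` only needs to pass a block class and a list of four integers to `_resnet`.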

2. Entering the `_resnet` function

```python
def _resnet(
    block: Type[Union[BasicBlock, Bottleneck]],
    layers: List[int],
    weights: Optional[WeightsEnum],
    progress: bool,
    **kwargs: Any,
) -> ResNet:
    if weights is not None:
        # When weights are given, set num_classes to the number of
        # categories recorded in the weights' metadata
        _ovewrite_named_param(kwargs, "num_classes", len(weights.meta["categories"]))

    # Build the model
    model = ResNet(block, layers, **kwargs)

    if weights is not None:
        # Load the pretrained parameters into the model
        model.load_state_dict(weights.get_state_dict(progress=progress))

    # Return the model
    return model
```

3. Initialize the model: execution enters the `ResNet` class with `(block, layers, **kwargs)`, where `block` is `BasicBlock` and `layers` is `[2, 2, 2, 2]`.

```python
class ResNet(nn.Module):
    def __init__(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],
        layers: List[int],
        num_classes: int = 1000,
        zero_init_residual: bool = False,
        groups: int = 1,
        width_per_group: int = 64,
        replace_stride_with_dilation: Optional[List[bool]] = None,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        _log_api_usage_once(self)
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        self._norm_layer = norm_layer

        self.inplanes = 64  # number of input channels for the first residual stage
        self.dilation = 1
        if replace_stride_with_dilation is None:
            replace_stride_with_dilation = [False, False, False]
        self.groups = groups
        self.base_width = width_per_group

        # Stem: processes the input image
        # 7x7 convolution over the input image: 3 channels in, 64 channels out
        self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2, padding=3, bias=False)
        self.bn1 = norm_layer(self.inplanes)  # BatchNorm2d by default
        self.relu = nn.ReLU(inplace=True)  # ReLU activation
        self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)  # overlapping pooling (kernel 3 > stride 2)

        # Core layers: build the main body of the ResNet model
        # layer1: _make_layer(BasicBlock, 64, 2); execution jumps into _make_layer
        self.layer1 = self._make_layer(block, 64, layers[0])
        # layer2: _make_layer(BasicBlock, 128, 2, stride=2, dilate=False); jumps into _make_layer again
        self.layer2 = self._make_layer(block, 128, layers[1], stride=2, dilate=replace_stride_with_dilation[0])
        self.layer3 = self._make_layer(block, 256, layers[2], stride=2, dilate=replace_stride_with_dilation[1])
        self.layer4 = self._make_layer(block, 512, layers[3], stride=2, dilate=replace_stride_with_dilation[2])
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.fc = nn.Linear(512 * block.expansion, num_classes)

        # Weight initialization
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                nn.init.kaiming_normal_(m.weight, mode="fan_out", nonlinearity="relu")
            elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                nn.init.constant_(m.weight, 1)
                nn.init.constant_(m.bias, 0)

        # Optionally zero the last norm layer of every block,
        # so that each residual branch starts out contributing nothing
        if zero_init_residual:
            for m in self.modules():
                if isinstance(m, Bottleneck) and m.bn3.weight is not None:
                    nn.init.constant_(m.bn3.weight, 0)  # type: ignore[arg-type]
                elif isinstance(m, BasicBlock) and m.bn2.weight is not None:
                    nn.init.constant_(m.bn2.weight, 0)  # type: ignore[arg-type]

    # The inline values below follow two calls:
    #   layer1: _make_layer(BasicBlock, 64, 2)
    #   layer2: _make_layer(BasicBlock, 128, 2, stride=2, dilate=False)
    def _make_layer(
        self,
        block: Type[Union[BasicBlock, Bottleneck]],  # BasicBlock
        planes: int,  # layer2: 128
        blocks: int,  # 2
        stride: int = 1,  # layer2: 2
        dilate: bool = False,
    ) -> nn.Sequential:
        norm_layer = self._norm_layer  # normalization layer stored on the model
        downsample = None  # no downsampling branch by default
        previous_dilation = self.dilation  # dilation rate, 1 by default

        if dilate:  # False for ResNet18, so this branch is skipped
            self.dilation *= stride
            stride = 1

        # layer1: skipped (stride == 1 and 64 == 64 * 1).
        # layer2: stride == 2, so a downsampling shortcut is built:
        #         1x1 convolution, 64 channels in, 128 out, stride 2.
        if stride != 1 or self.inplanes != planes * block.expansion:
            downsample = nn.Sequential(
                conv1x1(self.inplanes, planes * block.expansion, stride),
                norm_layer(planes * block.expansion),
            )

        # Build the body of the stage
        layers = []  # list that collects the blocks of this stage
        layers.append(
            # First block of the stage; see BasicBlock.__init__ for how the arguments are used
            block(
                self.inplanes, planes, stride, downsample, self.groups, self.base_width, previous_dilation, norm_layer
            )
        )
        # layer1: input channels stay at 64 * 1; layer2: input channels become 128
        self.inplanes = planes * block.expansion
        for _ in range(1, blocks):  # blocks = 2, so one more block is added
            layers.append(
                block(
                    self.inplanes,  # layer2: 128
                    planes,  # layer2: 128
                    groups=self.groups,
                    base_width=self.base_width,
                    dilation=self.dilation,
                    norm_layer=norm_layer,
                )
            )

        # Unpack the list into a Sequential container and return the stage.
        # Next, see the BasicBlock class.
        return nn.Sequential(*layers)
```
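
The strides and paddings above determine the feature-map size at every stage. The sketch below traces them for a 224x224 input (a typical ImageNet input size, assumed here) using the standard convolution output-size formula. The helper names `conv_out` and `trace_resnet18` are made up for this illustration. Only the first block of each stage applies the stage stride, so one formula evaluation per stage is enough.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a conv/pool along one spatial dimension (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_resnet18(size=224):
    """Return (name, channels, spatial size) after the stem and each stage."""
    trace = []
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    trace.append(("conv1", 64, size))
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    trace.append(("maxpool", 64, size))
    stages = [("layer1", 64, 1), ("layer2", 128, 2),
              ("layer3", 256, 2), ("layer4", 512, 2)]
    for name, planes, stride in stages:
        # First 3x3 conv of the stage (padding 1) carries the stage stride;
        # all later convs in the stage use stride 1 and keep the size.
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        trace.append((name, planes, size))
    return trace


shapes = trace_resnet18(224)
for name, channels, size in shapes:
    print(f"{name}: {channels} x {size} x {size}")
```

Each stride-2 stage halves the spatial size while `planes` doubles the channel count, which is exactly the case where `_make_layer` has to build the 1x1 `downsample` shortcut so that the two tensors being added have the same shape.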

```python
class BasicBlock(nn.Module):
    expansion: int = 1  # channel expansion factor: output channels = planes * expansion

    def __init__(
        self,
        inplanes: int,
        planes: int,
        stride: int = 1,
        downsample: Optional[nn.Module] = None,
        groups: int = 1,
        base_width: int = 64,
        dilation: int = 1,
        norm_layer: Optional[Callable[..., nn.Module]] = None,
    ) -> None:
        super().__init__()
        if norm_layer is None:
            norm_layer = nn.BatchNorm2d
        if groups != 1 or base_width != 64:
            raise ValueError("BasicBlock only supports groups=1 and base_width=64")
        if dilation > 1:
            raise NotImplementedError("Dilation > 1 not supported in BasicBlock")

        # The values below are for layer1, built by _make_layer(BasicBlock, 64, 2)
        self.conv1 = conv3x3(inplanes, planes, stride)  # 3x3 convolution: 64 in, 64 out, stride 1
        self.bn1 = norm_layer(planes)  # normalization layer
        self.relu = nn.ReLU(inplace=True)  # activation
        self.conv2 = conv3x3(planes, planes)  # 3x3 convolution: 64 in, 64 out, stride 1
        self.bn2 = norm_layer(planes)  # normalization layer
        self.downsample = downsample  # None unless the shortcut must change shape
        self.stride = stride  # 1 by default

    def forward(self, x: Tensor) -> Tensor:
        identity = x  # keep the input for the shortcut

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.relu(out)

        out = self.conv2(out)
        out = self.bn2(out)

        if self.downsample is not None:
            # Match the shortcut to the shape of the residual branch
            identity = self.downsample(x)

        out += identity  # residual connection
        out = self.relu(out)

        # Control returns to _make_layer; the remaining stages are built
        # the same way, which yields the final ResNet model.
        return out
```

IV. Model Characteristics

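At the fully connected output, ResNet drops the dropout that earlier networks such as AlexNet and VGG placed before their classifier. The features are global-average-pooled (`avgpool`) and passed through a single fully connected layer (`fc`), whose output is returned directly as the class scores.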