Contents

- 1. Introduction to Docker Swarm
- 2. Docker Swarm concepts and architecture
  - 2.1 Architecture
  - 2.2 Concepts
- 3. Deploying a Docker Swarm cluster
  - 3.1 Preparing the Harbor container image registry
  - 3.2 Host preparation
    - 3.2.1 Hostnames
    - 3.2.2 IP addresses
    - 3.2.3 Hostname and IP address resolution
    - 3.2.4 Host time synchronization
    - 3.2.5 Host security settings
  - 3.3 Installing Docker
    - 3.3.1 Installing Docker
    - 3.3.2 Configuring the Docker daemon to use Harbor
  - 3.4 Initializing the Docker Swarm cluster
    - 3.4.1 Getting help for docker swarm commands
    - 3.4.2 Initializing on a manager node
    - 3.4.3 Adding worker nodes to the cluster
    - 3.4.4 Adding manager nodes to the cluster
    - 3.4.5 Simulating a manager node failure
      - 3.4.5.1 Stopping the Docker service and checking the result
      - 3.4.5.2 Starting the Docker service and checking the result
- 4. Using the Docker Swarm cluster
  - 4.1 Preparing container images
    - 4.1.1 Version v1
    - 4.1.2 Version v2
  - 4.2 Publishing a service
    - 4.2.1 Listing services with `docker service ls`
    - 4.2.2 Publishing a service
    - 4.2.3 Viewing the published service
    - 4.2.4 Viewing the published service's containers
    - 4.2.5 Accessing the published service
  - 4.3 Scaling out a service
  - 4.4 Scaling in a service
  - 4.5 Load balancing
  - 4.6 Removing a service
  - 4.7 Updating the service version
  - 4.8 Rolling back the service version
  - 4.9 Rolling updates with an update interval
  - 4.10 Replica controller
  - 4.11 Publishing a service on a specified network
  - 4.12 Service network modes
  - 4.13 Persistent storage for service data
    - 4.13.1 Local storage
      - 4.13.1.1 Creating a local directory on every cluster host
      - 4.13.1.2 Mounting the local directory into containers when publishing the service
      - 4.13.1.3 Verifying that the local directory is used
    - 4.13.2 Network storage
      - 4.13.2.1 Deploying NFS storage
      - 4.13.2.2 Installing nfs-utils on all cluster hosts
      - 4.13.2.3 Creating the storage volume
      - 4.13.2.4 Publishing the service
      - 4.13.2.5 Verification
  - 4.14 Service interconnection and service discovery
  - 4.15 Docker Swarm networking
- 5. docker stack
  - 5.1 Introduction to docker stack
  - 5.2 Differences between docker stack and docker compose
  - 5.3 Common docker stack commands
  - 5.4 Example: deploying WordPress
  - 5.5 Example: deploying nginx and a web management service
  - 5.6 Example: nginx + haproxy + nfs

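1. Introduction to Docker Swarm

Docker Swarm is Docker's official cluster management tool. It abstracts a group of Docker hosts into a single whole and manages the Docker resources on all of those hosts through one entry point.

Swarm is similar to Kubernetes, but it is lighter and has fewer features.

- A cluster management tool for Docker hosts
- Provided officially by Docker
- Available since Docker 1.12 (built into Docker Engine as swarm mode)
- Manages the cluster centrally and schedules the resources of the whole cluster together
- More lightweight than Kubernetes
- Scaling: scales services out or in
- Rolling updates and version rollback
- Service discovery
- Load balancing
- Routing mesh and service governance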

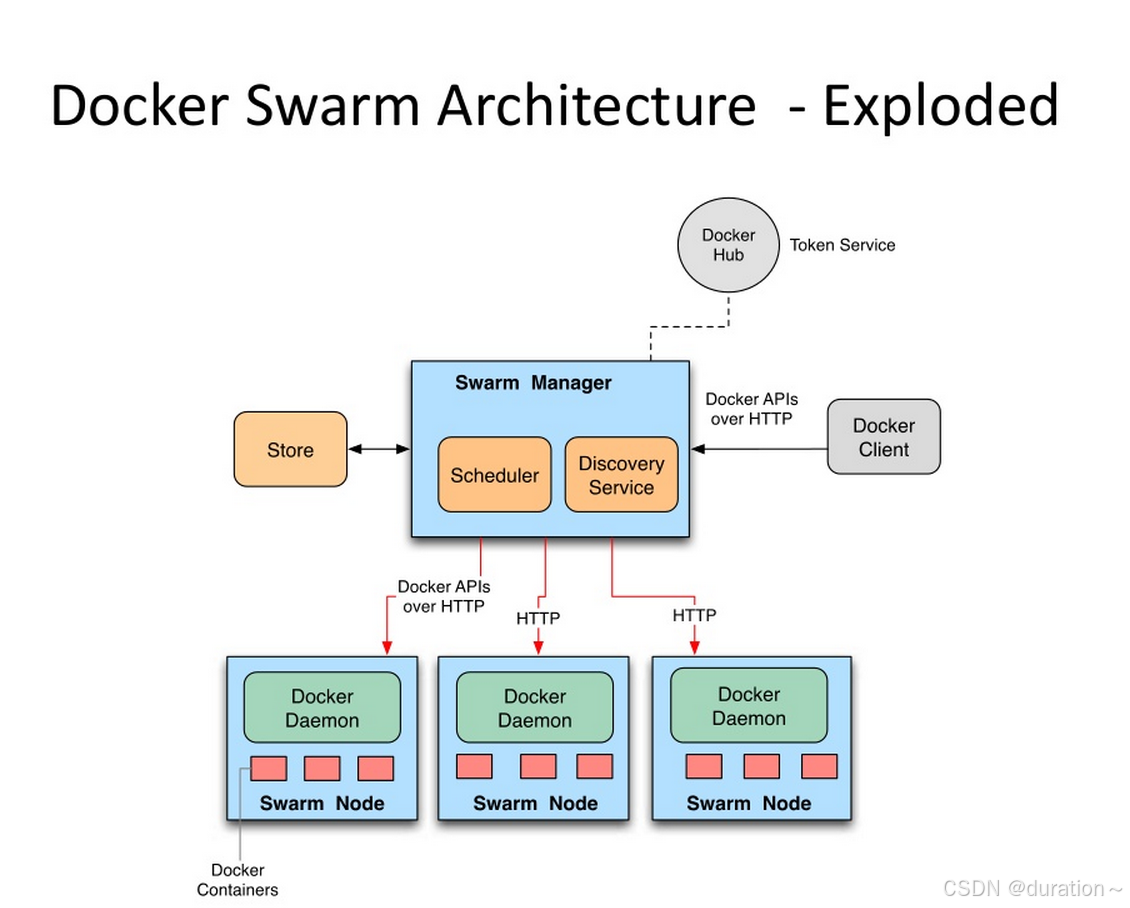

2、docker swarm概念与架构

参考网址:https://docs.docker.com/swarm/overview/

2.1 架构

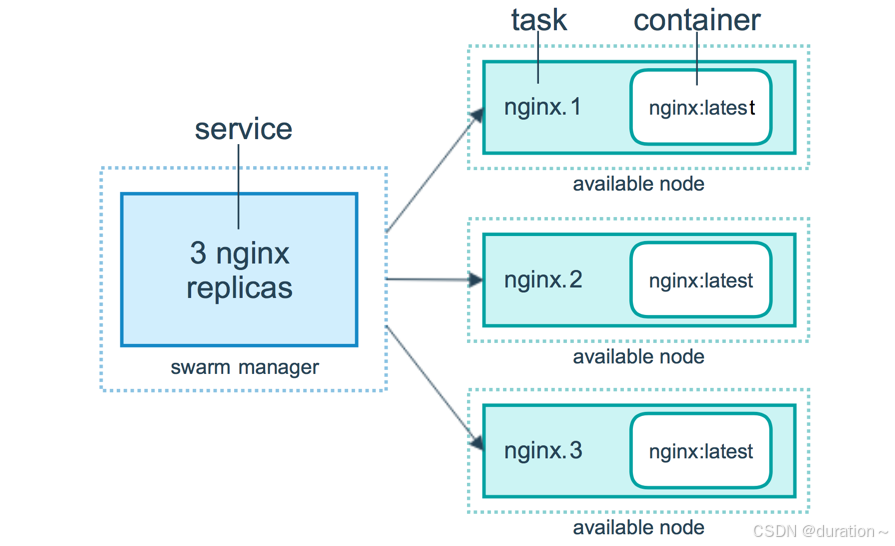

2.2 概念

节点 (node): 就是一台docker host上面运行了docker engine.节点分为两类:

- 管理节点(manager node) 负责管理集群中的节点并向工作节点分配任务

- 工作节点(worker node) 接收管理节点分配的任务,运行任务

可以使用如下命令查看节点

shell

docker node ls服务(services): 在工作节点运行的,由多个任务共同组成

shell

docker service ls任务(task): 运行在工作节点上容器或容器中包含应用,是集群中调度最小管理单元

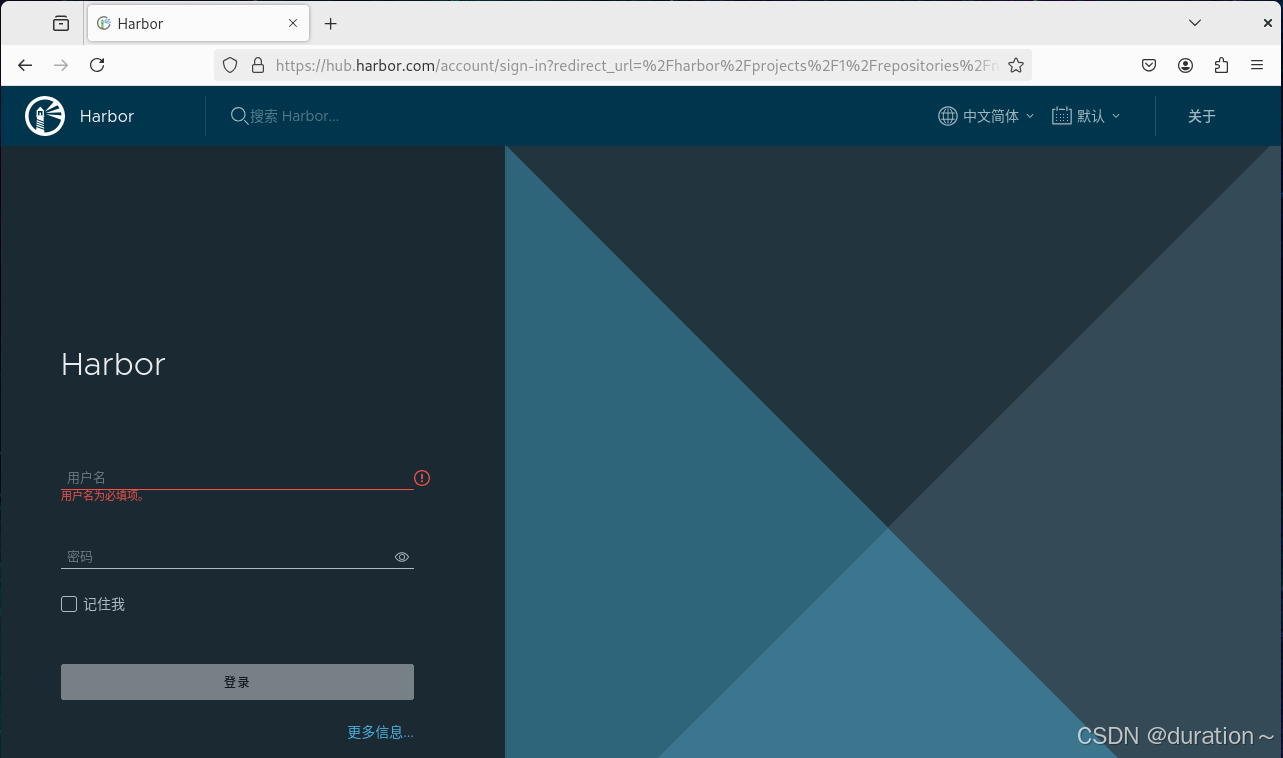

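3. Deploying a Docker Swarm cluster

This deploys a cluster with 3 manager nodes and 2 worker nodes. A local container image registry server (Harbor) also needs to be prepared in advance.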
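Why three managers: swarm managers keep the cluster state with the Raft consensus algorithm, which needs a majority (quorum) of managers to be reachable. A swarm with N managers can therefore lose at most (N-1)/2 of them, so three managers survive the loss of one. Section 3.4.5 simulates exactly that failure. The sketch below only does the arithmetic. `quorum` and `tolerated_failures` are illustrative helper names, not Docker commands.

```shell
#!/usr/bin/env bash
# Raft quorum arithmetic for swarm managers (illustrative helpers, not Docker commands).

# quorum N: number of managers that must stay reachable (a strict majority).
quorum() {
  local n=$1
  echo $(( n / 2 + 1 ))
}

# tolerated_failures N: how many managers can be lost while quorum is kept.
tolerated_failures() {
  local n=$1
  echo $(( (n - 1) / 2 ))
}

for n in 1 2 3 4 5 7; do
  printf 'managers=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(quorum "$n")" "$(tolerated_failures "$n")"
done
```

Four managers tolerate no more failures than three do, because the quorum also grows. This is why an odd number of managers is the usual choice.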

3.1 Preparing the Harbor container image registry

3.2 Host preparation

3.2.1 Hostnames

Set the hostname, replacing xxx with the node's name from the plan below:

```shell
hostnamectl set-hostname xxx
```

Hostname plan:

```
sm1 manager node 1
sm2 manager node 2
sm3 manager node 3
sw1 worker node 1
sw2 worker node 2
```

3.2.2 IP addresses

Edit the NIC configuration file:

```shell
vim /etc/sysconfig/network-scripts/ifcfg-ens33
```

Contents:

```shell
TYPE="Ethernet"
PROXY_METHOD="none"
BROWSER_ONLY="no"
BOOTPROTO="static"
DEFROUTE="yes"
IPV4_FAILURE_FATAL="no"
IPV6INIT="yes"
IPV6_AUTOCONF="yes"
IPV6_DEFROUTE="yes"
IPV6_FAILURE_FATAL="no"
IPV6_ADDR_GEN_MODE="stable-privacy"
NAME="ens33"
DEVICE="ens33"
ONBOOT="yes"
IPADDR="192.168.150.xxx"
PREFIX="24"
GATEWAY="192.168.150.2"
DNS1="192.168.150.2"
```

Address plan:

```
sm1 manager node 1 192.168.150.108
sm2 manager node 2 192.168.150.111
sm3 manager node 3 192.168.150.112
sw1 worker node 1 192.168.150.110
sw2 worker node 2 192.168.150.109
```

3.2.3 Hostname and IP address resolution

On each host, edit the /etc/hosts file and add the hostname entries:
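As an alternative to editing the file by hand on five machines, you can append the entries with a small script that skips any line already present. This makes it safe to run more than once. The sketch below is only an example: `HOSTS_FILE`, `add_host_entry` and `add_cluster_hosts` are names invented for it. Source the script as root, then run `add_cluster_hosts`.

```shell
#!/usr/bin/env bash
# Append hostname entries only if the exact line is not present yet,
# so the script can be re-run safely. HOSTS_FILE defaults to /etc/hosts.
HOSTS_FILE=${HOSTS_FILE:-/etc/hosts}

# add_host_entry IP NAME: append "IP NAME" unless that exact line already exists.
add_host_entry() {
  local ip=$1 name=$2
  local line="$ip $name"
  if ! grep -qxF "$line" "$HOSTS_FILE" 2>/dev/null; then
    echo "$line" >> "$HOSTS_FILE"
  fi
}

# The cluster addresses used in this article.
add_cluster_hosts() {
  add_host_entry 192.168.150.108 sm1
  add_host_entry 192.168.150.111 sm2
  add_host_entry 192.168.150.112 sm3
  add_host_entry 192.168.150.110 sw1
  add_host_entry 192.168.150.109 sw2
}
```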

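```shell
vim /etc/hosts
```

Add the following lines:

```
192.168.150.108 sm1
192.168.150.111 sm2
192.168.150.112 sm3
192.168.150.110 sw1
192.168.150.109 sw2
```

3.2.4 Host time synchronization

Add a cron job that synchronizes the clock with time1.aliyun.com (this requires the ntpdate command):

```shell
crontab -e
```

The cron job after it has been added:

```shell
[root@localhost ~]# crontab -l
0 */1 * * * ntpdate time1.aliyun.com
```

3.2.5 Host security settings

Disable the firewall:

```shell
systemctl stop firewalld
systemctl disable firewalld
```

Change the SELinux configuration file non-interactively:

```shell
sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
```

Reboot all hosts:

```shell
reboot
```

After the reboot, verify that SELinux is disabled:

```shell
sestatus
```

3.3 Installing Docker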

3.3.1 Installing Docker

Download the YUM repository file:

```shell
wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
```

Install docker-ce:

```shell
yum -y install docker-ce
```

Enable the Docker service at boot and start it:

```shell
systemctl enable docker
systemctl start docker
```

3.3.2 Configuring the Docker daemon to use Harbor

Add name resolution for the Harbor domain:

```shell
echo "192.168.150.145 hub.harbor.com" >> /etc/hosts
```

Edit the daemon.json file so that the Docker daemon can use Harbor:

```shell
vim /etc/docker/daemon.json
```

Mark the private registry as insecure:

```json
{
  "insecure-registries": ["hub.harbor.com"]
}
```

Restart the Docker service:

```shell
systemctl restart docker
```

Log in to Harbor:

```shell
[root@localhost ~]# docker login hub.harbor.com
Username: admin
Password: 123456
WARNING! Your password will be stored unencrypted in /root/.docker/config.json.
Configure a credential helper to remove this warning. See
https://docs.docker.com/engine/reference/commandline/login/#credentials-store
Login Succeeded
```

3.4 Initializing the Docker Swarm cluster

3.4.1 Getting help for docker swarm commands

Show the usage help for the docker swarm command:

```shell
[root@localhost ~]# docker swarm --help
Usage: docker swarm COMMAND
Manage Swarm
Commands:
  ca Display and rotate the root CA
  init Initialize a swarm
  join Join a swarm as a node and/or manager
  join-token Manage join tokens
  leave Leave the swarm
  unlock Unlock swarm
  unlock-key Manage the unlock key
  update Update the swarm
```

This full list was only shown in versions before 20.10.12. On current versions, only init and join are listed.

3.4.2 Initializing on a manager node

Show the help for `docker swarm init`:

```shell
[root@sm1 ~]# docker swarm init --help
Usage: docker swarm init [OPTIONS]
Initialize a swarm
Options:
  --advertise-addr string Advertised address (format: "<ip|interface>[:port]")
  --autolock Enable manager autolocking (requiring an unlock key to start a stopped manager)
  --availability string Availability of the node ("active", "pause", "drain") (default "active")
  --cert-expiry duration Validity period for node certificates (ns|us|ms|s|m|h) (default 2160h0m0s)
  --data-path-addr string Address or interface to use for data path traffic (format: "<ip|interface>")
  --data-path-port uint32 Port number to use for data path traffic (1024 - 49151). If no value is set or is set to 0, the default port (4789) is used.
  --default-addr-pool ipNetSlice default address pool in CIDR format (default [])
  --default-addr-pool-mask-length uint32 default address pool subnet mask length (default 24)
  --dispatcher-heartbeat duration Dispatcher heartbeat period (ns|us|ms|s|m|h) (default 5s)
  --external-ca external-ca Specifications of one or more certificate signing endpoints
  --force-new-cluster Force create a new cluster from current state
  --listen-addr node-addr Listen address (format: "<ip|interface>[:port]") (default 0.0.0.0:2377)
  --max-snapshots uint Number of additional Raft snapshots to retain
  --snapshot-interval uint Number of log entries between Raft snapshots (default 10000)
  --task-history-limit int Task history retention limit (default 5)
```

In this example the cluster is initialized on sm1:

```shell
[root@sm1 ~]# docker swarm init --advertise-addr 192.168.150.108 --listen-addr 192.168.150.108:2377
Swarm initialized: current node (3vny5618cymqu3se2u6hrm8dv) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-0vpzhptq7kh7qk1p6jvgxoew6 192.168.150.108:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.
```

Notes:

- `--advertise-addr`: when the host has several NICs, this selects the address to advertise. Other nodes use it to connect to the manager node.
- `--listen-addr`: the address the node listens on to carry cluster traffic.

3.4.3 Adding worker nodes to the cluster

Join the cluster with the token generated during initialization:

```shell
[root@sw1 ~]# docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-0vpzhptq7kh7qk1p6jvgxoew6 192.168.150.108:2377
This node joined a swarm as a worker.
```

Check the nodes that have joined:

```shell
[root@sm1 ~]# docker node ls
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
3vny5618cymqu3se2u6hrm8dv * sm1 Ready Active Leader 26.1.4
yr60hl09ykkm9pgeclsxogsti sw1 Ready Active 26.1.4
```

If you no longer have the join command, you can print it again on a manager. Join tokens do not expire on their own, so this command returns the same token as before. Running `docker swarm join-token --rotate worker` would issue a new token and invalidate the old one.

```shell
[root@sm1 ~]# docker swarm join-token worker
To add a worker to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-0vpzhptq7kh7qk1p6jvgxoew6 192.168.150.108:2377
```

Join sw2 to the cluster:

```shell
[root@sw2 ~]# docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-0vpzhptq7kh7qk1p6jvgxoew6 192.168.150.108:2377
This node joined a swarm as a worker.
```

Check the node status:

```shell
[root@sm1 ~]# docker node ls
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
3vny5618cymqu3se2u6hrm8dv * sm1 Ready Active Leader 26.1.4
yr60hl09ykkm9pgeclsxogsti sw1 Ready Active 26.1.4
4ezg4jqp7glqnk3fq8qyeyq3k sw2 Ready Active 26.1.4
```

3.4.4 Adding manager nodes to the cluster

Generate the token that manager nodes use to join the cluster:

```shell
[root@sm1 ~]# docker swarm join-token manager
To add a manager to this swarm, run the following command:

    docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-a1x8y2gsn1sidq18k6c99uqys 192.168.150.108:2377
```

Join manager node 2 to the cluster:

```shell
[root@sm2 ~]# docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-a1x8y2gsn1sidq18k6c99uqys 192.168.150.108:2377
This node joined a swarm as a manager.
```

Join manager node 3 to the cluster:

```shell
[root@sm3 ~]# docker swarm join --token SWMTKN-1-5jo6zl6w5jxfldy621p5b6j2y6wlisiyx3hh7regommro7llaw-a1x8y2gsn1sidq18k6c99uqys 192.168.150.108:2377
This node joined a swarm as a manager.
```

Check the node status:

```shell
[root@sm1 ~]# docker node ls
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
3vny5618cymqu3se2u6hrm8dv * sm1 Ready Active Leader 26.1.4
tryv3pguoblxpobx6ahhz1z9y sm2 Ready Active Reachable 26.1.4
sgdtlqzl3sskqyjx7alqqsb28 sm3 Ready Active Reachable 26.1.4
yr60hl09ykkm9pgeclsxogsti sw1 Ready Active 26.1.4
4ezg4jqp7glqnk3fq8qyeyq3k sw2 Ready Active 26.1.4
```

3.4.5 Simulating a manager node failure

3.4.5.1 Stopping the Docker service and checking the result

Stop the Docker service on manager node sm1:

```shell
systemctl stop docker
```

Check the node status from sm3. sm1 is unreachable and its status is Unknown, and a new leader (sm3) has been elected:

```shell
[root@sm3 ~]# docker node ls
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
3vny5618cymqu3se2u6hrm8dv sm1 Unknown Active Unreachable 26.1.4
tryv3pguoblxpobx6ahhz1z9y sm2 Ready Active Reachable 26.1.4
sgdtlqzl3sskqyjx7alqqsb28 * sm3 Ready Active Leader 26.1.4
yr60hl09ykkm9pgeclsxogsti sw1 Ready Active 26.1.4
4ezg4jqp7glqnk3fq8qyeyq3k sw2 Ready Active 26.1.4
```

3.4.5.2 Starting the Docker service and checking the result

Start the Docker service on sm1 again:

```shell
systemctl start docker
```

sm1 is reachable again, but it is no longer the leader:

```shell
[root@sm1 ~]# docker node ls
ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS ENGINE VERSION
3vny5618cymqu3se2u6hrm8dv * sm1 Ready Active Reachable 26.1.4
tryv3pguoblxpobx6ahhz1z9y sm2 Ready Active Reachable 26.1.4
sgdtlqzl3sskqyjx7alqqsb28 sm3 Ready Active Leader 26.1.4
yr60hl09ykkm9pgeclsxogsti sw1 Ready Active 26.1.4
4ezg4jqp7glqnk3fq8qyeyq3k sw2 Ready Active 26.1.4
```

4. Using the Docker Swarm cluster

4.1 容器镜像准备

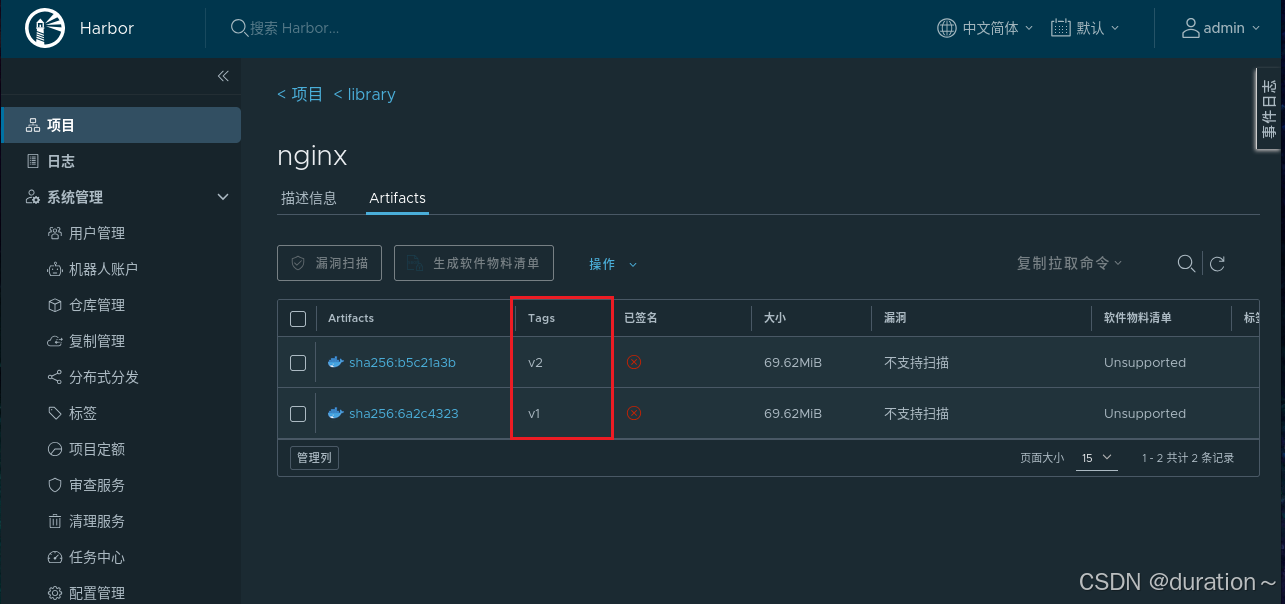

准备多个版本的容器镜像,以便于后期使用测试,在harbor服务器中操作。

4.1.1 v1版本

创建新的工作目录:

shell

mkdir nginxing

cd nginxing生成网站文件v1版

shell

vim index.html # 添加内容 v1编写Dockerfile文件,用于构建容器镜像,vim Dockerfile:

dockerfile

FROM nginx:latest

MAINTAINER 'tom<tom@docker.com>'

ADD index.html /usr/share/nginx/html

RUN echo "daemon off;" >> /etc/nginx/nginx.conf

EXPOSE 80

CMD /usr/sbin/nginx使用docker build构建容器镜像v1

powershell

docker build -t hub.harbor.com/library/nginx:v1 .登录harbor

powershell

[root@harbor nginxing]# docker hub.harbor.com

Username: admin

Password: 12345推送容器镜像v1至harbor

shell

docker push hub.harbor.com/library/nginx:v14.1.2 v2版本

生成v2版本时,和v1流程一样,只不过将index.html文件中的内容变为v2,打tag标记和推送时使用nginx:v2。
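Both versions can also be prepared from a script rather than by repeating the steps by hand. The following is a minimal sketch that assumes one build directory per version. `REGISTRY`, `prepare_context` and `build_and_push` are hypothetical names. The Dockerfile uses `LABEL` rather than the deprecated `MAINTAINER`, but otherwise it mirrors the one in 4.1.1.

```shell
#!/usr/bin/env bash
# Prepare one build context per image version (index.html + Dockerfile) and,
# optionally, build and push it. Directory names are assumptions for this sketch.
REGISTRY=${REGISTRY:-hub.harbor.com/library}

# prepare_context VERSION DIR: write index.html (containing VERSION) and a Dockerfile into DIR.
prepare_context() {
  local version=$1 dir=$2
  mkdir -p "$dir"
  echo "$version" > "$dir/index.html"
  cat > "$dir/Dockerfile" <<'EOF'
FROM nginx:latest
LABEL maintainer="tom<tom@docker.com>"
COPY index.html /usr/share/nginx/html/
RUN echo "daemon off;" >> /etc/nginx/nginx.conf
EXPOSE 80
CMD ["/usr/sbin/nginx"]
EOF
}

# build_and_push VERSION DIR: needs docker and a prior "docker login hub.harbor.com".
build_and_push() {
  local version=$1 dir=$2
  docker build -t "$REGISTRY/nginx:$version" "$dir" &&
    docker push "$REGISTRY/nginx:$version"
}

# Usage on the Harbor host:
#   for v in v1 v2; do prepare_context "$v" "nginxing-$v" && build_and_push "$v" "nginxing-$v"; done
```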

4.2 发布服务

在docker swarm中,对外暴露的是服务(service),而不是容器。

为了保持高可用架构,它准许同时启动多个容器共同支撑一个服务,如果一个容器挂了,它会自动使用另一个容器

4.2.1 使用docker service ls查看服务

在管理节点(manager node)上操作

powershell

[root@sm1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS4.2.2 发布服务

docker service create发布服务,其他相关命令使用docker service --help查看

powershell

[root@sm1 ~]# docker service create --name nginx-svc-1 --replicas 1 --publish 80:80 hub.harbor.com/library/nginx:v1

jiibbvhsugclfbq5vz4sfpg7o

overall progress: 1 out of 1 tasks

1/1: running [==================================================>]

verify: Service jiibbvhsugclfbq5vz4sfpg7o converged 说明

- 创建一个服务,名为

nginx_svc-1 --replicas 1指定1个副本--publish 80:80将服务内部的80端口发布到外部网络的80端口- 使用的镜像为

hub.harbor.com/library/nginx:v1

4.2.3 查看已发布服务

查看服务

powershell

[root@sm1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

jiibbvhsugcl nginx-svc-1 replicated 1/1 hub.harbor.com/library/nginx:v1 *:80->80/tcp4.2.4 查看已发布服务容器

发现运行在了管理节点sm2上,看来worker和manger的关系并不是很严格

powershell

[root@sm2 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

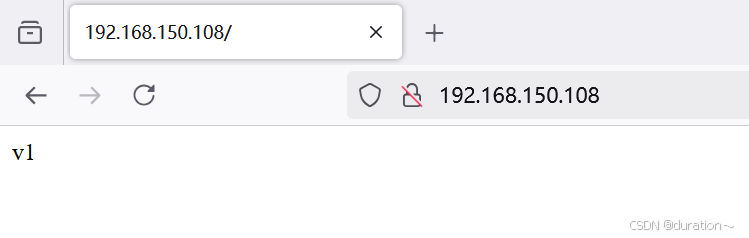

6250612e77ee hub.harbor.com/library/nginx:v1 "/docker-entrypoint...." 20 minutes ago Up 20 minutes 80/tcp nginx-svc-1.1.nr46gcgughgyy4g9ov6dsxhu04.2.5 访问已发布的服务

所有节点都能访问到

powershell

[root@sm1 ~]# curl http://192.168.150.108

v1

[root@sm1 ~]# curl http://192.168.150.109

v1

[root@sm1 ~]# curl http://192.168.150.110

v1

[root@sm1 ~]# curl http://192.168.150.111

v1

[root@sm1 ~]# curl http://192.168.150.112

v1在集群之外的主机访问

4.3 Scaling out a service

Scale out by setting the number of replicas with `scale`:

```shell
[root@sm1 ~]# docker service scale nginx-svc-1=2
nginx-svc-1 scaled to 2
overall progress: 2 out of 2 tasks
1/2: running [==================================================>]
2/2: running [==================================================>]
verify: Service nginx-svc-1 converged
```

The replica count is now 2:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
jiibbvhsugcl nginx-svc-1 replicated 2/2 hub.harbor.com/library/nginx:v1 *:80->80/tcp
```

See which node each task runs on:

```shell
[root@sm1 ~]# docker service ps nginx-svc-1
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
nr46gcgughgy nginx-svc-1.1 hub.harbor.com/library/nginx:v1 sm2 Running Running 48 minutes ago
m1hflyisjrca nginx-svc-1.2 hub.harbor.com/library/nginx:v1 sm1 Running Running about a minute ago
```

Check task 1 on sm2:

```shell
[root@sm2 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
6250612e77ee hub.harbor.com/library/nginx:v1 "/docker-entrypoint...." About an hour ago Up About an hour 80/tcp nginx-svc-1.1.nr46gcgughgyy4g9ov6dsxhu0
```

Check task 2 on sm1:

```shell
[root@sm1 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
911658a9d519 hub.harbor.com/library/nginx:v1 "/docker-entrypoint...." 17 minutes ago Up 17 minutes 80/tcp nginx-svc-1.2.m1hflyisjrcatk0ua53xexgfe
```

Question: the service currently has 2 replicas. If it is scaled to 3, which host will the cluster choose for the new one?

```shell
[root@sm1 ~]# docker service scale nginx-svc-1=3
nginx-svc-1 scaled to 3
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service nginx-svc-1 converged
[root@sm1 ~]# docker service ps nginx-svc-1
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
nr46gcgughgy nginx-svc-1.1 hub.harbor.com/library/nginx:v1 sm2 Running Running about an hour ago
m1hflyisjrca nginx-svc-1.2 hub.harbor.com/library/nginx:v1 sm1 Running Running 27 minutes ago
j758n23lzir2 nginx-svc-1.3 hub.harbor.com/library/nginx:v1 sw2 Running Running 21 seconds ago
```

Note: the scheduler does not fill worker nodes first. By default it spreads a service's tasks across all nodes whose availability is Active, including managers, and prefers nodes that run fewer tasks of that service. That is why the first two tasks landed on managers sm2 and sm1, and the third on worker sw2. To keep workloads off the managers, set their availability to drain, for example `docker node update --availability drain sm1`.

The cluster currently has 5 nodes. What happens when the replica count exceeds that? With the service scaled to 6 replicas:

```shell
[root@sm1 ~]# docker service ps nginx-svc-1
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
nr46gcgughgy nginx-svc-1.1 hub.harbor.com/library/nginx:v1 sm2 Running Running about an hour ago
m1hflyisjrca nginx-svc-1.2 hub.harbor.com/library/nginx:v1 sm1 Running Running 28 minutes ago
j758n23lzir2 nginx-svc-1.3 hub.harbor.com/library/nginx:v1 sw2 Running Running about a minute ago
kzfmw02wfcrf nginx-svc-1.4 hub.harbor.com/library/nginx:v1 sw1 Running Running 17 seconds ago
mi9x8x5c7xud nginx-svc-1.5 hub.harbor.com/library/nginx:v1 sm3 Running Running 18 seconds ago
y0pb194wsg9e nginx-svc-1.6 hub.harbor.com/library/nginx:v1 sw1 Running Running 17 seconds ago
```

sw1 now runs two tasks, and every other node runs one.
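You can tally the NODE column to see the distribution at a glance rather than reading the table. This is a sketch in which `count_per_node` and `tasks_per_node` are hypothetical helper names. It uses the real `docker service ps` options `--filter desired-state=running`, which skips shut-down history entries, and `--format '{{.Node}}'`.

```shell
#!/usr/bin/env bash
# Count running tasks per node for a swarm service.

# count_per_node: read one node name per line on stdin, print "NODE COUNT" sorted by node.
count_per_node() {
  sort | uniq -c | awk '{ print $2, $1 }'
}

# tasks_per_node SERVICE: run on a manager; tallies the node of every running task.
tasks_per_node() {
  docker service ps "$1" --filter desired-state=running --format '{{.Node}}' | count_per_node
}

# Usage on a manager: tasks_per_node nginx-svc-1
```

For the six tasks above, the tally would show `sw1 2` and a count of 1 for each of the other four nodes.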

4.4 Scaling in a service

Scale the service in, again by setting the number of replicas with `scale`:

```shell
[root@sm1 ~]# docker service scale nginx-svc-1=2
nginx-svc-1 scaled to 2
overall progress: 2 out of 2 tasks
1/2: running [==================================================>]
2/2: running [==================================================>]
verify: Service converged
```

The replica count went from 6 to 2:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
jiibbvhsugcl nginx-svc-1 replicated 2/2 hub.harbor.com/library/nginx:v1 *:80->80/tcp
```

The remaining tasks are the original two:

```shell
[root@sm1 ~]# docker service ps nginx-svc-1
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
nr46gcgughgy nginx-svc-1.1 hub.harbor.com/library/nginx:v1 sm2 Running Running 2 hours ago
m1hflyisjrca nginx-svc-1.2 hub.harbor.com/library/nginx:v1 sm1 Running Running 55 minutes ago
```

4.5 Load balancing

Modify the web page in the container on sm1:

```shell
[root@sm1 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
911658a9d519 hub.harbor.com/library/nginx:v1 "/docker-entrypoint...." About an hour ago Up About an hour 80/tcp nginx-svc-1.2.m1hflyisjrcatk0ua53xexgfe
[root@sm1 ~]# docker exec -it 91165 bash
root@911658a9d519:/# echo "sm1 web" > /usr/share/nginx/html/index.html
root@911658a9d519:/# exit
```

Modify the web page in the container on sm2:

```shell
[root@sm2 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
6250612e77ee hub.harbor.com/library/nginx:v1 "/docker-entrypoint...." 2 hours ago Up 2 hours 80/tcp nginx-svc-1.1.nr46gcgughgyy4g9ov6dsxhu0
[root@sm2 ~]# docker exec -it 62506 bash
root@6250612e77ee:/# echo "sm2 web" > /usr/share/nginx/html/index.html
root@6250612e77ee:/# exit
```

Test access:

```shell
[root@sm1 ~]# curl http://192.168.150.108
sm1 web
[root@sm1 ~]# curl http://192.168.150.108
sm2 web
[root@sm1 ~]# curl http://192.168.150.108
sm1 web
[root@sm1 ~]# curl http://192.168.150.108
sm2 web
```

When a service has several containers, successive requests reach each container in turn (round robin).
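The distribution is easier to judge over many requests. The following is a minimal sketch that sends N requests and counts each distinct response body. `tally` and `probe` are hypothetical names. An even split is only visible when the replicas serve different pages, as set up above. The same helper can show the mix of v1 and v2 answers during the rolling update in 4.9.

```shell
#!/usr/bin/env bash
# Send N requests to a URL and count how often each distinct response body appears.

# tally: read one response per line on stdin, print "COUNT BODY" lines, most frequent first.
tally() {
  sort | uniq -c | sort -rn | awk '{ c = $1; $1 = ""; sub(/^ /, ""); print c, $0 }'
}

# probe URL [N]: request URL N times (default 10) with curl -s and tally the bodies.
probe() {
  local url=$1 n=${2:-10} i
  for ((i = 0; i < n; i++)); do
    curl -s "$url"
  done | tally
}

# Usage: probe http://192.168.150.108 10
```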

4.6 Removing a service

One service, with two replicas, is currently published:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
jiibbvhsugcl nginx-svc-1 replicated 2/2 hub.harbor.com/library/nginx:v1 *:80->80/tcp
```

`replicated` is the replicated mode. Do not try to remove the service by deleting its containers on the hosts. The service keeps two replicas, so any deleted container is replaced. Remove the service itself instead:

```shell
[root@sm1 ~]# docker service rm nginx-svc-1
nginx-svc-1
```

The service has been removed:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
```

4.7 Updating the service version

Create a new service:

```shell
[root@sm1 ~]# docker service create --name nginx-svc --replicas=1 --publish 80:80 hub.harbor.com/library/nginx:v1
ydys1r5j0weauabx7g1hb99af
overall progress: 1 out of 1 tasks
1/1: running [==================================================>]
verify: Service ydys1r5j0weauabx7g1hb99af converged
```

Check which version responds:

```shell
[root@sm1 ~]# curl http://192.168.150.108
v1
```

Upgrade the service to v2:

```shell
[root@sm1 ~]# docker service update nginx-svc --image hub.harbor.com/library/nginx:v2
nginx-svc
overall progress: 1 out of 1 tasks
1/1: running [==================================================>]
verify: Service nginx-svc converged
```

Test access:

```shell
[root@sm1 ~]# curl http://192.168.150.108
v2
```

4.8 Rolling back the service version

Roll the version back to v1:

```shell
[root@sm1 ~]# docker service update nginx-svc --image hub.harbor.com/library/nginx:v1
nginx-svc
overall progress: 1 out of 1 tasks
1/1: running [==================================================>]
verify: Service nginx-svc converged
```

Alternatively, `docker service rollback nginx-svc` reverts the service to its previous specification without naming the image again.

4.9 Rolling updates with an update interval

A rolling update is a gradual update. Start with 60 replicas. Because service names must be unique, first remove the nginx-svc service from 4.7 with `docker service rm nginx-svc`.

```shell
[root@sm1 ~]# docker service create --name nginx-svc --replicas 60 --publish 80:80 hub.harbor.com/library/nginx:v1
j490xzxdjt945lnweexhbn5vs
overall progress: 60 out of 60 tasks
verify: Service j490xzxdjt945lnweexhbn5vs converged
```

Now test the gradual update. The service keeps serving requests while it is updated, so check whether the responses come from v1, v2, or both:

```shell
[root@sm1 ~]# docker service update --replicas 60 --image hub.harbor.com/library/nginx:v2 --update-parallelism 5 --update-delay 30s nginx-svc
nginx-svc
overall progress: 30 out of 60 tasks
```

Notes:

- `--update-parallelism 5` sets how many tasks are updated in parallel
- `--update-delay 30s` sets the delay between updates
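To get a rough idea of how long this update takes, note that tasks are replaced in batches of `--update-parallelism`, with `--update-delay` waited between them. The sketch below assumes that every batch is followed by the delay. It also ignores image pulls and container start-up, so treat the result as an estimate and not Docker's exact timing. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Rough rolling-update estimate.
# Assumptions: each batch is followed by --update-delay; pull and start-up time are ignored.

# update_batches REPLICAS PARALLELISM: number of batches, rounded up.
update_batches() {
  local replicas=$1 parallelism=$2
  echo $(( (replicas + parallelism - 1) / parallelism ))
}

# update_wait_seconds REPLICAS PARALLELISM DELAY_SECONDS: total time spent in delays.
update_wait_seconds() {
  local batches
  batches=$(update_batches "$1" "$2")
  echo $(( batches * $3 ))
}

echo "batches: $(update_batches 60 5)"
echo "delay time (s): $(update_wait_seconds 60 5 30)"
```

With 60 replicas, a parallelism of 5 and a 30 s delay, the update takes 12 batches and several minutes. That leaves plenty of time to send requests mid-update and see both v1 and v2 answer.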

Rolling updates in Docker Swarm leave containers in the exited state on the nodes. You may want to clean them up:

```shell
[root@sw1 ~]# docker container prune
WARNING! This will remove all stopped containers.
Are you sure you want to continue? [y/N] y
```

4.10 Replica controller

The replica controller maintains the service state that the user wants (the desired state).

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
dybls8f9dwvx nginx-svc replicated 3/3 hub.harbor.com/library/nginx:v1 *:80->80/tcp
```

See which host each task runs on:

```shell
[root@sm1 ~]# docker service ps nginx-svc
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
tv5uvizjiats nginx-svc.1 hub.harbor.com/library/nginx:v1 sm1 Running Running about a minute ago
wny58obj1aj7 nginx-svc.2 hub.harbor.com/library/nginx:v1 sw2 Running Running about a minute ago
m800frly1g61 nginx-svc.3 hub.harbor.com/library/nginx:v1 sm3 Running Running about a minute ago
```

Delete the container of task nginx-svc.2 on sw2. wny58obj1aj7 is a task ID, not a container ID, so look up the container first:

```shell
# Run on sw2: find the container that belongs to task nginx-svc.2, then stop and remove it
cid=$(docker ps -q --filter name=nginx-svc.2)
docker stop "$cid"
docker rm "$cid"
```

The replica count is still 3, and a new task on sw2 has replaced the original one:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
dybls8f9dwvx nginx-svc replicated 3/3 hub.harbor.com/library/nginx:v1 *:80->80/tcp
[root@sm1 ~]# docker service ps nginx-svc
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
tv5uvizjiats nginx-svc.1 hub.harbor.com/library/nginx:v1 sm1 Running Running 5 minutes ago
xni3t7djiywu nginx-svc.2 hub.harbor.com/library/nginx:v1 sw2 Running Running 2 minutes ago
wny58obj1aj7 \_ nginx-svc.2 hub.harbor.com/library/nginx:v1 sw2 Shutdown Failed 2 minutes ago "task: non-zero exit (137)"
m800frly1g61 nginx-svc.3 hub.harbor.com/library/nginx:v1 sm3 Running Running 5 minutes ago
```

4.11 Publishing a service on a specified network

让服务稳定的运行在某一网络,即使不是同一台主机也能稳定通信,创建一个overlay网络,没有具体指定系统会自动分配。

shell

docker network create -d overlay tomcat-net查看所拥有的网络

shell

[root@sm1 ~]# docker network ls

NETWORK ID NAME DRIVER SCOPE

51efd7178934 bridge bridge local

653ac927bfd9 docker_gwbridge bridge local

ae7acb0f9f83 host host local

prs03lea4z3t ingress overlay swarm

11a5a5beef46 none null local

9pbn3r4qsy3f tomcat-net overlay swarm查看我们所创建的网络对应的信息

powershell

[root@sm1 ~]# docker network inspect tomcat-net

[

{

"Name": "tomcat-net",

"Id": "9pbn3r4qsy3fgo6d5tburh0h9",

"Created": "2024-12-10T01:20:58.415167806Z",

"Scope": "swarm",

"Driver": "overlay",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "10.0.1.0/24",

"Gateway": "10.0.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": null,

"Options": {

"com.docker.network.driver.overlay.vxlanid_list": "4097"

},

"Labels": null

}

]说明:

创建名为tomcat-net的覆盖网络(Overlay Netowork),这是个二层网络,处于该网络下的docker容器,即使宿主机不一样,也能相互访问

创建名为tomcat的服务,使用刚才创建的覆盖网络

shell

docker service create --name tomcat \

--network tomcat-net \

-p 8080:8080 \

--replicas 2 \

tomcat:7.0.96-jdk8-openjdk查看服务运行的状态

powershell

[root@sm1 ~]# docker service ls

ID NAME MODE REPLICAS IMAGE PORTS

7fqceyg1ypjh tomcat replicated 2/2 tomcat:7.0.96-jdk8-openjdk *:8080->8080/tcp

[root@sm1 ~]# docker service ps tomcat

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

zeyff5juwuk8 tomcat.1 tomcat:7.0.96-jdk8-openjdk sw1 Running Running 38 seconds ago

qf2jmtx1buid tomcat.2 tomcat:7.0.96-jdk8-openjdk sm2 Running Running 52 seconds ago

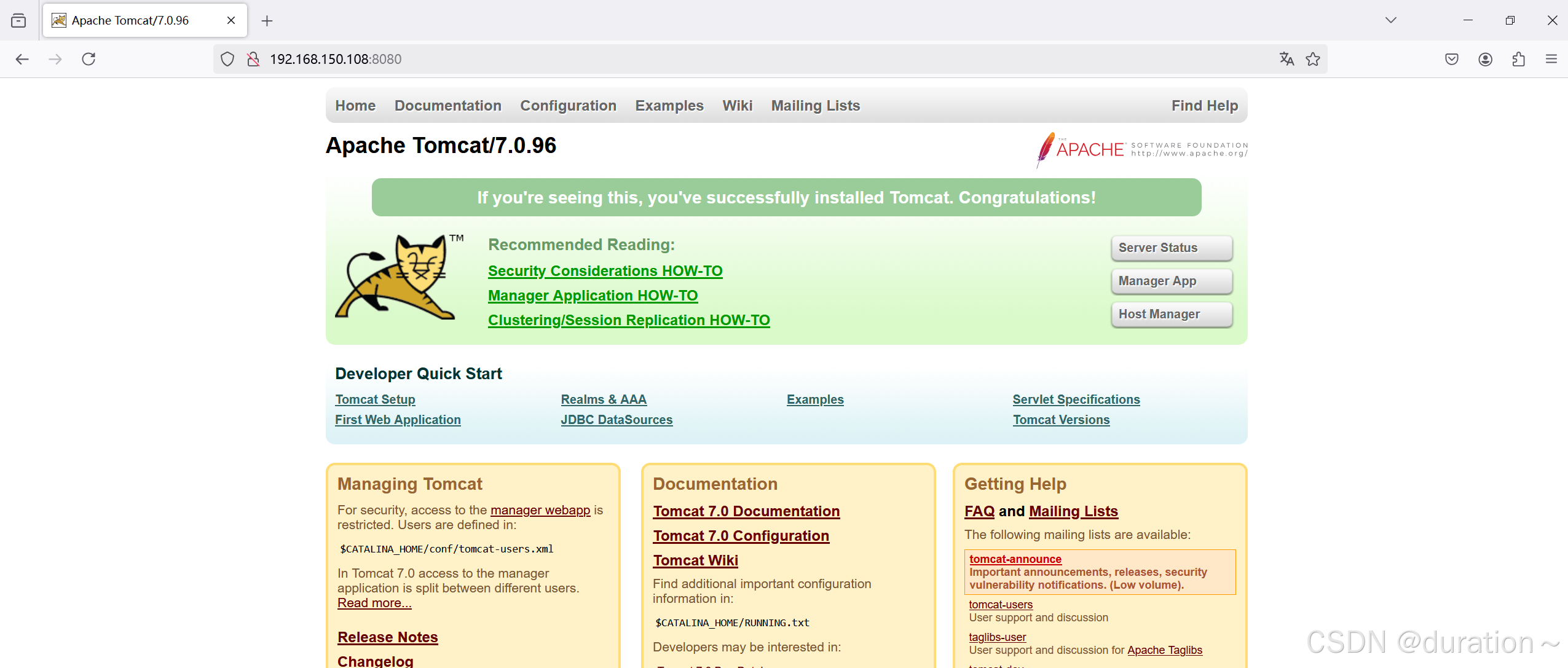

4.12 Service network modes

There are two publishing modes, ingress and host. If no mode is specified, ingress is the default.

- In ingress mode, traffic that reaches port 8080 on any swarm node is forwarded to port 8080 of one of the service replicas, even if that node runs no tomcat replica.
- In host mode, the port is opened only on the machines that run a replica.

Create an ingress-mode service (this is the same command used in 4.11):

```shell
docker service create --name tomcat \
  --network tomcat-net \
  -p 8080:8080 \
  --replicas 2 \
  tomcat:7.0.96-jdk8-openjdk
```

Check which hosts it runs on:

```shell
[root@sm2 ~]# docker service ps tomcat
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
zeyff5juwuk8 tomcat.1 tomcat:7.0.96-jdk8-openjdk sw1 Running Running about an hour ago
qf2jmtx1buid tomcat.2 tomcat:7.0.96-jdk8-openjdk sm2 Running Running about an hour ago
```

On sm2, which runs a replica, check the container's IP addresses:

```shell
[root@sm2 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
01bd65de39d0 tomcat:7.0.96-jdk8-openjdk "catalina.sh run" About an hour ago Up About an hour 8080/tcp tomcat.2.qf2jmtx1buidzvqhdptjl34fq
[root@sm2 ~]# docker inspect 01bd65de39d0 | grep IPAddress
"SecondaryIPAddresses": null,
"IPAddress": "",
"IPAddress": "10.0.0.138",    # ingress network address
"IPAddress": "10.0.1.4",      # container address on tomcat-net
```

On sw1:

```shell
[root@sw1 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
af487bcd3fff tomcat:7.0.96-jdk8-openjdk "catalina.sh run" About an hour ago Up About an hour 8080/tcp tomcat.1.zeyff5juwuk8dh8vc1uxz9okt
[root@sw1 ~]# docker inspect af487bcd3fff | grep IPAddress
"SecondaryIPAddresses": null,
"IPAddress": "",
"IPAddress": "10.0.0.137",
"IPAddress": "10.0.1.3",
```

Every host is listening on port 8080:

```shell
[root@sm1 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("dockerd",pid=2727,fd=54))
[root@sm2 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("dockerd",pid=1229,fd=26))
[root@sm3 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("dockerd",pid=1226,fd=22))
[root@sw1 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("dockerd",pid=1229,fd=39))
[root@sw2 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("dockerd",pid=1229,fd=22))
```

Create a host-mode service. Remove the ingress-mode tomcat service first, because the service name is already in use. The host-mode command is:

```shell
docker service create --name tomcat \
  --network tomcat-net \
  --publish published=8080,target=8080,mode=host \
  --replicas 3 \
  tomcat:7.0.96-jdk8-openjdk
```

Check which nodes it runs on:

```shell
[root@sm1 ~]# docker service ps tomcat
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
6x80to0wjmze tomcat.1 tomcat:7.0.96-jdk8-openjdk sw1 Running Running 37 seconds ago *:8080->8080/tcp,*:8080->8080/tcp
ztedsoasva0b tomcat.2 tomcat:7.0.96-jdk8-openjdk sm2 Running Running 37 seconds ago *:8080->8080/tcp,*:8080->8080/tcp
wfzner3uepbo tomcat.3 tomcat:7.0.96-jdk8-openjdk sm1 Running Running 8 seconds ago *:8080->8080/tcp,*:8080->8080/tcp
```

Check the port mappings:

```shell
[root@sm1 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 *:8080 *:* users:(("docker-proxy",pid=71202,fd=4))
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("docker-proxy",pid=71206,fd=4))
[root@sm2 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 *:8080 *:* users:(("docker-proxy",pid=69094,fd=4))
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("docker-proxy",pid=69102,fd=4))
[root@sm3 ~]# ss -anput | grep ":8080"
# port not mapped
[root@sw1 ~]# ss -anput | grep ":8080"
tcp LISTEN 0 128 *:8080 *:* users:(("docker-proxy",pid=68251,fd=4))
tcp LISTEN 0 128 [::]:8080 [::]:* users:(("docker-proxy",pid=68260,fd=4))
[root@sw2 ~]# ss -anput | grep ":8080"
# port not mapped
```

4.13 Persistent storage for service data

4.13.1 Local storage

4.13.1.1 Creating a local directory on every cluster host

Create the local storage directory on every host:
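Instead of logging in to each host, you can run the command on all of them from a single machine. This sketch assumes root SSH access from the current machine to every host name, which the article does not set up. `HOSTS`, `SSH` and `run_on_all` are hypothetical names. Setting `SSH=echo` performs a dry run that only prints what would be executed.

```shell
#!/usr/bin/env bash
# Run the same command on every cluster host over SSH.
# Assumption: passwordless root SSH to each host. SSH=echo gives a dry run.
SSH=${SSH:-ssh}
HOSTS=(sm1 sm2 sm3 sw1 sw2)

# run_on_all CMD...: run CMD on each host in HOSTS, stopping at the first failure.
run_on_all() {
  local host
  for host in "${HOSTS[@]}"; do
    "$SSH" "$host" "$@" || return 1
  done
}

# Usage: run_on_all mkdir -p /data/nginxdata
```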

```shell
mkdir -p /data/nginxdata
```

4.13.1.2 Mounting the local directory into containers when publishing the service

```shell
[root@sm1 ~]# docker service create --name nginx-svc --replicas 3 --mount "type=bind,source=/data/nginxdata,target=/usr/share/nginx/html" --publish 80:80 hub.harbor.com/library/nginx:v1
urk439pgs6as4u5jlkg7ct929
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service urk439pgs6as4u5jlkg7ct929 converged
```

4.13.1.3 Verifying that the local directory is used

See which nodes the service runs on:

```shell
[root@sm1 ~]# docker service ps nginx-svc
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
ezmb0p5nimi1 nginx-svc.1 hub.harbor.com/library/nginx:v1 sw2 Running Running about a minute ago
cmhqfi3sl9t1 nginx-svc.2 hub.harbor.com/library/nginx:v1 sm1 Running Running about a minute ago
bkhj7ffeu2d8 nginx-svc.3 hub.harbor.com/library/nginx:v1 sw1 Running Running about a minute ago
```

On sw1:

```shell
ls /data/nginxdata/
echo "sw1 web" > /data/nginxdata/index.html
```

On sw2:

```shell
ls /data/nginxdata/
echo "sw2 web" > /data/nginxdata/index.html
```

On sm1:

```shell
ls /data/nginxdata
echo "sm1 web" > /data/nginxdata/index.html
```

Test access:

```shell
[root@sm1 ~]# curl http://192.168.150.108
sw1 web
[root@sm1 ~]# curl http://192.168.150.108
sw2 web
[root@sm1 ~]# curl http://192.168.150.108
sm1 web
```

Each replica serves the file from its own host's directory, so the data is inconsistent across the replicas.

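4.13.2 Network storage

A network storage volume allows data to be shared across Docker hosts, and the data is persisted on that volume.

When the service is created, add a volume mount parameter, and the network storage volume is mounted automatically. However, the volume must be created on every cluster node.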
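Docker also accepts the volume options directly in the service's `--mount` flag through the `volume-driver` and `volume-opt` keys. With this approach, each node creates the NFS-backed volume itself when a task is scheduled there, so the volume does not have to be created on every node beforehand. The following sketch only assembles that mount string from the values used in 4.13.2.1. `nfs_mount_spec` is a hypothetical helper. The `o=` option contains a comma, so that field is wrapped in double quotes, as the `--mount` CSV syntax requires.

```shell
#!/usr/bin/env bash
# Build a --mount value that declares an NFS-backed local volume inline,
# so swarm nodes create the volume themselves when a task lands on them.

# nfs_mount_spec VOLUME TARGET SERVER EXPORT_PATH
nfs_mount_spec() {
  local volume=$1 target=$2 server=$3 export_path=$4
  printf 'type=volume,source=%s,target=%s,volume-driver=local,volume-opt=type=nfs,volume-opt=device=:%s,"volume-opt=o=addr=%s,rw"' \
    "$volume" "$target" "$export_path" "$server"
}

# Usage on a manager (values from this article):
#   docker service create --name nginx-svc --replicas 3 --publish 80:80 \
#     --mount "$(nfs_mount_spec nginx_volume /usr/share/nginx/html 192.168.150.145 /opt/dockervolume)" \
#     hub.harbor.com/library/nginx:v1
```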

4.13.2.1 Deploying NFS storage

This example uses NFS to provide remote storage.

Deploy the NFS service on the server 192.168.150.145, and share a directory for the Docker Swarm cluster hosts:

```shell
mkdir /opt/dockervolume
```

Install NFS:

```shell
yum -y install nfs-utils
```

Configure the shared directory:

```shell
echo "/opt/dockervolume *(rw,sync,no_root_squash)" >> /etc/exports
```

Start NFS and enable it at boot:

```shell
systemctl enable nfs-server
systemctl start nfs-server
```

List the shared directories of the local NFS server:

```shell
[root@harbor ~]# showmount -e
Export list for harbor:
/opt/dockervolume *
```

4.13.2.2 Installing nfs-utils on all cluster hosts

Install:

```shell
yum -y install nfs-utils
```

Test:

```shell
[root@sm1 ~]# showmount -e 192.168.150.145
Export list for 192.168.150.145:
/opt/dockervolume *
```

4.13.2.3 Creating the storage volume

On every node in the cluster, create a volume backed by NFS:

```shell
docker volume create --driver local --opt type=nfs --opt o=addr=192.168.150.145,rw --opt device=:/opt/dockervolume nginx_volume
```

List the volumes on any node:

```shell
[root@sm1 ~]# docker volume ls
DRIVER VOLUME NAME
local nginx_volume
```

Show the details:

```shell
[root@sm1 ~]# docker volume inspect nginx_volume
[
    {
        "CreatedAt": "2024-12-10T21:48:46+08:00",
        "Driver": "local",
        "Labels": null,
        "Mountpoint": "/var/lib/docker/volumes/nginx_volume/_data",
        "Name": "nginx_volume",
        "Options": {
            "device": ":/opt/dockervolume",
            "o": "addr=192.168.150.145,rw",
            "type": "nfs"
        },
        "Scope": "local"
    }
]
```

4.13.2.4 Publishing the service

Publish the service again, this time using the volume:

```shell
[root@sm1 ~]# docker service create --name nginx-svc --replicas 3 --publish 80:80 --mount "type=volume,source=nginx_volume,target=/usr/share/nginx/html" hub.harbor.com/library/nginx:v1
sdl5c9icw8spyq3ifms7glb7f
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service sdl5c9icw8spyq3ifms7glb7f converged
```

4.13.2.5 Verification

See which nodes run the service:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
sdl5c9icw8sp nginx-svc replicated 3/3 hub.harbor.com/library/nginx:v1 *:80->80/tcp
[root@sm1 ~]# docker service ps nginx-svc
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
aqji1emxlhhp nginx-svc.1 hub.harbor.com/library/nginx:v1 sw1 Running Running about a minute ago
0n8f5e4lur1r nginx-svc.2 hub.harbor.com/library/nginx:v1 sw2 Running Running about a minute ago
qmcpkxbbhbzb nginx-svc.3 hub.harbor.com/library/nginx:v1 sm1 Running Running about a minute ago
```

Check the mounts. sm1, sw1 and sw2, the nodes running tasks, are now using the NFS storage:

```shell
[root@sm1 ~]# df -Th | grep nfs
:/opt/dockervolume nfs 37G 14G 24G 36% /var/lib/docker/volumes/nginx_volume/_data
[root@sm2 ~]# df -Th | grep nfs
# no output
[root@sm3 ~]# df -Th | grep nfs
# no output
[root@sw1 ~]# df -Th | grep nfs
:/opt/dockervolume nfs 37G 14G 24G 36% /var/lib/docker/volumes/nginx_volume/_data
[root@sw2 ~]# df -Th | grep nfs
:/opt/dockervolume nfs 37G 14G 24G 36% /var/lib/docker/volumes/nginx_volume/_data
```

Create the nginx index page on the NFS host:

```shell
echo "nfs test" > /opt/dockervolume/index.html
```

Test:

```shell
[root@sm1 ~]# curl http://192.168.150.108
nfs test
[root@sm1 ~]# curl http://192.168.150.108
nfs test
[root@sm1 ~]# curl http://192.168.150.108
nfs test
[root@sm1 ~]# curl http://192.168.150.108
nfs test
```

4.14 Service interconnection and service discovery

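If an nginx service needs to connect to a mysql service, how can this be done in docker swarm?

Method 1:

Publish the mysql service to the external network as well, using the `--publish` option. The drawback is that exposing a service such as mysql to the outside is not secure.

Method 2:

Run mysql and similar services on an internal network that only the nginx service needs to reach. In docker swarm this is done with an overlay network.

One problem remains. The number of replicas may change, and the IPs inside the containers may change with it. We still want to be able to reach the service when that happens. This is what service discovery provides.

With service discovery, a consumer of a service can communicate with it without knowing where it runs, what its IP is, or how many replicas it has.

The ingress network shown by `docker network ls` below is an overlay network, but it does not support service discovery: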

```shell
[root@sm1 ~]# docker network ls
NETWORK ID NAME DRIVER SCOPE
51efd7178934 bridge bridge local
653ac927bfd9 docker_gwbridge bridge local
ae7acb0f9f83 host host local
prs03lea4z3t ingress overlay swarm   # <- here
11a5a5beef46 none null local
9pbn3r4qsy3f tomcat-net overlay swarm
```

We need to create our own overlay network to get service discovery. Services that need to talk to each other must be attached to the same overlay network:

```shell
docker network create --driver overlay --subnet 192.168.100.0/24 self-network
```

Notes:

- `--driver overlay`: create a network of type overlay
- `--subnet`: the subnet assigned to the network
- `self-network`: the name of the custom network
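You may want to confirm which subnet each swarm-scoped overlay network actually received. For example, you can check that self-network got 192.168.100.0/24 and does not overlap the 10.0.0.x addresses that ingress uses in this cluster. To do that, combine `docker network ls` and `docker network inspect` with Go templates. This is a minimal sketch. The function name `list_overlay_subnets` is hypothetical, and the sketch assumes it runs on a manager node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<network> <subnet>" for every overlay network.
# Swarm-scoped overlay networks are fully visible on manager nodes.
list_overlay_subnets() {
  docker network ls --filter driver=overlay --format '{{.Name}}' |
    while IFS= read -r net; do
      # .IPAM.Config holds the subnet(s) chosen or given at creation time.
      printf '%s %s\n' "$net" \
        "$(docker network inspect --format '{{range .IPAM.Config}}{{.Subnet}}{{end}}' "$net")"
    done
}
```

Running `list_overlay_subnets` on sm1 should print one `<network> <subnet>` line per overlay network.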

```shell
[root@sm1 ~]# docker network ls
NETWORK ID NAME DRIVER SCOPE
51efd7178934 bridge bridge local
653ac927bfd9 docker_gwbridge bridge local
ae7acb0f9f83 host host local
prs03lea4z3t ingress overlay swarm
11a5a5beef46 none null local
rkgawik6dyms self-network overlay swarm   # <- newly created
9pbn3r4qsy3f tomcat-net overlay swarm
```

Verify service discovery:

1. Publish the nginx-svc service on the custom overlay network

```shell
[root@sm1 ~]# docker service create --name nginx-svc --replicas 3 --network self-network --publish 80:80 hub.harbor.com/library/nginx:v1
qi8cft81l6kthwtxf58ef9oyw
overall progress: 3 out of 3 tasks
1/3: running [==================================================>]
2/3: running [==================================================>]
3/3: running [==================================================>]
verify: Service qi8cft81l6kthwtxf58ef9oyw converged
```

2. Publish a busybox service, also on the custom overlay network

```shell
[root@sm1 ~]# docker service create --name test --network self-network busybox sleep 100000
ucynn8aziz3ee0gqgo4gyuq1j
overall progress: 1 out of 1 tasks
1/1: running [==================================================>]
verify: Service ucynn8aziz3ee0gqgo4gyuq1j converged
```

Notes:

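- The service is named `test`.
- busybox bundles many common Linux commands, which makes it a convenient tool for testing connectivity to nginx-svc.
- No replica count is given, so the service gets the default of 1 replica.
- busybox is not a long-running daemon, so on its own it would exit right after starting.
- `sleep 100000` keeps it running long enough for the tests.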
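Steps 3 to 5 below locate the busybox container by hand. Swarm labels every task container with `com.docker.swarm.service.name`, so the lookup can be scripted on the node that runs the task. This is a sketch with a hypothetical function name, `exec_in_service`. Because `docker ps` only sees local containers, you must run it on the node reported by `docker service ps test` (sm3 here).

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command in the first local container of a swarm service.
# Swarm sets the label com.docker.swarm.service.name on every task container;
# `docker ps` only lists containers on the node where this runs.
exec_in_service() {
  local service="$1" cid
  shift
  cid="$(docker ps -q --filter "label=com.docker.swarm.service.name=${service}" | head -n 1)"
  if [ -z "$cid" ]; then
    echo "no container of service '${service}' on this node" >&2
    return 1
  fi
  # Non-interactive exec, so -it is not needed for commands like ping.
  docker exec "$cid" "$@"
}
```

For example, `exec_in_service test ping -c 2 nginx-svc` on sm3 should run the same ping as step 5.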

3. Find the node that runs the test service's container

```shell
[root@sm1 ~]# docker service ps test
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
wfcwg32u4ae3 test.1 busybox:latest sm3 Running Running 2 minutes ago
```

4. On that node, find the container's name

```shell
[root@sm3 ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
d3bbf1e846d0 busybox:latest "sleep 100000" 2 minutes ago Up 2 minutes test.1.wfcwg32u4ae32ua175phxfyn4
```

5. Run a test command in that container, using the name found above

```shell
[root@sm3 ~]# docker exec -it test.1.wfcwg32u4ae32ua175phxfyn4 ping -c 2 nginx-svc
PING nginx-svc (192.168.100.2): 56 data bytes
64 bytes from 192.168.100.2: seq=0 ttl=64 time=0.090 ms
64 bytes from 192.168.100.2: seq=1 ttl=64 time=0.128 ms

--- nginx-svc ping statistics ---
2 packets transmitted, 2 packets received, 0% packet loss
round-trip min/avg/max = 0.090/0.109/0.128 ms
```

Result: the test service can ping nginx-svc, and the name resolves to an address on the custom network (192.168.100.2). Inspecting the service shows that 192.168.100.2 is the virtual IP (VIP) that nginx-svc was given on self-network:

```shell
[root@sm1 ~]# docker service inspect nginx-svc
[
    .......
            "VirtualIPs": [
                {
                    "NetworkID": "prs03lea4z3tfkcagy6hkpbhv",
                    "Addr": "10.0.0.72/24"
                },
                {
                    "NetworkID": "rkgawik6dymse7dc6jn1e9l6f",
                    "Addr": "192.168.100.2/24"
                }
            ]
        }
    }
]
```

6. On each node, inspect the three task containers of nginx-svc (one per replica). Their IPs all differ from the IP that was pinged above:

```shell
[root@sm1 ~]# docker inspect nginx-svc.1.y5us4d4ia9ri4c9gfnjlb74ny | grep IPAddress
"SecondaryIPAddresses": null,
"IPAddress": "",
"IPAddress": "10.0.0.73",
"IPAddress": "192.168.100.3",
[root@sw1 ~]# docker inspect nginx-svc.2.ip2a7k68cgjs1zn9ua1nic7wj | grep -i ipaddress
"SecondaryIPAddresses": null,
"IPAddress": "",
"IPAddress": "10.0.0.74",
"IPAddress": "192.168.100.4",
[root@sm2 ~]# docker inspect nginx-svc.3.qxleseps0phhr0hkdf7qqi05y | grep IPAddress
"SecondaryIPAddresses": null,
"IPAddress": "",
"IPAddress": "10.0.0.75",
"IPAddress": "192.168.100.5",
```

7. In further tests, scaling nginx-svc up or down, updating it, and rolling it back all left the test service able to reach nginx-svc.

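Conclusion: on a custom overlay network, service discovery lets services reach each other by service name, without knowing each other's IPs. This holds regardless of how many replicas a service has or how the container IPs change.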
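On a custom overlay network, Docker's embedded DNS server answers two kinds of names:

- The service name (`nginx-svc`) resolves to the service VIP (192.168.100.2 above).
- `tasks.nginx-svc` resolves to the individual task IPs (192.168.100.3 to 192.168.100.5 above).

The sketch below defines a hypothetical filter, `resolved_ips`, that pulls the answer addresses out of `nslookup` output. It drops the embedded resolver's own address, 127.0.0.11. The layout of busybox `nslookup` output differs between versions, so the filter matches IPv4 addresses instead of parsing specific fields.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read nslookup output on stdin and print the answer IPv4 addresses.
# The "Server:"/"Address:" lines for Docker's embedded DNS (127.0.0.11) are dropped.
resolved_ips() {
  grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' | grep -vx '127\.0\.0\.11' | sort -u
}

# Usage on sm3, with the container name found in step 4:
#   docker exec test.1.wfcwg32u4ae32ua175phxfyn4 nslookup nginx-svc | resolved_ips        # service VIP
#   docker exec test.1.wfcwg32u4ae32ua175phxfyn4 nslookup tasks.nginx-svc | resolved_ips  # one IP per task
```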

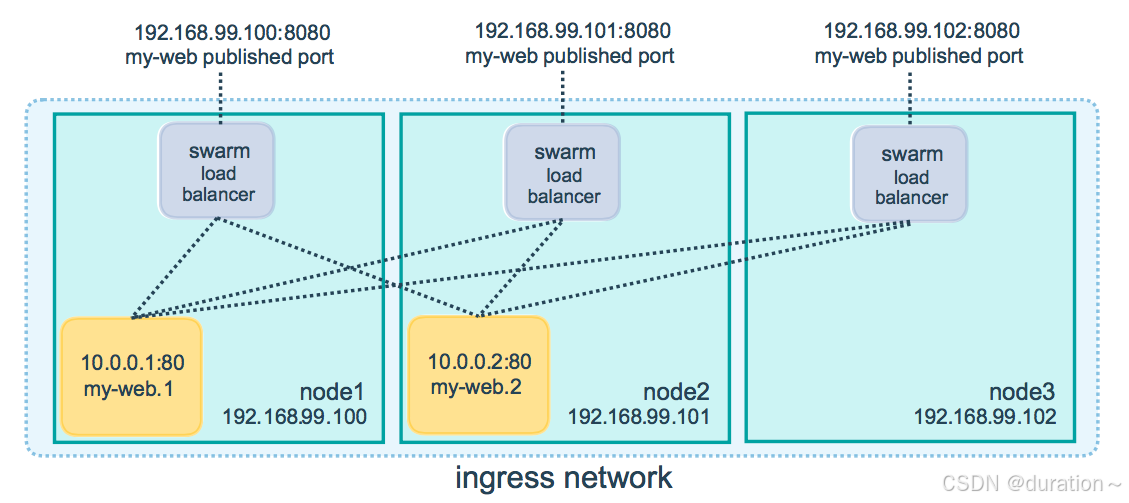

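4.15 docker swarm networks

Swarm services involve three important network concepts:

- Overlay networks manage communication among the Docker daemons in the swarm. You can attach a service to one or more existing overlay networks so that services can communicate with each other.
- The ingress network is a special overlay network that load-balances requests among a service's nodes. When any swarm node receives a request on a published port, it hands the request to a module called IPVS. IPVS keeps track of all IP addresses participating in that service, selects one of them, and routes the request to it over the ingress network.

  The ingress network is created automatically when you initialize or join a swarm. Most users do not need to customize it, but Docker 17.05 and later allow you to.
- docker_gwbridge is a bridge network that connects the overlay networks (including the ingress network) to an individual Docker daemon's physical network. By default, every container a service runs is connected to the docker_gwbridge network of its local Docker daemon host.

  The docker_gwbridge network is created automatically when you initialize or join a swarm. Most users do not need to customize it, but Docker allows you to.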
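Each published port records whether it goes through the ingress routing mesh described above. `PublishMode` is `ingress` unless the port was published with `mode=host`. This is a sketch that prints the mode for each port of a service; the function name `show_published_ports` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list a service's published ports with their publish mode.
# "ingress" = routed through the ingress routing mesh; "host" = bound only on the task's node.
show_published_ports() {
  docker service inspect \
    --format '{{range .Endpoint.Ports}}{{.PublishedPort}}->{{.TargetPort}}/{{.Protocol}} {{.PublishMode}}{{"\n"}}{{end}}' \
    "$1"
}
```

For nginx-svc from section 4.14, which was published with `--publish 80:80`, you should see a single `80->80/tcp ingress` line.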

| Name | Type | Notes |
|---|---|---|
| docker_gwbridge | bridge | - |
| ingress | overlay | - |
| custom-network | overlay | - |

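- docker_gwbridge and ingress are created automatically by swarm once `docker swarm init` or `docker swarm join` has been run.
- docker_gwbridge is a bridge network that connects the containers on a host to that host.
- ingress handles routing for a service among containers on multiple hosts.
- custom-network stands for an overlay network created by the user. Normally you create your own network and attach your services to it.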
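You can also look at these networks from a task container's point of view by listing the networks it is attached to with `docker inspect`. In the `grep IPAddress` output in section 4.14, each nginx-svc container showed one address on ingress (10.0.0.x) and one on self-network (192.168.100.x). This is a sketch with a hypothetical function name, `container_networks`. It must run on the node that hosts the container.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<network> <IP>" for each network a container is attached to.
container_networks() {
  docker inspect \
    --format '{{range $name, $net := .NetworkSettings.Networks}}{{$name}} {{$net.IPAddress}}{{"\n"}}{{end}}' \
    "$1"
}
```

For example, on sm1: `container_networks nginx-svc.1.y5us4d4ia9ri4c9gfnjlb74ny`.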

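5. docker stack

5.1 Introduction to docker stack

In the earlier sections, services were published with `docker service`, one service at a time.

A YAML file can describe several services, but docker-compose can only deploy them on a single host.

A stack is a group of related services that can be orchestrated, deployed and managed together.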

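5.2 Differences between docker stack and docker compose

Docker stack ignores the `build` instruction. You cannot build new images with the stack command, so images must be built in advance. This makes docker-compose better suited to development.

Docker Compose was originally a Python project that uses the Docker API internally to manage containers. It therefore has to be installed separately to use it alongside Docker on your machine. Compose v2 (`docker compose`) has since been rewritten in Go as a Docker CLI plugin, but it is still a separate component from the engine.

Docker stack functionality is built into the Docker engine. No additional package is needed; docker stack is simply part of swarm mode.

Docker stack does not support docker-compose.yml files written in format version 2; the version must be at least 3. Docker Compose, however, can still handle both version 2 and version 3 files.

docker stack covers the deployment work that docker compose does (everything except building images), so docker stack is likely to become dominant. For most users, switching to docker stack is neither difficult nor costly. If you are new to Docker, or are choosing a technology for a new project, use docker stack.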

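5.3 Common docker stack commands

| Command | Description |
|---|---|
| docker stack --help | Show help for more commands |
| docker stack deploy | Deploy a new stack or update an existing one |
| docker stack ls | List stacks |
| docker stack ps | List the tasks in a stack |
| docker stack rm | Remove one or more stacks |
| docker stack services | List the services in a stack |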

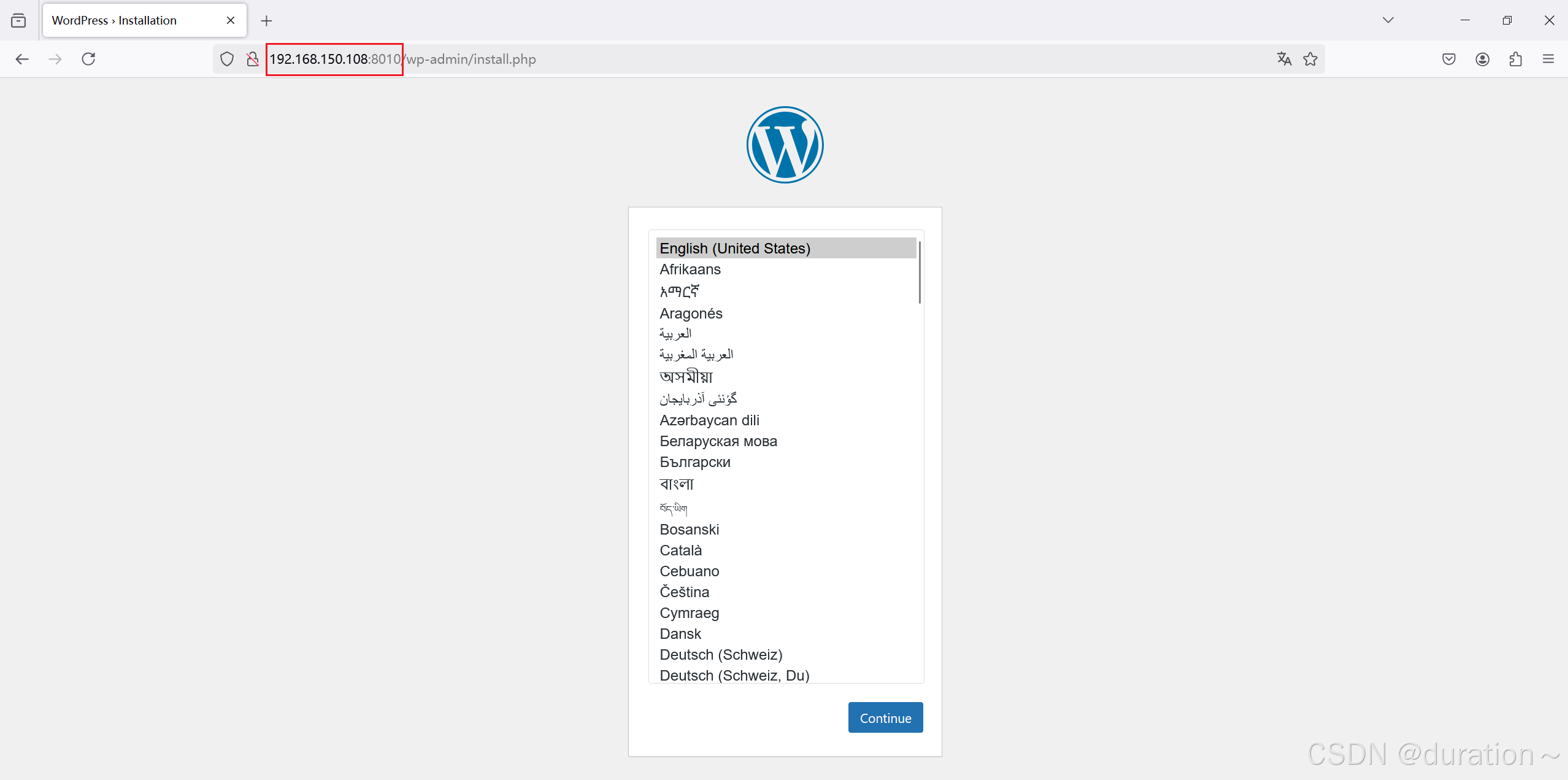

5.4 Example: deploying WordPress

1. Write the YAML file stack1.yaml

```yaml
version: '3'
services:
  db:
    image: mysql:5.7
    environment:
      MYSQL_ROOT_PASSWORD: somewordpress
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: wordpress
    deploy:
      replicas: 1
  wordpress:
    depends_on:
      - db
    image: wordpress:latest
    ports:
      - "8010:80"
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: wordpress
      WORDPRESS_DB_NAME: wordpress
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]
```

Notes:

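- The placement constraint (`constraints: [node.role == manager]`) restricts the wordpress container to manager nodes.
- `docker stack deploy` ignores `depends_on`, so db is not guaranteed to start before wordpress.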

2. Deploy with docker stack

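```shell
[root@sm1 ~]# docker stack deploy -c stack1.yaml stack1
Creating network stack1_default        # creates the stack's own overlay network
Creating service stack1_db             # creates the stack1_db service
Creating service stack1_wordpress      # creates the stack1_wordpress service
```

If an error occurs, remove the stack with `docker stack rm stack1`, fix the problem, and deploy it again.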

List the stacks:

```shell
[root@sm1 ~]# docker stack ls
NAME SERVICES
stack1 2
```

View the services in detail. Either of the following two commands works:

```shell
[root@sm1 ~]# docker service ls
ID NAME MODE REPLICAS IMAGE PORTS
zal9b45ej93m stack1_db replicated 1/1 mysql:5.7
b1k3pg4etfwf stack1_wordpress replicated 1/1 wordpress:latest *:8010->80/tcp
[root@sm1 ~]# docker stack services stack1
ID NAME MODE REPLICAS IMAGE PORTS
zal9b45ej93m stack1_db replicated 1/1 mysql:5.7
b1k3pg4etfwf stack1_wordpress replicated 1/1 wordpress:latest *:8010->80/tcp
```

See which nodes the tasks run on:

```shell
[root@sm1 ~]# docker stack ps stack1
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
n0eeugcgw33w stack1_db.1 mysql:5.7 sm1 Running Running 8 minutes ago
dfcuy0hiek47 stack1_wordpress.1 wordpress:latest sm3 Running Running 8 minutes ago
```

3. Verify

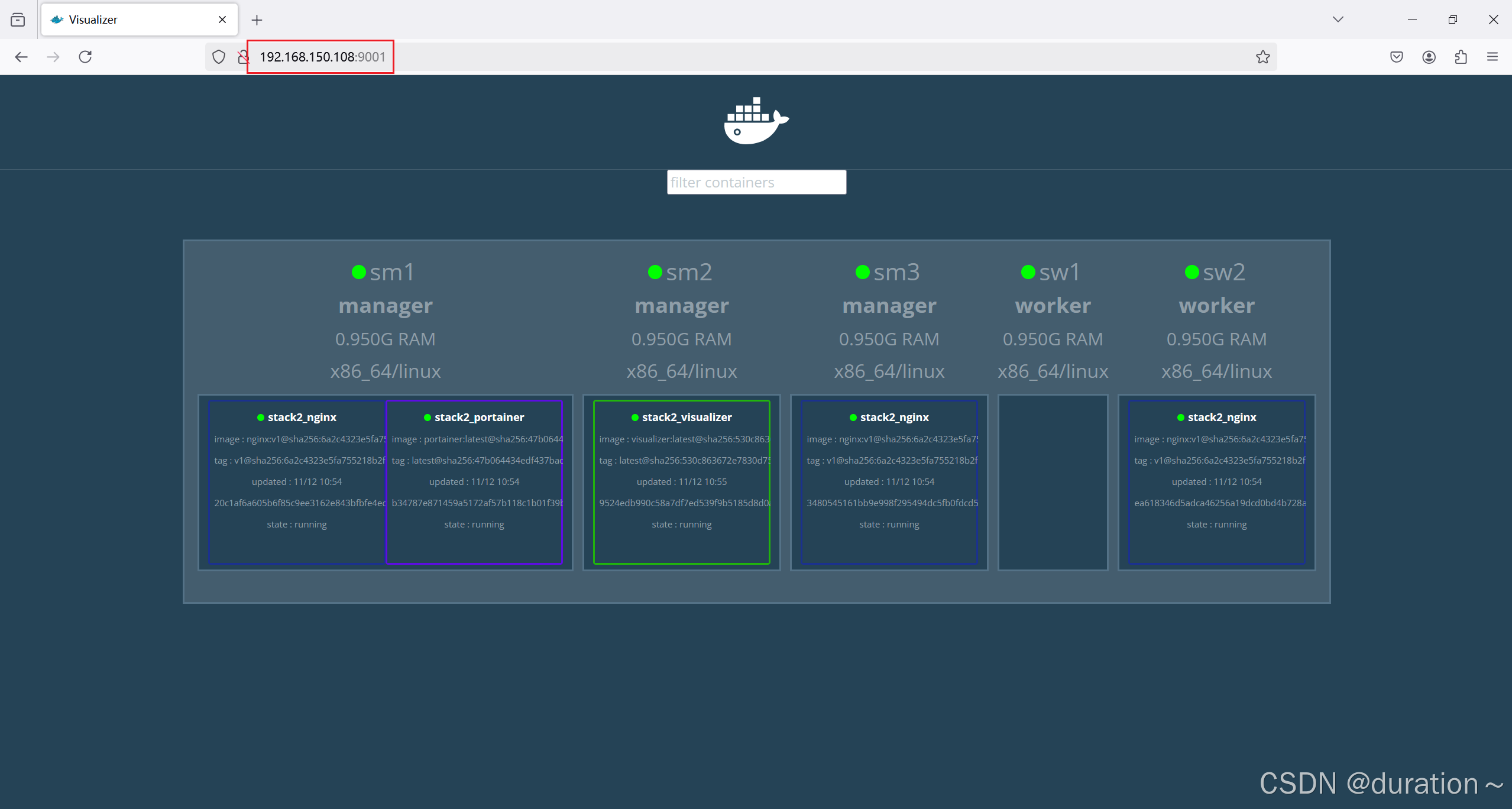

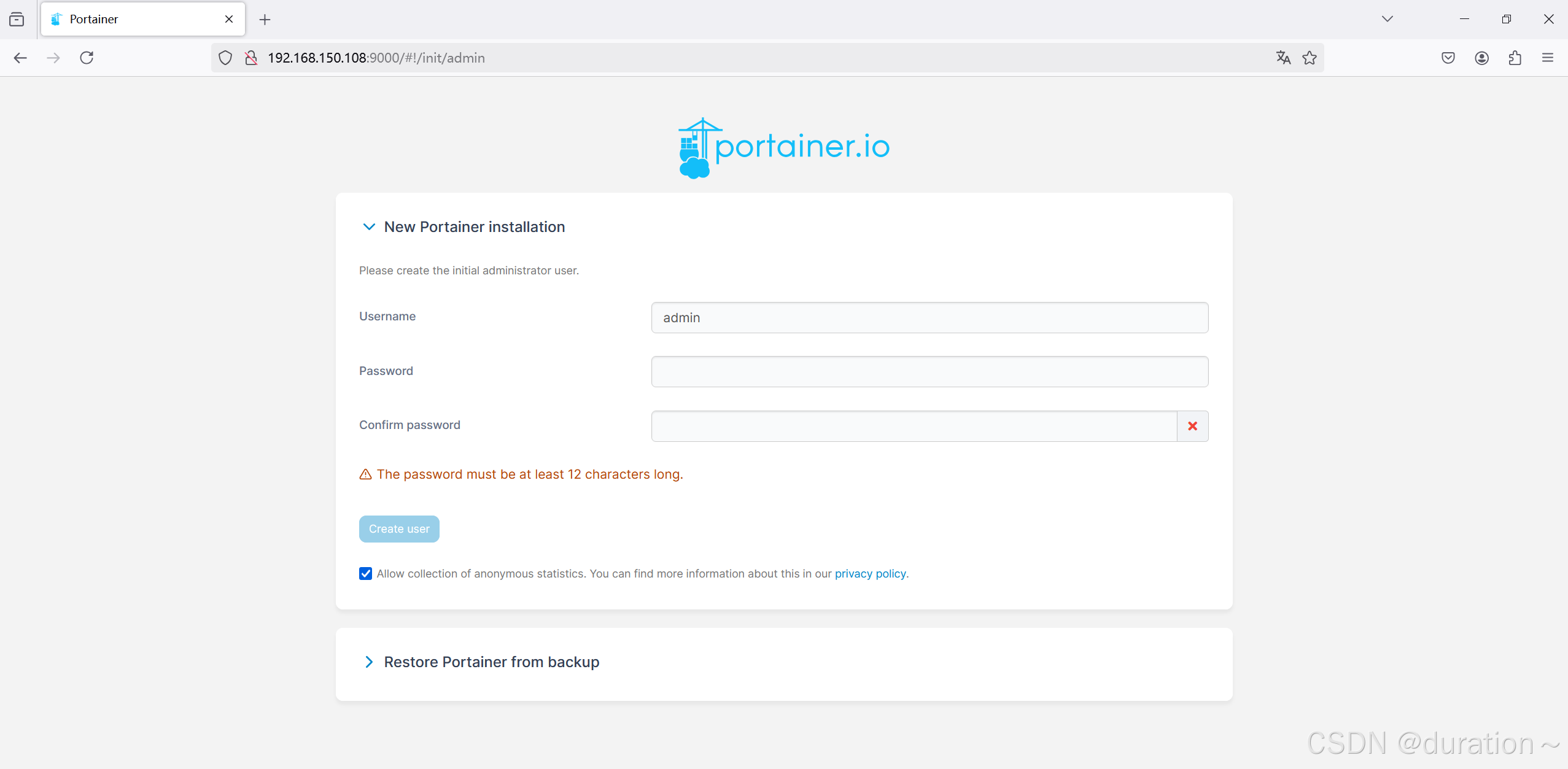
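`docker stack deploy` returns as soon as the services are created, and in swarm mode `depends_on` does not order their startup. The sketch below polls `docker stack services` until every service reports as many running replicas as desired. The function name `wait_for_stack`, the 5-second poll interval and the default 120-second timeout are all arbitrary assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every service in a stack runs its desired number of replicas.
# Assumes replicated services, whose REPLICAS column looks like "1/1" (running/desired).
wait_for_stack() {
  local stack="$1" timeout="${2:-120}" waited=0 out pending
  while :; do
    out="$(docker stack services --format '{{.Name}} {{.Replicas}}' "$stack")" || return 1
    # Names of services whose running count differs from the desired count.
    pending="$(printf '%s\n' "$out" | awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1 }')"
    # An empty listing (stack not created yet) also counts as "not ready".
    [ -n "$out" ] && [ -z "$pending" ] && return 0
    if [ "$waited" -ge "$timeout" ]; then
      echo "still waiting for: ${pending:-$stack}" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
}
```

For example, `wait_for_stack stack1 180` returns once both stack1_db and stack1_wordpress show `1/1`, or gives up after 180 seconds.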

5.5 Example: deploying nginx with web-based management services

1. Write the YAML file stack2.yaml

```yaml
version: "3"
services:
  nginx:
    image: hub.harbor.com/library/nginx:v1
    ports:
      - "80:80"
    deploy:
      mode: replicated
      replicas: 3
  visualizer:
    image: dockersamples/visualizer
    ports:
      - "9001:8080"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]
  portainer:
    image: portainer/portainer
    ports:
      - "9000:9000"
    volumes:
      - "/var/run/docker.sock:/var/run/docker.sock"
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]
```

Notes: the stack contains three services:

- nginx: 3 replicas
- visualizer: displays the docker swarm cluster graphically
- portainer: manages the docker swarm cluster through a web UI

2. Deploy with docker stack

```shell
[root@sm1 ~]# docker stack deploy -c stack2.yaml stack2
Since --detach=false was not specified, tasks will be created in the background.
In a future release, --detach=false will become the default.
Creating network stack2_default
Creating service stack2_nginx
Creating service stack2_visualizer
Creating service stack2_portainer
```

View the service information:

```shell
[root@sm1 ~]# docker stack ps stack2
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
zzk6qkuhiymi stack2_nginx.1 hub.harbor.com/library/nginx:v1 sm3 Running Running 19 seconds ago
q2gksal2tj9n stack2_nginx.2 hub.harbor.com/library/nginx:v1 sw2 Running Running 19 seconds ago
2k8471ee4d75 stack2_nginx.3 hub.harbor.com/library/nginx:v1 sm1 Running Running 19 seconds ago
ir4n15stkrxa stack2_portainer.1 portainer/portainer:latest sm1 Running Running 9 seconds ago
jtjvktl7mxki stack2_visualizer.1 dockersamples/visualizer:latest sm2 Running Preparing 14 seconds ago
```

3. Verify

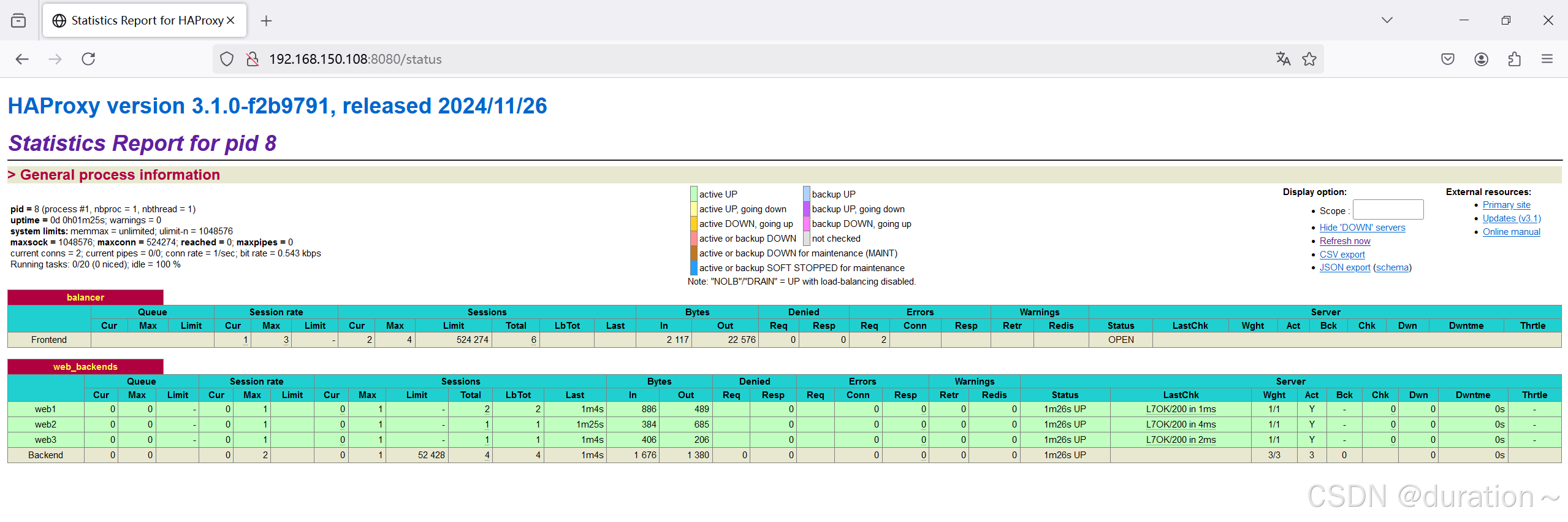
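In the `docker stack ps stack2` output above, stack2_visualizer.1 was still `Preparing` when the command ran, presumably while its image was being pulled. The sketch below lists only the tasks that should be running but are not yet; the function name `pending_tasks` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list tasks of a stack that should be running but are not yet,
# e.g. still "Preparing". CurrentState looks like "Running 19 seconds ago",
# so its first word (field 3 below) is the state itself.
pending_tasks() {
  docker stack ps --filter desired-state=running \
    --format '{{.Name}} {{.Node}} {{.CurrentState}}' "$1" |
    awk '$3 != "Running" { print $1, $2, $3 }'
}
```

An empty result from `pending_tasks stack2` means every task has reached `Running`.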

5.6 Example: nginx + haproxy + nfs

1. Prepare the working directory

```shell
mkdir -p /docker-stack/haproxy
cd /docker-stack/haproxy/
```

2. On a docker swarm manager node, prepare the configuration file haproxy.cfg

```
global
    log 127.0.0.1 local0
    log 127.0.0.1 local1 notice

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000ms
    timeout client 50000ms
    timeout server 50000ms
    stats uri /status

frontend balancer
    bind *:8080
    mode http
    default_backend web_backends

backend web_backends
    mode http
    option forwardfor
    balance roundrobin
    server web1 nginx1:80 check
    server web2 nginx2:80 check
    server web3 nginx3:80 check
    option httpchk GET /
    http-check expect status 200
```

3. Write the YAML orchestration file stack3.yaml

```yaml
version: "3"
services:
  nginx1:
    image: hub.harbor.com/library/nginx:v1
    deploy:
      mode: replicated
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - "nginx_vol:/usr/share/nginx/html"
  nginx2:
    image: hub.harbor.com/library/nginx:v1
    deploy:
      mode: replicated
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - "nginx_vol:/usr/share/nginx/html"
  nginx3:
    image: hub.harbor.com/library/nginx:v1
    deploy:
      mode: replicated
      replicas: 1
      restart_policy:
        condition: on-failure
    volumes:
      - "nginx_vol:/usr/share/nginx/html"
  haproxy:
    image: haproxy:latest
    volumes:
      - "./haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro"
    ports:
      - "8080:8080"
    deploy:
      replicas: 1
      placement:
        constraints: [node.role == manager]
volumes:
  nginx_vol:
    driver: local
    driver_opts:
      type: "nfs"
      o: "addr=192.168.150.145,rw"
      device: ":/opt/dockervolume"
```

4. Deploy

```shell
[root@sm1 haproxy]# docker stack deploy -c stack3.yaml stack3
Since --detach=false was not specified, tasks will be created in the background.
In a future release, --detach=false will become the default.
Creating network stack3_default
Creating service stack3_haproxy
Creating service stack3_nginx1
Creating service stack3_nginx2
Creating service stack3_nginx3
```

5. Verify
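One way to exercise the haproxy frontend is to request it repeatedly and count the distinct responses.

- All three nginx services mount the same NFS export as in section 4.13.2, so each should return the same single-line `nfs test` page.
- To see which backend served a request, use haproxy's statistics page at `http://<node>:8080/status`, which `stats uri /status` enables.
- The helper `tally_responses` is hypothetical. The address 192.168.150.108 is the one used in the earlier curl tests.

Also note that the `./haproxy.cfg` bind mount is read on whichever manager runs the haproxy task. The file must therefore exist at /docker-stack/haproxy/haproxy.cfg on every manager (sm1, sm2 and sm3), unless you narrow the constraint, for example to `node.hostname == sm1`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count identical response bodies, one per line on stdin.
# Prints "<count> <body>", most frequent first.
tally_responses() {
  sort | uniq -c | sort -rn | sed 's/^ *//'
}

# Usage (assumes curl is installed and stack3_haproxy publishes port 8080):
#   for i in 1 2 3 4 5 6; do curl -s http://192.168.150.108:8080/; done | tally_responses
```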