在上一篇爬虫文章(14爬虫:scrapy实现翻页爬取-CSDN博客)中,我们对翻页爬取17k网站中涂书的名称,但是17k网站改版加入了无限debug模式导致分页爬取失败。在本次内容中,我们在下载器中间件和selenium实现了分页爬取。

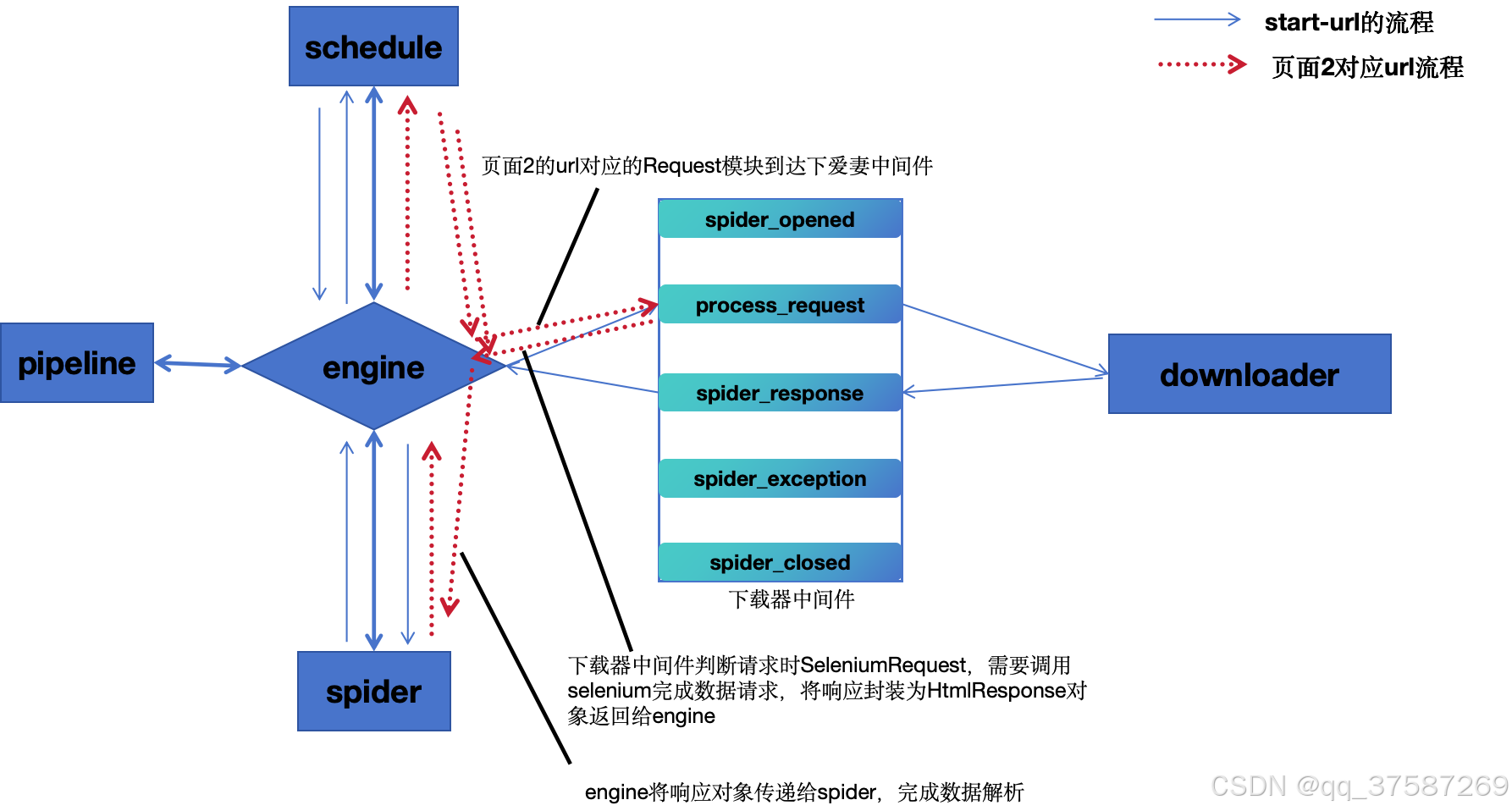

整个程序的逻辑如上图所示:

(1)初始url的响应在spider中解析完毕后,得到的数据通过engine传递给pipeline,实现数据的持久化保存。得到的下一页url封装为SeleniumRequest请求对象,通过engine加入schedule,在由engine提交给downloder。

(2)SeleniumRequest请求对象在传递给downloader的过程中,遇到了下载器中间件(中间件我们可以简单的理解为一堵墙,只有符合条件的才能放行)。在下载器中间件中,我们设置如果是普通的Request对象则直接放行,由downloader完成数据请求在通过下载器提交至engine;但是页面2的url我们封装的是seleniumRequest,因此在下载器中间的process_request方法中实现selenium数据获取并返回Response对象,按照scrapy规则,process_request方法返回Response对象直接给engine,相当于把流程在下载器中间件处截断。

(3)后续的流程就是解析数据,数据持久化保存,selenium请求第三页url。

接下来,我们就给出相应的代码:

python

from scrapy.cmdline import execute

if __name__ == '__main__':

execute("scrapy crawl novelName".split())

python

from scrapy import Request

class SeleniumRequest(Request):

passspider

python

import scrapy

from novelSpider.items import NovelspiderItem

from novelSpider.request import SeleniumRequest

'''

settings.py中进行的设置

USER_AGENT = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36"

ROBOTSTXT_OBEY = False

LOG_LEVEL = "WARNING"

DOWNLOAD_DELAY = 3

ITEM_PIPELINES = {

"novelSpider.pipelines.NovelspiderPipeline": 300,

}

'''

class NovelnameSpider(scrapy.Spider):

name = "novelName"

allowed_domains = ["17k.com"]

start_urls = ["https://www.17k.com/all"] # 修改起始url为我们需要的地址

def parse(self, response, **kwargs):

# print(response.text)

# 当前页内容解析

items = response.xpath('//tbody//td[@class="td3"]/span/a')

for item in items:

name = item.xpath('.//text()').extract_first()

# print(name)

# 小说名称发送至pipeline

item = NovelspiderItem() # 构建item对象

item['name'] = name

yield item

# 翻页

page_urls = response.xpath('//div[@class="page"]/a')

for page_url in page_urls:

p_url = page_url.xpath('.//@href').extract_first()

if p_url.startswith("javascript"):

continue

# print(p_url)

# 拼接url,构建Request对象

p_url = response.urljoin(p_url)

print(p_url)

yield SeleniumRequest(url=p_url, method='GET', callback=self.parse) # 后续的网页请求零用selenium完成middleware

python

from scrapy import signals

from novelSpider.request import SeleniumRequest

from scrapy.http import HtmlResponse

from selenium.webdriver import Chrome

import time

class NovelspiderDownloaderMiddlewareBySelenium:

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the downloader middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

crawler.signals.connect(s.spider_closed, signal=signals.spider_closed)

return s

def process_request(self, request, spider):

# Called for each request that goes through the downloader

# middleware.

# Must either:

# - return None: continue processing this request

# - or return a Response object

# - or return a Request object

# - or raise IgnoreRequest: process_exception() methods of

# installed downloader middleware will be called

# 首先判断是否需要selenium爬取数据

if isinstance(request, SeleniumRequest): # 需要selenium发送请求,返回响应给engine

self.web = Chrome()

time.sleep(5)

self.web.get(request.url)

return HtmlResponse(

url = request.url,

status = 200,

request = request,

body = self.web.page_source,

encoding = 'utf-8'

)

# 如果不需要selenium发送请求,则直接放行

return None

def process_response(self, request, response, spider):

# Called with the response returned from the downloader.

# Must either;

# - return a Response object

# - return a Request object

# - or raise IgnoreRequest

return response

def process_exception(self, request, exception, spider):

# Called when a download handler or a process_request()

# (from other downloader middleware) raises an exception.

# Must either:

# - return None: continue processing this exception

# - return a Response object: stops process_exception() chain

# - return a Request object: stops process_exception() chain

pass

def spider_opened(self, spider):

# scrapy开始的时候创建selenium的Chrome对象

self.web = Chrome()

def spider_closed(self, spider):

# scrapy结束的时候关闭selenium

self.web.close()pipeline

python

class NovelspiderPipeline:

def process_item(self, item, spider):

print(item['name'])

return itemitem

python

import scrapy

class NovelspiderItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

name = scrapy.Field()settings

python

# Scrapy settings for novelSpider project

#

# For simplicity, this file contains only settings considered important or

# commonly used. You can find more settings consulting the documentation:

#

# https://docs.scrapy.org/en/latest/topics/settings.html

# https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = "novelSpider"

SPIDER_MODULES = ["novelSpider.spiders"]

NEWSPIDER_MODULE = "novelSpider.spiders"

# Crawl responsibly by identifying yourself (and your website) on the user-agent

USER_AGENT = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36"

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

LOG_LEVEL = "WARNING"

# Configure maximum concurrent requests performed by Scrapy (default: 16)

#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)

# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay

# See also autothrottle settings and docs

DOWNLOAD_DELAY = 3

# The download delay setting will honor only one of:

#CONCURRENT_REQUESTS_PER_DOMAIN = 16

#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)

#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)

#TELNETCONSOLE_ENABLED = False

# Override the default request headers:

#DEFAULT_REQUEST_HEADERS = {

# "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

# "Accept-Language": "en",

#}

# Enable or disable spider middlewares

# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html

#SPIDER_MIDDLEWARES = {

# "novelSpider.middlewares.NovelspiderSpiderMiddleware": 543,

#}

# Enable or disable downloader middlewares

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html

DOWNLOADER_MIDDLEWARES = {

# "novelSpider.middlewares.NovelspiderDownloaderMiddleware": 543,

"novelSpider.middlewares.NovelspiderDownloaderMiddlewareBySelenium":543,

}

# Enable or disable extensions

# See https://docs.scrapy.org/en/latest/topics/extensions.html

#EXTENSIONS = {

# "scrapy.extensions.telnet.TelnetConsole": None,

#}

# Configure item pipelines

# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html

ITEM_PIPELINES = {

"novelSpider.pipelines.NovelspiderPipeline": 300,

}

# Enable and configure the AutoThrottle extension (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/autothrottle.html

#AUTOTHROTTLE_ENABLED = True

# The initial download delay

#AUTOTHROTTLE_START_DELAY = 5

# The maximum download delay to be set in case of high latencies

#AUTOTHROTTLE_MAX_DELAY = 60

# The average number of requests Scrapy should be sending in parallel to

# each remote server

#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0

# Enable showing throttling stats for every response received:

#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)

# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings

#HTTPCACHE_ENABLED = True

#HTTPCACHE_EXPIRATION_SECS = 0

#HTTPCACHE_DIR = "httpcache"

#HTTPCACHE_IGNORE_HTTP_CODES = []

#HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

# Set settings whose default value is deprecated to a future-proof value

REQUEST_FINGERPRINTER_IMPLEMENTATION = "2.7"

TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

FEED_EXPORT_ENCODING = "utf-8"运行结果