Llama 3.2 900亿参数视觉多模态大模型本地部署及案例展示

本文将介绍如何在本地部署Llama 3.2 90B(900亿参数)视觉多模态大模型,并开发一些Use Case,展示其强大的视觉理解能力。

Llama 3.2 介绍

今年9月,Meta公司发布了 Llama 3.2版本,包括11B 和 90B的中小型视觉大语言模型,适用于边缘计算和移动设备的1B 和 3B轻量级文本模型,,均预训练基础版和指令微调版,除此之外,还发布了一个安全模型Llama Guard 3。

Llama 3.2 Vision 是 Meta 发布的最强大的开源多模态模型。它具有出色的视觉理解和推理能力,可以用于完成各种任务,包括视觉推理与定位、文档问答和图像 - 文本检索,思维链 (Chain of Thought, CoT) 答案通常非常好,这使得视觉推理特别强大。

Llama 2于2023年7月发布,包含7B、13B和70B参数的模型。之后Meta在2024年4月推出了Llama 3,并在2024年7月迅速发布了Llama 3.1版本,更新了8B和70B的模型,最重要的是推出了一个拥有405B参数的基础级模型。这些模型支持8种语言,具备工具调用功能,并且拥有128K的上下文窗口。

2024年9月份刚刚发布了Llama 3.2模型,增强了Llama 3.1的8B和70B模型,构建出11B和90B多模态模型,使其具备了视觉能力。

Llama 3.2 系列中最大的两个模型 11B 和 90B 支持图像推理使用案例,例如文档级理解(包括图表和图形)、图像字幕和视觉接地任务(例如根据自然语言描述定向精确定位图像中的对象)。例如,可以问他们公司在上一年的哪个月销售额最好,然后 Llama 3.2 可以根据可用图表进行推理并快速提供答案。还可以使用地图进行推理并帮助回答诸如徒步旅行何时会遇到陡峭的地形,或在地图上标记路线的距离等问题。11B 和 90B 型号还可以通过从图像中提取细节、理解场景,然后生成一两个可用作图像标题的句子来帮助讲述故事,从而弥合视觉和语言之间的差距。

此外,Meta还发布了两个轻量级的模型:1B和3B模型,这些将帮助支持设备端的AI应用。

在本地运行这些模型有两个主要优势。首先,提示和响应可能会让人感觉是即时的,因为处理是在本地完成的。其次,在本地运行模型不会将消息和日历信息等数据发送到云中,从而保护隐私,从而使整个应用程序更加私密。由于处理是在本地处理的,因此应用程序可以清楚地控制哪些查询保留在设备上,哪些查询可能需要由云中的更大模型处理。

Llama Guard 3也是3.2版本的一部分,这是一种视觉安全模型,可以标记和过滤用户输入的有问题的图像和文本提示词。

GPU显卡内存估算

如何计算大模型到底需要多少显存,是常常被问起的问题,了解如何估算所需的 GPU 内存、正确调整硬件大小以服务这些模型至关重要。这是衡量你对这些大模型在生产中的部署和可扩展性的理解程度的关键指标。

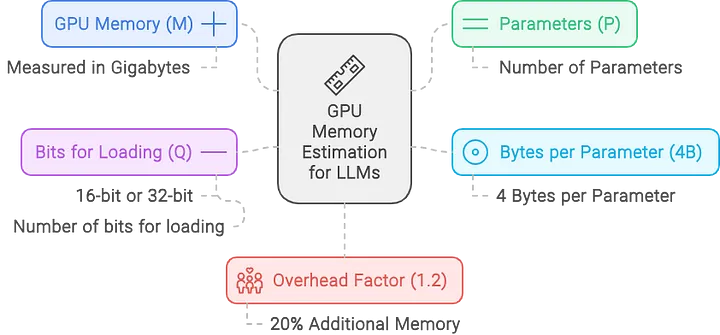

要估算服务大型语言模型所需的 GPU 内存,可以使用以下公式:

M=\\frac{(P \* 4 B)}{(32 / Q)} \* 1.2

- M是所需的 GPU 显卡内存(单位:GB千兆字节)。

- P是模型中的参数数量,表示模型的大小。例如,这里使用的 Llama 90B模型有 900 亿个参数,则该值将为 90。

- 4B表示每个参数使用 4 个字节。每个参数通常需要 4 个字节的内存。这是因为浮点精度通常占用 4 个字节(32 位)。但是,如果使用半精度(16 位),则计算将相应调整。

- Q是加载模型的位数(例如,16 位或 32 位)。根据以 16 位还是 32 位精度加载模型,此值将会发生变化。16 位精度在许多大模型部署中很常见,因为它可以减少内存使用量,同时保持足够的准确性。

- 1.2 乘数增加了 20% 的开销,以解决推理期间使用的额外内存问题。这不仅仅是一个安全缓冲区;它对于覆盖模型执行期间激活和其他中间结果所需的内存至关重要。

这里想要估算为具有 90B(900 亿)个参数、以 16 位精度加载的 Llama 3.2 90B 视觉大模型提供服务所需的内存:

M=\\frac{(90 \* 4)}{(32 / 16)} \* 1.2 = 216 GB

这个计算告诉我们,需要大约216 GB 的 GPU 内存来为 16 位模式下具有 900 亿个参数的 Llama 3.2 90B 大模型提供服务。

因此,单个具有 80 GB 内存的 NVIDIA A100 GPU 或者 H00 GPU 不足以满足此模型的需求,需要至少3张具有 80 GB 内存的 A100 GPU 才能有效处理内存负载。

此外,仅加载 CUDA 内核就会消耗 1-2GB 的内存。实际上,无法仅使用参数填满整个 GPU 内存作为估算依据。

如果是训练大模型情况(下一篇文章会介绍),则需要更多的 GPU 内存,因为优化器状态、梯度和前向激活每个参数都需要额外的内存。

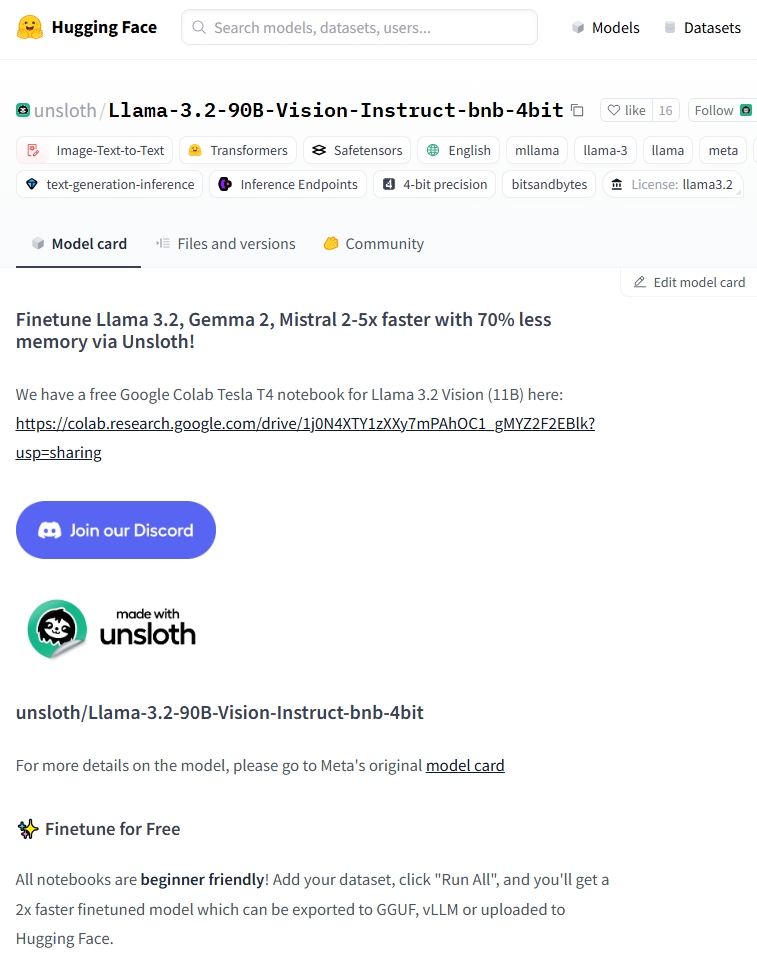

但博主囊中羞涩,为了完成这篇文章,选择 unsloth/Llama-3.2-90B-Vision-Instruct-bnb-4bit 的Llama 3.2 90B 视觉大模型的4bit量化模型,根据上面的估算公式,仅使用1张具有 80 GB 内存的 GPU 就可以运行完成本文案例所需的模型推理任务。

环境搭建

云服务器

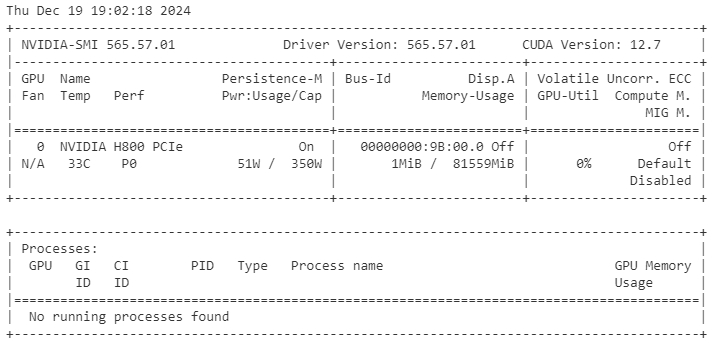

找一台带一张H800 GPU 显卡的服务器(博主租用了一台服务器,花费大概50元左右就能跑完本文案例use case,当然还需要一些降低费用的小技巧,比如提前租用配置的服务器把模型文件下载到服务器,这样就可以节省很多费用💰),具体配置如下:

- GPU:H800,80GB显存

- CPU:20 核,Xeon(R) Platinum 8458P

- 内存:100 GB

- 系统盘:30 GB

- 数据盘:200 GB(用于存放模型文件)

读者可以添加下面博主公众号,回复241220,获取租用云服务器的链接和方法,无需安装以下所需的CUDA、cuDNN、Python和PyTorch,直接使用。

如果读者自行安装CUDA和cuDNN,并配置环境变量,具体方法可以参考英伟达官网:

本文所需的的CUDA、cuDNN、Python和PyTorch版本如下:

- CUDA版本:12.4

- cuDNN版本:9.1.0.70

- Python版本:3.12.2

- PyTorch版本:2.5.1

读者可以使用VSCode、Cursor或者其它SSH客户端连接到云服务器,然后使用以下命令安装CUDA和cuDNN。

安装前需要确保服务器上已经安装了NVIDIA驱动,可以使用以下命令查看是否安装了NVIDIA驱动:

shell

nvidia-smi安装前,需要确保PyTorch与CUDA的版本对应,否则会报错。

1. 安装CUDA 12.4

shell

wget https://developer.download.nvidia.com/compute/cuda/12.4.0/local_installers/cuda_12.4.0_535.104.05_linux.run

sudo sh cuda_12.4.0_535.104.05_linux.run2. 安装cuDNN 9.1.0.70

shell

# 首先从NVIDIA开发者网站下载cuDNN

tar -xvf cudnn-linux-x86_64-9.1.0.70_cuda12-archive.tar.xz

sudo cp cudnn-*-archive/include/cudnn*.h /usr/local/cuda/include

sudo cp -P cudnn-*-archive/lib/libcudnn* /usr/local/cuda/lib64

sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*3. 配置环境变量

shell

echo 'export PATH=/usr/local/cuda-12.4/bin:$PATH' >> ~/.bashrc

echo 'export LD_LIBRARY_PATH=/usr/local/cuda-12.4/lib64:$LD_LIBRARY_PATH' >> ~/.bashrc

source ~/.bashrc4. 安装Python 3.12.2

shell

sudo apt update

sudo apt install software-properties-common

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt install python3.12

sudo apt install python3.12-venv python3.12-dev5. 安装pip

shell

curl https://bootstrap.pypa.io/get-pip.py -o get-pip.py

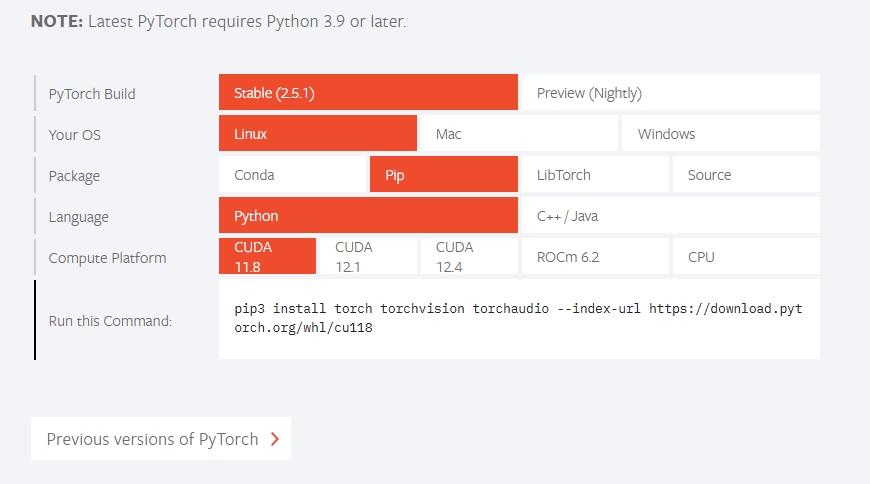

python3.12 get-pip.py6. 安装PyTorch 2.5.1

shell

pip3 install torch==2.5.1 torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121读者可以使用以下命令查看GPU内存信息

shell

nvidia-smi如果安装正确,则可以看到类似如下的GPU内存信息

下载模型文件

为了在本地部署模型,事先需要从Huggingface上手动下载Unsloth量化过的Llama 3.2 90B 视觉大模型的4 bit量化模型unsloth/Llama-3.2-90B-Vision-Instruct-bnb-4bit 。

- 安装Huggingface Hub客户端

shell

pip install -U huggingface_hub- 设置Huggingface镜像源(必须设置,否则下载模型会报错⚠️)

shell

export HF_ENDPOINT=https://hf-mirror.com- 登录Huggingface (跳过这步)

shell

huggingface-cli login- 下载模型

shell

huggingface-cli download --resume-download unsloth/Llama-3.2-90B-Vision-Instruct-bnb-4bit --local-dir 【这里替换为模型本地存放路径】下载成功后的Llama 3.2 90B视觉大模型的文件列表如下:

shell

drwxr-xr-x 3 root root 4096 Dec 19 16:33 ./

drwxr-xr-x 3 root root 4096 Dec 19 16:33 ../

drwxr-xr-x 3 root root 4096 Dec 19 10:08 .cache/

-rw-r--r-- 1 root root 1570 Dec 19 10:08 .gitattributes

-rw-r--r-- 1 root root 6131 Dec 19 10:08 README.md

-rw-r--r-- 1 root root 5151 Dec 19 10:08 chat_template.json

-rw-r--r-- 1 root root 5870 Dec 19 10:08 config.json

-rw-r--r-- 1 root root 210 Dec 19 10:08 generation_config.json

-rw-r--r-- 1 root root 4947107668 Dec 19 11:22 model-00001-of-00010.safetensors

-rw-r--r-- 1 root root 4977064274 Dec 19 10:58 model-00002-of-00010.safetensors

-rw-r--r-- 1 root root 4977064462 Dec 19 10:36 model-00003-of-00010.safetensors

-rw-r--r-- 1 root root 4977098481 Dec 19 10:40 model-00004-of-00010.safetensors

-rw-r--r-- 1 root root 4933796471 Dec 19 10:34 model-00005-of-00010.safetensors

-rw-r--r-- 1 root root 4977064512 Dec 19 10:40 model-00006-of-00010.safetensors

-rw-r--r-- 1 root root 4977064455 Dec 19 11:08 model-00007-of-00010.safetensors

-rw-r--r-- 1 root root 4977098479 Dec 19 10:47 model-00008-of-00010.safetensors

-rw-r--r-- 1 root root 4933796461 Dec 19 11:07 model-00009-of-00010.safetensors

-rw-r--r-- 1 root root 4263159971 Dec 19 16:33 model-00010-of-00010.safetensors

-rw-r--r-- 1 root root 678066 Dec 19 10:40 model.safetensors.index.json

-rw-r--r-- 1 root root 477 Dec 19 10:40 preprocessor_config.json

-rw-r--r-- 1 root root 454 Dec 19 10:40 special_tokens_map.json

-rw-r--r-- 1 root root 17210088 Dec 19 10:40 tokenizer.json

-rw-r--r-- 1 root root 55931 Dec 19 10:40 tokenizer_config.json安装模型运行环境Unsloth

安装Conda

shell

mkdir -p ~/miniconda3

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O ~/miniconda3/miniconda.sh

bash ~/miniconda3/miniconda.sh -b -u -p ~/miniconda3

rm -rf ~/miniconda3/miniconda.sh

~/miniconda3/bin/conda init bash

~/miniconda3/bin/conda init zsh安装模型运行环境Unsloth

以下代码用于安装模型运行环境Unsloth,并导入模型运行所需的依赖包。

shell

conda create --name unsloth_env \

python=3.11 \

pytorch-cuda=12.1 \

pytorch cudatoolkit xformers -c pytorch -c nvidia -c xformers \

-y

conda activate unsloth_env

pip install "unsloth[colab-new] @ git+https://github.com/unslothai/unsloth.git"

pip install --no-deps trl peft accelerate bitsandbytes

python

import warnings

warnings.filterwarnings('ignore')

%pip install unsloth加载模型

python

from unsloth import FastVisionModel # 从unsloth库中导入FastVisionModel类,用于处理视觉相关的大语言模型

import torch

model, tokenizer = FastVisionModel.from_pretrained(

"/root/autodl-fs/model/unsloth/Llama-3.2-90B-Vision-Instruct-bnb-4bit", # 此处替换为Llama-3.2-90B-Vision-Instruct-bnb-4bit在模型本地存放的绝对路径

load_in_4bit = True, # 使用4bit以减少内存使用。False则使用16bit LoRA。

use_gradient_checkpointing = "unsloth", # True或"unsloth"用于长文本上下文

)

FastVisionModel.for_inference(model) # 启动视觉大模型的推理模式==((====))== Unsloth 2024.12.8: Fast Mllama vision patching. Transformers: 4.46.3.

\\ /| GPU: NVIDIA H800 PCIe. Max memory: 79.205 GB. Platform: Linux.

O^O/ \_/ \ Torch: 2.5.1+cu124. CUDA: 9.0. CUDA Toolkit: 12.4. Triton: 3.1.0

\ / Bfloat16 = TRUE. FA [Xformers = 0.0.28.post3. FA2 = False]

"-____-" Free Apache license: http://github.com/unslothai/unsloth

Unsloth: Fast downloading is enabled - ignore downloading bars which are red colored!

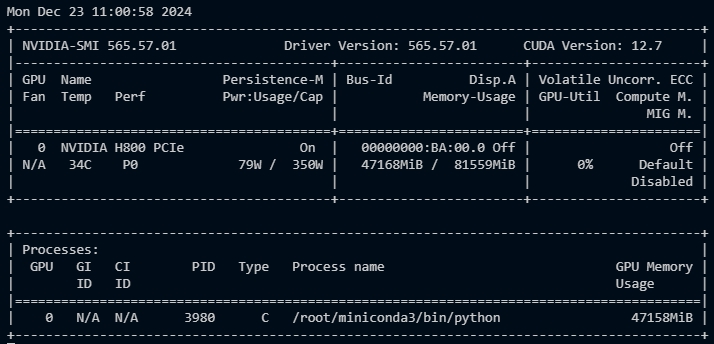

Loading checkpoint shards: 0%| | 0/10 [00:00<?, ?it/s]加载模型成功后,可以使用以下命令查看模型占用内存

shell

nvidia-smi -l 2

Llama 3.2 90B 多模态视觉大模型推理案例展示

本文以下内容使用jupyter notebook进行演示。

读者可以添加下面博主公众号,回复241220,获取本文所使用的notebook文件。

封装模型推理函数

python

def llama32_image(instruction, image_url):

from PIL import Image

image = Image.open(image_url)

messages = [

{"role": "user", "content": [

{"type": "image"},

{"type": "text", "text": instruction}

]}

]

input_text = tokenizer.apply_chat_template(messages, add_generation_prompt = True)

inputs = tokenizer(

image,

input_text,

add_special_tokens = False,

return_tensors = "pt",

).to("cuda")

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer, skip_prompt = True)

output = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 2048,

use_cache = True, temperature = 1.5, min_p = 0.1)

return output

def llama32_text(instruction):

messages = [

{"role": "user", "content": [

{"type": "text", "text": instruction}

]}

]

input_text = tokenizer.apply_chat_template(messages, add_generation_prompt = True)

inputs = tokenizer(

None,

input_text,

add_special_tokens = False,

return_tensors = "pt",

).to("cuda")

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer, skip_prompt = True)

output = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 2048,

use_cache = True, temperature = 1.5, min_p = 0.1)

return output

import os

import requests

from PIL import Image

from io import BytesIO

import matplotlib.pyplot as plt

def disp_image(address):

if address.startswith("http://") or address.startswith("https://"):

response = requests.get(address)

img = Image.open(BytesIO(response.content))

else:

img = Image.open(address)

plt.imshow(img)

plt.axis('off')

plt.show()1. 胎压警告识别

python

disp_image("images/tire_pressure.png")

上图是一张汽车仪表盘上轮胎压力告警的图片,下面针对该图片进行提问,问题在question变量中,模型推理结果在result变量中。

由于Llama 3.2目前没有中文版本,所以模型推理的输入输出都是英文,博主会将输入输出的英文翻译成中文,方便读者理解。

期待不久的将来Llama 3.2 90B 视觉大模型会推出中文版本。

python

question = ("What's the problem this is about?"

" What should be good numbers?")

# 这个是关于什么的问题?什么样的数值才是合适的?

result = llama32_image(question, "images/tire_pressure.png")

print(result)The problem being shown is a low tire pressure warning. The recommended tire pressures are displayed in the dashboard's display screen, with the current pressure displayed next to each tire. The recommended pressure for this particular car is 33 pounds per square inch (psi) for both the front and back tires.

To rectify this issue, one should inflate the tires to the recommended pressure to ensure proper safety and performance while driving. It's also a good idea to check tire pressures regularly, as under-inflated tires can lead to reduced fuel efficiency, uneven tire wear, and increased risk of tire failure.<|eot_id|>输出结果:

这个问题显示的是轮胎压力过低警告。仪表盘显示屏上显示了推荐的轮胎压力,每个轮胎旁边都显示了当前压力。对于这辆特定的汽车来说,前轮和后轮的推荐压力都是33磅/平方英寸(psi)。

为了解决这个问题,应该将轮胎充气到推荐的压力,以确保行驶时的安全性和性能。定期检查轮胎压力也是一个好主意,因为轮胎充气不足会导致燃油效率降低、轮胎磨损不均匀,并增加轮胎故障的风险。

2. 犬种识别

python

disp_image("images/ww1.jpg")

上图是一张狗狗的图片,下面针对该图片进行提问,问题在question变量中,模型推理结果在result变量中。

python

question = ("What dog breed is this? Describe in one paragraph,"

"and 3-5 short bullet points")

# 这是什么品种的狗?用一段话描述,并列出3-5个要点

result = llama32_image(question, "images/ww1.jpg")

print(result)The dog breed depicted in the image is a Labrador Retriever.

Here are 3 key details that support this answer:

* **Physical Characteristics**: The dog has a short, dense coat and a broad head with a well-defined stop at the eyes, which are hallmarks of the Labrador Retriever breed.

* **Size and Proportion**: The dog appears to be a puppy, but its overall size and proportion are consistent with that of an adult Labrador Retriever.

* **Temperament**: The dog's friendly and outgoing demeanor is also typical of the breed, which is known for its gentle nature and high intelligence.<|eot_id|>

tensor([[128000, 128006, 882, 128007, 271, 128256, 3923, 5679, 28875,输出结果:

图中显示的是一只拉布拉多犬。

以下是3个支持这个结论的关键细节:

- 体型特征:这只狗有短而浓密的被毛,宽阔的头部,眼睛处有明显的额骨,这些都是拉布拉多犬的典型特征。

- 体型和比例:虽然这只狗看起来是一只幼犬,但其整体体型和比例与成年拉布拉多犬一致。

- 性格特征:这只狗友好外向的性格也是该品种的典型特征,拉布拉多犬以其温和的性格和高智商而闻名。

python

disp_image("images/ww2.png")

再来看一张狗狗的图片,下面针对该图片进行同样的提问,问题在question变量中,模型推理结果在result变量中。

python

result = llama32_image(question, "images/ww2.png")

print(result) This dog appears to be a Labrador Retriever, a popular breed known for its friendly and outgoing nature.

Here are some key characteristics of Labradors:

• **Coat**: Short, dense, and smooth

• **Size**: Medium to large (55-80 pounds)

• **Eyes**: Brown or hazel

• **Ears**: Hanging, triangular in shape

• **Temperament**: Friendly, outgoing, and energetic<|eot_id|>输出结果:

这只狗看起来是一只拉布拉多犬,这是一种以友好和外向性格著称的流行犬种。

以下是拉布拉多犬的一些主要特征:

- 被毛:短、密、光滑

- 体型:中型到大型(25-36公斤)

- 眼睛:棕色或榛色

- 耳朵:下垂,呈三角形

- 性格:友好、外向、精力充沛

3. 植物识别

python

disp_image("images/tree.jpg")

这是花园里的一棵树,下面针对该图片进行提问,问题在question变量中,模型推理结果在result变量中。

python

question = ("What kind of plant is this in my garden?"

"Describe it in a short paragraph.")

# 这是我的花园里的一棵什么植物?用一段话描述

result = llama32_image(question, "images/tree.jpg")

print(result)This appears to be a pomegranate tree in your garden. Pomegranates are a shrubby tree with short spines in some species and smooth bark. They have many thin, leathery leaves, that are dark green on top and gray green below, that drop during winter and new leaves develop in the spring. The pomegranate flowers in the late spring and summer after the new leaves emerge. They produce small white flowers that produce large, fleshy fruit with many tiny seed pods.<|eot_id|>输出结果:

这看起来是一棵石榴树。石榴是一种灌木状的树,某些品种有短刺,树皮光滑。它有许多薄而革质的叶子,叶子上面深绿色,下面灰绿色,冬季会落叶,春季会长出新叶。石榴树在新叶长出后的晚春和夏季开花。它会开出小白花,结出含有许多小籽的大而多汁的果实。

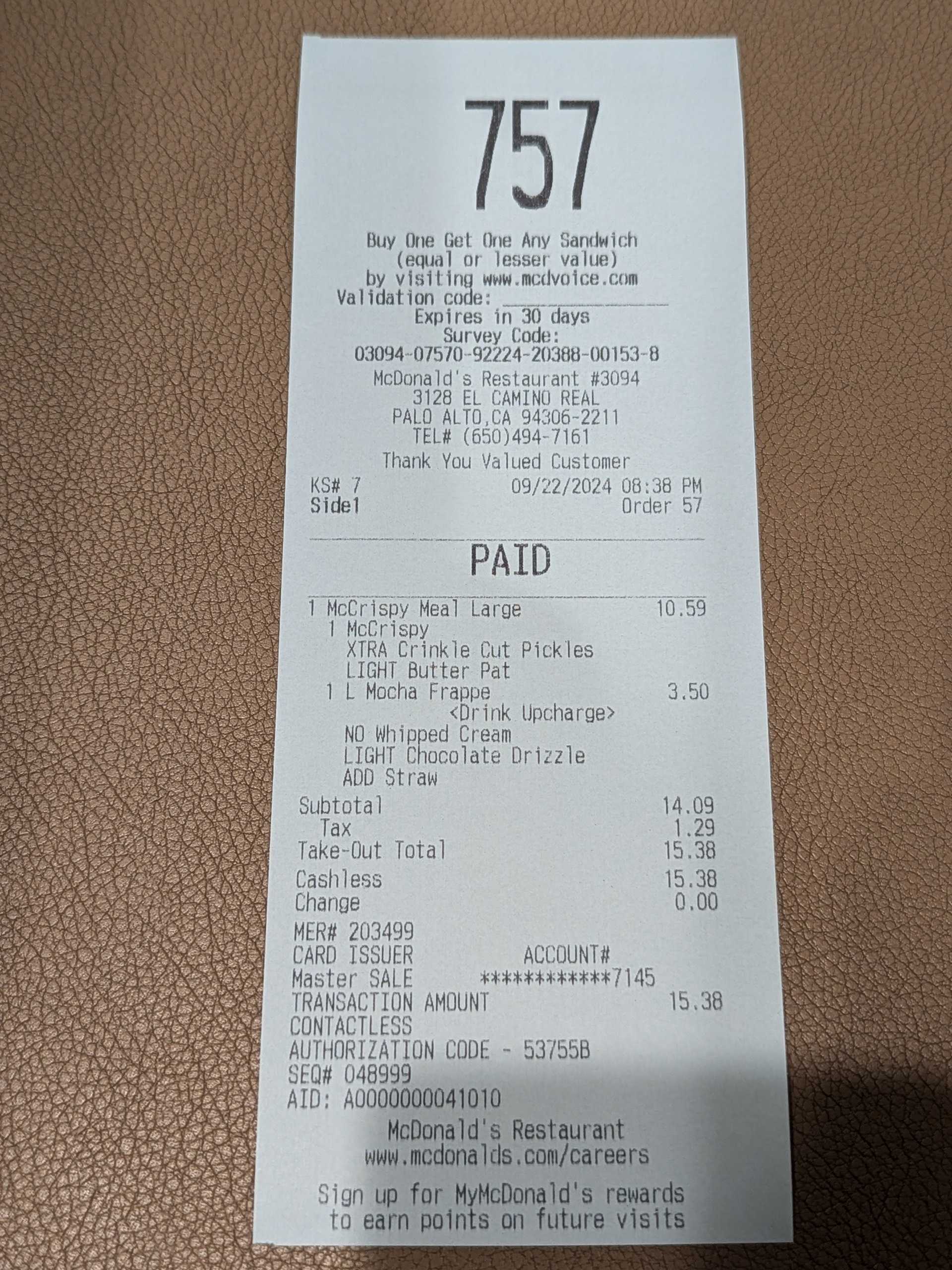

4. 发票OCR识别

python

for i in range(1, 4):

disp_image(f"images/receipt-{i}.jpg")

)

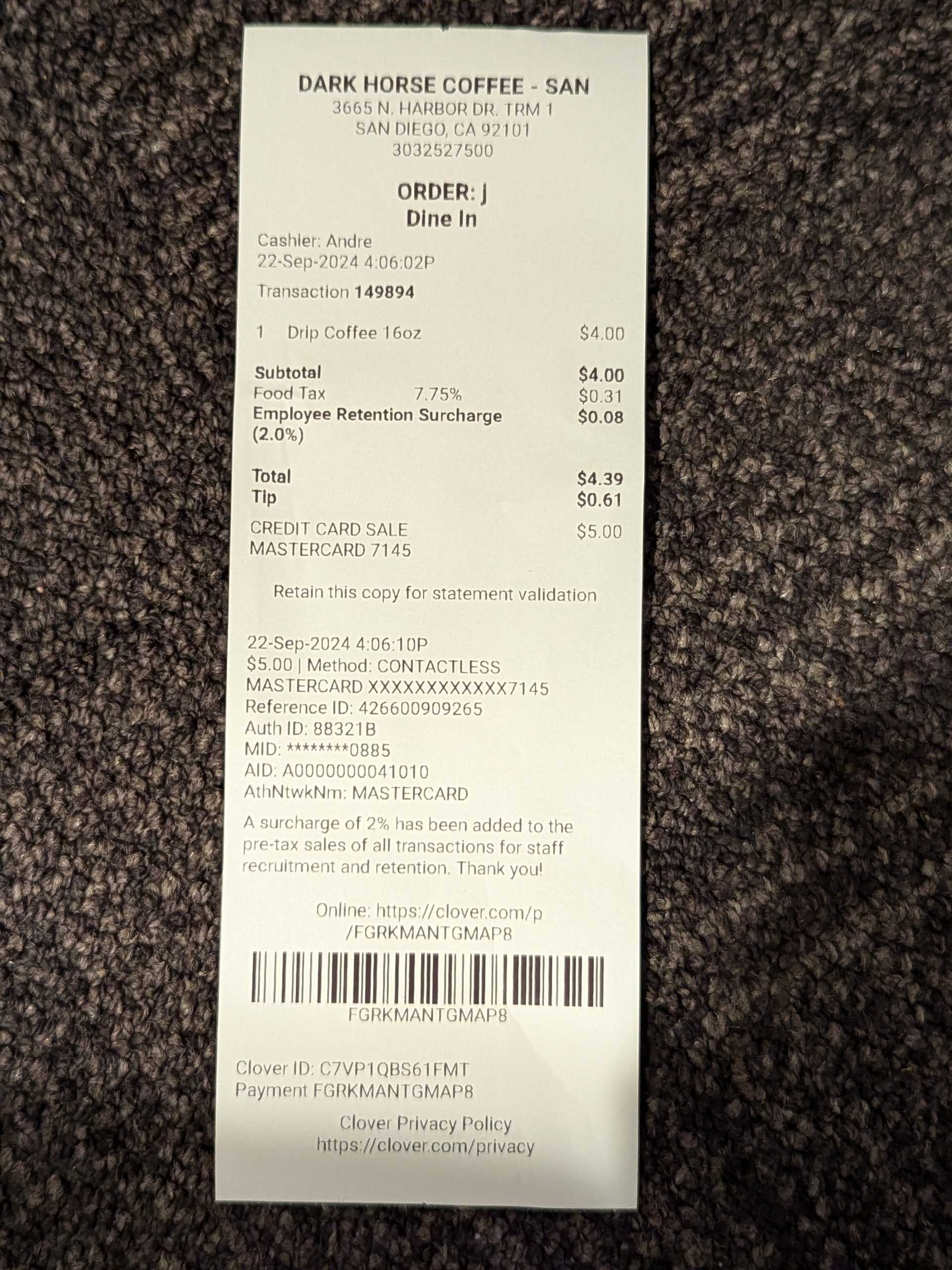

上面是三张发票的图片,下面针对这三张发票分别进行提问。

python

question = "What's the total charge in the receipt?"

# 这张发票的总金额是多少?

result = llama32_image(question, "images/receipt-1.jpg")

print(result)The total charge is $13.96, which includes the cost of two slices of pizza ($6.25) and a tip ($1.69).<|eot_id|>输出结果:

总金额是13.96,包括两片披萨的费用(6.25)和小费($1.69)。

python

question = "What's the total charge in the receipt?"

# 这张发票的总金额是多少?

result = llama32_image(question, "images/receipt-2.jpg")

print(result)According to the receipt, the total charge is $15.38, with $14.09 being the subtotal, and $1.29 for taxes. The total was paid in cash.<|eot_id|>输出结果:

根据发票,总金额是15.38,其中14.09是商品总价,$1.29是税。总金额以现金支付。

python

question = "What's the total charge in the receipt?"

# 这张发票的总金额是多少?

result = llama32_image(question, "images/receipt-3.jpg")

print(result)The total charge in the receipt is $4.39, which includes a subtotal of $4.00 for the purchase and a tip of $0.61, with a total tip of $5.00.<|eot_id|>输出结果:

发票的总金额是4.39,其中包括4.00的商品小计和0.61的小费,总小费为5.00。

5. 选择合适的饮料

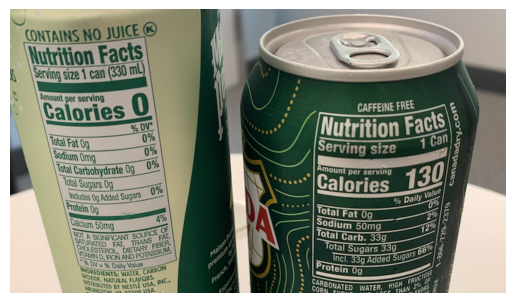

python

disp_image("images/drinks.png")

上面是一张2瓶饮料的图片,下面针对该图片进行提问。

python

question = "I am on a diet. Which drink should I drink?"

# 我正在节食,应该喝哪种饮料?

result = llama32_image(question, "images/drinks.png")

print(result)Since you are on a diet, the choice between the two drinks should be based on their caloric content.

The 7UP can is completely transparent, indicating that it has zero calories. On the other hand, the Rockstar energy drink has 130 calories per serving. Therefore, if you are trying to reduce your caloric intake, the 7UP would be the better choice.

**Key Points:**

* The 7UP can has 0 calories.

* The Rockstar energy drink has 130 calories.

**Recommendation:**

Based on the information provided, the 7UP would be the more suitable option for someone who is on a diet.<|eot_id|>输出结果:

由于您正在节食,两种饮料的选择应该基于它们的热量含量。

7UP罐装饮料完全透明,表明它不含热量。另一方面,Rockstar能量饮料每份含有130卡路里。因此,如果您想减少热量摄入,7UP将是更好的选择。

要点:

- 7UP含0卡路里。

- Rockstar能量饮料含130卡路里。

建议:

根据提供的信息,对于正在节食的人来说,7UP是更合适的选择。

下面针对该图片再次提问。

python

question = ("Generete nurtrition facts of the two drinks "

"in JSON format for easy comparison.")

# 生成两瓶饮料的营养成分,以JSON格式,便于比较

result = llama32_image(question, "images/drinks.png")

print(result) The provided image does not contain enough information about the drinks in the picture. However, the nutrition facts can be represented in JSON format as follows:

```

[

{

"serving_size": "1 can (330 ml)",

"calories": 0,

"fat": "0%",

"sodium": "0mg",

"carbohydrates": "0g",

"sugars": "0%",

"protein": "0g"

},

{

"serving_size": "1 can",

"calories": 130,

"fat": "0g",

"sodium": "50mg",

"carbohydrates": "33g",

"sugars": "33g",

"protein": "0g"

}

]

```

This JSON format provides a clear comparison of the nutritional information between the two drinks.<|eot_id|>输出结果:

图片中没有提供足够的饮料营养信息。但是,营养成分可以用JSON格式表示如下:

json

[

{

"serving_size": "1罐 (330毫升)",

"calories": 0,

"fat": "0%",

"sodium": "0毫克",

"carbohydrates": "0克",

"sugars": "0%",

"protein": "0克"

},

{

"serving_size": "1罐",

"calories": 130,

"fat": "0克",

"sodium": "50毫克",

"carbohydrates": "33克",

"sugars": "33克",

"protein": "0克"

}

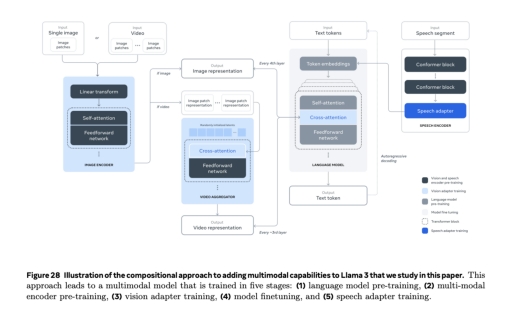

]6. 理解大模型架构图并生成实现代码

python

disp_image("images/llama32mm.png")

上面是一张Llama 3.2多模态大模型架构图,下面针对该图片进行提问。

这是一张Llama 3.2多模态大模型的架构图,展示了模型如何处理图像和文本输入。

图中显示了模型的主要组件和数据流向,包括图像编码器、文本编码器和解码器等部分。

下面我们将请求模型解释这个架构图,并生成相应的Python实现代码。

python

question = ("I see this diagram in the Llama 3 paper. "

"Summarize the flow in text and then return a "

"python script that implements the flow.")

# 我在Llama 3论文中看到这个图表。请用文字总结流程,然后返回一个实现该流程的Python脚本。

result = llama32_image(question, "images/llama32mm.png")

print(result) The diagram illustrates the compositional approach to adding multimodal capabilities to Llama 3. This approach leads to a multimodal model that is trained in five stages:

* **Language Model Pre-training**: The first stage involves pre-training a language model on text data.

* **Vision Adapter Training**: In the second stage, the pre-trained language model is fine-tuned as a vision adapter using a visual-linguistic dataset. This step helps the model to understand the relationship between visual inputs and text descriptions.

* **Multimodal Encoder Pre-training**: In the third stage, the vision adapter model is further pre-trained with a multimodal encoder. This step allows the model to learn how to combine visual and linguistic information effectively.

* **Model Finetuning**: The fourth stage involves fine-tuning the multimodal encoder on downstream tasks that require both visual and linguistic understanding. This step enables the model to learn task-specific representations that are relevant to the specific task at hand.

* **Speech Adapter Training**: In the final stage, the fine-tuned multimodal encoder model is trained as a speech adapter using audio-linguistic datasets. This step enables the model to learn how to incorporate speech inputs into its multimodal representation.

**Python Implementation**

To implement the flow outlined in the diagram, the following python script can be used as a reference. Please note that this implementation is a high-level overview and might require significant additional coding and refinement to make it operational.

```python

import torch

import torchvision

import numpy as np

# Define the model architectures

class LanguageModel(torch.nn.Module):

def __init__(self):

super(LanguageModel, self).__init__()

# Define the text encoder

self.text_encoder = torch.nn.LSTM(input_size=256, hidden_size=512, num_layers=2)

def forward(self, x):

# Pass the input through the text encoder

outputs, _ = self.text_encoder(x)

return outputs

class VisionAdapter(torch.nn.Module):

def __init__(self):

super(VisionAdapter, self).__init__()

# Define the visual-linguistic dataset adapter

self.visual_adapter = torch.nn.Linear(input_size=256, output_size=512)

def forward(self, x):

# Pass the input through the visual adapter

outputs = self.visual_adapter(x)

return outputs

class MultimodalEncoder(torch.nn.Module):

def __init__(self):

super(MultimodalEncoder, self).__init__()

# Define the multimodal encoder

self.multimodal_encoder = torch.nn.LSTM(input_size=1024, hidden_size=512, num_layers=2)

def forward(self, x):

# Pass the input through the multimodal encoder

outputs, _ = self.multimodal_encoder(x)

return outputs

class SpeechAdapter(torch.nn.Module):

def __init__(self):

super(SpeechAdapter, self).__init__()

# Define the audio-linguistic dataset adapter

self.speech_adapter = torch.nn.Linear(input_size=256, output_size=512)

def forward(self, x):

# Pass the input through the speech adapter

outputs = self.speech_adapter(x)

return outputs

# Initialize the models

language_model = LanguageModel()

vision_adapter = VisionAdapter()

multimodal_encoder = MultimodalEncoder()

speech_adapter = SpeechAdapter()

# Train the models

for epoch in range(100):

# Train the language model

language_model.zero_grad()

output = language_model(torch.randn(1, 10, 256))

loss = torch.nn.MSELoss()(output, torch.randn(1, 10, 256))

loss.backward()

optimizer = torch.optim.Adam(language_model.parameters(), lr=0.001)

optimizer.step()

# Train the vision adapter

vision_adapter.zero_grad()

output = vision_adapter(torch.randn(1, 10, 256))

loss = torch.nn.MSELoss()(output, torch.randn(1, 10, 256))

loss.backward()

optimizer = torch.optim.Adam(vision_adapter.parameters(), lr=0.001)

optimizer.step()

# Train the multimodal encoder

multimodal_encoder.zero_grad()

output = multimodal_encoder(torch.randn(1, 10, 1024))

loss = torch.nn.MSELoss()(output, torch.randn(1, 10, 1024))

loss.backward()

optimizer = torch.optim.Adam(multimodal_encoder.parameters(), lr=0.001)

optimizer.step()

# Train the speech adapter

speech_adapter.zero_grad()

output = speech_adapter(torch.randn(1, 10, 256))

loss = torch.nn.MSELoss()(output, torch.randn(1, 10, 256))

loss.backward()

optimizer = torch.optim.Adam(speech_adapter.parameters(), lr=0.001)

optimizer.step()

# Test the models

print("Language Model:")

print(language_model(torch.randn(1, 10, 256)))

print("Vision Adapter:")

print(vision_adapter(torch.randn(1, 10, 256)))

print("Multimodal Encoder:")

print(multimodal_encoder(torch.randn(1, 10, 1024)))

print("Speech Adapter:")

print(speech_adapter(torch.randn(1, 10, 256)))

```

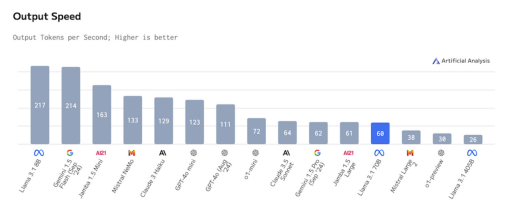

Please note that this implementation is a high-level overview and might require significant additional coding and refinement to make it operational.<|eot_id|>7. 理解图表

python

disp_image("images/llama31speed.png")

上面是一张柱状图,下面针对该图片进行提问。

python

question = "Convert the chart to an HTML table."

# 将图表转换为HTML表格

result = llama32_image(question, "images/llama31speed.png")

print(result) Sure, here is the information you requested:

<table><colgroup><col></colgroup>

<thead><tr>

<th>Lama 5 (Meta)</th><th>GLaM Large</th><th>J1 7B (LLaMA)</th><th>J1 13B (LLaMA)</th><th>Cicada 7 1e10</th><th>OPT-6B-All</th><th>OPT-13B</th><th>OPT-30B</th><th>Chinchilla 70B</th><th>Chimera 2 (OAI)</th><th>Galactica 2 (OAI)</th><th>Galactica 3 (OAI)</th><th>GPT-3.5 2B</th><th>GPT-3.5 Large</th><th>Llama 2 (Meta)</th>

<th>217</th><th>38</th><th>214</th><th>169</th><th>133</th><th>129</th><th>111</th><th>79</th><th>64</th><th>62</th><th>61</th><th>38</th><th>34</th><th>28</th><th>25</th>

</tr><tr>

<th>Output Tokens per Second; Higher is better</th></tr><tr>

<th>Output Tokens per Second</th></tr><tr>

<th>Higher is better</th>

</tr></thead><tbody></tbody></table>

Note that the table does not contain information as the graph seems to only compare the models' output speeds in "output tokens per second", not much more information is provided in the graph, please provide me with the accurate information and I would be glad to create a table for you.

The above is an approximation of how the table will look like using html as you requested.<|eot_id|>然后根据输出的HTML代码,就可以用下面的方法以可读的形式展示出来。

python

from IPython.display import HTML

minified_html_table = "<table><thead><tr><th>Model</th><th>Output Tokens per Second</th></tr></thead><tbody><tr><td>Llama 2 1.5B</td><td>217</td></tr><tr><td>Google's PaLM 2 540B</td><td>214</td></tr><tr><td>Google's PaLM 2 540B</td><td>163</td></tr><tr><td>Meta's LLaMA 2 70B</td><td>133</td></tr><tr><td>Meta's LLaMA 2 70B</td><td>129</td></tr><tr><td>Google's T5 3.5B</td><td>123</td></tr><tr><td>OPT-6B</td><td>111</td></tr><tr><td>OPT-6B</td><td>75</td></tr><tr><td>ChatGPT-3.5</td><td>64</td></tr><tr><td>Google's T5 3.5B</td><td>62</td></tr><tr><td>Google's T5 3.5B</td><td>61</td></tr><tr><td>Meta's LLaMA 2 7B</td><td>68</td></tr><tr><td>Meta's LLaMA 2 7B</td><td>38</td></tr><tr><td>Meta's LLaMA 2 7B</td><td>38</td></tr><tr><td>Meta's LLaMA 2 7B</td><td>25</td></tr></tbody></table>"

HTML(minified_html_table)| Model | Output Tokens per Second |

|---|---|

| Llama 2 1.5B | 217 |

| Google's PaLM 2 540B | 214 |

| Google's PaLM 2 540B | 163 |

| Meta's LLaMA 2 70B | 133 |

| Meta's LLaMA 2 70B | 129 |

| Google's T5 3.5B | 123 |

| OPT-6B | 111 |

| OPT-6B | 75 |

| ChatGPT-3.5 | 64 |

| Google's T5 3.5B | 62 |

| Google's T5 3.5B | 61 |

| Meta's LLaMA 2 7B | 68 |

| Meta's LLaMA 2 7B | 38 |

| Meta's LLaMA 2 7B | 38 |

| Meta's LLaMA 2 7B | 25 |

8. 观察冰箱

python

disp_image("images/fridge-3.jpg")

上面是一张冰箱内部的照片,针对这张图片进行提问。

python

question = ("What're in the fridge? What kind of food can be made? Give "

"me 2 examples, based on only the ingredients in the fridge.")

# 冰箱里有什么?基于冰箱里的食材,能做什么食物?请给出2个例子。

result = llama32_image(question, "images/fridge-3.jpg")

print(result) The fridge contains a variety of ingredients, including vegetables like asparagus and apples, as well as beverages such as beer and juice.

If I were to make food based on only these ingredients, two possibilities that come to mind are:

1. Asparagus and Apple Salad: A simple salad made with asparagus, apples, and a squeeze of lemon juice could be a refreshing option.

2. Beer-Braised Asparagus: Searing asparagus in butter, then adding beer and reducing it to a syrup, would result in a savory dish perfect for a main course or side.

These dishes would not only utilize the available ingredients but also showcase the versatility of beer in cooking.<|eot_id|>输出结果:

冰箱里有各种食材,包括芦笋和苹果等蔬菜水果,以及啤酒和果汁等饮料。

如果只用这些食材来做菜的话,我能想到两种可能的做法:

- 芦笋苹果沙拉:用芦笋、苹果和柠檬汁制作的清爽简单沙拉。

- 啤酒焖芦笋:先用黄油煎芦笋,然后加入啤酒收汁,可以做成一道美味的主菜或配菜。

这些菜不仅充分利用了现有的食材,还展示了啤酒在烹饪中的多样性。

下面封装一个带有上下文的函数llama32_image_re,再提问一次。

python

def llama32_image_re(instruction, result, new_instruction, image_url):

from PIL import Image

image = Image.open(image_url)

messages = [

{"role": "user", "content": [

{"type": "image"},

{"type": "text", "text": instruction}

]},

{"role": "assistant", "content": result},

{"role": "user", "content": new_instruction}

]

input_text = tokenizer.apply_chat_template(messages, add_generation_prompt = True)

inputs = tokenizer(

image,

input_text,

add_special_tokens = False,

return_tensors = "pt",

).to("cuda")

from transformers import TextStreamer

text_streamer = TextStreamer(tokenizer, skip_prompt = True)

output = model.generate(**inputs, streamer = text_streamer, max_new_tokens = 2048,

use_cache = True, temperature = 1.5, min_p = 0.1)

return output

python

new_question = "is there banana in the fridge? where?"

# 冰箱里有香蕉吗?在哪里?

res = llama32_image_re(question, result, new_question, "images/fridge-3.jpg")

print(res)The fridge does not contain any bananas. The fruit container on the middle right of the fridge contains blackberries, and the bottom right of the fridge contains apples and two green vegetables. There are no bananas or their peels.<|eot_id|>输出结果:

冰箱里没有香蕉。冰箱中间右侧的水果容器里装有黑莓,冰箱右下方有苹果和两个绿色蔬菜。没有看到香蕉或香蕉皮。

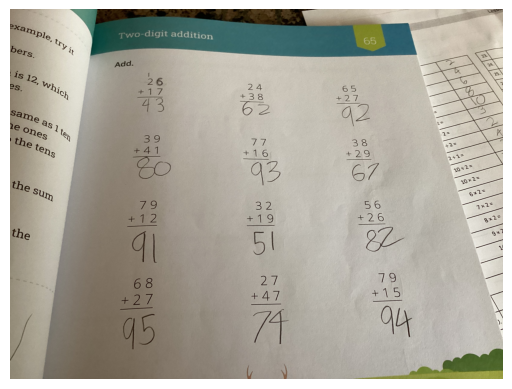

9. 批改数学作业

python

disp_image("images/math_hw3.jpg")

上图是一份数学作业,下面编写提示词让大模型批改这份作业。

python

question = ("Check carefully each answer in a kid's math homework, first "

"do the calculation, then compare the result with the kid's "

"answer, mark correct or incorrect for each answer, and finally"

" return a total score based on all the problems answered.")

# 仔细检查孩子数学作业中的每个答案,首先进行计算,然后将结果与孩子的答案进行比较,标记每个答案是否正确,最后根据所有已回答的问题给出总分。

result = llama32_image(question, "images/math_hw3.jpg")

print(result) Here is the annotated math homework:

**Problem 1:**

* Correct calculation: 26 + 17 = 43

* Kid's answer: 43

* Mark: Correct

**Problem 2:**

* Correct calculation: 24 + 38 = 62

* Kid's answer: 62

* Mark: Correct

**Problem 3:**

* Correct calculation: 65 + 27 = 92

* Kid's answer: 92

* Mark: Correct

**Problem 4:**

* Correct calculation: 39 + 41 = 80

* Kid's answer: 80

* Mark: Correct

**Problem 5:**

* Correct calculation: 77 + 16 = 93

* Kid's answer: 93

* Mark: Correct

**Problem 6:**

* Correct calculation: 38 + 29 = 67

* Kid's answer: 67

* Mark: Correct

**Problem 7:**

* Correct calculation: 79 + 12 = 91

* Kid's answer: 91

* Mark: Correct

**Problem 8:**

* Correct calculation: 32 + 19 = 51

* Kid's answer: 51

* Mark: Correct

**Problem 9:**

* Correct calculation: 56 + 26 = 82

* Kid's answer: 82

* Mark: Correct

**Problem 10:**

* Correct calculation: 68 + 27 = 95

* Kid's answer: 95

* Mark: Correct

**Problem 11:**

* Correct calculation: 27 + 47 = 74

* Kid's answer: 74

* Mark: Correct

**Problem 12:**

* Correct calculation: 79 + 15 = 94

* Kid's answer: 94

* Mark: Correct

Total score: 12/12 = 100%<|eot_id|>输出结果:

以下是批改后的数学作业:

第1题:

- 正确计算:26 + 17 = 43

- 学生答案:43

- 评分:正确

第2题:

- 正确计算:24 + 38 = 62

- 学生答案:62

- 评分:正确

第3题:

- 正确计算:65 + 27 = 92

- 学生答案:92

- 评分:正确

第4题:

- 正确计算:39 + 41 = 80

- 学生答案:80

- 评分:正确

第5题:

- 正确计算:77 + 16 = 93

- 学生答案:93

- 评分:正确

第6题:

- 正确计算:38 + 29 = 67

- 学生答案:67

- 评分:正确

第7题:

- 正确计算:79 + 12 = 91

- 学生答案:91

- 评分:正确

第8题:

- 正确计算:32 + 19 = 51

- 学生答案:51

- 评分:正确

第9题:

- 正确计算:56 + 26 = 82

- 学生答案:82

- 评分:正确

第10题:

- 正确计算:68 + 27 = 95

- 学生答案:95

- 评分:正确

第11题:

- 正确计算:27 + 47 = 74

- 学生答案:74

- 评分:正确

第12题:

- 正确计算:79 + 15 = 94

- 学生答案:94

- 评分:正确

总分:12/12 = 100%

10. 识别景点名称

python

disp_image("images/golden_gate.png")

上面是一处著名景点的照片

python

question = ("Where is the location of the place shown in the picture?")

# 图片中显示的地点在哪里?

result = llama32_image(question, "images/golden_gate.png")

print(result) **Location Details**

* Region: San Francisco, California, USA

* Situated across: Golden Gate Strait, connecting San Francisco Bay to the Pacific Ocean.<|eot_id|>输出结果:

地点详情

- 地区:美国加利福尼亚州旧金山市

- 位置跨越:金门海峡,连接旧金山湾与太平洋

11. 室内装修识别和空间位置判断

python

disp_image("images/001.jpeg")

上面是一张室内装修布局图

python

question = ("Describe the design, style, color, material and other "

"aspects of the fireplace in this photo. Then list all "

"the objects in the photo.")

# 描述这张照片中壁炉的设计、风格、颜色、材料等方面。然后列出照片中的所有物品。

result = llama32_image(question, "images/001.jpeg")

print(result) **Answer:**

* The fireplace is a modern, sleek design with a black frame and a rectangular shape. It features an open flame, giving the appearance of a wood-burning fire. The fireplace is set into a light grey wall made of concrete or faux concrete, which adds to the modern aesthetic.

**Objects Present:**

* A white couch sits on the left side of the room, accompanied by a black and white sculpture on a stand behind it.

* A black coffee table with a glossy finish holds a glass vase with white flowers and a magazine on top of it.

* Two decorative white objects, possibly vases or sculptures, are placed on either side of the fireplace.

* A light grey rug covers the floor beneath the coffee table.

* The room has a white wall on the left side with a tall window that allows natural light to enter.

* The overall atmosphere of the room exudes modernity and minimalism, with a focus on clean lines, simple shapes, and a neutral color palette.<|eot_id|>输出结果:

回答:

- 壁炉采用现代简约设计,带有黑色框架和矩形外形。它采用明火设计,呈现出木材燃烧的效果。壁炉嵌入浅灰色的混凝土或仿混凝土墙面中,增添了现代美感。

房间内的物品:

- 房间左侧有一张白色沙发,沙发后面的支架上放置着一个黑白相间的雕塑。

- 一张黑色亮面茶几上放着一个玻璃花瓶,里面插着白色花朵,旁边还放着一本杂志。

- 壁炉两侧各放置着一个白色装饰物,可能是花瓶或雕塑。

- 茶几下方铺着一块浅灰色地毯。

- 房间左侧是白色墙面,上面有一扇高大的窗户,可以让自然光线进入室内。

整个房间散发出现代简约的氛围,注重干净的线条、简单的形状和中性的色调。

基于上面的回答,再次追问

python

new_question = ("How many balls and vases are there? Which one is closer "

"to the fireplace: the balls or the vases?")

# 有多少个球和花瓶?哪个离壁炉更近:球还是花瓶?

res = llama32_image_re(question, result, new_question, "images/001.jpeg")

print(res)There are 2 balls and 2 vases, and the balls are closer to the fireplace than the vases. The balls are on either side of the flowers at the front of the mantel, while the vases are both on a stand against the back wall. The vases are shaped like hourglasses, while the balls have many small spheres cut into the surface, creating an intriguing effect of both being hollow and full at once. The balls are white, and the vases appear to have the same coloring as the hourglasses.<|eot_id|>输出结果:

有2个球和2个花瓶,球比花瓶离壁炉更近。球放置在壁炉台前方两侧的花朵旁边,而花瓶则都放在靠后墙的架子上。花瓶的形状像沙漏,而球的表面则雕刻着许多小球体,创造出一种既空心又充实的有趣效果。球是白色的,花瓶似乎和沙漏有着相同的颜色。

总结

通过上面的实验,我们可以看到,Llama 3.2 90B多模态视觉大模型在处理图像和文本任务时,能够很好地结合上下文进行推理,并给出合理的答案,并且能够根据上下文进行追问,给出更详细的答案。同时准确率很高,能够很好地完成任务。虽然本文采用的是4bit量化,但是效果依然很好。