Docker installation:

Configure the yum repository:

bash

sudo yum install -y yum-utils

sudo yum-config-manager \
--add-repo \
http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Install Docker:

bash

sudo yum install -y docker-ce docker-ce-cli containerd.io

# Use the following pinned versions when installing k8s

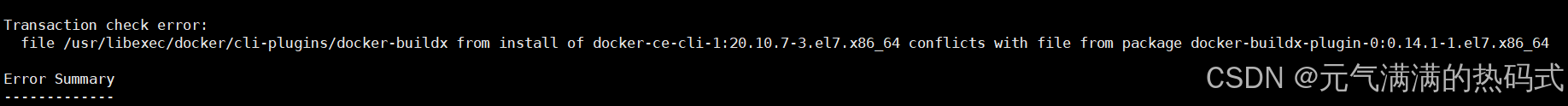

yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

The installation failed with an error:

Fix:

bash

# Run this first

[root@k8s-1 ~]# yum remove docker-buildx-plugin -y

[root@k8s-1 ~]#

[root@k8s-1 ~]# yum install -y docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6

Start Docker:

bash

[root@k8s-2 ~]# systemctl enable docker --now

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

Configure registry mirrors in China to speed up image pulls:
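Here is a minimal sketch that writes the daemon.json file shown below instead of editing it by hand. It uses the same three registry mirrors. It also adds `"exec-opts": ["native.cgroupdriver=systemd"]`, which is not in the original file. That option switches Docker to the systemd cgroup driver so that Docker matches the kubelet flag `--cgroup-driver=systemd` configured later. The function name `write_daemon_json` is hypothetical.

```bash
# Hypothetical helper: write Docker's daemon config.
# Assumption (not in the original file): exec-opts moves Docker to the systemd
# cgroup driver so it matches kubelet's --cgroup-driver=systemd.
write_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": [
    "https://hub-mirror.c.163.com",
    "https://mirror.baidubce.com",
    "https://ccr.ccs.tencentyun.com"
  ]
}
EOF
}
```

Run it as root, then restart Docker so that it rereads the file: `write_daemon_json && systemctl restart docker`. Afterwards, `docker info | grep -i 'cgroup driver'` should report systemd.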

bash

[root@k8s-1 ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": [

"https://hub-mirror.c.163.com",

"https://mirror.baidubce.com",

"https://ccr.ccs.tencentyun.com"

]

}

# Restart Docker so that it rereads daemon.json:

systemctl restart docker

Test:

bash

[root@k8s-2 ~]# docker pull nginx

Using default tag: latest

latest: Pulling from library/nginx

c353fd29d8c5: Pull complete

98b095d7d2b4: Pull complete

af5f0e3644c1: Pull complete

Digest: sha256:fad8e1cd52e24bce7b72cd7cb674a2efad671647b917055f5bd8a1f7ac9b1af8

Status: Downloaded newer image for nginx:latest

docker.io/library/nginx:latest

[root@k8s-2 ~]# docker images

REPOSITORY   TAG      IMAGE ID       CREATED       SIZE

nginx        <none>   66f8bdd3810c   3 weeks ago   192MB

K8S cluster installation:

Prerequisites

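● If the machines are virtual machines, all three must be able to reach each other. The simplest way to ensure this is to turn off the firewall.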

bash

systemctl stop firewalld

systemctl disable firewalld

Set the hostname

Command:

bash

hostnamectl set-hostname <hostname>

Configure hostname resolution
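The entries in the /etc/hosts listing below are typically added on every node. Here is a small sketch that appends them and skips any hostname that is already present, so rerunning it does not create duplicates. The function name `add_cluster_hosts` is hypothetical, and the IP/name pairs are the ones used in this guide.

```bash
# Hypothetical helper: append the cluster's IP/name pairs to a hosts file,
# skipping names that already appear as a field on some line.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in "192.168.58.231 k8s-master" \
               "192.168.58.232 k8s-node1" \
               "192.168.58.233 k8s-node2"; do
    read -r ip name <<< "$entry"
    # Exit status 0 only if the name matches a whole field (not a substring).
    if ! awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                           END { exit !found }' "$hosts_file"; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}
```

Run `add_cluster_hosts` as root on each node. After that, `getent hosts k8s-node1` should resolve to 192.168.58.232.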

bash

[root@k8s-master ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.58.231 k8s-master

192.168.58.232 k8s-node1

192.168.58.233 k8s-node2

Time synchronization

Kubernetes requires the clocks of all cluster nodes to agree closely, so set up time synchronization on every node:

bash

# chronyd is provided by the chrony package

yum install -y chrony

systemctl restart chronyd

systemctl enable chronyd

Disable SELinux
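The sed command below replaces the first "enforcing" on every line of /etc/selinux/config, including comment lines. Here is a narrower sketch that only rewrites the `SELINUX=` setting. The function name `disable_selinux_config` is hypothetical.

```bash
# Hypothetical helper: set only the SELINUX= line to "disabled".
# SELINUXTYPE= is not matched because "^SELINUX=" requires "=" right after the name.
disable_selinux_config() {
  local config="${1:-/etc/selinux/config}"
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$config"
}
```

Run it as `disable_selinux_config && setenforce 0`. getenforce then reports Permissive until the next reboot, and Disabled afterwards.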

bash

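# Check whether SELinux is enabled:

getenforce

# Disable SELinux permanently (takes effect after a reboot):

sed -i 's/enforcing/disabled/' /etc/selinux/config

# Switch SELinux to permissive mode for the current boot only (reverts after a reboot):

setenforce 0

Disable the swap partition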
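The sed command below prefixes every line that mentions swap with `#`. Running it a second time turns `#` into `##`, and it also touches any unrelated line that happens to contain the word. Here is a sketch that only comments out uncommented entries whose filesystem type (the third field) is swap. The name `disable_swap_in_fstab` is hypothetical.

```bash
# Hypothetical helper: comment out active swap entries in fstab; idempotent.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  local tmp
  tmp="$(mktemp)"
  # $3 is the filesystem type; lines whose first field starts with # are left alone.
  awk '$1 !~ /^#/ && $3 == "swap" { $0 = "#" $0 } { print }' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"   # cat keeps the original file's owner and mode
  rm -f "$tmp"
}
```

Run it as `disable_swap_in_fstab && swapoff -a`. After that, `free -h` should show no swap in use.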

bash

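# Disable swap permanently (takes effect after a reboot):

sed -ri 's/.*swap.*/#&/' /etc/fstab

# Turn off swap for the current boot only (reverts after a reboot):

swapoff -a

Pass bridged IPv4 traffic to the iptables chains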
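The replace-then-append commands below can leave the same key in /etc/sysctl.conf twice when it already exists. Here is a sketch of an idempotent alternative. It rewrites the first line for a key and drops any later duplicates, or appends the key if it is missing. `set_sysctl` is a hypothetical helper name.

```bash
# Hypothetical helper: ensure "key = value" appears exactly once in a sysctl file.
set_sysctl() {
  local file="$1" key="$2" value="$3"
  local tmp
  tmp="$(mktemp)"
  awk -v k="$key" -v v="$value" '
    {
      split($0, parts, "=")
      name = parts[1]
      gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim spaces around the key
      if (name == k) {
        if (!done) print k " = " v        # replace the first occurrence
        done = 1
        next                              # drop later duplicates
      }
      print
    }
    END { if (!done) print k " = " v }    # append if the key was missing
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, run `for k in net.ipv4.ip_forward net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do set_sysctl /etc/sysctl.conf "$k" 1; done`, then `modprobe br_netfilter` and `sysctl -p`.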

bash

# Edit /etc/sysctl.conf:

# If a setting already exists, update it in place

sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.disable_ipv6.*#net.ipv6.conf.all.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.default.disable_ipv6.*#net.ipv6.conf.default.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.lo.disable_ipv6.*#net.ipv6.conf.lo.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.forwarding.*#net.ipv6.conf.all.forwarding=1#g" /etc/sysctl.conf

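# A setting may not exist yet, so append it as well (if it already exists, this adds a duplicate line; sysctl applies lines in order, so the last value wins)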

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.lo.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.forwarding = 1" >> /etc/sysctl.conf

# Load the br_netfilter module (the net.bridge.* keys only exist once it is loaded):

modprobe br_netfilter

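# Apply the settings now. Because they are stored in /etc/sysctl.conf, they are reapplied after a reboot, as long as br_netfilter is also loaded at boot

sysctl -p

Enable ipvs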
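After the ipvs.modules script below has run, you can check that every module it loads is actually present. Here is a sketch that reads /proc/modules (the file that lsmod formats) and prints the name of each missing module. The name `missing_modules` is hypothetical, and the module list mirrors the script.

```bash
# Hypothetical helper: print IPVS-related modules that are not loaded.
# Returns non-zero if any are missing.
missing_modules() {
  local modules_file="${1:-/proc/modules}"
  local mod missing=0
  for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack; do
    # The first field of each /proc/modules line is the module name.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
      echo "$mod"
      missing=1
    fi
  done
  return "$missing"
}
```

Run it as `missing_modules && echo 'all IPVS modules loaded'`.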

bash

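# In Kubernetes, Services can be proxied in two modes: one based on iptables and one based on ipvs. ipvs generally performs better than iptables, but using it requires loading the ipvs kernel modules manually.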

# Install ipset and ipvsadm on all three machines:

yum -y install ipset ipvsadm

# Run the following on all three machines:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

EOF

# Make the script executable, run it, and check that the modules are loaded:

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack

Add the Aliyun Kubernetes YUM repository

bash

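# The upstream Kubernetes repository is hosted outside China and is very slow to reach from there, so switch to the Aliyun mirror (run the following on all three machines):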

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Install kubelet, kubeadm, and kubectl

bash

yum install -y kubelet-1.21.10 kubeadm-1.21.10 kubectl-1.21.10

bash

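# It is recommended to edit /etc/sysconfig/kubelet so that kubelet uses the same cgroup driver as Docker (systemd here; Docker defaults to cgroupfs, so it must also be switched, e.g. with "exec-opts": ["native.cgroupdriver=systemd"] in /etc/docker/daemon.json):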

vim /etc/sysconfig/kubelet

# Set:

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

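KUBE_PROXY_MODE="ipvs"

# Note: kubelet does not read KUBE_PROXY_MODE; kube-proxy takes its mode from the kube-proxy ConfigMap in the kube-system namespace (mode: "ipvs")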

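# Enabling kubelet at boot is enough for now. It has no configuration file yet, so it will start properly once the cluster is initialized: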

systemctl enable kubelet

List the images required to install Kubernetes

bash

# List the images required to install Kubernetes:

kubeadm config images list

Download the images required to install Kubernetes
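`kubeadm config images list` prints k8s.gcr.io names, while the docker pull commands below fetch the same images from the Aliyun mirror. Here is a sketch that derives the mirror names from that list. It assumes the mirror keeps only the last path component, so k8s.gcr.io/coredns/coredns:v1.8.0 becomes .../google_containers/coredns:v1.8.0, which matches the pulls below. `mirror_image_name`, `pull_from_mirror`, and the `DOCKER` override (for dry runs) are hypothetical.

```bash
# Mirror registry used throughout this guide.
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"

# Hypothetical helper: k8s.gcr.io/<path>/<name>:<tag> -> $MIRROR/<name>:<tag>
mirror_image_name() {
  local image="$1"
  echo "${MIRROR}/${image##*/}"   # strip everything up to the last "/"
}

# Hypothetical helper: read image names on stdin and pull each from the mirror.
# Set DOCKER=echo to print the commands instead of running them.
pull_from_mirror() {
  local img
  while read -r img; do
    [ -n "$img" ] || continue
    "${DOCKER:-docker}" pull "$(mirror_image_name "$img")"
  done
}
```

`kubeadm config images list --kubernetes-version v1.21.10 | pull_from_mirror` pulls all seven images. The coredns image still needs the retag step that follows.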

bash

# Reference: GitHub - x-mirrors/gcr.io (syncs gcr.io images to hub.docker.com daily)

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.21.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.21.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.21.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.21.10

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.0

- Retag the coredns image:

bash

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.0 registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns:v1.8.0

- Alternatively, pull all the images with a single command:

bash

kubeadm config images pull --kubernetes-version=v1.21.10 \
--image-repository registry.cn-hangzhou.aliyuncs.com/google_containers

Deploy the Kubernetes master node
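The flags passed to kubeadm init below can also be kept in a configuration file. Here is a sketch of such a file for kubeadm 1.21, which reads the kubeadm.k8s.io/v1beta2 API. Beyond the original flags, it sets kube-proxy's mode to ipvs through KubeProxyConfiguration (where kube-proxy reads that setting) and sets the kubelet cgroup driver to systemd. Those two extras and the function name `write_kubeadm_config` are assumptions, not part of the original steps.

```bash
# Hypothetical helper: write a kubeadm config equivalent to the init flags,
# plus (assumed extras) ipvs mode for kube-proxy and the systemd cgroup driver.
write_kubeadm_config() {
  local path="${1:-kubeadm-config.yaml}"
  cat > "$path" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.58.231
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.10
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.96.0.0/16
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Run it as `write_kubeadm_config && kubeadm init --config kubeadm-config.yaml`. kubeadm refuses to mix --config with most of the flags shown below, so use one form or the other.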

● Deploy the Kubernetes master node on 192.168.58.231:

bash

# The default registry k8s.gcr.io is not reachable from mainland China, so point kubeadm at the Aliyun mirror instead
kubeadm init \
--apiserver-advertise-address=192.168.58.231 \
--image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \
--kubernetes-version=v1.21.10 \
--service-cidr=10.96.0.0/16 \
--pod-network-cidr=10.244.0.0/16

Log output of a successful initialization:

bash

[root@k8s-master ~]# kubeadm init \

> --apiserver-advertise-address=192.168.58.231 \

> --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers \

> --kubernetes-version=v1.21.10 \

> --service-cidr=10.96.0.0/16 \

> --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.21.10

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.58.231]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.58.231 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.58.231 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 14.504848 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.21" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: ys25pt.qjw6je8sbck185zc

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.58.231:6443 --token ys25pt.qjw6je8sbck185zc \

--discovery-token-ca-cert-hash sha256:82bc8471036711f1c3d81b733082935177e773396e8bb9a5d15f2a0bf95b137e

Following the instructions in the log, run these commands on the master:

bash

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

The default token is valid for 24 hours. Once it expires it can no longer be used, and you can create a new one with the following commands:
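If the token is still valid but the hash from the init log has been lost, the kubeadm join documentation shows how to recompute the --discovery-token-ca-cert-hash value from the cluster CA with openssl. Here is a sketch that wraps that pipeline. It assumes the default RSA CA at /etc/kubernetes/pki/ca.crt, and `ca_cert_hash` is a hypothetical name.

```bash
# Hypothetical helper: compute the discovery hash of the cluster CA's public key.
# Assumes an RSA CA key, which kubeadm generates by default.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  local digest
  digest="$(openssl x509 -pubkey -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"
  echo "sha256:${digest}"
}
```

Run `ca_cert_hash` on the master and pass its output to kubeadm join on the node as --discovery-token-ca-cert-hash. `kubeadm token list` shows the tokens that still exist.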

bash

kubeadm token create --print-join-command

# Create a token that never expires

kubeadm token create --ttl 0 --print-join-command

Deploy the Kubernetes worker nodes

- Following the instructions in the log, run this command on node1 and node2:

bash

kubeadm join 192.168.58.231:6443 --token ys25pt.qjw6je8sbck185zc \
--discovery-token-ca-cert-hash sha256:82bc8471036711f1c3d81b733082935177e773396e8bb9a5d15f2a0bf95b137e

Deploy the network plugin

Download the Calico YAML manifest

bash

wget https://docs.projectcalico.org/manifests/calico.yaml

Edit calico.yaml
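The hand edit described below, which adds IP_AUTODETECTION_METHOD, can also be scripted. Here is a sketch that inserts the two lines right after the CLUSTER_TYPE entry of the calico-node container, reusing that entry's indentation. It does nothing if the variable is already present. It assumes the manifest contains a `- name: CLUSTER_TYPE` line followed by its `value:` line, as recent upstream calico.yaml files do. `add_ip_autodetection` is a hypothetical name.

```bash
# Hypothetical helper: add IP_AUTODETECTION_METHOD=interface=<iface> after the
# CLUSTER_TYPE env entry in a Calico manifest; safe to rerun.
add_ip_autodetection() {
  local file="$1" iface="$2"
  local tmp
  grep -q -- '- name: IP_AUTODETECTION_METHOD' "$file" && return 0   # already present
  tmp="$(mktemp)"
  awk -v iface="$iface" '
    { print }
    pending && /^[ \t]*value:/ {
      indent = substr($0, 1, index($0, "value:") - 1)   # indentation of "value:"
      print substr(indent, 3) "- name: IP_AUTODETECTION_METHOD"
      print indent "value: \"interface=" iface "\""
      pending = 0
    }
    /- name: CLUSTER_TYPE/ { pending = 1 }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

After the sed commands below, run `add_ip_autodetection calico.yaml ens33`, replacing ens33 with your own NIC name.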

bash

# Change the pod CIDR to the one used when initializing the cluster

# Replace the docker.io image registry with swr.cn-north-4.myhuaweicloud.com/ddn-k8s/docker.io

sed -i s/192.168.0.0/10.244.0.0/g calico.yaml

sed -i 's/docker.io/swr.cn-north-4.myhuaweicloud.com\/ddn-k8s\/docker.io/g' calico.yaml

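# Add these two lines to the env list of the calico-node container in calico.yaml (around line 4568 in the version used here), replacing ens33 with your own NIC name:

            - name: IP_AUTODETECTION_METHOD
              value: "interface=ens33"

Start Calico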

bash

kubectl apply -f calico.yaml

- Wait 3 to 5 minutes, then check the status of the Calico pods:

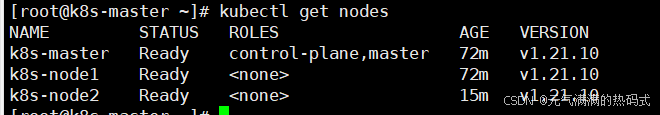
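Instead of rerunning kubectl by hand, you can script the wait. Here is a sketch that reads `kubectl get pods --no-headers` output for a single namespace (so there is no NAMESPACE column). It succeeds only when every pod is Running with all of its containers ready. `all_pods_ready` is a hypothetical name, and completed pods would count as not ready.

```bash
# Hypothetical helper: exit 0 only if every input line shows READY n/n and
# STATUS Running. Expects columns NAME READY STATUS RESTARTS AGE.
all_pods_ready() {
  awk '
    { split($2, r, "/"); total++
      if ($3 != "Running" || r[1] != r[2]) bad++ }
    END { exit (total == 0 || bad > 0) }
  '
}
```

For example: `until kubectl get pods -n kube-system --no-headers | grep calico | all_pods_ready; do sleep 10; done`.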

Check node status

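Node status is shown by `kubectl get nodes`, whose second column is STATUS. Nodes report NotReady until the network plugin is running. Here is a sketch that succeeds only when every node is exactly Ready. A cordoned node shows Ready,SchedulingDisabled and therefore counts as not ready. `all_nodes_ready` is a hypothetical name.

```bash
# Hypothetical helper: exit 0 only if every "kubectl get nodes --no-headers"
# line has STATUS (column 2) equal to "Ready".
all_nodes_ready() {
  awk '{ total++; if ($2 != "Ready") bad++ } END { exit (total == 0 || bad > 0) }'
}
```

For example: `until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done; kubectl get nodes`.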