文章目录

- [aws(学习笔记第四十三课) s3_sns_sqs_lambda_chain](#aws(学习笔记第四十三课) s3_sns_sqs_lambda_chain)

- 学习内容:

-

- [1. 整体架构](#1. 整体架构)

-

- [1.1 代码链接](#1.1 代码链接)

- [1.2 整体架构](#1.2 整体架构)

- [1.3 测试代码需要的修改](#1.3 测试代码需要的修改)

-

- [1.3.1 `unit test`代码中引入`stack`的修改](#1.3.1

unit test代码中引入stack的修改) - [1.3.2 `test_outputs_created`代码中把错误的去掉](#1.3.2

test_outputs_created代码中把错误的去掉)

- [1.3.1 `unit test`代码中引入`stack`的修改](#1.3.1

- [2. 代码解析](#2. 代码解析)

-

- [2.1 生成`dead_letter_queue`死信队列和`upload_queue`文件上传队列](#2.1 生成

dead_letter_queue死信队列和upload_queue文件上传队列) - [2.3 创建`sqs topic`并对其进行订阅`subscribe`](#2.3 创建

sqs topic并对其进行订阅subscribe) - [2.4 创建`S3 bucket`](#2.4 创建

S3 bucket) - [2.5 为`S3 bucket`绑定`SNS Topic`](#2.5 为

S3 bucket绑定SNS Topic) - [2.6 创建`lambda`函数](#2.6 创建

lambda函数) - [2.7 对`upload_queue`绑定`lambda`函数](#2.7 对

upload_queue绑定lambda函数) - [2.8 输出`cdk`的`output`](#2.8 输出

cdk的output) -

- [2.8.1 `UploadFileToS3Example`](#2.8.1

UploadFileToS3Example) - [2.8.2 `UploadSqsQueueUrl`](#2.8.2

UploadSqsQueueUrl) - [2.8.3 `LambdaFunctionName`](#2.8.3

LambdaFunctionName) - [2.8.4 `LambdaFunctionLogGroupName`](#2.8.4

LambdaFunctionLogGroupName)

- [2.8.1 `UploadFileToS3Example`](#2.8.1

- [2.1 生成`dead_letter_queue`死信队列和`upload_queue`文件上传队列](#2.1 生成

- [3 执行`cdk`](#3 执行

cdk) -

- [3.1 执行`cdk`](#3.1 执行

cdk) - [3.2 执行`cdk`的结果](#3.2 执行

cdk的结果) - [3.3 `output`检查](#3.3

output检查) - [3.4 向`S3 bucket`上传`csv`文件](#3.4 向

S3 bucket上传csv文件)

- [3.1 执行`cdk`](#3.1 执行

- [4 使用`unit test`进行`aws cdk`的测试](#4 使用

unit test进行aws cdk的测试) -

- [4.1 `unit test`代码](#4.1

unit test代码) -

- [4.1.1 创建`mock stack`](#4.1.1 创建

mock stack) - [4.1.2 创建`test`](#4.1.2 创建

test)

- [4.1.1 创建`mock stack`](#4.1.1 创建

- [4.2 进行`unit test`](#4.2 进行

unit test)

- [4.1 `unit test`代码](#4.1

aws(学习笔记第四十三课) s3_sns_sqs_lambda_chain

- 使用

lambda监视S3 upload event,并使用unit test进行aws cdk的测试

学习内容:

- 使用

dead_letter_queue死信队列 - 使用

sqs的队列 - 使用

unit test进行aws cdk的测试

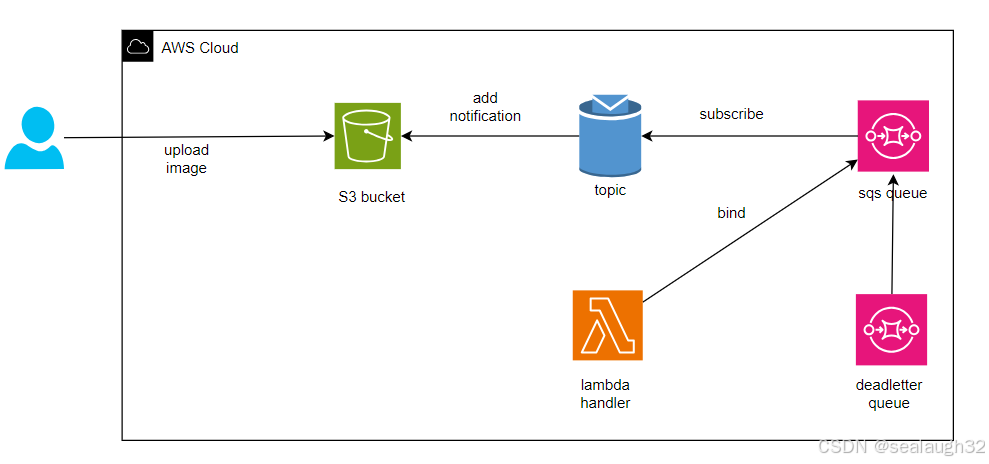

1. 整体架构

1.1 代码链接

1.2 整体架构

1.3 测试代码需要的修改

1.3.1 unit test代码中引入stack的修改

python

import aws_cdk as cdk

import pytest

from s3_sns_sqs_lambda_chain.s3_sns_sqs_lambda_chain_stack import S3SnsSqsLambdaChainStack s3_sns_sqs_lambda_chain这里如果不加上,那么会报错。

1.3.2 test_outputs_created代码中把错误的去掉

python

def test_outputs_created(template):

"""

Test for CloudFormation Outputs

- Upload File To S3 Instructions

- Queue Url

- Lambda Function Name

- Lambda Function Log Group Name

"""

# template.has

template.has_output("UploadFileToS3Example", {})

template.has_output("UploadSqsQueueUrl", {})

template.has_output("LambdaFunctionName", {})

template.has_output("LambdaFunctionLogGroupName", {})template.has这里要去掉,如果不去掉就会执行错误。

2. 代码解析

2.1 生成dead_letter_queue死信队列和upload_queue文件上传队列

python

# Note: A dead-letter queue is optional but it helps capture any failed messages

dlq = sqs.Queue(

self,

id="dead_letter_queue_id",

retention_period=Duration.days(7)

)

dead_letter_queue = sqs.DeadLetterQueue(

max_receive_count=1,

queue=dlq

)

upload_queue = sqs.Queue(

self,

id="sample_queue_id",

dead_letter_queue=dead_letter_queue,

visibility_timeout = Duration.seconds(LAMBDA_TIMEOUT * 6)

)

2.3 创建sqs topic并对其进行订阅subscribe

python

sqs_subscription = sns_subs.SqsSubscription(

upload_queue,

raw_message_delivery=True

)

upload_event_topic = sns.Topic(

self,

id="sample_sns_topic_id"

)

# This binds the SNS Topic to the SQS Queue

upload_event_topic.add_subscription(sqs_subscription)

2.4 创建S3 bucket

python

# Note: Lifecycle Rules are optional but are included here to keep costs

# low by cleaning up old files or moving them to lower cost storage options

s3_bucket = s3.Bucket(

self,

id="sample_bucket_id",

block_public_access=s3.BlockPublicAccess.BLOCK_ALL,

versioned=True,

lifecycle_rules=[

s3.LifecycleRule(

enabled=True,

expiration=Duration.days(365),

transitions=[

s3.Transition(

storage_class=s3.StorageClass.INFREQUENT_ACCESS,

transition_after=Duration.days(30)

),

s3.Transition(

storage_class=s3.StorageClass.GLACIER,

transition_after=Duration.days(90)

),

]

)

]

)这里考虑成本,所以30days之后s3.StorageClass.INFREQUENT_ACCESS,所以90days之后s3.StorageClass.GLACIER。

2.5 为S3 bucket绑定SNS Topic

python

# Note: If you don't specify a filter all uploads will trigger an event.

# Also, modifying the event type will handle other object operations

# This binds the S3 bucket to the SNS Topic

s3_bucket.add_event_notification(

s3.EventType.OBJECT_CREATED_PUT,

s3n.SnsDestination(upload_event_topic),

s3.NotificationKeyFilter(prefix="uploads", suffix=".csv")

)

2.6 创建lambda函数

python

function = _lambda.Function(self, "lambda_function",

runtime=_lambda.Runtime.PYTHON_3_9,

handler="lambda_function.handler",

code=_lambda.Code.from_asset(path=lambda_dir),

timeout = Duration.seconds(LAMBDA_TIMEOUT)

)lambda函数的实现:

python

def handler(event, context):

# output event to logs

print(event)

return {

'statusCode': 200,

'body': event

}

2.7 对upload_queue绑定lambda函数

python

# This binds the lambda to the SQS Queue

invoke_event_source = lambda_events.SqsEventSource(upload_queue)

function.add_event_source(invoke_event_source)

2.8 输出cdk的output

2.8.1 UploadFileToS3Example

这里创建了一个CfnOutput,用于输出上传本地文件到S3 bucket上的示例command。

python

CfnOutput(

self,

"UploadFileToS3Example",

value="aws s3 cp <local-path-to-file> s3://{}/".format(s3_bucket.bucket_name),

description="Upload a file to S3 (using AWS CLI) to trigger the SQS chain",

)2.8.2 UploadSqsQueueUrl

这里输出一个Output,用于表示upload_queue的queue_url。

python

CfnOutput(

self,

"UploadSqsQueueUrl",

value=upload_queue.queue_url,

description="Link to the SQS Queue triggered on S3 uploads",

)2.8.3 LambdaFunctionName

这里输出一个Output,用于表示lambda的函数名。

python

CfnOutput(

self,

"LambdaFunctionName",

value=function.function_name,

)2.8.4 LambdaFunctionLogGroupName

这里输出一个Output,用于表示lambda函数的log输出的log group。

python

CfnOutput(

self,

"LambdaFunctionLogGroupName",

value=function.log_group.log_group_name,

)3 执行cdk

3.1 执行cdk

shell

python -m venv .venv

source ./.venv/Scripts/activate

pip install -r requirement.txt

cdk --require-approval never deploy3.2 执行cdk的结果

3.3 output检查

3.4 向S3 bucket上传csv文件

shell

aws s3 cp ./uploads_bbb.csv s3://s3-sns-sqs-lambda-stack-samplebucketideae240bf-l2je2slhprg2/

这里,每次上传csv文件都会触发lambda函数调用。

4 使用unit test进行aws cdk的测试

4.1 unit test代码

4.1.1 创建mock stack

python

@pytest.fixture

def template():

"""

Generate a mock stack that embeds the orchestrator construct for testing

"""

script_dir = pathlib.Path(__file__).parent

lambda_dir = str(script_dir.joinpath("..", "..", "lambda"))

app = cdk.App()

stack = S3SnsSqsLambdaChainStack(

app,

"s3-sns-sqs-lambda-stack",

lambda_dir=lambda_dir

)

return cdk.assertions.Template.from_stack(stack)4.1.2 创建test

测试topic关联

python

def test_sns_topic_created(template):

"""

Test for SNS Topic and Subscription: S3 Uploadvent Notification

"""

template.resource_count_is("AWS::SNS::Subscription", 1)

template.resource_count_is("AWS::SNS::Topic", 1)

template.resource_count_is("AWS::SNS::TopicPolicy", 1)测试queue关联

python

def test_sqs_queue_created(template):

"""

Test for SQS Queue:

- Queue to process uploads

- Dead-letter Queue

"""

template.resource_count_is("AWS::SQS::Queue", 2)

template.resource_count_is("AWS::SQS::QueuePolicy", 1)测试lambda关联

python

def test_lambdas_created(template):

"""

Test for Lambdas created:

- Sample Lambda

- Bucket Notification Handler (automatically provisioned)

- Log Retention (automatically provisioned)

"""

template.resource_count_is("AWS::Lambda::Function", 3)

template.resource_count_is("AWS::Lambda::EventSourceMapping", 1)测试output关联

python

def test_outputs_created(template):

"""

Test for CloudFormation Outputs

- Upload File To S3 Instructions

- Queue Url

- Lambda Function Name

- Lambda Function Log Group Name

"""

template.has_output("UploadFileToS3Example", {})

template.has_output("UploadSqsQueueUrl", {})

template.has_output("LambdaFunctionName", {})

template.has_output("LambdaFunctionLogGroupName", {})4.2 进行unit test

- 进行依赖包的安装

shell

pip install -r requirements-dev.txt

- 进行

unit test

shell

pytest