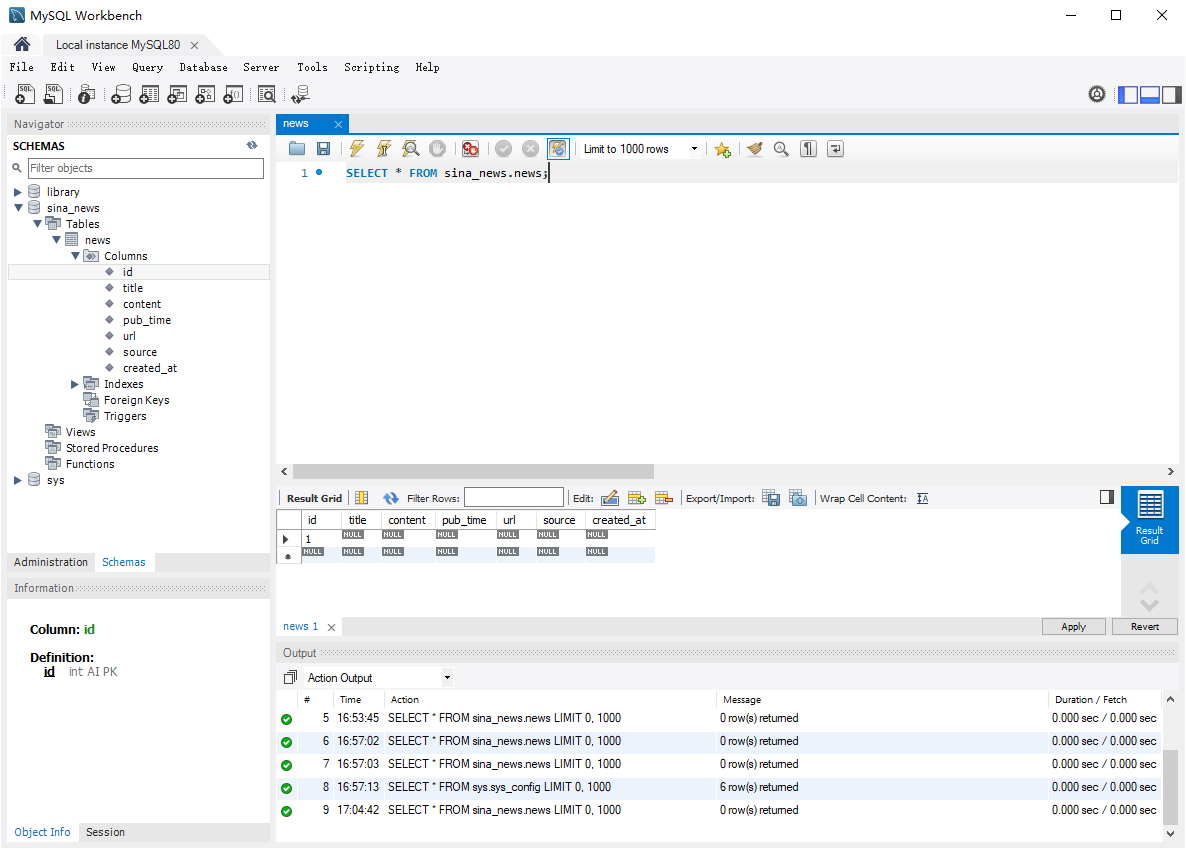

Sina News

Spider file

```python
import scrapy

from sina_news_crawler.items import SinaNewsCrawlerItem


class SinaNewsSpider(scrapy.Spider):
    name = "sina_news"
    allowed_domains = ["news.sina.com.cn"]
    start_urls = ["https://news.sina.com.cn"]

    def parse(self, response):
        # Extract candidate news links from the listing page
        news_links = response.xpath(
            '//a[contains(@href, "/news/") or contains(@href, "/article/")]/@href'
        ).getall()
        for link in news_links:
            # Resolve relative links into absolute URLs
            full_url = response.urljoin(link)
            # Only follow news detail pages
            if ".shtml" in full_url or ".html" in full_url:
                yield scrapy.Request(url=full_url, callback=self.parse_news_detail)

    def parse_news_detail(self, response):
        # Parse a news detail page
        item = SinaNewsCrawlerItem()
        # Title
        item['title'] = response.xpath(
            '//h1[@class="main-title"]/text()'
        ).get(default='').strip()
        # Publication time
        item['pub_time'] = response.xpath(
            '//span[@class="date"]/text() | //div[@class="date-source"]/span/text()'
        ).get(default='').strip()
        # News source
        item['source'] = response.xpath(
            '//span[@class="source"]/text() | //div[@class="date-source"]/a/text()'
        ).get(default='').strip()
        # Body text: join the non-empty paragraphs with newlines
        content_paragraphs = response.xpath(
            '//div[@class="article"]/p/text() | //div[@id="artibody"]/p/text()'
        ).getall()
        item['content'] = '\n'.join(p.strip() for p in content_paragraphs if p.strip())
        # Record the article URL
        item['url'] = response.url
        yield item
```

items.py file

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class SinaNewsCrawlerItem(scrapy.Item):
    # News title
    title = scrapy.Field()
    # News body text
    content = scrapy.Field()
    # Publication time
    pub_time = scrapy.Field()
    # News URL
    url = scrapy.Field()
    # News source
    source = scrapy.Field()
```

middlewares file

```python
# Define here the models for your spider middleware
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

# useful for handling different item types with a single interface
from itemadapter import ItemAdapter


class SinaNewsCrawlerSpiderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the spider middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_spider_input(self, response, spider):
        # Called for each response that goes through the spider
        # middleware and into the spider.

        # Should return None or raise an exception.
        return None

    def process_spider_output(self, response, result, spider):
        # Called with the results returned from the Spider, after
        # it has processed the response.

        # Must return an iterable of Request, or item objects.
        for i in result:
            yield i

    def process_spider_exception(self, response, exception, spider):
        # Called when a spider or process_spider_input() method
        # (from other spider middleware) raises an exception.

        # Should return either None or an iterable of Request or item objects.
        pass

    async def process_start(self, start):
        # Called with an async iterator over the spider start() method or the
        # matching method of an earlier spider middleware.
        async for item_or_request in start:
            yield item_or_request

    def spider_opened(self, spider):
        spider.logger.info("Spider opened: %s" % spider.name)


class SinaNewsCrawlerDownloaderMiddleware:
    # Not all methods need to be defined. If a method is not defined,
    # scrapy acts as if the downloader middleware does not modify the
    # passed objects.

    @classmethod
    def from_crawler(cls, crawler):
        # This method is used by Scrapy to create your spiders.
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def process_request(self, request, spider):
        # Called for each request that goes through the downloader
        # middleware.

        # Must either:
        # - return None: continue processing this request
        # - or return a Response object
        # - or return a Request object
        # - or raise IgnoreRequest: process_exception() methods of
        #   installed downloader middleware will be called
        return None

    def process_response(self, request, response, spider):
        # Called with the response returned from the downloader.

        # Must either:
        # - return a Response object
        # - return a Request object
        # - or raise IgnoreRequest
        return response

    def process_exception(self, request, exception, spider):
        # Called when a download handler or a process_request()
        # (from other downloader middleware) raises an exception.

        # Must either:
        # - return None: continue processing this exception
        # - return a Response object: stops process_exception() chain
        # - return a Request object: stops process_exception() chain
        pass

    def spider_opened(self, spider):
        spider.logger.info("Spider opened: %s" % spider.name)
```

pipelines file
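The pipeline listed below writes `pub_time` into a `DATETIME` column, while the spider passes along whatever text it scraped from the page. A minimal sketch of a normalization step follows. The helper name `parse_pub_time` and the three candidate formats are assumptions for illustration, not part of the original project; the real pages should be inspected before relying on them.

```python
from datetime import datetime
from typing import Optional

# Assumed candidate formats (hypothetical); adjust after inspecting real pages.
_PUB_TIME_FORMATS = (
    "%Y年%m月%d日 %H:%M",
    "%Y-%m-%d %H:%M:%S",
    "%Y-%m-%d %H:%M",
)


def parse_pub_time(raw: Optional[str]) -> Optional[datetime]:
    """Return a datetime for a scraped timestamp, or None if it cannot be parsed."""
    text = (raw or "").strip()
    for fmt in _PUB_TIME_FORMATS:
        try:
            return datetime.strptime(text, fmt)
        except ValueError:
            # Try the next candidate format
            continue
    return None
```

If such a helper were called in `process_item` before the `INSERT`, unparseable or missing timestamps would be stored as `NULL` instead of causing a database error.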

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import pymysql
from itemadapter import ItemAdapter


class SinaNewsCrawlerPipeline:
    def process_item(self, item, spider):
        return item


class MySQLPipeline:
    def __init__(self, host, user, password, database, port):
        self.host = host
        self.user = user
        self.password = password
        self.database = database
        self.port = port
        self.db = None
        self.cursor = None

    @classmethod
    def from_crawler(cls, crawler):
        # Read the connection parameters from the project settings
        return cls(
            host=crawler.settings.get('MYSQL_HOST', 'localhost'),
            user=crawler.settings.get('MYSQL_USER', 'root'),
            password=crawler.settings.get('MYSQL_PASSWORD', ''),
            database=crawler.settings.get('MYSQL_DATABASE', 'sina_news'),
            port=crawler.settings.getint('MYSQL_PORT', 3306)
        )

    def open_spider(self, spider):
        # Connect to the database
        self.db = pymysql.connect(
            host=self.host,
            user=self.user,
            password=self.password,
            database=self.database,
            port=self.port,
            charset='utf8mb4'
        )
        self.cursor = self.db.cursor()
        # Create the news table if it does not exist yet
        self.cursor.execute('''
            CREATE TABLE IF NOT EXISTS news (
                id INT AUTO_INCREMENT PRIMARY KEY,
                title VARCHAR(255) NOT NULL,
                content TEXT,
                pub_time DATETIME,
                url VARCHAR(255) UNIQUE NOT NULL,
                source VARCHAR(100),
                created_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
            ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4
        ''')
        self.db.commit()

    def close_spider(self, spider):
        # Release the cursor and the connection if they were opened
        if self.cursor is not None:
            self.cursor.close()
        if self.db is not None:
            self.db.close()

    def process_item(self, item, spider):
        # Insert the item, or update the existing row with the same URL
        try:
            # Log the received item
            spider.logger.debug(f'Received item: {item}')
            title = item.get('title', '')
            content = item.get('content', '')
            # An empty string is not a valid DATETIME value, so store NULL instead
            pub_time = item.get('pub_time') or None
            url = item.get('url', '')
            source = item.get('source', '')
            spider.logger.debug(f'About to write to the database: title={title}, source={source}')
            self.cursor.execute('''
                INSERT INTO news (title, content, pub_time, url, source)
                VALUES (%s, %s, %s, %s, %s)
                ON DUPLICATE KEY UPDATE
                    title=VALUES(title),
                    content=VALUES(content),
                    pub_time=VALUES(pub_time),
                    source=VALUES(source)
            ''', (
                title,
                content,
                pub_time,
                url,
                source
            ))
            self.db.commit()
            spider.logger.debug(f'Saved to the database: {url}')
        except pymysql.MySQLError as e:
            self.db.rollback()
            spider.logger.error(f'Database error: {e}')
        return item
```

settings file
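The settings listed below combine `DOWNLOAD_DELAY = 3` with AutoThrottle (`AUTOTHROTTLE_START_DELAY = 5`, `AUTOTHROTTLE_MAX_DELAY = 60`, `AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0`). To get a feel for how these values interact, here is a simplified model of the adjustment rules described in the Scrapy AutoThrottle documentation. The function `next_delay` is a hypothetical illustration, not Scrapy's own code, and the real extension may differ in details.

```python
def next_delay(current, latency, status,
               target_concurrency=1.0, min_delay=3.0, max_delay=60.0):
    """Simplified model of the documented AutoThrottle delay rules.

    min_delay stands in for DOWNLOAD_DELAY and max_delay for
    AUTOTHROTTLE_MAX_DELAY (values taken from the settings file).
    """
    # Target delay: latency divided by the desired parallelism
    target = latency / target_concurrency
    # Move halfway from the current delay toward the target
    candidate = (current + target) / 2.0
    # Keep the delay within [DOWNLOAD_DELAY, AUTOTHROTTLE_MAX_DELAY]
    candidate = min(max(candidate, min_delay), max_delay)
    # Non-200 responses are not allowed to decrease the delay
    if status != 200 and candidate < current:
        return current
    return candidate


delay = 5.0  # AUTOTHROTTLE_START_DELAY
for latency in (0.4, 0.6, 0.5):  # made-up latencies of a fast server, in seconds
    delay = next_delay(delay, latency, 200)
print(delay)
```

Under this model, a server that answers in well under three seconds keeps the delay pinned at `DOWNLOAD_DELAY`, so AutoThrottle only raises the delay when responses become slow.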

```python
# Scrapy settings for sina_news_crawler project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = "sina_news_crawler"

SPIDER_MODULES = ["sina_news_crawler.spiders"]
NEWSPIDER_MODULE = "sina_news_crawler.spiders"

ADDONS = {}

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/98.0.4758.102 Safari/537.36"

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Log level
LOG_LEVEL = 'DEBUG'

# MySQL database configuration
MYSQL_USER = 'root'
MYSQL_PASSWORD = '123456'
MYSQL_DATABASE = 'sina_news'
MYSQL_HOST = 'localhost'
MYSQL_PORT = 3306
# Make sure the database exists in MySQL first:
# CREATE DATABASE sina_news CHARACTER SET utf8mb4 COLLATE utf8mb4_unicode_ci;

# Concurrency and throttling settings
#CONCURRENT_REQUESTS = 16
CONCURRENT_REQUESTS_PER_DOMAIN = 1
DOWNLOAD_DELAY = 3  # Increase the delay to reduce the risk of being blocked

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
DEFAULT_REQUEST_HEADERS = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "zh-CN,zh;q=0.9,en;q=0.8",
}

# Enable or disable spider middlewares
#SPIDER_MIDDLEWARES = {
#    "sina_news_crawler.middlewares.SinaNewsCrawlerSpiderMiddleware": 543,
#}

# Enable or disable downloader middlewares
#DOWNLOADER_MIDDLEWARES = {
#    "sina_news_crawler.middlewares.SinaNewsCrawlerDownloaderMiddleware": 543,
#}

# Enable or disable extensions
#EXTENSIONS = {
#    "scrapy.extensions.telnet.TelnetConsole": None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    "sina_news_crawler.pipelines.MySQLPipeline": 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
AUTOTHROTTLE_ENABLED = True
# The initial download delay
AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
HTTPCACHE_ENABLED = True
HTTPCACHE_EXPIRATION_SECS = 3600  # Cache responses for 1 hour
HTTPCACHE_DIR = "httpcache"
HTTPCACHE_IGNORE_HTTP_CODES = []
HTTPCACHE_STORAGE = "scrapy.extensions.httpcache.FilesystemCacheStorage"

# Set settings whose default value is deprecated to a future-proof value
FEED_EXPORT_ENCODING = "utf-8"
```

Database
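The `MySQLPipeline` above relies on the `UNIQUE` constraint on `url` together with `ON DUPLICATE KEY UPDATE`, so that crawling the same article twice overwrites the existing row instead of adding a duplicate. That behavior can be tried without a MySQL server. The sketch below swaps in the standard-library `sqlite3` module, whose equivalent syntax is `ON CONFLICT ... DO UPDATE` (available in SQLite 3.24 and later). The reduced table and the example URL are assumptions for illustration only.

```python
import sqlite3

# In-memory database standing in for the MySQL `news` table (reduced columns)
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE news (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        title TEXT NOT NULL,
        url TEXT UNIQUE NOT NULL
    )
    """
)

# SQLite counterpart of MySQL's INSERT ... ON DUPLICATE KEY UPDATE
UPSERT = """
    INSERT INTO news (title, url) VALUES (?, ?)
    ON CONFLICT(url) DO UPDATE SET title = excluded.title
"""


def save(title, url):
    # The connection context manager commits on success, rolls back on error
    with conn:
        conn.execute(UPSERT, (title, url))


# Hypothetical URL: saving the same article twice keeps a single row
save("First headline", "https://news.sina.com.cn/example.shtml")
save("Edited headline", "https://news.sina.com.cn/example.shtml")

rows = conn.execute("SELECT title, url FROM news").fetchall()
print(rows)
```

After both calls the table holds one row carrying the second title, which is the same outcome the pipeline aims for when an article is crawled again.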