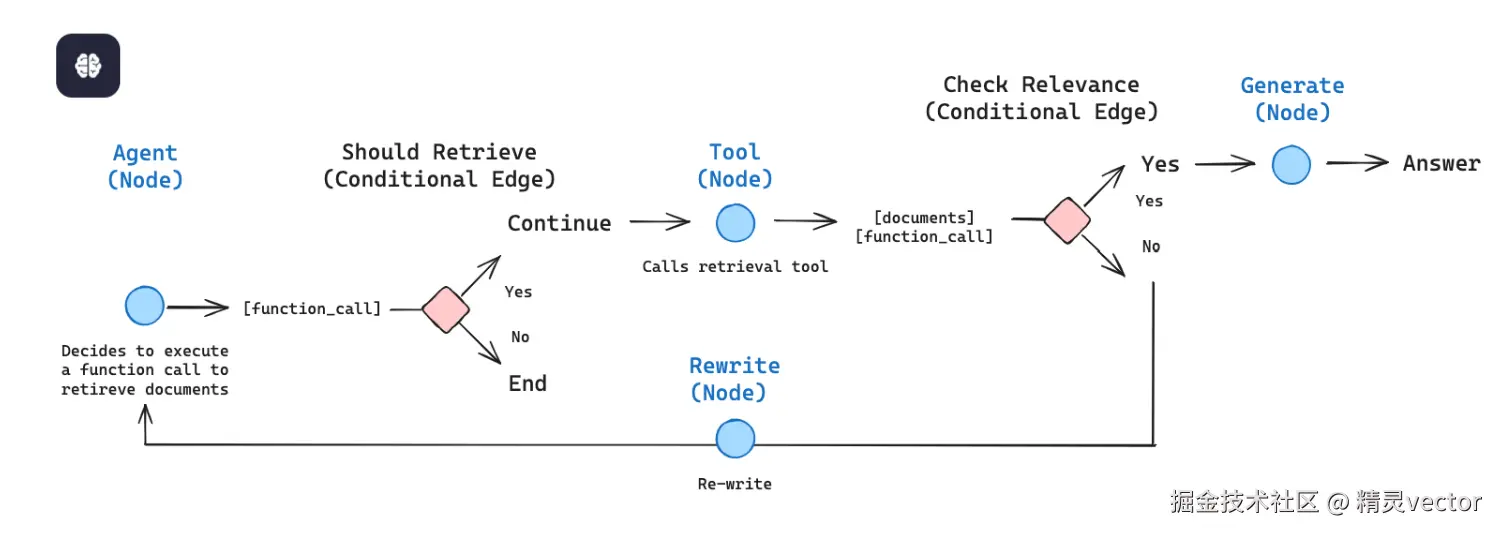

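In this article, we build a retrieval agent with LangGraph. The large language model (LLM) decides on its own whether to retrieve context from a vector store or to respond to the user directly. The overall flow is: fetch and preprocess the documents used for retrieval; index them for semantic search and create a retriever tool for the agent; and build an agentic RAG system that can decide for itself when to use the retriever tool.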

1 Process the documents

First, fetch the documents to use in our RAG system. We use three recent posts from Lilian Weng's blog (lilianweng.github.io/), and load the content of each page with the WebBaseLoader utility:

```python
from langchain_community.document_loaders import WebBaseLoader

urls = [
    "https://lilianweng.github.io/posts/2024-11-28-reward-hacking/",
    "https://lilianweng.github.io/posts/2024-07-07-hallucination/",
    "https://lilianweng.github.io/posts/2024-04-12-diffusion-video/",
]

# load() returns a list of Documents for each URL, so docs is a list of lists
docs = [WebBaseLoader(url).load() for url in urls]

print("=== WebBaseLoader results ===")
for i, doc in enumerate(docs):
    print(f"\nDocument {i+1}:")
    for element in doc:
        print(f"Text: {element.page_content[:100]}...")  # Print first 100 characters
        if hasattr(element, "metadata"):
            print("Metadata:", element.metadata)
        else:
            print("No metadata available.")

print("\n=== Finished loading documents with WebBaseLoader ===")
```

Once the page contents are loaded, split the fetched documents into smaller chunks so they can be indexed into our vector store.
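The splitter used below measures `chunk_size` and `chunk_overlap` in tiktoken tokens and prefers to break on separators such as paragraphs and newlines. As a simplified illustration of what the two parameters mean, the sketch below slides a fixed window over a list of words: each chunk holds at most `chunk_size` items and shares `chunk_overlap` items with the previous one. `sliding_window_chunks` is a hypothetical helper, not how `RecursiveCharacterTextSplitter` is implemented.

```python
def sliding_window_chunks(words, chunk_size, chunk_overlap):
    """Hypothetical helper: split a list into overlapping windows.

    Each window holds at most `chunk_size` items and consecutive windows
    share `chunk_overlap` items. This is NOT the RecursiveCharacterTextSplitter algorithm.
    """
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + chunk_size])
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


words = "reward hacking occurs when an agent exploits flaws in the reward".split()
for chunk in sliding_window_chunks(words, chunk_size=6, chunk_overlap=3):
    print(" ".join(chunk))
```

With `chunk_size=100, chunk_overlap=50` as in the code below, consecutive chunks can repeat up to half of their content, which enlarges the index but lowers the chance that a relevant sentence is cut in two.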

```python
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Flatten the per-URL lists into a single list of Documents
docs_list = [item for sublist in docs for item in sublist]

# chunk_size and chunk_overlap are measured in tiktoken tokens
text_splitter = RecursiveCharacterTextSplitter.from_tiktoken_encoder(
    chunk_size=100, chunk_overlap=50
)
doc_splits = text_splitter.split_documents(docs_list)

print(doc_splits[0].page_content.strip())
```

2 Create a retriever tool

Now that we have the split documents, we can index them into a vector store for semantic search. Here we use an in-memory vector store with OpenAI embeddings:
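Semantic search ranks the chunks by the similarity between the query's embedding and each chunk's embedding, and the retriever returns the top matches (4 by default). The toy sketch below shows that ranking step only. It is not `InMemoryVectorStore`: the bag-of-words `embed` function is a hypothetical stand-in for `OpenAIEmbeddings`, and `ToyVectorStore` is an illustrative class.

```python
import math
from collections import Counter


def embed(text):
    """Hypothetical stand-in for OpenAIEmbeddings: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps (text, vector) pairs in memory and ranks them by cosine similarity."""

    def __init__(self, texts):
        self.items = [(t, embed(t)) for t in texts]

    def similarity_search(self, query, k=4):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore([
    "reward hacking exploits flaws in the reward function",
    "diffusion models generate video frames",
    "hallucination means the model invents facts",
])
print(store.similarity_search("types of reward hacking", k=1))
```

Real embeddings capture meaning rather than shared words, so a query can match a chunk that uses different vocabulary; the ranking step itself works the same way.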

```python
from langchain_core.vectorstores import InMemoryVectorStore
from langchain_openai import OpenAIEmbeddings

# Embed every chunk (requires OPENAI_API_KEY) and keep the vectors in memory
vectorstore = InMemoryVectorStore.from_documents(
    documents=doc_splits, embedding=OpenAIEmbeddings()
)
retriever = vectorstore.as_retriever()
```

Then create a retriever tool with LangChain's prebuilt `create_retriever_tool` helper.
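Conceptually, the tool lets the model call the retriever by name with a `query` argument; the tool runs the retriever and returns the page contents of the retrieved documents joined into one string (separated by a blank line by default, which matches the tool output shown at the end of this article). The sketch below is a simplified, hypothetical version of that wrapper: `Doc`, `make_retriever_tool`, and `fake_retrieve` are illustrative stand-ins, not LangChain APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Minimal stand-in for a LangChain Document."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def make_retriever_tool(retrieve, name, description, separator="\n\n"):
    """Hypothetical sketch: wrap a retrieve(query) function as a named tool."""
    def tool(args):
        docs = retrieve(args["query"])  # run the retriever on the model-supplied query
        return separator.join(doc.page_content for doc in docs)

    schema = {"name": name, "description": description, "parameters": {"query": "string"}}
    return tool, schema


def fake_retrieve(query):
    # Stands in for retriever.invoke(query); ignores the query for brevity.
    return [
        Doc("reward hacking can be categorized into two types"),
        Doc("reward tampering is one of them"),
    ]


tool, schema = make_retriever_tool(
    fake_retrieve,
    "retrieve_blog_posts",
    "Search and return information about Lilian Weng blog posts.",
)
print(tool({"query": "types of reward hacking"}))
```

The name and description are what the model sees when deciding whether to call the tool, so a precise description matters more than it might seem.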

```python
from langchain.tools.retriever import create_retriever_tool

retriever_tool = create_retriever_tool(
    retriever,
    "retrieve_blog_posts",
    "Search and return information about Lilian Weng blog posts.",
)
```

Invoke the retriever tool:

```python
retriever_tool.invoke({"query": "types of reward hacking"})
```

3 Generate a query

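Now we start building the components (nodes and edges) of the agentic RAG graph. These components operate on MessagesState, a graph state with a messages key whose value is a list of chat messages. First, we build a generate_query_or_respond node. It calls an LLM to generate a response from the current graph state (the list of messages). Depending on the input messages, it decides whether to retrieve with the retriever tool created earlier or to respond to the user directly. Note that we give the chat model access to retriever_tool through the .bind_tools method.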
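A node does not return the whole state: it returns an update such as `{"messages": [response]}`, and MessagesState merges it with the `add_messages` reducer, which appends new messages to the history (a message whose id matches an existing one replaces it instead). The sketch below approximates that merge with plain dicts; `merge_messages` is a hypothetical helper, not the LangGraph implementation.

```python
def merge_messages(existing, update):
    """Hypothetical approximation of the add_messages reducer.

    Messages whose "id" matches an existing message replace it;
    all other messages are appended in order.
    """
    merged = list(existing)
    index_by_id = {m["id"]: i for i, m in enumerate(merged) if "id" in m}
    for msg in update:
        if "id" in msg and msg["id"] in index_by_id:
            merged[index_by_id[msg["id"]]] = msg  # same id: replace in place
        else:
            merged.append(msg)  # new message: append to the history
            if "id" in msg:
                index_by_id[msg["id"]] = len(merged) - 1
    return merged


state = {"messages": [{"id": "1", "role": "user", "content": "hello!"}]}
node_output = {"messages": [{"id": "2", "role": "assistant", "content": "Hello! How can I help you today?"}]}
state["messages"] = merge_messages(state["messages"], node_output["messages"])
print([m["role"] for m in state["messages"]])
```

This appending behavior is why later nodes can read the original question from `messages[0]` and the newest result from `messages[-1]`.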

```python
from langgraph.graph import MessagesState
from langchain.chat_models import init_chat_model

response_model = init_chat_model("openai:gpt-4.1", temperature=0)


def generate_query_or_respond(state: MessagesState):
    """Call the model to generate a response based on the current state. Given
    the question, it will decide to retrieve using the retriever tool, or simply respond to the user.
    """
    response = (
        response_model
        .bind_tools([retriever_tool]).invoke(state["messages"])
    )
    return {"messages": [response]}
```

Let's try calling it:

```python
input = {"messages": [{"role": "user", "content": "hello!"}]}
generate_query_or_respond(input)["messages"][-1].pretty_print()
```

This produces the following output:

```
================================== Ai Message ==================================

Hello! How can I help you today?
```

The model did not call the tool. Next, ask a question that does require semantic search:

```python
input = {
    "messages": [
        {
            "role": "user",
            "content": "What does Lilian Weng say about types of reward hacking?",
        }
    ]
}
generate_query_or_respond(input)["messages"][-1].pretty_print()
```

This produces:

```
================================== Ai Message ==================================
Tool Calls:
  retrieve_blog_posts (call_tYQxgfIlnQUDMdtAhdbXNwIM)
 Call ID: call_tYQxgfIlnQUDMdtAhdbXNwIM
  Args:
    query: types of reward hacking
```

This time the model calls our retriever_tool.

4 Grade the documents

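Next, we add a conditional edge, grade_documents, that decides whether the retrieved documents are relevant to the question. The grading is done by a model with the structured output schema GradeDocuments. Based on the grade, grade_documents returns the name of the next node to visit (generate_answer or rewrite_question).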
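grade_documents reads the original question from the first message and the retrieved text from the last message (the ToolMessage), then maps a "yes"/"no" grade to a node name. To exercise that routing offline without calling a model, one option is to swap in a stub grader. The keyword-overlap rule below is a hypothetical heuristic for testing only, not what the LLM grader does, and the messages are plain dicts rather than LangChain message objects.

```python
from typing import Literal


def keyword_grader(question: str, context: str) -> str:
    """Hypothetical stub grader: 'yes' if the context shares any content word with the question."""
    stop = {"what", "does", "say", "about", "of", "the", "a", "an", "types"}
    q_words = {w.strip("?.,").lower() for w in question.split()} - stop
    c_words = {w.strip("?.,").lower() for w in context.split()}
    return "yes" if q_words & c_words else "no"


def route_after_retrieval(messages, grader=keyword_grader) -> Literal["generate_answer", "rewrite_question"]:
    """Same routing shape as grade_documents: first message = question, last = retrieved context."""
    question = messages[0]["content"]
    context = messages[-1]["content"]
    score = grader(question, context)
    return "generate_answer" if score == "yes" else "rewrite_question"


question = {"role": "user", "content": "What does Lilian Weng say about types of reward hacking?"}
print(route_after_retrieval([question, {"role": "tool", "content": "meow"}]))
print(route_after_retrieval([question, {"role": "tool", "content": "reward hacking can be categorized into two types"}]))
```

Keeping the grader behind a parameter makes the routing logic testable independently of the model that produces the grade.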

```python
from typing import Literal

from pydantic import BaseModel, Field

GRADE_PROMPT = (
    "You are a grader assessing relevance of a retrieved document to a user question. \n "
    "Here is the retrieved document: \n\n {context} \n\n"
    "Here is the user question: {question} \n"
    "If the document contains keyword(s) or semantic meaning related to the user question, grade it as relevant. \n"
    "Give a binary score 'yes' or 'no' score to indicate whether the document is relevant to the question."
)


class GradeDocuments(BaseModel):
    """Grade documents using a binary score for relevance check."""

    binary_score: str = Field(
        description="Relevance score: 'yes' if relevant, or 'no' if not relevant"
    )


grader_model = init_chat_model("openai:gpt-4.1", temperature=0)


def grade_documents(
    state: MessagesState,
) -> Literal["generate_answer", "rewrite_question"]:
    """Determine whether the retrieved documents are relevant to the question."""
    question = state["messages"][0].content  # the original user question
    context = state["messages"][-1].content  # the ToolMessage produced by retrieval
    prompt = GRADE_PROMPT.format(question=question, context=context)
    response = (
        grader_model
        .with_structured_output(GradeDocuments).invoke(
            [{"role": "user", "content": prompt}]
        )
    )
    score = response.binary_score
    if score == "yes":
        return "generate_answer"
    else:
        return "rewrite_question"
```

Run it on a tool result that contains an irrelevant document:

```python
from langchain_core.messages import convert_to_messages

# The tool result "meow" is deliberately irrelevant to the question
input = {
    "messages": convert_to_messages(
        [
            {
                "role": "user",
                "content": "What does Lilian Weng say about types of reward hacking?",
            },
            {
                "role": "assistant",
                "content": "",
                "tool_calls": [
                    {
                        "id": "1",
                        "name": "retrieve_blog_posts",
                        "args": {"query": "types of reward hacking"},
                    }
                ],
            },
            {"role": "tool", "content": "meow", "tool_call_id": "1"},
        ]
    )
}
grade_documents(input)
```

Then confirm that a relevant document is graded as relevant:

```python
input = {
    "messages": convert_to_messages(
        [
            {
                "role": "user",
                "content": "What does Lilian Weng say about types of reward hacking?",
            },
            {
                "role": "assistant",
                "content": "",
                "tool_calls": [
                    {
                        "id": "1",
                        "name": "retrieve_blog_posts",
                        "args": {"query": "types of reward hacking"},
                    }
                ],
            },
            {
                "role": "tool",
                "content": "reward hacking can be categorized into two types: environment or goal misspecification, and reward tampering",
                "tool_call_id": "1",
            },
        ]
    )
}
grade_documents(input)
```

5 Rewrite the question

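Next, we build a rewrite_question node. If the retriever tool fails to return relevant documents, the original user question probably needs to be improved, so in that case we call the rewrite_question node: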
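Because rewrite_question loops back to generate_query_or_respond, a question that never retrieves relevant documents can cycle until LangGraph stops the run at its recursion limit. One possible safeguard, sketched below as a hypothetical variant that is not part of the original code, is to count the rewritten questions already in the history and fall through to answering once a budget is spent (GENERATE_PROMPT already allows the model to say it doesn't know). It assumes every user message after the first is a rewrite, which matches how rewrite_question appends them; `MAX_REWRITES`, `count_rewrites`, and `route_with_cap` are illustrative names.

```python
from typing import Literal

MAX_REWRITES = 2  # hypothetical budget, tune to taste


def count_rewrites(messages) -> int:
    """The first user message is the original question; each later one is a rewrite."""
    return sum(1 for m in messages if m["role"] == "user") - 1


def route_with_cap(messages, is_relevant: bool) -> Literal["generate_answer", "rewrite_question"]:
    """Hypothetical variant of grade_documents' routing with a rewrite budget."""
    if is_relevant or count_rewrites(messages) >= MAX_REWRITES:
        return "generate_answer"  # answer instead of looping again
    return "rewrite_question"


history = [
    {"role": "user", "content": "What does Lilian Weng say about types of reward hacking?"},
    {"role": "tool", "content": "meow"},
]
print(route_with_cap(history, is_relevant=False))  # first miss: rewrite
history += [
    {"role": "user", "content": "rewritten question 1"},
    {"role": "user", "content": "rewritten question 2"},
]
print(route_with_cap(history, is_relevant=False))  # budget used up: answer
```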

```python
REWRITE_PROMPT = (
    "Look at the input and try to reason about the underlying semantic intent / meaning.\n"
    "Here is the initial question:"
    "\n ------- \n"
    "{question}"
    "\n ------- \n"
    "Formulate an improved question:"
)


def rewrite_question(state: MessagesState):
    """Rewrite the original user question."""
    messages = state["messages"]
    question = messages[0].content
    prompt = REWRITE_PROMPT.format(question=question)
    response = response_model.invoke([{"role": "user", "content": prompt}])
    # Append the improved question to the history as a new user message
    return {"messages": [{"role": "user", "content": response.content}]}
```

Try it out:

```python
input = {
    "messages": convert_to_messages(
        [
            {
                "role": "user",
                "content": "What does Lilian Weng say about types of reward hacking?",
            },
            {
                "role": "assistant",
                "content": "",
                "tool_calls": [
                    {
                        "id": "1",
                        "name": "retrieve_blog_posts",
                        "args": {"query": "types of reward hacking"},
                    }
                ],
            },
            {"role": "tool", "content": "meow", "tool_call_id": "1"},
        ]
    )
}
response = rewrite_question(input)
print(response["messages"][-1]["content"])
```

Output:

```
What are the different types of reward hacking described by Lilian Weng, and how does she explain them?
```

6 Generate an answer

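Next, we build the generate_answer node: once the retrieved documents pass the grader's check, it generates the final answer from the original question and the retrieved context.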

```python
GENERATE_PROMPT = (
    "You are an assistant for question-answering tasks. "
    "Use the following pieces of retrieved context to answer the question. "
    "If you don't know the answer, just say that you don't know. "
    "Use three sentences maximum and keep the answer concise.\n"
    "Question: {question} \n"
    "Context: {context}"
)


def generate_answer(state: MessagesState):
    """Generate an answer."""
    question = state["messages"][0].content  # the original user question
    context = state["messages"][-1].content  # the retrieved context
    prompt = GENERATE_PROMPT.format(question=question, context=context)
    response = response_model.invoke([{"role": "user", "content": prompt}])
    return {"messages": [response]}


input = {
    "messages": convert_to_messages(
        [
            {
                "role": "user",
                "content": "What does Lilian Weng say about types of reward hacking?",
            },
            {
                "role": "assistant",
                "content": "",
                "tool_calls": [
                    {
                        "id": "1",
                        "name": "retrieve_blog_posts",
                        "args": {"query": "types of reward hacking"},
                    }
                ],
            },
            {
                "role": "tool",
                "content": "reward hacking can be categorized into two types: environment or goal misspecification, and reward tampering",
                "tool_call_id": "1",
            },
        ]
    )
}
response = generate_answer(input)
response["messages"][-1].pretty_print()
```

Output:

```
================================== Ai Message ==================================

Lilian Weng categorizes reward hacking into two types: environment or goal misspecification, and reward tampering. She considers reward hacking as a broad concept that includes both of these categories. Reward hacking occurs when an agent exploits flaws or ambiguities in the reward function to achieve high rewards without performing the intended behaviors.
```

7 Assemble the graph

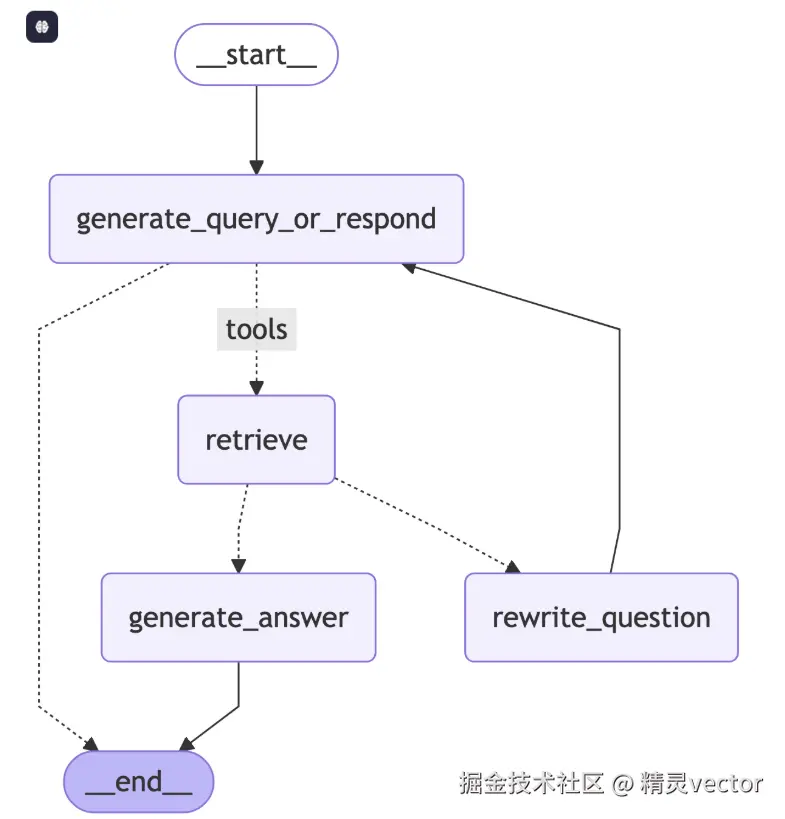

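The graph starts at generate_query_or_respond, which decides whether retriever_tool needs to be called. tools_condition routes the next step: if generate_query_or_respond returns tool_calls, the graph calls retriever_tool to retrieve context; otherwise, it responds to the user directly. The retrieved content is then graded (grade_documents): if it is irrelevant, rewrite_question rewrites the question; if it is relevant, the graph proceeds to generate_answer, which produces the final response from the context in the ToolMessage.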
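The control flow just described can be simulated without LangGraph or a model, which makes the order in which nodes fire easy to check. In the sketch below every node is a hypothetical stub operating on plain-dict messages, and `tools_route` approximates tools_condition by returning "tools" when the last message carries tool_calls and END otherwise. It is an illustration of the routing, not how LangGraph executes a graph.

```python
END = "__end__"  # LangGraph's END constant is the string "__end__"


def tools_route(messages):
    """Approximates tools_condition: "tools" if the last message requests a tool call, else END."""
    return "tools" if messages[-1].get("tool_calls") else END


def run(question, needs_retrieval=True, relevant_after_rewrites=0):
    """Drive the stub graph and return the names of the visited nodes, in order."""
    messages = [{"role": "user", "content": question}]
    rewrites, path, node = 0, [], "generate_query_or_respond"
    while node != END:
        path.append(node)
        if node == "generate_query_or_respond":
            # Stub model: either requests retrieval or answers directly.
            if needs_retrieval:
                messages.append({"role": "assistant", "content": "", "tool_calls": [{"name": "retrieve_blog_posts"}]})
            else:
                messages.append({"role": "assistant", "content": "Hello! How can I help you today?"})
            node = "retrieve" if tools_route(messages) == "tools" else END
        elif node == "retrieve":
            # Stub retriever: results become relevant after a given number of rewrites.
            relevant = rewrites >= relevant_after_rewrites
            messages.append({"role": "tool", "content": "relevant text" if relevant else "meow"})
            node = "generate_answer" if relevant else "rewrite_question"
        elif node == "rewrite_question":
            rewrites += 1
            messages.append({"role": "user", "content": f"rewritten question {rewrites}"})
            node = "generate_query_or_respond"
        elif node == "generate_answer":
            messages.append({"role": "assistant", "content": "final answer"})
            node = END
    return path


print(run("hello!", needs_retrieval=False))
print(run("What does Lilian Weng say about types of reward hacking?", relevant_after_rewrites=1))
```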

```python
from langgraph.graph import StateGraph, START, END
from langgraph.prebuilt import ToolNode, tools_condition

workflow = StateGraph(MessagesState)

# Define the nodes we will cycle between
workflow.add_node(generate_query_or_respond)
workflow.add_node("retrieve", ToolNode([retriever_tool]))
workflow.add_node(rewrite_question)
workflow.add_node(generate_answer)

workflow.add_edge(START, "generate_query_or_respond")

# Decide whether to retrieve
workflow.add_conditional_edges(
    "generate_query_or_respond",
    # Assess LLM decision (call `retriever_tool` tool or respond to the user)
    tools_condition,
    {
        # Translate the condition outputs to nodes in our graph
        "tools": "retrieve",
        END: END,
    },
)

# Edges taken after the `retrieve` node is called
workflow.add_conditional_edges(
    "retrieve",
    # Grade the retrieved documents: go to generate_answer or rewrite_question
    grade_documents,
)
workflow.add_edge("generate_answer", END)
workflow.add_edge("rewrite_question", "generate_query_or_respond")

# Compile
graph = workflow.compile()
```

The resulting graph looks roughly like the figure above.

Run the graph we built:

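```python
from langchain_core.messages import convert_to_messages

for chunk in graph.stream(
    {
        "messages": [
            {
                "role": "user",
                "content": "What does Lilian Weng say about types of reward hacking?",
            }
        ]
    }
):
    # Each chunk maps the name of the node that just ran to its state update
    for node, update in chunk.items():
        print("Update from node", node)
        # rewrite_question returns a plain dict message, so normalize before pretty-printing
        convert_to_messages(update["messages"])[-1].pretty_print()
        print("\n\n")
```

This produces: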

```
Update from node generate_query_or_respond
================================== Ai Message ==================================
Tool Calls:
  retrieve_blog_posts (call_NYu2vq4km9nNNEFqJwefWKu1)
 Call ID: call_NYu2vq4km9nNNEFqJwefWKu1
  Args:
    query: types of reward hacking

Update from node retrieve
================================= Tool Message ==================================
Name: retrieve_blog_posts

(Note: Some work defines reward tampering as a distinct category of misalignment behavior from reward hacking. But I consider reward hacking as a broader concept here.)

At a high level, reward hacking can be categorized into two types: environment or goal misspecification, and reward tampering.

Why does Reward Hacking Exist?#

Pan et al. (2022) investigated reward hacking as a function of agent capabilities, including (1) model size, (2) action space resolution, (3) observation space noise, and (4) training time. They also proposed a taxonomy of three types of misspecified proxy rewards:

Let's Define Reward Hacking#

Reward shaping in RL is challenging. Reward hacking occurs when an RL agent exploits flaws or ambiguities in the reward function to obtain high rewards without genuinely learning the intended behaviors or completing the task as designed. In recent years, several related concepts have been proposed, all referring to some form of reward hacking:

Update from node generate_answer
================================== Ai Message ==================================

Lilian Weng categorizes reward hacking into two types: environment or goal misspecification, and reward tampering. She considers reward hacking as a broad concept that includes both of these categories. Reward hacking occurs when an agent exploits flaws or ambiguities in the reward function to achieve high rewards without performing the intended behaviors.
```

With that, our code implements a complete question-answering workflow: it loads web pages, splits the text, retrieves by vector similarity, grades relevance, rewrites the question when needed, and generates the answer. A state graph organizes the control flow, so the agent decides dynamically whether to retrieve and how to answer the user's question. This is the complete flow for RAG with document grading and question rewriting in LangGraph.