Preface

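In the previous post we looked at how to use Transformers, one of Hugging Face's core libraries. Today we continue with another core Hugging Face library: Diffusers. If you are interested in earlier posts in this series, see:

- [Hugging Face] Hugging Face Hub and Hugging Face CLI

- [Hugging Face] Basic use of Hugging Face Spaces

- [Hugging Face] Basic use of Hugging Face datasets

- [Hugging Face] Basic use of Hugging Face models

- [Hugging Face] How to use Hugging Face Transformers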

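Introduction

Diffusers is Hugging Face's open-source, all-in-one toolbox for diffusion models. It wraps state-of-the-art diffusion papers and weights in simple, composable APIs, so a few lines of code are enough for text-to-image, image-to-image, audio, video, 3D, and molecule generation, data augmentation, and more. It also supports training and fine-tuning your own models.

Official Diffusers documentation: huggingface.co/docs/diffus...

Core Diffusers APIs

- Pipelines: high-level classes of many kinds, designed to make deployment and common tasks easy; they let you generate samples from popular pretrained diffusion models in a few lines of code.

- Models: the network architectures (for example, UNet) needed when training new diffusion models.

- Schedulers: during inference they implement different strategies for generating an image from noise; during training they produce the noisy versions of training images.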

Installation

Installing PyTorch

CPU version

```bash
$ pip install torch torchvision torchaudio
```

NVIDIA GPU version (see the PyTorch website for more installation options: pytorch.org/)

```bash
$ pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126
```

Installing Diffusers

```bash
# Accelerate speeds up model loading for inference and training
# Transformers is required to run the most popular diffusion models, such as Stable Diffusion
$ uv add diffusers accelerate transformers

# or

$ pip install diffusers accelerate transformers
```

Basic usage

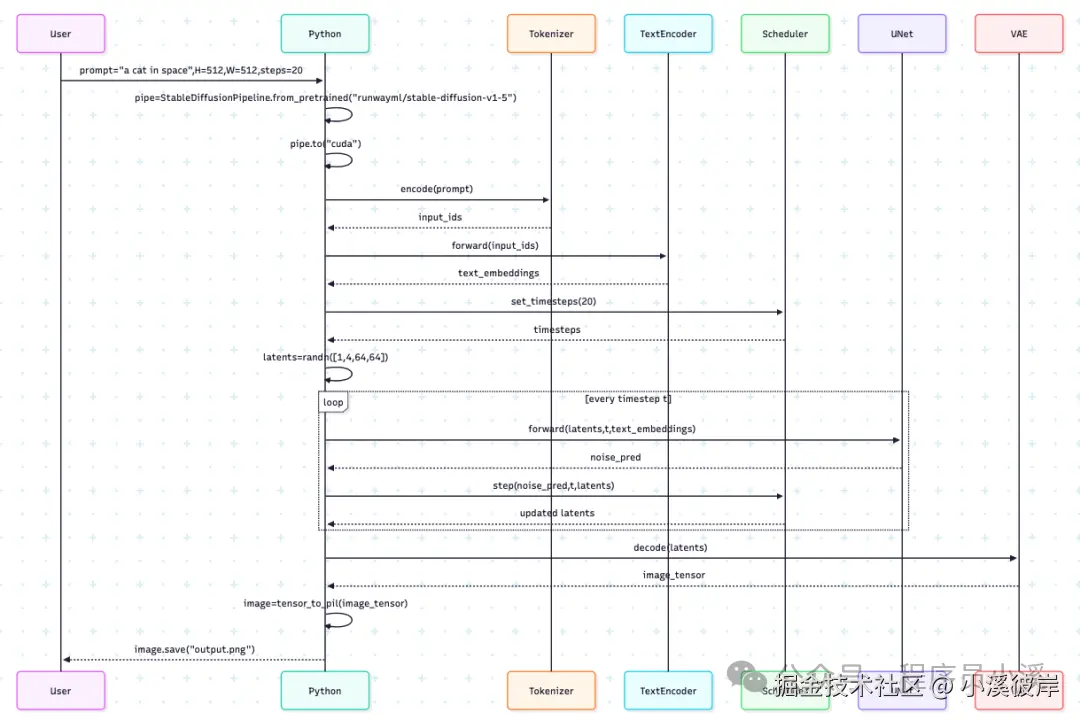

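This AI-generated diagram shows the end-to-end Diffusers text-to-image workflow, which helps in understanding the steps that follow.

Pipelines

In Diffusers, a Pipeline is the production line that glues together the models, the scheduler, and the processing components. Let's start with the default way to use a Pipeline, taking the text-to-image model sd-dreambooth-library/disco-diffusion-style as an example. Create a new Colab code cell: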

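```python
from diffusers import DiffusionPipeline
import torch

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline; the pretrained model is downloaded on first use
pipeline = DiffusionPipeline.from_pretrained("sd-dreambooth-library/disco-diffusion-style")
pipeline.to(device)

# Prompts work best in English; Chinese prompts give poor results
prompt = "A cyberpunk-style building"

# Generate the image
image = pipeline(
    prompt,
    num_inference_steps=50,  # number of denoising steps
    guidance_scale=7.5,      # guidance scale
).images[0]

# Show the image (display() is available in Colab/Jupyter)
display(image)
```

Run the cell, and the result looks like this: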

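Diffusers includes many other Pipeline types. Here are some image-generation pipelines if you want to explore further:

- Text-To-Image: text-to-image, StableDiffusionPipeline

- Image-To-Image: image-to-image, StableDiffusionImg2ImgPipeline

- In-Painting: masked inpainting, StableDiffusionInpaintPipeline

- Upscale Image: 4x super-resolution, StableDiffusionUpscalePipeline

- Pix-To-Pix: instruction-based image editing (for example, changing the style), StableDiffusionInstructPix2PixPipeline

- Depth-To-Image: depth-conditioned image generation, StableDiffusionDepth2ImgPipeline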

DiffusionPipeline

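When you instantiate a model with DiffusionPipeline, it is downloaded automatically if it is not already cached locally.

Diffusers offers many pipelines; DiffusionPipeline is the simplest way to run inference with a pretrained diffusion system. It is an end-to-end system that bundles the model(s) and the scheduler, and you can use it directly for many tasks.

A Pipeline loads a model with the from_pretrained() method: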

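```python
from diffusers import DiffusionPipeline

# Load the model. use_safetensors=True loads weights in the safetensors format,
# which cannot execute arbitrary code; it is a good default to always pass it
pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", use_safetensors=True)
print(pipeline)
```

DiffusionPipeline downloads and caches all the modeling, tokenization, and scheduling components. Printing the pipeline object shows that the Stable Diffusion pipeline is made up of components such as UNet2DConditionModel and PNDMScheduler. DiffusionPipeline itself is a generic base class: from_pretrained reads the repository's configuration and returns the concrete StableDiffusionPipeline.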

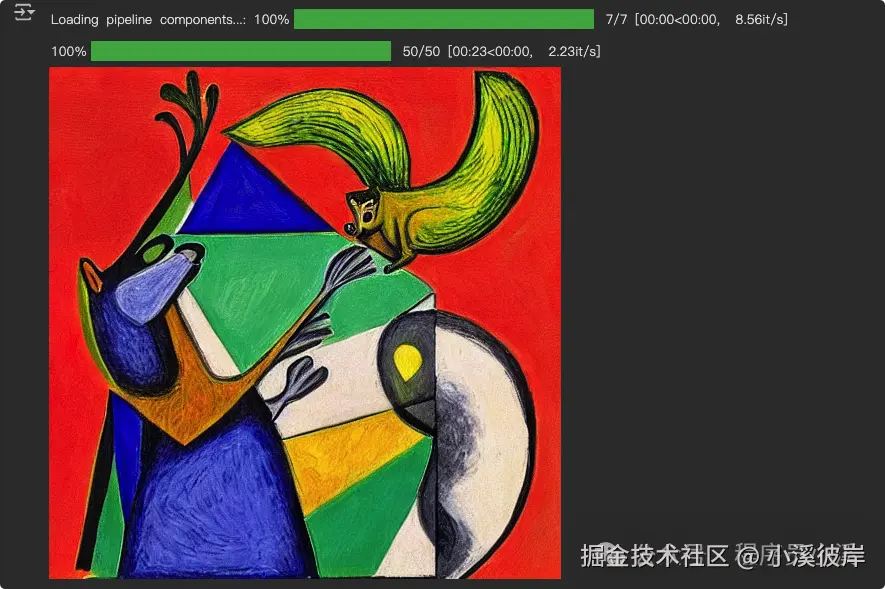
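DiffusionPipeline.from_pretrained works out which concrete class to build from the repository's model_index.json: its _class_name field names the pipeline, and each other entry maps a component to a [library, class] pair. The sketch below parses an abridged, hand-written copy of such a file (the real one for stable-diffusion-v1-5 has more entries, such as the safety checker) to illustrate that mapping; it downloads nothing and does not import Diffusers, and describe_pipeline is a hypothetical helper.

```python
import json

# Abridged, hand-written model_index.json for illustration only; the real file
# in the stable-diffusion-v1-5 repository contains additional entries.
MODEL_INDEX = """
{
  "_class_name": "StableDiffusionPipeline",
  "scheduler": ["diffusers", "PNDMScheduler"],
  "text_encoder": ["transformers", "CLIPTextModel"],
  "tokenizer": ["transformers", "CLIPTokenizer"],
  "unet": ["diffusers", "UNet2DConditionModel"],
  "vae": ["diffusers", "AutoencoderKL"]
}
"""


def describe_pipeline(index_json):
    """Return the pipeline class name and a {component: "library.Class"} dict."""
    index = json.loads(index_json)
    components = {}
    for name, value in index.items():
        # Keys starting with "_" are metadata; non-list values are plain flags.
        if name.startswith("_") or not isinstance(value, list):
            continue
        library, class_name = value
        components[name] = f"{library}.{class_name}"
    return index["_class_name"], components


pipeline_cls, components = describe_pipeline(MODEL_INDEX)
print(pipeline_cls)
for name, qualified in components.items():
    print(f"{name:>12}: {qualified}")
```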

Generating an image with the pipeline

```python
image = pipeline("An image of a squirrel in Picasso style").images[0]
display(image)
```

Run the cell; the generated image looks like this:

GPU acceleration

Like any PyTorch module, a pipeline can be moved to the GPU to speed up generation:

```python
import torch
from diffusers import DiffusionPipeline

device = "cuda" if torch.cuda.is_available() else "cpu"

pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", use_safetensors=True)

# Run the pipeline on the GPU (falls back to the CPU if no GPU is available)
pipeline.to(device)
```

Pipeline parameters

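Parameter 1: precision

By default, DiffusionPipeline runs inference in full float32 precision with 50 steps. To speed up generation, you can lower the precision to float16 or reduce the number of inference steps.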
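A back-of-the-envelope estimate shows why float16 helps: each parameter takes 4 bytes in float32 and 2 bytes in float16, so the weights need half the memory. The parameter count below (about 860 million, the figure commonly quoted for the Stable Diffusion v1.x UNet) is an assumption for illustration, and activations, the VAE, and the text encoder are ignored.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2}

# ASSUMPTION: ~860 million parameters, the figure commonly quoted for the
# Stable Diffusion v1.x UNet. Activations, the VAE and the text encoder are ignored.
UNET_PARAMS = 860_000_000


def weight_memory_gib(num_params, dtype):
    """Memory needed just to hold the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


for dtype in ("float32", "float16"):
    print(f"{dtype}: {weight_memory_gib(UNET_PARAMS, dtype):.2f} GiB")
```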

python

import torch

from diffusers import DiffusionPipeline

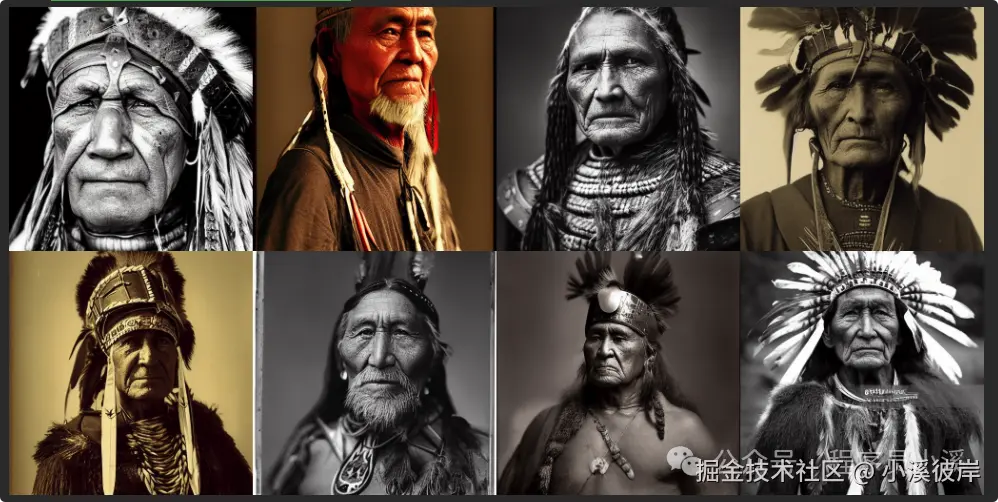

pipeline = DiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16, use_safetensors=True)参数2:提示词

The prompt describes the main subject and content of the generated image.

```python
# Prompt
prompt = "portrait photo of a old warrior chief"
image = pipeline(prompt).images[0]
```

Parameter 3: random seed

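So that we can reproduce the same image and keep refining it, we create a Generator and set a seed:

```python
import torch

# A fixed seed makes the initial noise, and therefore the image, reproducible
generator = torch.Generator("cuda").manual_seed(0)
image = pipeline(prompt, generator=generator).images[0]
```

Parameter 4: number of inference steps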

Stable Diffusion models use PNDMScheduler by default, which typically needs about 50 inference steps. More efficient schedulers such as DPMSolverMultistepScheduler need only 20 to 25 steps.

```python
import torch
from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True)
pipeline.to("cuda")

# Swap in a faster scheduler, reusing the current scheduler's configuration
pipeline.scheduler = DPMSolverMultistepScheduler.from_config(pipeline.scheduler.config)

prompt = "portrait photo of a old warrior chief"
image = pipeline(
    prompt,
    num_inference_steps=25,  # number of denoising steps
).images[0]
```

Parameter 5: negative prompt

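The (positive) prompt describes what you want in the image; the negative prompt describes what you don't want.

```python
import torch

# Settings used below (height and width are covered under Parameter 6)
height, width = 512, 512
num_inference_steps = 25
generator = torch.Generator("cuda").manual_seed(0)

negative_prompt = "low quality, bad anatomy, deformed, blurry"
image = pipeline(
    prompt,
    negative_prompt=negative_prompt,  # negative prompt
    height=height,
    width=width,
    num_inference_steps=num_inference_steps,
    generator=generator,
).images[0]
```

Parameter 6: image width and height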

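Height and width control the size of the generated image:

```python
# Output image size; Stable Diffusion v1.5 defaults to 512x512,
# and both values must be divisible by 8
height = 512
width = 512

image = pipeline(
    prompt,
    height=height,  # image height
    width=width,    # image width
).images[0]
```

Parameter 7: guidance scale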

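The guidance scale (guidance_scale) controls how closely the image follows the prompt: the larger the value, the more closely the output matches the prompt. In Diffusers, classifier-free guidance is switched off when guidance_scale is 1 or lower; the prompt still conditions the model, but it is followed only loosely.

- 1.0-3.0: weak prompt adherence, more free-form results

- 3.0-10.0: a balance between the prompt and creativity; 7.0-7.5 is the usual sweet spot, and 7.5 is the StableDiffusionPipeline default

- 10.0-15.0: when the prompt must be reproduced precisely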
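The guidance scale enters the computation through classifier-free guidance, which blends an unconditional and a text-conditioned noise prediction with the same formula used in the manual generation loop later in this article. The toy sketch below works on plain Python lists with made-up numbers standing in for UNet outputs: with a scale of 1 the result is exactly the text-conditioned prediction, and larger scales push further away from the unconditional one.

```python
def classifier_free_guidance(noise_uncond, noise_text, guidance_scale):
    """noise_uncond + guidance_scale * (noise_text - noise_uncond), element-wise."""
    return [u + guidance_scale * (t - u) for u, t in zip(noise_uncond, noise_text)]


# Toy noise predictions (made-up numbers standing in for UNet outputs).
uncond = [0.10, -0.20, 0.30]
text = [0.40, 0.00, 0.10]

for scale in (1.0, 3.0, 7.5):
    guided = classifier_free_guidance(uncond, text, scale)
    print(scale, [round(x, 3) for x in guided])
```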

```python
image = pipeline(
    prompt,
    guidance_scale=7.5,  # guidance scale
).images[0]
```

enable_attention_slicing (saving memory)

Keep the rest of the configuration unchanged and just add enable_attention_slicing:

```python
import torch
from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler
from diffusers.utils import make_image_grid

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline
pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True)
pipeline.to(device)

# Enable attention slicing to save memory
pipeline.enable_attention_slicing()

# Swap in the DPMSolverMultistepScheduler
pipeline.scheduler = DPMSolverMultistepScheduler.from_config(pipeline.scheduler.config)

prompt = "portrait photo of a old warrior chief"

def get_inputs(batch_size=1):
    # One seeded generator per image so every image is reproducible
    generator = [torch.Generator(device).manual_seed(i) for i in range(batch_size)]
    prompts = batch_size * [prompt]
    num_inference_steps = 20
    return {"prompt": prompts, "generator": generator, "num_inference_steps": num_inference_steps}

images = pipeline(**get_inputs(batch_size=8)).images
make_image_grid(images, rows=2, cols=4)
```

This generates 8 images in a single batch without an out-of-memory (OOM) error.
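Attention slicing trades a little speed for memory: instead of computing attention for the whole (batch × heads) dimension at once, which materializes one large score tensor, Diffusers' sliced attention computes it a few slices at a time. Because each slice is independent, the output does not change. The pure-Python sketch below (hypothetical helper names, no PyTorch) only demonstrates that equivalence; the memory saving itself shows up only with real tensors.

```python
import math
import random


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """Scaled dot-product attention for one slice; q, k, v are lists of vectors."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[d] for w, vj in zip(weights, v)) for d in range(len(v[0]))])
    return out


def full_attention(qs, ks, vs):
    """All (batch x heads) slices in one go, like one big tensor operation."""
    return [attention(q, k, v) for q, k, v in zip(qs, ks, vs)]


def sliced_attention(qs, ks, vs, slice_size):
    """Only slice_size slices at a time, mirroring how slicing bounds peak memory."""
    out = []
    for start in range(0, len(qs), slice_size):
        end = start + slice_size
        out.extend(full_attention(qs[start:end], ks[start:end], vs[start:end]))
    return out


# Toy data: 4 (batch x heads) slices, 3 tokens, 2 features per token.
rng = random.Random(0)


def random_tensor(slices=4, tokens=3, dim=2):
    return [[[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(tokens)] for _ in range(slices)]


qs, ks, vs = random_tensor(), random_tensor(), random_tensor()
full = full_attention(qs, ks, vs)
sliced = sliced_attention(qs, ks, vs, slice_size=1)
print("identical results:", full == sliced)
```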

VAE

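The VAE (variational autoencoder) is what makes Stable Diffusion memory- and compute-efficient. It compresses the high-resolution pixel space (512×512×3 ≈ 786k elements) into a low-dimensional latent space (64×64×4 ≈ 16k elements), so the diffusion model adds and removes noise in a smaller, faster space, and the result is decoded back into a real image at the end. The example below replaces the pipeline's default VAE with stabilityai/sd-vae-ft-mse, a fine-tuned VAE that is commonly used to improve image detail.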
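The element counts above can be checked with a little arithmetic. This sketch assumes the Stable Diffusion v1.x setup: the VAE downsamples by a factor of 8 (which is why height and width must be divisible by 8) and produces 4 latent channels; latent_shape is a hypothetical helper.

```python
import math

# ASSUMPTION: Stable Diffusion v1.x VAE, downsampling factor 8, 4 latent channels.
VAE_SCALE_FACTOR = 8
LATENT_CHANNELS = 4


def latent_shape(height, width):
    """(channels, height, width) of the latent for an RGB image of this size."""
    if height % VAE_SCALE_FACTOR or width % VAE_SCALE_FACTOR:
        raise ValueError("height and width must be divisible by 8")
    return (LATENT_CHANNELS, height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR)


pixel_shape = (3, 512, 512)
latent = latent_shape(512, 512)
ratio = math.prod(pixel_shape) / math.prod(latent)
print(pixel_shape, math.prod(pixel_shape))
print(latent, math.prod(latent))
print(f"{ratio:.0f}x fewer elements in latent space")
```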

```python
import torch
from diffusers import DiffusionPipeline, AutoencoderKL
from diffusers.utils import make_image_grid

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline
pipeline = DiffusionPipeline.from_pretrained("stable-diffusion-v1-5/stable-diffusion-v1-5", torch_dtype=torch.float16, use_safetensors=True)

# Replace the default VAE with a fine-tuned one
vae = AutoencoderKL.from_pretrained("stabilityai/sd-vae-ft-mse", torch_dtype=torch.float16).to(device)
pipeline.vae = vae
pipeline.to(device)

prompt = "portrait photo of a old warrior chief"

def get_inputs(batch_size=1):
    generator = [torch.Generator(device).manual_seed(i) for i in range(batch_size)]
    prompts = batch_size * [prompt]
    num_inference_steps = 20
    return {"prompt": prompts, "generator": generator, "num_inference_steps": num_inference_steps}

images = pipeline(**get_inputs(batch_size=8)).images
make_image_grid(images, rows=2, cols=4)
```

AutoPipeline

The AutoPipeline classes are designed to simplify the many pipelines in Diffusers. They are generic, task-first pipelines that let you focus on the task (AutoPipelineForText2Image, AutoPipelineForImage2Image, and AutoPipelineForInpainting) without knowing the specific pipeline class; AutoPipeline detects the correct pipeline class automatically.

AutoPipelineForText2Image text-to-image example

```python
import torch
from diffusers import AutoPipelineForText2Image

pipe_txt2img = AutoPipelineForText2Image.from_pretrained(
    "dreamlike-art/dreamlike-photoreal-2.0", torch_dtype=torch.float16, use_safetensors=True
).to("cuda")

prompt = "cinematic photo of Godzilla eating sushi with a cat in a izakaya, 35mm photograph, film, professional, 4k, highly detailed"
generator = torch.Generator(device="cpu").manual_seed(37)
image = pipe_txt2img(prompt, generator=generator).images[0]
image
```

AutoPipelineForImage2Image image-to-image example

```python
import torch
from diffusers import AutoPipelineForImage2Image
from diffusers.utils import load_image

pipe_img2img = AutoPipelineForImage2Image.from_pretrained(
    "dreamlike-art/dreamlike-photoreal-2.0", torch_dtype=torch.float16, use_safetensors=True
)
# Offload idle submodels to the CPU to save GPU memory; this manages device
# placement itself, so don't also call .to("cuda")
pipe_img2img.enable_model_cpu_offload()

# Only needed if xFormers is installed and you use PyTorch < 2.0
# pipe_img2img.enable_xformers_memory_efficient_attention()

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/autopipeline-text2img.png")

prompt = "cinematic photo of Godzilla eating burgers with a cat in a fast food restaurant, 35mm photograph, film, professional, 4k, highly detailed"
generator = torch.Generator(device="cpu").manual_seed(53)
image = pipe_img2img(prompt, image=init_image, generator=generator).images[0]
image
```

AutoPipelineForInpainting inpainting example

```python
import torch
from diffusers import AutoPipelineForInpainting
from diffusers.utils import load_image

pipeline = AutoPipelineForInpainting.from_pretrained(
    "stabilityai/stable-diffusion-xl-base-1.0", torch_dtype=torch.float16, use_safetensors=True
).to("cuda")

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/autopipeline-img2img.png")
mask_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/autopipeline-mask.png")

prompt = "cinematic photo of a owl, 35mm photograph, film, professional, 4k, highly detailed"
generator = torch.Generator(device="cpu").manual_seed(38)
image = pipeline(prompt, image=init_image, mask_image=mask_image, generator=generator, strength=0.4).images[0]
image
```

Models

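If you are not sure which model class to use, the AutoModel API can pick it for you.

In Diffusers, generating an image runs the noise-diffusion process in reverse. Models are the learnable networks that do the actual work: predicting noise, or compressing and reconstructing images.

The four most commonly used kinds of models in Diffusers are:

- UNet (conditional/unconditional): learns to map "current noisy image → noise residual", or to predict the original image directly; typical classes are UNet2DConditionModel and UNet2DModel. It is the default denoising network.

- VAE/AutoencoderKL: compresses the high-dimensional pixel space into a low-dimensional latent space to save computation, and decodes latents back into images at inference time; typical class AutoencoderKL.

- Text Encoder/CLIP: encodes the prompt into "condition vectors" for conditional diffusion models; typical class CLIPTextModel (from Transformers).

- ControlNet/LoRA: inject extra conditions or fine-tune behavior without changing the original UNet weights; typical examples are ControlNetModel and LoRA weights loaded into a pipeline with load_lora_weights().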

Official denoising example

The official example below walks through the complete denoising process. I changed the image-display part; the rest follows the official code.

```python
import numpy as np
import PIL.Image
import torch
import tqdm
from diffusers import UNet2DModel, DDPMScheduler
from diffusers.utils import make_image_grid

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the model
model_id = "google/ddpm-cat-256"
model = UNet2DModel.from_pretrained(model_id)
# Move the model to the GPU
model.to(device)

# Load the scheduler that was trained with this model
scheduler = DDPMScheduler.from_pretrained(model_id)

# Fix the random seed
torch.manual_seed(0)

# Start from pure Gaussian noise with the model's input shape
noisy_sample = torch.randn(1, model.config.in_channels, model.config.sample_size, model.config.sample_size)
# Move the noise tensor to the GPU
noisy_sample = noisy_sample.to(device)
sample = noisy_sample

# Helper function to convert a tensor in [-1, 1] to a PIL image
def convert_to_pil_image(sample_tensor):
    image_processed = sample_tensor.cpu().permute(0, 2, 3, 1)
    image_processed = (image_processed.clamp(-1, 1) + 1.0) * 127.5
    image_processed = image_processed.numpy().astype(np.uint8)
    return PIL.Image.fromarray(image_processed[0])

# Generate a cat
images = []
for i, t in enumerate(tqdm.tqdm(scheduler.timesteps)):
    # 1. predict noise residual
    with torch.no_grad():
        residual = model(sample, t).sample

    # 2. compute less noisy image and set x_t -> x_t-1
    sample = scheduler.step(residual, t, sample).prev_sample

    # 3. every 50 steps, keep a snapshot of the current image
    if (i + 1) % 50 == 0:
        images.append(convert_to_pil_image(sample))

# 1000 timesteps / 50 = 20 snapshots, shown as a 5x4 grid
make_image_grid(images, rows=5, cols=4)
```

When it finishes, we get a grid showing the whole denoising process. The final image isn't very pretty, but it clearly shows how the picture emerges from the noise.

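Conditional and unconditional models

- UNet2DModel: an unconditional 2D UNet that only takes "noisy image + timestep" as input.

- UNet2DConditionModel: a conditional 2D UNet that additionally takes condition vectors (text, depth, pose, and so on); it is the core network of Stable Diffusion, ControlNet, and similar models.

Models are initialized with from_pretrained(), which also caches the weights locally so the model loads faster next time.

```python
from diffusers import DDPMScheduler, UNet2DModel

repo_id = "google/ddpm-cat-256"
scheduler = DDPMScheduler.from_pretrained(repo_id)
model = UNet2DModel.from_pretrained(repo_id, use_safetensors=True)
print(model.config)
```

If you don't know which model class to use, you can also use AutoModel: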

```python
from diffusers import AutoModel

repo_id = "google/ddpm-cat-256"
model = AutoModel.from_pretrained(repo_id, use_safetensors=True)
print(model.config)
```

The printed model config looks like this:

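Model parameters:

- sample_size: the height and width of the input sample

- in_channels: the number of channels of the input sample

- down_block_types and up_block_types: the types of downsampling and upsampling blocks used to build the UNet architecture

- block_out_channels: the number of output channels of each downsampling block; the same values, in reverse order, give the input channels of the upsampling blocks

- layers_per_block: the number of ResNet blocks in each UNet block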
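To see how these parameters fit together, the sketch below computes the spatial resolution each down block works at for the configuration used in the training section of this article. It assumes, as in Diffusers' UNet2DModel, that every down block except the last ends with a 2× downsampler; down_block_resolutions is a hypothetical helper, not a Diffusers function.

```python
def down_block_resolutions(sample_size, down_block_types):
    """Spatial size each down block operates at.

    ASSUMPTION: as in Diffusers' UNet2DModel, every down block except the
    final one ends with a 2x downsampler.
    """
    sizes = []
    size = sample_size
    for i, _ in enumerate(down_block_types):
        sizes.append(size)
        if i < len(down_block_types) - 1:
            size //= 2
    return sizes


# Configuration from the training section of this article.
down_block_types = ("DownBlock2D",) * 4 + ("AttnDownBlock2D", "DownBlock2D")
block_out_channels = (128, 128, 256, 256, 512, 512)

resolutions = down_block_resolutions(128, down_block_types)
for block, size, channels in zip(down_block_types, resolutions, block_out_channels):
    print(f"{block:<16} {size:>3}x{size:<3} -> {channels} channels")
```

Under this assumption, the self-attention block runs at 8×8, where attention over all positions is cheap.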

无条件降噪生图案例,可以看到上面的官方示例,这里注意了解下,有条件的生图过程

ini

from PIL import Image

import torch

from transformers import CLIPTextModel, CLIPTokenizer

from diffusers import AutoencoderKL, UNet2DConditionModel, UniPCMultistepScheduler

from tqdm.auto import tqdm

device = "cuda" if torch.cuda.is_available() else "cpu"

model_name = "CompVis/stable-diffusion-v1-4"

# vae

vae = AutoencoderKL.from_pretrained(model_name, subfolder="vae", use_safetensors=True)

# 分词器

tokenizer = CLIPTokenizer.from_pretrained(model_name, subfolder="tokenizer")

# 文本编码器

text_encoder = CLIPTextModel.from_pretrained(model_name, subfolder="text_encoder", use_safetensors=True)

# 模型

unet = UNet2DConditionModel.from_pretrained(model_name, subfolder="unet", use_safetensors=True)

# 调度器

scheduler = UniPCMultistepScheduler.from_pretrained(model_name, subfolder="scheduler")

# 加速推理

vae.to(device)

text_encoder.to(device)

unet.to(device)

prompt = ["a photograph of an astronaut riding a horse"]

height = 512 # default height of Stable Diffusion

width = 512 # default width of Stable Diffusion

num_inference_steps = 25 # Number of denoising steps

guidance_scale = 7.5 # Scale for classifier-free guidance

# Seed generator to create the initial latent noise

generator = torch.Generator(device).manual_seed(0) # Ensure generator is on the correct device

batch_size = len(prompt)

# 对提示词进行分词

text_input = tokenizer(

prompt, padding="max_length", max_length=tokenizer.model_max_length, truncation=True, return_tensors="pt"

)

with torch.no_grad():

text_embeddings = text_encoder(text_input.input_ids.to(device))[0]

max_length = text_input.input_ids.shape[-1]

# 填充标记的嵌入

uncond_input = tokenizer([""] * batch_size, padding="max_length", max_length=max_length, return_tensors="pt")

uncond_embeddings = text_encoder(uncond_input.input_ids.to(device))[0]

# 将条件嵌入和无条件嵌入连接成一个批次,以避免进行两次前向传递

text_embeddings = torch.cat([uncond_embeddings, text_embeddings])

print(device)

# 之所以被"8"整除,因为vae模型有3次下采样

latents = torch.randn(

(batch_size, unet.config.in_channels, height // 8, width // 8),

generator=generator,

device=device,

)

# 去噪图像

latents = latents * scheduler.init_noise_sigma

# 循环时间步长

scheduler.set_timesteps(num_inference_steps)

for t in tqdm(scheduler.timesteps):

# expand the latents if we are doing classifier-free guidance to avoid doing two forward passes.

latent_model_input = torch.cat([latents] * 2)

latent_model_input = scheduler.scale_model_input(latent_model_input, timestep=t)

# predict the noise residual

with torch.no_grad():

noise_pred = unet(latent_model_input, t, encoder_hidden_states=text_embeddings).sample

# perform guidance

noise_pred_uncond, noise_pred_text = noise_pred.chunk(2)

noise_pred = noise_pred_uncond + guidance_scale * (noise_pred_text - noise_pred_uncond)

# compute the previous noisy sample x_t -> x_t-1

latents = scheduler.step(noise_pred, t, latents).prev_sample

# 图片解码

latents = 1 / 0.18215 * latents

with torch.no_grad():

image = vae.decode(latents).sample

image = (image / 2 + 0.5).clamp(0, 1).squeeze()

image = (image.permute(1, 2, 0) * 255).to(torch.uint8).cpu().numpy()

image = Image.fromarray(image)

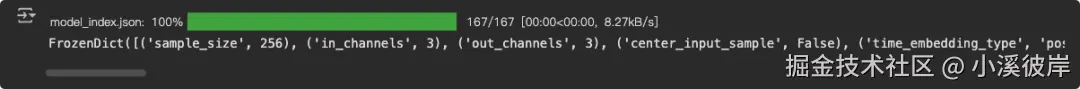

image示例中CLIPTextModel是文本编码器,用于把toknes编码为向量,用来控制扩散模型的生成。CLIPTextModel需要接收通过CLIPTokenizer分词器处理的提示词文本,这就和我们上期了解到的transformers联系起来了。这是手动实现有条件生图的过程,通过Pipeline将会大大简化生图流程,最后看下生图效果:

ControlNetModel

ControlNetModel gives you more control over text-to-image generation by conditioning the model on extra inputs such as edge maps, depth maps, segmentation maps, and pose keypoints.

```python
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel

# Load a ControlNet checkpoint from a single file
url = "https://huggingface.co/lllyasviel/ControlNet-v1-1/blob/main/control_v11p_sd15_canny.pth"  # can also be a local path
controlnet = ControlNetModel.from_single_file(url)

# Load the base Stable Diffusion checkpoint and attach the ControlNet
url = "https://huggingface.co/stable-diffusion-v1-5/stable-diffusion-v1-5/blob/main/v1-5-pruned.safetensors"  # can also be a local path
pipeline = StableDiffusionControlNetPipeline.from_single_file(url, controlnet=controlnet)
```

Schedulers

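If you have used Stable Diffusion (for example, through a WebUI), you have probably heard the term "sampler"; a scheduler in Diffusers is what Stable Diffusion calls a sampler. The scheduler is a very important component: different schedulers make different trade-offs between denoising speed and quality. The default scheduler comes from each model's configuration; for Stable Diffusion v1.5 it is PNDMScheduler.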
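Because the names differ, a small lookup table can help translate WebUI sampler names into Diffusers schedulers. The table below covers only the four schedulers used in this article and reflects the correspondence commonly given in the Diffusers scheduler docs; class names are kept as strings so the snippet runs without Diffusers installed, and scheduler_for is a hypothetical helper.

```python
# WebUI sampler name -> (Diffusers scheduler class name, extra from_config kwargs).
# Only the four schedulers used in this article; strings keep the snippet
# runnable without Diffusers installed.
SAMPLER_TO_SCHEDULER = {
    "Euler": ("EulerDiscreteScheduler", {}),
    "Euler a": ("EulerAncestralDiscreteScheduler", {}),
    "DPM++ 2M": ("DPMSolverMultistepScheduler", {}),
    "DPM++ 2M Karras": ("DPMSolverMultistepScheduler", {"use_karras_sigmas": True}),
}


def scheduler_for(sampler_name):
    """Return (class_name, kwargs) for a WebUI sampler name."""
    try:
        return SAMPLER_TO_SCHEDULER[sampler_name]
    except KeyError:
        raise ValueError(f"no mapping for sampler {sampler_name!r}") from None


for name in SAMPLER_TO_SCHEDULER:
    print(f"{name:<16} -> {scheduler_for(name)}")
```

With Diffusers installed, an entry could then be applied as getattr(diffusers, cls_name).from_config(pipeline.scheduler.config, **kwargs).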

Euler scheduler

```python
import torch
from diffusers import DiffusionPipeline, EulerDiscreteScheduler

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline; the pretrained model is downloaded on first use
pipeline = DiffusionPipeline.from_pretrained("sd-dreambooth-library/disco-diffusion-style")

# Swap in the scheduler
pipeline.scheduler = EulerDiscreteScheduler.from_config(pipeline.scheduler.config)
pipeline.to(device)

# Prompts work best in English; Chinese prompts give poor results
prompt = "A cyberpunk-style building"

# Generate the image
image = pipeline(
    prompt,
    num_inference_steps=50,  # number of denoising steps
    guidance_scale=7.5,      # guidance scale
).images[0]

# Show the image
display(image)
```

Euler a (Euler Ancestral) scheduler

```python
import torch
from diffusers import DiffusionPipeline, EulerAncestralDiscreteScheduler

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline; the pretrained model is downloaded on first use
pipeline = DiffusionPipeline.from_pretrained("sd-dreambooth-library/disco-diffusion-style")

# Swap in the scheduler
pipeline.scheduler = EulerAncestralDiscreteScheduler.from_config(pipeline.scheduler.config)
pipeline.to(device)

# Prompts work best in English; Chinese prompts give poor results
prompt = "A cyberpunk-style building"

# Generate the image
image = pipeline(
    prompt,
    num_inference_steps=50,  # number of denoising steps
    guidance_scale=7.5,      # guidance scale
).images[0]

# Show the image
display(image)
```

DPM++ 2M scheduler

```python
import torch
from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline; the pretrained model is downloaded on first use
pipeline = DiffusionPipeline.from_pretrained("sd-dreambooth-library/disco-diffusion-style")

# Swap in the scheduler
pipeline.scheduler = DPMSolverMultistepScheduler.from_config(pipeline.scheduler.config)
pipeline.to(device)

# Prompts work best in English; Chinese prompts give poor results
prompt = "A cyberpunk-style building"

# Generate the image
image = pipeline(
    prompt,
    num_inference_steps=50,  # number of denoising steps
    guidance_scale=7.5,      # guidance scale
).images[0]

# Show the image
display(image)
```

DPM++ 2M Karras scheduler (recommended)

```python
import torch
from diffusers import DiffusionPipeline, DPMSolverMultistepScheduler

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the pipeline; the pretrained model is downloaded on first use
pipeline = DiffusionPipeline.from_pretrained("sd-dreambooth-library/disco-diffusion-style")

# Swap in the scheduler, using the Karras noise schedule
pipeline.scheduler = DPMSolverMultistepScheduler.from_config(pipeline.scheduler.config, use_karras_sigmas=True)
pipeline.to(device)

# Prompts work best in English; Chinese prompts give poor results
prompt = "A cyberpunk-style building"

# Generate the image
image = pipeline(
    prompt,
    num_inference_steps=50,  # number of denoising steps
    guidance_scale=7.5,      # guidance scale
).images[0]

# Show the image
display(image)
```
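use_karras_sigmas=True changes only how the noise levels (sigmas) are spaced across the steps: it uses the schedule from Karras et al. (2022), which interpolates linearly in sigma^(1/rho) space and therefore spends more of the steps at low noise levels. A pure-Python sketch of that formula, assuming rho = 7 and an illustrative sigma range of about 0.03 to 14.6 (roughly that of Stable Diffusion v1.x):

```python
def karras_sigmas(n, sigma_min, sigma_max, rho=7.0):
    """Noise levels from Karras et al. (2022), from sigma_max down to sigma_min."""
    min_inv_rho = sigma_min ** (1 / rho)
    max_inv_rho = sigma_max ** (1 / rho)
    # Interpolate linearly in sigma**(1/rho) space, then raise back to the power rho.
    return [
        (max_inv_rho + i / (n - 1) * (min_inv_rho - max_inv_rho)) ** rho
        for i in range(n)
    ]


# ASSUMPTION: illustrative sigma range, roughly that of Stable Diffusion v1.x.
sigmas = karras_sigmas(10, sigma_min=0.03, sigma_max=14.6)
print([round(s, 3) for s in sigmas])
```

The middle of this schedule sits far below the midpoint of the range, which is the "more steps at low noise" behavior.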

Training a diffusion model

Step 1: Training configuration

Create a TrainingConfig to hold the training hyperparameters:

```python
from dataclasses import dataclass

@dataclass
class TrainingConfig:
    image_size = 128  # the generated image resolution
    train_batch_size = 16
    eval_batch_size = 16  # how many images to sample during evaluation
    num_epochs = 50
    gradient_accumulation_steps = 1
    learning_rate = 1e-4
    lr_warmup_steps = 500
    save_image_epochs = 10
    save_model_epochs = 30
    mixed_precision = "fp16"  # `no` for float32, `fp16` for automatic mixed precision
    output_dir = "ddpm-butterflies-128"  # the model name locally and on the HF Hub

    push_to_hub = True  # whether to upload the saved model to the HF Hub (requires being logged in; set to False to train locally only)
    hub_model_id = "<your-username>/<my-awesome-model>"  # the name of the repository to create on the HF Hub
    hub_private_repo = None
    overwrite_output_dir = True  # overwrite the old model when re-running the notebook
    seed = 0

config = TrainingConfig()
```

Step 2: Load the dataset and prepare the training data

Load the training dataset:

```python
from datasets import load_dataset

config.dataset_name = "huggan/smithsonian_butterflies_subset"
dataset = load_dataset(config.dataset_name, split="train")
```

Visualize a few images from the dataset:

```python
import matplotlib.pyplot as plt

fig, axs = plt.subplots(1, 4, figsize=(16, 4))
for i, image in enumerate(dataset[:4]["image"]):
    axs[i].imshow(image)
    axs[i].set_axis_off()
fig.show()
```

Resize and normalize the images:
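In the torchvision preprocessing below, two steps change pixel values: ToTensor() scales 8-bit pixels to [0, 1], and Normalize([0.5], [0.5]) computes (x - 0.5) / 0.5, mapping them to [-1, 1]. The display code in this article reverses this with (x + 1) * 127.5 (it truncates to uint8, whereas this sketch rounds). A pure-Python sketch of both directions, with hypothetical helper names and no torchvision:

```python
def to_unit_range(pixel):
    """ToTensor(): 8-bit pixel value (0..255) -> float in [0, 1]."""
    return pixel / 255


def normalize(x, mean=0.5, std=0.5):
    """Normalize([0.5], [0.5]): (x - mean) / std, mapping [0, 1] to [-1, 1]."""
    return (x - mean) / std


def to_display_pixel(x):
    """(x + 1) * 127.5, clamped to 0..255 and rounded to an 8-bit value."""
    return round(min(max((x + 1.0) * 127.5, 0.0), 255.0))


for raw in (0, 128, 255):
    model_input = normalize(to_unit_range(raw))
    print(raw, round(model_input, 4), to_display_pixel(model_input))
```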

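```python
from torchvision import transforms

preprocess = transforms.Compose(
    [
        transforms.Resize((config.image_size, config.image_size)),  # resize to 128x128
        transforms.RandomHorizontalFlip(),  # random flip for data augmentation
        transforms.ToTensor(),  # PIL image -> tensor in [0, 1]
        transforms.Normalize([0.5], [0.5]),  # [0, 1] -> [-1, 1]
    ]
)
```

Convert the images to RGB and apply the preprocessing: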

```python
def transform(examples):
    images = [preprocess(image.convert("RGB")) for image in examples["image"]]
    return {"images": images}

dataset.set_transform(transform)
```

Visualize the images again to confirm the new size:

ini

import torch

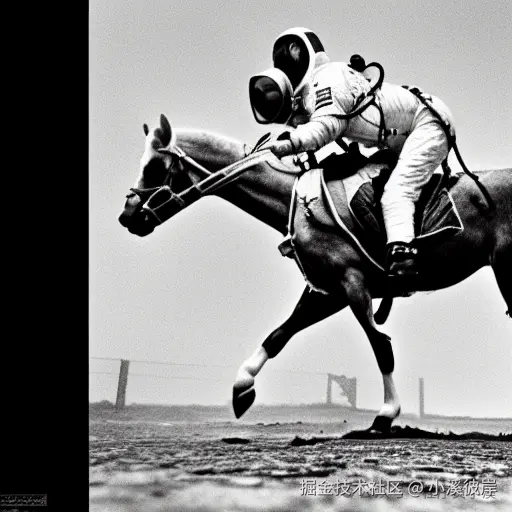

train_dataloader = torch.utils.data.DataLoader(dataset, batch_size=config.train_batch_size, shuffle=True)第3步:创建UNet2DModel

```python
from diffusers import UNet2DModel

model = UNet2DModel(
    sample_size=config.image_size,  # the target image resolution
    in_channels=3,  # the number of input channels, 3 for RGB images
    out_channels=3,  # the number of output channels
    layers_per_block=2,  # how many ResNet layers to use per UNet block
    block_out_channels=(128, 128, 256, 256, 512, 512),  # the number of output channels for each UNet block
    down_block_types=(
        "DownBlock2D",  # a regular ResNet downsampling block
        "DownBlock2D",
        "DownBlock2D",
        "DownBlock2D",
        "AttnDownBlock2D",  # a ResNet downsampling block with spatial self-attention
        "DownBlock2D",
    ),
    up_block_types=(
        "UpBlock2D",  # a regular ResNet upsampling block
        "AttnUpBlock2D",  # a ResNet upsampling block with spatial self-attention
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
        "UpBlock2D",
    ),
)

# Sanity check: the output shape should match the input shape
sample_image = dataset[0]["images"].unsqueeze(0)
print("Input shape:", sample_image.shape)
print("Output shape:", model(sample_image, timestep=0).sample.shape)
```

Step 4: Create a scheduler

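```python
import torch
from PIL import Image
from diffusers import DDPMScheduler

# Scheduler with 1000 training timesteps
noise_scheduler = DDPMScheduler(num_train_timesteps=1000)
noise = torch.randn(sample_image.shape)
timesteps = torch.LongTensor([50])

# The scheduler adds the amount of noise that corresponds to the given timestep
noisy_image = noise_scheduler.add_noise(sample_image, noise, timesteps)

# Look at the noisy image (clamped to [-1, 1] so the uint8 conversion doesn't wrap around)
Image.fromarray(((noisy_image.clamp(-1, 1).permute(0, 2, 3, 1) + 1.0) * 127.5).type(torch.uint8).numpy()[0])
```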
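Under the hood, add_noise applies the closed-form forward diffusion step x_t = sqrt(ᾱ_t) · x_0 + sqrt(1 - ᾱ_t) · ε, where ᾱ_t is the cumulative product of (1 - β) up to step t. The sketch below recomputes it in plain Python, assuming DDPMScheduler's default linear β schedule from 0.0001 to 0.02 over 1000 steps; the inputs are toy numbers and the helper names are hypothetical.

```python
import math


def linear_alphas_cumprod(num_train_timesteps=1000, beta_start=1e-4, beta_end=0.02):
    """abar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alphas_cumprod = []
    running = 1.0
    for i in range(num_train_timesteps):
        beta = beta_start + (beta_end - beta_start) * i / (num_train_timesteps - 1)
        running *= 1.0 - beta
        alphas_cumprod.append(running)
    return alphas_cumprod


def add_noise(x0, noise, t, alphas_cumprod):
    """Forward diffusion: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise."""
    abar = alphas_cumprod[t]
    return [math.sqrt(abar) * x + math.sqrt(1.0 - abar) * n for x, n in zip(x0, noise)]


alphas_cumprod = linear_alphas_cumprod()
x0 = [0.5, -0.5, 1.0]      # toy "clean image" values in [-1, 1]
noise = [0.1, 0.3, -0.2]   # toy noise sample
for t in (0, 50, 500, 999):
    noisy = add_noise(x0, noise, t, alphas_cumprod)
    print(t, round(alphas_cumprod[t], 5), [round(v, 3) for v in noisy])
```

At t = 0 the output is almost the clean input; by t = 999 it is almost pure noise, which is why the model can learn to denoise from any timestep.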

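Compute the training loss:

```python
import torch.nn.functional as F

# The model predicts the added noise; the loss is the MSE against the true noise
noise_pred = model(noisy_image, timesteps).sample
loss = F.mse_loss(noise_pred, noise)
```

Step 5: Train the model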

Create the optimizer and the learning-rate scheduler:
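get_cosine_schedule_with_warmup scales the base learning rate by a factor that ramps linearly from 0 to 1 during the warmup steps and then follows half a cosine wave down to 0. The sketch reimplements that factor in plain Python for illustration (cosine_with_warmup_factor is a hypothetical name); the 63 batches per epoch (about 1,000 images at batch size 16) is an assumption, not a value from the article.

```python
import math


def cosine_with_warmup_factor(step, num_warmup_steps, num_training_steps, num_cycles=0.5):
    """Multiplier applied to the base learning rate at a given optimizer step."""
    if step < num_warmup_steps:
        # Linear warmup from 0 to 1
        return step / max(1, num_warmup_steps)
    # Cosine decay from 1 to 0 over the remaining steps (half a cosine wave)
    progress = (step - num_warmup_steps) / max(1, num_training_steps - num_warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * num_cycles * 2.0 * progress)))


# learning_rate and lr_warmup_steps as in TrainingConfig.
# ASSUMPTION: 63 batches per epoch (about 1,000 images at batch size 16) x 50 epochs.
base_lr = 1e-4
warmup_steps = 500
total_steps = 63 * 50

for step in (0, 250, 500, 1825, total_steps):
    lr = base_lr * cosine_with_warmup_factor(step, warmup_steps, total_steps)
    print(f"step {step:>4}: lr = {lr:.2e}")
```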

```python
import torch
from diffusers.optimization import get_cosine_schedule_with_warmup

optimizer = torch.optim.AdamW(model.parameters(), lr=config.learning_rate)
lr_scheduler = get_cosine_schedule_with_warmup(
    optimizer=optimizer,
    num_warmup_steps=config.lr_warmup_steps,
    num_training_steps=(len(train_dataloader) * config.num_epochs),
)
```

Create an evaluation function that samples and saves images during training:

```python
import os

import torch
from diffusers import DDPMPipeline
from diffusers.utils import make_image_grid

def evaluate(config, epoch, pipeline):
    # Sample some images from random noise (this is the backward diffusion process).
    # The default pipeline output type is `List[PIL.Image]`
    images = pipeline(
        batch_size=config.eval_batch_size,
        generator=torch.Generator(device="cpu").manual_seed(config.seed),  # Use a separate torch generator to avoid rewinding the random state of the main training loop
    ).images

    # Make a grid out of the images
    image_grid = make_image_grid(images, rows=4, cols=4)

    # Save the images
    test_dir = os.path.join(config.output_dir, "samples")
    os.makedirs(test_dir, exist_ok=True)
    image_grid.save(f"{test_dir}/{epoch:04d}.png")
```

Then define the training loop:

```python
import os
from pathlib import Path

import torch
import torch.nn.functional as F
from accelerate import Accelerator
from diffusers import DDPMPipeline
from huggingface_hub import create_repo, upload_folder
from tqdm.auto import tqdm

def train_loop(config, model, noise_scheduler, optimizer, train_dataloader, lr_scheduler):
    # Initialize accelerator and tensorboard logging
    accelerator = Accelerator(
        mixed_precision=config.mixed_precision,
        gradient_accumulation_steps=config.gradient_accumulation_steps,
        log_with="tensorboard",
        project_dir=os.path.join(config.output_dir, "logs"),
    )
    if accelerator.is_main_process:
        if config.output_dir is not None:
            os.makedirs(config.output_dir, exist_ok=True)
        if config.push_to_hub:
            repo_id = create_repo(
                repo_id=config.hub_model_id or Path(config.output_dir).name, exist_ok=True
            ).repo_id
        accelerator.init_trackers("train_example")

    # Prepare everything
    # There is no specific order to remember, you just need to unpack the
    # objects in the same order you gave them to the prepare method.
    model, optimizer, train_dataloader, lr_scheduler = accelerator.prepare(
        model, optimizer, train_dataloader, lr_scheduler
    )

    global_step = 0

    # Now you train the model
    for epoch in range(config.num_epochs):
        progress_bar = tqdm(total=len(train_dataloader), disable=not accelerator.is_local_main_process)
        progress_bar.set_description(f"Epoch {epoch}")

        for step, batch in enumerate(train_dataloader):
            clean_images = batch["images"]
            # Sample noise to add to the images
            noise = torch.randn(clean_images.shape, device=clean_images.device)
            bs = clean_images.shape[0]

            # Sample a random timestep for each image
            timesteps = torch.randint(
                0, noise_scheduler.config.num_train_timesteps, (bs,), device=clean_images.device,
                dtype=torch.int64
            )

            # Add noise to the clean images according to the noise magnitude at each timestep
            # (this is the forward diffusion process)
            noisy_images = noise_scheduler.add_noise(clean_images, noise, timesteps)

            with accelerator.accumulate(model):
                # Predict the noise residual
                noise_pred = model(noisy_images, timesteps, return_dict=False)[0]
                loss = F.mse_loss(noise_pred, noise)
                accelerator.backward(loss)

                if accelerator.sync_gradients:
                    accelerator.clip_grad_norm_(model.parameters(), 1.0)
                optimizer.step()
                lr_scheduler.step()
                optimizer.zero_grad()

            progress_bar.update(1)
            logs = {"loss": loss.detach().item(), "lr": lr_scheduler.get_last_lr()[0], "step": global_step}
            progress_bar.set_postfix(**logs)
            accelerator.log(logs, step=global_step)
            global_step += 1

        # After each epoch you optionally sample some demo images with evaluate() and save the model
        if accelerator.is_main_process:
            pipeline = DDPMPipeline(unet=accelerator.unwrap_model(model), scheduler=noise_scheduler)

            if (epoch + 1) % config.save_image_epochs == 0 or epoch == config.num_epochs - 1:
                evaluate(config, epoch, pipeline)

            if (epoch + 1) % config.save_model_epochs == 0 or epoch == config.num_epochs - 1:
                # Save locally first so the upload below has a model to push
                pipeline.save_pretrained(config.output_dir)
                if config.push_to_hub:
                    upload_folder(
                        repo_id=repo_id,
                        folder_path=config.output_dir,
                        commit_message=f"Epoch {epoch}",
                        ignore_patterns=["step_*", "epoch_*"],
                    )
```

Start training

```python
from accelerate import notebook_launcher

args = (config, model, noise_scheduler, optimizer, train_dataloader, lr_scheduler)
notebook_launcher(train_loop, args, num_processes=1)
```

Step 6: Check the results

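```python
import glob

from PIL import Image

# Show the most recent grid of sample images saved by evaluate()
sample_images = sorted(glob.glob(f"{config.output_dir}/samples/*.png"))
Image.open(sample_images[-1])
```

Training for 50 epochs takes too long, so in my test I trained for only 5 epochs; here is the result after those 5 epochs.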


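Note

See the original article: [Hugging Face] How to use Hugging Face Diffusers

This article is synced from my WeChat official account "程序员小溪"; this copy is only a mirror. For the latest posts, please follow the account, where I share my learning notes from time to time.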