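Contents

- I. Video Input Basics
  - [1. VIN camera driver framework](#1. VIN camera driver framework)
  - [2. Camera pipeline framework](#2. Camera pipeline framework)
  - [3. Video input components](#3. Video input components)
    - [3.1 ISP](#3.1 ISP)
    - [3.2 VIPP](#3.2 VIPP)
    - [3.3 Virtual channels](#3.3 Virtual channels)
  - [4. Internal structure of the VI component](#4. Internal structure of the VI component)
  - [5. Allwinner video input component examples](#5. Allwinner video input component examples)
    - [5.1 Opening and closing the camera](#5.1 Opening and closing the camera)
    - [5.2 Capturing and saving camera data](#5.2 Capturing and saving camera data)
- II. Audio/Video Synchronization: the Media Clock
  - [1. Media synchronization on the Allwinner platform](#1. Media synchronization on the Allwinner platform)
- III. Video Output Basics
  - [1. LCD driver](#1. LCD driver)
  - [2. Video output block diagram](#2. Video output block diagram)
  - [3. VO component: Display Engine (DE)](#3. VO component: Display Engine (DE))
  - [4. Allwinner video output component examples](#4. Allwinner video output component examples)
    - [4.1 Reading a video file and displaying it in real time](#4.1 Reading a video file and displaying it in real time)
    - [4.2 Binding the camera for live display](#4.2 Binding the camera for live display)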

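I. Video Input Basics

1. VIN camera driver framework

The V4L2 driver internals are not covered in depth here; they may be analyzed in more detail later.

In use, the VIN camera driver can be viewed simply as a VIN module + device modules + an AF driver + a flash control module.

- vin.c implements the driver's main functionality: registration/unregistration, parameter reading, the upward interface to V4L2, the downward interface to each device, interrupt handling, and buffer allocation and switching;
- the modules/sensor folder holds the device-level implementation of each sensor, typically power on/off, initialization, and resolution switching. A YUV sensor implements most of the ioctl commands defined by V4L2 itself, whereas for a raw sensor most ioctl commands are implemented in the VIN layer by calling the ISP library, and only a few, such as exposure/gain adjustment, pass through the VIN layer down to the actual device layer;
- the modules/actuator folder contains the drivers for the various VCMs (voice coil motors);
- the modules/flash folder contains the flash control interface implementation;
- vin-csi and vin-mipi are the control files for the CSI and MIPI interfaces;
- the vin-isp folder contains the files that operate the ISP library;
- the vin-video folder mainly contains the video device operation files.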

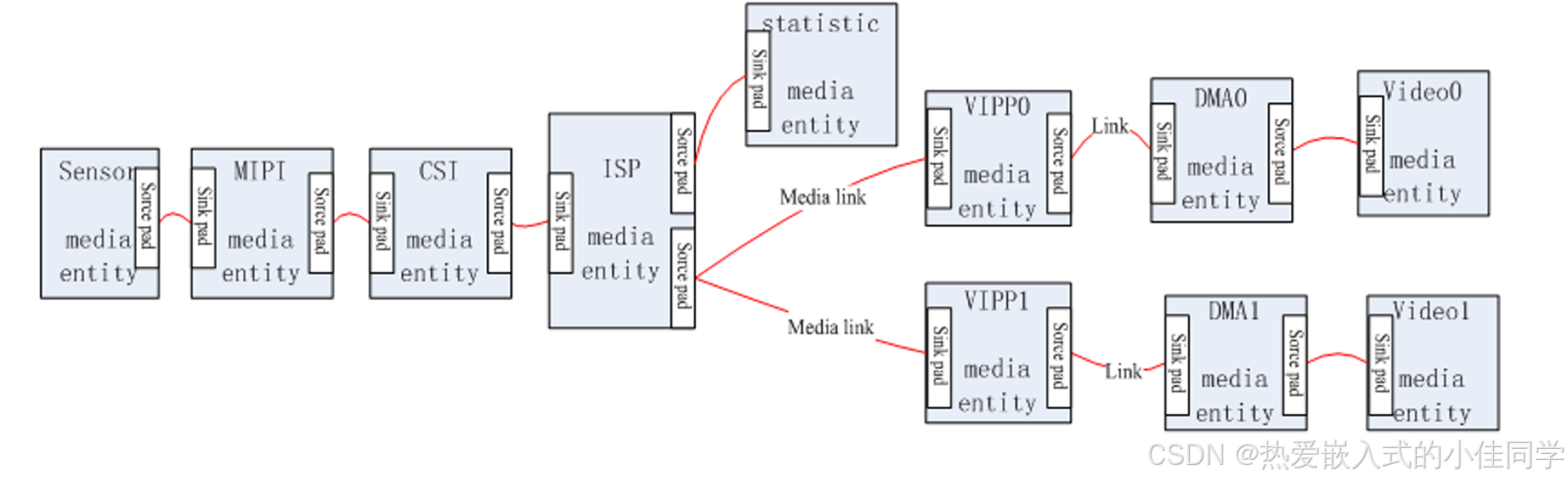

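2. Camera pipeline framework

- VIN supports flexible configurations: single or dual input, dual ISP, and multi-path output
- The media framework is introduced to manage pipelines
- libisp is moved to user space to resolve the GPL issue
- The statistics buffer is split out as a separate v4l2 subdev
- The scaler (VIPP) module is split out as a separate v4l2 subdev
- Video buffers are switched to the mplane (multi-planar) mode, which makes fetching frames from user space more convenient
- v4l2-event is used for event management
- The newer v4l2-controls features are adopted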

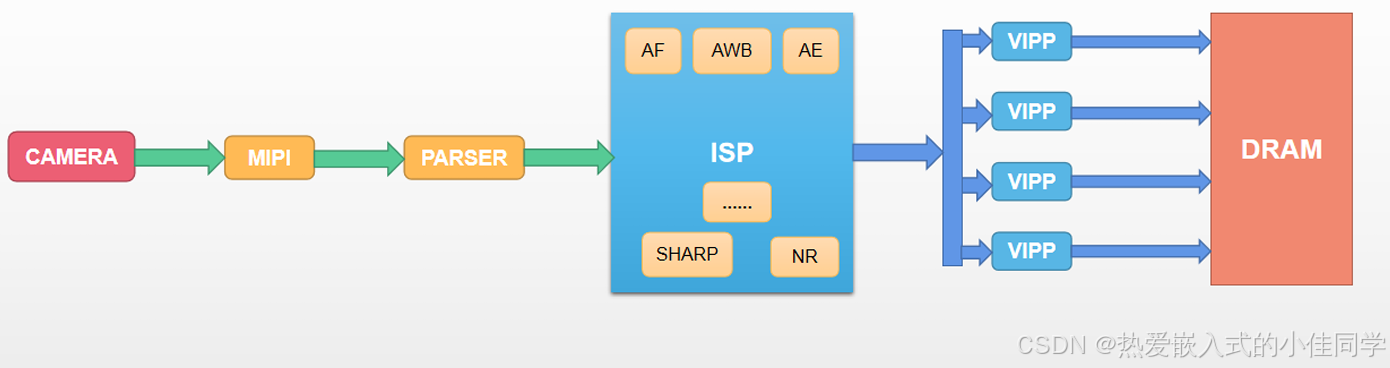

3. Video input components

3.1 ISP

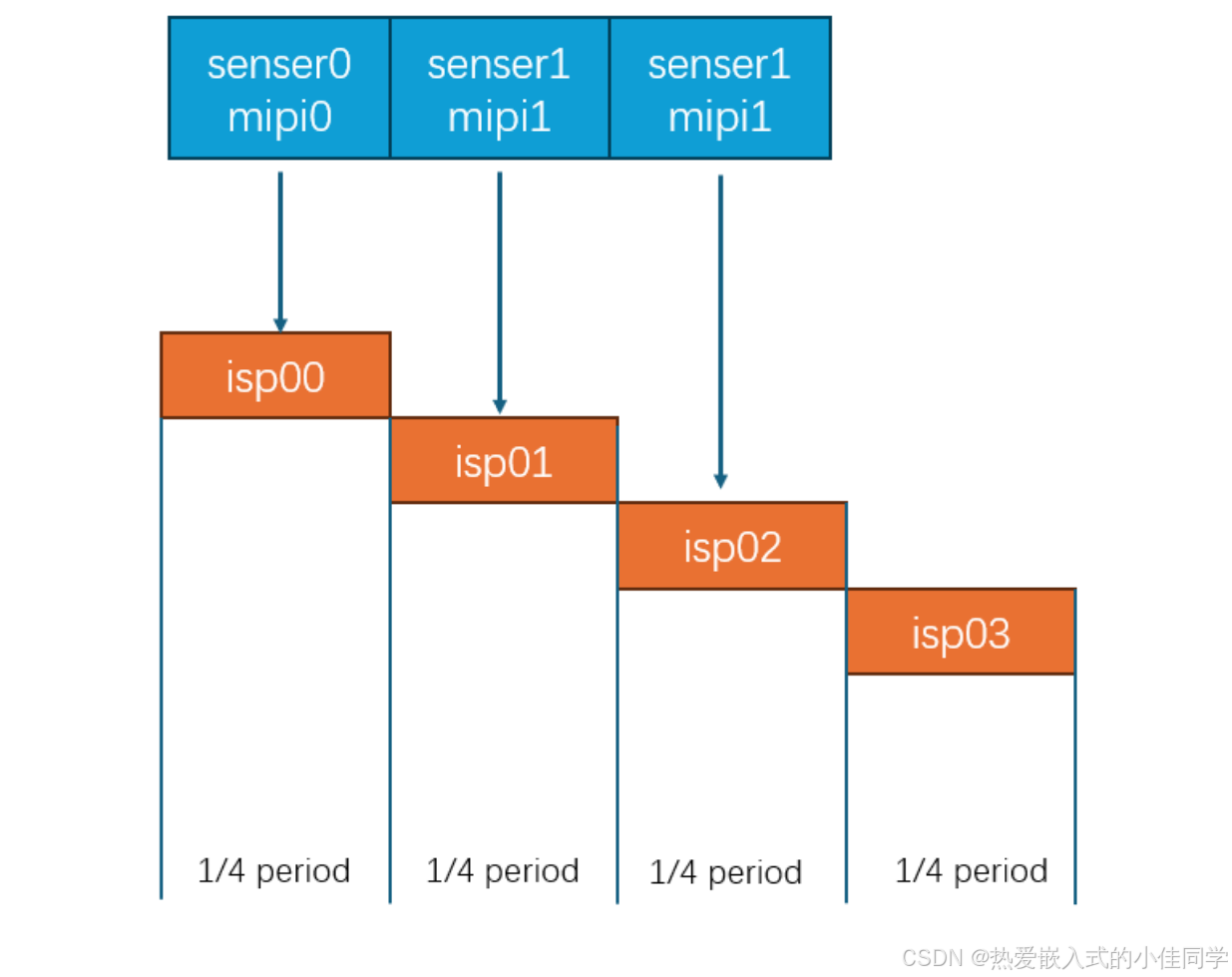

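Taking Allwinner as an example: on the Allwinner V853, the ISP component supports time-division multiplexing. The ISP time-division multiplexing cycle is shown in the figure below:

isp00/isp01/isp02/isp03 each represent one quarter of a cycle. Every cycle is divided into four equal slots, and when the cycle reaches a given slot, the ISP connects to one camera and captures its image data. Time-division multiplexing thus lets the on-chip ISP hardware serve up to four cameras concurrently.

Notes:

- The V853 supports two MIPI cameras plus one parallel-interface camera, so in practice time-division multiplexing has at most three sources.
- Online encoding cannot use time-division multiplexing: it has strict real-time requirements, and time-division multiplexing can cause discontinuous bandwidth, accumulated latency, and similar problems.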
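The four-slot cycle can be illustrated with a toy model. This is only a sketch of the arithmetic described above, not the driver's scheduler: `ISP_SLOTS_PER_CYCLE`, `isp_slot_for_tick`, and `isp_source_for_slot` are hypothetical names, and the rule that slot k serves source k is an assumption made for illustration.

```c
#include <assert.h>

/* Hypothetical model: one ISP cycle is split into four equal slots
 * (isp00..isp03 in the text). */
#define ISP_SLOTS_PER_CYCLE 4

/* Which slot of the cycle a given time tick falls into. */
int isp_slot_for_tick(unsigned int tick)
{
    return (int)(tick % ISP_SLOTS_PER_CYCLE);
}

/* Which camera source a slot serves, or -1 if the slot is idle.
 * Assumption: slot k serves source k. num_sources would be 3 on a
 * board with two MIPI cameras plus one parallel-interface camera. */
int isp_source_for_slot(int slot, int num_sources)
{
    assert(slot >= 0 && slot < ISP_SLOTS_PER_CYCLE);
    assert(num_sources >= 0 && num_sources <= ISP_SLOTS_PER_CYCLE);
    return (slot < num_sources) ? slot : -1;
}
```

With three sources, the fourth slot of every cycle simply stays idle in this model.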

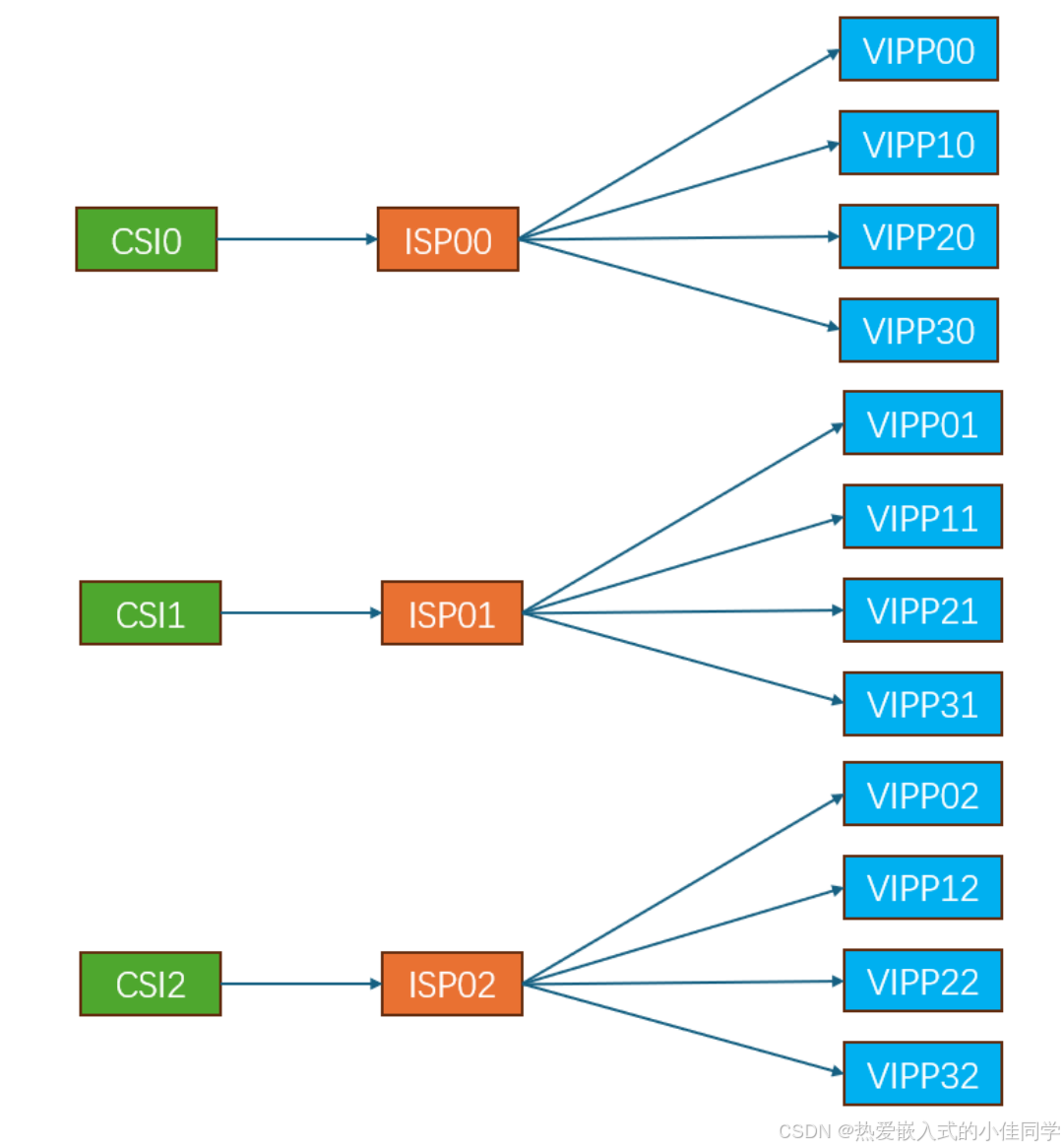

3.2 VIPP

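VIPP stands for Video Input Post Processor. It is a hardware block that post-processes image sensor data: downscaling, watermark overlay, bad-pixel removal, enhancement, and so on. It supports different image data formats, such as Bayer raw data or YUV.

The VIPP hardware has four VIPP channels, which, like the ISP, can also be time-division multiplexed. The application layer fetches data from a VIPP channel. For example, in VIPP10 in the figure above, 1 is the VIPP hardware channel number and 0 is the time-division multiplexing index.

Note: because the hardware has only four VIPPs, an application can obtain at most four streams with different resolutions.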
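The naming rule for nodes such as VIPP10 can be written down as a small helper. This is a minimal sketch, assuming both indices are single decimal digits in the range 0 to 3; `vipp_node_parse` is a hypothetical helper and not part of the MPP API.

```c
#include <ctype.h>
#include <string.h>

/* Hypothetical helper: split a node name such as "VIPP10" into the
 * hardware channel number (first digit) and the time-division index
 * (second digit). Returns 0 on success, -1 on a malformed name. */
int vipp_node_parse(const char *name, int *hw_chn, int *tdm_idx)
{
    if (name == NULL || hw_chn == NULL || tdm_idx == NULL)
        return -1;
    if (strlen(name) != 6 || strncmp(name, "VIPP", 4) != 0)
        return -1;
    if (!isdigit((unsigned char)name[4]) || !isdigit((unsigned char)name[5]))
        return -1;
    /* Assumption: four hardware channels, four time-division slots each. */
    if (name[4] > '3' || name[5] > '3')
        return -1;
    *hw_chn = name[4] - '0';
    *tdm_idx = name[5] - '0';
    return 0;
}
```

The output parameters are written only when the name is valid, so a caller can keep its previous values on failure.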

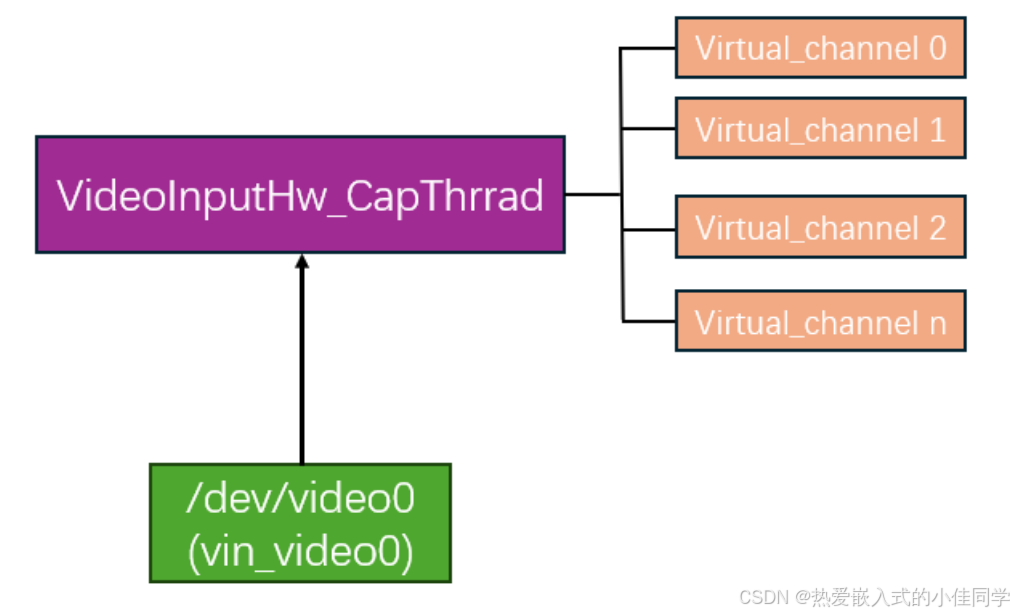

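3.3 Virtual channels

A virtual channel (VC) is a communication path used to manage and bind different functional modules. In MPP, audio and video are processed independently; virtual channels were introduced to avoid confusion and mixed-up channels.

Virtual channels allow different functional modules to be bound together so that the video processing flow is easier to control and manage. For example, virtual channels can connect video input (VI), video processing (VPSS), video encoding (VENC), and other modules into a complete video processing pipeline.

Several virtual channels are created on top of the VIPP hardware described above. In theory their number is unlimited, so many virtual channels can be created to deliver frames to different consumers. This works because a virtual channel is essentially a reference count on each frame in the underlying VIPP channel: when a virtual channel obtains a frame from the VIPP channel, the frame's reference count is incremented by 1, and when it returns the frame, the count is decremented by 1. Only when the count drops to 0 is the frame actually returned to the V4L2 driver (/dev/video0).

Notes:

- Virtual channels that consume data from the same VIPP channel share the same buffers
- If even one virtual channel fails to return a frame promptly, that frame buffer is not returned to the driver, and the other virtual channels cannot use it either
- If too many frames are held without being returned, the other virtual channels cannot get frames
- Rules for virtual channels: return frames promptly, create a channel when it is needed, and destroy it when finished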
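The reference-counting rule can be sketched in a few lines of C. This is an illustrative model only, not the MPP implementation: `shared_frame_t`, `frame_init`, `frame_get`, and `frame_release` are hypothetical names, and a real implementation would also need locking and an actual re-queue to the driver.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical stand-in for one VIPP frame buffer shared by several
 * virtual channels. */
typedef struct {
    int  ref_count;        /* number of virtual channels holding the frame */
    bool owned_by_driver;  /* true once the buffer is back with V4L2 */
} shared_frame_t;

/* The VIPP channel has just dequeued a new frame from the driver. */
void frame_init(shared_frame_t *f)
{
    f->ref_count = 0;
    f->owned_by_driver = false;
}

/* A virtual channel takes the frame: reference count + 1. */
void frame_get(shared_frame_t *f)
{
    assert(!f->owned_by_driver);
    f->ref_count++;
}

/* A virtual channel returns the frame: reference count - 1.
 * Only the last release hands the buffer back to the driver.
 * Returns true if this call released the buffer to the driver. */
bool frame_release(shared_frame_t *f)
{
    assert(f->ref_count > 0);
    f->ref_count--;
    if (f->ref_count == 0) {
        f->owned_by_driver = true;  /* real code would re-queue the buffer here */
        return true;
    }
    return false;
}
```

If two channels hold the same frame, the first `frame_release` returns false and the buffer stays out of the driver's queue; this is why one slow consumer can starve all the others.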

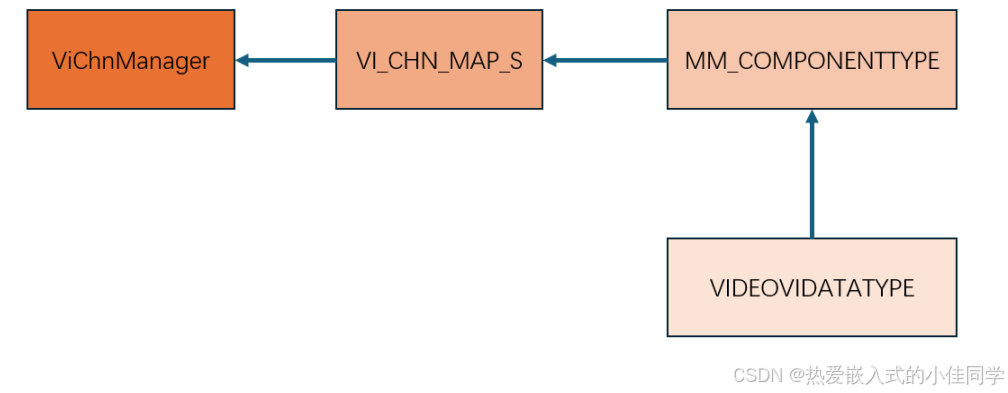

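4. Internal structure of the VI component

Internally, the video input component consists mainly of a channel manager, as shown in the figure below:

ViChnManager is the channel manager. It maintains a linked list of channels (here, virtual channels) and is responsible for managing and scheduling virtual channel resources.

VI_CHN_MAP_S is a structure that defines the attributes and mapping of a video input channel (VI channel). This typically involves the capture hardware configuration, such as resolution, pixel format, and number of buffers.

MM_COMPONENTTYPE generally denotes a multimedia component type, likely an interface or abstract type that defines the common attributes and behavior of multimedia processing components such as codecs, filters, and converters.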

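5. Allwinner video input component examples

5.1 Opening and closing the camera

Example: demonstrates the reset flow of the mpi_vi component. While running, the VI component is stopped and destroyed, then created and run again.

Steps:

- Start MPP and the glog library
- Read the configuration file
- Initialize the ISP
- Create the VI component
- Fetch image frames
- Stop and destroy the VI component
- Exit the MPP platform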
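The create/enable/disable/destroy ordering behind these steps can be modelled with a tiny state machine. This is a sketch that does not call the MPP library: the `demo_*` functions and `chn_state_t` are hypothetical stand-ins that only track which step is legal next.

```c
/* Hypothetical states of one virtual VI channel in this toy model. */
typedef enum { CHN_NONE, CHN_CREATED, CHN_ENABLED } chn_state_t;

/* Each step succeeds (returns 0) only from the expected state, so a
 * skipped or repeated step is reported as -1. */
int demo_create(chn_state_t *s)
{
    if (*s != CHN_NONE) return -1;
    *s = CHN_CREATED;
    return 0;
}

int demo_enable(chn_state_t *s)
{
    if (*s != CHN_CREATED) return -1;
    *s = CHN_ENABLED;
    return 0;
}

int demo_disable(chn_state_t *s)
{
    if (*s != CHN_ENABLED) return -1;
    *s = CHN_CREATED;
    return 0;
}

int demo_destroy(chn_state_t *s)
{
    if (*s != CHN_CREATED) return -1;
    *s = CHN_NONE;
    return 0;
}

/* One reset round: bring the channel up, then tear it down in reverse. */
int demo_reset_round(chn_state_t *s)
{
    if (demo_create(s) != 0) return -1;
    if (demo_enable(s) != 0) return -1;
    /* ... frames would be fetched and released here ... */
    if (demo_disable(s) != 0) return -1;
    return demo_destroy(s);
}
```

In this model a second round only succeeds if the first one ended with destroy; disabling a channel without destroying it leaves it in the created state, and the next create fails.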

Walkthrough of the sample code:

sample_vi_reset.c

c

#include <endian.h>

#include <errno.h>

#include <fcntl.h>

#include <getopt.h>

#include <pthread.h>

#include <signal.h>

#include <stdint.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <time.h>

#include <utils/plat_log.h>

#include "media/mpi_sys.h"

#include "media/mm_comm_vi.h"

#include "media/mpi_vi.h"

#include "media/mpi_isp.h"

#include <mpi_videoformat_conversion.h>

#include <confparser.h>

#include "sample_vi_reset.h"

#include "sample_vi_reset_config.h"

#define TEST_FLIP (0)

// Start one virtual VI channel: create it, set its attributes, then enable it

int hal_virvi_start(VI_DEV ViDev, VI_CHN ViCh, void *pAttr)

{

ERRORTYPE ret = -1;

ret = AW_MPI_VI_CreateVirChn(ViDev, ViCh, pAttr);

if(ret < 0)

{

aloge("Create VI Chn failed,VIDev = %d,VIChn = %d",ViDev,ViCh);

return ret ;

}

ret = AW_MPI_VI_SetVirChnAttr(ViDev, ViCh, pAttr);

if(ret < 0)

{

aloge("Set VI ChnAttr failed,VIDev = %d,VIChn = %d",ViDev,ViCh);

return ret ;

}

ret = AW_MPI_VI_EnableVirChn(ViDev, ViCh);

if(ret < 0)

{

aloge("VI Enable VirChn failed,VIDev = %d,VIChn = %d",ViDev,ViCh);

return ret ;

}

return 0;

}

// Disable and destroy one virtual VI channel

int hal_virvi_end(VI_DEV ViDev, VI_CHN ViCh)

{

int ret = -1;

ret = AW_MPI_VI_DisableVirChn(ViDev, ViCh);

if(ret < 0)

{

aloge("Disable VI Chn failed,VIDev = %d,VIChn = %d",ViDev,ViCh);

return ret ;

}

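// Destroy the channel after disabling it (check mpi_vi.h for the exact spelling in your SDK version)

ret = AW_MPI_VI_DestroyVirChn(ViDev, ViCh);

if(ret < 0)

{

aloge("Destroy VI Chn failed,VIDev = %d,VIChn = %d",ViDev,ViCh);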

return ret ;

}

return 0;

}

// Parse command-line arguments

static int parseCmdLine(SampleViResetContext *pContext, int argc, char** argv)

{

int ret = -1;

alogd("argc=%d", argc);

if (argc != 3)

{

printf("CmdLine param:\n"

"\t-path ./sample_vi_reset.conf\n");

return -1;

}

while (*argv)

{

if (!strcmp(*argv, "-path"))

{

argv++;

if (*argv)

{

ret = 0;

if (strlen(*argv) >= MAX_FILE_PATH_SIZE)

{

aloge("fatal error! file path[%s] too long:!", *argv);

}

if (pContext)

{

strncpy(pContext->mCmdLinePara.mConfigFilePath, *argv, MAX_FILE_PATH_SIZE-1);

pContext->mCmdLinePara.mConfigFilePath[MAX_FILE_PATH_SIZE-1] = '\0';

}

}

}

else if(!strcmp(*argv, "-h"))

{

printf("CmdLine param:\n"

"\t-path ./sample_vi_reset.conf\n");

break;

}

else if (*argv)

{

argv++;

}

}

return ret;

}

// Load the test parameters from the config file

static ERRORTYPE LoadSampleViResetConfig(SampleViResetConfig *pConfig, const char *conf_path)

{

if (NULL == pConfig)

{

aloge("pConfig is NULL!");

return FAILURE;

}

if (NULL == conf_path)

{

aloge("user not set config file!");

return FAILURE;

}

/* Start from default values */

pConfig->mTestCount = 0;

pConfig->mFrameCountStep1 = 300;

pConfig->mbRunIsp = true;

pConfig->mIspDev = 0;

pConfig->mVippStart = 0;

pConfig->mVippEnd = 0;

pConfig->mPicWidth = 1920;

pConfig->mPicHeight = 1080;

pConfig->mSubPicWidth = 640;

pConfig->mSubPicHeight = 360;

pConfig->mFrameRate = 20;

pConfig->mPicFormat = MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420;

CONFPARSER_S stConfParser;

if(0 > createConfParser(conf_path, &stConfParser))

{

aloge("load conf fail!");

return FAILURE;

}

pConfig->mTestCount = GetConfParaInt(&stConfParser, SAMPLE_VI_RESET_TEST_COUNT, 0);

alogd("mTestCount=%d", pConfig->mTestCount);

pConfig->mVippStart = GetConfParaInt(&stConfParser, SAMPLE_VI_RESET_VIPP_ID_START, 0);

pConfig->mVippEnd = GetConfParaInt(&stConfParser, SAMPLE_VI_RESET_VIPP_ID_END, 0);

alogd("vi config: vipp scope=[%d,%d], captureSize:[%dx%d,%dx%d] frameRate:%d, pixelFormat:0x%x",

pConfig->mVippStart, pConfig->mVippEnd,

pConfig->mPicWidth, pConfig->mPicHeight,

pConfig->mSubPicWidth, pConfig->mSubPicHeight,

pConfig->mFrameRate, pConfig->mPicFormat);

destroyConfParser(&stConfParser);

return SUCCESS;

}

// Capture thread: keeps fetching CSI frames

static void *GetCSIFrameThread(void *pArg)

{

VI_DEV ViDev;

VI_CHN ViCh;

int ret = 0;

int i = 0, j = 0;

VirViChnInfo *pCap = (VirViChnInfo*)pArg;

ViDev = pCap->mVipp;

ViCh = pCap->mVirChn;

alogd("Cap threadid=0x%lx, ViDev = %d, ViCh = %d", pCap->mThid, ViDev, ViCh);

int nFlipValue = 0;

int nFlipTestCnt = 0;

// Loop until the requested number of frames has been captured

while (pCap->mCaptureFrameCount > j)

{

if ((ret = AW_MPI_VI_GetFrame(ViDev, ViCh, &pCap->mFrameInfo, pCap->mMilliSec)) != 0)

{

alogw("Vipp[%d,%d] Get Frame failed!", ViDev, ViCh);

continue;

}

i++;

// Log a timestamp every 20 frames

if (i % 20 == 0)

{

time_t now;

struct tm *timenow;

time(&now);

timenow = localtime(&now);

alogd("Cap threadid=0x%lx, ViDev=%d, VirVi=%d, mpts=%lld; local time is %s",

pCap->mThid, ViDev, ViCh, pCap->mFrameInfo.VFrame.mpts, asctime(timenow));

#if 0 // Enable this block to dump the frame as a YUV file
            FILE *fd;
            char filename[128];
            snprintf(filename, sizeof(filename), "/tmp/%dx%d_%d.yuv",
                     pCap->mFrameInfo.VFrame.mWidth,
                     pCap->mFrameInfo.VFrame.mHeight,
                     i);
            fd = fopen(filename, "wb+");
            if (fd != NULL)
            {
                // Y plane first, then the chroma plane (half the Y size for 4:2:0)
                fwrite(pCap->mFrameInfo.VFrame.mpVirAddr[0],
                       pCap->mFrameInfo.VFrame.mWidth * pCap->mFrameInfo.VFrame.mHeight,
                       1, fd);
                fwrite(pCap->mFrameInfo.VFrame.mpVirAddr[1],
                       pCap->mFrameInfo.VFrame.mWidth * pCap->mFrameInfo.VFrame.mHeight >> 1,
                       1, fd);
                fclose(fd);
            }
#endif

}

AW_MPI_VI_ReleaseFrame(ViDev, ViCh, &pCap->mFrameInfo);

j++;

#if TEST_FLIP

// Exercise the mirror/flip feature

if(j == pCap->mCaptureFrameCount)

{

j = 0;

if(0 == ViDev)

{

nFlipValue = !nFlipValue;

nFlipTestCnt++;

AW_MPI_VI_SetVippMirror(ViDev, nFlipValue);

AW_MPI_VI_SetVippFlip(ViDev, nFlipValue);

alogd("vipp[%d] mirror and flip:%d, test count:%d begin.",

ViDev, nFlipValue, nFlipTestCnt);

}

}

#endif

}

return NULL;

}

// Create and start one VIPP (including its virtual channel and capture thread)

int RunOneVipp(VI_DEV nVippIndex, SampleViResetContext *pContext)

{

ERRORTYPE ret = SUCCESS;

/* Configure the VI attributes */

memset(&pContext->mViAttr, 0, sizeof(VI_ATTR_S));

pContext->mViAttr.type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

pContext->mViAttr.memtype = V4L2_MEMORY_MMAP;

pContext->mViAttr.format.pixelformat =

map_PIXEL_FORMAT_E_to_V4L2_PIX_FMT(pContext->mConfigPara.mPicFormat);

pContext->mViAttr.format.field = V4L2_FIELD_NONE;

alogd("nVippIndex=%d", nVippIndex);

// VIPP0 uses the main-stream resolution; the others use the sub-stream resolution

if(0 == nVippIndex)

{

pContext->mViAttr.format.width = pContext->mConfigPara.mPicWidth;

pContext->mViAttr.format.height = pContext->mConfigPara.mPicHeight;

}

else

{

pContext->mViAttr.format.width = pContext->mConfigPara.mSubPicWidth;

pContext->mViAttr.format.height = pContext->mConfigPara.mSubPicHeight;

}

pContext->mViAttr.nbufs = 3;

pContext->mViAttr.nplanes = 2;

pContext->mViAttr.fps = pContext->mConfigPara.mFrameRate;

/* Whether to reuse the current configuration */

if(false == pContext->mFirstVippRunFlag)

{

pContext->mViAttr.use_current_win = 0; // force an update

pContext->mFirstVippRunFlag = true;

}

else

{

pContext->mViAttr.use_current_win = 1;

}

pContext->mViAttr.wdr_mode = 0;

pContext->mViAttr.capturemode = V4L2_MODE_VIDEO;

pContext->mViAttr.drop_frame_num = 0;

ret = AW_MPI_VI_CreateVipp(nVippIndex);

if(ret != SUCCESS)

{

aloge("fatal error! create vipp[%d] fail[0x%x]", nVippIndex, ret);

}

ret = AW_MPI_VI_SetVippAttr(nVippIndex, &pContext->mViAttr);

if(ret != SUCCESS)

{

aloge("fatal error! set vipp attr[%d] fail[0x%x]", nVippIndex, ret);

}

// Start the ISP on first use (only needed once)

if(pContext->mConfigPara.mbRunIsp && false == pContext->mbIspRunningFlag)

{

ret = AW_MPI_ISP_Run(pContext->mConfigPara.mIspDev);

if(ret != SUCCESS)

{

aloge("fatal error! isp[%d] run fail[0x%x]", pContext->mConfigPara.mIspDev, ret);

}

pContext->mbIspRunningFlag = true;

}

ret = AW_MPI_VI_EnableVipp(nVippIndex);

if(ret != SUCCESS)

{

aloge("fatal error! enable vipp[%d] fail[0x%x]", nVippIndex, ret);

}

// Create the virtual channel and start the capture thread

VI_CHN virvi_chn = 0;

for (virvi_chn = 0; virvi_chn < 1 /*MAX_VIR_CHN_NUM*/; virvi_chn++)

{

VirViChnInfo *pChnInfo = &pContext->mVirViChnArray[nVippIndex][virvi_chn];

memset(pChnInfo, 0, sizeof(VirViChnInfo));

pChnInfo->mVipp = nVippIndex;

pChnInfo->mVirChn = virvi_chn;

pChnInfo->mMilliSec = 5000; // 5-second timeout

pChnInfo->mCaptureFrameCount = pContext->mConfigPara.mFrameCountStep1;

ret = hal_virvi_start(nVippIndex, virvi_chn, NULL);

if(ret != 0)

{

aloge("virvi start failed!");

}

pChnInfo->mThid = 0;

ret = pthread_create(&pChnInfo->mThid, NULL, GetCSIFrameThread, (void *)pChnInfo);

if(0 == ret)

{

alogd("pthread create success, vipp[%d], virChn[%d]",

pChnInfo->mVipp, pChnInfo->mVirChn);

}

else

{

aloge("fatal error! pthread_create failed, vipp[%d], virChn[%d]",

pChnInfo->mVipp, pChnInfo->mVirChn);

}

}

return ret;

}

// Destroy one VIPP (end its virtual channels, then disable and destroy the VIPP)

int DestroyOneVipp(VI_DEV nVippIndex, SampleViResetContext *pContext)

{

int ret = 0;

VI_CHN virvi_chn = 0;

for (virvi_chn = 0; virvi_chn < 1; virvi_chn++)

{

ret = hal_virvi_end(nVippIndex, virvi_chn);

if(ret != 0)

{

aloge("fatal error! virvi[%d,%d] end failed!", nVippIndex, virvi_chn);

}

}

ret = AW_MPI_VI_DisableVipp(nVippIndex);

if(ret != SUCCESS)

{

aloge("fatal error! disable vipp[%d] fail", nVippIndex);

}

ret = AW_MPI_VI_DestroyVipp(nVippIndex);

if(ret != SUCCESS)

{

aloge("fatal error! destroy vipp[%d] fail", nVippIndex);

}

alogd("vipp[%d] is destroyed!", nVippIndex);

return ret;

}

// Entry point

int main(int argc, char *argv[])

{

int result = 0;

ERRORTYPE ret;

int virvi_chn;

// Configure glog logging

GLogConfig stGLogConfig =

{

.FLAGS_logtostderr = 1,

.FLAGS_colorlogtostderr = 1,

.FLAGS_stderrthreshold = _GLOG_INFO,

.FLAGS_minloglevel = _GLOG_INFO,

.FLAGS_logbuflevel = -1,

.FLAGS_logbufsecs = 0,

.FLAGS_max_log_size = 1,

.FLAGS_stop_logging_if_full_disk = 1,

};

strcpy(stGLogConfig.LogDir, "/tmp/log");

strcpy(stGLogConfig.InfoLogFileNameBase, "LOG-");

strcpy(stGLogConfig.LogFileNameExtension, "IPC-");

log_init(argv[0], &stGLogConfig);

alogd("app:[%s] begin! time=%s, %s", argv[0], __DATE__, __TIME__);

SampleViResetContext *pContext =

(SampleViResetContext*)malloc(sizeof(SampleViResetContext));

if(NULL == pContext)

{

aloge("fatal error! malloc fail!");

return -1;

}

memset(pContext, 0, sizeof(SampleViResetContext));

/* Parse the command line */

char *pConfigFilePath = NULL;

if (parseCmdLine(pContext, argc, argv) != SUCCESS)

{

aloge("fatal error! parse cmd line fail");

result = -1;

goto _exit;

}

pConfigFilePath = pContext->mCmdLinePara.mConfigFilePath;

/* Parse the config file */

if(LoadSampleViResetConfig(&pContext->mConfigPara, pConfigFilePath) != SUCCESS)

{

aloge("fatal error! no config file or parse conf file fail");

result = -1;

goto _exit;

}

/* Initialize the MPP system */

MPP_SYS_CONF_S stSysConf;

memset(&stSysConf, 0, sizeof(MPP_SYS_CONF_S));

stSysConf.nAlignWidth = 32;

AW_MPI_SYS_SetConf(&stSysConf);

ret = AW_MPI_SYS_Init();

if (ret != SUCCESS)

{

aloge("sys Init failed!");

result = -1;

goto _exit;

}

/* Main loop: repeat the test the configured number of times (a count of 0 repeats indefinitely) */

while (pContext->mTestNum < pContext->mConfigPara.mTestCount ||

0 == pContext->mConfigPara.mTestCount)

{

alogd("======================================");

alogd("Auto Test count : %d. (MaxCount==%d)",

pContext->mTestNum, pContext->mConfigPara.mTestCount);

alogd("======================================");

VI_DEV nVippIndex;

/* 1. Start every VIPP in turn */

for(nVippIndex = pContext->mConfigPara.mVippStart;

nVippIndex <= pContext->mConfigPara.mVippEnd;

nVippIndex++)

{

RunOneVipp(HVIDEO(nVippIndex, 0), pContext);

}

/* 2. Wait for each channel's capture thread to finish */

for(nVippIndex = pContext->mConfigPara.mVippStart;

nVippIndex <= pContext->mConfigPara.mVippEnd;

nVippIndex++)

{

for (virvi_chn = 0; virvi_chn < 1; virvi_chn++)

{

pthread_join(

pContext->mVirViChnArray[HVIDEO(nVippIndex, 0)][virvi_chn].mThid,

NULL);

alogd("vipp[%d]virChn[%d] capture thread is exit!",

HVIDEO(nVippIndex, 0), virvi_chn);

}

}

/* 3. Destroy every VIPP in turn */

for(nVippIndex = pContext->mConfigPara.mVippStart;

nVippIndex <= pContext->mConfigPara.mVippEnd;

nVippIndex++)

{

DestroyOneVipp(HVIDEO(nVippIndex, 0), pContext);

}

/* 4. Stop the ISP if it is running */

if(pContext->mbIspRunningFlag)

{

AW_MPI_ISP_Stop(pContext->mConfigPara.mIspDev);

alogd("isp[%d] is stopped!", pContext->mConfigPara.mIspDev);

pContext->mbIspRunningFlag = false;

}

pContext->mTestNum++;

pContext->mFirstVippRunFlag = false;

alogd("[%d] test time is done!", pContext->mTestNum);

}

/* Exit the MPP platform (skipped when AW_MPI_SYS_Init failed) */

AW_MPI_SYS_Exit();

_exit:

free(pContext);

alogd("%s test result: %s", argv[0], ((0==result) ? "success" : "fail"));

return result;

}

sample_vi_reset.h

c

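#ifndef _SAMPLE_VIRVI_H_
#define _SAMPLE_VIRVI_H_

#include <stdbool.h>    // bool
#include <pthread.h>    // pthread_t
#include <plat_type.h>  // platform type definitions
#include <tsemaphore.h> // semaphore helpers

#define MAX_FILE_PATH_SIZE (256) // maximum length of a file path

// Command-line parameters: holds the config file path
typedef struct SampleViResetCmdLineParam
{
    char mConfigFilePath[MAX_FILE_PATH_SIZE]; // full path of the config file
}SampleViResetCmdLineParam;

// Test configuration: all parameters of one complete test
typedef struct SampleViResetConfig
{
    int mTestCount;            // number of test rounds to run (0 repeats indefinitely)
    int mFrameCountStep1;      // frames to capture in each round before the VI is reset
    bool mbRunIsp;             // whether to also run the ISP (image signal processor)
    ISP_DEV mIspDev;           // ISP device number
    VI_DEV mVippStart;         // first VIPP (Video Input Post Processor) device number, range 0~3
    VI_DEV mVippEnd;           // last VIPP device number, range 0~3
    int mPicWidth;             // main-stream image width
    int mPicHeight;            // main-stream image height
    int mSubPicWidth;          // sub-stream image width
    int mSubPicHeight;         // sub-stream image height
    int mFrameRate;            // frame rate in fps
    PIXEL_FORMAT_E mPicFormat; // pixel format, e.g. MM_PIXEL_FORMAT_YUV_PLANAR_420
}SampleViResetConfig;

// Per-virtual-channel information: runtime data of each thread/channel
typedef struct VirViChnInfo
{
    pthread_t mThid;               // capture thread ID
    VI_DEV mVipp;                  // physical VIPP device number
    VI_CHN mVirChn;                // virtual channel number
    AW_S32 mMilliSec;              // timeout for fetching a frame, in milliseconds
    VIDEO_FRAME_INFO_S mFrameInfo; // information about the current frame
    int mCaptureFrameCount;        // number of frames this channel captures before its thread exits
} VirViChnInfo;

// Global context: state and data for the whole test
typedef struct SampleViResetContext
{
    SampleViResetCmdLineParam mCmdLinePara; // command-line parameters
    SampleViResetConfig mConfigPara;        // configuration parameters
    VI_ATTR_S mViAttr;                      // VI attribute configuration
    VI_CHN mVirChn;                         // virtual channel currently in use
    // 2-D array: runtime information for every virtual channel of every VIPP
    VirViChnInfo mVirViChnArray[MAX_VIPP_DEV_NUM][MAX_VIR_CHN_NUM];
    int mCaptureNum;        // total number of frames captured so far (for debugging)
    bool mFirstVippRunFlag; // whether the VIPP connected to the ISP has already been started
    bool mbIspRunningFlag;  // whether the ISP is running
    int mTestNum;           // number of test rounds completed so far
    // VIDEO_FRAME_INFO_S mFrameInfo; // commented out: originally meant to cache one frame globally, later unused
}SampleViResetContext;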

#endif /* _SAMPLE_VIRVI_H_ */在全志 SDK 目录激活环境,并选择方案:

shell

source build/envsetup.sh

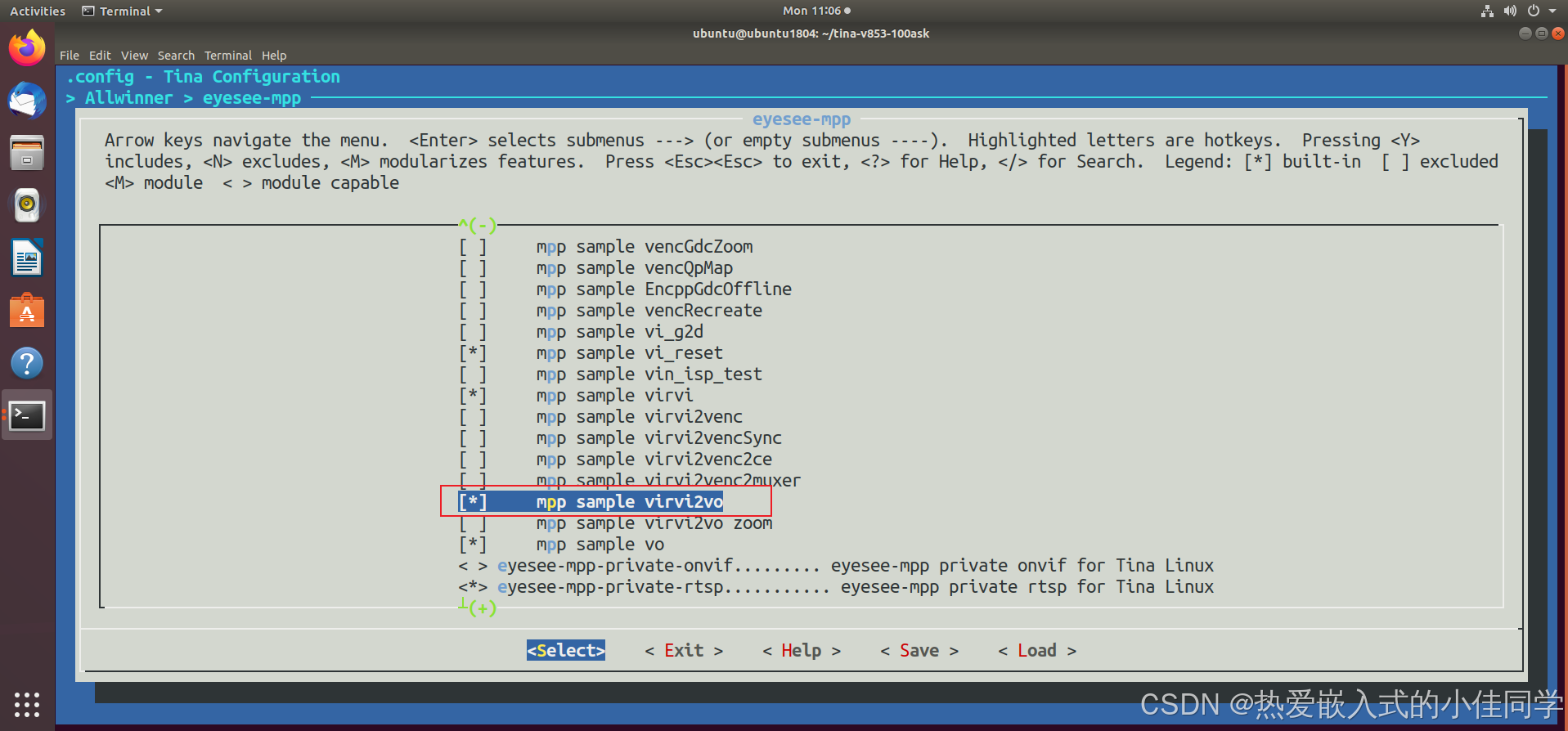

lunch进入配置界面:

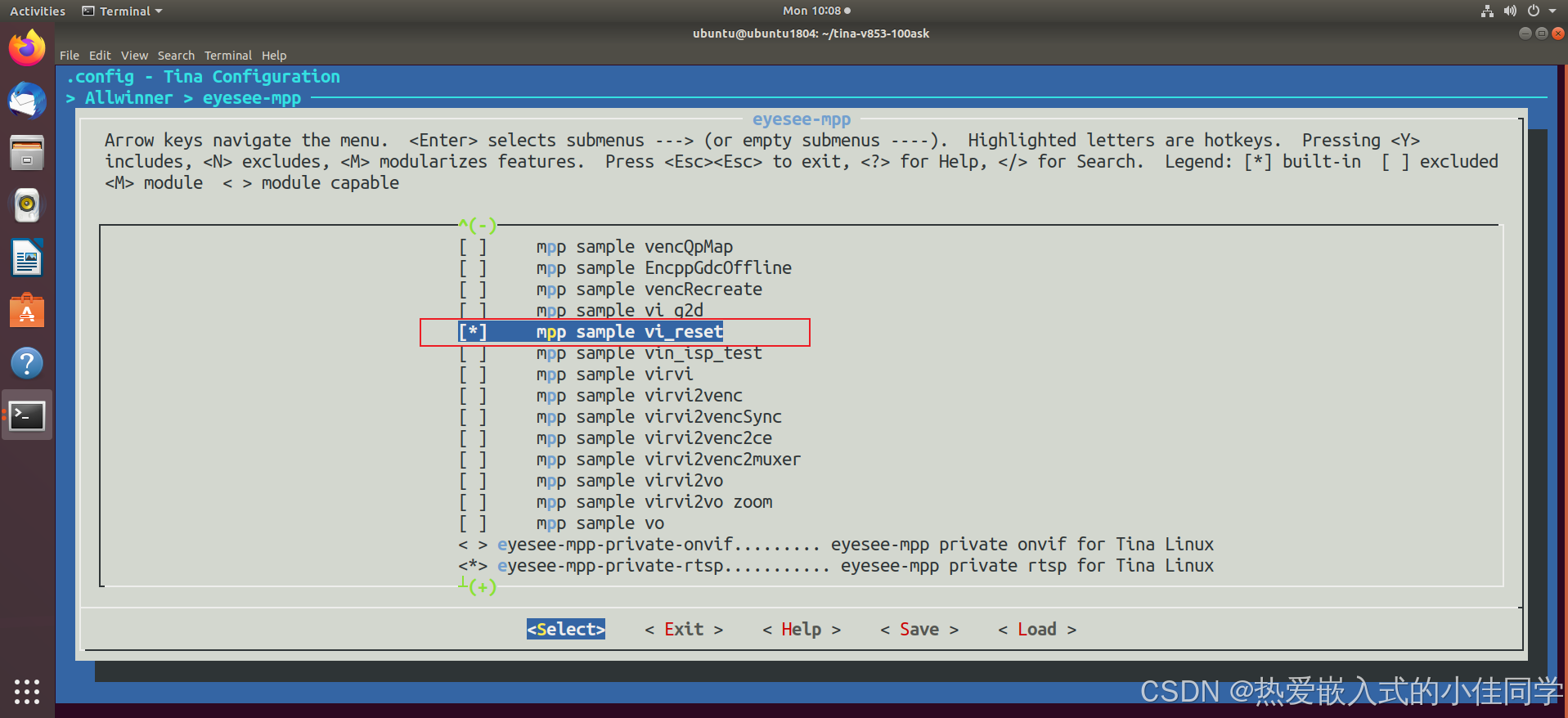

shell

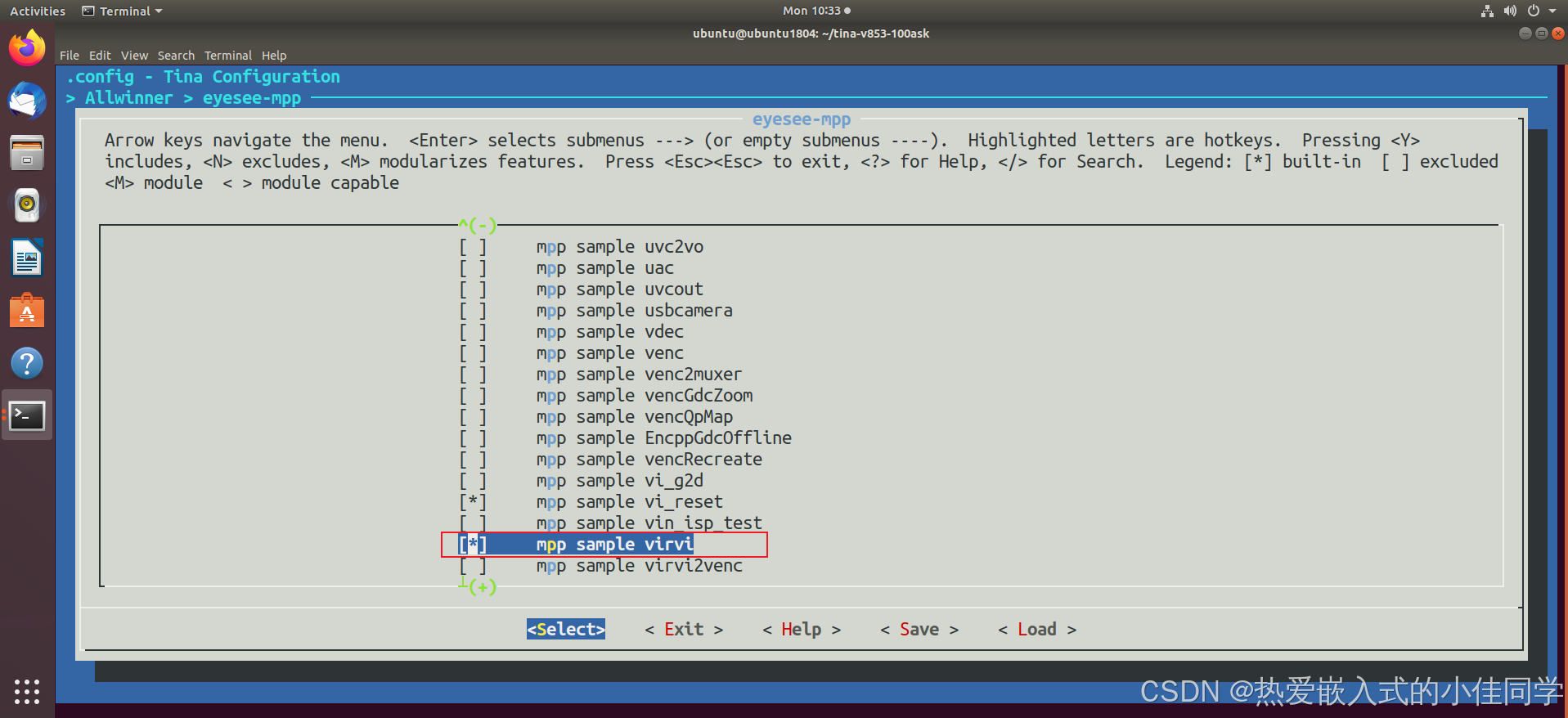

make menuconfig选择 MPP 示例程序,保存并退出:

shell

Allwinner --->

eyesee-mpp --->

[*] select mpp sample

清理和编译MPP程序:

shell

cleanmpp

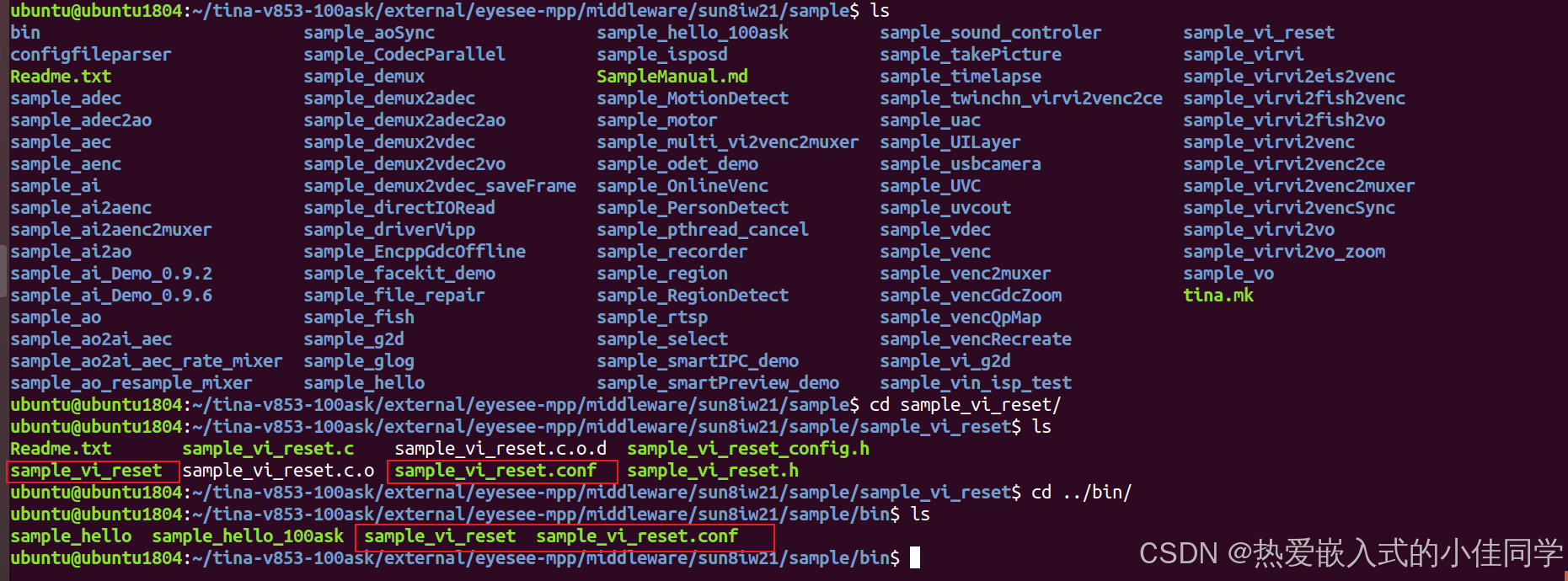

mkmpp编译后 MPP sample 测试程序和配置文件存放位置:

There are two locations:

(1) The source directory of each MPP sample

tina\external\eyesee-mpp\middleware\sun8iw21\sample\sample_xxx

(2) The shared bin directory, where all samples are collected

tina\external\eyesee-mpp\middleware\sun8iw21\sample\bin\

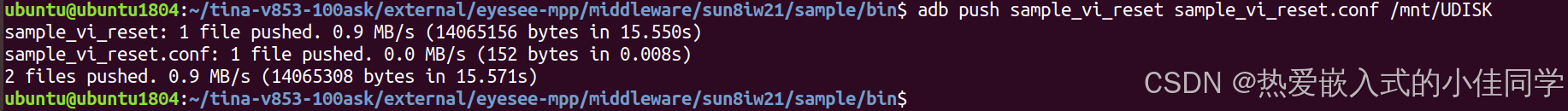

Upload the build outputs to the development board:

shell

adb push sample_vi_reset sample_vi_reset.conf /mnt/UDISK

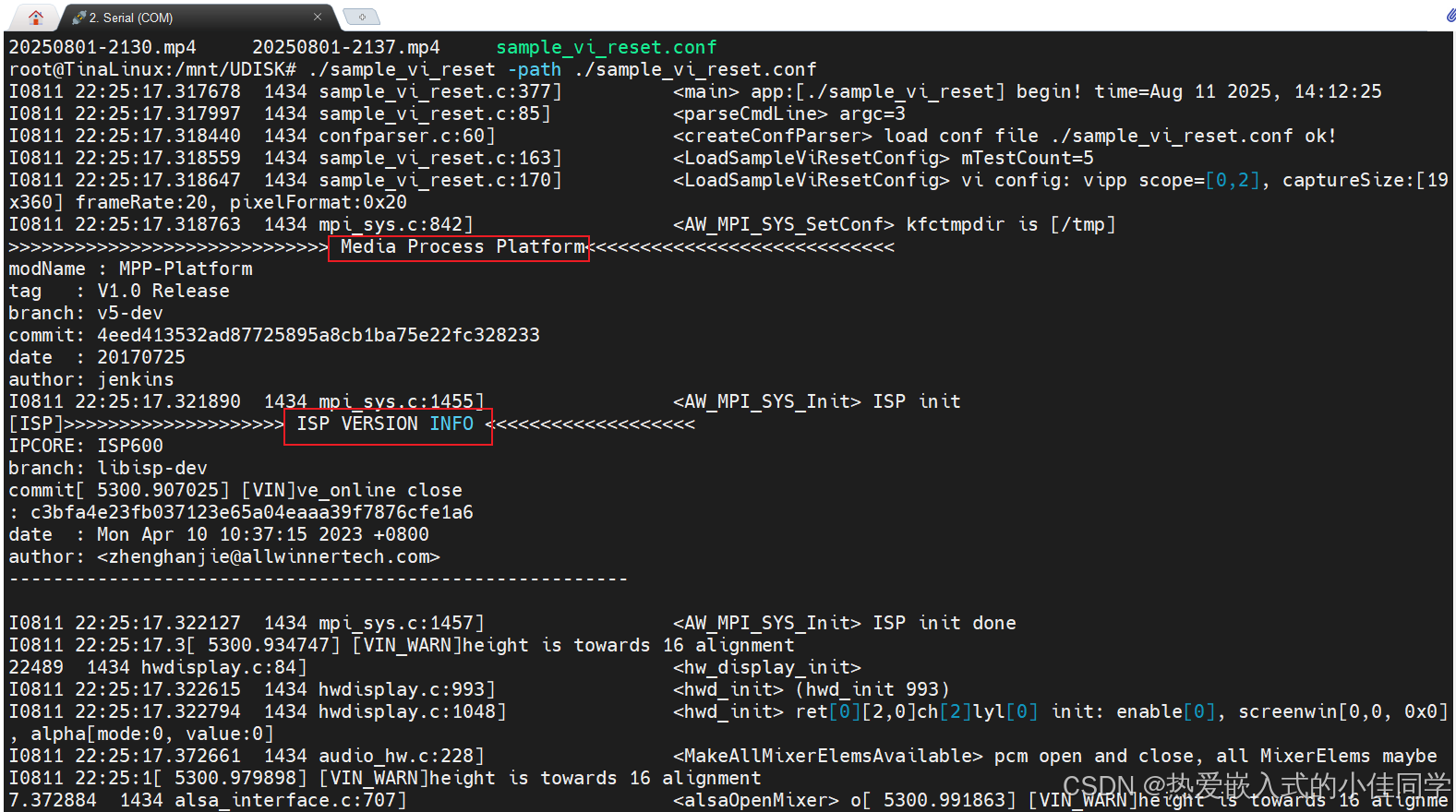

Run the program:

shell

./sample_vi_reset -path ./sample_vi_reset.conf

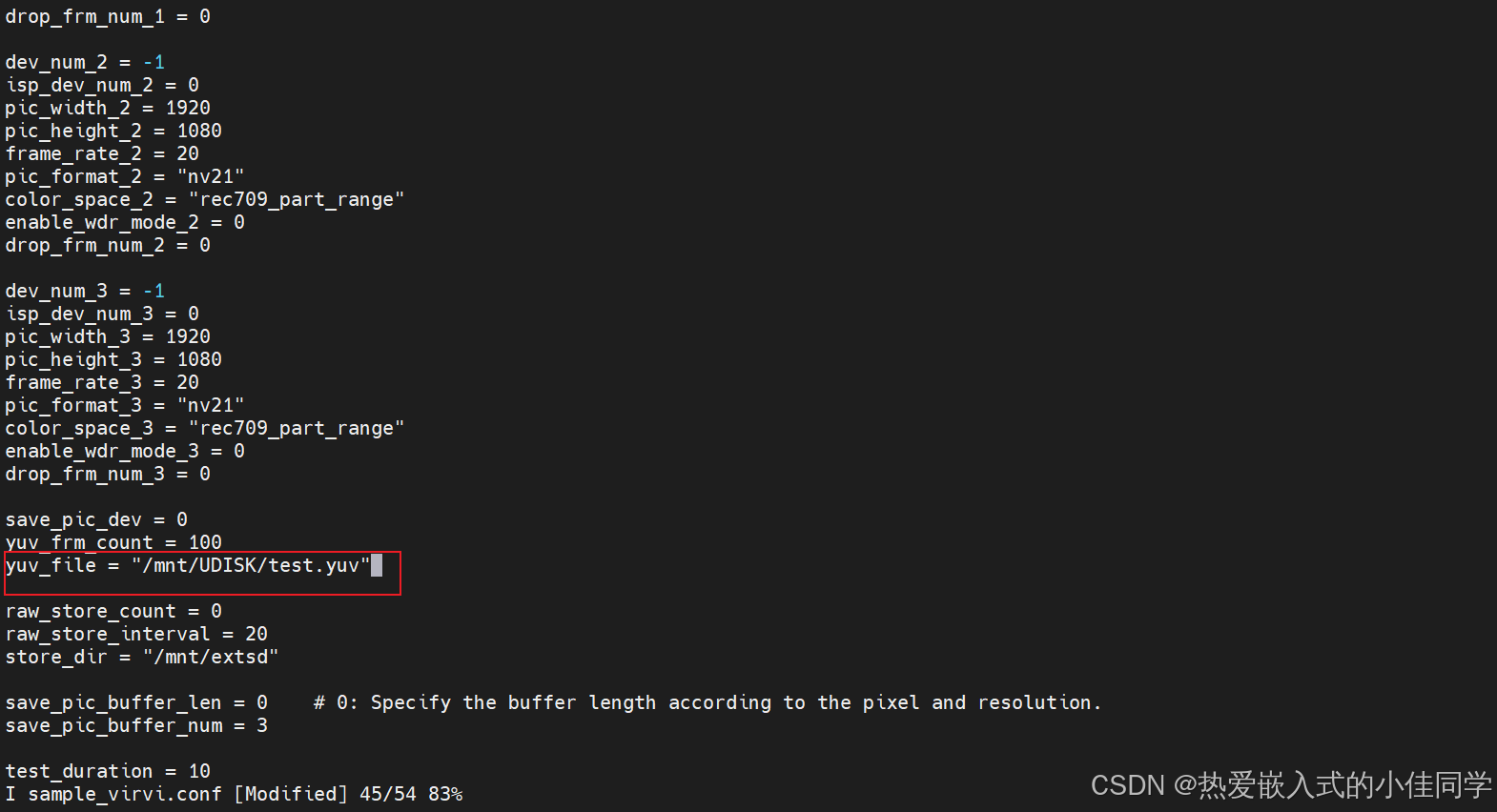

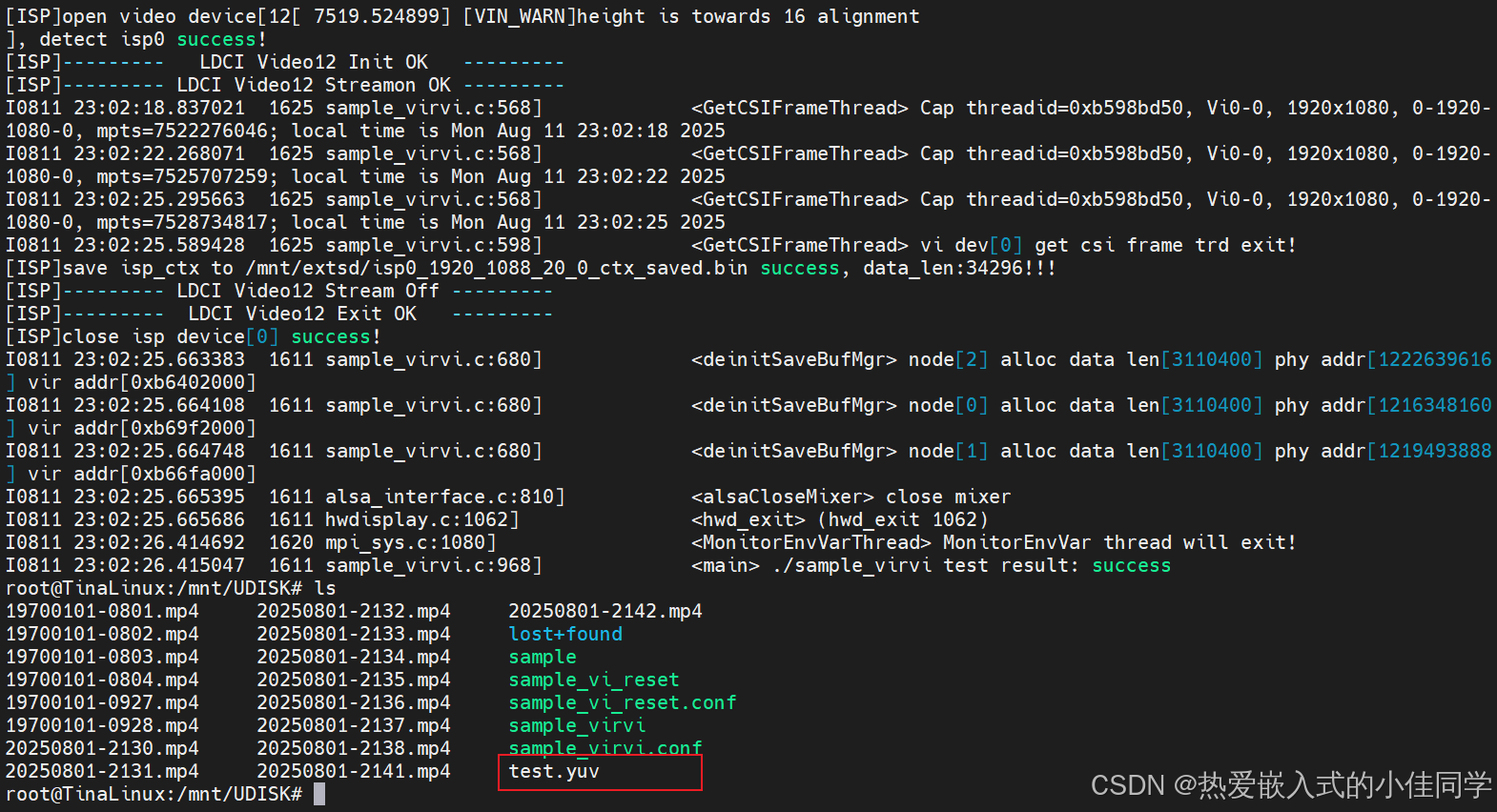

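5.2 Capturing and saving camera data

Example: use the VI component to capture camera images and save them as a YUV file on the development board.

Steps:

- Load the parameters
- Initialize the camera
- Start the MPP platform
- Initialize the buffer manager
- Create the image-saving thread
- Configure the VI component parameters and start it
- Start the virtual channel and record video
- Shut down the VI component
- Exit the MPP platform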
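The buffer manager needs to know how many bytes one frame occupies. For 8-bit 4:2:0 formats such as NV21 or NV12, that is width x height bytes for the Y plane plus half as much for chroma, which is where the sample's default of 1920*1080*3/2 comes from. A minimal sketch of that arithmetic follows; `yuv420_frame_len` is a hypothetical helper, and it ignores any stride or alignment padding the hardware may add.

```c
#include <stddef.h>

/* Hypothetical helper: bytes needed for one 8-bit YUV 4:2:0 frame
 * (NV21/NV12/YV12/YU12), ignoring stride or alignment padding. */
size_t yuv420_frame_len(size_t width, size_t height)
{
    size_t luma   = width * height;  /* Y plane: one byte per pixel */
    size_t chroma = luma / 2;        /* U and V together: each subsampled 2x2 */
    return luma + chroma;
}
```

For a 1920x1080 frame this gives 3110400 bytes, so a pool of five save buffers of that size takes roughly 15 MB.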

Walkthrough of the sample code:

sample_virvi.c

c

#include <endian.h>

#include <errno.h>

#include <fcntl.h>

#include <getopt.h>

#include <pthread.h>

#include <signal.h>

#include <stdint.h>

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <time.h>

#include <unistd.h>

#include <utils/plat_log.h>

#include "media/mpi_sys.h"

#include "media/mm_comm_vi.h"

#include "media/mpi_vi.h"

#include "media/mpi_isp.h"

#include <mpi_venc.h>

#include <utils/VIDEO_FRAME_INFO_S.h>

#include <mpi_videoformat_conversion.h>

#include <confparser.h>

#include <cdx_list.h>

#include "sample_virvi.h"

#include "sample_virvi_config.h"

#define ISP_RUN 1

#define DEFAULT_SAVE_PIC_DIR "/tmp"

/* Global pointer so that the signal handler can reach the context */

SampleVirViContext *gpSampleVirViContext = NULL;

/* On Ctrl+C, wake the main thread so that it can exit */

static void handle_exit()

{

alogd("user want to exit!");

if(NULL != gpSampleVirViContext)

{

cdx_sem_up(&gpSampleVirViContext->mSemExit); // post the exit semaphore

}

}

/* Parse command-line arguments: currently only -path xxx.conf is supported */

static int ParseCmdLine(int argc, char **argv, SampleVirViCmdLineParam *pCmdLinePara)

{

alogd("sample virvi path:[%s], arg number is [%d]", argv[0], argc);

int ret = 0;

int i=1;

memset(pCmdLinePara, 0, sizeof(SampleVirViCmdLineParam));

while(i < argc)

{

if(!strcmp(argv[i], "-path"))

{

if(++i >= argc)

{

aloge("fatal error! use -h to learn how to set parameter!!!");

ret = -1;

break;

}

if(strlen(argv[i]) >= MAX_FILE_PATH_SIZE)

{

aloge("fatal error! file path[%s] too long: [%zu]>=[%d]!", argv[i], strlen(argv[i]), MAX_FILE_PATH_SIZE);

}

strncpy(pCmdLinePara->mConfigFilePath, argv[i], MAX_FILE_PATH_SIZE-1);

pCmdLinePara->mConfigFilePath[MAX_FILE_PATH_SIZE-1] = '\0';

}

else if(!strcmp(argv[i], "-h"))

{

alogd("CmdLine param:\n"

"\t-path /home/sample_virvi.conf\n");

ret = 1;

break;

}

else

{

alogd("ignore invalid CmdLine param:[%s], type -h to get how to set parameter!", argv[i]);

}

i++;

}

return ret;

}

/* Build a config key name from a key and an index, e.g. virvi_width_0 (returns a static buffer, so not reentrant) */

static char *parserKeyCfg(char *pKeyCfg, int nKey)

{

static char keyCfg[MAX_FILE_PATH_SIZE] = {0};

if (pKeyCfg)

{

snprintf(keyCfg, sizeof(keyCfg), "%s_%d", pKeyCfg, nKey);

return keyCfg;

}

aloge("fatal error! key cfg is null key num[%d]", nKey);

return NULL;

}

/* Map a format string to a pixel format */

static void judgeCaptureFormat(char *pFormatConf, PIXEL_FORMAT_E *pCapFromat)

{

if (NULL != pFormatConf)

{

if (!strcmp(pFormatConf, "nv21"))

{

*pCapFromat = MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420;

}

else if (!strcmp(pFormatConf, "yv12"))

{

*pCapFromat = MM_PIXEL_FORMAT_YVU_PLANAR_420;

}

else if (!strcmp(pFormatConf, "nv12"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420;

}

else if (!strcmp(pFormatConf, "yu12"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_PLANAR_420;

}

/* aw compression format */

else if (!strcmp(pFormatConf, "aw_afbc"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_AW_AFBC;

}

else if (!strcmp(pFormatConf, "aw_lbc_2_0x"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_AW_LBC_2_0X;

}

else if (!strcmp(pFormatConf, "aw_lbc_2_5x"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_AW_LBC_2_5X;

}

else if (!strcmp(pFormatConf, "aw_lbc_1_5x"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_AW_LBC_1_5X;

}

else if (!strcmp(pFormatConf, "aw_lbc_1_0x"))

{

*pCapFromat = MM_PIXEL_FORMAT_YUV_AW_LBC_1_0X;

}

else if (!strcmp(pFormatConf, "srggb10"))

{

*pCapFromat = MM_PIXEL_FORMAT_RAW_SRGGB10;

}

/* aw_package_422 NOT support */

// else if (!strcmp(ptr, "aw_package_422"))

// {

// *pCapFromat = MM_PIXEL_FORMAT_YVYU_AW_PACKAGE_422;

// }

else

{

*pCapFromat = MM_PIXEL_FORMAT_YVU_PLANAR_420;

aloge("fatal error! wrong src pixfmt:%s", pFormatConf);

alogw("use the default pixfmt %d", *pCapFromat);

}

}

}

/* Map a string to a V4L2 colorspace */

void judgeCaptureColorspace(char *pColorSpConf, enum v4l2_colorspace *pV4L2ColorSp)

{

if (NULL != pColorSpConf)

{

if (!strcmp(pColorSpConf, "jpeg"))

{

*pV4L2ColorSp = V4L2_COLORSPACE_JPEG;

}

else if (!strcmp(pColorSpConf, "rec709"))

{

*pV4L2ColorSp = V4L2_COLORSPACE_REC709;

}

else if (!strcmp(pColorSpConf, "rec709_part_range"))

{

*pV4L2ColorSp = V4L2_COLORSPACE_REC709_PART_RANGE;

}

else

{

aloge("fatal error! wrong color space:%s, use default jpeg", pColorSpConf);

*pV4L2ColorSp = V4L2_COLORSPACE_JPEG;

}

}

}

/* Read the config file and fill in SampleVirViContext */

static ERRORTYPE loadConfigPara(SampleVirViContext *pContext, const char *conf_path)

{

int ret = 0;

char *ptr = NULL;

SampleVirViConfig *pConfig = NULL;

SampleVirviSaveBufMgrConfig *pSaveBufMgrConfig = &pContext->mSaveBufMgrConfig;

//by default only vipp0 is used

pConfig = &pContext->mCaps[0].mConfig;

memset(pConfig, 0, sizeof(SampleVirViConfig));

pConfig->DevNum = 0;

pConfig->mIspDevNum = 0;

pConfig->FrameRate = 20;

pConfig->PicWidth = 1920;

pConfig->PicHeight = 1080;

pConfig->PicFormat = MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420;

pConfig->mColorSpace = V4L2_COLORSPACE_JPEG;

pConfig->mViDropFrmCnt = 0;

pConfig = &pContext->mCaps[1].mConfig;

memset(pConfig, 0, sizeof(SampleVirViConfig));

pConfig->DevNum = MM_INVALID_DEV;

pConfig->mIspDevNum = MM_INVALID_DEV;

pConfig = &pContext->mCaps[2].mConfig;

memset(pConfig, 0, sizeof(SampleVirViConfig));

pConfig->DevNum = MM_INVALID_DEV;

pConfig->mIspDevNum = MM_INVALID_DEV;

pConfig = &pContext->mCaps[3].mConfig;

memset(pConfig, 0, sizeof(SampleVirViConfig));

pConfig->DevNum = MM_INVALID_DEV;

pConfig->mIspDevNum = MM_INVALID_DEV;

memset(pSaveBufMgrConfig, 0, sizeof(SampleVirviSaveBufMgrConfig));

pSaveBufMgrConfig->mSavePicDev = 0;

pSaveBufMgrConfig->mYuvFrameCount = 5;

sprintf(pSaveBufMgrConfig->mYuvFile, "%s/test.yuv", DEFAULT_SAVE_PIC_DIR);

pSaveBufMgrConfig->mRawStoreCount = 5;

pSaveBufMgrConfig->mRawStoreInterval = 20;

sprintf(pSaveBufMgrConfig->mStoreDirectory, "%s", DEFAULT_SAVE_PIC_DIR);

pSaveBufMgrConfig->mSavePicBufferLen = 1920*1080*3/2;

pSaveBufMgrConfig->mSavePicBufferNum = 5;

if(conf_path != NULL)

{

CONFPARSER_S stConfParser;

ret = createConfParser(conf_path, &stConfParser);

if(ret < 0)

{

aloge("load conf fail");

return FAILURE;

}

for (int i = 0; i < MAX_CAPTURE_NUM; i++)

{

pConfig = &pContext->mCaps[i].mConfig;

pConfig->AutoTestCount = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Auto_Test_Count, i), 0);

pConfig->GetFrameCount = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Get_Frame_Count, i), 0);

pConfig->DevNum = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Dev_Num, i), 0);

pConfig->mIspDevNum = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Dev_Num, i), 0);

pConfig->FrameRate = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Frame_Rate, i), 0);

pConfig->PicWidth = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Pic_Width, i), 0);

pConfig->PicHeight = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Pic_Height, i), 0);

ptr = (char*)GetConfParaString(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Pic_Format, i), NULL);

judgeCaptureFormat(ptr, &pConfig->PicFormat);

ptr = (char *)GetConfParaString(&stConfParser, parserKeyCfg(SAMPLE_VirVi_COLOR_SPACE, i), NULL);

judgeCaptureColorspace(ptr, &pConfig->mColorSpace);

pConfig->mEnableWDRMode = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_Enable_WDR, i), 0);

pConfig->mViDropFrmCnt = GetConfParaInt(&stConfParser, parserKeyCfg(SAMPLE_VirVi_ViDropFrmCnt, i), 0);

alogd("capture[%d] dev num[%d], capture size[%dx%d] format[%d] framerate[%d] colorspace[%d] EnWDR[%d] drop frm[%d]", \

i, pConfig->DevNum, pConfig->PicWidth, pConfig->PicHeight, pConfig->PicFormat, \

pConfig->FrameRate, pConfig->mColorSpace, pConfig->mEnableWDRMode, pConfig->mViDropFrmCnt);

}

pSaveBufMgrConfig->mSavePicDev = GetConfParaInt(&stConfParser, SAMPLE_VirVi_SavePicDev, 0);

pSaveBufMgrConfig->mYuvFrameCount = GetConfParaInt(&stConfParser, SAMPLE_VirVi_YuvFrameCount, 0);

ptr = (char *)GetConfParaString(&stConfParser, SAMPLE_VirVi_YuvFilePath, NULL);

if (ptr)

strcpy(pSaveBufMgrConfig->mYuvFile, ptr);

pSaveBufMgrConfig->mRawStoreCount = GetConfParaInt(&stConfParser, SAMPLE_VirVi_RawStoreCount, 0);

pSaveBufMgrConfig->mRawStoreInterval = GetConfParaInt(&stConfParser, SAMPLE_VirVi_RawStoreInterval, 0);

ptr = (char *)GetConfParaString(&stConfParser, SAMPLE_VirVi_StoreDir, NULL);

if (ptr)

strcpy(pSaveBufMgrConfig->mStoreDirectory, ptr);

pSaveBufMgrConfig->mSavePicBufferNum = GetConfParaInt(&stConfParser, SAMPLE_VirVi_SavePicBufferNum, 0);

pSaveBufMgrConfig->mSavePicBufferLen = GetConfParaInt(&stConfParser, SAMPLE_VirVi_SavePicBufferLen, 0);

if (0 == pSaveBufMgrConfig->mSavePicBufferLen)

{

SampleVirViConfig *pSaveConfig = NULL;
for (int i = 0; i < MAX_CAPTURE_NUM; i++)
{
    // Keep pSaveConfig NULL unless a capture really uses the save device
    if (pContext->mCaps[i].mConfig.DevNum == pSaveBufMgrConfig->mSavePicDev)
    {
        pSaveConfig = &pContext->mCaps[i].mConfig;
        break;
    }
}
if (pSaveConfig)
{
    pSaveBufMgrConfig->mSavePicBufferLen = pSaveConfig->PicWidth*pSaveConfig->PicHeight*3/2;
}

alogd("user did not specify buf len. set a default value %d bytes", pSaveBufMgrConfig->mSavePicBufferLen);

}

pContext->mTestDuration = GetConfParaInt(&stConfParser, SAMPLE_VirVi_Test_Duration, 0);

alogd("save pic dev[%d], yuv count[%d] file[%s], raw count[%d] interval[%d] save dir[%s], buf len[%d] num[%d]", \

pSaveBufMgrConfig->mSavePicDev, pSaveBufMgrConfig->mYuvFrameCount, pSaveBufMgrConfig->mYuvFile, \

pSaveBufMgrConfig->mRawStoreCount, pSaveBufMgrConfig->mRawStoreInterval, pSaveBufMgrConfig->mStoreDirectory, \

pSaveBufMgrConfig->mSavePicBufferLen, pSaveBufMgrConfig->mSavePicBufferNum);

alogd("test duration[%d]", pContext->mTestDuration);

destroyConfParser(&stConfParser);

}

return SUCCESS;

}

/* Write one RAW/YUV frame to disk */

static int saveRawData(SampleVirviSaveBufMgr *pSaveBufMgr, SampleVirviSaveBufNode *pNode)

{

// Build the file name from the store directory, frame size, pixel format, device number, frame count, and file type.

SampleVirviSaveBufMgrConfig *pConfig = &pSaveBufMgr->mConfig;

//make file name, e.g., /mnt/extsd/pic[0].NV21M

char strPixFmt[16] = {0};

char fileType[16] = {0};

strcpy(fileType, "yuv");

switch(pNode->mFrmFmt)

{

case MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420:

{

strcpy(strPixFmt,"nv21");

break;

}

case MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420:

{

strcpy(strPixFmt,"nv12");

break;

}

case MM_PIXEL_FORMAT_YVU_PLANAR_420:

{

strcpy(strPixFmt,"yv12");

break;

}

case MM_PIXEL_FORMAT_YUV_PLANAR_420:

{

strcpy(strPixFmt,"yu12");

break;

}

case MM_PIXEL_FORMAT_YUV_AW_AFBC:

{

strcpy(strPixFmt, "afbc");

break;

}

case MM_PIXEL_FORMAT_YUV_AW_LBC_1_0X:

{

strcpy(strPixFmt, "lbc1_0x");

break;

}

case MM_PIXEL_FORMAT_YUV_AW_LBC_1_5X:

{

strcpy(strPixFmt, "lbc1_5x");

break;

}

case MM_PIXEL_FORMAT_YUV_AW_LBC_2_0X:

{

strcpy(strPixFmt, "lbc2_0x");

break;

}

case MM_PIXEL_FORMAT_YUV_AW_LBC_2_5X:

{

strcpy(strPixFmt, "lbc2_5x");

break;

}

case MM_PIXEL_FORMAT_RAW_SRGGB10:

{

strcpy(strPixFmt, "srggb10");

memset(fileType, 0, sizeof(fileType));

strcpy(fileType, "raw");

break;

}

default:

{

strcpy(strPixFmt,"unknown");

memset(fileType, 0, sizeof(fileType));

strcpy(fileType, "bin");

break;

}

}

char strFilePath[MAX_FILE_PATH_SIZE];

snprintf(strFilePath, MAX_FILE_PATH_SIZE, "%s/%dx%d_%s_vipp%d_%d.%s", \

pConfig->mStoreDirectory, pNode->mFrmSize.Width, pNode->mFrmSize.Height, strPixFmt, \

pConfig->mSavePicDev, pNode->mFrmCnt, fileType);

int nLen;

FILE *fpPic = fopen(strFilePath, "wb"); // open the file for binary writing

if(fpPic != NULL)

{

nLen = fwrite(pNode->mpDataVirAddr, 1, pNode->mFrmLen, fpPic); // write the node's frame data to the file

if(nLen != pNode->mFrmLen)

{

aloge("fatal error! fwrite fail, write len[%d], frm len[%d], virAddr[%p]", \

nLen, pNode->mFrmLen, pNode->mpDataVirAddr);

}

alogd("virAddr[%p], length=[%d]", pNode->mpDataVirAddr, pNode->mFrmLen);

fclose(fpPic);

fpPic = NULL;

alogd("store raw frame in file[%s]", strFilePath);

}

else

{

aloge("fatal error! open file[%s] fail!", strFilePath);

}

return 0;

}

/* Copy a VIDEO_FRAME_INFO_S into a buffer node and compute the length of each plane */

static int copyVideoFrame(VIDEO_FRAME_INFO_S *pSrc, SampleVirviSaveBufNode *pNode)

{

unsigned int nFrmsize[3] = {0,0,0};

void *pTmp = pNode->mpDataVirAddr;

unsigned int dataWidth = pSrc->VFrame.mOffsetRight - pSrc->VFrame.mOffsetLeft; // image width

unsigned int dataHeight = pSrc->VFrame.mOffsetBottom - pSrc->VFrame.mOffsetTop; // image height

alogv("dataWidth:%d, dataHeight:%d", dataWidth, dataHeight);

switch(pSrc->VFrame.mPixelFormat)

{

case MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420:

case MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420:

case MM_PIXEL_FORMAT_AW_NV21M:

nFrmsize[0] = dataWidth*dataHeight; //Y

nFrmsize[1] = dataWidth*dataHeight/2; //VU

nFrmsize[2] = 0;

break;

case MM_PIXEL_FORMAT_YVU_PLANAR_420:

case MM_PIXEL_FORMAT_YUV_PLANAR_420:

nFrmsize[0] = dataWidth*dataHeight;

nFrmsize[1] = dataWidth*dataHeight/4;

nFrmsize[2] = dataWidth*dataHeight/4;

break;

case MM_PIXEL_FORMAT_YUV_AW_LBC_2_0X:

case MM_PIXEL_FORMAT_YUV_AW_LBC_2_5X:

case MM_PIXEL_FORMAT_YUV_AW_LBC_1_5X:

case MM_PIXEL_FORMAT_YUV_AW_LBC_1_0X:

nFrmsize[0] = pSrc->VFrame.mStride[0];

nFrmsize[1] = pSrc->VFrame.mStride[1];

nFrmsize[2] = pSrc->VFrame.mStride[2];

break;

default:

aloge("fatal error! not support pixel format[0x%x]", pSrc->VFrame.mPixelFormat);

return -1;

}

if (pNode->mDataLen < (nFrmsize[0]+nFrmsize[1]+nFrmsize[2]))

{

aloge("fatal error! buffer len[%d] < frame len[%d]", \

pNode->mDataLen, nFrmsize[0]+nFrmsize[1]+nFrmsize[2]);

return -1;

}

pNode->mFrmSize.Width = dataWidth;

pNode->mFrmSize.Height = dataHeight;

pNode->mFrmFmt = pSrc->VFrame.mPixelFormat;

for (int i = 0; i < 3; i++)

{

if (pSrc->VFrame.mpVirAddr[i])

{

memcpy(pTmp, pSrc->VFrame.mpVirAddr[i], nFrmsize[i]);

pTmp = (unsigned char *)pTmp + nFrmsize[i]; // arithmetic on void * is a GNU extension, so cast first

pNode->mFrmLen += nFrmsize[i];

}

}

return 0;

}

/* Saver thread: takes nodes from the ready list, writes them to file, then returns them to the idle list */

static void *SaveCsiFrameThrad(void *pThreadData)

{

SampleVirViContext *pContext = (SampleVirViContext *)pThreadData;

SampleVirviSaveBufMgr *pBufMgr = pContext->mpSaveBufMgr;

SampleVirviSaveBufMgrConfig *pConfig = &pBufMgr->mConfig;

int nRawStoreNum = 0;

FILE *fp = NULL;

fp = fopen(pConfig->mYuvFile, "wb");

pBufMgr->mbTrdRunningFlag = TRUE;

while (1)

{

if (pContext->mbSaveCsiTrdExitFlag)

{

break;

}

SampleVirviSaveBufNode *pEntry = \

list_first_entry_or_null(&pBufMgr->mReadyList, SampleVirviSaveBufNode, mList); // first entry of the ready list, or NULL if it is empty

if (pEntry)

{

pthread_mutex_lock(&pBufMgr->mIdleListLock);

if (pEntry->mFrmCnt <= pConfig->mYuvFrameCount)

{

if (fp)

{

alogv("save frm[%d] len[%d] file[%s] success!", \

pEntry->mFrmCnt, pEntry->mFrmLen, pConfig->mYuvFile);

fwrite(pEntry->mpDataVirAddr, 1, pEntry->mFrmLen, fp);

}

}

if (pConfig->mRawStoreCount > 0

&& nRawStoreNum < pConfig->mRawStoreCount

&& 0 == pEntry->mFrmCnt % pConfig->mRawStoreInterval)

{

saveRawData(pBufMgr, pEntry); // save the raw frame data to a file

nRawStoreNum++;

}

/* Reset the node's frame data, then move it back to the idle list. */

pEntry->mFrmLen = 0;

pEntry->mFrmFmt = 0;

memset(&pEntry->mFrmSize, 0, sizeof(SIZE_S));

memset(pEntry->mpDataVirAddr, 0, pEntry->mDataLen);

pthread_mutex_lock(&pBufMgr->mReadyListLock);

list_move_tail(&pEntry->mList, &pBufMgr->mIdleList);

pthread_mutex_unlock(&pBufMgr->mReadyListLock);

pthread_mutex_unlock(&pBufMgr->mIdleListLock);

}

else

{

usleep(10*1000);

}

}

if (fp) // close the output file

{

fclose(fp);

fp = NULL;

}

return (void *)NULL;

}

/* Capture thread: repeatedly get frames from VI and queue them in the save buffers */

static void *GetCSIFrameThread(void *pThreadData)

{

int ret = 0;

int frm_cnt = 0;

SampleVirviCap *pCap = (SampleVirviCap *)pThreadData;

SampleVirViConfig *pConfig = &pCap->mConfig;

SampleVirViContext *pContext = pCap->mpContext;

SampleVirviSaveBufMgr *pBufMgr = pContext->mpSaveBufMgr;

SampleVirviSaveBufMgrConfig *pBufMgrConfig = &pBufMgr->mConfig;

alogd("loop Sample_virvi, Cap threadid=0x%lx, ViDev = %d, ViCh = %d", \

pCap->thid, pCap->Dev, pCap->Chn);

pCap->mbTrdRunning = TRUE;

pCap->mRawStoreNum = 0;

while (1)

{

if (pCap->mbExitFlag)

{

break;

}

// vi get frame

if ((ret = AW_MPI_VI_GetFrame(pCap->Dev, pCap->Chn, &pCap->pstFrameInfo, pCap->s32MilliSec)) != 0)

{

// printf("VI Get Frame failed!\n");

continue ;

}

frm_cnt++;

if (frm_cnt % 60 == 0)

{

time_t now;

struct tm *timenow;

time(&now);

timenow = localtime(&now);

alogd("Cap threadid=0x%lx, Vi%d-%d, %dx%d, %d-%d-%d-%d, mpts=%lld; local time is %s", \

pCap->thid, pCap->Dev, pCap->Chn,

pCap->pstFrameInfo.VFrame.mWidth, pCap->pstFrameInfo.VFrame.mHeight,

pCap->pstFrameInfo.VFrame.mOffsetLeft, pCap->pstFrameInfo.VFrame.mOffsetRight,

pCap->pstFrameInfo.VFrame.mOffsetBottom, pCap->pstFrameInfo.VFrame.mOffsetTop,

pCap->pstFrameInfo.VFrame.mpts, asctime(timenow));

}

if (NULL != pBufMgr)

{

if (pBufMgrConfig->mSavePicDev == pCap->Dev)

{

SampleVirviSaveBufNode *pEntry = \

list_first_entry_or_null(&pBufMgr->mIdleList, SampleVirviSaveBufNode, mList);

if (pEntry)

{

pthread_mutex_lock(&pBufMgr->mIdleListLock);

ret = copyVideoFrame(&pCap->pstFrameInfo, pEntry);

if (!ret)

{

pEntry->mpCap = (void *)pCap;

pEntry->mFrmCnt = frm_cnt;

pthread_mutex_lock(&pBufMgr->mReadyListLock);

list_move_tail(&pEntry->mList, &pBufMgr->mReadyList);

pthread_mutex_unlock(&pBufMgr->mReadyListLock);

}

pthread_mutex_unlock(&pBufMgr->mIdleListLock);

}

}

}

// vi release frame

AW_MPI_VI_ReleaseFrame(pCap->Dev, pCap->Chn, &pCap->pstFrameInfo);

}

alogd("vi dev[%d] get csi frame trd exit!", pCap->Dev);

return NULL;

}

/* Initialize the buffer manager: allocate the configured number of buffer nodes */

static SampleVirviSaveBufMgr *initSaveBufMgr(SampleVirViContext *pContext)

{

SampleVirviSaveBufMgr *pSaveBufMgr = NULL;

SampleVirviSaveBufMgrConfig *pConfig = NULL;

pSaveBufMgr = malloc(sizeof(SampleVirviSaveBufMgr));

if (NULL == pSaveBufMgr)

{

aloge("fatal error! save buffer mgr malloc fail!");

return NULL;

}

memset(pSaveBufMgr, 0, sizeof(SampleVirviSaveBufMgr));

pConfig = &pSaveBufMgr->mConfig;

memset(pConfig, 0, sizeof(SampleVirviSaveBufMgrConfig));

memcpy(pConfig, &pContext->mSaveBufMgrConfig, sizeof(SampleVirviSaveBufMgrConfig));

// initialize the lists and locks of the buffer manager

INIT_LIST_HEAD(&pSaveBufMgr->mIdleList);

INIT_LIST_HEAD(&pSaveBufMgr->mReadyList);

pthread_mutex_init(&pSaveBufMgr->mIdleListLock, NULL);

pthread_mutex_init(&pSaveBufMgr->mReadyListLock, NULL);

for (int i = 0; i < pConfig->mSavePicBufferNum; i++)

{

SampleVirviSaveBufNode *pNode = malloc(sizeof(SampleVirviSaveBufNode));

if (NULL == pNode)

{

aloge("fatal error! malloc save buf node fail!");

return NULL;

}

memset(pNode, 0, sizeof(SampleVirviSaveBufNode));

pNode->mId = i;

pNode->mDataLen = pConfig->mSavePicBufferLen;

AW_MPI_SYS_MmzAlloc_Cached(&pNode->mDataPhyAddr, &pNode->mpDataVirAddr, pNode->mDataLen);

if ((0 == pNode->mDataPhyAddr) || (NULL == pNode->mpDataVirAddr))

{

aloge("fatal error! alloc buf[%d] fail!", i);

return NULL;

}

memset(pNode->mpDataVirAddr, 0, pNode->mDataLen);

alogd("node[%d] alloc data len[%d] phy addr[%d] vir addr[%p]", \

i, pNode->mDataLen, pNode->mDataPhyAddr, pNode->mpDataVirAddr);

pthread_mutex_lock(&pSaveBufMgr->mIdleListLock);

list_add_tail(&pNode->mList, &pSaveBufMgr->mIdleList);

pthread_mutex_unlock(&pSaveBufMgr->mIdleListLock);

}

return pSaveBufMgr;

}

/* Deinitialize the buffer manager: free all nodes and destroy the mutexes */

static int deinitSaveBufMgr(SampleVirviSaveBufMgr *pSaveBufMgr)

{

if (NULL == pSaveBufMgr)

{

alogw("why save buf mgr is null");

return -1;

}

SampleVirviSaveBufNode *pEntry = NULL, *pTmp = NULL;

pthread_mutex_lock(&pSaveBufMgr->mReadyListLock); // lock the ready list

if (!list_empty(&pSaveBufMgr->mReadyList)) // are there frames still waiting to be saved?

{

list_for_each_entry_safe(pEntry, pTmp, &pSaveBufMgr->mReadyList, mList) // walk the ready list

{

list_move_tail(&pEntry->mList, &pSaveBufMgr->mIdleList); // move each node to the tail of the idle list

}

}

pthread_mutex_unlock(&pSaveBufMgr->mReadyListLock); // unlock the ready list

pthread_mutex_lock(&pSaveBufMgr->mIdleListLock); // lock the idle list

list_for_each_entry_safe(pEntry, pTmp, &pSaveBufMgr->mIdleList, mList) // walk the idle list

{

if (NULL == pEntry) // skip NULL nodes

continue;

if (0 != pEntry->mDataPhyAddr && NULL != pEntry->mpDataVirAddr) // the buffer was allocated

{

alogd("node[%d] alloc data len[%d] phy addr[%d] vir addr[%p]", \

pEntry->mId, pEntry->mDataLen, pEntry->mDataPhyAddr, pEntry->mpDataVirAddr);

AW_MPI_SYS_MmzFree(pEntry->mDataPhyAddr, pEntry->mpDataVirAddr); // release the MMZ buffer

pEntry->mDataPhyAddr = 0;

pEntry->mpDataVirAddr = NULL;

}

list_del(&pEntry->mList); // unlink the node from the list

free(pEntry); // free the node itself

pEntry = NULL;

}

pthread_mutex_unlock(&pSaveBufMgr->mIdleListLock); // unlock the idle list

// destroy the mutexes

pthread_mutex_destroy(&pSaveBufMgr->mIdleListLock);

pthread_mutex_destroy(&pSaveBufMgr->mReadyListLock);

return 0;

}

/* Initialize the MAX_CAPTURE_NUM (four) capture slots from the configuration */

static void initCaps(SampleVirViContext *pContext)

{

SampleVirviCap *pCap = NULL;

SampleVirViConfig *pConfig = NULL;

for (int i = 0; i < MAX_CAPTURE_NUM; i++)

{

pCap = &pContext->mCaps[i];

pConfig = &pCap->mConfig;

pCap->Dev = MM_INVALID_DEV;

pCap->mIspDev = MM_INVALID_DEV;

pCap->Chn = MM_INVALID_CHN;

if ((pConfig->DevNum < 0) || \

(0 == pConfig->PicWidth) || (0 == pConfig->PicHeight))

{

pCap->mbCapValid = FALSE;

}

else

{

pCap->mbCapValid = TRUE;

pCap->mpContext = (void *)pContext;

}

}

}

/* Fill in VI_ATTR_S from the configuration */

static void configViAttr(VI_ATTR_S *pViAttr, SampleVirViConfig *pConfig)

{

pViAttr->type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;

pViAttr->memtype = V4L2_MEMORY_MMAP;

pViAttr->format.pixelformat = map_PIXEL_FORMAT_E_to_V4L2_PIX_FMT(pConfig->PicFormat);

pViAttr->format.field = V4L2_FIELD_NONE;

pViAttr->format.colorspace = pConfig->mColorSpace;

pViAttr->format.width = pConfig->PicWidth;

pViAttr->format.height = pConfig->PicHeight;

pViAttr->nbufs = 3;

pViAttr->nplanes = 2;

pViAttr->fps = pConfig->FrameRate;

pViAttr->use_current_win = 0;

pViAttr->wdr_mode = pConfig->mEnableWDRMode;

pViAttr->capturemode = V4L2_MODE_VIDEO; /* V4L2_MODE_VIDEO; V4L2_MODE_IMAGE; V4L2_MODE_PREVIEW */

pViAttr->drop_frame_num = pConfig->mViDropFrmCnt; // drop 2 second video data, default=0

}

/* Create the VIPP, start the ISP, and create the virtual channel */

static int prepare(SampleVirviCap *pCap)

{

ERRORTYPE eRet = SUCCESS;

SampleVirViConfig *pConfig = &pCap->mConfig;

if (!pCap->mbCapValid)

{

return 0;

}

VI_ATTR_S stVIAttr;

memset(&stVIAttr, 0, sizeof(VI_ATTR_S));

configViAttr(&stVIAttr, &pCap->mConfig);

pCap->Dev = pConfig->DevNum;

eRet = AW_MPI_VI_CreateVipp(pCap->Dev);

if (eRet)

{

aloge("fatal error! vi dev[%d] create fail!", pCap->Dev);

pCap->Dev = MM_INVALID_DEV;

return -1;

}

eRet = AW_MPI_VI_SetVippAttr(pCap->Dev, &stVIAttr);

if (eRet)

{

aloge("fatal error! vi dev[%d] set vipp attr fail!", pCap->Dev);

}

pCap->mIspDev = pConfig->mIspDevNum;

if (pCap->mIspDev >= 0)

{

eRet = AW_MPI_ISP_Run(pCap->mIspDev);

if (eRet)

{

aloge("fatal error! isp[%d] start fail!", pCap->mIspDev);

pCap->mIspDev = MM_INVALID_DEV;

}

}

eRet = AW_MPI_VI_EnableVipp(pCap->Dev);

if (eRet)

{

aloge("fatal error! vi dev[%d] enable fail!", pCap->Dev);

}

pCap->Chn = 0;

eRet = AW_MPI_VI_CreateVirChn(pCap->Dev, pCap->Chn, NULL);

if (eRet)

{

pCap->Chn = MM_INVALID_CHN;

aloge("fatal error! vi dev[%d] create vi chn[0] fail!", pCap->Dev);

return -1;

}

alogd("create vi dev[%d] vi chn[%d] success!", pCap->Dev, pCap->Chn);

return 0;

}

/* Enable the virtual channel and start the capture thread */

static int start(SampleVirviCap *pCap)

{

ERRORTYPE eRet = SUCCESS;

if (pCap->Dev < 0 || pCap->Chn < 0)

{

return 0;

}

eRet = AW_MPI_VI_EnableVirChn(pCap->Dev, pCap->Chn);

if (eRet)

{

aloge("fatal error! vi dev[%d] enable virvi chn[%d] fail!", pCap->Dev, pCap->Chn);

return -1;

}

pthread_create(&pCap->thid, NULL, GetCSIFrameThread, (void *)pCap);

return 0;

}

/* Stop the capture thread and destroy all VI/ISP resources */

static int stop(SampleVirviCap *pCap)

{

if (pCap->mbTrdRunning)

{

pCap->mbExitFlag = TRUE;

pthread_join(pCap->thid, NULL); // wait for the capture thread to exit

}

if (pCap->Chn >= 0)

{

AW_MPI_VI_DisableVirChn(pCap->Dev, pCap->Chn);

AW_MPI_VI_DestroyVirChn(pCap->Dev, pCap->Chn);

}

if (pCap->Dev >= 0)

{

AW_MPI_VI_DisableVipp(pCap->Dev);

if (pCap->mIspDev >= 0)

AW_MPI_ISP_Stop(pCap->mIspDev);

AW_MPI_VI_DestroyVipp(pCap->Dev);

}

return 0;

}

int main(int argc, char *argv[])

{

int ret = 0,result = 0;

int vipp_dev, virvi_chn;

int count = 0;

pthread_t saveFrmTrd;

printf("Sample virvi build time = %s, %s.\r\n", __DATE__, __TIME__);

/* Allocate the context */

SampleVirViContext *pContext = (SampleVirViContext*)malloc(sizeof(SampleVirViContext));

memset(pContext, 0, sizeof(SampleVirViContext));

gpSampleVirViContext = pContext;

SampleVirviCap *pCap = NULL;

/* Initialize the exit semaphore */

cdx_sem_init(&pContext->mSemExit, 0);

if (signal(SIGINT, handle_exit) == SIG_ERR)

{

aloge("fatal error! can't catch SIGINT");

}

/* 1. Parse the command line */

char *pConfigFilePath;

if (argc > 1)

{

/* parse command line param,read sample_virvi.conf */

if(ParseCmdLine(argc, argv, &pContext->mCmdLinePara) != 0)

{

aloge("fatal error! command line param is wrong, exit!");

result = -1;

goto _exit;

}

if(strlen(pContext->mCmdLinePara.mConfigFilePath) > 0)

{

pConfigFilePath = pContext->mCmdLinePara.mConfigFilePath;

}

else

{

pConfigFilePath = DEFAULT_SAMPLE_VIRVI_CONF_PATH;

}

}

else

{

pConfigFilePath = NULL;

}

/* 2. Parse the configuration file */

if(loadConfigPara(pContext, pConfigFilePath) != SUCCESS)

{

aloge("fatal error! no config file or parse conf file fail");

result = -1;

goto _exit;

}

/* 3. Initialize the capture slots and the MPP system */

initCaps(pContext);

MPP_SYS_CONF_S stConf;

memset(&stConf, 0, sizeof(MPP_SYS_CONF_S));

AW_MPI_SYS_SetConf(&stConf);

AW_MPI_SYS_Init();

/* 4. If frames are to be saved, initialize the buffer manager and start the save thread */

if (pContext->mSaveBufMgrConfig.mSavePicDev >= 0 && \

pContext->mSaveBufMgrConfig.mSavePicBufferLen > 0 && \

pContext->mSaveBufMgrConfig.mSavePicBufferNum > 0)

{

pContext->mpSaveBufMgr = initSaveBufMgr(pContext); // initialize the buffer manager

if (NULL == pContext->mpSaveBufMgr)

{

aloge("fatal error! init save csi frame mgr fail!");

goto _deinit_buf_mgr;

}

pthread_create(&saveFrmTrd, NULL, SaveCsiFrameThrad, (void *)pContext);

}

/* 5. prepare, then start, each capture in turn */

for (int i = 0; i < MAX_CAPTURE_NUM; i++)

{

pCap = &pContext->mCaps[i];

ret = prepare(pCap);

if (ret)

{

aloge("fatal error! capture[%d] prepare fail!", i);

}

}

for (int i = 0; i < MAX_CAPTURE_NUM; i++)

{

pCap = &pContext->mCaps[i];

ret = start(pCap);

if (ret)

{

aloge("fatal error! capture[%d] start fail!", i);

}

}

/* 6. Wait for the exit signal (or for the timeout) */

if (pContext->mTestDuration > 0)

{

cdx_sem_down_timedwait(&pContext->mSemExit, pContext->mTestDuration*1000); // blocks until the semaphore is posted or the timeout expires

}

else

{

cdx_sem_wait(&pContext->mSemExit); // blocks until the semaphore is posted

}

/* 7. Stop all captures */

for (int i = 0; i < MAX_CAPTURE_NUM; i++)

{

pCap = &pContext->mCaps[i];

ret = stop(pCap);

if (ret)

{

aloge("fatal error! capture[%d] stop fail!", i);

}

}

/* 8. Stop the save thread and release the buffers */

_deinit_buf_mgr:

if (pContext->mpSaveBufMgr)

{

pContext->mbSaveCsiTrdExitFlag = TRUE;

if (pContext->mpSaveBufMgr->mbTrdRunningFlag)

pthread_join(saveFrmTrd, NULL);

deinitSaveBufMgr(pContext->mpSaveBufMgr);

}

AW_MPI_SYS_Exit();

cdx_sem_deinit(&pContext->mSemExit);

free(pContext);

_exit:

alogd("%s test result: %s", argv[0], ((0 == result) ? "success" : "fail"));

return result;

}

sample_virvi.h

c

#ifndef _SAMPLE_VIRVI_H_

#define _SAMPLE_VIRVI_H_

#include <plat_type.h>

#include <tsemaphore.h>

#define MAX_FILE_PATH_SIZE (256)

#define MAX_CAPTURE_NUM (4)

typedef struct SampleVirViCmdLineParam

{

char mConfigFilePath[MAX_FILE_PATH_SIZE];

} SampleVirViCmdLineParam;

typedef struct SampleVirViConfig

{

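int AutoTestCount; // number of automatic test iterations

int GetFrameCount; // number of frames to get

int DevNum; // VIPP device number

int mIspDevNum; // ISP device number

int PicWidth; // picture width

int PicHeight; // picture height

int FrameRate; // frame rate

PIXEL_FORMAT_E PicFormat; // pixel format

int mEnableWDRMode; // whether WDR mode is enabled

enum v4l2_colorspace mColorSpace; // color space

int mViDropFrmCnt; // number of frames VI drops at start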

} SampleVirViConfig;

typedef struct SampleVirviCap

{

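BOOL mbCapValid; // whether this capture slot is valid

BOOL mbExitFlag; // set to ask the capture thread to exit

BOOL mbTrdRunning; // whether the capture thread is running

pthread_t thid; // thread ID

VI_DEV Dev; // video input (VIPP) device

ISP_DEV mIspDev; // ISP device

VI_CHN Chn; // virtual channel

int s32MilliSec; // wait time passed to AW_MPI_VI_GetFrame, in milliseconds

VIDEO_FRAME_INFO_S pstFrameInfo; // video frame information

int mRawStoreNum; //current save raw picture num

void *mpContext; //SampleVirViContext*

SampleVirViConfig mConfig; // capture configuration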

} SampleVirviCap;

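// Stores one saved video frame and its bookkeeping information

typedef struct SampleVirviSaveBufNode

{

int mId; // buffer index

int mFrmCnt; // frame counter value of the stored frame

int mDataLen; // buffer length in bytes

unsigned int mDataPhyAddr; // physical address of the buffer

void *mpDataVirAddr; // virtual address of the buffer

int mFrmLen; // length of the stored frame in bytes

SIZE_S mFrmSize; // frame dimensions

PIXEL_FORMAT_E mFrmFmt; // pixel format of the frame

void *mpCap; //SampleVirviCap*

struct list_head mList; // list linkage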

}SampleVirviSaveBufNode;

typedef struct SampleVirviSaveBufMgrConfig

{

VI_DEV mSavePicDev; // VI device whose frames are saved

int mYuvFrameCount; // number of YUV frames to save

char mYuvFile[MAX_FILE_PATH_SIZE]; // path of the output YUV file

int mRawStoreCount; // number of raw pictures to store; 0 means don't store pictures

int mRawStoreInterval; // n: store one picture out of every n pictures

char mStoreDirectory[MAX_FILE_PATH_SIZE]; // storage directory, e.g. /mnt/extsd

int mSavePicBufferNum; // number of save buffers

int mSavePicBufferLen; // length of each save buffer in bytes

}SampleVirviSaveBufMgrConfig;

typedef struct SampleVirviSaveBufMgr

{

BOOL mbTrdRunningFlag; // whether the save thread is running

struct list_head mIdleList; //SampleVirviSaveBufNode

struct list_head mReadyList; //SampleVirviSaveBufNode

pthread_mutex_t mIdleListLock; // mutex protecting mIdleList

pthread_mutex_t mReadyListLock; // mutex protecting mReadyList

SampleVirviSaveBufMgrConfig mConfig; // configuration

}SampleVirviSaveBufMgr;

typedef struct SampleVirViContext

{

SampleVirViCmdLineParam mCmdLinePara;

SampleVirviCap mCaps[MAX_CAPTURE_NUM];

int mTestDuration;

cdx_sem_t mSemExit;

BOOL mbSaveCsiTrdExitFlag;

SampleVirviSaveBufMgrConfig mSaveBufMgrConfig;

SampleVirviSaveBufMgr *mpSaveBufMgr;

} SampleVirViContext;

#endif /* _SAMPLE_VIRVI_H_ */

In the Allwinner SDK directory, set up the build environment and select the target:

shell

source build/envsetup.sh

lunch

Open the configuration menu:

shell

make menuconfig

Select the MPP sample programs, then save and exit:

shell

Allwinner --->

eyesee-mpp --->

[*] select mpp sample

Clean and build the MPP programs:

shell

cleanmpp

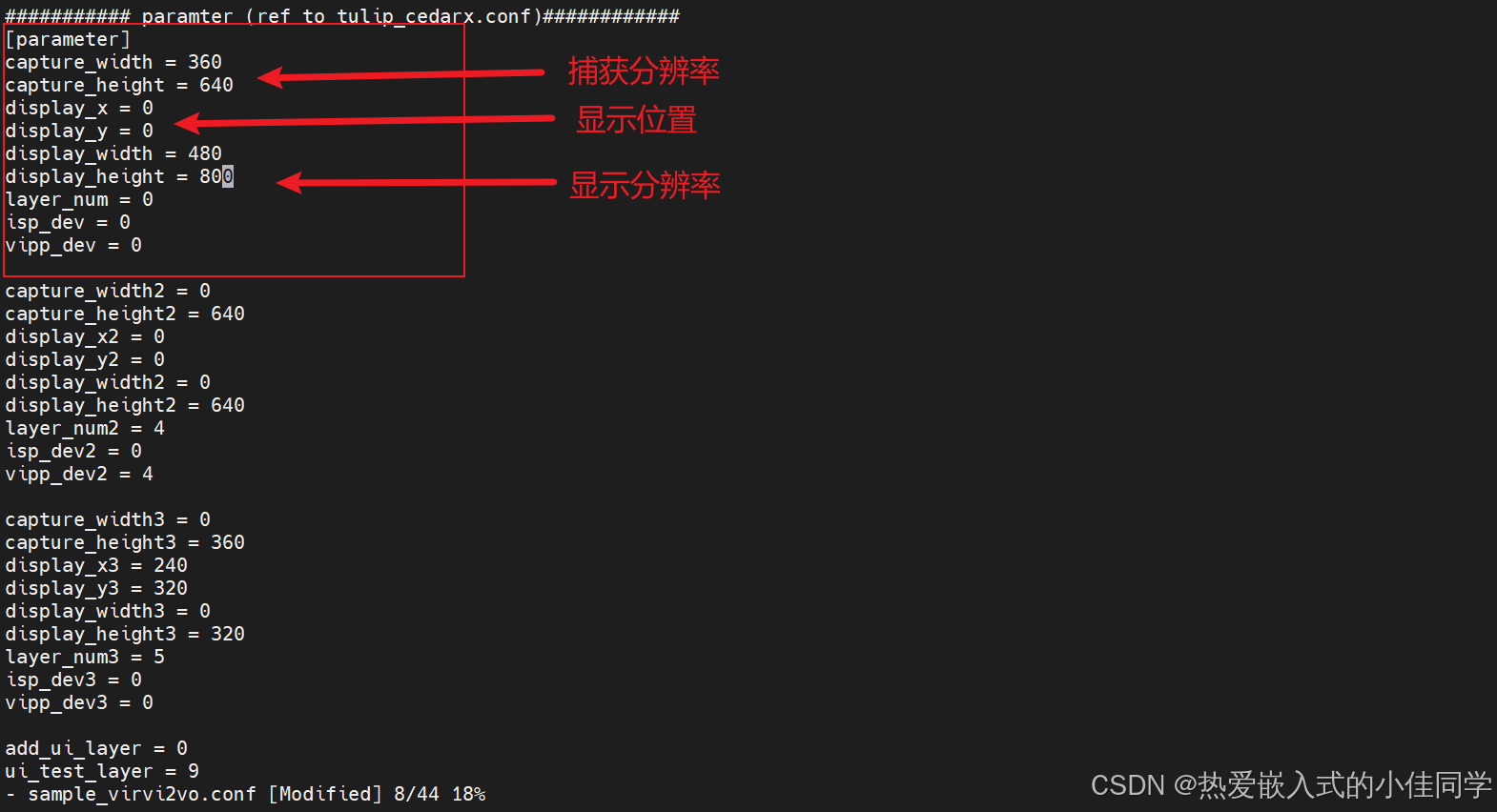

mkmpp进入目录,将编译出的文件上传到开发板:

shell

cd ~/tina-v853-100ask/external/eyesee-mpp/middleware/sun8iw21/sample/bin

adb push sample_virvi sample_virvi.conf /mnt/UDISK

Run the program on the development board:

shell

./sample_virvi -path ./sample_virvi.conf

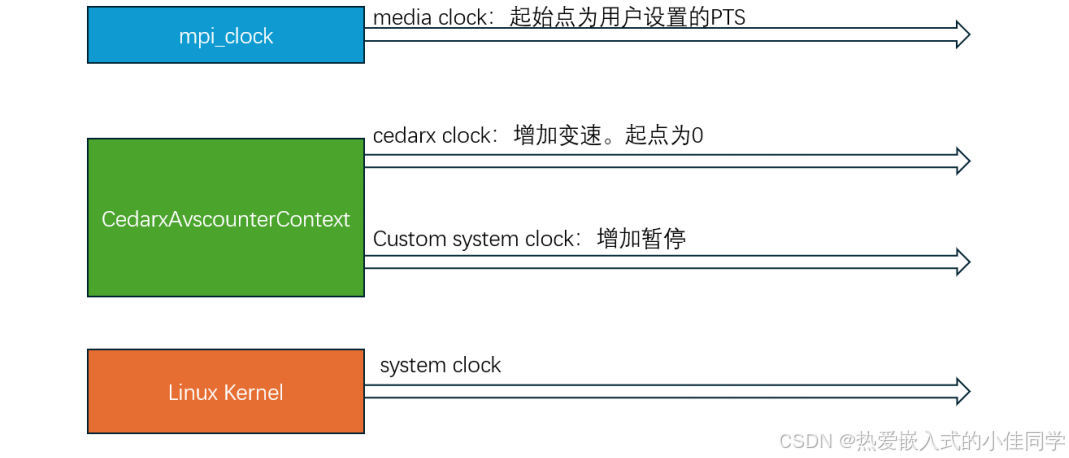
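As a quick sanity check on the saved file, its expected size can be computed from the resolution and the number of frames. The sketch below assumes an uncompressed 4:2:0 format such as NV21 (a Y plane of width*height bytes followed by width*height/2 bytes of chroma, matching the size calculation in copyVideoFrame) and no row padding. The helper names are hypothetical and are not part of the SDK.

```c
#include <assert.h>
#include <stddef.h>

/* Bytes in one uncompressed YUV 4:2:0 frame: full-size Y plane plus half-size chroma. */
size_t yuv420_frame_bytes(size_t width, size_t height)
{
    assert(width % 2 == 0 && height % 2 == 0); /* 4:2:0 needs even dimensions */
    return width * height + width * height / 2;
}

/* Expected size of a file holding frame_count such frames back to back. */
size_t yuv420_file_bytes(size_t width, size_t height, size_t frame_count)
{
    return yuv420_frame_bytes(width, height) * frame_count;
}
```

For a 1920x1080 capture, yuv420_frame_bytes(1920, 1080) gives 3110400 bytes per frame. If the file is not a whole multiple of the frame size, the buffer length or the picture format in the configuration may not match the actual stream.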

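II. Audio/Video Synchronization Principles: the Media Clock

A media clock is the reference clock used to synchronize and control the timing of audio and video signals in a multimedia system.

Its main functions are:

- Synchronizing audio and video: a multimedia system has to play audio and video in step so that sound and picture do not drift apart. For example, when a film is playing, viewers expect the dialogue they see to match the sound they hear exactly.

- Sampling and playback: audio and video are normally sampled and played back at fixed rates, and the media clock provides the reference for those rates. For example, the audio sample rate may be 44.1 kHz or 48 kHz, and the video frame rate may be 30 fps or 60 fps.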
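To make the two roles concrete, the sketch below derives presentation timestamps from a sample index and a frame index at fixed rates, and measures how far video is ahead of or behind audio. It is only an illustration under simple assumptions (constant rates, microsecond units); the function names are hypothetical and do not come from the MPP API.

```c
#include <assert.h>
#include <stdint.h>

/* Presentation time in microseconds of the n-th audio sample at a fixed sample rate. */
int64_t audio_pts_us(int64_t sample_index, int sample_rate_hz)
{
    assert(sample_rate_hz > 0);
    return sample_index * 1000000LL / sample_rate_hz;
}

/* Presentation time in microseconds of the n-th video frame at a fixed frame rate. */
int64_t video_pts_us(int64_t frame_index, int fps)
{
    assert(fps > 0);
    return frame_index * 1000000LL / fps;
}

/* Positive result: video is ahead of audio; negative: video lags behind audio. */
int64_t av_drift_us(int64_t video_pts, int64_t audio_pts)
{
    return video_pts - audio_pts;
}
```

A player would compare both timestamps against the same media clock and delay or drop video frames when the drift grows beyond a chosen threshold.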

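1. Media synchronization on the Allwinner platform

On the Allwinner MPP platform, the media clock usually refers to the clock used to synchronize audio and video playback. Its main job is to make sure audio and video data are delivered and played at the correct rate and interval, avoiding audio/video desynchronization and stutter.

During playback, the media clock is kept in sync with the other clock sources (for example, the audio decoder and the video decoder) so that the whole system runs correctly.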

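system clock: the system clock, obtained with clock_gettime(CLOCK_MONOTONIC, &t). It is a monotonic clock that counts the time elapsed since the hardware started. It does not jump when the wall-clock time is changed and it keeps running regardless of playback state, which makes it a stable time base.

custom system clock: a customized system clock. It is based on the system clock but adds the notion of pausing: when playback is paused, the custom system clock stops counting and records how long the pause lasted. The system clock and the custom system clock therefore share the same start time and differ only by the accumulated pause time.

cedarx clock: the cedarx clock, based on the custom system clock, with a start time of 0. It also applies a speed factor, so the cedarx clock is a variable-speed clock, which is essential for A/V synchronization.

media clock: the media clock, based on the cedarx clock. The only difference is the start time: the media clock starts at the pts of the first video or audio frame set by the upper-layer component, whereas the cedarx clock starts at 0. The media clock is therefore also a variable-speed clock, and its speed always equals that of the cedarx clock.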
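The layering of the four clocks can be sketched as follows. This is a simplified model built only from the description above, not the MPP or cedarx implementation: the SketchClock structure and all function names are hypothetical, and the speed is assumed to stay constant after the clock starts (a real implementation would have to re-base the clock whenever the speed changes).

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <stdint.h>
#include <time.h>

/* Hypothetical state for the layered clocks (not an SDK structure). */
typedef struct {
    int64_t start_us;        /* custom system clock value when playback started */
    int64_t paused_total_us; /* accumulated pause duration */
    double  speed;           /* playback rate, 1.0 = normal speed */
    int64_t first_pts_us;    /* pts of the first audio or video frame */
} SketchClock;

/* system clock: monotonic time in microseconds. */
int64_t system_clock_us(void)
{
    struct timespec t;
    clock_gettime(CLOCK_MONOTONIC, &t);
    return (int64_t)t.tv_sec * 1000000LL + t.tv_nsec / 1000;
}

/* custom system clock: the system clock minus the time spent paused. */
int64_t custom_system_clock_us(const SketchClock *c, int64_t now_sys_us)
{
    return now_sys_us - c->paused_total_us;
}

/* cedarx clock: starts at 0 and scales the elapsed time by the speed factor. */
int64_t cedarx_clock_us(const SketchClock *c, int64_t now_sys_us)
{
    int64_t elapsed = custom_system_clock_us(c, now_sys_us) - c->start_us;
    assert(c->speed > 0.0);
    return (int64_t)(elapsed * c->speed);
}

/* media clock: same rate as the cedarx clock, offset by the first pts. */
int64_t media_clock_us(const SketchClock *c, int64_t now_sys_us)
{
    return c->first_pts_us + cedarx_clock_us(c, now_sys_us);
}
```

In use, a renderer would call media_clock_us(&clk, system_clock_us()) and show a frame once its pts is less than or equal to the returned value.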

III. Video Output Basics

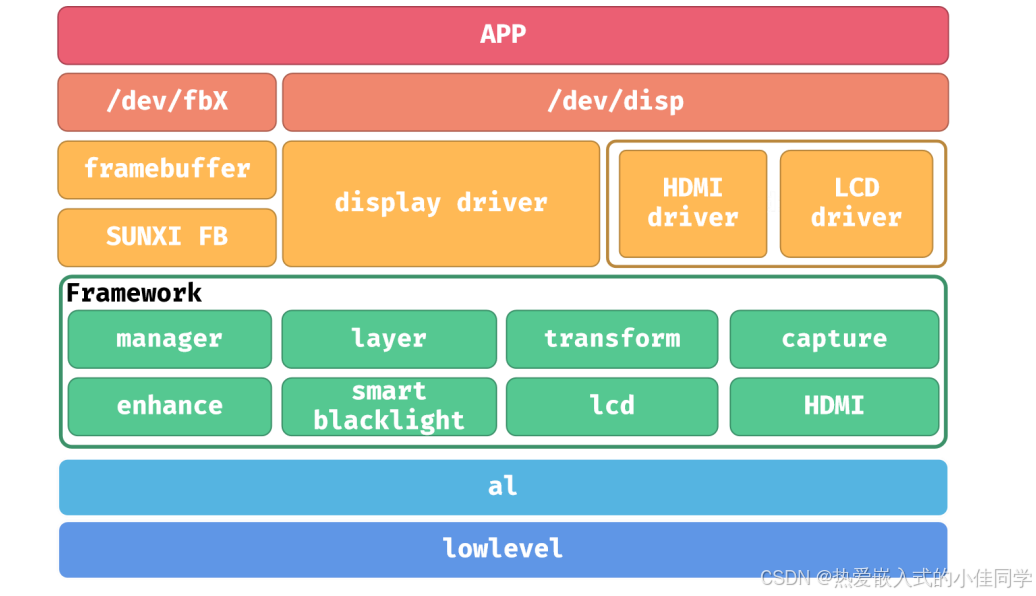

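1. LCD driver

The display driver can be divided into three levels: the driver layer, the framework layer, and the low-level layer.

- The low-level layer talks to the graphics hardware. It converts the functional parameters configured by the upper layers into the parameters the hardware needs and writes them to the corresponding registers.

- The display framework layer abstracts the low-level layer and wraps it into individual functional modules.

- The driver layer exposes the functional interfaces, providing device nodes and a unified interface to user space through the kernel.

The driver layer consists of three drivers: the framebuffer driver, the display driver, and the LCD & HDMI driver.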

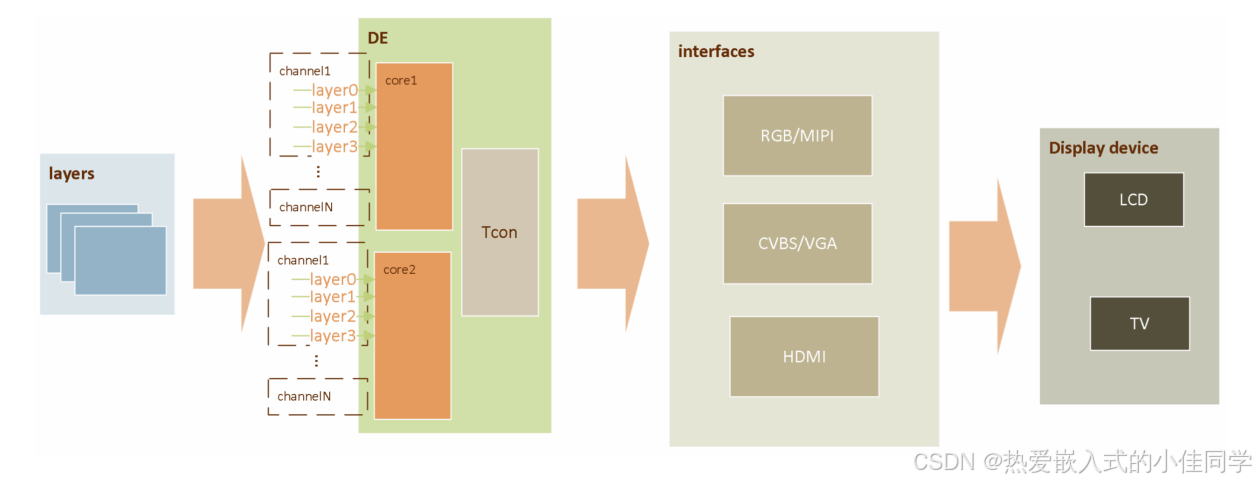

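2. Video output module block diagram

As shown in the block diagram above, the video output module consists of the display engine (DE) and controllers of various types (tcon).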

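Input layers are processed for display in the DE and then output through one or more interfaces to the display devices, so that the layers rendered by many applications are composited and presented to the user. The DE has 2 independent units (de0 and de1 for short). Each can accept user-supplied layers, composite them, and output to a different display, which enables dual display.

Each independent DE unit has 1 to 4 channels (typically 4 for de0 and 2 for de1), and each channel can process 4 layers of the same format at the same time.

The sunxi platform distinguishes video channels from UI channels. Video channels are more capable and support both YUV and RGB layers; UI channels support RGB layers only.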
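Since each channel handles up to 4 layers, a (channel, layer) pair can be packed into a single integer handle. The sketch below shows one way to do this, assuming the handle is channel * 4 + layer; the SDK's own HLAY() macro in vo/hwdisplay.h may be defined differently, so the helpers here use deliberately different, hypothetical names.

```c
#include <assert.h>

#define SKETCH_LAYERS_PER_CHN 4 /* assumption: 4 layers per channel, as described above */

/* Pack a channel index and a layer index into one handle. */
int sketch_layer_handle(int chn, int layer)
{
    assert(chn >= 0);
    assert(layer >= 0 && layer < SKETCH_LAYERS_PER_CHN);
    return chn * SKETCH_LAYERS_PER_CHN + layer;
}

/* Recover the channel index from a handle. */
int sketch_handle_to_chn(int hlay)
{
    return hlay / SKETCH_LAYERS_PER_CHN;
}

/* Recover the layer index within its channel from a handle. */
int sketch_handle_to_layer(int hlay)
{
    return hlay % SKETCH_LAYERS_PER_CHN;
}
```

Under this assumption, layer 0 of channel 2 maps to handle 8, and the two inverse helpers recover the original pair.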

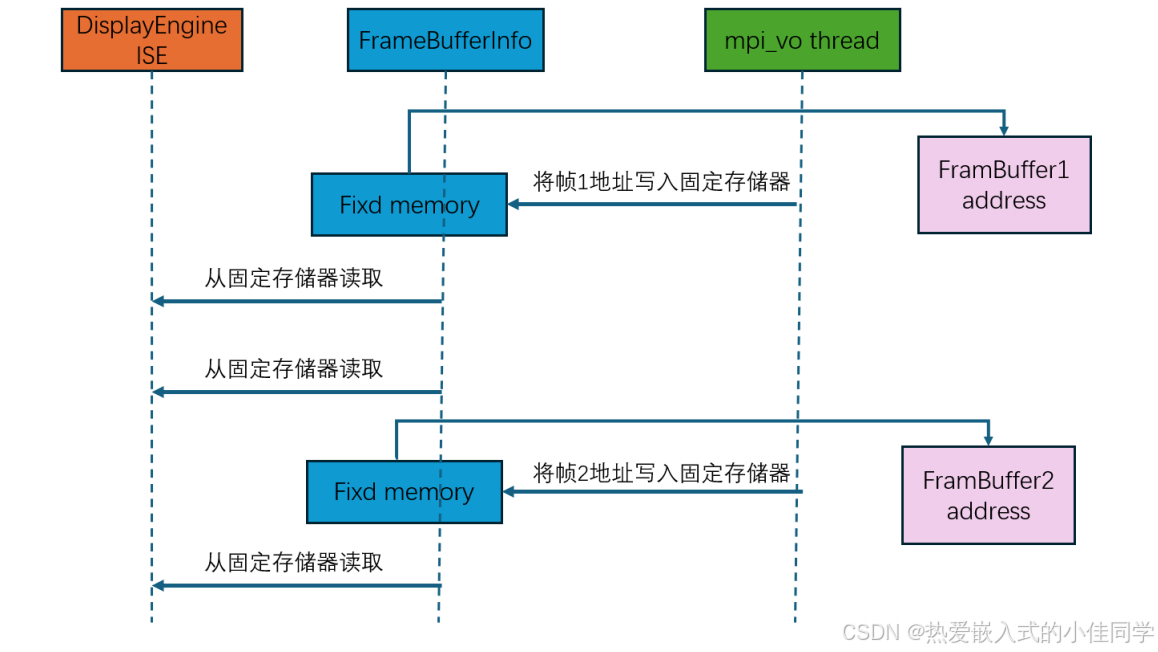

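3. VO component: the Display Engine (DE)

The DE driver in the kernel reads the frame's display information (start address, display and effect parameters) from a designated address inside its interrupt handler.

Each time the DE interrupt fires, the driver fetches the frame information from that designated memory and configures the registers accordingly. The contents of that memory are filled in by software, namely by the mpi_vo thread, which writes the start address and the display information.

To keep the display smooth and avoid tearing or other display problems, double buffering is commonly used. Double buffering means preparing two frames at the same time: one is being displayed and the other is for the next display. When one frame finishes displaying, the driver can switch straight to the next one without waiting for new content to be prepared, which improves display efficiency and smoothness.

For double buffering to work properly, the frame rate has to be kept within a suitable range, so that the interval between frames is longer than the screen's refresh interval. This ensures the next frame is ready by the time the current one finishes displaying, giving continuous, smooth output. If the frame rate is too high, content may not be ready in time, causing tearing or stutter; if it is too low, the picture may stutter or flicker.

With a sensibly controlled frame rate and double buffering, the stability and smoothness of the display can be improved effectively.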
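The buffer switch described above can be sketched in a few lines. This is a generic model of double buffering, not the DE driver's code: the structure, the function names, and the idea of calling sketch_on_vsync once per display interrupt are all assumptions made for illustration.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint32_t addr[2];   /* start addresses of the two frame buffers (placeholders) */
    int      front;     /* index of the buffer currently being displayed */
    int      back_ready;/* 1 when the back buffer holds a complete new frame */
} SketchDoubleBuffer;

/* Producer side: index of the buffer that may be filled with the next frame. */
int sketch_back_index(const SketchDoubleBuffer *db)
{
    return 1 - db->front;
}

/* Producer side: mark the back buffer as complete. */
void sketch_submit(SketchDoubleBuffer *db)
{
    db->back_ready = 1;
}

/* Display side, once per refresh: swap if a new frame is ready, otherwise
 * keep showing the current one. Returns the index to display. */
int sketch_on_vsync(SketchDoubleBuffer *db)
{
    if (db->back_ready) {
        db->front = 1 - db->front;
        db->back_ready = 0;
    }
    return db->front;
}
```

Because the swap only happens at a refresh boundary and only when the back buffer is complete, the display never reads a half-written frame; when no new frame has been submitted, the previous frame is simply shown again.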

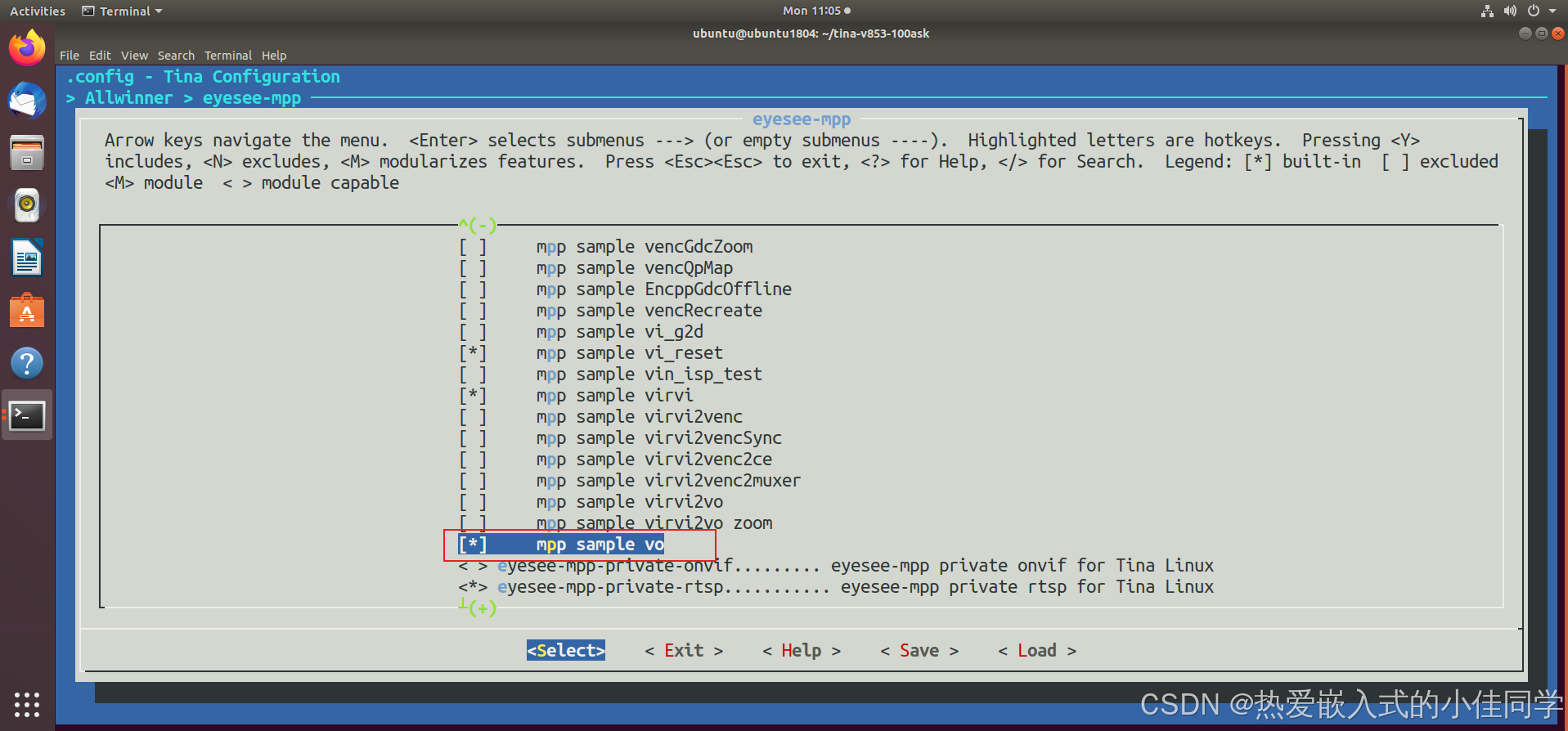

4. Allwinner video output component usage examples

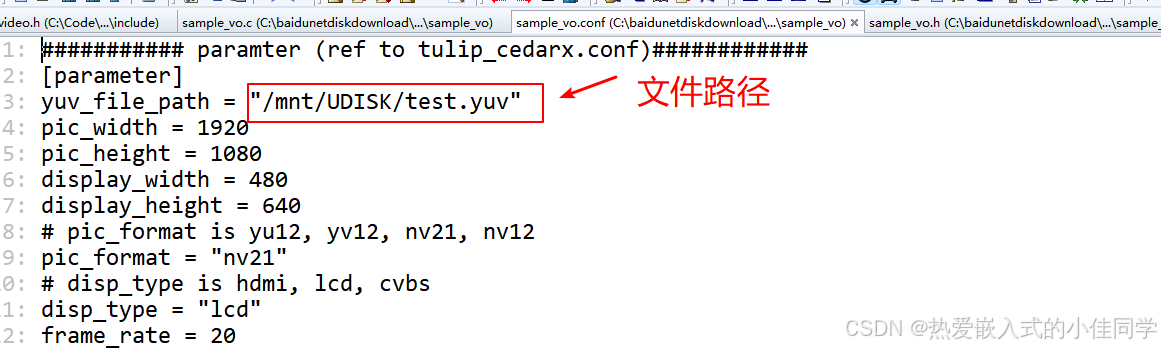

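4.1 Displaying a video file in real time

Example: read video frames from a raw YUV data file xxx.yuv, stamp each one with a timestamp, and send them to the mpi_vo component for display.

Steps:

- Initialize the MPP system

- Initialize the frame manager

- Open the VO device

- Request a video layer

- Set the public attributes

- Create the VO channel

- Create the Clock channel

- Bind Clock to VO

- Start Clock and VO

- Read YUV frames and send them for display

Walkthrough of the example code: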

sample_vo.c

c

#define LOG_TAG "sample_vo"

#include <utils/plat_log.h>

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <string.h>

#include <pthread.h>

#include <mm_common.h>

#include <vo/hwdisplay.h>

#include <mpi_sys.h>

#include <mpi_vo.h>

#include <ClockCompPortIndex.h>

#include <confparser.h>

#include "sample_vo_config.h"

#include "sample_vo.h"

#include <cdx_list.h>

/* Look up the first idle frame in the idle list (the node is not removed here) */

VIDEO_FRAME_INFO_S* SampleVOFrameManager_PrefetchFirstIdleFrame(void *pThiz)

{

SampleVOFrameManager *pFrameManager = (SampleVOFrameManager*)pThiz;

SampleVOFrameNode *pFirstNode;

VIDEO_FRAME_INFO_S *pFrameInfo = NULL;

pthread_mutex_lock(&pFrameManager->mLock);

if (!list_empty(&pFrameManager->mIdleList))

{

pFirstNode = list_first_entry(&pFrameManager->mIdleList, SampleVOFrameNode, mList);

pFrameInfo = &pFirstNode->mFrame;

}

pthread_mutex_unlock(&pFrameManager->mLock);

return pFrameInfo;

}

/* Move the first frame of the idle list to the "in use" list */

int SampleVOFrameManager_UseFrame(void *pThiz, VIDEO_FRAME_INFO_S *pFrame)

{

int ret = 0;

SampleVOFrameManager *pFrameManager = (SampleVOFrameManager*)pThiz;

if (!pFrame)

{

aloge("fatal error! pFrame == NULL!");

return -1;

}

pthread_mutex_lock(&pFrameManager->mLock);

SampleVOFrameNode *pFirstNode = list_first_entry_or_null(&pFrameManager->mIdleList, SampleVOFrameNode, mList);

if (pFirstNode && &pFirstNode->mFrame == pFrame)

{

list_move_tail(&pFirstNode->mList, &pFrameManager->mUsingList);

}

else

{

aloge("fatal error! node not match [%p]!=[%p]", pFrame, pFirstNode ? &pFirstNode->mFrame : NULL);

ret = -1;

}

pthread_mutex_unlock(&pFrameManager->mLock);

return ret;

}

/* Move the frame with the given ID from the "in use" list back to the idle list */

int SampleVOFrameManager_ReleaseFrame(void *pThiz, unsigned int nFrameId)

{

int ret = 0;

SampleVOFrameManager *pFrameManager = (SampleVOFrameManager*)pThiz;

pthread_mutex_lock(&pFrameManager->mLock);

int bFindFlag = 0;

SampleVOFrameNode *pEntry, *pTmp;

list_for_each_entry_safe(pEntry, pTmp, &pFrameManager->mUsingList, mList)

{

if (pEntry->mFrame.mId == nFrameId)

{

list_move_tail(&pEntry->mList, &pFrameManager->mIdleList);

bFindFlag = 1;

break;

}

}

if (!bFindFlag)

{

aloge("fatal error! frameId[%d] not found", nFrameId);

ret = -1;

}

pthread_mutex_unlock(&pFrameManager->mLock);

return ret;

}

/* Initialize the frame manager: allocate nFrameNum frame nodes */

int initSampleVOFrameManager(SampleVOFrameManager *pFrameManager, int nFrameNum, SampleVOConfig *pConfigPara)

{

memset(pFrameManager, 0, sizeof(SampleVOFrameManager));

if (pthread_mutex_init(&pFrameManager->mLock, NULL))

{

aloge("fatal error! pthread mutex init fail!");

return -1;

}

INIT_LIST_HEAD(&pFrameManager->mIdleList);

INIT_LIST_HEAD(&pFrameManager->mUsingList);

unsigned int nFrameSize;

switch (pConfigPara->mPicFormat)

{

case MM_PIXEL_FORMAT_YUV_PLANAR_420:

case MM_PIXEL_FORMAT_YVU_PLANAR_420:

case MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420:

case MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420:

nFrameSize = pConfigPara->mPicWidth * pConfigPara->mPicHeight * 3 / 2;

break;

default:

aloge("fatal error! unknown pixel format[0x%x]", pConfigPara->mPicFormat);

nFrameSize = pConfigPara->mPicWidth * pConfigPara->mPicHeight;

break;

}

for (int i = 0; i < nFrameNum; ++i)

{

SampleVOFrameNode *pNode = (SampleVOFrameNode*)malloc(sizeof(SampleVOFrameNode));

memset(pNode, 0, sizeof(SampleVOFrameNode));

pNode->mFrame.mId = i;

unsigned int phyAddr;

void *virAddr;

AW_MPI_SYS_MmzAlloc_Cached(&phyAddr, &virAddr, nFrameSize);

pNode->mFrame.VFrame.mPhyAddr[0] = phyAddr;

pNode->mFrame.VFrame.mPhyAddr[1] = phyAddr + pConfigPara->mPicWidth * pConfigPara->mPicHeight;

pNode->mFrame.VFrame.mPhyAddr[2] = phyAddr + pConfigPara->mPicWidth * pConfigPara->mPicHeight * 5 / 4;

pNode->mFrame.VFrame.mpVirAddr[0] = virAddr;

pNode->mFrame.VFrame.mpVirAddr[1] = virAddr + pConfigPara->mPicWidth * pConfigPara->mPicHeight;

pNode->mFrame.VFrame.mpVirAddr[2] = virAddr + pConfigPara->mPicWidth * pConfigPara->mPicHeight * 5 / 4;

pNode->mFrame.VFrame.mWidth = pConfigPara->mPicWidth;

pNode->mFrame.VFrame.mHeight = pConfigPara->mPicHeight;

pNode->mFrame.VFrame.mField = VIDEO_FIELD_FRAME;

pNode->mFrame.VFrame.mPixelFormat = pConfigPara->mPicFormat;

pNode->mFrame.VFrame.mVideoFormat = VIDEO_FORMAT_LINEAR;

pNode->mFrame.VFrame.mCompressMode = COMPRESS_MODE_NONE;

pNode->mFrame.VFrame.mOffsetTop = 0;

pNode->mFrame.VFrame.mOffsetBottom = pConfigPara->mPicHeight;

pNode->mFrame.VFrame.mOffsetLeft = 0;

pNode->mFrame.VFrame.mOffsetRight = pConfigPara->mPicWidth;

list_add_tail(&pNode->mList, &pFrameManager->mIdleList);

}

pFrameManager->mNodeCnt = nFrameNum;

/* Register the function pointers */

pFrameManager->PrefetchFirstIdleFrame = SampleVOFrameManager_PrefetchFirstIdleFrame;

pFrameManager->UseFrame = SampleVOFrameManager_UseFrame;

pFrameManager->ReleaseFrame = SampleVOFrameManager_ReleaseFrame;

return 0;

}

/* Destroy the frame manager: free all frame nodes and the lock */

int destroySampleVOFrameManager(SampleVOFrameManager *pFrameManager)

{

if (!list_empty(&pFrameManager->mUsingList))

aloge("fatal error! using list not empty");

int cnt = 0;

struct list_head *pList;

list_for_each(pList, &pFrameManager->mIdleList) cnt++;

if (cnt != pFrameManager->mNodeCnt)

aloge("fatal error! frame count mismatch [%d]!=[%d]", cnt, pFrameManager->mNodeCnt);

SampleVOFrameNode *pEntry, *pTmp;

list_for_each_entry_safe(pEntry, pTmp, &pFrameManager->mIdleList, mList)

{

AW_MPI_SYS_MmzFree(pEntry->mFrame.VFrame.mPhyAddr[0], pEntry->mFrame.VFrame.mpVirAddr[0]);

list_del(&pEntry->mList);

free(pEntry);

}

pthread_mutex_destroy(&pFrameManager->mLock);

return 0;

}

/* Initialize the global VO context */

int initSampleVOContext(SampleVOContext *pContext)

{

memset(pContext, 0, sizeof(SampleVOContext));

pthread_mutex_init(&pContext->mWaitFrameLock, NULL);

cdx_sem_init(&pContext->mSemFrameCome, 0);

pContext->mUILayer = HLAY(2, 0); /* UI layer */

return 0;

}

int destroySampleVOContext(SampleVOContext *pContext)

{

pthread_mutex_destroy(&pContext->mWaitFrameLock);

cdx_sem_deinit(&pContext->mSemFrameCome);

return 0;

}

/* VO event callback: handles frame release, display size changes, rendering start, etc. */

static ERRORTYPE SampleVOCallbackWrapper(void *cookie, MPP_CHN_S *pChn, MPP_EVENT_TYPE event, void *pEventData)

{

SampleVOContext *pContext = (SampleVOContext*)cookie;

if (MOD_ID_VOU != pChn->mModId || pChn->mChnId != pContext->mVOChn)

{

aloge("fatal error! VO chnId[%d]!=[%d]", pChn->mChnId, pContext->mVOChn);

return ERR_VO_ILLEGAL_PARAM;

}

switch (event)

{

case MPP_EVENT_RELEASE_VIDEO_BUFFER:

{

VIDEO_FRAME_INFO_S *pFrame = (VIDEO_FRAME_INFO_S*)pEventData;

pContext->mFrameManager.ReleaseFrame(&pContext->mFrameManager, pFrame->mId);

pthread_mutex_lock(&pContext->mWaitFrameLock);

if (pContext->mbWaitFrameFlag)

{

pContext->mbWaitFrameFlag = 0;

cdx_sem_up(&pContext->mSemFrameCome);

}

pthread_mutex_unlock(&pContext->mWaitFrameLock);

break;

}

case MPP_EVENT_SET_VIDEO_SIZE:

{

SIZE_S *pDispSize = (SIZE_S*)pEventData;

alogd("vo report display size[%dx%d]", pDispSize->Width, pDispSize->Height);

break;

}

case MPP_EVENT_RENDERING_START:

alogd("vo report rendering start");

break;

default:

aloge("unknown event[0x%x]", event);

return ERR_VO_ILLEGAL_PARAM;

}

return SUCCESS;

}

/* Placeholder Clock event callback */

static ERRORTYPE SampleVO_CLOCKCallbackWrapper(void *cookie, MPP_CHN_S *pChn, MPP_EVENT_TYPE event, void *pEventData)

{

alogw("clock chn[%d] event[0x%x]", pChn->mChnId, event);

return SUCCESS;

}

/* Parse the command line: only -path xxx.conf (and -h for help) is supported */

static int ParseCmdLine(int argc, char **argv, SampleVOCmdLineParam *pCmdLinePara)

{

alogd("sample vo path:[%s], arg number is [%d]", argv[0], argc);

memset(pCmdLinePara, 0, sizeof(SampleVOCmdLineParam));

int i = 1, ret = 0;

while (i < argc)

{

if (!strcmp(argv[i], "-path"))

{

if (++i >= argc)

{

aloge("fatal error! use -h to learn how to set parameter!!!");

ret = -1;

break;

}

if (strlen(argv[i]) >= MAX_FILE_PATH_SIZE)

{

aloge("file path too long!");

ret = -1;

break;

}

strncpy(pCmdLinePara->mConfigFilePath, argv[i], MAX_FILE_PATH_SIZE - 1);

pCmdLinePara->mConfigFilePath[MAX_FILE_PATH_SIZE - 1] = '\0';

}

else if (!strcmp(argv[i], "-h"))

{

printf("CmdLine param:\n\t-path /home/sample_vo.conf\n");

ret = 1;

break;

}

else

{

alogd("ignore invalid param[%s]", argv[i]);

}

++i;

}

return ret;

}

/* Read the configuration file into SampleVOConfig */

static ERRORTYPE loadSampleVOConfig(SampleVOConfig *pConfig, const char *conf_path)

{

CONFPARSER_S stConfParser;

if (createConfParser(conf_path, &stConfParser) < 0)

{

aloge("load conf fail");

return FAILURE;

}

memset(pConfig, 0, sizeof(SampleVOConfig));

char *ptr = (char*)GetConfParaString(&stConfParser, SAMPLE_VO_KEY_YUV_FILE_PATH, NULL);

strncpy(pConfig->mYuvFilePath, ptr, MAX_FILE_PATH_SIZE - 1);

pConfig->mPicWidth = GetConfParaInt(&stConfParser, SAMPLE_VO_KEY_PIC_WIDTH, 0);

pConfig->mPicHeight = GetConfParaInt(&stConfParser, SAMPLE_VO_KEY_PIC_HEIGHT, 0);

pConfig->mDisplayWidth = GetConfParaInt(&stConfParser, SAMPLE_VO_KEY_DISPLAY_WIDTH, 0);

pConfig->mDisplayHeight = GetConfParaInt(&stConfParser, SAMPLE_VO_KEY_DISPLAY_HEIGHT, 0);

ptr = (char*)GetConfParaString(&stConfParser, SAMPLE_VO_KEY_PIC_FORMAT, NULL);

if (!strcmp(ptr, "yu12")) pConfig->mPicFormat = MM_PIXEL_FORMAT_YUV_PLANAR_420;

else if (!strcmp(ptr, "yv12")) pConfig->mPicFormat = MM_PIXEL_FORMAT_YVU_PLANAR_420;

else if (!strcmp(ptr, "nv21")) pConfig->mPicFormat = MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420;

else if (!strcmp(ptr, "nv12")) pConfig->mPicFormat = MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420;

else

{

aloge("unknown pic_format[%s], use yu12", ptr);

pConfig->mPicFormat = MM_PIXEL_FORMAT_YUV_PLANAR_420;

}

ptr = (char*)GetConfParaString(&stConfParser, SAMPLE_VO_KEY_DISP_TYPE, NULL);

if (!strcmp(ptr, "hdmi")) { pConfig->mDispType = VO_INTF_HDMI; pConfig->mDispSync = VO_OUTPUT_1080P25; }

else if (!strcmp(ptr, "lcd")) { pConfig->mDispType = VO_INTF_LCD; pConfig->mDispSync = VO_OUTPUT_NTSC; }

else if (!strcmp(ptr, "cvbs")) { pConfig->mDispType = VO_INTF_CVBS; pConfig->mDispSync = VO_OUTPUT_NTSC; }

pConfig->mFrameRate = GetConfParaInt(&stConfParser, SAMPLE_VO_KEY_FRAME_RATE, 0);

destroyConfParser(&stConfParser);

return SUCCESS;

}

int main(int argc, char *argv[])

{

int result = 0;

printf("sample_vo running!\n");

SampleVOContext stContext;

initSampleVOContext(&stContext);

/* 1. Parse the command line */

if (ParseCmdLine(argc, argv, &stContext.mCmdLinePara) != 0)

{

aloge("fatal error! command line param wrong, exit!");

result = -1;

goto _exit;

}

char *pConfigFilePath = strlen(stContext.mCmdLinePara.mConfigFilePath) ?

stContext.mCmdLinePara.mConfigFilePath :

DEFAULT_SAMPLE_VO_CONF_PATH;

/* 2. Load the configuration file */

if (loadSampleVOConfig(&stContext.mConfigPara, pConfigFilePath) != SUCCESS)

{

aloge("fatal error! no config file or parse fail");

result = -1;

goto _exit;

}

/* 3. Open the YUV file */

stContext.mFpYuvFile = fopen(stContext.mConfigPara.mYuvFilePath, "rb");

if (!stContext.mFpYuvFile)

{

aloge("fatal error! can't open yuv file[%s]", stContext.mConfigPara.mYuvFilePath);

result = -1;

goto _exit;

}

/* 4. Initialize the MPP system */

stContext.mSysConf.nAlignWidth = 32;

AW_MPI_SYS_SetConf(&stContext.mSysConf);

AW_MPI_SYS_Init();

/* 5. Initialize the frame manager */

initSampleVOFrameManager(&stContext.mFrameManager, 5, &stContext.mConfigPara);

/* 6. Open the VO device */

stContext.mVoDev = 0;

AW_MPI_VO_Enable(stContext.mVoDev);

AW_MPI_VO_AddOutsideVideoLayer(stContext.mUILayer);

AW_MPI_VO_CloseVideoLayer(stContext.mUILayer);

/* 7. Request a video layer */

int hlay0 = 0;

while (hlay0 < VO_MAX_LAYER_NUM)

{

if (AW_MPI_VO_EnableVideoLayer(hlay0) == SUCCESS) break;

++hlay0;

}

if (hlay0 >= VO_MAX_LAYER_NUM)

{

aloge("fatal error! enable video layer fail!");

result = -1;

goto _exit;

}

/* 8. Set the public attributes */

VO_PUB_ATTR_S stPubAttr;

AW_MPI_VO_GetPubAttr(stContext.mVoDev, &stPubAttr);

stPubAttr.enIntfType = stContext.mConfigPara.mDispType;

stPubAttr.enIntfSync = stContext.mConfigPara.mDispSync;

AW_MPI_VO_SetPubAttr(stContext.mVoDev, &stPubAttr);

stContext.mVoLayer = hlay0;

AW_MPI_VO_GetVideoLayerAttr(stContext.mVoLayer, &stContext.mLayerAttr);

stContext.mLayerAttr.stDispRect.X = 0;

stContext.mLayerAttr.stDispRect.Y = 0;

stContext.mLayerAttr.stDispRect.Width = stContext.mConfigPara.mDisplayWidth;

stContext.mLayerAttr.stDispRect.Height = stContext.mConfigPara.mDisplayHeight;

AW_MPI_VO_SetVideoLayerAttr(stContext.mVoLayer, &stContext.mLayerAttr);

/* 9. Create the VO channel */

ERRORTYPE ret;

BOOL bSuccess = FALSE;

stContext.mVOChn = 0;

while (stContext.mVOChn < VO_MAX_CHN_NUM)

{

ret = AW_MPI_VO_CreateChn(stContext.mVoLayer, stContext.mVOChn);

if (ret == SUCCESS) { bSuccess = TRUE; break; }

else if (ret == ERR_VO_CHN_NOT_DISABLE) ++stContext.mVOChn;

else break;

}

if (!bSuccess)

{

stContext.mVOChn = MM_INVALID_CHN;

aloge("create vo channel fail!");

result = -1;

goto _exit;

}

MPPCallbackInfo cbInfo = { .cookie = &stContext, .callback = SampleVOCallbackWrapper };

AW_MPI_VO_RegisterCallback(stContext.mVoLayer, stContext.mVOChn, &cbInfo);

AW_MPI_VO_SetChnDispBufNum(stContext.mVoLayer, stContext.mVOChn, 2);

/* 10. Create the Clock channel */

bSuccess = FALSE;

stContext.mClockChnAttr.nWaitMask = 1 << CLOCK_PORT_INDEX_VIDEO;

stContext.mClockChn = 0;

while (stContext.mClockChn < CLOCK_MAX_CHN_NUM)

{

ret = AW_MPI_CLOCK_CreateChn(stContext.mClockChn, &stContext.mClockChnAttr);

if (ret == SUCCESS) { bSuccess = TRUE; break; }

else if (ret == ERR_CLOCK_EXIST) ++stContext.mClockChn;

else break;

}

if (!bSuccess)

{

stContext.mClockChn = MM_INVALID_CHN;

aloge("create clock channel fail!");

result = -1;

goto _exit;

}

cbInfo.callback = SampleVO_CLOCKCallbackWrapper;

AW_MPI_CLOCK_RegisterCallback(stContext.mClockChn, &cbInfo);

/* 11. Bind Clock -> VO */

MPP_CHN_S ClockChn = { MOD_ID_CLOCK, 0, stContext.mClockChn };

MPP_CHN_S VOChn = { MOD_ID_VOU, stContext.mVoLayer, stContext.mVOChn };

AW_MPI_SYS_Bind(&ClockChn, &VOChn);

/* 12. Start the Clock and VO channels */

AW_MPI_CLOCK_Start(stContext.mClockChn);

AW_MPI_VO_StartChn(stContext.mVoLayer, stContext.mVOChn);

/* 13. Read YUV frames from the file and send them to the display */

uint64_t nPts = 0;

uint64_t nFrameInterval = 1000000 / stContext.mConfigPara.mFrameRate; // frame interval in microseconds

int nFrameSize;

switch (stContext.mConfigPara.mPicFormat)

{

case MM_PIXEL_FORMAT_YUV_PLANAR_420:

case MM_PIXEL_FORMAT_YVU_PLANAR_420:

case MM_PIXEL_FORMAT_YUV_SEMIPLANAR_420:

case MM_PIXEL_FORMAT_YVU_SEMIPLANAR_420:

nFrameSize = stContext.mConfigPara.mPicWidth * stContext.mConfigPara.mPicHeight * 3 / 2;

break;

default:

nFrameSize = stContext.mConfigPara.mPicWidth * stContext.mConfigPara.mPicHeight;

break;

}

VIDEO_FRAME_INFO_S *pFrameInfo;

while (1)

{

pFrameInfo = stContext.mFrameManager.PrefetchFirstIdleFrame(&stContext.mFrameManager);

if (!pFrameInfo)

{

pthread_mutex_lock(&stContext.mWaitFrameLock);

pFrameInfo = stContext.mFrameManager.PrefetchFirstIdleFrame(&stContext.mFrameManager);

if (!pFrameInfo)

{

stContext.mbWaitFrameFlag = 1;

pthread_mutex_unlock(&stContext.mWaitFrameLock);

cdx_sem_down_timedwait(&stContext.mSemFrameCome, 500);

continue;

}

pthread_mutex_unlock(&stContext.mWaitFrameLock);

}

AW_MPI_SYS_MmzFlushCache(pFrameInfo->VFrame.mPhyAddr[0], pFrameInfo->VFrame.mpVirAddr[0], nFrameSize);

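if (fread(pFrameInfo->VFrame.mpVirAddr[0], 1, nFrameSize, stContext.mFpYuvFile) < (size_t)nFrameSize)

{

/* short read: either end of file or a read error; stop feeding frames in both cases */

if (feof(stContext.mFpYuvFile)) alogd("read file finish!");

else aloge("read yuv file fail!");

break;

}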

pFrameInfo->VFrame.mpts = nPts;

nPts += nFrameInterval;

AW_MPI_SYS_MmzFlushCache(pFrameInfo->VFrame.mPhyAddr[0], pFrameInfo->VFrame.mpVirAddr[0], nFrameSize);

stContext.mFrameManager.UseFrame(&stContext.mFrameManager, pFrameInfo);

if (AW_MPI_VO_SendFrame(stContext.mVoLayer, stContext.mVOChn, pFrameInfo, 0) != SUCCESS)

{

stContext.mFrameManager.ReleaseFrame(&stContext.mFrameManager, pFrameInfo->mId);

}

}

/* 14. Release resources */

AW_MPI_VO_StopChn(stContext.mVoLayer, stContext.mVOChn);

AW_MPI_CLOCK_Stop(stContext.mClockChn);

AW_MPI_VO_DestroyChn(stContext.mVoLayer, stContext.mVOChn);

AW_MPI_CLOCK_DestroyChn(stContext.mClockChn);

AW_MPI_VO_DisableVideoLayer(stContext.mVoLayer);

AW_MPI_VO_RemoveOutsideVideoLayer(stContext.mUILayer);

AW_MPI_VO_Disable(stContext.mVoDev);

destroySampleVOFrameManager(&stContext.mFrameManager);

AW_MPI_SYS_Exit();

fclose(stContext.mFpYuvFile);

_exit:

destroySampleVOContext(&stContext);

alogd("%s test result: %s", argv[0], result == 0 ? "success" : "fail");

return result;

}

sample_vo.h
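
The frame manager declared in this header keeps every frame buffer on one of two lists: `PrefetchFirstIdleFrame` peeks at the first idle frame, `UseFrame` moves a frame to the in-use list, and `ReleaseFrame` returns it by ID. The sketch below illustrates only that bookkeeping. It is a simplified stand-in, not the sample's implementation: all `Demo*` / `demo_*` names and `DEMO_POOL_SIZE` are hypothetical, a fixed array with a state flag replaces the `list_head` lists, and the `mLock` mutex is left out for brevity.

```c
#include <assert.h>
#include <stddef.h>

#define DEMO_POOL_SIZE 4 /* hypothetical number of frame buffers */

typedef enum { DEMO_FRAME_IDLE, DEMO_FRAME_USING } DemoFrameState;

typedef struct {
    unsigned int id;      /* stands in for the frame ID passed to ReleaseFrame */
    DemoFrameState state; /* replaces membership in the idle / using list */
} DemoFrame;

typedef struct {
    DemoFrame frames[DEMO_POOL_SIZE];
} DemoFramePool;

/* Put every frame in the idle state and number them 0..DEMO_POOL_SIZE-1. */
void demo_pool_init(DemoFramePool *pool)
{
    assert(pool != NULL);
    for (unsigned int i = 0; i < DEMO_POOL_SIZE; ++i) {
        pool->frames[i].id = i;
        pool->frames[i].state = DEMO_FRAME_IDLE;
    }
}

/* Return the first idle frame without changing its state, or NULL if none is idle. */
DemoFrame *demo_pool_prefetch_idle(DemoFramePool *pool)
{
    assert(pool != NULL);
    for (unsigned int i = 0; i < DEMO_POOL_SIZE; ++i) {
        if (pool->frames[i].state == DEMO_FRAME_IDLE)
            return &pool->frames[i];
    }
    return NULL;
}

/* Mark a prefetched frame as in use. Returns 0 on success, -1 if it was not idle. */
int demo_pool_use(DemoFrame *frame)
{
    if (frame == NULL || frame->state != DEMO_FRAME_IDLE)
        return -1;
    frame->state = DEMO_FRAME_USING;
    return 0;
}

/* Return an in-use frame to the idle state by ID. Returns 0 on success, -1 otherwise. */
int demo_pool_release(DemoFramePool *pool, unsigned int id)
{
    assert(pool != NULL);
    if (id >= DEMO_POOL_SIZE || pool->frames[id].state != DEMO_FRAME_USING)
        return -1;
    pool->frames[id].state = DEMO_FRAME_IDLE;
    return 0;
}
```

The read loop in sample_vo.c follows the same order: it prefetches an idle frame, fills it from the file, marks it as used before calling `AW_MPI_VO_SendFrame`, and releases it by ID if sending fails.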

#ifndef _SAMPLE_VO_H_

#define _SAMPLE_VO_H_

#include <stdio.h> /* FILE */

#include <pthread.h> /* pthread_mutex_t */

#include <plat_type.h>

#include <tsemaphore.h>

#include <mpi_clock.h>

#include <mm_comm_vo.h>

#define MAX_FILE_PATH_SIZE (256)

typedef struct SampleVOCmdLineParam

{

char mConfigFilePath[MAX_FILE_PATH_SIZE];

}SampleVOCmdLineParam;

typedef struct SampleVOConfig

{

int mPicWidth; // picture width

char mYuvFilePath[MAX_FILE_PATH_SIZE]; // path of the YUV file

int mPicHeight; // picture height

int mDisplayWidth; // display width

int mDisplayHeight; // display height

PIXEL_FORMAT_E mPicFormat; // pixel format of the stored picture, e.g. MM_PIXEL_FORMAT_YUV_PLANAR_420

int mFrameRate; // frame rate

VO_INTF_TYPE_E mDispType; // display interface type

VO_INTF_SYNC_E mDispSync; // display sync type

}SampleVOConfig;

typedef struct SampleVOFrameNode

{

VIDEO_FRAME_INFO_S mFrame;

struct list_head mList;

}SampleVOFrameNode;

typedef struct SampleVOFrameManager

{

struct list_head mIdleList; // idle frames, a list of SampleVOFrameNode

struct list_head mUsingList; // frames currently in use

int mNodeCnt; // number of frame nodes

pthread_mutex_t mLock; // mutex protecting concurrent access to the other members

VIDEO_FRAME_INFO_S* (*PrefetchFirstIdleFrame)(void *pThiz); // returns the first frame on the idle list

int (*UseFrame)(void *pThiz, VIDEO_FRAME_INFO_S *pFrame); // adds pFrame to the in-use list and returns the result code

int (*ReleaseFrame)(void *pThiz, unsigned int nFrameId); // releases the frame identified by nFrameId

}SampleVOFrameManager;

int initSampleVOFrameManager();

int destroySampleVOFrameManager();

typedef struct SampleVOContext

{

SampleVOCmdLineParam mCmdLinePara; // command-line parameters

SampleVOConfig mConfigPara; // video output configuration: file path, size, format, frame rate, etc.

FILE *mFpYuvFile; // YUV file

SampleVOFrameManager mFrameManager; // frame manager that owns the video frame buffers

pthread_mutex_t mWaitFrameLock; // protects the wait-for-frame operation

int mbWaitFrameFlag; // set while waiting for a frame

cdx_sem_t mSemFrameCome; // semaphore signalled when a frame arrives

int mUILayer; // UI layer

MPP_SYS_CONF_S mSysConf; // system configuration

VO_DEV mVoDev; // VO device

VO_LAYER mVoLayer; // VO layer

VO_VIDEO_LAYER_ATTR_S mLayerAttr; // VO video layer attributes