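Contents

[I. Server Environment and Initialization](#i-server-environment-and-initialization)

I. Server Environment and Initialization

1. Architecture

The cluster uses three OpenEuler24.03 hosts: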

| Cluster role | Hostname | Operating system | IP address |
|---|---|---|---|
| master | k8s-master | OpenEuler24.03 | 192.168.58.180 |
| node | k8s-node1 | OpenEuler24.03 | 192.168.58.181 |
| node | k8s-node2 | OpenEuler24.03 | 192.168.58.182 |

2. Initialization

Every node must be initialized.

2.1 Disable SELinux

setenforce 0

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

2.2 Disable swap

swapoff -a

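sed -i 's/.*swap.*/#&/' /etc/fstab

2.3 Set the hostname

Run the matching command on each host:

hostnamectl set-hostname k8s-master    # on 192.168.58.180

hostnamectl set-hostname k8s-node1     # on 192.168.58.181

hostnamectl set-hostname k8s-node2     # on 192.168.58.182

2.4 Write the hosts file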

cat <<EOF > /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.58.180 k8s-master

192.168.58.181 k8s-node1

192.168.58.182 k8s-node2

EOF

### Copy the file to the worker nodes

scp /etc/hosts 192.168.58.181:/etc

scp /etc/hosts 192.168.58.182:/etc

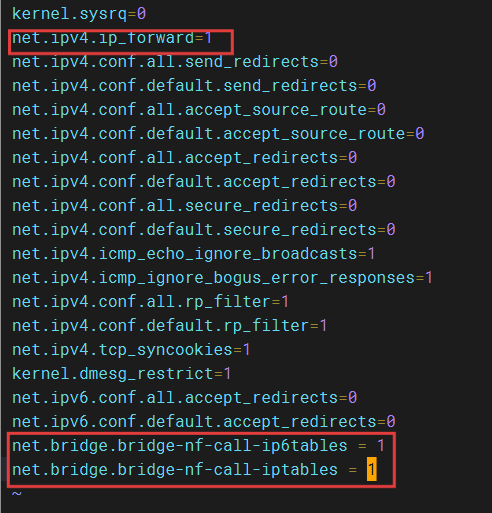
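After the file has been copied, each node should be able to resolve all three hostnames. The following is a minimal sketch of such a check. The function name `check_hosts` and its arguments are illustrative assumptions, not part of the original procedure.

```shell
#!/bin/sh
# check_hosts FILE NAME...: report every NAME that has no entry in FILE.
# Hypothetical helper; returns non-zero if at least one name is missing.
check_hosts() {
    file=$1
    shift
    missing=0
    for name in "$@"; do
        # Look for the name as a whole field after the address column,
        # skipping lines that are comments.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }' "$file"; then
            echo "missing: $name"
            missing=1
        fi
    done
    return "$missing"
}
```

On a node it could be called as `check_hosts /etc/hosts k8s-master k8s-node1 k8s-node2`.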

2.5 Set kernel parameters

Apply these settings after docker-ce has been installed and started.

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

modprobe br_netfilter

sysctl net.bridge.bridge-nf-call-ip6tables=1

sysctl net.bridge.bridge-nf-call-iptables=1

sysctl -p
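To confirm that the three parameters really ended up in the configuration file, a small text check along these lines may help. It is only a sketch: the function names are hypothetical, and it reads the file rather than the live kernel values.

```shell
#!/bin/sh
# sysctl_conf_value FILE KEY: print the last value assigned to KEY in FILE.
sysctl_conf_value() {
    awk -F= -v key="$2" '
        /^[[:space:]]*[#;]/ { next }    # skip comment lines
        {
            k = $1; v = $2
            gsub(/[[:space:]]/, "", k)  # tolerate "key = value"
            gsub(/[[:space:]]/, "", v)
            if (k == key) val = v
        }
        END { print val }' "$1"
}

# check_k8s_sysctl FILE: succeed only if all three keys are set to 1.
check_k8s_sysctl() {
    for key in net.ipv4.ip_forward \
               net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables; do
        [ "$(sysctl_conf_value "$1" "$key")" = "1" ] || {
            echo "not set to 1: $key"
            return 1
        }
    done
}
```

For example, `check_k8s_sysctl /etc/sysctl.conf` prints the first key that is missing or not set to 1.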

II. Install the Docker Environment

Install on every node.

1. Install Docker

1.1 Configure the Aliyun repository

The heredoc delimiter is quoted so that the shell writes $basearch literally and leaves it for yum to expand.

[root@k8s-master yum.repos.d]# cat <<'EOF' > /etc/yum.repos.d/docker-ce.repo

[docker-ce-stable]

name=Docker CE Stable - $basearch

baseurl=https://mirrors.aliyun.com/docker-ce/linux/centos/9/x86_64/stable/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/docker-ce/linux/centos/gpg

EOF

1.2 Install Docker

yum install -y docker-ce

1.3 Start Docker

systemctl enable --now docker

2. Install cri-dockerd

Download: https://github.com/Mirantis/cri-dockerd/releases

yum install -y libcgroup

yum install -y lrzsz

rz cri-dockerd-0.3.8-3.el8.x86_64.rpm

rpm -ivh cri-dockerd-0.3.8-3.el8.x86_64.rpm

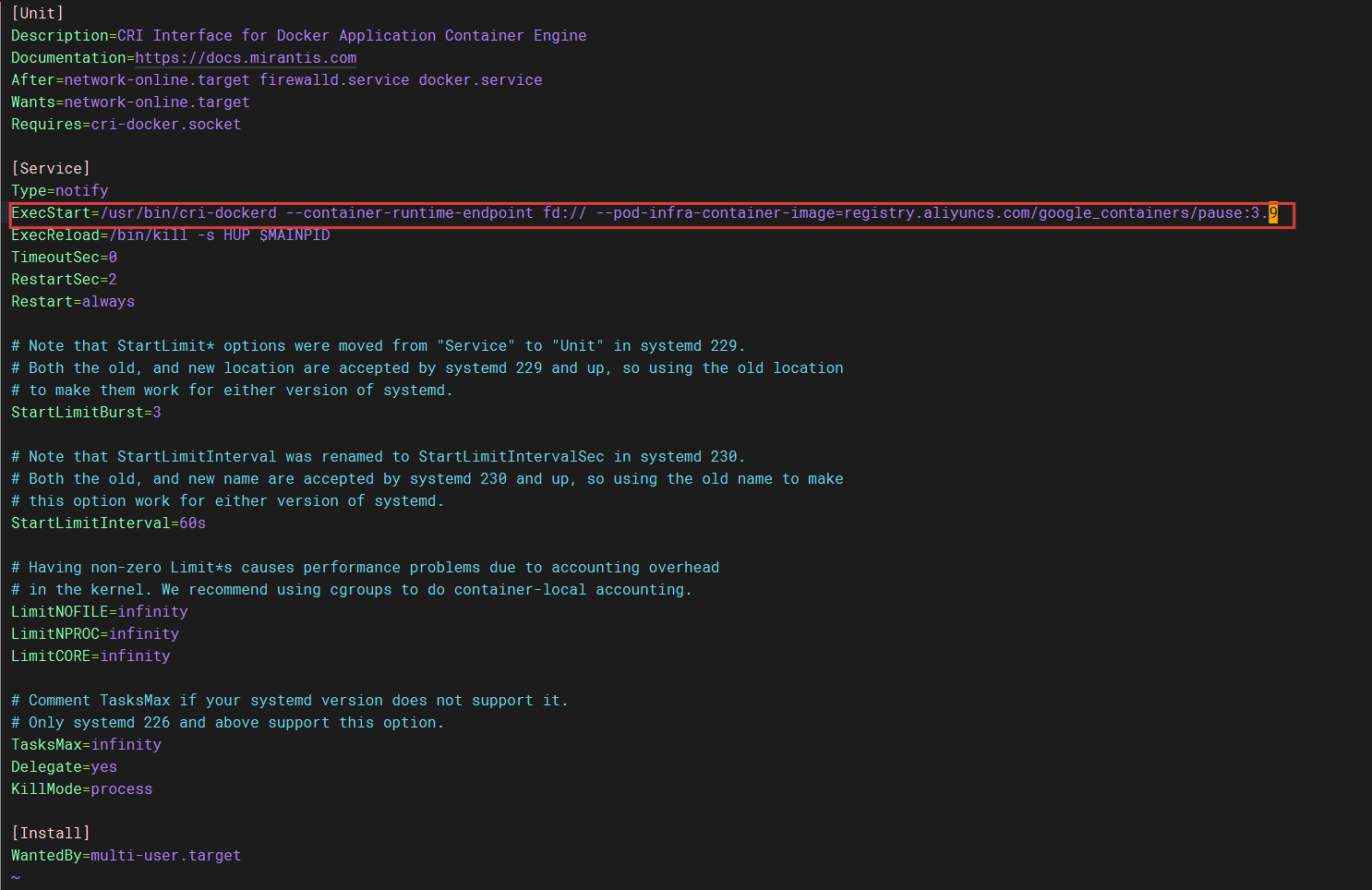

Edit the cri-docker systemd unit so that ExecStart reads as follows:

vim /usr/lib/systemd/system/cri-docker.service

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

Start cri-docker

systemctl daemon-reload

systemctl enable --now cri-docker
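On three nodes, the manual edit of the unit file can be scripted. The sketch below rewrites the ExecStart line with sed. The function name `set_execstart` is a hypothetical helper, and the flags are simply the ones shown in the step above.

```shell
#!/bin/sh
# set_execstart UNIT_FILE PAUSE_IMAGE: rewrite the ExecStart= line of a
# cri-docker unit file so that it passes --pod-infra-container-image.
# Hypothetical helper; other lines of the unit file are left unchanged.
set_execstart() {
    unit=$1
    image=$2
    new="ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=$image"
    # "|" is used as the sed delimiter because the replacement contains "/".
    sed "s|^ExecStart=.*|$new|" "$unit" > "$unit.tmp" && mv "$unit.tmp" "$unit"
}
```

A possible call is `set_execstart /usr/lib/systemd/system/cri-docker.service registry.aliyuncs.com/google_containers/pause:3.9`, followed by `systemctl daemon-reload`.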

III. Install kubeadm and kubectl

Install on every node.

1. Configure the yum repository

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/

enabled=1

gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.28/rpm/repodata/repomd.xml.key

EOF
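The Kubernetes minor version appears twice in the repository file, once in baseurl and once in gpgkey, and the two must stay in step. The sketch below writes the same file from a single version argument. `write_k8s_repo` is a hypothetical helper, and it assumes the mirror uses the same path layout for other minor versions.

```shell
#!/bin/sh
# write_k8s_repo VERSION FILE: write a kubernetes.repo for one minor release,
# for example VERSION=v1.28. Hypothetical helper modeled on the file above.
write_k8s_repo() {
    base="https://mirrors.aliyun.com/kubernetes-new/core/stable/$1/rpm"
    cat > "$2" <<EOF
[kubernetes]
name=Kubernetes
baseurl=$base/
enabled=1
gpgcheck=1
gpgkey=$base/repodata/repomd.xml.key
EOF
}
```

Calling `write_k8s_repo v1.28 /etc/yum.repos.d/kubernetes.repo` reproduces the repository definition used in this guide.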

2. Install the packages

yum install -y kubelet kubeadm kubectl

3. Enable kubelet to start at boot

systemctl enable kubelet && systemctl start kubelet

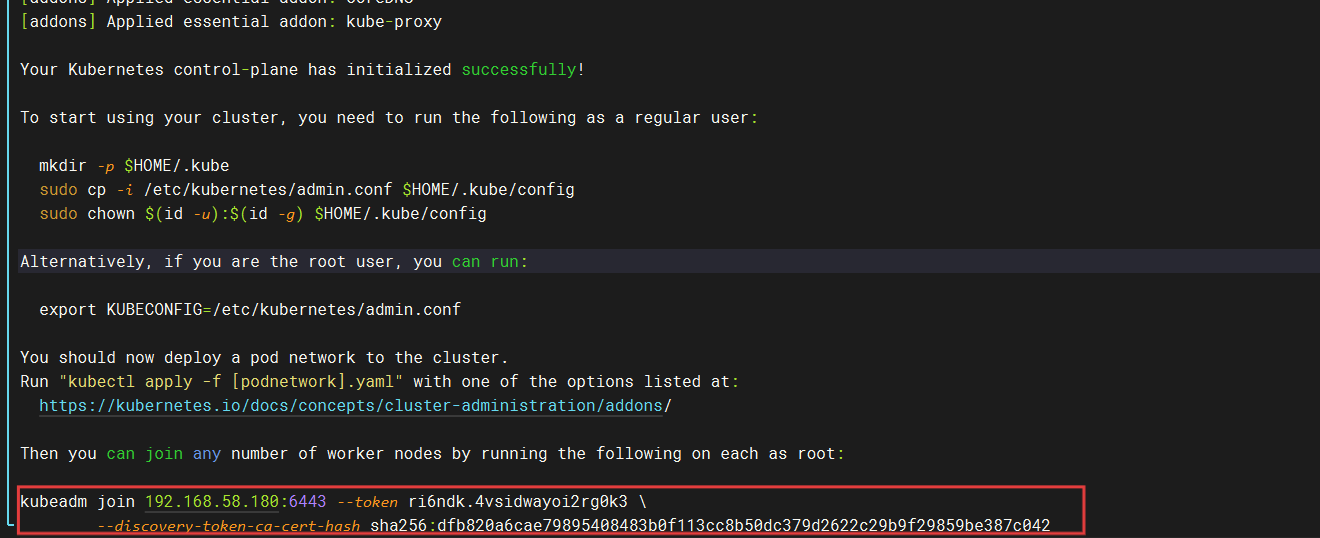

IV. Deploy the Master Node

Run the following command on the k8s-master node:

kubeadm init --apiserver-advertise-address=192.168.58.180 --image-repository=registry.aliyuncs.com/google_containers --kubernetes-version=v1.28.15 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --cri-socket=unix:///var/run/cri-dockerd.sock

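Save the join command that kubeadm init prints.

The token and CA certificate hash are different for every cluster, so use the values from your own output.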

kubeadm join 192.168.58.180:6443 --token ri6ndk.4vsidwayoi2rg0k3 \

--discovery-token-ca-cert-hash sha256:dfb820a6cae79895408483b0f113cc8b50dc379d2622c29b9f29859be387c042
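A join command copied by hand is easy to truncate. As a rough sanity check, the shape of the two values can be tested before they are used on a worker node. The helper name `valid_join_args` is an assumption made for illustration; it checks format only, not whether the token is still valid.

```shell
#!/bin/sh
# valid_join_args TOKEN HASH: succeed if both values have the expected shape.
# Token: 6 characters, a dot, 16 characters, all from [a-z0-9].
# Hash:  "sha256:" followed by 64 lowercase hex digits.
valid_join_args() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || return 1
    printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$' || return 1
}
```

If the token has expired, running `kubeadm token create --print-join-command` on the master prints a fresh join command.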

Set up the kubeconfig file used to manage the cluster

mkdir -p $HOME/.kube

cd /root/.kube

cp /etc/kubernetes/admin.conf ./config

### Check the cluster status

[root@k8s-master .kube]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 2m27s v1.28.15

V. Deploy the Worker Nodes

Run the following on both k8s-node1 and k8s-node2, using the token and hash printed on the master node:

kubeadm join 192.168.58.180:6443 --token ri6ndk.4vsidwayoi2rg0k3 \
    --discovery-token-ca-cert-hash sha256:dfb820a6cae79895408483b0f113cc8b50dc379d2622c29b9f29859be387c042 \
    --cri-socket=unix:///var/run/cri-dockerd.sock

Check the cluster status:

[root@k8s-master .kube]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 5m42s v1.28.15

k8s-node1 NotReady <none> 36s v1.28.15

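k8s-node2 NotReady <none> 28s v1.28.15

The nodes report NotReady because no network plugin has been installed yet. The ROLES column shows "<none>" for the worker nodes; the following commands set the role labels: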

kubectl label node k8s-master node-role.kubernetes.io/master=master

kubectl label node k8s-node1 node-role.kubernetes.io/worker=worker

kubectl label node k8s-node2 node-role.kubernetes.io/worker=worker
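Node readiness can also be checked from a script instead of by eye. The sketch below filters the output of kubectl get nodes. The function name `not_ready_nodes` is hypothetical, and the filter treats any STATUS other than exactly "Ready" (for example "Ready,SchedulingDisabled") as not ready.

```shell
#!/bin/sh
# not_ready_nodes: read "kubectl get nodes" output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready".
# The first line is assumed to be the column header and is skipped.
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Typical use would be `kubectl get nodes | not_ready_nodes`, which prints nothing once every node is Ready.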

-----------------------------------------------------------------------------------

[root@k8s-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 3h2m v1.28.15

k8s-node1 NotReady worker 176m v1.28.15

k8s-node2 NotReady worker 176m v1.28.15

VI. Deploy the Network Plugin

On all three nodes, load the Calico images:

rz calico.tar

docker load -i calico.tar

On the master node:

rz tigera-operator.yaml custom-resources.yaml

-----------------------------------------------

vim custom-resources.yaml

apiVersion: operator.tigera.io/v1
kind: Installation
metadata:
  name: default
spec:
  calicoNetwork:
    ipPools:
    - blockSize: 26
      cidr: 10.244.0.0/16  # change this to match the Pod network CIDR passed to "kubeadm init"
      encapsulation: VXLANCrossSubnet
      natOutgoing: Enabled
      nodeSelector: all()

...
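Because the pool CIDR has to agree with the value given to kubeadm init, it can be worth checking the file before it is applied. The sketch below is a plain text scan rather than a YAML parser, and the function names are hypothetical.

```shell
#!/bin/sh
# calico_cidrs FILE: print every "cidr:" value found in FILE, dropping any
# trailing comment. A text scan, not a YAML parser.
calico_cidrs() {
    sed -n 's/^[[:space:]-]*cidr:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1"
}

# cidr_matches FILE CIDR: succeed if FILE declares exactly one pool CIDR
# and it equals CIDR.
cidr_matches() {
    [ "$(calico_cidrs "$1")" = "$2" ]
}
```

With the values used in this guide, `cidr_matches custom-resources.yaml 10.244.0.0/16` should succeed.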

kubectl create -f tigera-operator.yaml

kubectl create -f custom-resources.yaml

Check the result

[root@k8s-master ~]# kubectl get namespaces

NAME STATUS AGE

default Active 141m

kube-node-lease Active 141m

kube-public Active 141m

kube-system Active 141m

tigera-operator Active 75s

[root@k8s-master ~]# kubectl -n kube-system get pod

NAME READY STATUS RESTARTS AGE

coredns-66f779496c-bgk4h 1/1 Running 0 146m

coredns-66f779496c-dtpvj 1/1 Running 0 146m

etcd-k8s-master 1/1 Running 0 146m

kube-apiserver-k8s-master 1/1 Running 0 146m

kube-controller-manager-k8s-master 1/1 Running 0 146m

kube-proxy-252qp 1/1 Running 0 141m

kube-proxy-rgv26 1/1 Running 0 141m

kube-proxy-vghtk 1/1 Running 0 146m

kube-scheduler-k8s-master 1/1 Running 0 146m

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

calico-apiserver Active 11m

calico-system Active 11m

default Active 154m

kube-node-lease Active 154m

kube-public Active 154m

kube-system Active 154m

tigera-operator Active 14m

[root@k8s-master ~]# kubectl -n calico-system describe pod | grep "Image:" | sort | uniq

Image: docker.io/calico/cni:v3.29.2

Image: docker.io/calico/csi:v3.29.2

Image: docker.io/calico/kube-controllers:v3.29.2

Image: docker.io/calico/node-driver-registrar:v3.29.2

Image: docker.io/calico/node:v3.29.2

Image: docker.io/calico/pod2daemon-flexvol:v3.29.2

Image: docker.io/calico/typha:v3.29.2

[root@k8s-master ~]# kubectl -n calico-apiserver describe pod calico-apiserver | grep -i 'image:'

Image: docker.io/calico/apiserver:v3.29.2

Image: docker.io/calico/apiserver:v3.29.2

VII. Deploy an LNMP Forum in a Single Pod

Build the image that hosts the forum

1. Directory contents

[root@k8s-master lnmp1]# ls

default.conf Dockerfile init.sql my.cnf start.sh upload www.conf

2. Main file (Dockerfile)

vim Dockerfile

FROM alpine:latest

# Install all components
RUN apk update && apk add \
        nginx \
        mariadb \
        php82 \
        php82-fpm \
        php82-mysqli \
        php82-xml \
        php82-json \
        php82-pdo \
        php82-pdo_mysql \
        php82-tokenizer \
        mariadb-client && \
    rm -f /etc/nginx/http.d/default.conf && \
    mkdir -p /run/nginx /run/php /run/mysql /var/lib/mysql /run/mysqld && \
    chown -R nginx:nginx /run/nginx /run/php && \
    chown -R mysql:mysql /var/lib/mysql /run/mysqld && \
    chmod 755 /run/nginx

# Copy the site files and configuration files
COPY upload /usr/share/nginx/html/
COPY www.conf /etc/php82/php-fpm.d/www.conf
COPY default.conf /etc/nginx/http.d/
COPY my.cnf /etc/mysql/conf.d/
COPY init.sql /docker-entrypoint-initdb.d/

RUN chown -R 101:101 /usr/share/nginx/html/

# Initialize the data directory and load init.sql
RUN mysql_install_db --user=mysql --datadir=/var/lib/mysql && \
    mariadbd --user=mysql --bootstrap --skip-grant-tables=0 < /docker-entrypoint-initdb.d/init.sql

# Startup script
COPY start.sh /start.sh
RUN chmod +x /start.sh

EXPOSE 80

CMD ["/start.sh"]

3. PHP service

vim www.conf

[www]

user = nginx

group = nginx

listen = 0.0.0.0:9000

pm = dynamic

pm.max_children = 5

pm.start_servers = 2

pm.min_spare_servers = 1

pm.max_spare_servers = 3

4. nginx service

vim default.conf

server {
    listen 80;
    listen [::]:80;
    server_name localhost;
    root /usr/share/nginx/html;
    index index.php index.html index.htm;

    location ~ \.php$ {
        fastcgi_pass 127.0.0.1:9000; # address of your PHP server
        fastcgi_index index.php;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        include fastcgi_params;
    }
}

5. MySQL service

vim my.cnf

[mysqld]

character-set-server=utf8mb4

collation-server=utf8mb4_unicode_ci

default-authentication-plugin=mysql_native_password

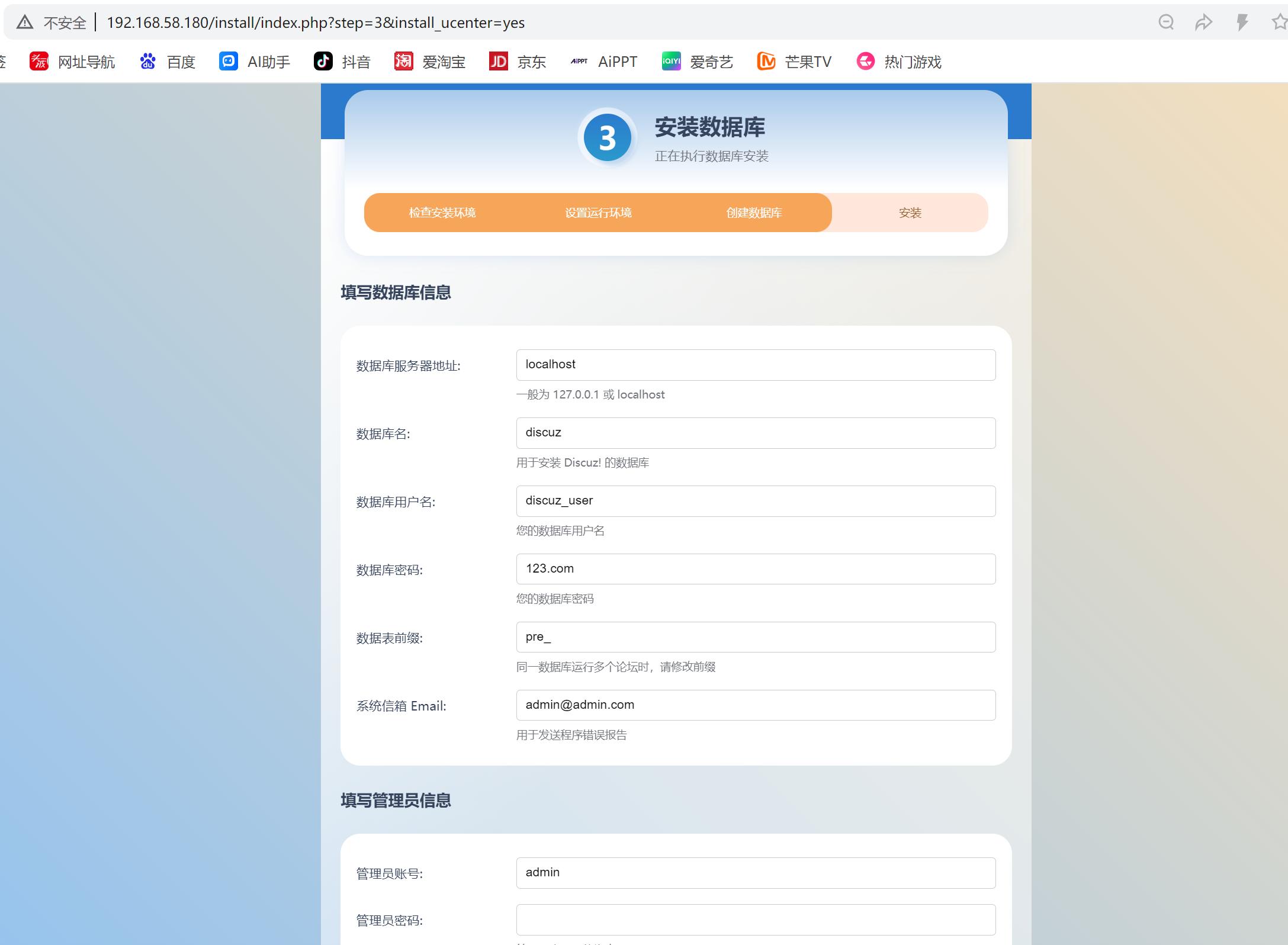

max_connections=200vim init.sql

-- Create the additional database

CREATE DATABASE discuz;

-- Create the additional user and grant privileges

CREATE USER 'discuz_user'@'localhost' IDENTIFIED BY '123.com';

GRANT ALL PRIVILEGES ON discuz.* TO 'discuz_user'@'localhost';

FLUSH PRIVILEGES;

6. Service startup script

vim start.sh

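#!/bin/sh

# Start the MySQL service in the background

mysqld --user=mysql &

# Start the PHP-FPM service in the background

php-fpm82 &

# Start nginx in the foreground so the container keeps running

nginx -g 'daemon off;'

7. Discuz directory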

unzip Discuz_X3.5_SC_UTF8_20240520.zip

cp -R upload/ /root/lnmp1/discuz

8. Build the single-container LNMP image

docker build -t alpine:lnmp .

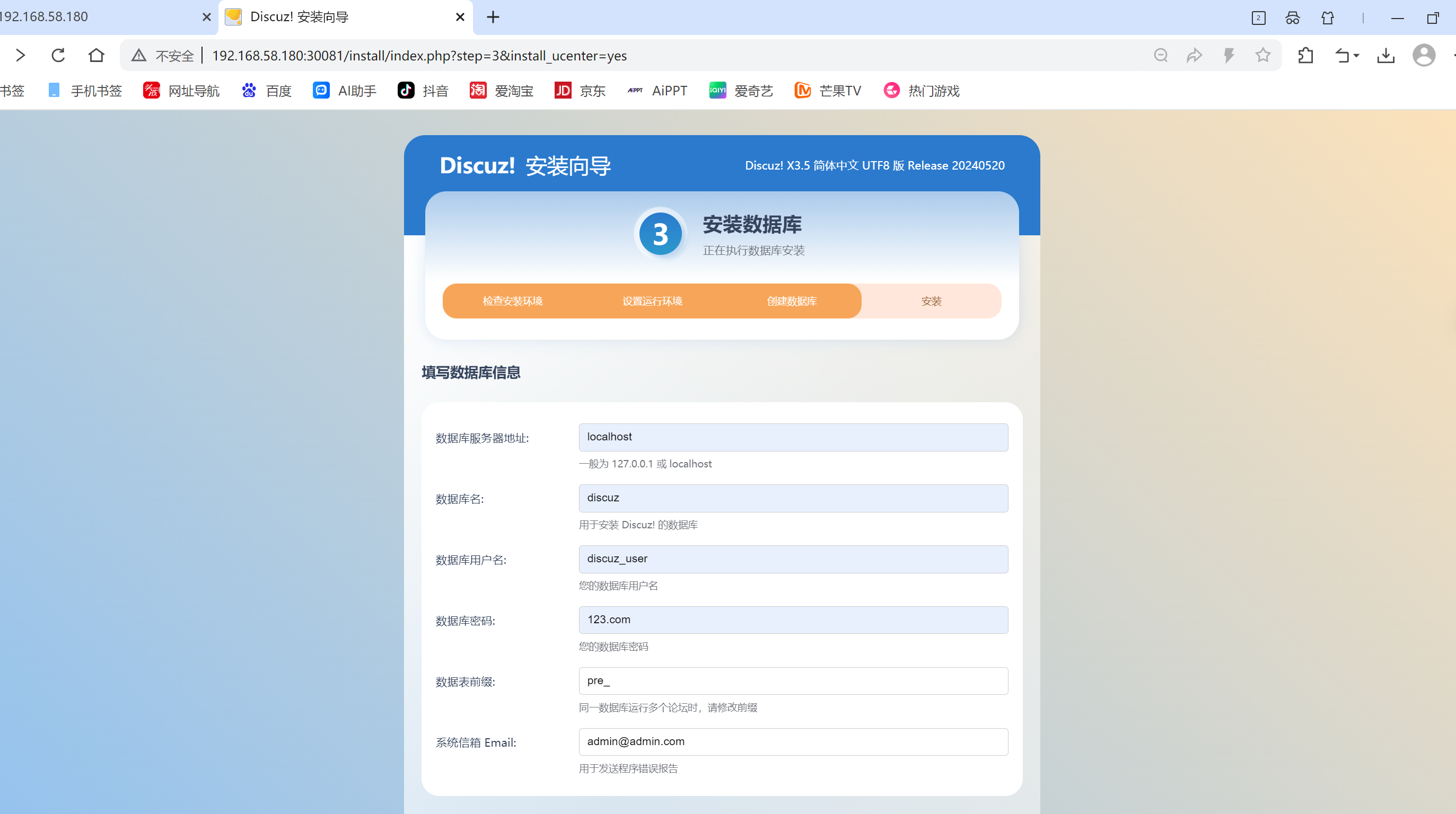

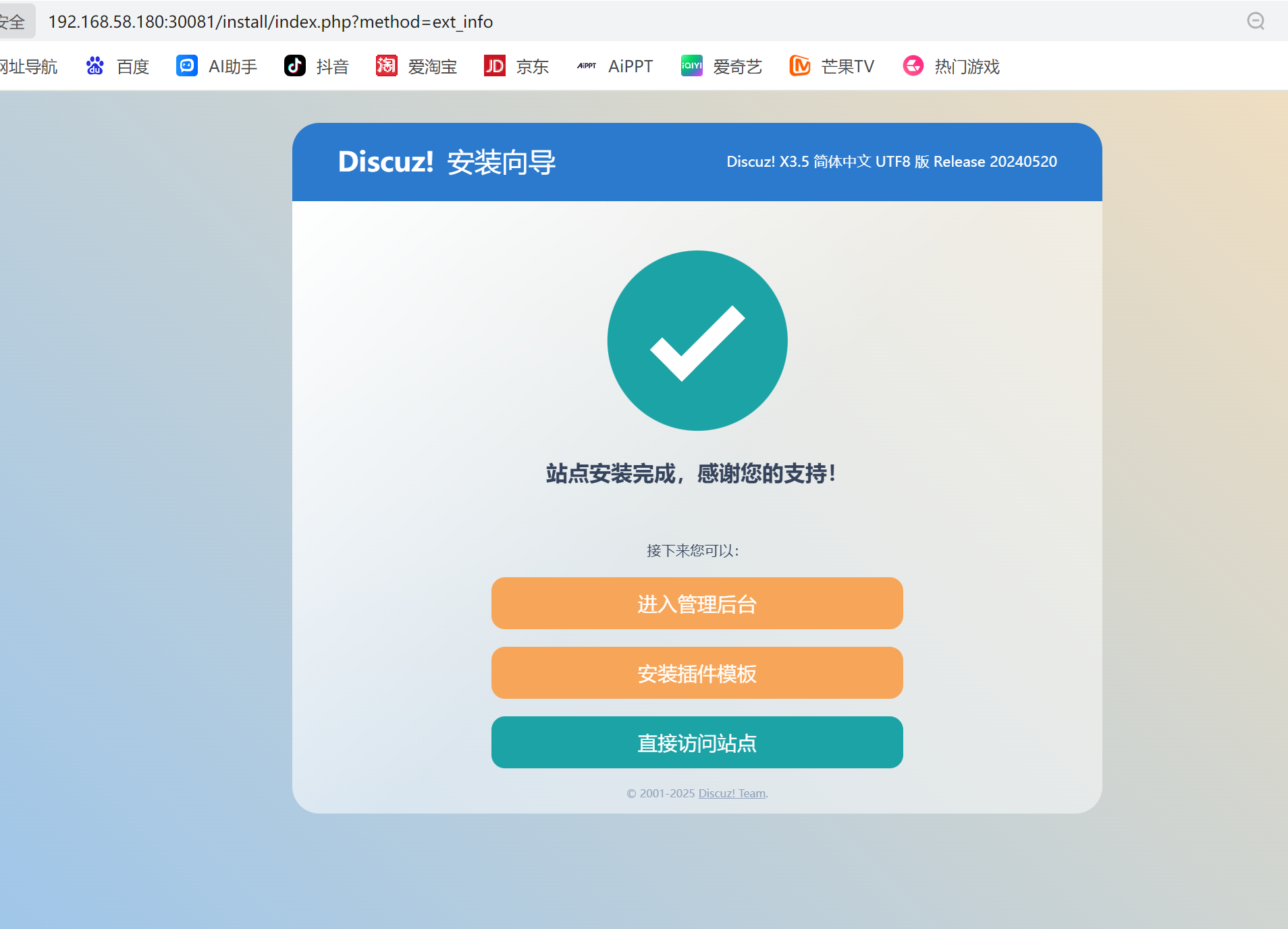

docker run -itd -p 80:80 --name lnmp alpine:lnmp

9. Install the site

Open the host's IP address in a browser.

10. Save the new image and send it to the worker nodes

docker save alpine:lnmp -o lnmp-images.tar

scp lnmp-images.tar k8s-node1:/root

scp lnmp-images.tar k8s-node2:/root

On both worker nodes:

docker load -i lnmp-images.tar

Deploy the Pod with a Deployment

vim lnmp.yaml

## Deployment section
apiVersion: apps/v1  # Kubernetes API version
kind: Deployment  # resource type: Deployment
metadata:
  labels:
    app: lnmp  # label the Deployment with app=lnmp
  name: lnmp-deployment  # Deployment name
  namespace: default  # deploy into the default namespace
spec:
  replicas: 1  # run a single Pod replica
  selector:
    matchLabels:
      app: lnmp  # select Pods labeled app=lnmp
  template:  # Pod template
    metadata:
      labels:
        app: lnmp  # label the Pod with app=lnmp
    spec:
      containers:
      - name: nginx  # container name
        image: alpine:lnmp  # image to run
        imagePullPolicy: IfNotPresent  # do not pull if the image exists locally
        ports:
        - containerPort: 80  # port exposed by the container
        livenessProbe:  # liveness probe
          httpGet:  # probe with an HTTP GET request
            path: /index.html  # path to request
            port: 80  # port to request
          initialDelaySeconds: 5  # start probing 5 seconds after the container starts
          periodSeconds: 5  # probe every 5 seconds
---
# Service section
apiVersion: v1  # Kubernetes API version
kind: Service  # resource type: Service
metadata:
  labels:
    svc: lnmp-nodeport  # label the Service with svc=lnmp-nodeport
  name: lnmp-nodeport  # Service name
spec:
  type: NodePort  # NodePort exposes the Service on a port of every cluster node
  ports:
  - port: 80  # Service port
    targetPort: 80  # port on the target Pod
    nodePort: 30081  # port exposed on the nodes, must be within 30000-32767
  selector:
    app: lnmp  # select Pods labeled app=lnmp

Create the Pod

kubectl apply -f lnmp.yaml

Check the result

[root@k8s-master lnmp1]# kubectl get po

NAME READY STATUS RESTARTS AGE

lnmp-deployment-6fb5d4fb8f-x9tf2 1/1 Running 0 12s

[root@k8s-master lnmp1]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 17h

lnmp-nodeport NodePort 10.105.180.137 <none> 80:30088/TCP 18s

[root@k8s-master lnmp1]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

lnmp-deployment 1/1 1 1 23s

Install the site