Contents

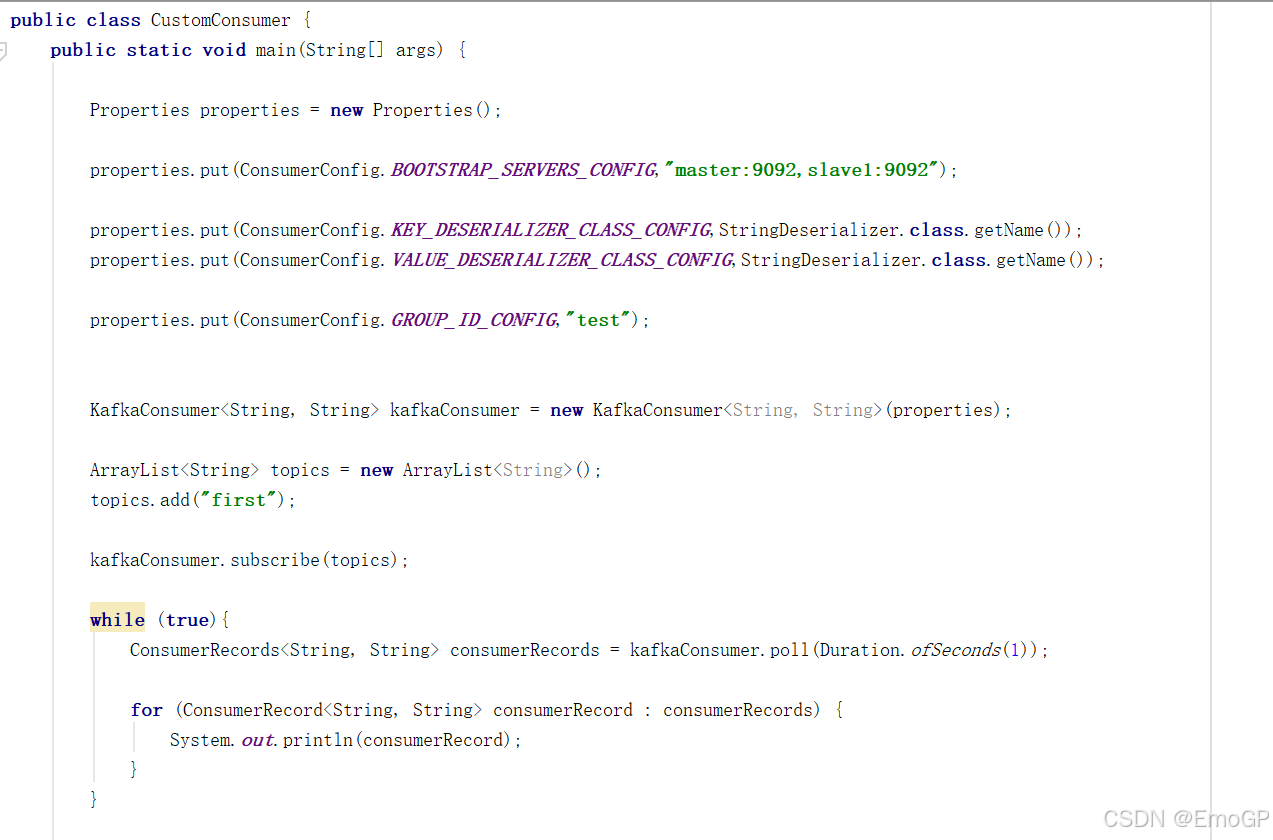

Standalone consumer example (subscribing to a topic)

c

package com.tsg.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.apache.kafka.clients.consumer.ConsumerRecords;

import org.apache.kafka.clients.consumer.KafkaConsumer;

import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;

import java.util.ArrayList;

import java.util.Properties;

public class CustomConsumer {

public static void main(String[] args) {

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"master:9092,slave1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"test");

KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<String, String>(properties);

ArrayList<String> topics = new ArrayList<String>();

topics.add("first");

kafkaConsumer.subscribe(topics);

while (true){

ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));

for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {

System.out.println(consumerRecord);

}

}

}

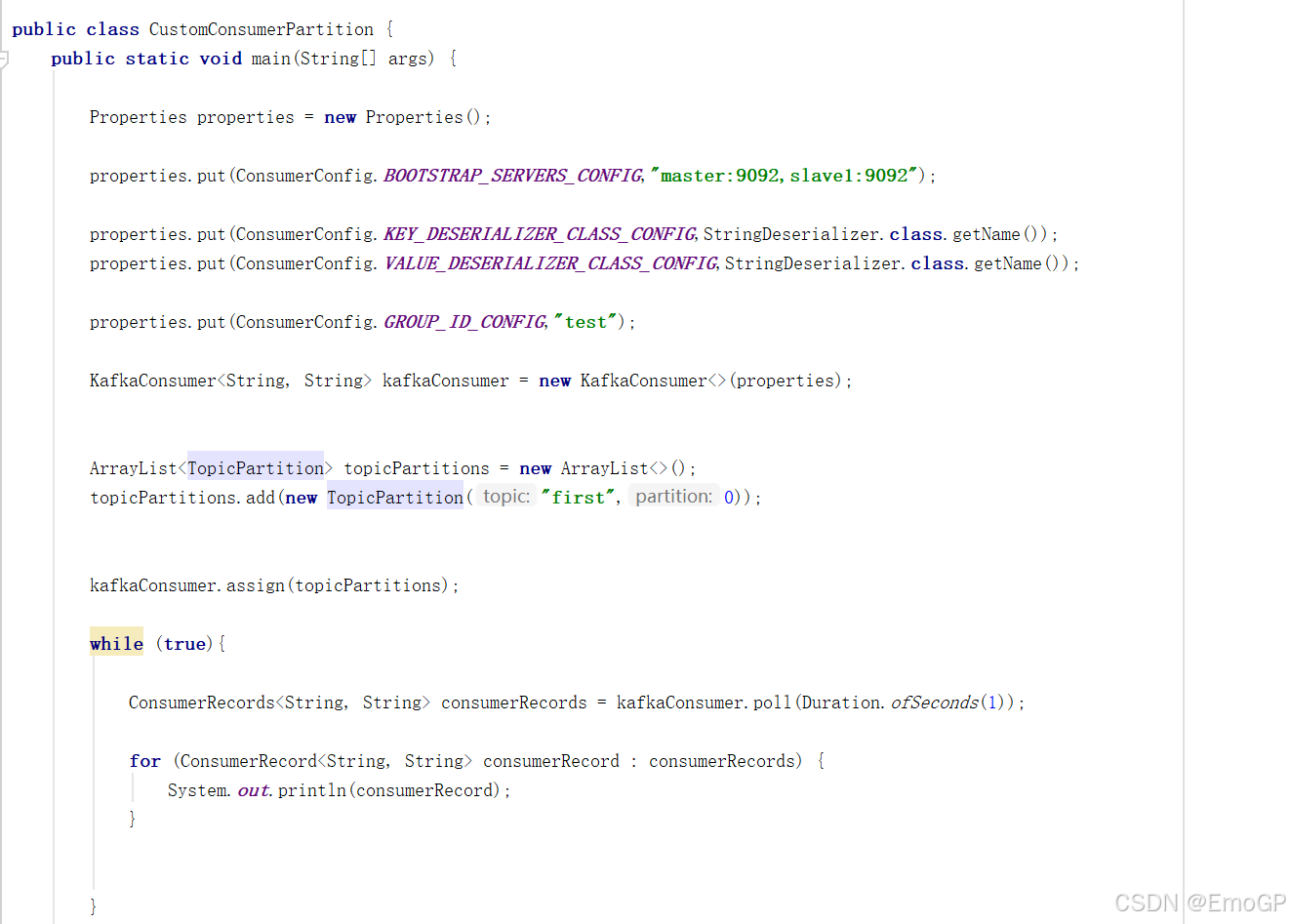

}独立消费者案例(订阅分区)

```java
package com.tsg.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.TopicPartition;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Properties;

public class CustomConsumerPartition {

    public static void main(String[] args) {
        // Consumer configuration: brokers, deserializers, and group ID
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test");

        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<>(properties);

        // Assign partition 0 of the topic "first" to this consumer directly
        ArrayList<TopicPartition> topicPartitions = new ArrayList<>();
        topicPartitions.add(new TopicPartition("first", 0));
        kafkaConsumer.assign(topicPartitions);

        // Poll for records and print each one
        while (true) {
            ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord);
            }
        }
    }
}
```

Consumer group example

Test that the data in each partition of a topic can be consumed by only one consumer within a consumer group.

Consumer 1

```java
package com.tsg.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Properties;

public class CustomConsumer {

    public static void main(String[] args) {
        // Consumer configuration: brokers, deserializers, and the shared group ID
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test");

        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<String, String>(properties);

        // Subscribe to the topic "four"
        ArrayList<String> topics = new ArrayList<String>();
        topics.add("four");
        kafkaConsumer.subscribe(topics);

        // Poll for records and print each one
        while (true) {
            ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord);
            }
        }
    }
}
```

Consumer 2

```java
package com.tsg.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Properties;

public class CustomConsumer1 {

    public static void main(String[] args) {
        // Consumer configuration: brokers, deserializers, and the shared group ID
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test");

        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<String, String>(properties);

        // Subscribe to the topic "four"
        ArrayList<String> topics = new ArrayList<String>();
        topics.add("four");
        kafkaConsumer.subscribe(topics);

        // Poll for records and print each one
        while (true) {
            ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord);
            }
        }
    }
}
```

Consumer 3

```java
package com.tsg.kafka.consumer;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;
import org.apache.kafka.common.serialization.StringDeserializer;

import java.time.Duration;
import java.util.ArrayList;
import java.util.Properties;

public class CustomConsumer2 {

    public static void main(String[] args) {
        // Consumer configuration: brokers, deserializers, and the shared group ID
        Properties properties = new Properties();
        properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");
        properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());
        properties.put(ConsumerConfig.GROUP_ID_CONFIG, "test");

        KafkaConsumer<String, String> kafkaConsumer = new KafkaConsumer<String, String>(properties);

        // Subscribe to the topic "four"
        ArrayList<String> topics = new ArrayList<String>();
        topics.add("four");
        kafkaConsumer.subscribe(topics);

        // Poll for records and print each one
        while (true) {
            ConsumerRecords<String, String> consumerRecords = kafkaConsumer.poll(Duration.ofSeconds(1));
            for (ConsumerRecord<String, String> consumerRecord : consumerRecords) {
                System.out.println(consumerRecord);
            }
        }
    }
}
```

All three consumers use the same group ID, so they form a consumer group, and each consumer consumes the data of one partition.
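To make the one-partition-per-consumer outcome concrete, here is a minimal sketch of a range-style split of one topic's partitions across the members of a group. It is a simplified model, not Kafka's own assignor code: the class `RangeAssignmentSketch`, the method `assign`, and the member names `consumer-1` to `consumer-4` are hypothetical, and the topic `four` is assumed to have three partitions. In a real cluster the result depends on the configured `partition.assignment.strategy` and on the member IDs generated at runtime.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class RangeAssignmentSketch {

    // Hypothetical helper: splits partitions 0..numPartitions-1 of one topic
    // into contiguous ranges, one range per consumer (sorted by member name).
    public static Map<String, List<Integer>> assign(int numPartitions, List<String> consumers) {
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        if (consumers.isEmpty()) {
            return assignment; // nobody to assign partitions to
        }

        List<String> sorted = new ArrayList<>(consumers);
        Collections.sort(sorted);

        int perConsumer = numPartitions / sorted.size();
        int extra = numPartitions % sorted.size();

        int next = 0;
        for (int i = 0; i < sorted.size(); i++) {
            // The first `extra` consumers each take one additional partition.
            int count = perConsumer + (i < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                owned.add(next++);
            }
            assignment.put(sorted.get(i), owned);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Three consumers in one group, three partitions: one partition each.
        System.out.println(assign(3, Arrays.asList("consumer-1", "consumer-2", "consumer-3")));
        // A fourth consumer in the same group has no partition left to own.
        System.out.println(assign(3, Arrays.asList("consumer-1", "consumer-2", "consumer-3", "consumer-4")));
    }
}
```

In this model every partition appears in exactly one consumer's list, which matches the behavior the test above is meant to show. With four consumers and three partitions, the last consumer ends up with an empty list and stays idle.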

Producer sending data

```java
package com.tsg.kafka.producer;

import org.apache.kafka.clients.producer.*;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

public class CustomProducerCallback {

    public static void main(String[] args) {
        Properties properties = new Properties();

        // Connect to the cluster
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");

        // Specify the serializers for the key and the value
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // Set acks
        properties.put(ProducerConfig.ACKS_CONFIG, "all");

        // Number of retries; the default is the int maximum, 2^31 - 1
        properties.put(ProducerConfig.RETRIES_CONFIG, 3);

        // Custom partitioner class (not shown in this section)
        properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "com.tsg.kafka.producer.MyPartitioner");

        // Create the Kafka producer
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<String, String>(properties);

        // Send five records to partition 2 of the topic "four"
        for (int i = 0; i < 5; i++) {
            kafkaProducer.send(new ProducerRecord<String, String>("four", 2, "", "partition 2"), new Callback() {
                public void onCompletion(RecordMetadata metadata, Exception exception) {
                    if (exception == null) {
                        System.out.println("topic: " + metadata.topic() + " partition: " + metadata.partition());
                    }
                }
            });
        }

        // Release resources
        kafkaProducer.close();
    }
}
```

```java
package com.tsg.kafka.producer;

import org.apache.kafka.clients.producer.*;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

public class CustomProducerCallback {

    public static void main(String[] args) {
        Properties properties = new Properties();

        // Connect to the cluster
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");

        // Specify the serializers for the key and the value
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // Set acks
        properties.put(ProducerConfig.ACKS_CONFIG, "all");

        // Number of retries; the default is the int maximum, 2^31 - 1
        properties.put(ProducerConfig.RETRIES_CONFIG, 3);

        // Custom partitioner class (not shown in this section)
        properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "com.tsg.kafka.producer.MyPartitioner");

        // Create the Kafka producer
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<String, String>(properties);

        // Send five records to partition 1 of the topic "four"
        for (int i = 0; i < 5; i++) {
            kafkaProducer.send(new ProducerRecord<String, String>("four", 1, "", "partition 1"), new Callback() {
                public void onCompletion(RecordMetadata metadata, Exception exception) {
                    if (exception == null) {
                        System.out.println("topic: " + metadata.topic() + " partition: " + metadata.partition());
                    }
                }
            });
        }

        // Release resources
        kafkaProducer.close();
    }
}
```

```java
package com.tsg.kafka.producer;

import org.apache.kafka.clients.producer.*;
import org.apache.kafka.common.serialization.StringSerializer;

import java.util.Properties;

public class CustomProducerCallback {

    public static void main(String[] args) {
        Properties properties = new Properties();

        // Connect to the cluster
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "master:9092,slave1:9092");

        // Specify the serializers for the key and the value
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

        // Set acks
        properties.put(ProducerConfig.ACKS_CONFIG, "all");

        // Number of retries; the default is the int maximum, 2^31 - 1
        properties.put(ProducerConfig.RETRIES_CONFIG, 3);

        // Custom partitioner class (not shown in this section)
        properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG, "com.tsg.kafka.producer.MyPartitioner");

        // Create the Kafka producer
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<String, String>(properties);

        // Send five records to partition 0 of the topic "four"
        for (int i = 0; i < 5; i++) {
            kafkaProducer.send(new ProducerRecord<String, String>("four", 0, "", "partition 0"), new Callback() {
                public void onCompletion(RecordMetadata metadata, Exception exception) {
                    if (exception == null) {
                        System.out.println("topic: " + metadata.topic() + " partition: " + metadata.partition());
                    }
                }
            });
        }

        // Release resources
        kafkaProducer.close();
    }
}
```