[GPT Primer] Lesson 66: Calling remote LLM and embedding models from LlamaIndex

- 1. Calling privately hosted models
  - 1.1 OpenAILike
  - 1.2 OpenAILikeEmbedding
- 2. Calling models on public platforms
  - 2.1 Calling GLM


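1. Calling privately hosted models

1.1 OpenAILike

OpenAILike is a thin wrapper around the OpenAI model class that makes it compatible with third-party tools exposing an OpenAI-compatible API.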
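To make "OpenAI-compatible API" concrete, here is a minimal sketch of the HTTP request such a server expects for a chat completion. It only builds the URL, headers, and JSON body and sends nothing over the network. The helper name `build_chat_request` is hypothetical, and the sketch does not claim to reproduce what OpenAILike does internally; it only follows the publicly documented OpenAI chat completions request shape.

```python
import json


def build_chat_request(api_base: str, api_key: str, model: str, prompt: str):
    """Assemble URL, headers, and JSON body for an OpenAI-style chat completion call.

    Hypothetical helper for illustration; nothing is sent over the network.
    """
    # Strip any trailing slash so the joined URL has exactly one separator.
    url = api_base.rstrip("/") + "/chat/completions"
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = json.dumps(
        {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
        }
    )
    return url, headers, body


url, headers, body = build_chat_request(
    api_base="https://hostname.com/v1",  # placeholder host
    api_key="fake",                      # placeholder key
    model="my model",
    prompt="Hello World!",
)
print(url)
print(body)
```

Any server that accepts this request shape at `<api_base>/chat/completions` can, in principle, be used as the `api_base` of an OpenAI-compatible client.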

Official documentation:

https://docs.llamaindex.org.cn/en/stable/api_reference/llms/openai_like/

Install the integration package:

    pip install llama-index-llms-openai-like

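    from llama_index.llms.openai_like import OpenAILike

    llm = OpenAILike(
        model="my model",  # model name as registered on the server
        api_base="https://hostname.com/v1",  # base URL of the OpenAI-compatible endpoint
        api_key="fake",  # placeholder; use a real key if the server checks it
        context_window=128000,  # maximum context length, in tokens
        is_chat_model=True,  # use the chat completions endpoint
        is_function_calling_model=False,  # the model does not support function calling
    )

    response = llm.complete("Hello World!")
    print(str(response))

1.2 OpenAILikeEmbedding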

Official documentation:

https://docs.llamaindex.org.cn/en/stable/api_reference/embeddings/openai_like/

Install the integration package:

    pip install llama-index-embeddings-openai-like
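An OpenAI-compatible embeddings endpoint accepts a list of inputs per request, so clients usually send texts in batches. The sketch below illustrates what a batch size such as `embed_batch_size=10` implies: 25 texts become three request bodies. The helpers `batched` and `build_embedding_body` are hypothetical names used only for this illustration, and this is an assumption about the general idea, not a reproduction of the library's internals.

```python
import json
from typing import Iterator, List


def batched(texts: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `texts`, each holding at most `batch_size` items."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(texts), batch_size):
        yield texts[start:start + batch_size]


def build_embedding_body(model_name: str, batch: List[str]) -> str:
    """Build the JSON body for one OpenAI-style POST <api_base>/embeddings request."""
    return json.dumps({"model": model_name, "input": batch})


texts = [f"document {i}" for i in range(25)]
batches = list(batched(texts, batch_size=10))
bodies = [build_embedding_body("my-model-name", batch) for batch in batches]

print([len(batch) for batch in batches])  # prints [10, 10, 5]
```

A larger batch size means fewer round trips, but each request is bigger; servers may cap the number of inputs per request, so the right value depends on the backend.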

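    from llama_index.embeddings.openai_like import OpenAILikeEmbedding

    embedding = OpenAILikeEmbedding(
        model_name="my-model-name",  # embedding model name as registered on the server
        # The host part of this URL is missing in the source; put your server's hostname before the port.
        api_base="https://:1234/v1",
        api_key="fake",  # placeholder; use a real key if the server checks it
        embed_batch_size=10,  # number of texts sent per embedding request
    )

2. Calling models on public platforms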

2.1 Calling GLM

Refer to the official documentation at https://docs.bigmodel.cn/cn/guide/develop/http/introduction to find the api_base, then fill it in below.

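    from llama_index.llms.openai_like import OpenAILike

    llm = OpenAILike(
        model="glm-4",
        api_base="https://open.bigmodel.cn/api/paas/v4/",  # taken from the BigModel HTTP API docs
        api_key="YOUR_API_KEY",  # replace with your own key; never publish a real key
        context_window=128000,  # maximum context length, in tokens
        is_chat_model=True,  # use the chat completions endpoint
        is_function_calling_model=False,
        max_tokens=1024,  # upper bound on generated tokens
        temperature=0.3,  # lower values give more deterministic output
    )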
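A key written directly into source code is easy to leak when the code is shared. As one possible alternative, here is a minimal sketch that reads the key from an environment variable. The variable name `ZHIPUAI_API_KEY` and the helper `load_api_key` are assumptions made for this illustration, not names required by LlamaIndex or the GLM platform.

```python
import os


def load_api_key(env_var: str = "ZHIPUAI_API_KEY") -> str:
    """Return the API key stored in `env_var`, failing loudly if it is missing.

    The default variable name is a hypothetical choice for this sketch.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before running.")
    return key


# Demo only: a real key would be exported in the shell, not set in code.
os.environ.setdefault("ZHIPUAI_API_KEY", "demo-key-not-real")
api_key = load_api_key()
print(bool(api_key))
```

The returned value can then be passed as `api_key=load_api_key()` when constructing the client, so the secret never appears in the file itself.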

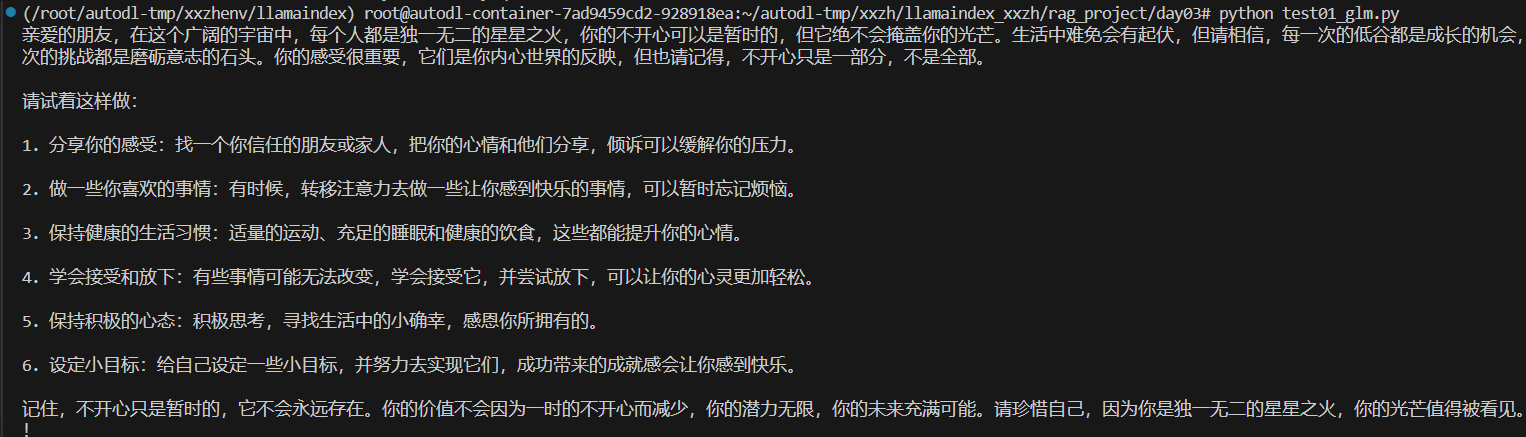

    # Prompt (in Chinese): "I am Xingxing Zhihuo, I'm feeling down, cheer me up!"
    response = llm.complete("我是星星之火,我不开心,开导我!")
    print(str(response))

Execution result: