Model Deployment (Part 3): A Complete Walkthrough of Deploying a YOLOv8 (v8.2.99) Object Detection Project on Android

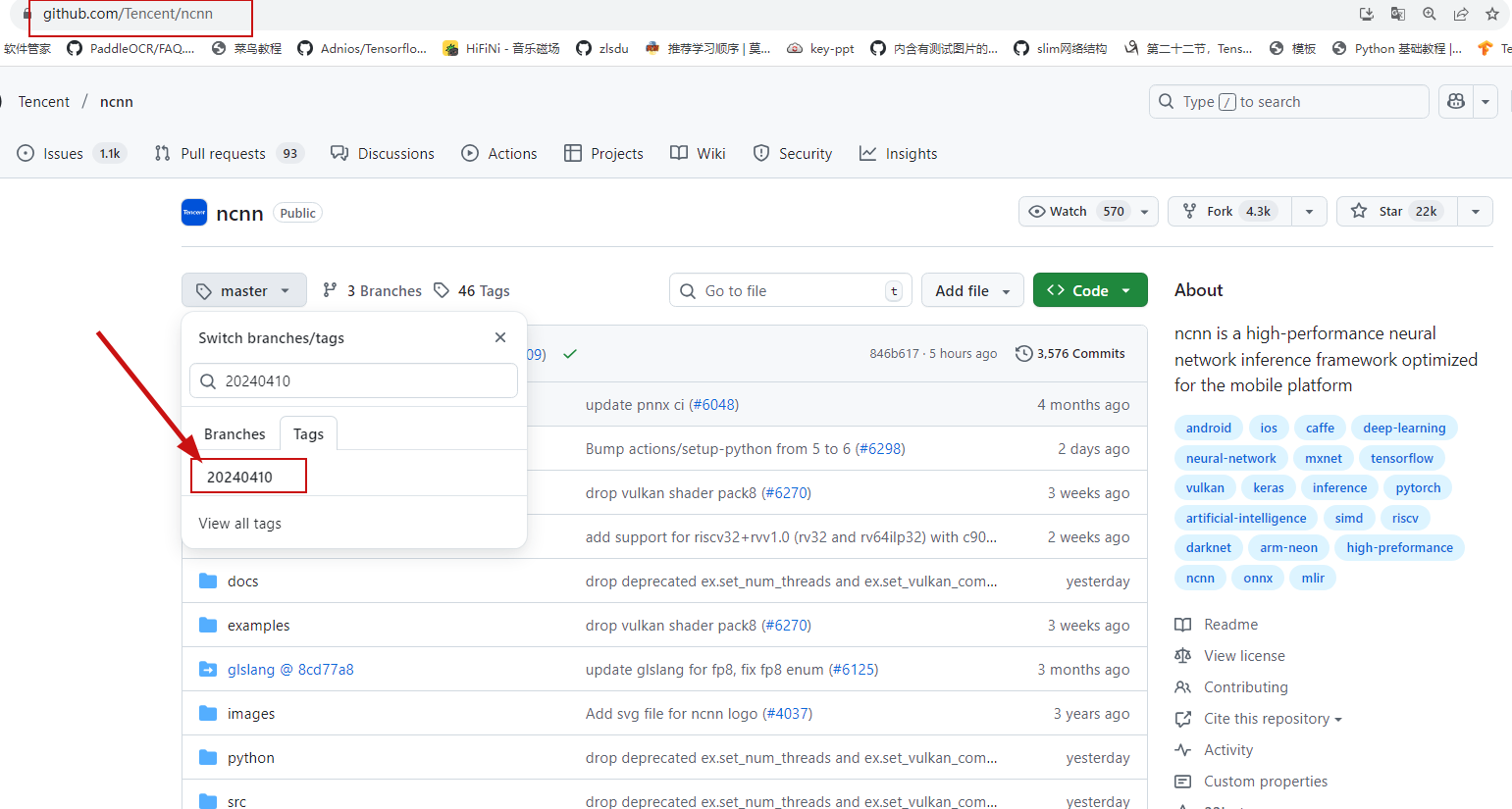

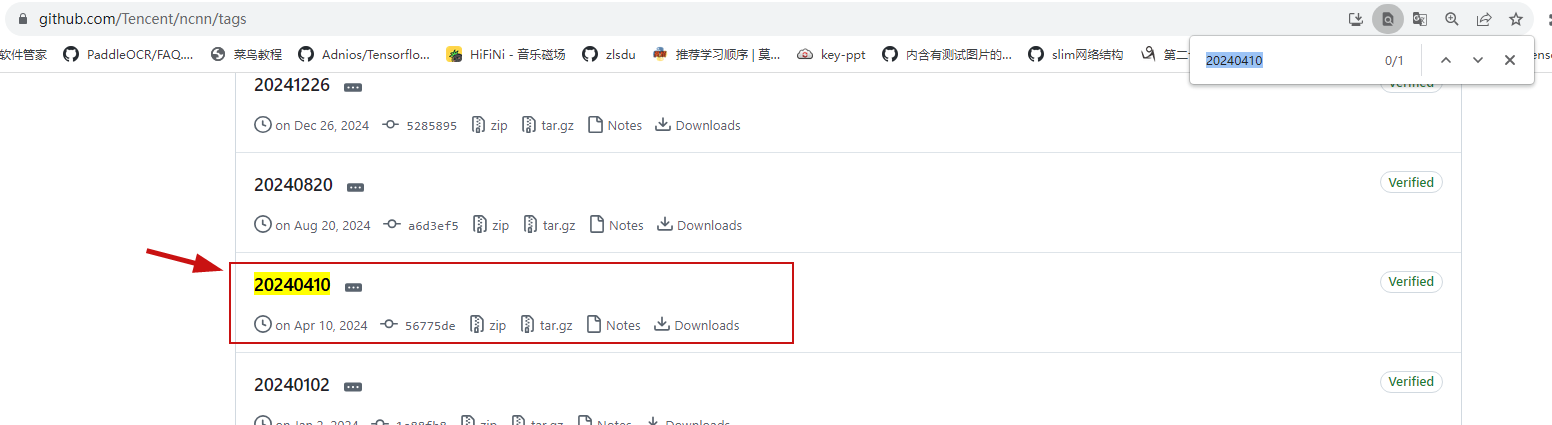

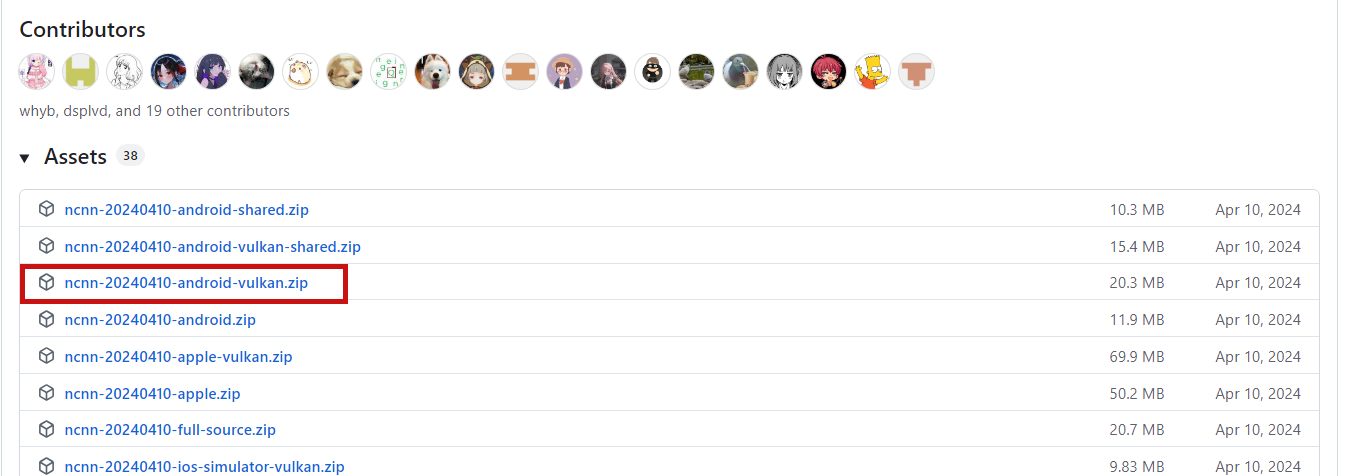

1. Download ncnn

https://github.com/Tencent/ncnn/releases/tag/20240410

Download the archive and extract it.

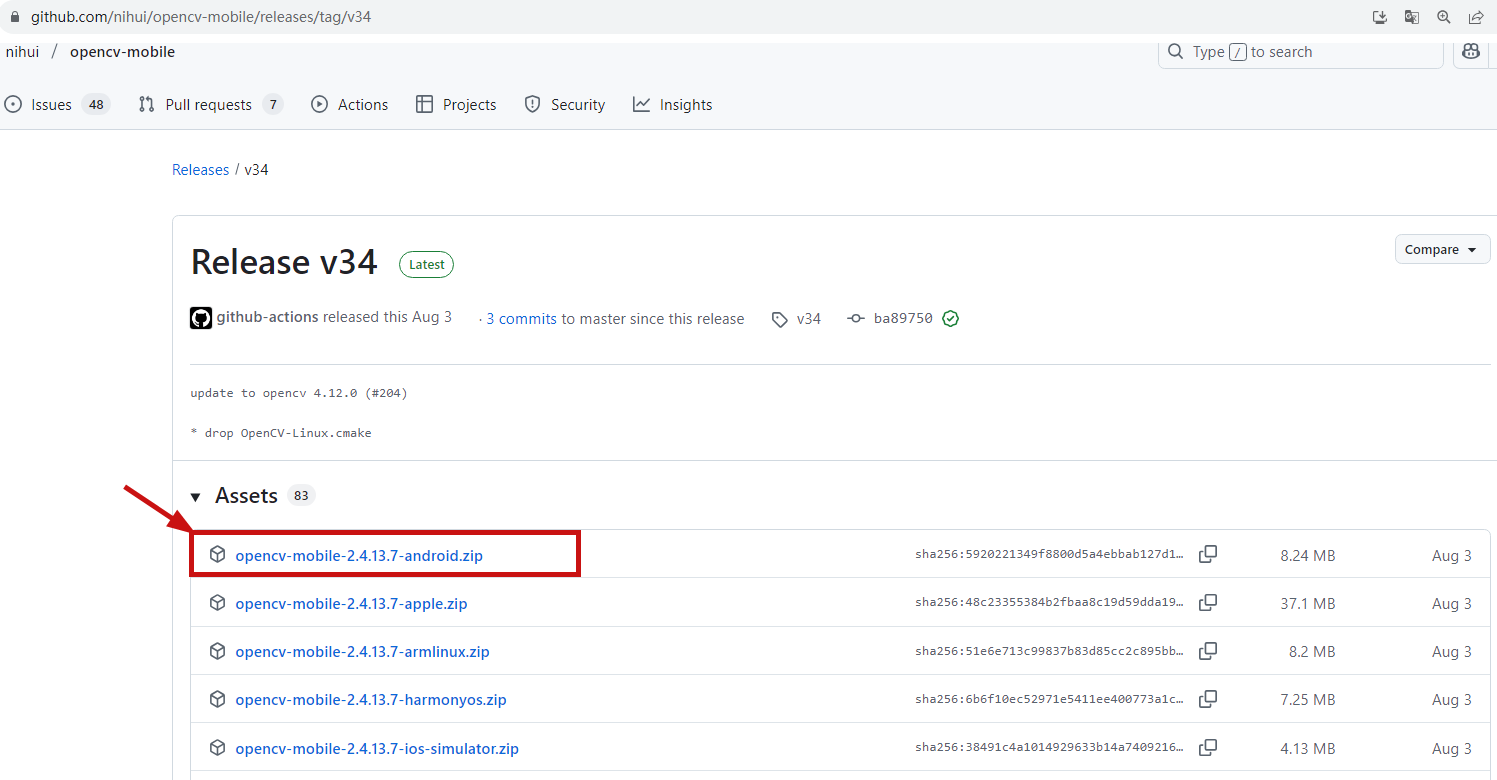

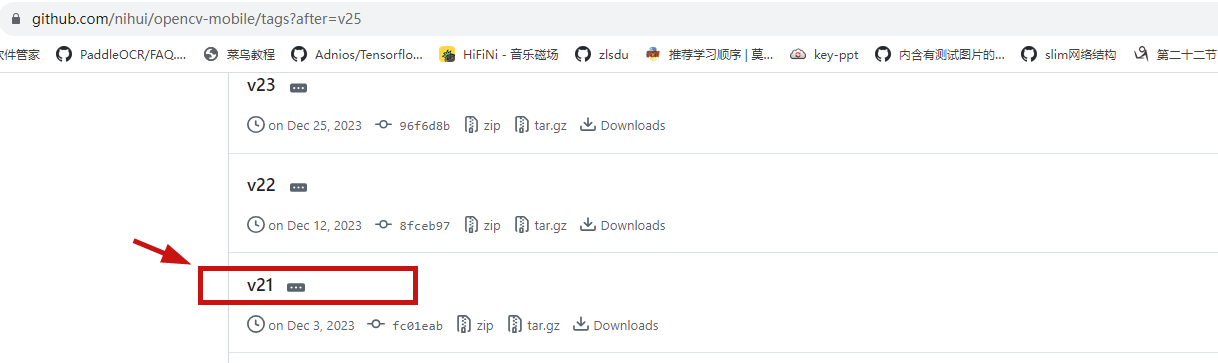

2. Download opencv-mobile

https://github.com/nihui/opencv-mobile

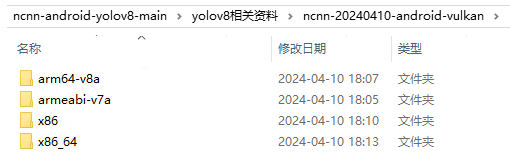

4. Copy and configure the dependency files

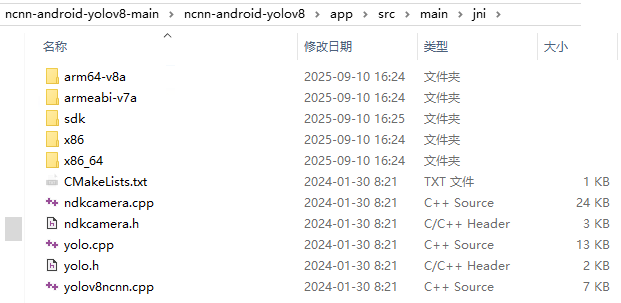

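Copying the files:

Copy the four folders extracted from ncnn-20240410-android-vulkan into the ncnn-android-yolov8\app\src\main\jni directory.

Then extract opencv-mobile-XYZ-android.zip and copy its contents into the same ncnn-android-yolov8\app\src\main\jni directory.

After copying, the directory looks like this: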

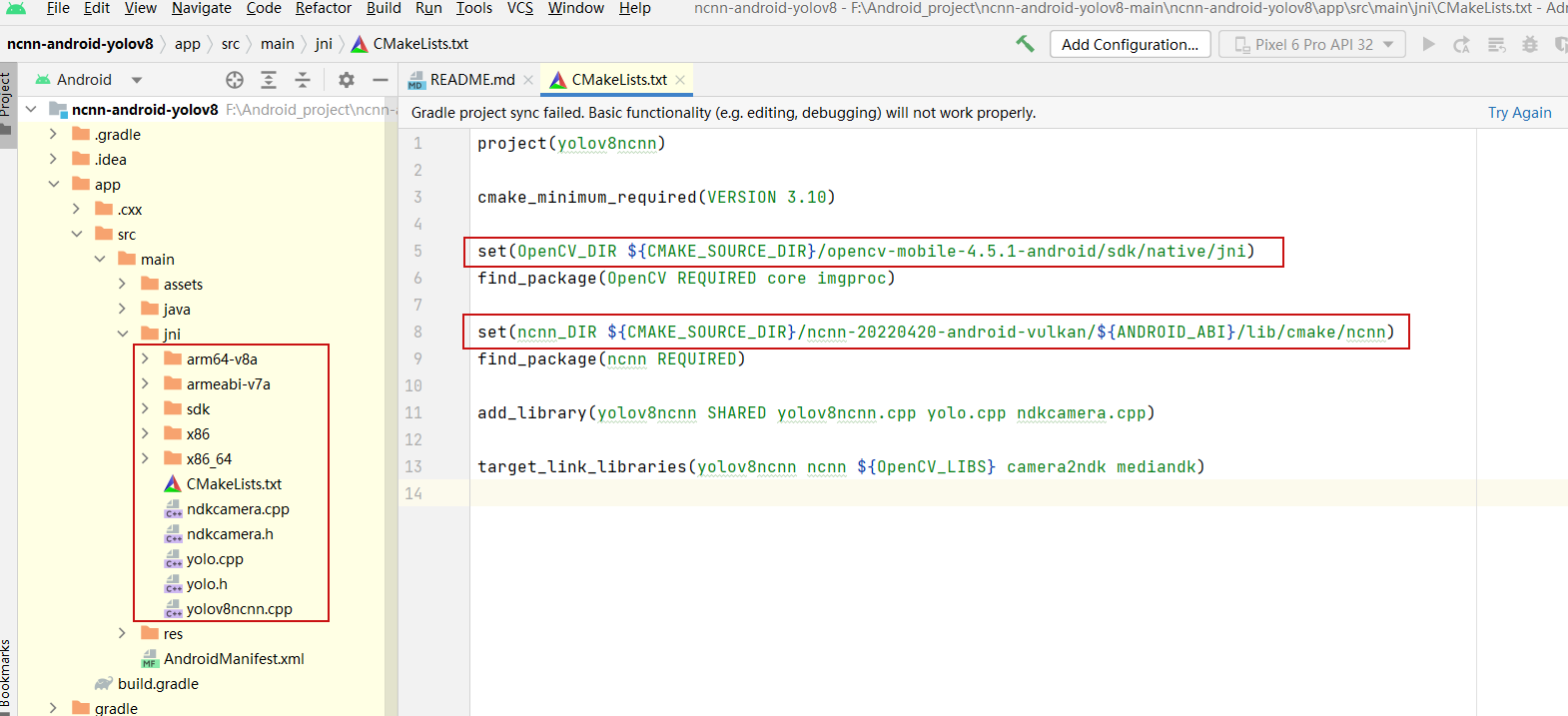

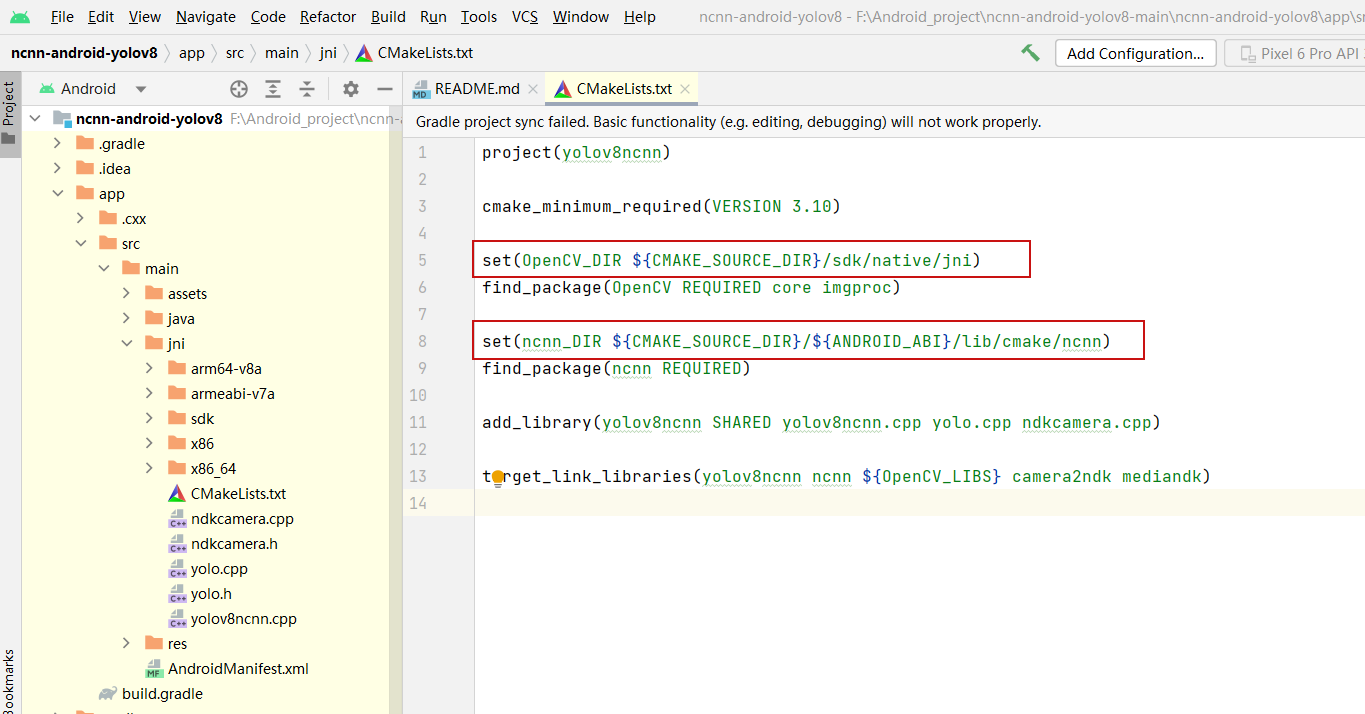
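A quick way to confirm the copy landed in the right place is to check for a few of the static libraries the build links against. The sketch below is only an illustration: the two relative paths are taken from the linker command in the build log shown later in this article, the helper name `missing_dependencies` is made up, and other ABIs are assumed to follow the same pattern.

```python
from pathlib import Path

# Relative paths copied from the linker command in this article's build log.
# Other ABIs (for example arm64-v8a) are assumed to follow the same layout.
EXPECTED = (
    "armeabi-v7a/lib/libncnn.a",
    "sdk/native/libs/armeabi-v7a/libopencv_core.a",
)


def missing_dependencies(jni_dir, expected=EXPECTED):
    """Return the expected library files that are absent under jni_dir."""
    root = Path(jni_dir)
    return [rel for rel in expected if not (root / rel).is_file()]
```

Calling `missing_dependencies(r"ncnn-android-yolov8\app\src\main\jni")` from the project's parent folder should return an empty list once both archives have been copied correctly.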

Modifying the configuration file:

After the change ↓↓↓:

Before the change ↓↓↓:

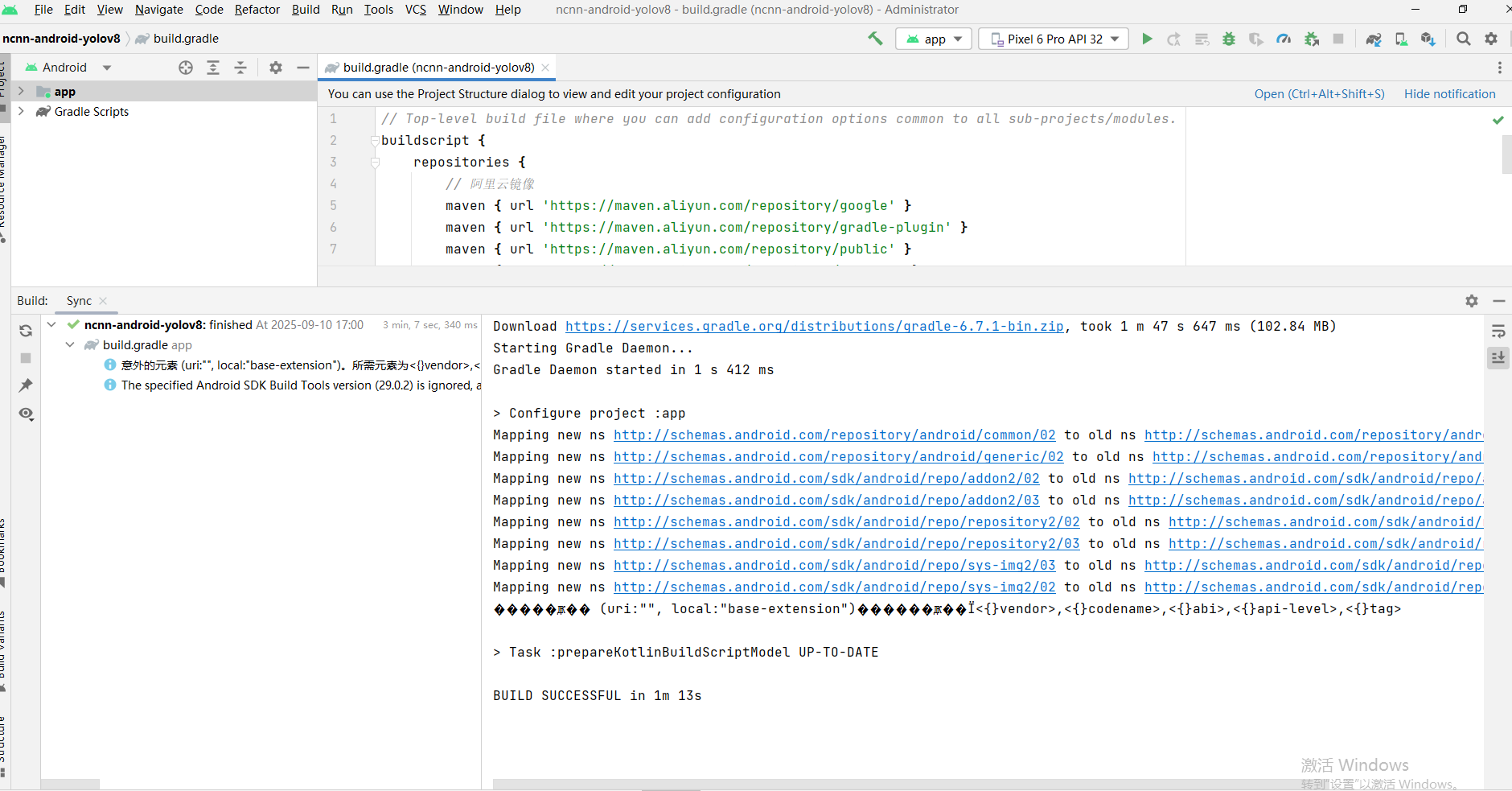

Changing the repository mirrors:

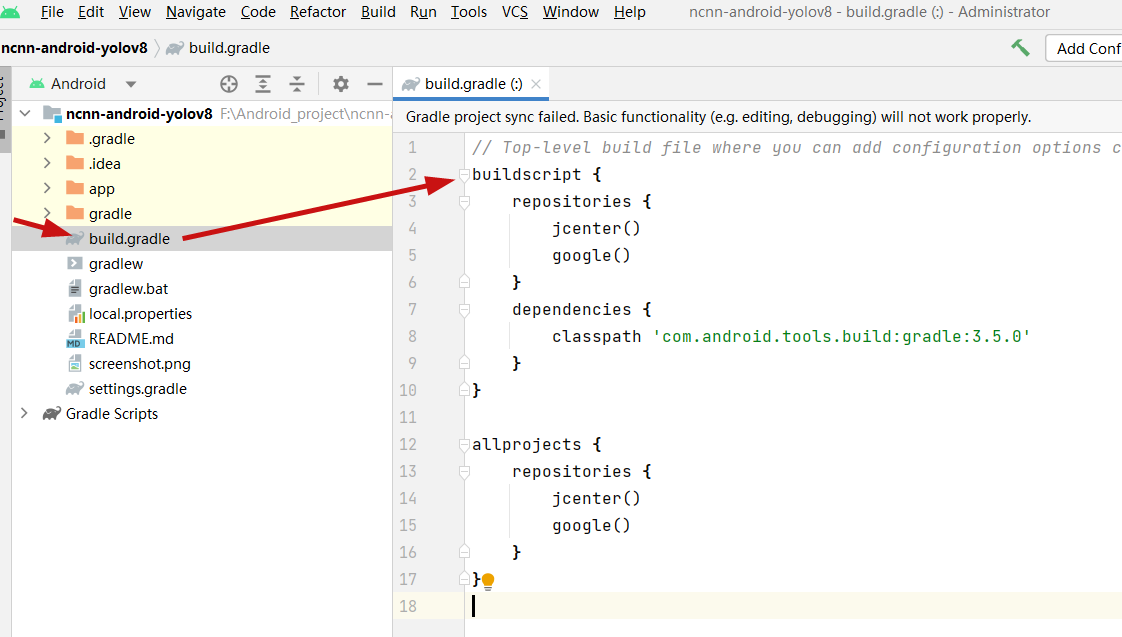

Change the build.gradle file to the following:

// Top-level build file where you can add configuration options common to all sub-projects/modules.
buildscript {
    repositories {
        // Aliyun mirrors
        maven { url 'https://maven.aliyun.com/repository/google' }
        maven { url 'https://maven.aliyun.com/repository/gradle-plugin' }
        maven { url 'https://maven.aliyun.com/repository/public' }
        maven { url 'https://maven.aliyun.com/repository/central' }
        // Tsinghua mirrors (fallback)
        maven { url 'https://mirrors.tuna.tsinghua.edu.cn/maven/google' }
        maven { url 'https://mirrors.tuna.tsinghua.edu.cn/maven/central' }
        mavenCentral()
    }
    dependencies {
        classpath 'com.android.tools.build:gradle:4.2.2'
    }
}

allprojects {
    repositories {
        // Aliyun mirrors
        maven { url 'https://maven.aliyun.com/repository/google' }
        maven { url 'https://maven.aliyun.com/repository/public' }
        maven { url 'https://maven.aliyun.com/repository/central' }
        // Tsinghua mirrors (fallback)
        maven { url 'https://mirrors.tuna.tsinghua.edu.cn/maven/google' }
        maven { url 'https://mirrors.tuna.tsinghua.edu.cn/maven/central' }
        mavenCentral()
    }
}

Then complete the following configuration:

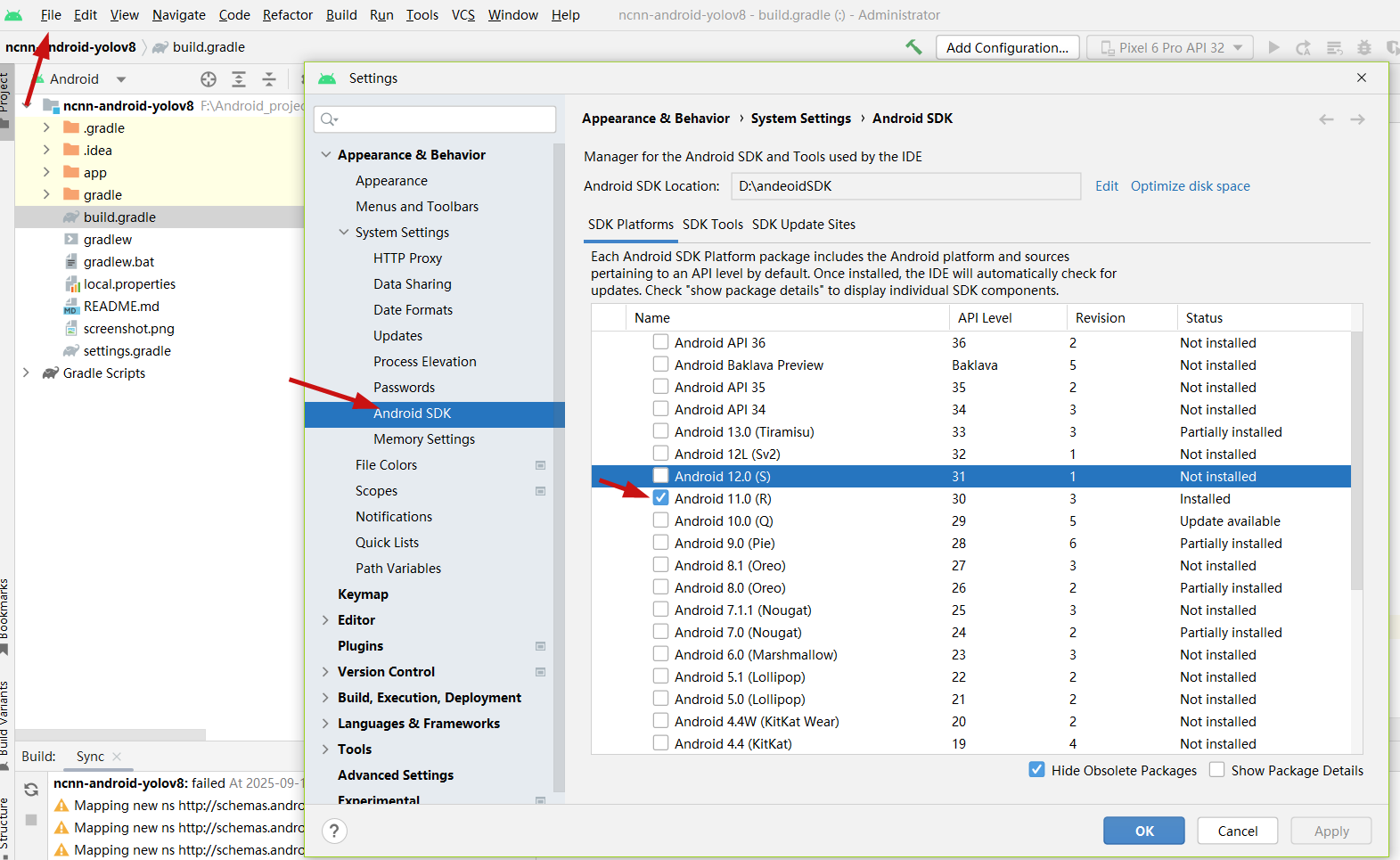

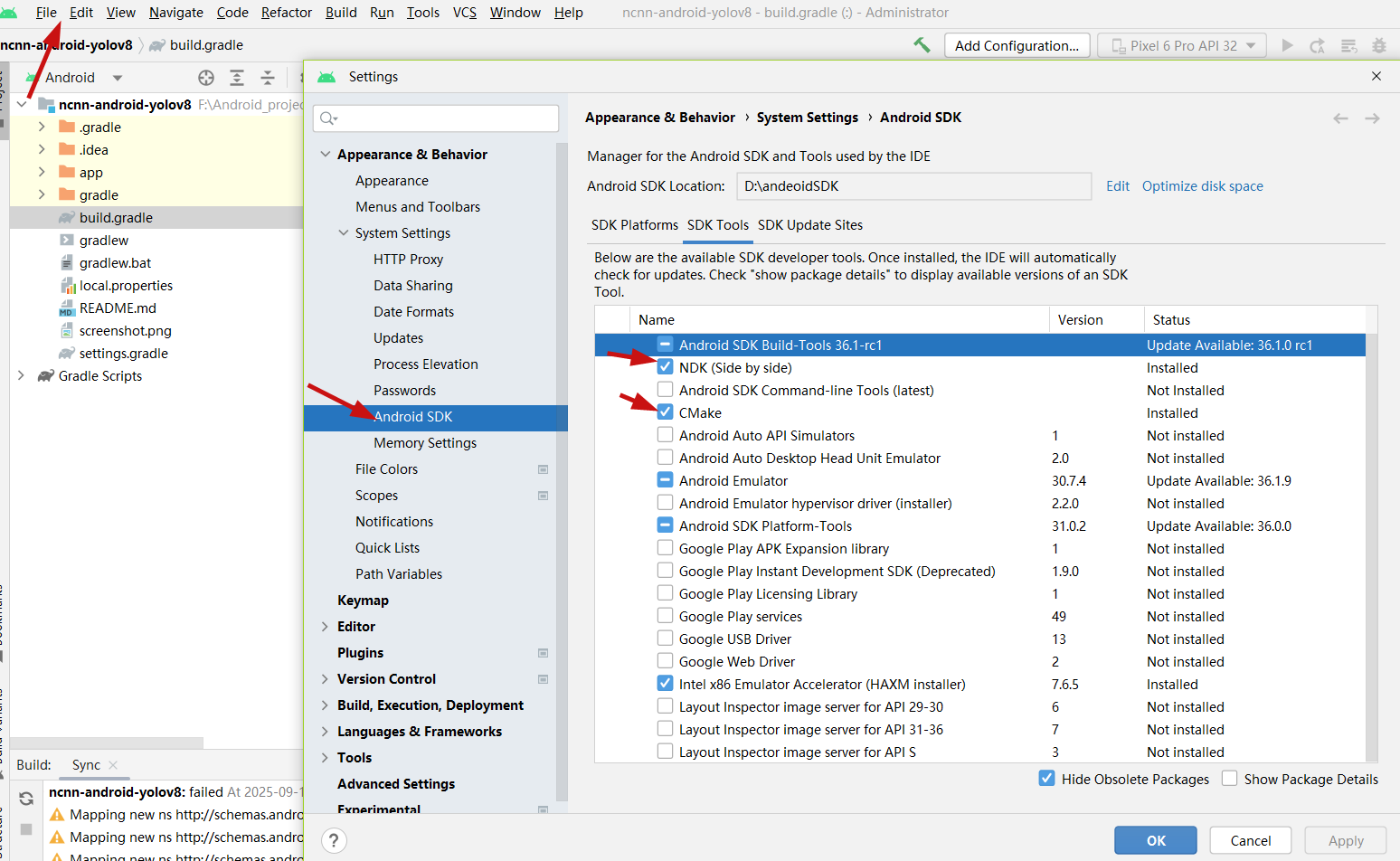

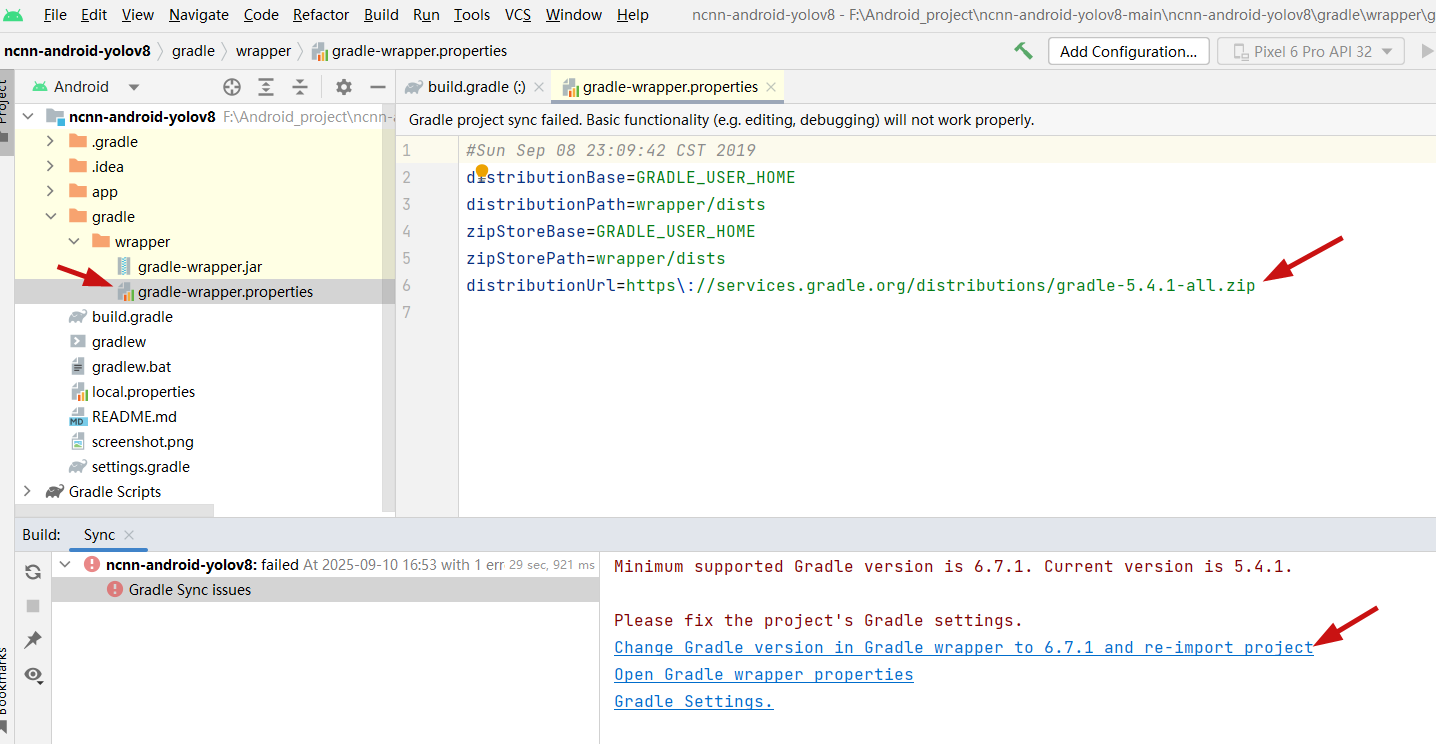

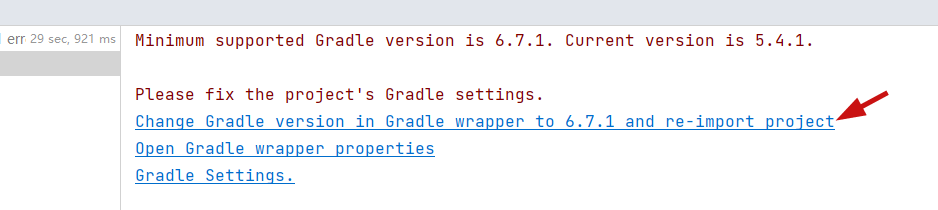

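During the build, a prompt appears. Click the following link in it:

Change Gradle version in Gradle wrapper to 6.7.1 and re-import project

After you click it, the required dependencies start downloading. If the download is slow, point distributionUrl in gradle/wrapper/gradle-wrapper.properties at a mirror:

distributionUrl=https://mirrors.cloud.tencent.com/gradle/gradle-6.7.1-bin.zip

Once the download finishes, the build completes.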
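If you switch mirrors often, the wrapper setting can be rewritten with a small script instead of by hand. This is a minimal sketch that works on the text of a gradle-wrapper.properties file; the function name `set_distribution_url` is made up for illustration, and reading and writing the file is left to the caller.

```python
import re


def set_distribution_url(properties_text, url):
    """Return properties_text with its distributionUrl entry pointing at url."""
    line = "distributionUrl=" + url
    pattern = re.compile(r"^distributionUrl=.*$", flags=re.MULTILINE)
    if pattern.search(properties_text):
        # A function replacement keeps backslashes in url from being read as escapes.
        return pattern.sub(lambda _match: line, properties_text)
    # No existing entry: append one at the end of the file.
    return properties_text.rstrip("\n") + "\n" + line + "\n"
```

The other keys in the file are left untouched, so the result can be written straight back to gradle/wrapper/gradle-wrapper.properties.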

5. Modify the relevant configuration files

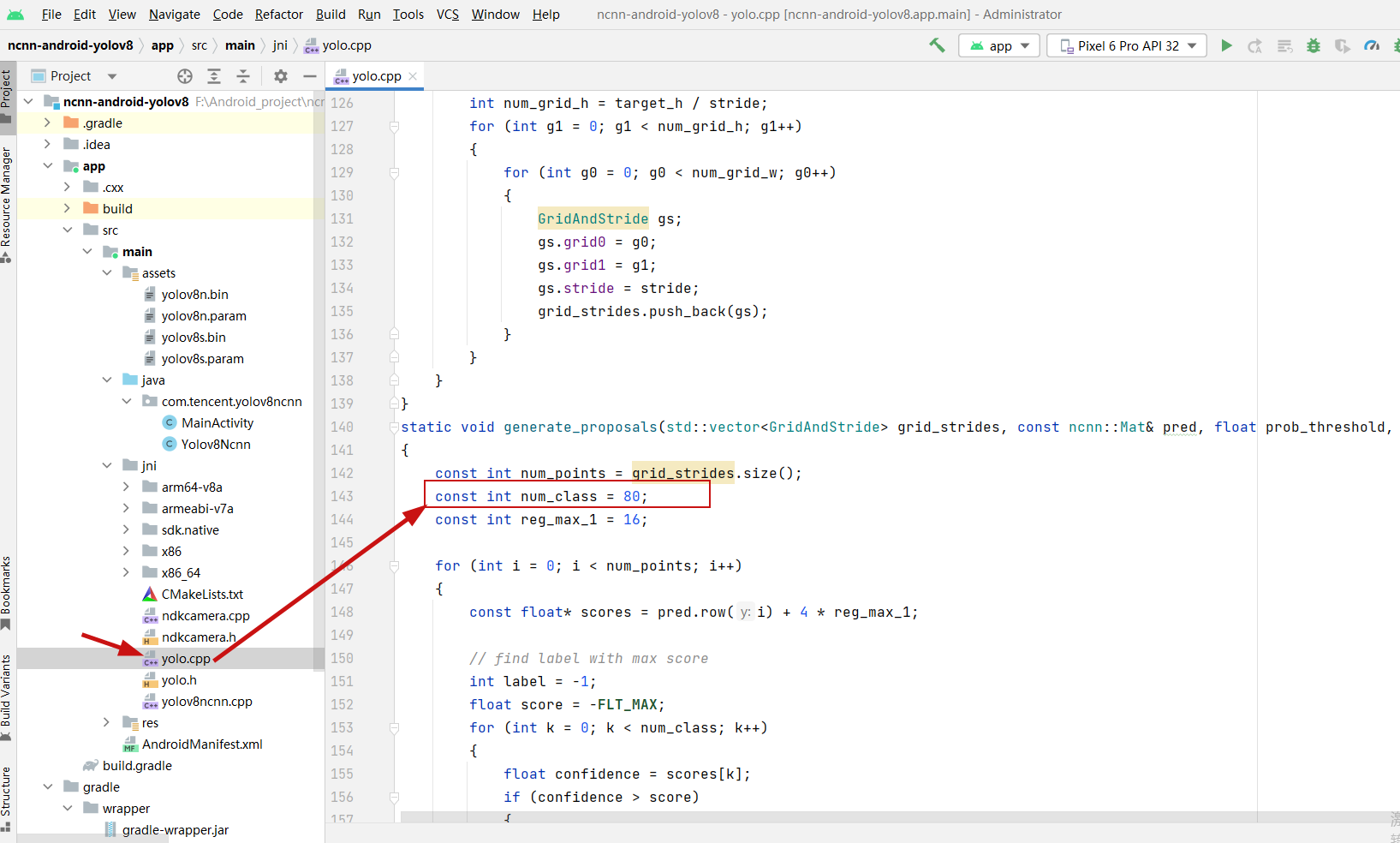

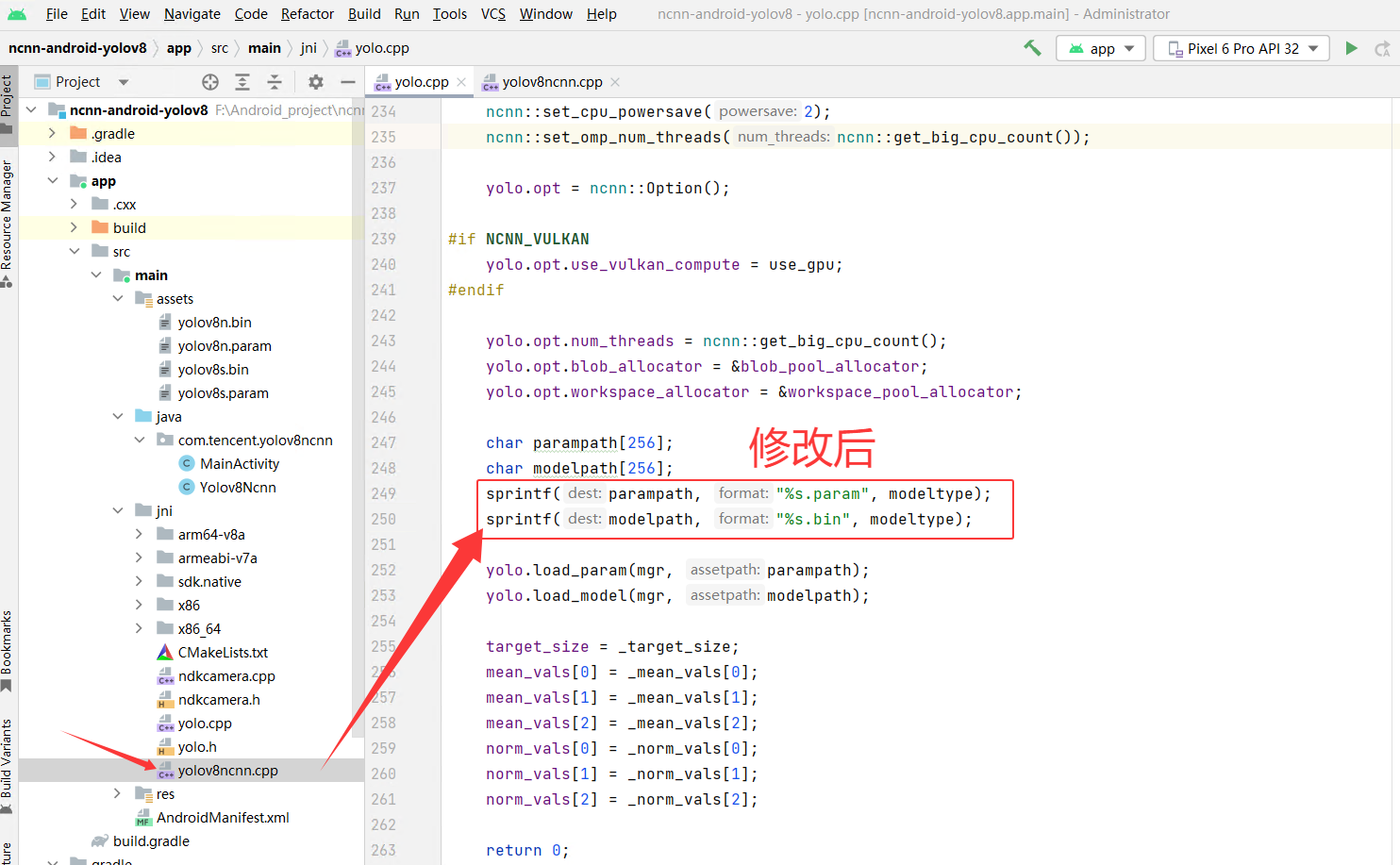

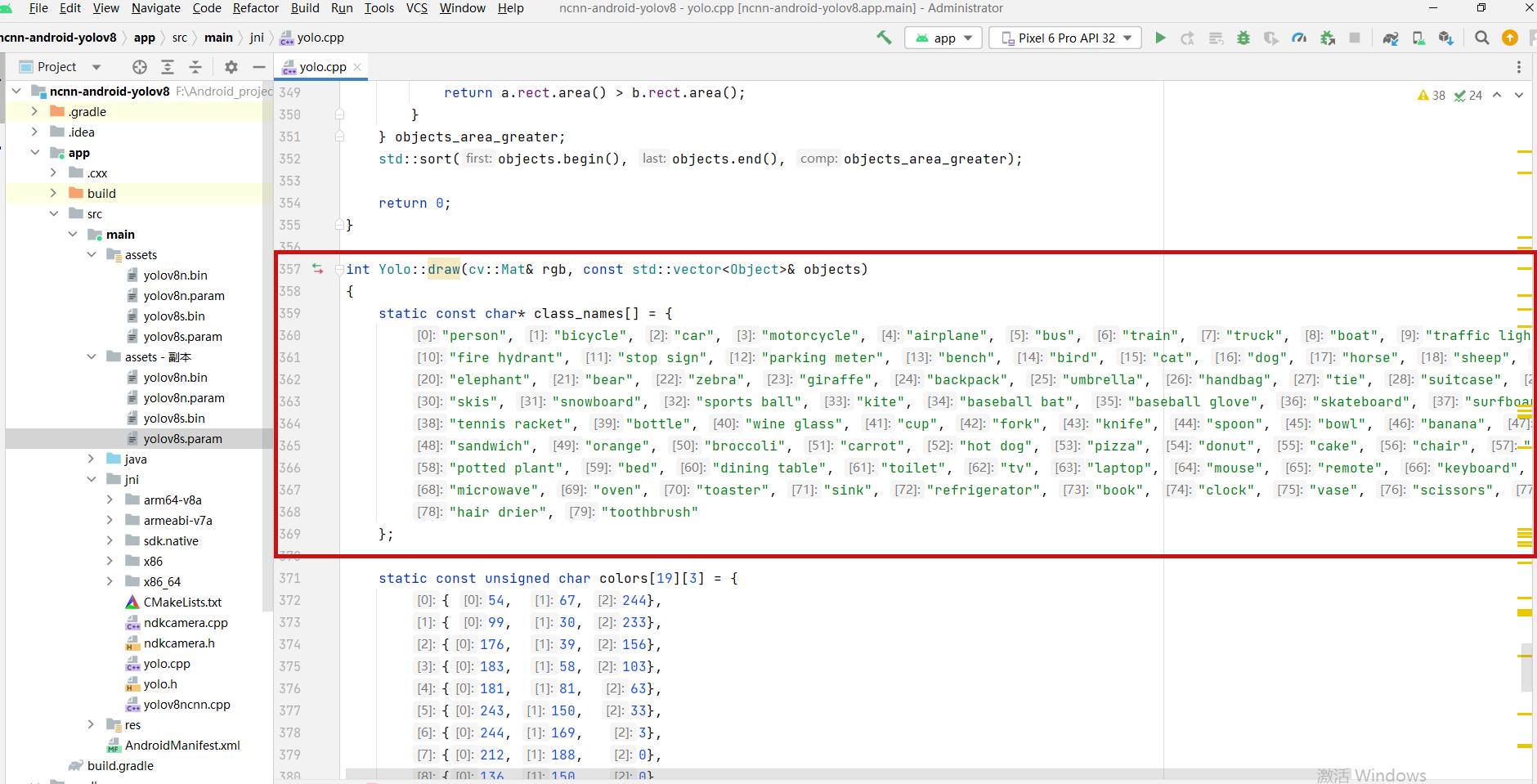

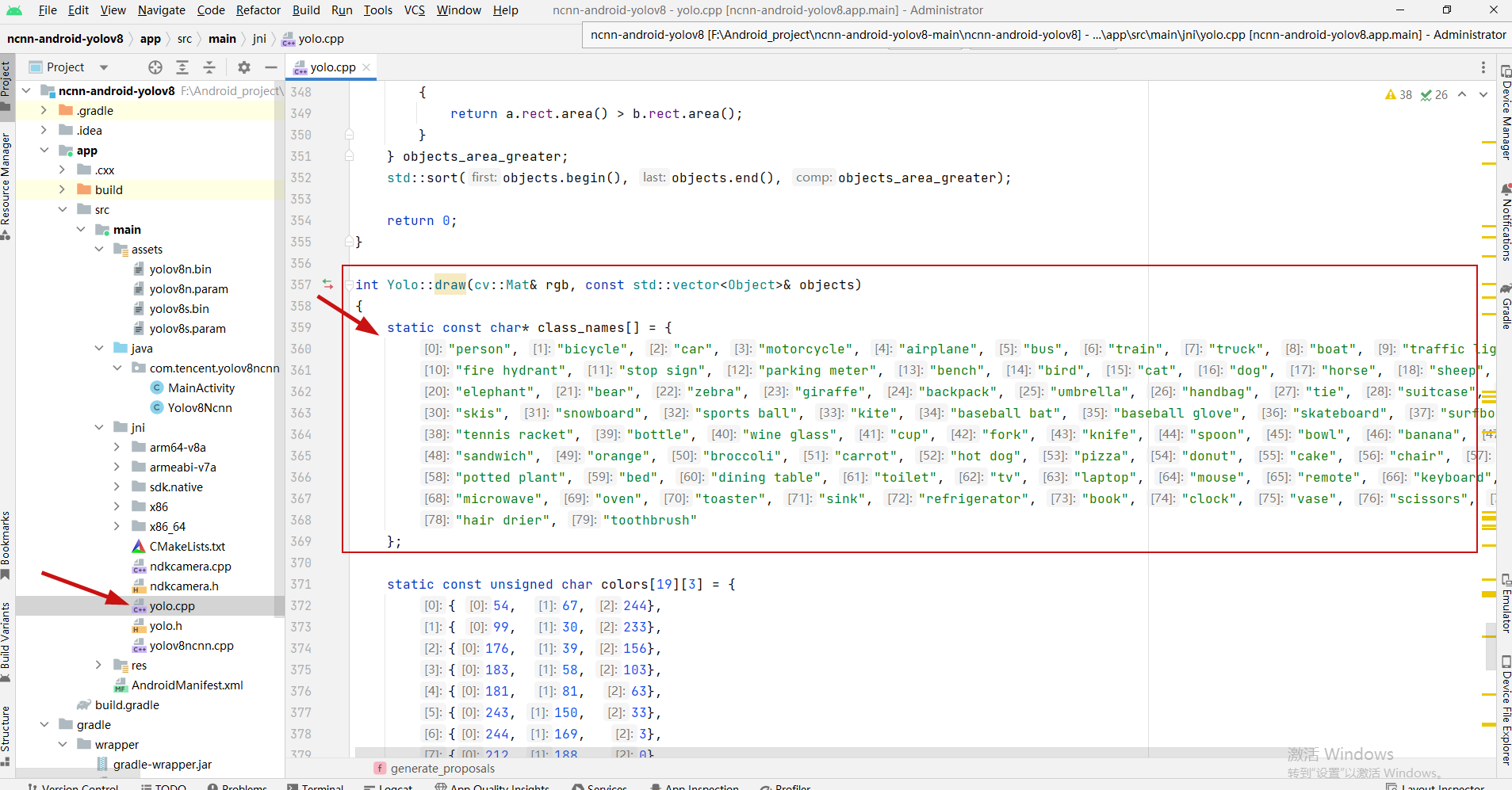

1. Modify the yolo.cpp file

Change the number of classes

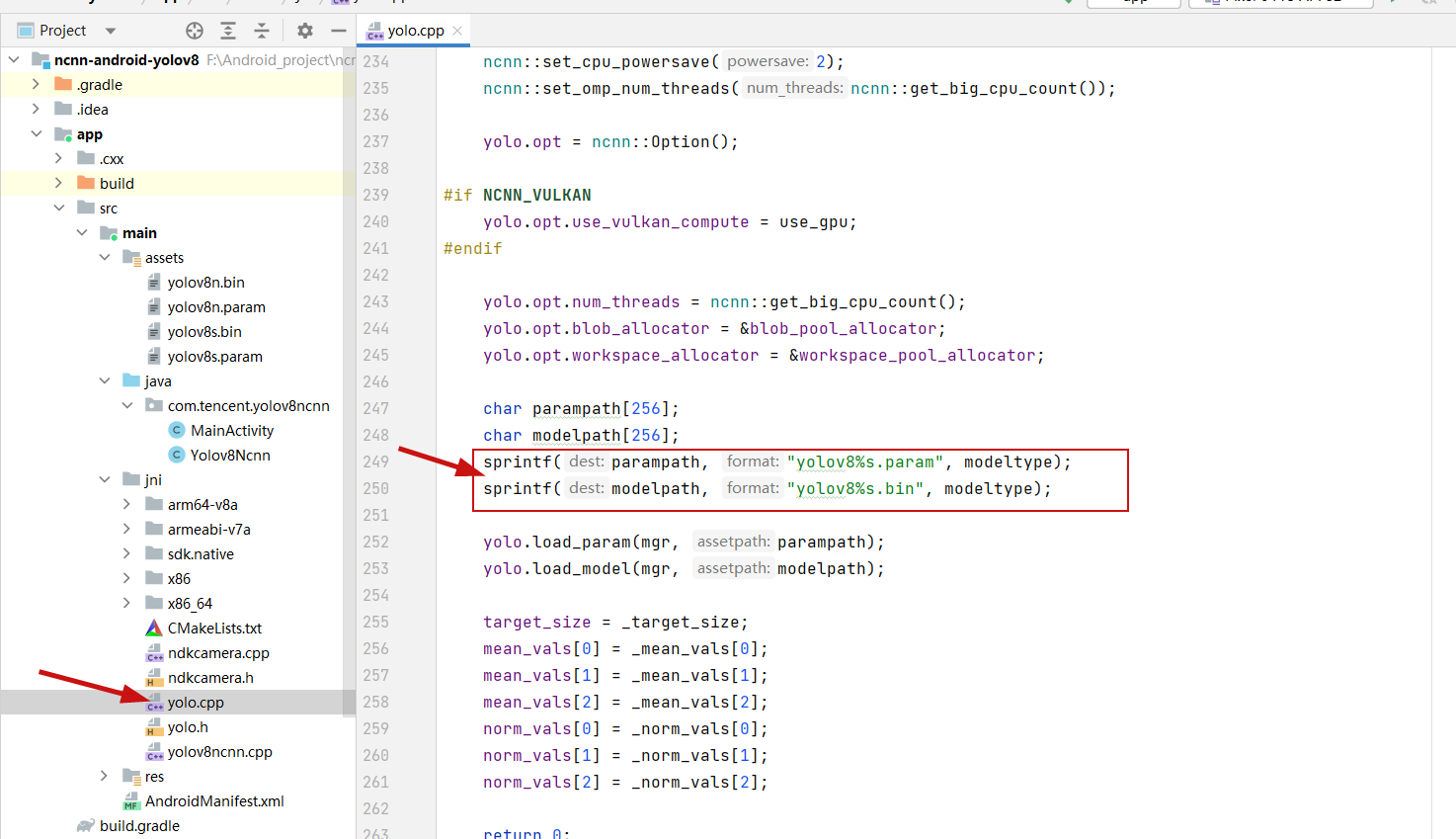

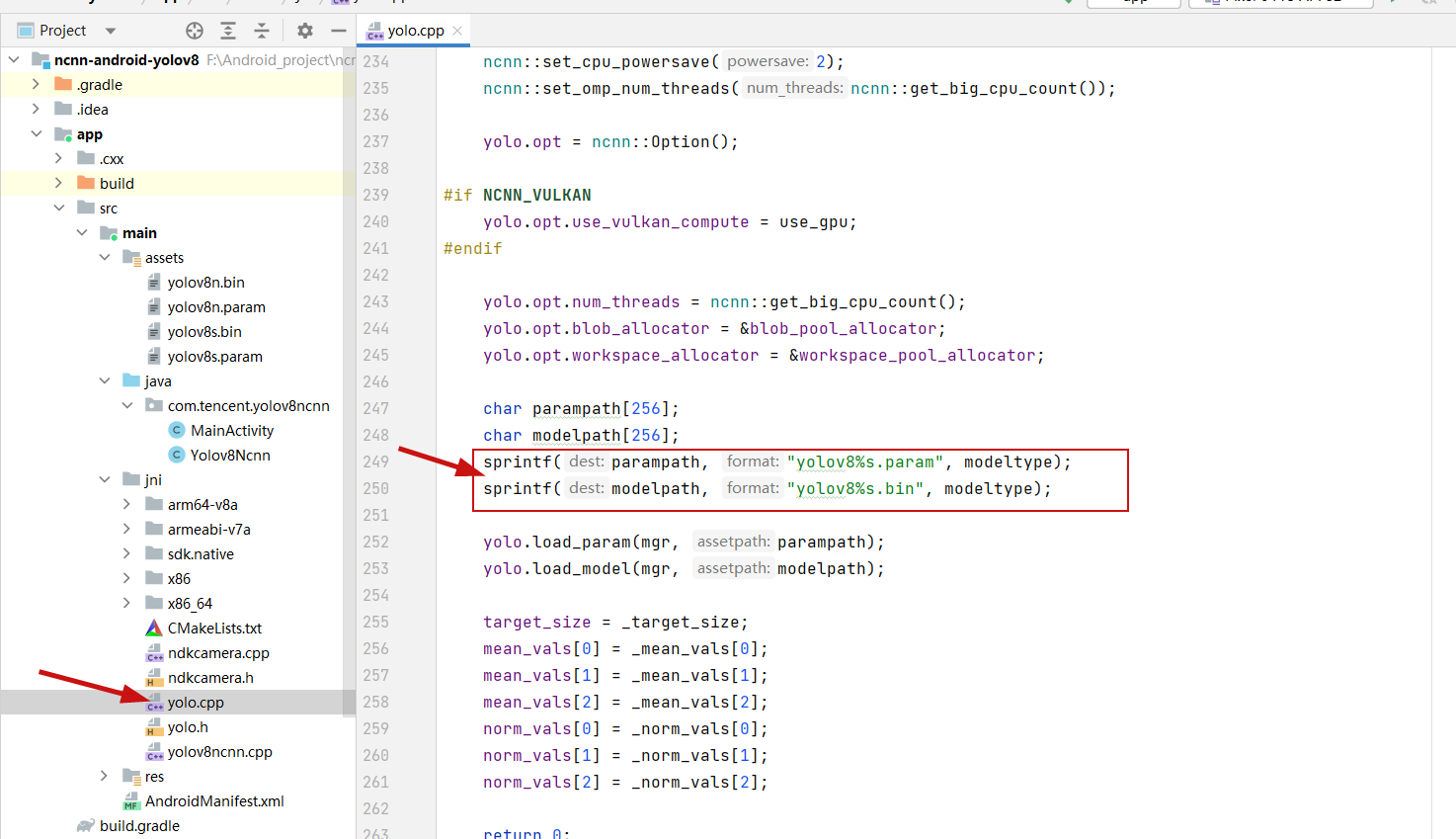

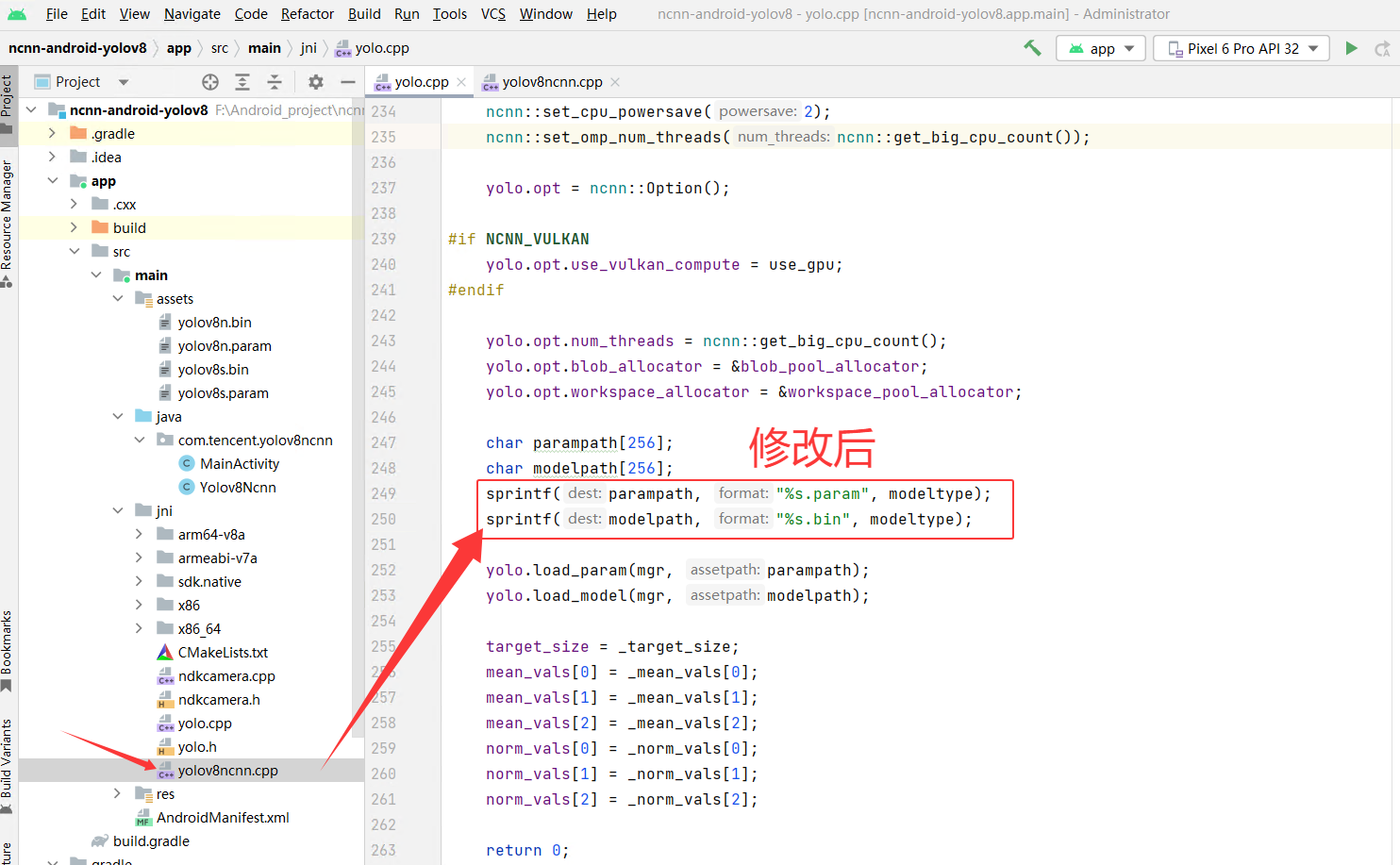

Change the model name

Before:

After:

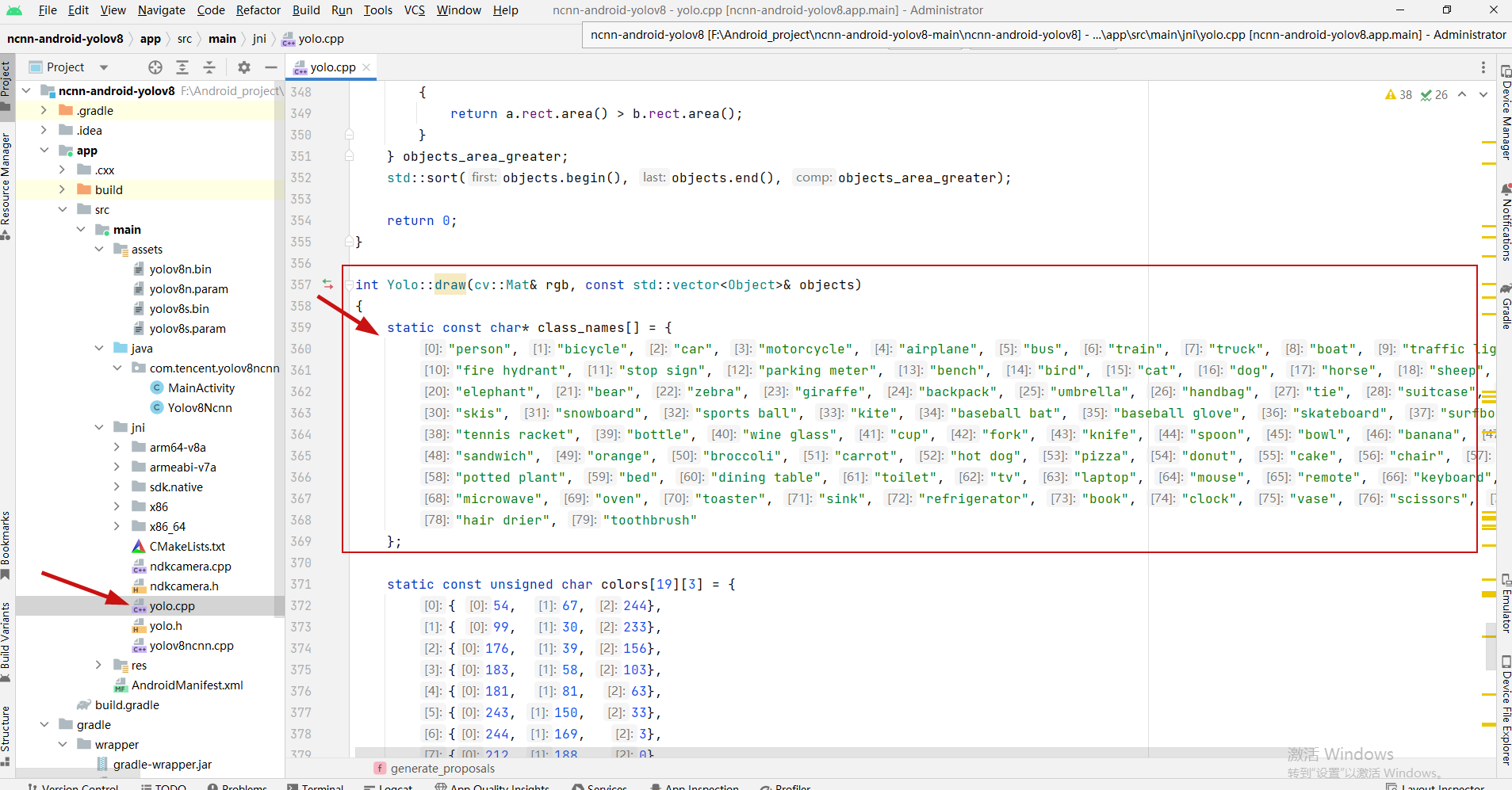

Change the labels of the classes to detect:

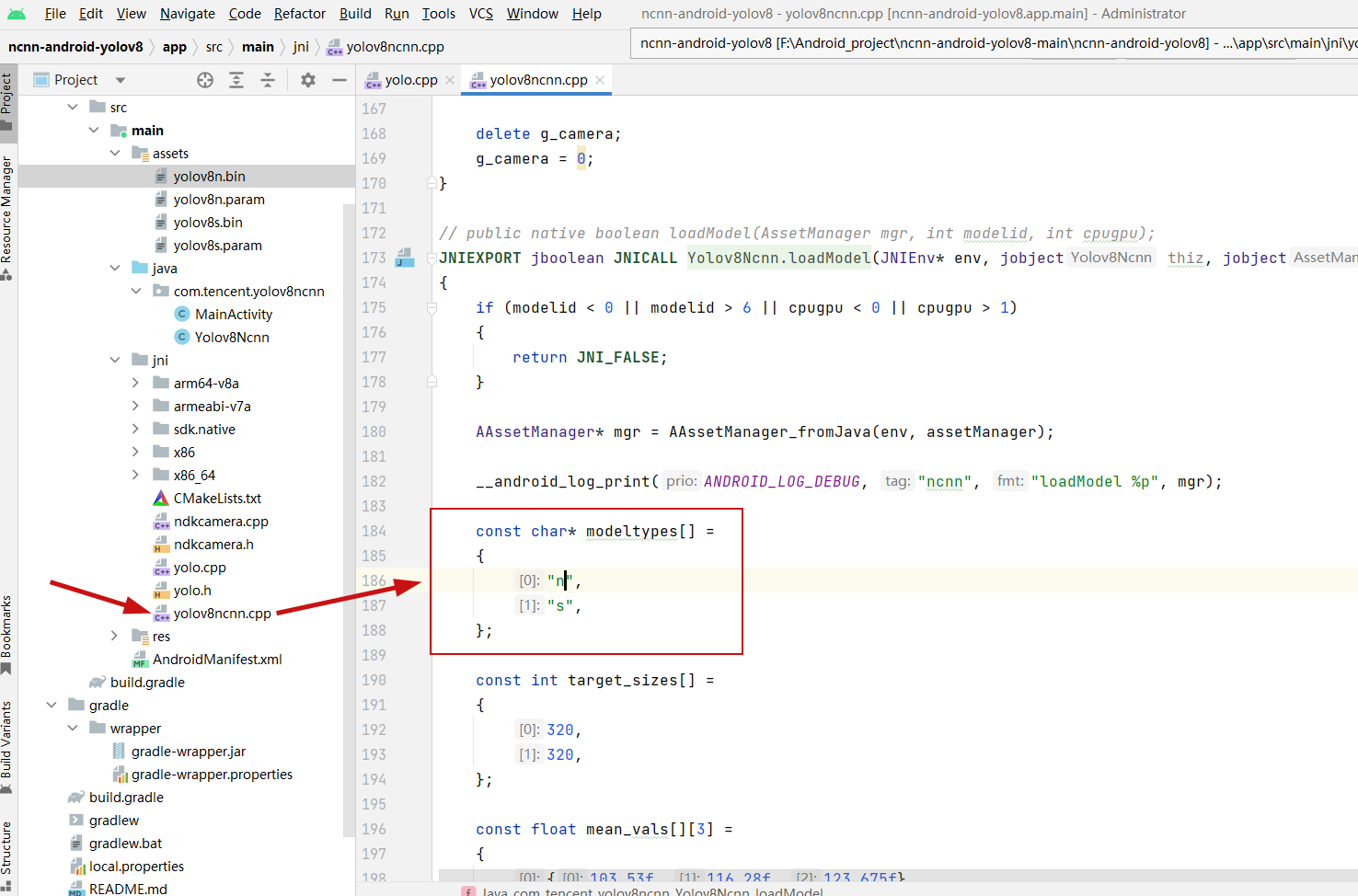

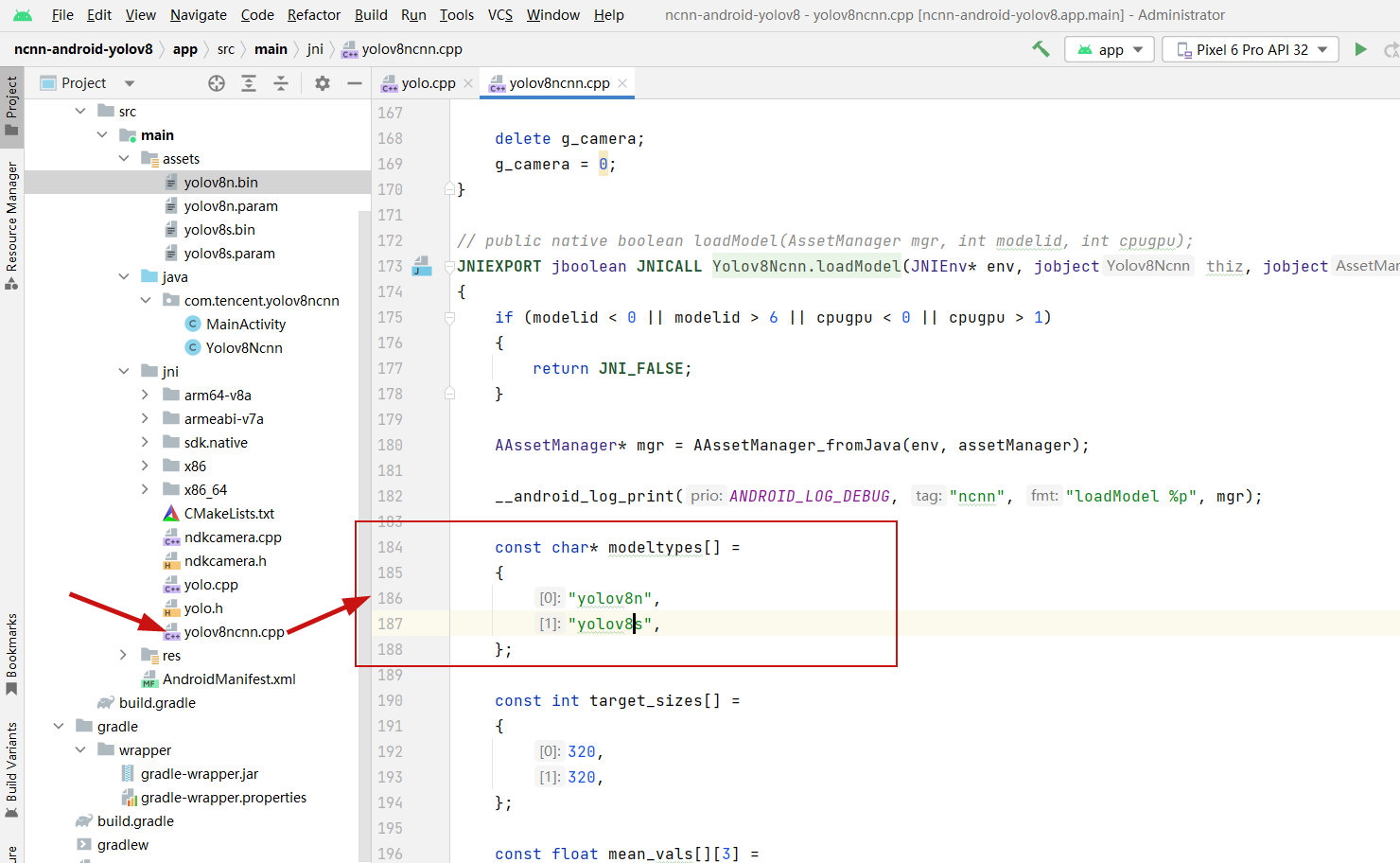

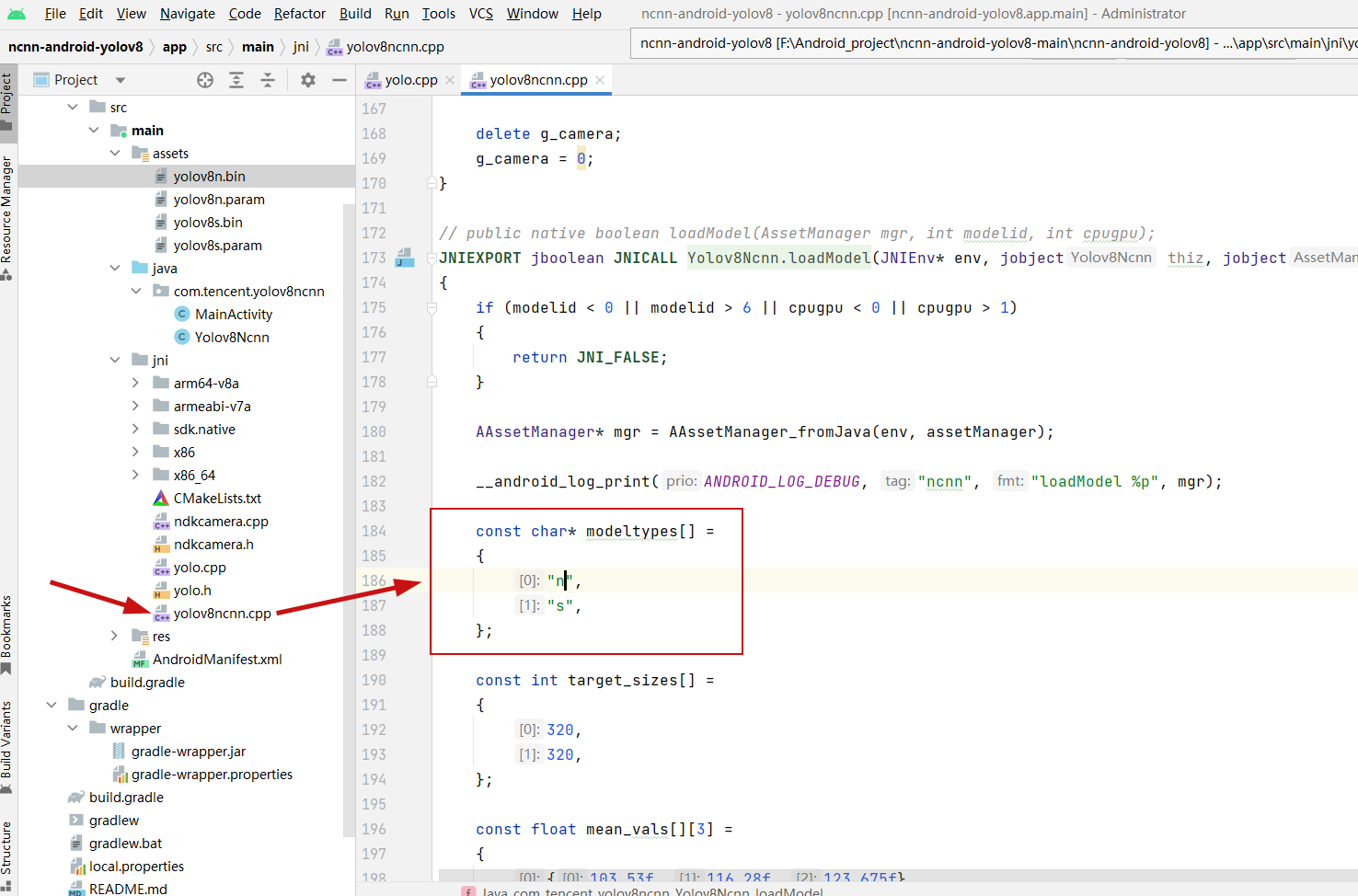

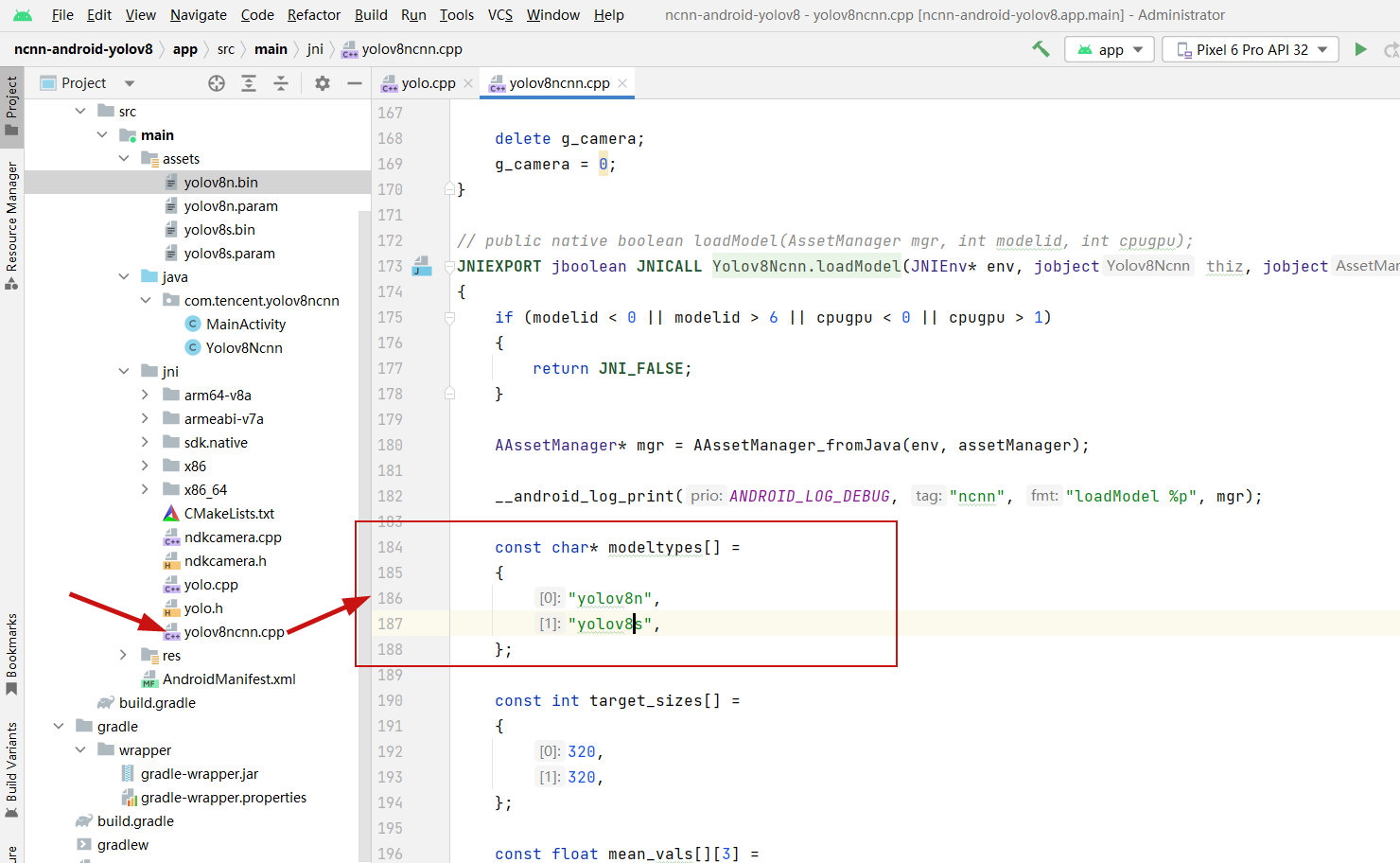
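When the label list is long, typing it into yolo.cpp by hand is error-prone, so it can help to generate the C++ snippet from the same list used for training. The sketch below is an assumption-laden helper, not part of the project: the identifiers `class_names` and `num_class` are assumed to match those in yolo.cpp, so check them against your copy before pasting the output.

```python
def cpp_class_names(labels, array_name="class_names"):
    """Render labels as a C++ string-array definition plus a matching count."""
    for label in labels:
        if '"' in label or "\\" in label:
            raise ValueError(f"label needs manual escaping: {label!r}")
    body = ", ".join(f'"{label}"' for label in labels)
    return (
        f"static const char* {array_name}[] = {{{body}}};\n"
        f"static const int num_class = {len(labels)};"
    )
```

Generating both lines from one list keeps the class count and the label array from drifting apart.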

2. Modify the yolov8ncnn.cpp file

The yolov8ncnn.cpp file is in the same directory.

Before:

After:

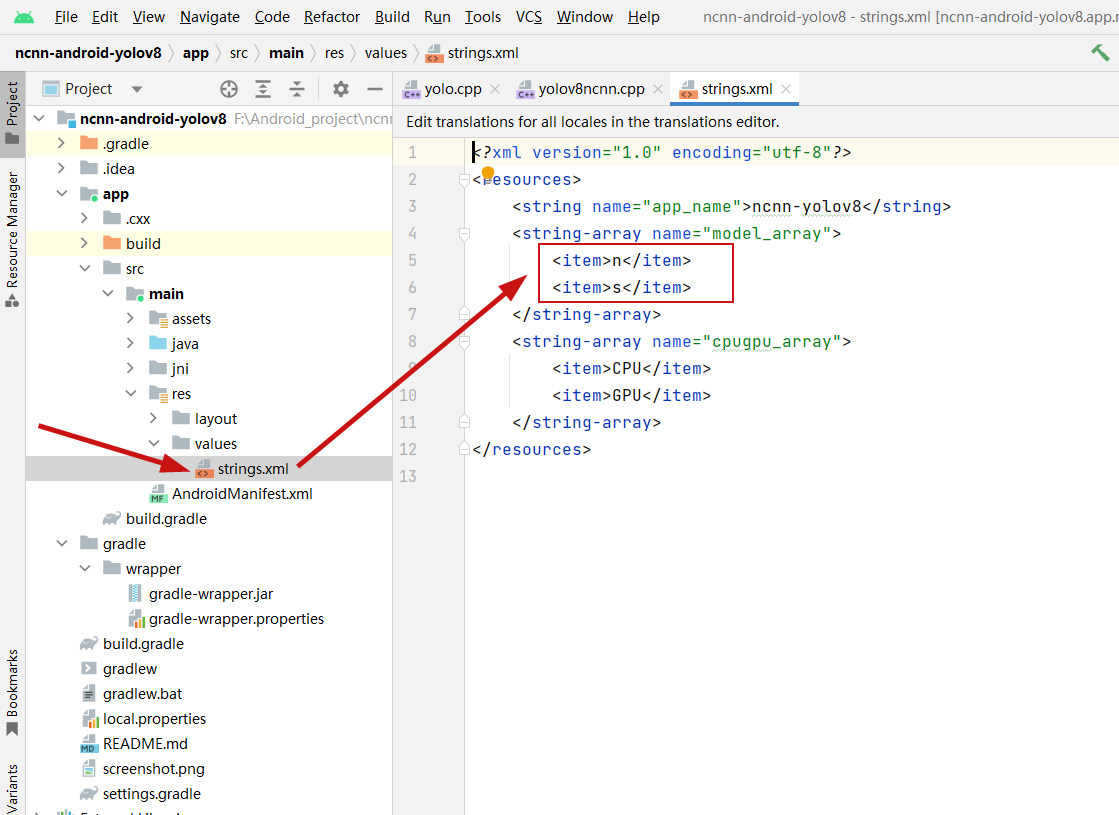

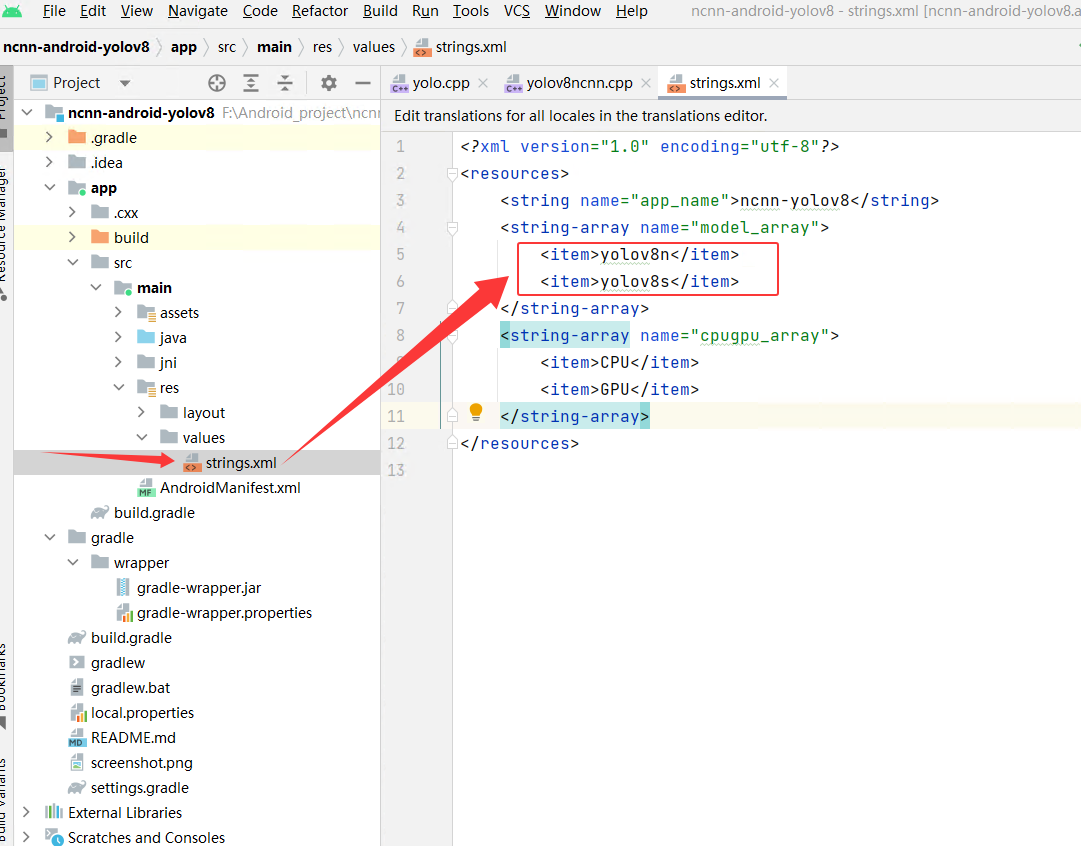

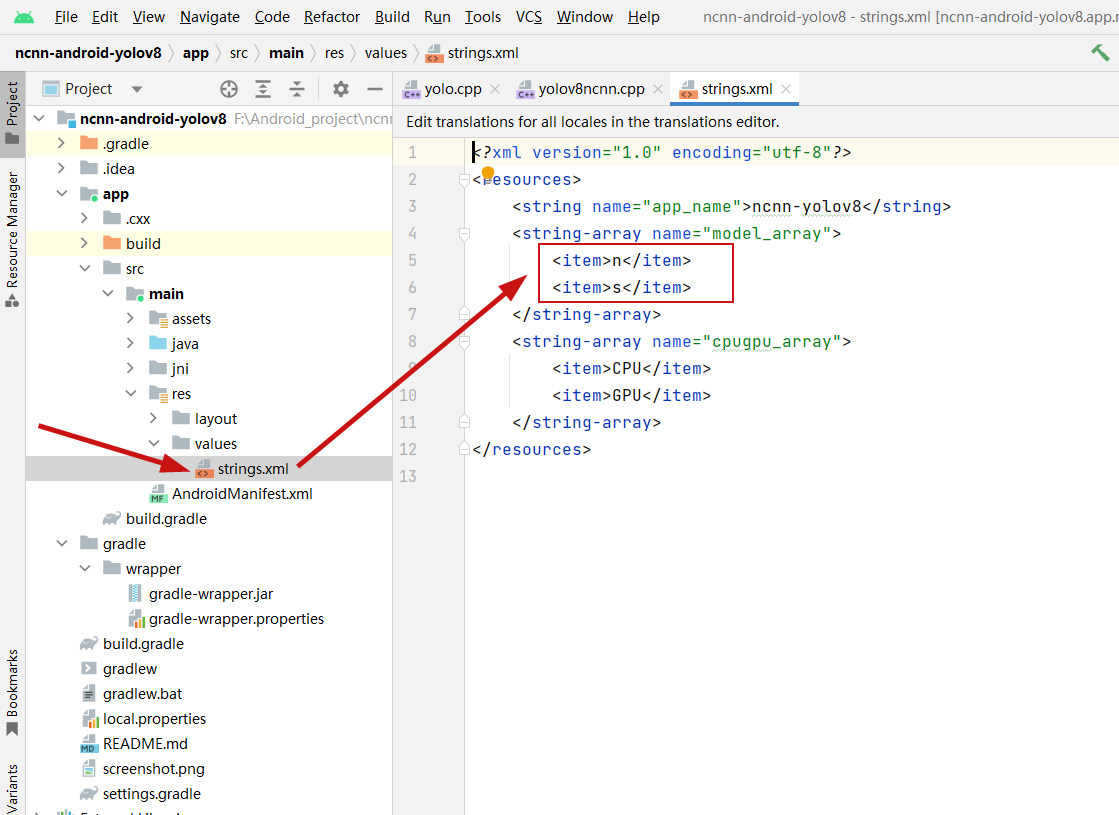

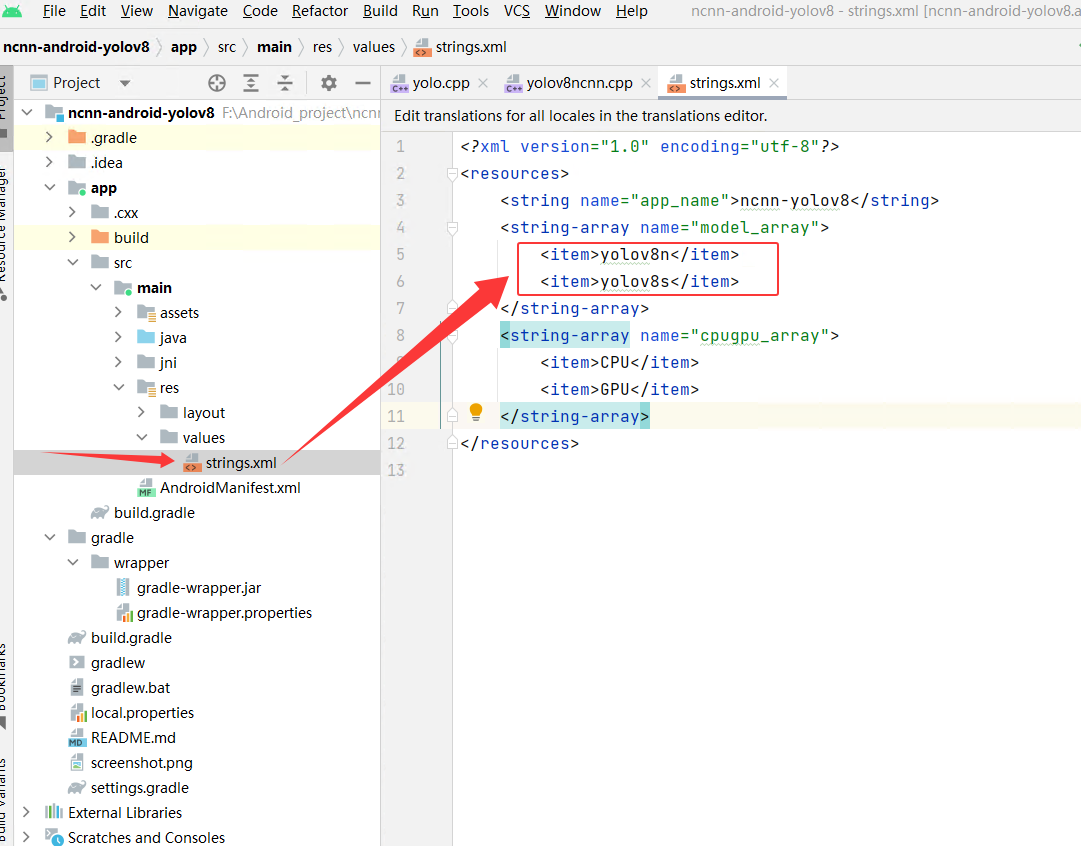

3. Modify the strings.xml file

It is in the ncnn-android-yolov8\app\src\main\res\values directory:

Before:

After:

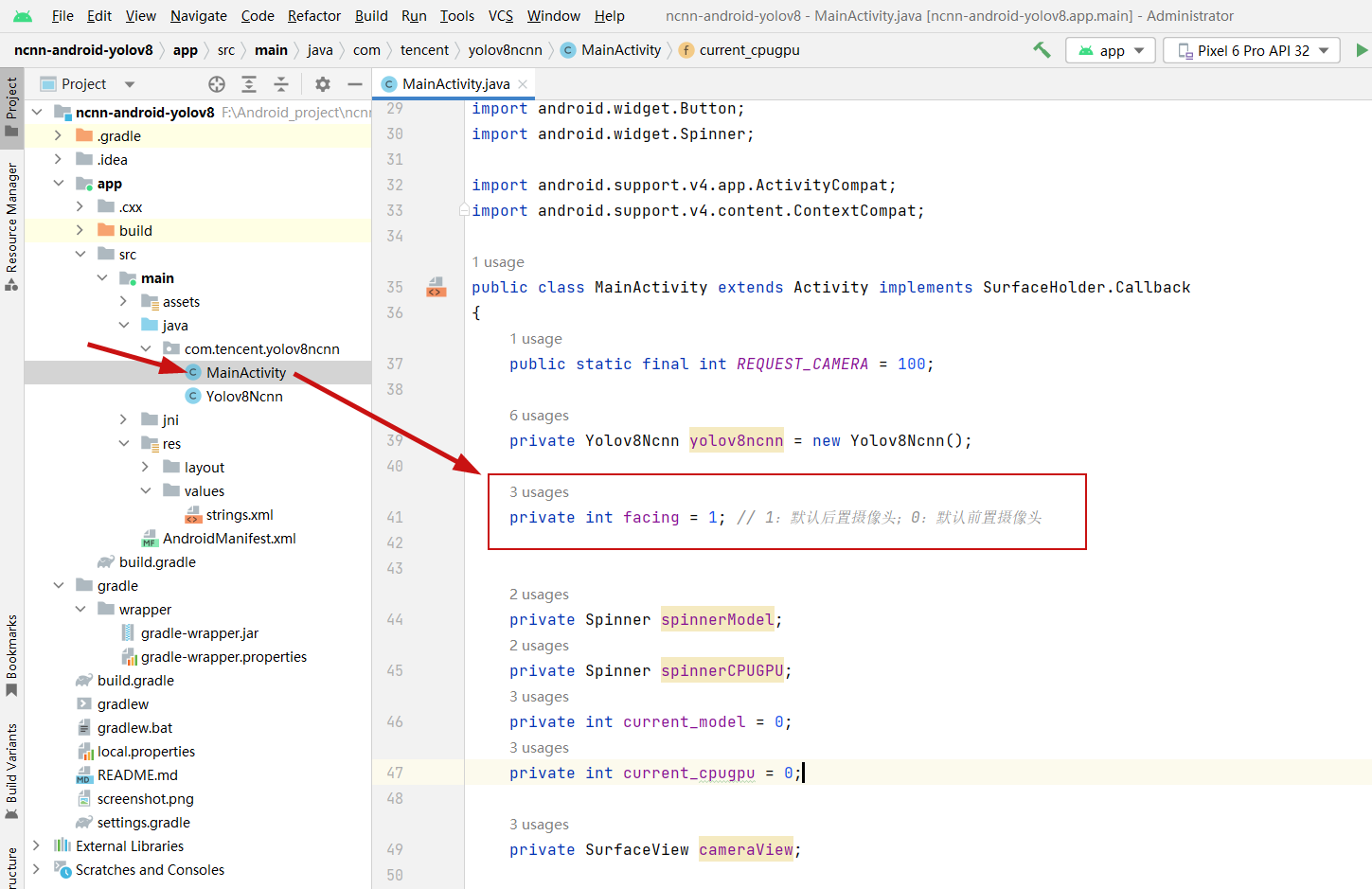

4. Set whether the app opens the rear or front camera by default

File: ncnn-android-yolov8\app\src\main\java\com\tencent\yolov8ncnn\MainActivity.java

6. Build the APK

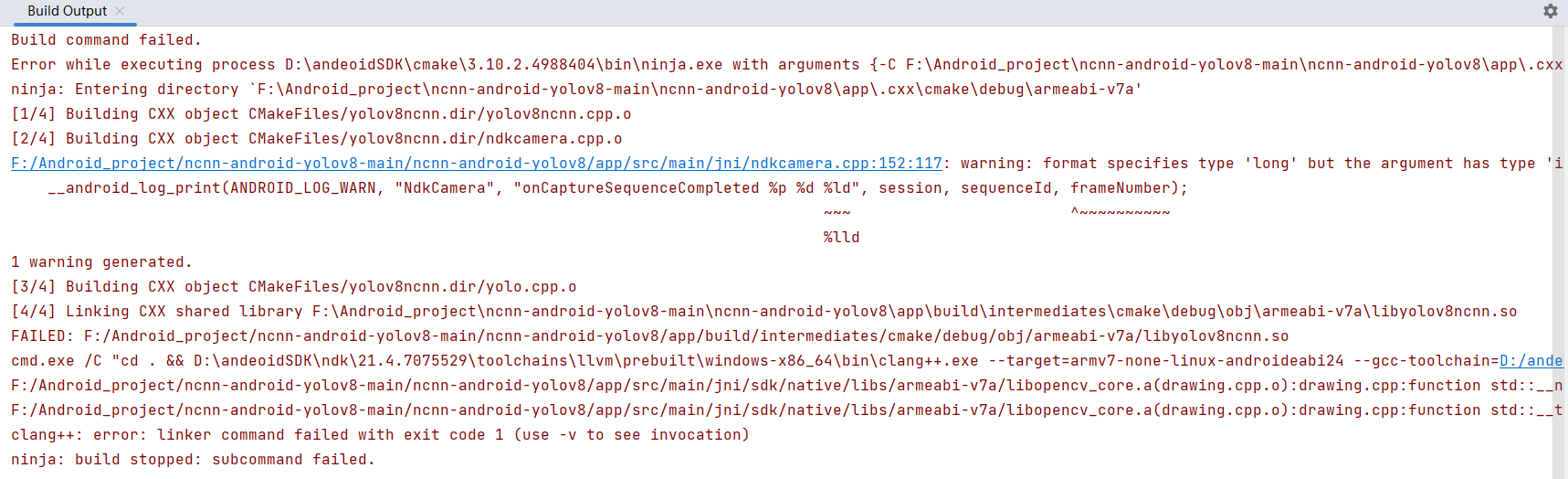

Handling build errors:

The following error appears during the build:

Build command failed.

Error while executing process D:\andeoidSDK\cmake\3.10.2.4988404\bin\ninja.exe with arguments {-C F:\Android_project\ncnn-android-yolov8-main\ncnn-android-yolov8\app\.cxx\cmake\debug\armeabi-v7a yolov8ncnn}

ninja: Entering directory `F:\Android_project\ncnn-android-yolov8-main\ncnn-android-yolov8\app\.cxx\cmake\debug\armeabi-v7a'

[1/4] Building CXX object CMakeFiles/yolov8ncnn.dir/yolov8ncnn.cpp.o

[2/4] Building CXX object CMakeFiles/yolov8ncnn.dir/ndkcamera.cpp.o

F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/ndkcamera.cpp:152:117: warning: format specifies type 'long' but the argument has type 'int64_t' (aka 'long long') [-Wformat]

__android_log_print(ANDROID_LOG_WARN, "NdkCamera", "onCaptureSequenceCompleted %p %d %ld", session, sequenceId, frameNumber);

~~~ ^~~~~~~~~~~

%lld

1 warning generated.

[3/4] Building CXX object CMakeFiles/yolov8ncnn.dir/yolo.cpp.o

[4/4] Linking CXX shared library F:\Android_project\ncnn-android-yolov8-main\ncnn-android-yolov8\app\build\intermediates\cmake\debug\obj\armeabi-v7a\libyolov8ncnn.so

FAILED: F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/build/intermediates/cmake/debug/obj/armeabi-v7a/libyolov8ncnn.so

cmd.exe /C "cd . && D:\andeoidSDK\ndk\21.4.7075529\toolchains\llvm\prebuilt\windows-x86_64\bin\clang++.exe --target=armv7-none-linux-androideabi24 --gcc-toolchain=D:/andeoidSDK/ndk/21.4.7075529/toolchains/llvm/prebuilt/windows-x86_64 --sysroot=D:/andeoidSDK/ndk/21.4.7075529/toolchains/llvm/prebuilt/windows-x86_64/sysroot -fPIC -g -DANDROID -fdata-sections -ffunction-sections -funwind-tables -fstack-protector-strong -no-canonical-prefixes -D_FORTIFY_SOURCE=2 -march=armv7-a -mthumb -Wformat -Werror=format-security -O0 -fno-limit-debug-info -Wl,--exclude-libs,libgcc.a -Wl,--exclude-libs,libgcc_real.a -Wl,--exclude-libs,libatomic.a -static-libstdc++ -Wl,--build-id -Wl,--fatal-warnings -Wl,--exclude-libs,libunwind.a -Wl,--no-undefined -Qunused-arguments -shared -Wl,-soname,libyolov8ncnn.so -o F:\Android_project\ncnn-android-yolov8-main\ncnn-android-yolov8\app\build\intermediates\cmake\debug\obj\armeabi-v7a\libyolov8ncnn.so CMakeFiles/yolov8ncnn.dir/yolov8ncnn.cpp.o CMakeFiles/yolov8ncnn.dir/yolo.cpp.o CMakeFiles/yolov8ncnn.dir/ndkcamera.cpp.o F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libncnn.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/sdk/native/libs/armeabi-v7a/libopencv_core.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/sdk/native/libs/armeabi-v7a/libopencv_imgproc.a -lcamera2ndk -lmediandk -Wl,-wrap,__kmp_affinity_determine_capable F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libglslang.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libSPIRV.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libMachineIndependent.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libOGLCompiler.a 
F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libOSDependent.a F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/armeabi-v7a/lib/libGenericCodeGen.a -landroid -ljnigraphics F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/sdk/native/libs/armeabi-v7a/libopencv_core.a -fopenmp -static-openmp -lm -ldl -llog -latomic -lm && cd ."

F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/sdk/native/libs/armeabi-v7a/libopencv_core.a(drawing.cpp.o):drawing.cpp:function std::__ndk1::__throw_length_error[abi:nn190000](char const*): error: undefined reference to 'std::__ndk1::__libcpp_verbose_abort(char const*, ...)'

F:/Android_project/ncnn-android-yolov8-main/ncnn-android-yolov8/app/src/main/jni/sdk/native/libs/armeabi-v7a/libopencv_core.a(drawing.cpp.o):drawing.cpp:function std::__throw_bad_array_new_length[abi:nn190000](): error: undefined reference to 'std::__ndk1::__libcpp_verbose_abort(char const*, ...)'

clang++: error: linker command failed with exit code 1 (use -v to see invocation)

ninja: build stopped: subcommand failed.

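The fix:

The undefined reference to std::__ndk1::__libcpp_verbose_abort suggests that the prebuilt OpenCV static libraries were compiled against a newer libc++ than the one shipped with NDK 21.4.7075529. Download a version compatible with NDK 21 from the official OpenCV Android SDK.

Then rebuild the APK.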

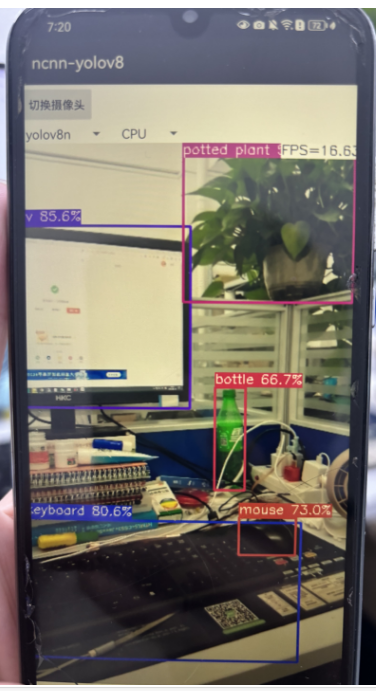

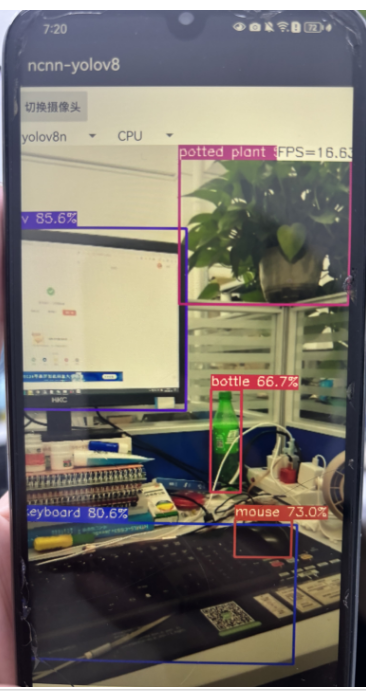
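The linker error above carries a hint about which toolchain built the prebuilt libraries. The sketch below pulls the version out of the `[abi:nn190000]` tags in the log. It rests on an assumption about libc++ naming: that the tag is two flag letters followed by the libc++ version as six digits (so `190000` would mean major version 19). Treat the result as a clue, not a definitive diagnosis.

```python
import re


def libcxx_major_versions(linker_output):
    """Collect the libc++ major versions encoded in [abi:xxNNNNNN] tags.

    Assumes the tag layout is two letters followed by a six-digit version.
    """
    tags = re.findall(r"\[abi:[a-z]{2}(\d{6})\]", linker_output)
    return sorted({int(tag[:2]) for tag in tags})
```

If the reported major version is far newer than the libc++ bundled with your NDK, a mismatch between the prebuilt libraries and the NDK is a likely cause of the undefined reference.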

7. Test the result

8. Deploy your own trained model

I used YOLOv8 version v8.2.99:

https://github.com/ultralytics/ultralytics/tree/v8.2.99

The PyTorch version I installed is torch==2.3.0+cu118.

pip install ultralytics==8.2.99 -i https://pypi.tuna.tsinghua.edu.cn/simple

1. Modify the source files

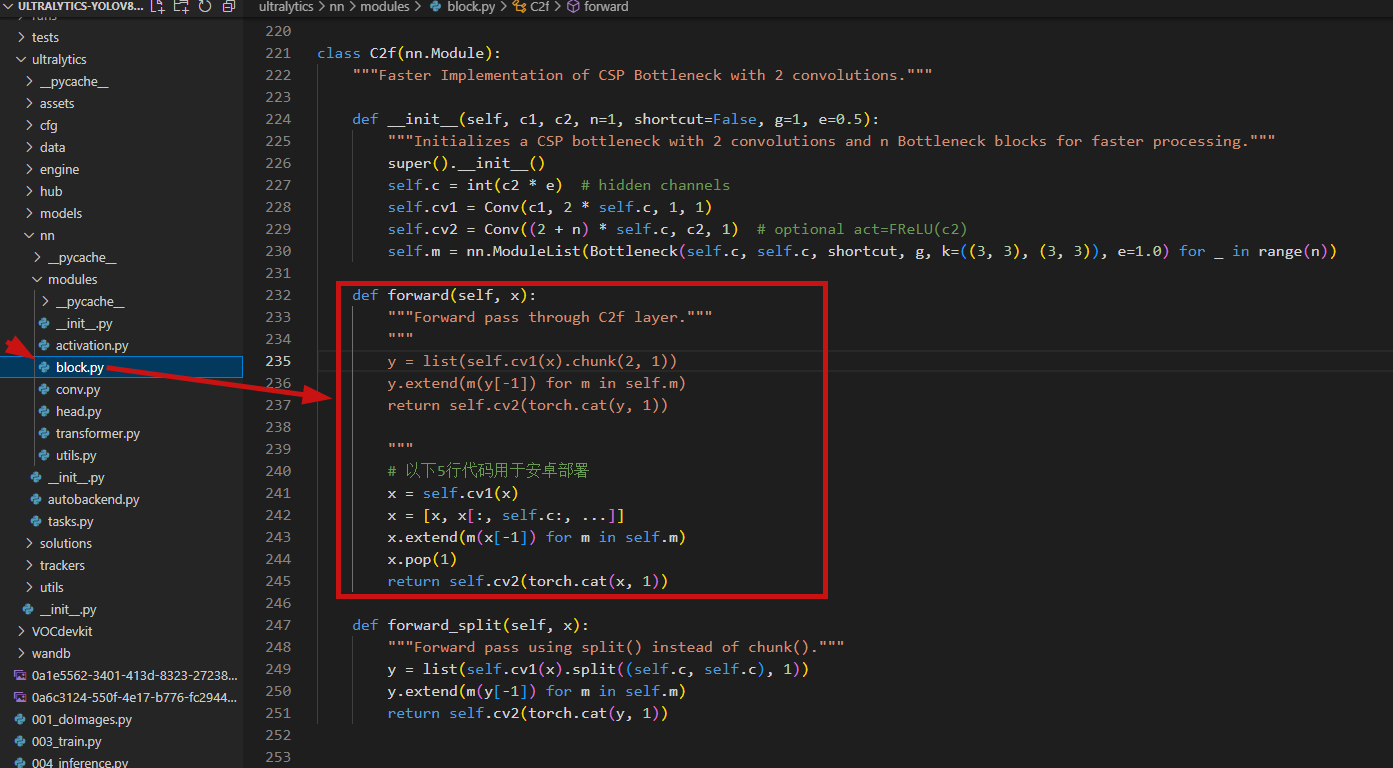

ultralytics/nn/modules/block.py

以下代码用于导出.onnx模型文件阶段,模型训练时不用调整,所以建议使用两套代码,一套训练,一套导出

↓↓↓↓↓

python

def forward(self, x):

"""Forward pass through C2f layer."""

"""

y = list(self.cv1(x).chunk(2, 1))

y.extend(m(y[-1]) for m in self.m)

return self.cv2(torch.cat(y, 1))

"""

# 以下5行代码用于安卓部署

x = self.cv1(x)

x = [x, x[:, self.c:, ...]]

x.extend(m(x[-1]) for m in self.m)

x.pop(1)

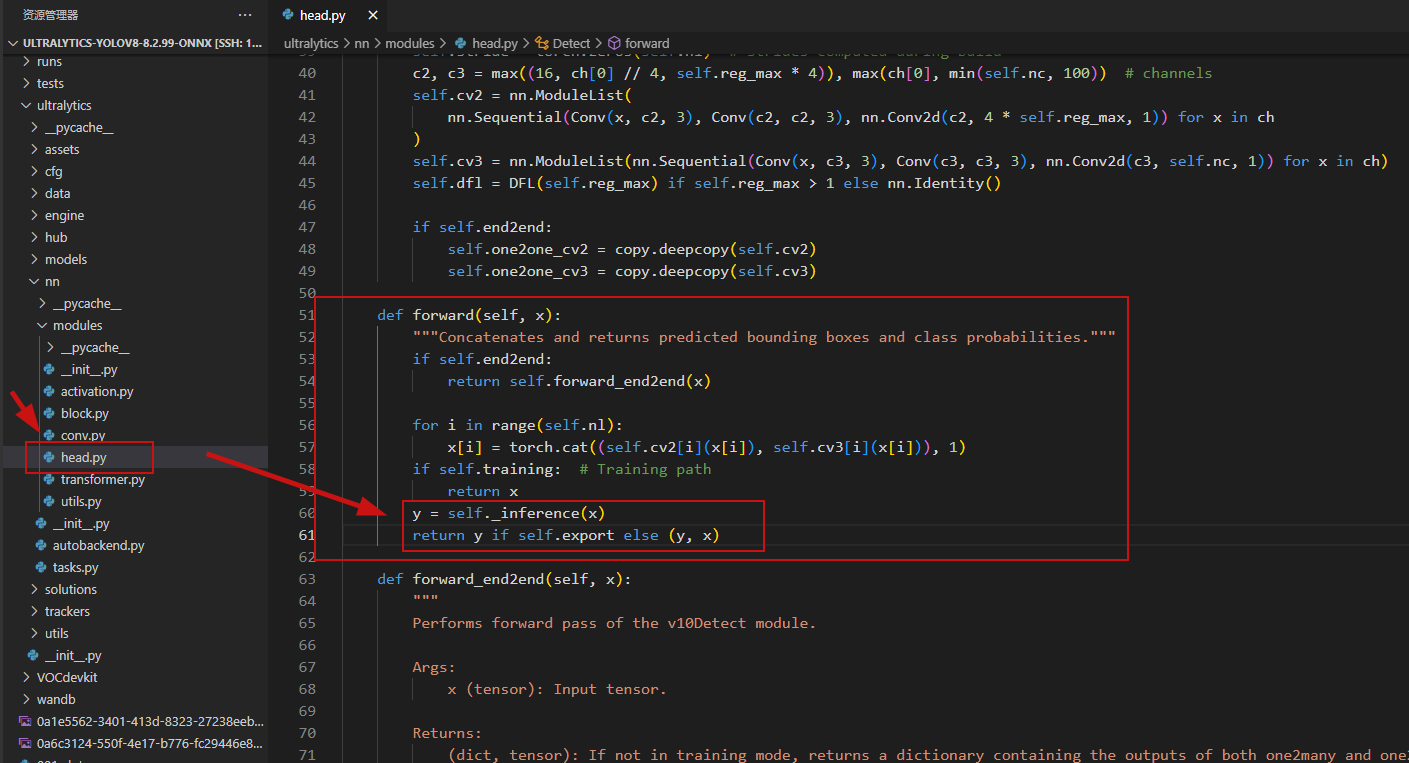

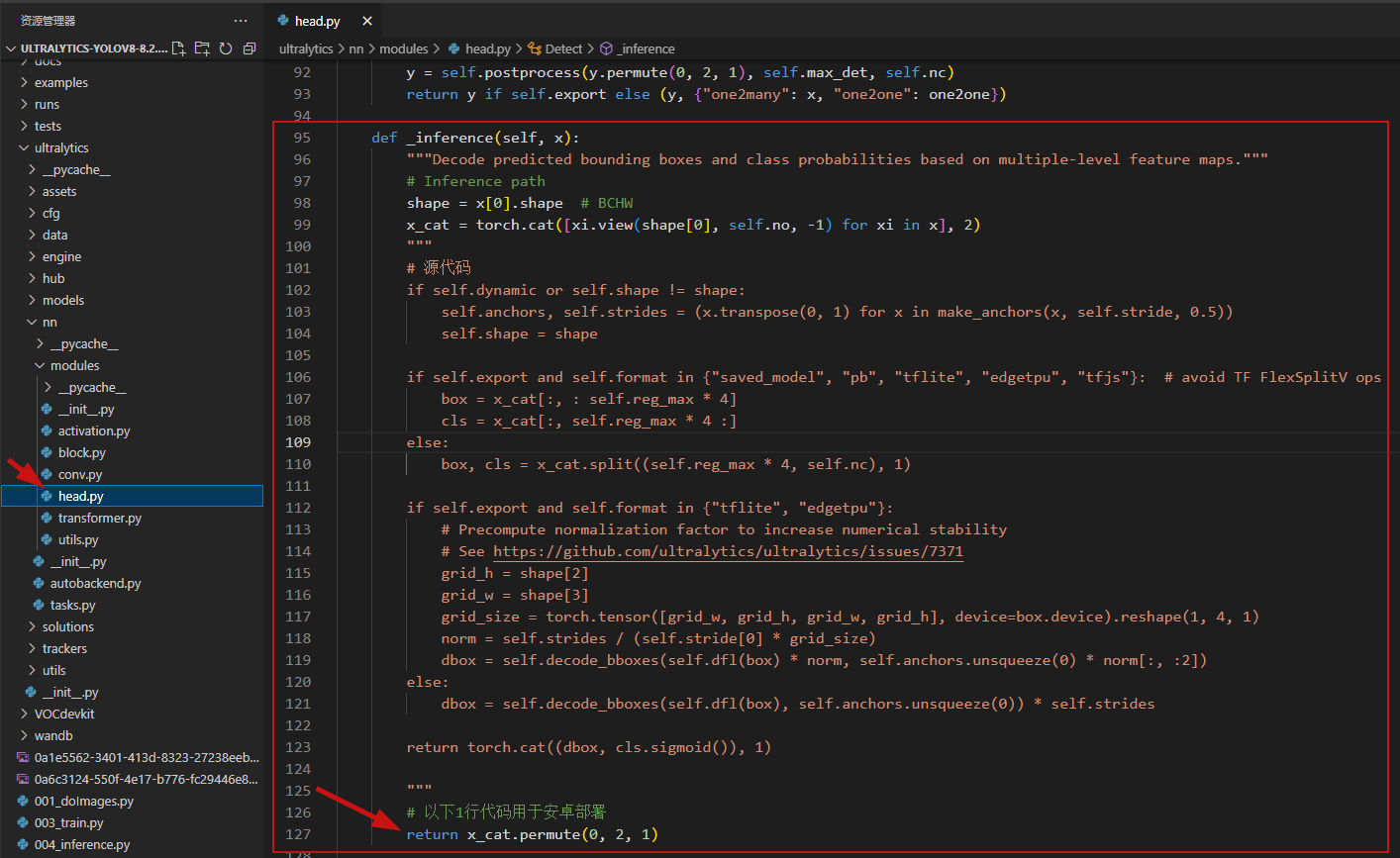

return self.cv2(torch.cat(x, 1))ultralytics/nn/modules/head.py

The following change applies only when exporting the .onnx model; training does not need it. It is therefore best to keep two copies of the code, one for training and one for export.

↓↓↓↓↓

    def forward(self, x):
        """Concatenates and returns predicted bounding boxes and class probabilities."""
        if self.end2end:
            return self.forward_end2end(x)

        for i in range(self.nl):
            x[i] = torch.cat((self.cv2[i](x[i]), self.cv3[i](x[i])), 1)
        if self.training:  # Training path
            return x
        # Original implementation, disabled for export
        """
        y = self._inference(x)
        return y if self.export else (y, x)
        """
        # The following 2 lines are for Android deployment
        shape = x[0].shape
        return torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2).permute(0, 2, 1)

ultralytics/nn/modules/head.py

The following change applies only when exporting the .onnx model; training does not need it. It is therefore best to keep two copies of the code, one for training and one for export.

↓↓↓↓↓

    def _inference(self, x):
        """Decode predicted bounding boxes and class probabilities based on multiple-level feature maps."""
        # Inference path
        shape = x[0].shape  # BCHW
        x_cat = torch.cat([xi.view(shape[0], self.no, -1) for xi in x], 2)
        """
        # Original code
        if self.dynamic or self.shape != shape:
            self.anchors, self.strides = (x.transpose(0, 1) for x in make_anchors(x, self.stride, 0.5))
            self.shape = shape

        if self.export and self.format in {"saved_model", "pb", "tflite", "edgetpu", "tfjs"}:  # avoid TF FlexSplitV ops
            box = x_cat[:, : self.reg_max * 4]
            cls = x_cat[:, self.reg_max * 4 :]
        else:
            box, cls = x_cat.split((self.reg_max * 4, self.nc), 1)

        if self.export and self.format in {"tflite", "edgetpu"}:
            # Precompute normalization factor to increase numerical stability
            # See https://github.com/ultralytics/ultralytics/issues/7371
            grid_h = shape[2]
            grid_w = shape[3]
            grid_size = torch.tensor([grid_w, grid_h, grid_w, grid_h], device=box.device).reshape(1, 4, 1)
            norm = self.strides / (self.stride[0] * grid_size)
            dbox = self.decode_bboxes(self.dfl(box) * norm, self.anchors.unsqueeze(0) * norm[:, :2])
        else:
            dbox = self.decode_bboxes(self.dfl(box), self.anchors.unsqueeze(0)) * self.strides

        return torch.cat((dbox, cls.sigmoid()), 1)
        """
        # The following line is for Android deployment
        return x_cat.permute(0, 2, 1)

2. Export the ONNX file

安装相关依赖库:

python

pip install onnx==1.12.0 onnxslim==0.1.34 --default-timeout=100 -i https://pypi.tuna.tsinghua.edu.cn/simple在根目录新建一个export.py脚本,打开键入以下代码并运行:

python

# 在根目录新建一个export.py脚本,打开键入以下代码并运行:

from ultralytics import YOLO

model = YOLO("runs/detect/train/weights/best.pt")

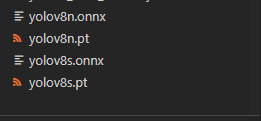

model.export(format="onnx", opset=12, simplify=True)运行完毕上述脚本后,得到相关的.onnx格式文件

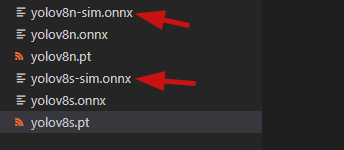
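With the head modified as above, the exported model returns one row per anchor, and each row is `self.no = 4 * reg_max + nc` values wide. The sketch below estimates the output shape you should see when inspecting the .onnx file. It assumes a square input, the usual YOLOv8 detection strides of 8, 16 and 32, and `reg_max = 16`; the function name is made up for illustration.

```python
def export_output_shape(imgsz, num_classes, strides=(8, 16, 32), reg_max=16, batch=1):
    """Estimate the (batch, anchors, channels) shape of the modified head's output."""
    if any(imgsz % s for s in strides):
        raise ValueError("imgsz must be divisible by every stride")
    # One anchor per cell of each feature map (imgsz/stride cells per side).
    anchors = sum((imgsz // s) ** 2 for s in strides)
    # Each row holds 4 * reg_max box bins followed by the class scores.
    return (batch, anchors, 4 * reg_max + num_classes)
```

For a 640x640 input and 80 classes this gives `(1, 8400, 144)`; a model trained on a different number of classes changes only the last dimension.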

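3. Simplify the ONNX model

An .onnx model exported from a .pt model contains many redundant dimensions that ncnn does not support, so they have to be removed. Do not skip this step; otherwise the later conversion is likely to fail.

Install onnx-simplifier:

pip install onnx-simplifier --default-timeout=100 -i https://pypi.tuna.tsinghua.edu.cn/simple

Simplification commands:

# Simplify yolov8n

python -m onnxsim yolov8n.onnx yolov8n-sim.onnx

# Simplify yolov8s

python -m onnxsim yolov8s.onnx yolov8s-sim.onnx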

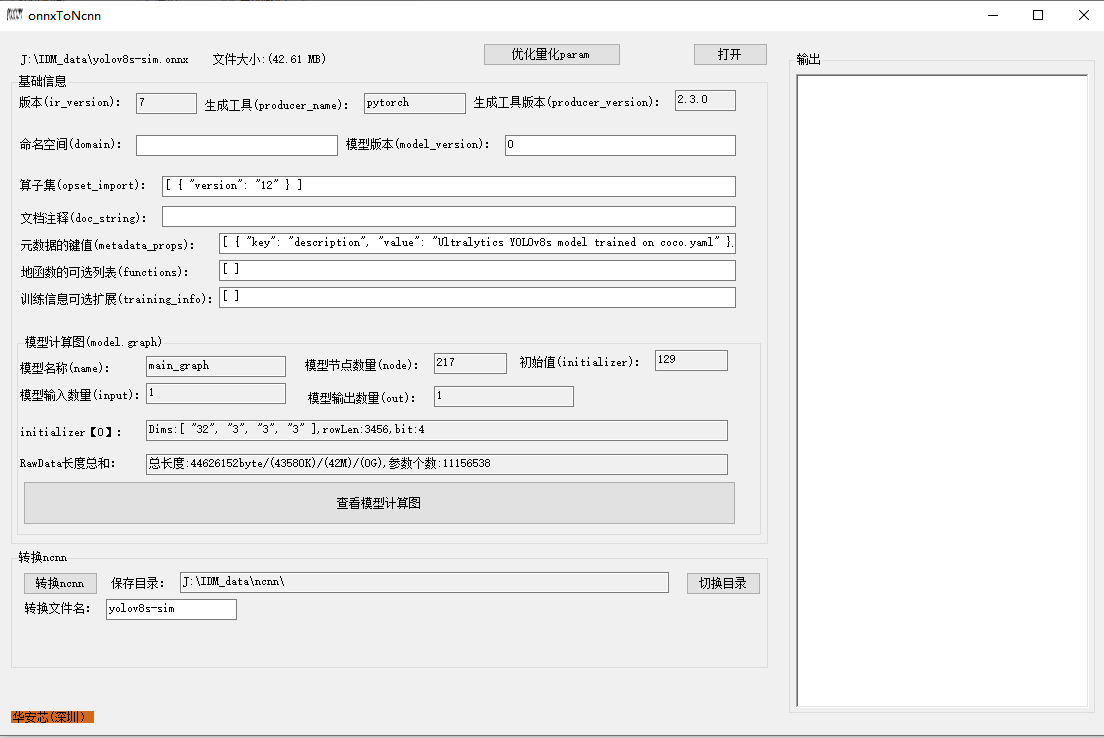
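If you export several models, the same `python -m onnxsim` call can be generated per file. This is a small sketch with a hypothetical helper name; it only builds the argument list and follows the `-sim.onnx` naming used in the commands above.

```python
import sys


def onnxsim_command(onnx_path, python=sys.executable):
    """Build the argv for `python -m onnxsim model.onnx model-sim.onnx`."""
    path = str(onnx_path)
    if not path.endswith(".onnx"):
        raise ValueError("expected a path ending in .onnx")
    output = path[: -len(".onnx")] + "-sim.onnx"
    return [python, "-m", "onnxsim", path, output]
```

Each returned list can be passed to `subprocess.run(..., check=True)` so that a failed simplification stops the script instead of going unnoticed.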

4. Convert the resulting .onnx file to ncnn format:

Download link for the conversion tool:

https://download.csdn.net/download/guoqingru0311/91903993

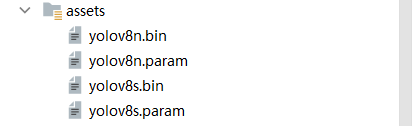

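After the conversion you get files in ncnn format (an ncnn model consists of a .param file and a .bin file).

5. Modify the relevant configuration files

Rename the converted files and place them in the ncnn-android-yolov8\app\src\main\assets directory.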
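The rename-and-copy step can be scripted so the two files always end up with matching names. The sketch below is illustrative only: `install_model` is a made-up helper, and the `name` you pass must match whatever model name the native code loads, which you set in the steps that follow.

```python
import shutil
from pathlib import Path


def install_model(param_file, bin_file, assets_dir, name):
    """Copy an ncnn .param/.bin pair into assets_dir as <name>.param and <name>.bin."""
    assets = Path(assets_dir)
    assets.mkdir(parents=True, exist_ok=True)
    targets = []
    for source, suffix in ((param_file, ".param"), (bin_file, ".bin")):
        target = assets / (name + suffix)
        shutil.copyfile(source, target)  # overwrites an older copy with the same name
        targets.append(target)
    return targets
```

Copying rather than moving keeps the converter output intact in case the conversion needs to be compared or repeated.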

Modify the following files:

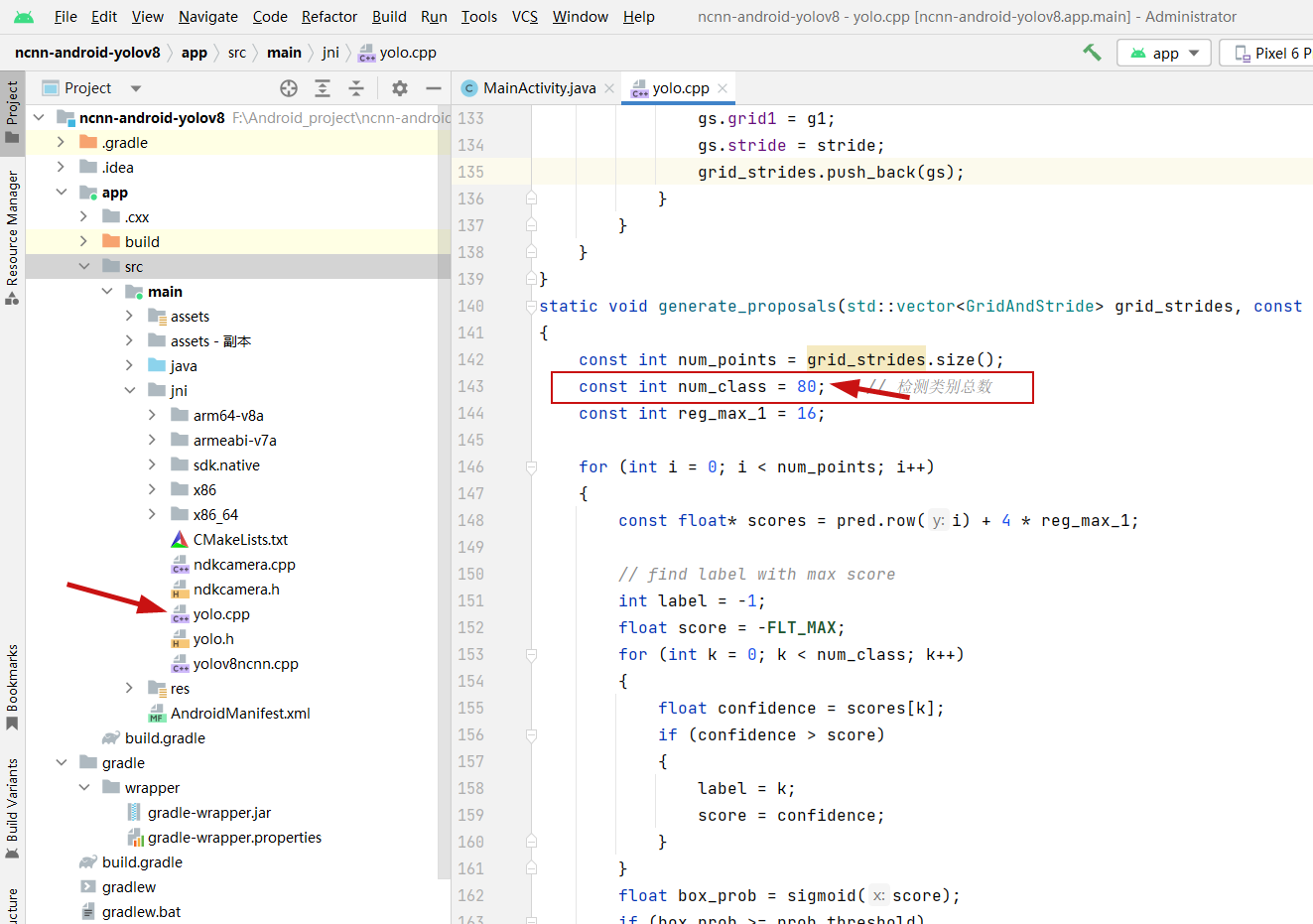

Adjust the number of detection classes

ncnn-android-yolov8\app\src\main\jni\yolo.cpp

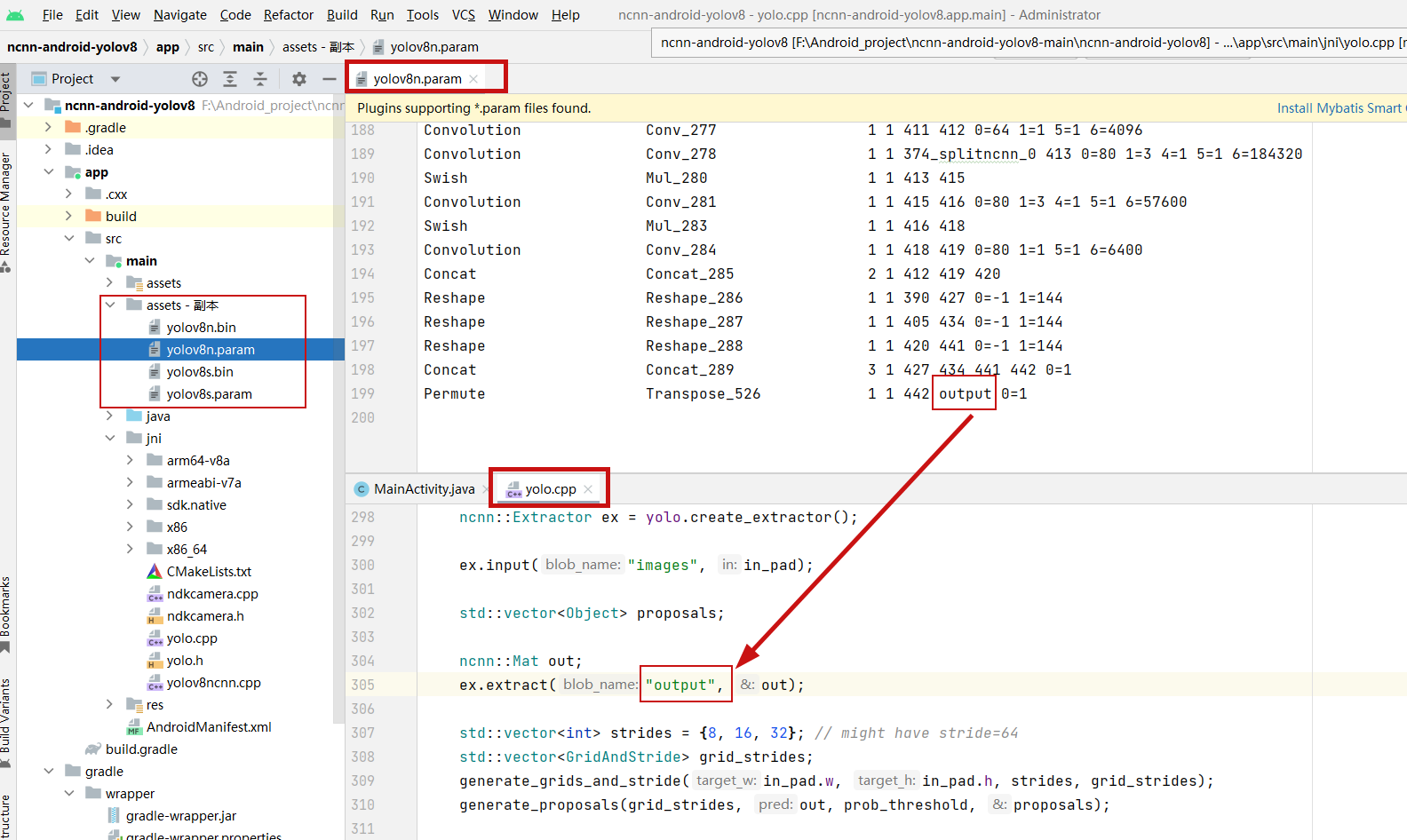

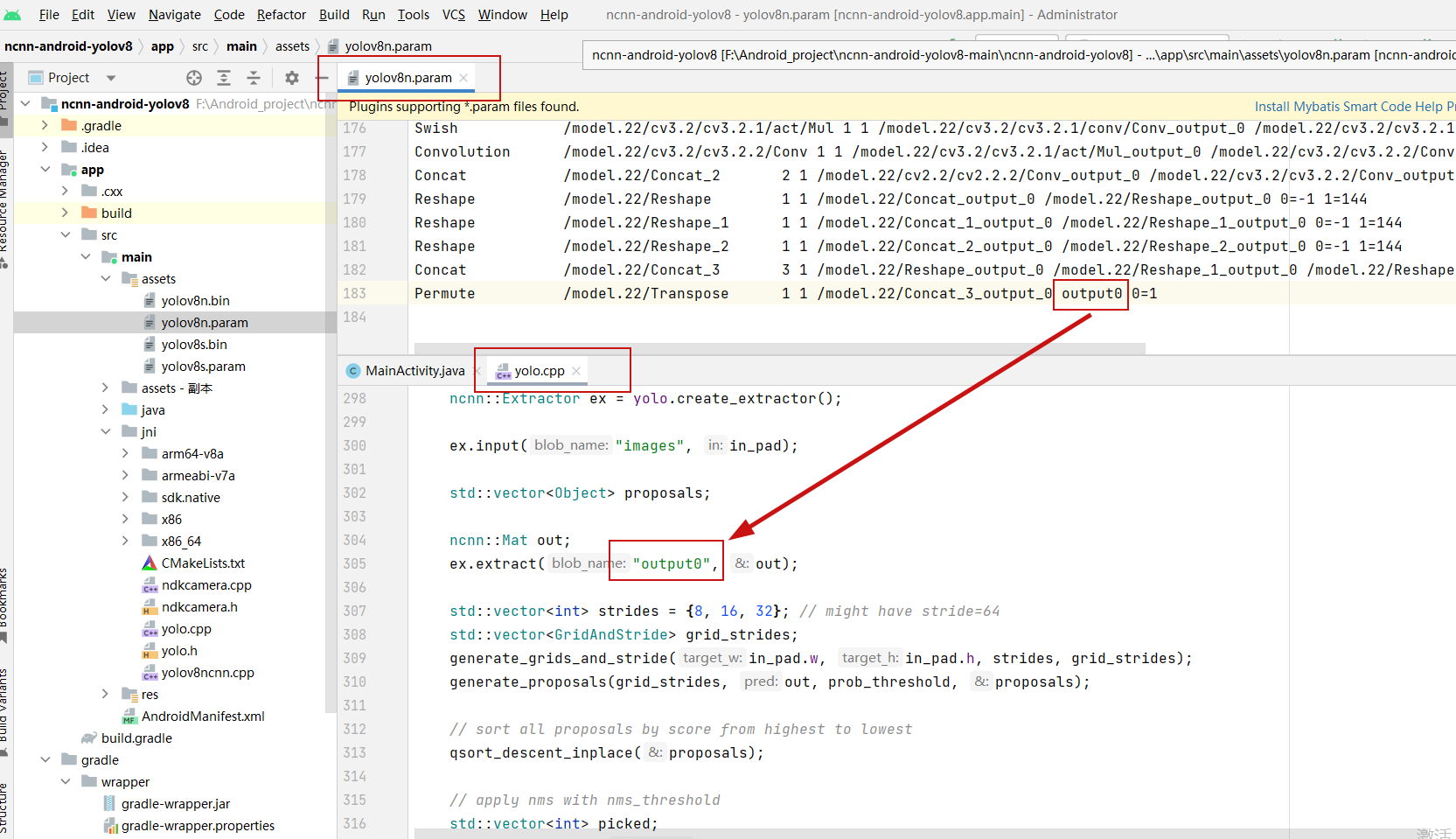
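The class count matters because the modified head returns raw rows: `4 * reg_max` box-distribution logits followed by one logit per class, so the decoding skipped in `_inference` has to happen on the app side. The sketch below mirrors that decoding for a single row in plain Python, based on the original `_inference` code quoted earlier (DFL expectation, anchor offset 0.5, multiplication by stride, sigmoid on the class logit). It returns corner coordinates, and the function name and argument layout are assumptions for illustration, not the project's C++ implementation.

```python
import math


def decode_row(row, anchor_xy, stride, num_classes, reg_max=16):
    """Decode one raw output row into (x1, y1, x2, y2, class_id, score)."""
    if len(row) != 4 * reg_max + num_classes:
        raise ValueError("row length does not match 4 * reg_max + num_classes")
    dists = []
    for side in range(4):  # left, top, right, bottom
        logits = row[side * reg_max:(side + 1) * reg_max]
        peak = max(logits)
        weights = [math.exp(v - peak) for v in logits]  # numerically stable softmax
        # DFL: expected bin index under the softmax distribution.
        dists.append(sum(i * w for i, w in enumerate(weights)) / sum(weights))
    cx, cy = anchor_xy  # anchor centre in grid units, e.g. (col + 0.5, row + 0.5)
    box = ((cx - dists[0]) * stride, (cy - dists[1]) * stride,
           (cx + dists[2]) * stride, (cy + dists[3]) * stride)
    cls_logits = row[4 * reg_max:]
    class_id = max(range(num_classes), key=cls_logits.__getitem__)
    z = cls_logits[class_id]
    # Sigmoid written in two branches to avoid overflow for large |z|.
    score = 1.0 / (1.0 + math.exp(-z)) if z >= 0 else math.exp(z) / (1.0 + math.exp(z))
    return (*box, class_id, score)
```

If the class count in yolo.cpp does not match the exported model, the row is split at the wrong offset and both boxes and scores come out wrong, which is why this value must be updated together with the model.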

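Change the output node name:

The model bundled with the original project uses the following name:

After the change:

↓↓↓↓↓

Adjust the class labels as follows: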

ncnn-android-yolov8\app\src\main\jni\yolo.cpp

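Change the model name

Before:

After:

Change the labels of the classes to detect:

6. Modify the yolov8ncnn.cpp file

The yolov8ncnn.cpp file is in the same directory.

Before:

After:

7. Modify the strings.xml file

It is in the ncnn-android-yolov8\app\src\main\res\values directory:

Before:

After:

8. Test the result

9. Source code download link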

https://download.csdn.net/download/guoqingru0311/91922680