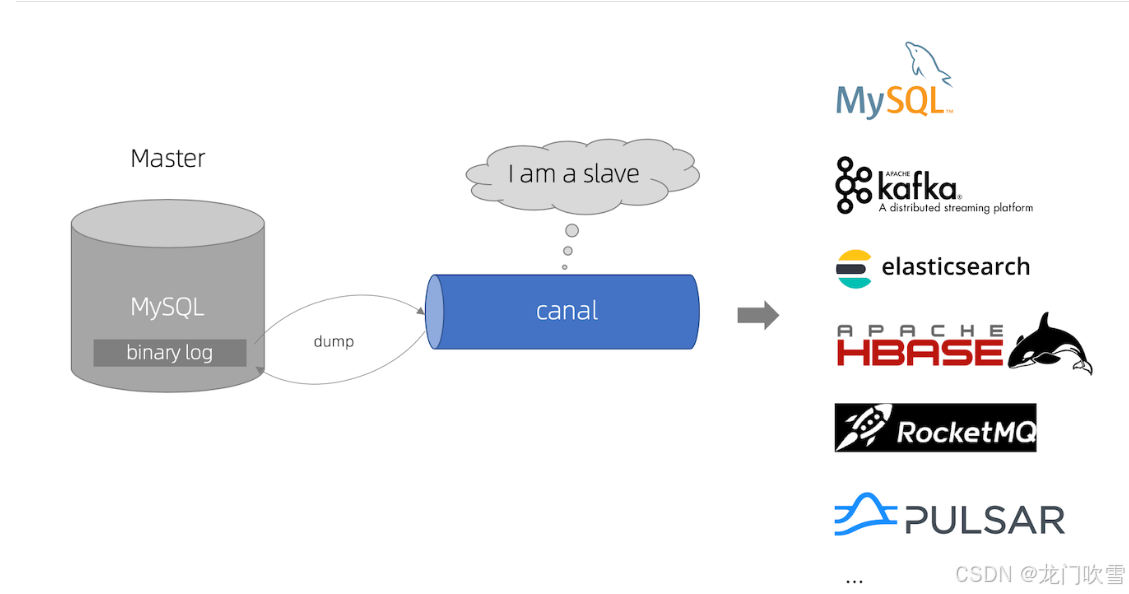

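1. Introduction to canal

canal [kə'næl] means waterway, pipeline, or ditch. Its main purpose is to parse MySQL incremental logs (binlog) and provide incremental data subscription and consumption.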

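- Use cases supported by log-based incremental subscription and consumption:
  - Database mirroring
  - Real-time database backup
  - Multi-level indexes (separate sharded indexes for sellers and buyers)
  - Search build
  - Business cache refresh
  - Important business messages such as price changes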

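- How it works:
  - canal emulates the MySQL slave interaction protocol, presents itself as a MySQL slave, and sends a dump request to the MySQL master.
  - The MySQL master receives the dump request and starts pushing the binary log to the slave (that is, canal).
  - canal parses the binary log objects (originally a byte stream).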

- Official site: Home · alibaba/canal Wiki · GitHub

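2. Installing MySQL with Docker

2.1 Create a Docker network

bash

docker network create canal_net

The MySQL, canal-admin, and canal-server containers created in this guide all communicate over the canal_net network, so they can reach one another by container name instead of by IP address.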

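2.2 Install MySQL 8.0 with Docker

bash

docker run --name mysql -e MYSQL_ROOT_PASSWORD=123456 -v /root/mysqlData:/var/lib/mysql --privileged=true -d -p 3306:3306 --network canal_net mysql:8.0

# --name mysql: gives the container a name (later steps reach MySQL by this name).
# -e MYSQL_ROOT_PASSWORD=123456: sets the MySQL root password. Replace 123456 with your own password.
# -d: runs the container in the background.
# mysql:8.0: the MySQL image and tag to run. Without a tag, Docker pulls mysql:latest.
# -v /root/mysqlData:/var/lib/mysql: mounts the host directory /root/mysqlData at /var/lib/mysql in the container, so the MySQL data survives removal of the container.
# -p 3306:3306: maps port 3306 of the container to port 3306 of the host.
# --privileged=true: gives root inside the container real root privileges, which avoids permission errors on the mounted directory.

Only the basic Docker command for installing MySQL is listed here.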

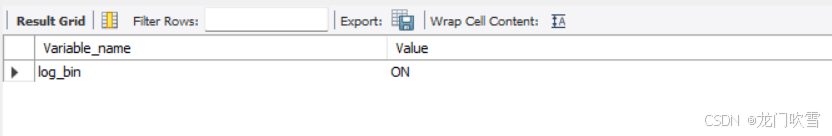

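2.3 Check whether binlog is enabled in MySQL

sql

-- check whether binlog is enabled
show variables like "%log_bin%";

-- check the binlog format; canal needs ROW
show variables like "binlog_format";

-- server_id must be defined for MySQL replication and must not clash with canal's slaveId
show variables like "server_id";

MySQL 8.0 enables binlog by default, so no configuration change is needed.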
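The three checks above can also be written down as a small function. The following is a minimal sketch, not part of canal: it assumes the variable values have already been read from MySQL (for example by running the SHOW VARIABLES statements above) and passed in as a map. The name checkBinlogSettings is hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// checkBinlogSettings inspects values as returned by SHOW VARIABLES and
// returns a list of problems that would prevent canal from reading the binlog.
// An empty result means all three prerequisites look fine.
func checkBinlogSettings(vars map[string]string) []string {
	var problems []string
	if !strings.EqualFold(vars["log_bin"], "ON") {
		problems = append(problems, "log_bin is not ON: binlog is disabled")
	}
	if !strings.EqualFold(vars["binlog_format"], "ROW") {
		problems = append(problems, "binlog_format is not ROW")
	}
	if id := vars["server_id"]; id == "" || id == "0" {
		problems = append(problems, "server_id is unset or 0")
	}
	return problems
}

func main() {
	// Example values; in practice they come from the MySQL server.
	vars := map[string]string{
		"log_bin":       "ON",
		"binlog_format": "MIXED",
		"server_id":     "1",
	}
	for _, p := range checkBinlogSettings(vars) {
		fmt.Println("problem:", p)
	}
}
```

With the example values, only the binlog_format check reports a problem, because the format is MIXED rather than ROW.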

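To enable binlog manually:

Open the my.cnf configuration file in the MySQL mount directory (/mydata/mysql/conf) and add the following settings:

ini

[mysqld]
log-bin=mysql-bin # enable binlog
binlog-format=ROW # use ROW format
server_id=1 # required for MySQL replication; must not clash with canal's slaveId

2.4 Create a dedicated canal account in MySQL and grant privileges

sql

CREATE USER 'canal'@'%' IDENTIFIED BY 'canal';

-- MySQL 8.0 does not accept IDENTIFIED BY inside GRANT; the password is set by CREATE USER above
GRANT SELECT, REPLICATION SLAVE, REPLICATION CLIENT, SUPER ON *.* TO 'canal'@'%';

FLUSH PRIVILEGES;

3. Installing canal-admin with Docker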

3.1 拉取 canal-admin 镜像

bash

docker pull canal/canal-admin3.2 创建 canal_manager 数据库

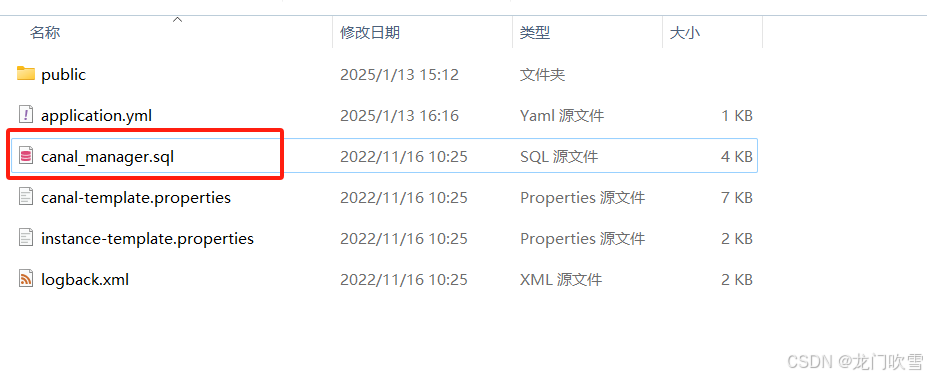

在官网 https://github.com/alibaba/canal/releases的canal.admin-1.1.8-SNAPSHOT.tar.gz文件的解压包中找到canal_manager.sql,并在mysql中执行脚本。

mysql 脚本(1.1.8 版本)如下:

sql

CREATE DATABASE /*!32312 IF NOT EXISTS*/ `canal_manager` /*!40100 DEFAULT CHARACTER SET utf8 COLLATE utf8_bin */;

USE `canal_manager`;

SET NAMES utf8;

SET FOREIGN_KEY_CHECKS = 0;

-- ----------------------------

-- Table structure for canal_adapter_config

-- ----------------------------

DROP TABLE IF EXISTS `canal_adapter_config`;

CREATE TABLE `canal_adapter_config` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`category` varchar(255) NOT NULL,

`name` varchar(255) NOT NULL,

`status` varchar(45) DEFAULT NULL,

`content` text NOT NULL,

`modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------

-- Table structure for canal_cluster

-- ----------------------------

DROP TABLE IF EXISTS `canal_cluster`;

CREATE TABLE `canal_cluster` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`name` varchar(255) NOT NULL,

`zk_hosts` varchar(255) NOT NULL,

`modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------

-- Table structure for canal_config

-- ----------------------------

DROP TABLE IF EXISTS `canal_config`;

CREATE TABLE `canal_config` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`cluster_id` bigint(20) DEFAULT NULL,

`server_id` bigint(20) DEFAULT NULL,

`name` varchar(255) NOT NULL,

`status` varchar(45) DEFAULT NULL,

`content` text NOT NULL,

`content_md5` varchar(128) NOT NULL,

`modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`),

UNIQUE KEY `sid_UNIQUE` (`server_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------

-- Table structure for canal_instance_config

-- ----------------------------

DROP TABLE IF EXISTS `canal_instance_config`;

CREATE TABLE `canal_instance_config` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`cluster_id` bigint(20) DEFAULT NULL,

`server_id` bigint(20) DEFAULT NULL,

`name` varchar(255) NOT NULL,

`status` varchar(45) DEFAULT NULL,

`content` text NOT NULL,

`content_md5` varchar(128) DEFAULT NULL,

`modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`),

UNIQUE KEY `name_UNIQUE` (`name`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------

-- Table structure for canal_node_server

-- ----------------------------

DROP TABLE IF EXISTS `canal_node_server`;

CREATE TABLE `canal_node_server` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`cluster_id` bigint(20) DEFAULT NULL,

`name` varchar(255) NOT NULL,

`ip` varchar(255) NOT NULL,

`admin_port` int(11) DEFAULT NULL,

`tcp_port` int(11) DEFAULT NULL,

`metric_port` int(11) DEFAULT NULL,

`status` varchar(45) DEFAULT NULL,

`modified_time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

-- ----------------------------

-- Table structure for canal_user

-- ----------------------------

DROP TABLE IF EXISTS `canal_user`;

CREATE TABLE `canal_user` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`username` varchar(255) NOT NULL,

`password` varchar(255) NOT NULL,

`name` varchar(255) NOT NULL,

`roles` varchar(255) NOT NULL,

`introduction` varchar(255) DEFAULT NULL,

`avatar` varchar(255) DEFAULT NULL,

`creation_date` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

SET FOREIGN_KEY_CHECKS = 1;

-- ----------------------------

-- Records of canal_user

-- ----------------------------

BEGIN;

INSERT INTO `canal_user` VALUES (1, 'admin', '6BB4837EB74329105EE4568DDA7DC67ED2CA2AD9', 'Canal Manager', 'admin', NULL, NULL, '2019-07-14 00:05:28');

COMMIT;

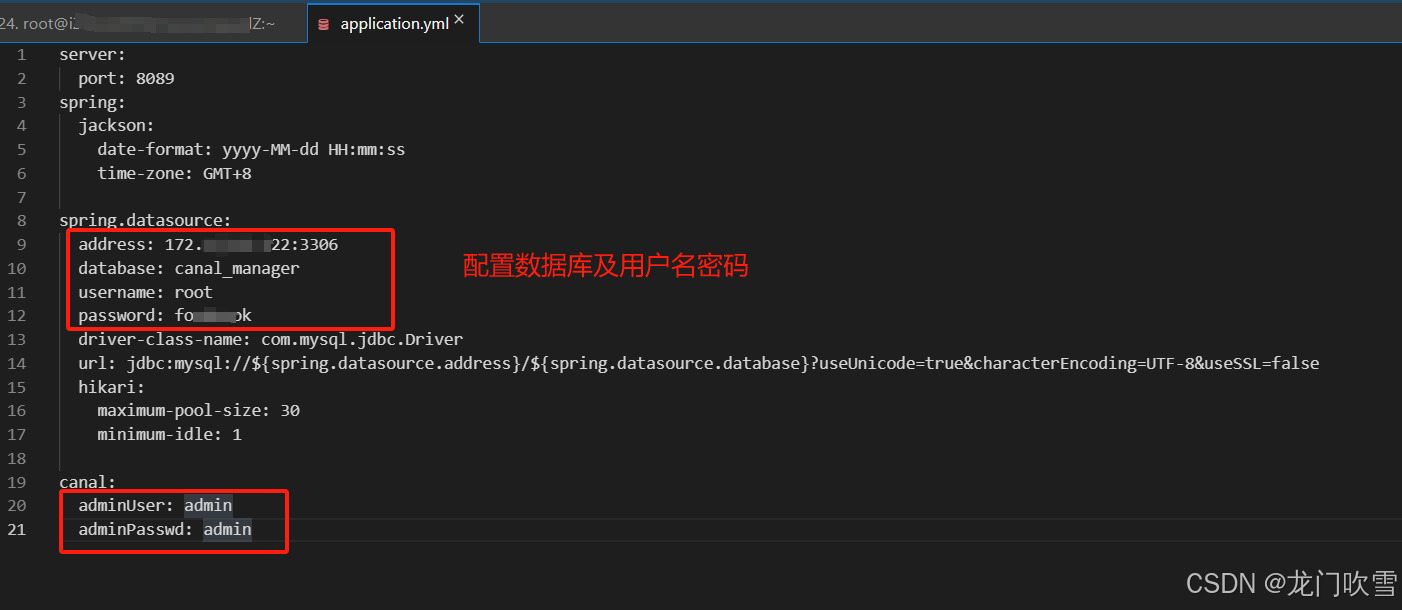

SET FOREIGN_KEY_CHECKS = 1;3.3 配置application.yml

On the host, create the directory /mydata/canal/admin/conf and create an application.yml file in it.

yaml

# Contents of application.yml
server:
  port: 8089
spring:
  jackson:
    date-format: yyyy-MM-dd HH:mm:ss
    time-zone: GMT+8

spring.datasource:
  address: mysql:3306
  database: canal_manager
  username: root
  # MySQL root password set when the MySQL container was started
  password: 123456
  driver-class-name: com.mysql.jdbc.Driver
  url: jdbc:mysql://${spring.datasource.address}/${spring.datasource.database}?useUnicode=true&characterEncoding=UTF-8&useSSL=false
  hikari:
    maximum-pool-size: 30
    minimum-idle: 1

canal:
  adminUser: admin
  adminPasswd: admin

3.4 Run canal-admin with Docker

bash

// 以参数形式运行,无需在application.yml文件中填写参数

docker run -d --name canal-admin -e spring.datasource.address=mysql:3306 -e spring.datasource.database=canal_manager -e spring.datasource.username=root -e spring.datasource.password=foodbook --network canal_net -p 8089:8089 canal/canal-admin

// 以挂载文件方式运行,数据库的配置在application.yml文件中填好

docker run -d --name canal-admin \

-v /mydata/canal/admin/conf/application.yml:/home/admin/canal-admin/conf/application.yml \

-v /mydata/canal/admin/logs/:/home/admin/canal-admin/logs/ \

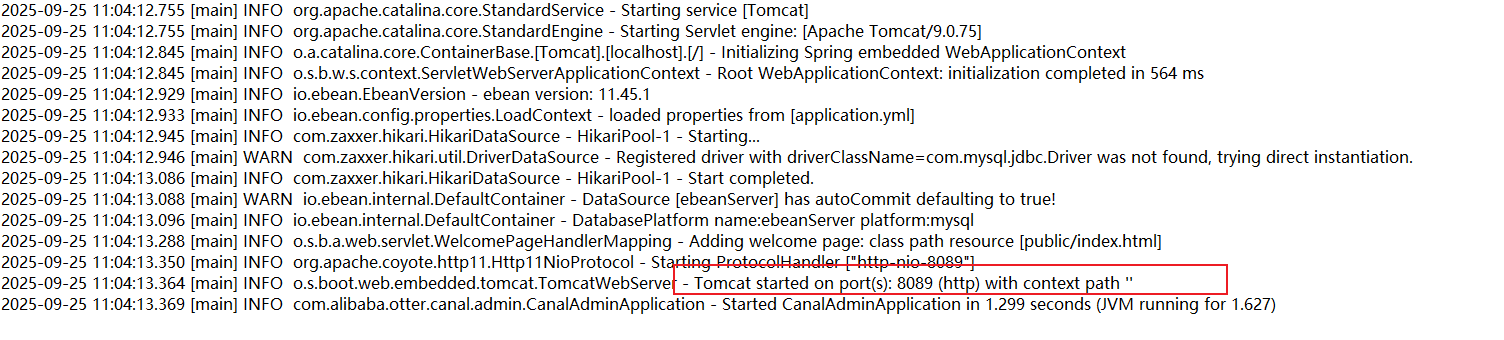

--network canal_net -p 8089:8089 canal/canal-admin启动成功后,可在挂载的日志目录下查看 canal-admin 的启动日志:

此时代表canal-admin已经启动成功。

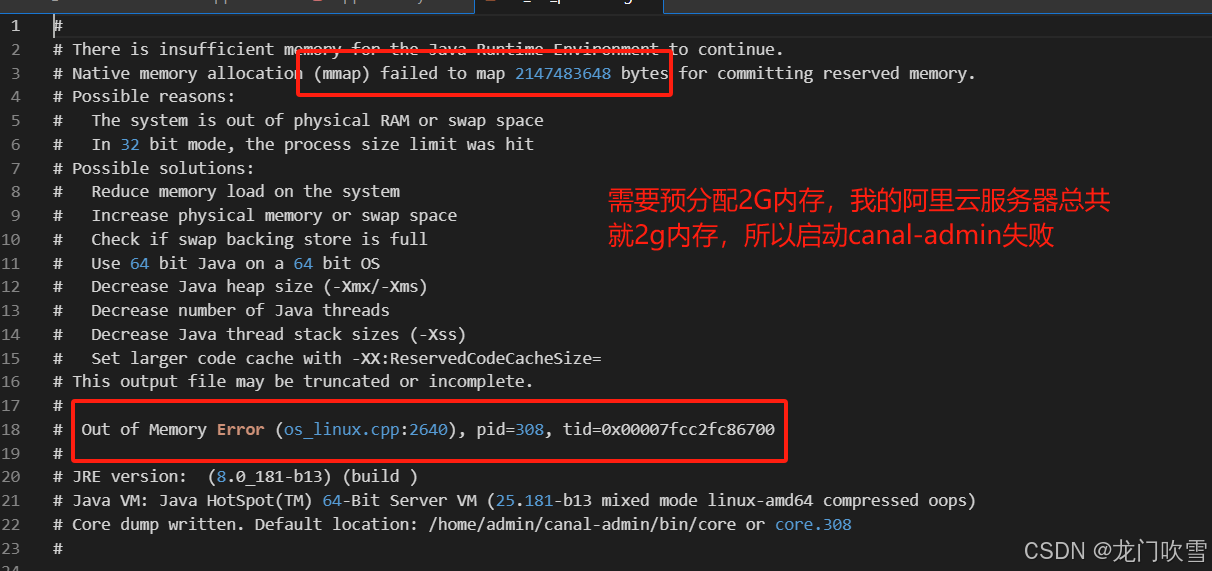

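Note: when I installed canal-admin with Docker on an Alibaba Cloud server, it failed to start. The log showed that the service needs 2 GB of memory, which my server did not have.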

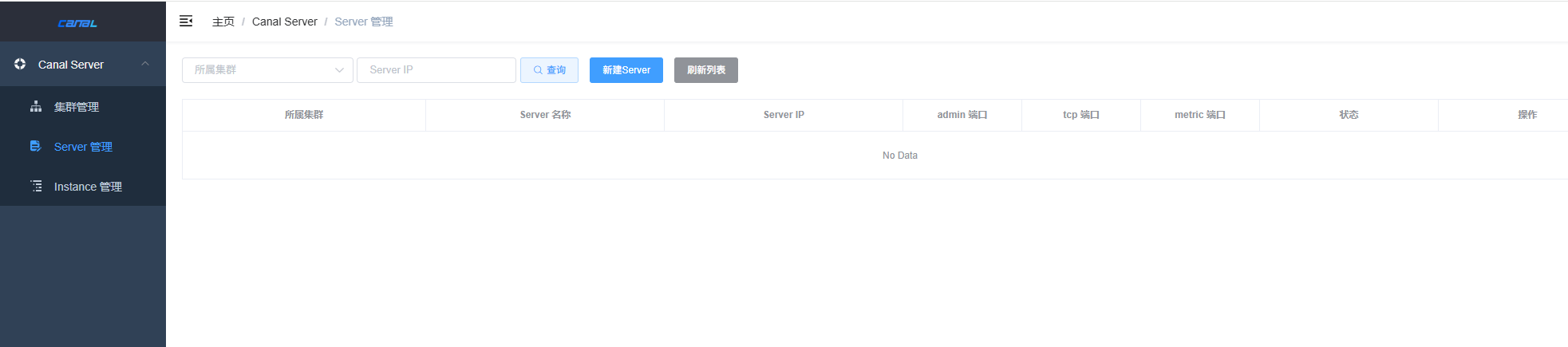

3.5 Open the canal-admin management page

The page is available at http://127.0.0.1:8089/. The default credentials are admin/123456.
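The 40-character strings that appear in this setup (the password column of the canal_user row in canal_manager.sql, and canal.admin.passwd in canal.properties in 4.4) have the shape of a MySQL PASSWORD()-style hash without the leading `*`: uppercase hex of SHA-1 applied twice. The sketch below assumes that scheme. The helper name canalPasswordHash is hypothetical and not part of canal.

```go
package main

import (
	"crypto/sha1"
	"encoding/hex"
	"fmt"
	"strings"
)

// canalPasswordHash returns the uppercase hex form of SHA1(SHA1(plain)),
// where the inner digest is fed to the outer hash as raw bytes.
// Assumption: this matches the format canal-admin stores and expects.
func canalPasswordHash(plain string) string {
	first := sha1.Sum([]byte(plain))
	second := sha1.Sum(first[:])
	return strings.ToUpper(hex.EncodeToString(second[:]))
}

func main() {
	// Plaintexts used in this guide: the canal-admin login password and
	// the adminPasswd value from application.yml.
	for _, plain := range []string{"123456", "admin"} {
		fmt.Printf("%s -> %s\n", plain, canalPasswordHash(plain))
	}
}
```

If the assumption holds, the output for 123456 matches the hash inserted into canal_user, and the output for admin matches the canal.admin.passwd value. A helper like this is useful when adminPasswd is changed and canal.properties has to be updated to match.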

4. Installing the canal service with Docker

Official installation guide: https://github.com/alibaba/canal/wiki/Docker-QuickStart

4.1 Pull the canal image

bash

docker pull canal/canal-server

4.2 Run canal

bash

docker run --name canal-server -d canal/canal-server

4.3 Copy the configuration files from the canal container to the host

bash

# Create the target directories first, then copy the files
mkdir -p /mydata/canal/conf/example
chmod 777 /mydata/canal
docker cp canal-server:/home/admin/canal-server/conf/canal.properties /mydata/canal/conf/
docker cp canal-server:/home/admin/canal-server/conf/example/instance.properties /mydata/canal/conf/example/

4.4 Modify the canal.properties configuration file

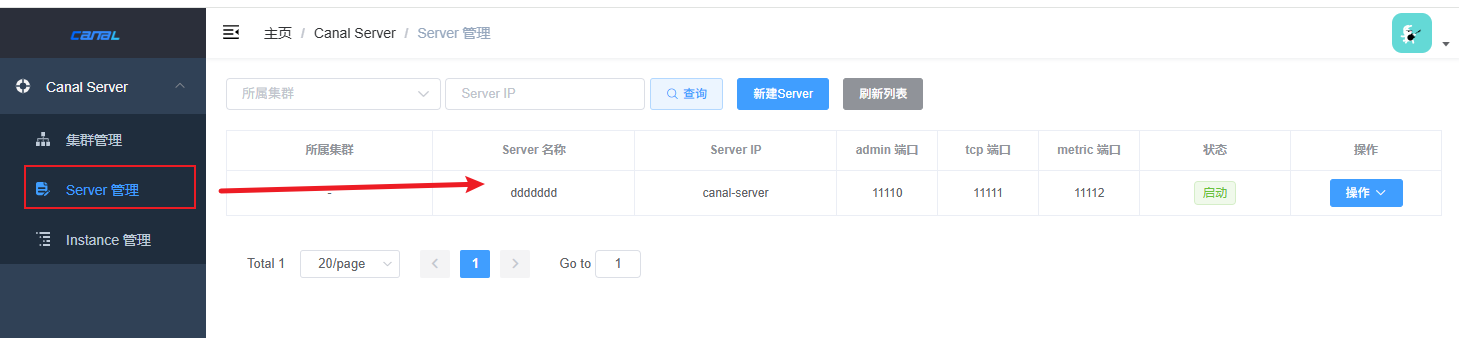

canal.properties holds the settings for the canal-server service. The main changes are:

bash

# register ip to zookeeper
# Address of canal-server itself. The containers run on a Docker network, so the canal-server container name is used here instead of an IP.
canal.register.ip = canal-server
canal.port = 11111
canal.metrics.pull.port = 11112
# canal instance user/passwd
# canal.user = canal
# canal.passwd =

# canal admin config
# Address and port of canal-admin, so that the canal-admin management page can display and configure this canal service.
# The user name and password come from adminUser and adminPasswd in canal-admin's application.yml; both default to admin
# (4ACFE3202A5FF5CF467898FC58AAB1D615029441 is the encrypted form of admin).
canal.admin.manager = canal-admin:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441
# admin auto register
canal.admin.register.auto = true
#canal.admin.register.cluster =
# Name of this canal-server. In a .properties file a comment must be on its own line; a trailing "# ..." would become part of the value.
canal.admin.register.name = ddddddd

The complete configuration file is as follows:

bash

#################################################

######### common argument #############

#################################################

# tcp bind ip

canal.ip =

# register ip to zookeeper
# Address of canal-server itself. The containers run on a Docker network, so the canal-server container name is used here instead of an IP.
canal.register.ip = canal-server
canal.port = 11111
canal.metrics.pull.port = 11112
# canal instance user/passwd
# canal.user = canal
# canal.passwd =

# canal admin config
# Address and port of canal-admin, so that the canal-admin management page can display and configure this canal service.
# The user name and password come from adminUser and adminPasswd in canal-admin's application.yml; both default to admin
# (4ACFE3202A5FF5CF467898FC58AAB1D615029441 is the encrypted form of admin).
canal.admin.manager = canal-admin:8089
canal.admin.port = 11110
canal.admin.user = admin
canal.admin.passwd = 4ACFE3202A5FF5CF467898FC58AAB1D615029441
# admin auto register
canal.admin.register.auto = true
#canal.admin.register.cluster =
# Name of this canal-server. In a .properties file a comment must be on its own line; a trailing "# ..." would become part of the value.
canal.admin.register.name = ddddddd

canal.zkServers =

# flush data to zk

canal.zookeeper.flush.period = 1000

canal.withoutNetty = false

# tcp, kafka, rocketMQ, rabbitMQ, pulsarMQ

canal.serverMode = tcp

# flush meta cursor/parse position to file

canal.file.data.dir = ${canal.conf.dir}

canal.file.flush.period = 1000

## memory store RingBuffer size, should be Math.pow(2,n)

canal.instance.memory.buffer.size = 16384

## memory store RingBuffer used memory unit size , default 1kb

canal.instance.memory.buffer.memunit = 1024

## meory store gets mode used MEMSIZE or ITEMSIZE

canal.instance.memory.batch.mode = MEMSIZE

canal.instance.memory.rawEntry = true

## detecing config

canal.instance.detecting.enable = false

#canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

canal.instance.detecting.sql = select 1

canal.instance.detecting.interval.time = 3

canal.instance.detecting.retry.threshold = 3

canal.instance.detecting.heartbeatHaEnable = false

# support maximum transaction size, more than the size of the transaction will be cut into multiple transactions delivery

canal.instance.transaction.size = 1024

# mysql fallback connected to new master should fallback times

canal.instance.fallbackIntervalInSeconds = 60

# network config

canal.instance.network.receiveBufferSize = 16384

canal.instance.network.sendBufferSize = 16384

canal.instance.network.soTimeout = 30

# binlog filter config

canal.instance.filter.druid.ddl = true

canal.instance.filter.query.dcl = false

canal.instance.filter.query.dml = false

canal.instance.filter.query.ddl = false

canal.instance.filter.table.error = false

canal.instance.filter.rows = false

canal.instance.filter.transaction.entry = false

canal.instance.filter.dml.insert = false

canal.instance.filter.dml.update = false

canal.instance.filter.dml.delete = false

# binlog format/image check

canal.instance.binlog.format = ROW,STATEMENT,MIXED

canal.instance.binlog.image = FULL,MINIMAL,NOBLOB

# binlog ddl isolation

canal.instance.get.ddl.isolation = false

# parallel parser config

canal.instance.parser.parallel = true

## concurrent thread number, default 60% available processors, suggest not to exceed Runtime.getRuntime().availableProcessors()

#canal.instance.parser.parallelThreadSize = 16

## disruptor ringbuffer size, must be power of 2

canal.instance.parser.parallelBufferSize = 256

# table meta tsdb info

canal.instance.tsdb.enable = true

canal.instance.tsdb.dir = ${canal.file.data.dir:../conf}/${canal.instance.destination:}

canal.instance.tsdb.url = jdbc:h2:${canal.instance.tsdb.dir}/h2;CACHE_SIZE=1000;MODE=MYSQL;

canal.instance.tsdb.dbUsername = canal

canal.instance.tsdb.dbPassword = canal

# dump snapshot interval, default 24 hour

canal.instance.tsdb.snapshot.interval = 24

# purge snapshot expire , default 360 hour(15 days)

canal.instance.tsdb.snapshot.expire = 360

#################################################

######### destinations #############

#################################################

canal.destinations = example

# conf root dir

canal.conf.dir = ../conf

# auto scan instance dir add/remove and start/stop instance

canal.auto.scan = true

canal.auto.scan.interval = 5

# set this value to 'true' means that when binlog pos not found, skip to latest.

# WARN: pls keep 'false' in production env, or if you know what you want.

canal.auto.reset.latest.pos.mode = false

canal.instance.tsdb.spring.xml = classpath:spring/tsdb/h2-tsdb.xml

#canal.instance.tsdb.spring.xml = classpath:spring/tsdb/mysql-tsdb.xml

canal.instance.global.mode = spring

canal.instance.global.lazy = false

canal.instance.global.manager.address = ${canal.admin.manager}

#canal.instance.global.spring.xml = classpath:spring/memory-instance.xml

canal.instance.global.spring.xml = classpath:spring/file-instance.xml

#canal.instance.global.spring.xml = classpath:spring/default-instance.xml

##################################################

######### MQ Properties #############

##################################################

# aliyun ak/sk , support rds/mq

canal.aliyun.accessKey =

canal.aliyun.secretKey =

canal.aliyun.uid=

canal.mq.flatMessage = true

canal.mq.canalBatchSize = 50

canal.mq.canalGetTimeout = 100

# Set this value to "cloud", if you want open message trace feature in aliyun.

canal.mq.accessChannel = local

canal.mq.database.hash = true

canal.mq.send.thread.size = 30

canal.mq.build.thread.size = 8

##################################################

######### Kafka #############

##################################################

kafka.bootstrap.servers = 127.0.0.1:9092

kafka.acks = all

kafka.compression.type = none

kafka.batch.size = 16384

kafka.linger.ms = 1

kafka.max.request.size = 1048576

kafka.buffer.memory = 33554432

kafka.max.in.flight.requests.per.connection = 1

kafka.retries = 0

kafka.kerberos.enable = false

kafka.kerberos.krb5.file = ../conf/kerberos/krb5.conf

kafka.kerberos.jaas.file = ../conf/kerberos/jaas.conf

# sasl demo

# kafka.sasl.jaas.config = org.apache.kafka.common.security.scram.ScramLoginModule required \\n username=\"alice\" \\npassword="alice-secret\";

# kafka.sasl.mechanism = SCRAM-SHA-512

# kafka.security.protocol = SASL_PLAINTEXT

##################################################

######### RocketMQ #############

##################################################

rocketmq.producer.group = test

rocketmq.enable.message.trace = false

rocketmq.customized.trace.topic =

rocketmq.namespace =

rocketmq.namesrv.addr = 127.0.0.1:9876

rocketmq.retry.times.when.send.failed = 0

rocketmq.vip.channel.enabled = false

rocketmq.tag =

##################################################

######### RabbitMQ #############

##################################################

rabbitmq.host =

rabbitmq.virtual.host =

rabbitmq.exchange =

rabbitmq.username =

rabbitmq.password =

rabbitmq.queue =

rabbitmq.routingKey =

rabbitmq.deliveryMode =

##################################################

######### Pulsar #############

##################################################

pulsarmq.serverUrl =

pulsarmq.roleToken =

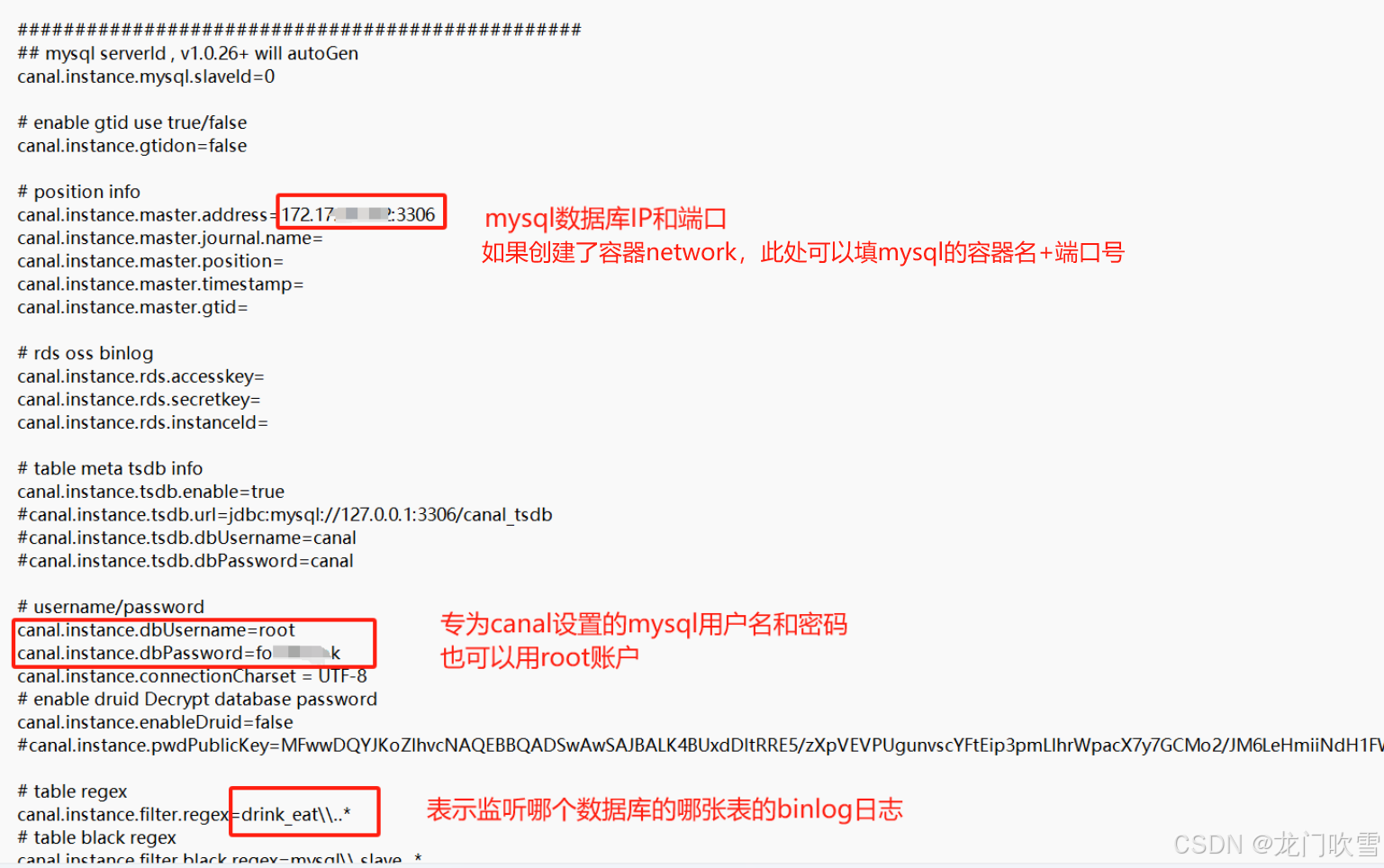

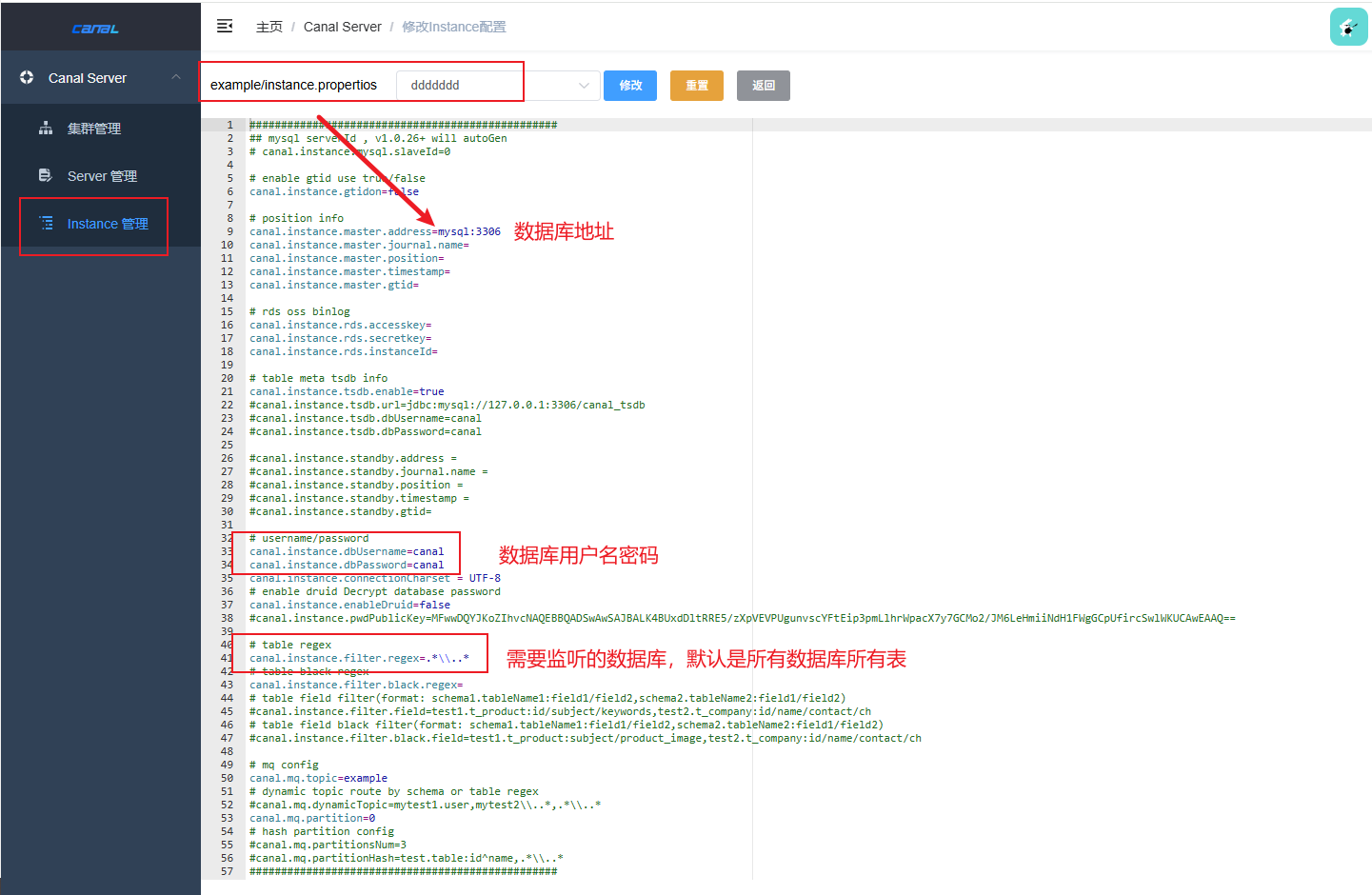

pulsarmq.topicTenantPrefix =4.5 修改 example 文件夹下的 instance.properties 配置参数

bash

server:

port: 8089

spring:

jackson:

date-format: yyyy-MM-dd HH:mm:ss

time-zone: GMT+8

spring.datasource:

address: mysql:3306

database: canal_manager

username: root

password: 123456

driver-class-name: com.mysql.jdbc.Driver

url: jdbc:mysql://${spring.datasource.address}/${spring.datasource.database}?useUnicode=true&characterEncoding=UTF-8&useSSL=false

hikari:

maximum-pool-size: 30

minimum-idle: 1

canal:

adminUser: admin

adminPasswd: admin4.6 删除canal容器

bash

docker rm -f canal-server

4.7 Run the canal container with mounted files

bash

# Important: mount the log directory on the host so the container logs are easy to inspect
docker run --name canal-server -p 11111:11111 -d \
  -v /mydata/canal/conf/example/instance.properties:/home/admin/canal-server/conf/example/instance.properties \
  -v /mydata/canal/conf/canal.properties:/home/admin/canal-server/conf/canal.properties \
  -v /mydata/canal/logs/:/home/admin/canal-server/logs/ \
  --network canal_net \
  canal/canal-server

Note: inside the canal container, the log files are under /home/admin/canal-server/logs/.

Note: if instance.properties is already prepared on the host, steps 4.2 through 4.6 can be skipped and step 4.7 run directly.

4.8 canal-admin 后台页面中管理服务及实例

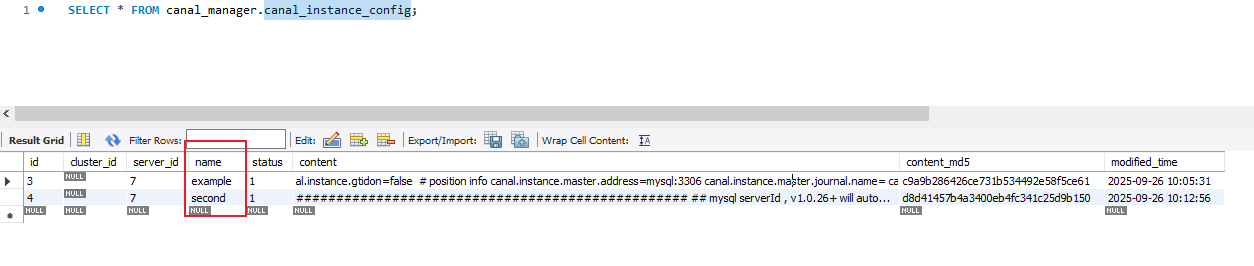

可以在 【instance 管理】中新增或修改 instance 实例,在此新增的实例会将配置文件信息保存到 canal-manager 数据库的表 canal_instance_config 中。

在 canal-manager 数据库中查看新配置的实例,如下:

5. Subscribing to and consuming canal-server from Go

Reference GitHub repository: https://github.com/withlin/canal-go

5.1 Go code

Go

// Licensed to the Apache Software Foundation (ASF) under one

// or more contributor license agreements. See the NOTICE file

// distributed with this work for additional information

// regarding copyright ownership. The ASF licenses this file

// to you under the Apache License, Version 2.0 (the

// "License"); you may not use this file except in compliance

// with the License. You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an "AS IS" BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

package main

import (
	"fmt"
	"log"
	"os"
	"time"

	"github.com/withlin/canal-go/client"
	pbe "github.com/withlin/canal-go/protocol/entry"
	"google.golang.org/protobuf/proto"
)

func main() {
	// Replace 172.28.203.173 with the address of your canal server.
	// Replace example with the destination name you defined (canal.destinations=example).
	connector := client.NewSimpleCanalConnector("172.28.203.173", 11111, "", "", "example", 60000, 60*60*1000)
	err := connector.Connect()
	if err != nil {
		log.Println(err)
		os.Exit(1)
	}

	// https://github.com/alibaba/canal/wiki/AdminGuide
	// Tables to watch when parsing MySQL data, written as Perl regular expressions.
	//
	// Separate multiple expressions with commas (,); escapes need a double backslash (\\).
	//
	// Common examples:
	//
	//  1. All tables: .* or .*\\..*
	//  2. All tables in the canal schema: canal\\..*
	//  3. Tables in canal whose names start with canal: canal\\.canal.*
	//  4. A single table in the canal schema: canal\\.test1
	//  5. Several rules combined: canal\\..*,mysql.test1,mysql.test2 (comma-separated)
	err = connector.Subscribe(".*\\..*")
	if err != nil {
		log.Println(err)
		os.Exit(1)
	}

	for {
		// Fetch up to 100 entries per batch.
		message, err := connector.Get(100, nil, nil)
		if err != nil {
			log.Println(err)
			os.Exit(1)
		}
		batchId := message.Id
		if batchId == -1 || len(message.Entries) <= 0 {
			// Nothing new in the binlog; wait briefly and poll again.
			time.Sleep(300 * time.Millisecond)
			fmt.Println("=== no new data ===")
			continue
		}
		printEntry(message.Entries)
	}
}

func printEntry(entrys []pbe.Entry) {

for _, entry := range entrys {

if entry.GetEntryType() == pbe.EntryType_TRANSACTIONBEGIN || entry.GetEntryType() == pbe.EntryType_TRANSACTIONEND {

continue

}

rowChange := new(pbe.RowChange)

err := proto.Unmarshal(entry.GetStoreValue(), rowChange)

checkError(err)

if rowChange != nil {

eventType := rowChange.GetEventType()

header := entry.GetHeader()

fmt.Println(fmt.Sprintf("================> binlog[%s : %d],name[%s,%s], eventType: %s", header.GetLogfileName(), header.GetLogfileOffset(), header.GetSchemaName(), header.GetTableName(), header.GetEventType()))

for _, rowData := range rowChange.GetRowDatas() {

if eventType == pbe.EventType_DELETE {

printColumn(rowData.GetBeforeColumns())

} else if eventType == pbe.EventType_INSERT {

printColumn(rowData.GetAfterColumns())

} else {

fmt.Println("-------> before")

printColumn(rowData.GetBeforeColumns())

fmt.Println("-------> after")

printColumn(rowData.GetAfterColumns())

}

}

}

}

}

func printColumn(columns []*pbe.Column) {

for _, col := range columns {

fmt.Println(fmt.Sprintf("%s : %s update= %t", col.GetName(), col.GetValue(), col.GetUpdated()))

}

}

func checkError(err error) {

if err != nil {

fmt.Fprintf(os.Stderr, "Fatal error: %s", err.Error())

os.Exit(1)

}

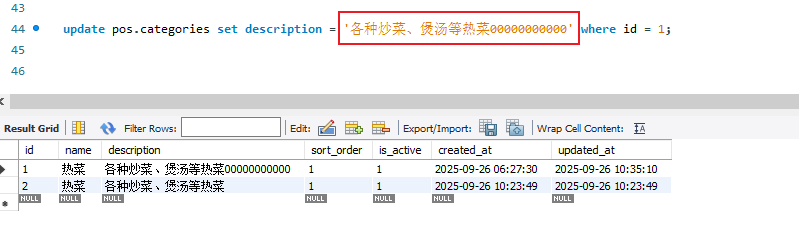

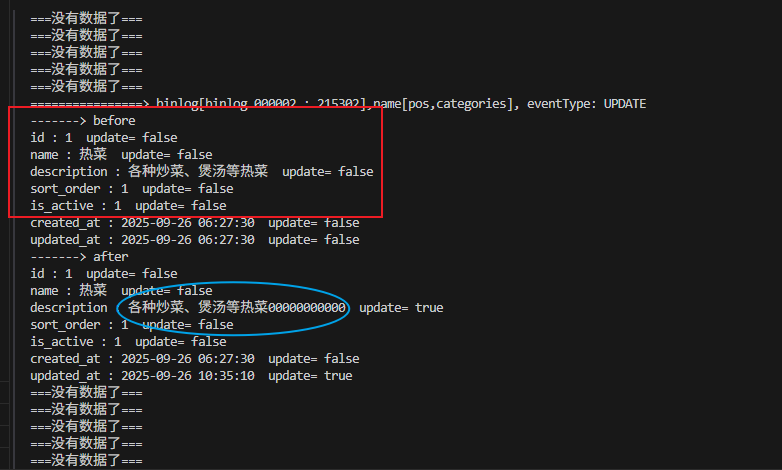

}5.2 效果演示

After a row in a database table is updated, the Go program prints the MySQL data before and after the change.
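The filter string passed to connector.Subscribe in 5.1 decides which tables produce output. To get a feel for the rules listed in the code comments, they can be tried locally. The sketch below is only an approximation under stated assumptions: it uses Go's regexp package (RE2 syntax, not Perl), treats each comma-separated rule as a full match against schema.table, and does not model any case handling the server may apply. The real filtering happens inside canal-server. The name matchesFilter is hypothetical.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// matchesFilter reports whether schema.table is selected by a canal-style
// filter: comma-separated regular expressions, each of which must match
// the whole "schema.table" name.
func matchesFilter(filter, schema, table string) (bool, error) {
	name := schema + "." + table
	for _, rule := range strings.Split(filter, ",") {
		rule = strings.TrimSpace(rule)
		if rule == "" {
			continue
		}
		re, err := regexp.Compile("^(?:" + rule + ")$")
		if err != nil {
			return false, fmt.Errorf("invalid rule %q: %w", rule, err)
		}
		if re.MatchString(name) {
			return true, nil
		}
	}
	return false, nil
}

func main() {
	// A raw string needs a single backslash; in a double-quoted Go string
	// the same rule is written "canal\\..*,mysql.test1".
	filter := `canal\..*,mysql.test1`
	tables := [][2]string{{"canal", "orders"}, {"mysql", "test1"}, {"mysql", "user"}}
	for _, t := range tables {
		ok, err := matchesFilter(filter, t[0], t[1])
		if err != nil {
			fmt.Println(err)
			return
		}
		fmt.Printf("%s.%s -> %t\n", t[0], t[1], ok)
	}
}
```

With this filter, canal.orders and mysql.test1 are selected and mysql.user is not.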

6. Troubleshooting

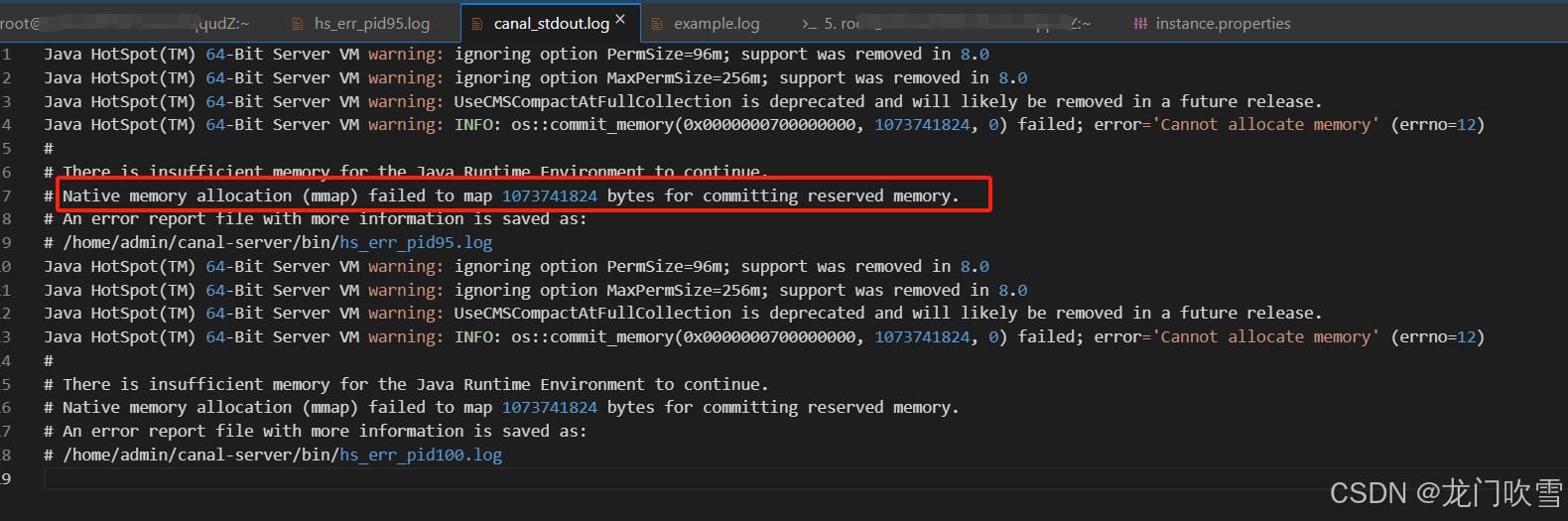

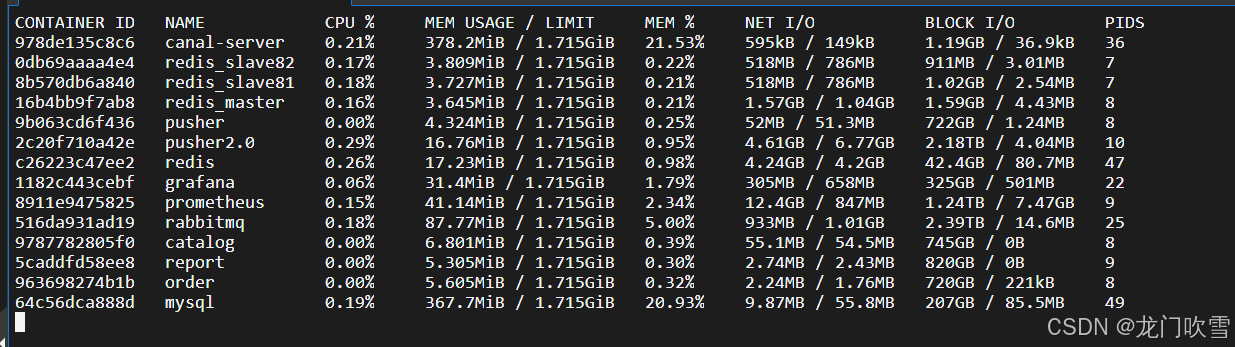

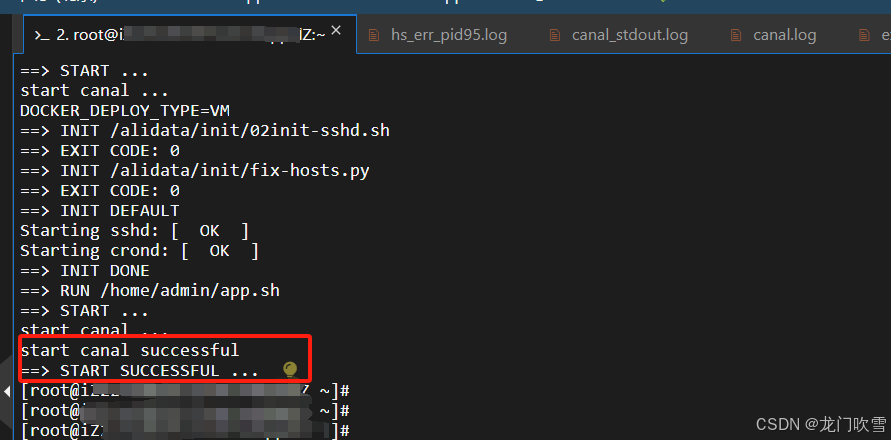

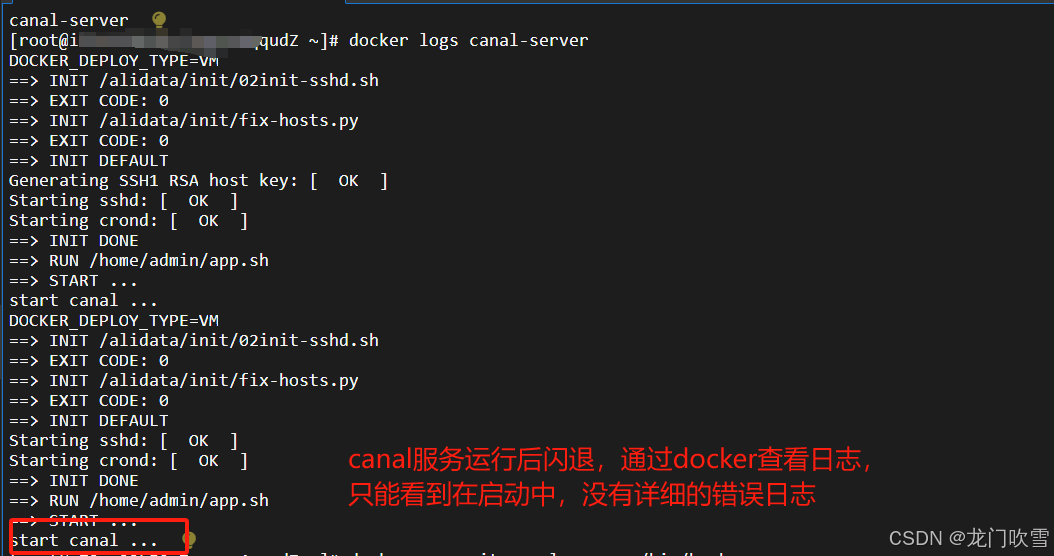

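6.1 The canal service exits right after starting

How can the detailed error log be viewed?

When running the canal service with Docker, mount the container's log directory (default path inside the canal container: /home/admin/canal-server/logs/) on the host. The detailed logs can then be read there.

The cause turned out to be insufficient memory on the host. docker stats confirmed that the remaining memory was not enough to run the canal service. After stopping containers that were not needed for the moment, canal-server started successfully.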
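Because both startup failures in this guide came down to memory, a quick check of the host before starting the containers can save a round of log reading. The following is a minimal sketch for Linux hosts only. It reads MemAvailable from /proc/meminfo and compares it with 2 GB, the figure from the canal-admin note in 3.4. The threshold for canal-server is an assumption, and memAvailableKB is a hypothetical helper name.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// memAvailableKB extracts the MemAvailable value (in kB) from
// /proc/meminfo-style text.
func memAvailableKB(meminfo string) (int64, error) {
	sc := bufio.NewScanner(strings.NewReader(meminfo))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) >= 2 && fields[0] == "MemAvailable:" {
			return strconv.ParseInt(fields[1], 10, 64)
		}
	}
	return 0, fmt.Errorf("MemAvailable not found")
}

func main() {
	data, err := os.ReadFile("/proc/meminfo") // exists on Linux only
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot read /proc/meminfo:", err)
		return
	}
	kb, err := memAvailableKB(string(data))
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		return
	}
	const neededKB = 2 * 1024 * 1024 // 2 GB; assumed threshold, adjust as needed
	fmt.Printf("available memory: %d MB\n", kb/1024)
	if kb < neededKB {
		fmt.Println("less than 2 GB available; consider stopping unused containers first")
	}
}
```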