#Author: 程宏斌

Table of contents

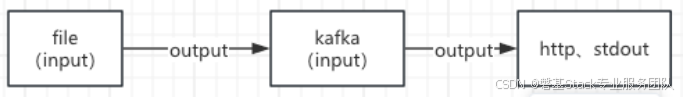

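Business requirement

When collecting from a file, the input is JSON that is passed through as-is, with no processing. When Kafka is the input, however, the records I output gain an extra payload layer, and the receiver rejects them with a 400 format error.

Original data format: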

{"hostname":"uos20","output":"10:16:32.056070324: Critical High-risk command executed outside maintenance window:\nrm -i extract_payload.lua\nbash\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/lib/systemd/systemd\ncgroups=cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope\nproc_exe_ino_ctime=1686728950587813749\nprocess=rm\npid=2320\nprocexe=rm\nfile=<NA>\naction=execve\nparent_process=bash\nparent_exepath=/usr/bin/rm\nuser=root user_uid=0 user_loginuid=0\nterminal=34817\ncontainer_info=container_id=host container_name=host","output_fields":{"container.id":"host","container.name":"host","evt.time":1734401792056070324,"evt.type":"execve","fd.name":null,"proc.acmdline[0]":"rm -i extract_payload.lua","proc.acmdline[1]":"bash","proc.aexepath[2]":"/usr/sbin/sshd","proc.aexepath[3]":"/usr/sbin/sshd","proc.aexepath[4]":"/usr/sbin/sshd","proc.aexepath[5]":"/usr/lib/systemd/systemd","proc.exe":"rm","proc.exe_ino.ctime":1686728950587813749,"proc.exepath":"/usr/bin/rm","proc.name":"rm","proc.pid":2320,"proc.pname":"bash","proc.tty":34817,"thread.cgroups":"cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope","user.loginuid":0,"user.name":"root","user.uid":0},"priority":"Critical","rule":"High-Risk Command Executed Outside Maintenance Window","source":"syscall","tags":["attack_detection","host","process","security"],"time":"2024-12-17T02:16:32.056070324Z"}

After the data is relayed through Kafka, the output format is as follows:

[0]kafka: [[1734422739.976720818, {}], {"topic"=>"Fluentbit", "partition"=>0, "offset"=>3, "error"=>nil, "key"=>nil, "payload"=>"{"@timestamp":1734422739.232958,"hostname":"uos20","output":"10:16:32.056070324: Critical High-risk command executed outside maintenance window:\nrm -i extract_payload.lua\nbash\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/lib/systemd/systemd\ncgroups=cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope\nproc_exe_ino_ctime=1686728950587813749\nprocess=rm\npid=2320\nprocexe=rm\nfile=<NA>\naction=execve\nparent_process=bash\nparent_exepath=/usr/bin/rm\nuser=root user_uid=0 user_loginuid=0\nterminal=34817\ncontainer_info=container_id=host container_name=host","output_fields":{"container.id":"host","container.name":"host","evt.time":1734401792056070324,"evt.type":"execve","fd.name":null,"proc.acmdline[0]":"rm -i extract_payload.lua","proc.acmdline[1]":"bash","proc.aexepath[2]":"/usr/sbin/sshd","proc.aexepath[3]":"/usr/sbin/sshd","proc.aexepath[4]":"/usr/sbin/sshd","proc.aexepath[5]":"/usr/lib/systemd/systemd","proc.exe":"rm","proc.exe_ino.ctime":1686728950587813749,"proc.exepath":"/usr/bin/rm","proc.name":"rm","proc.pid":2320,"proc.pname":"bash","proc.tty":34817,"thread.cgroups":"cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope","user.loginuid":0,"user.name":"root","user.uid":0},"priority":"Critical","rule":"High-Risk Command Executed Outside Maintenance Window","source":"syscall","tags":["attack_detection","host","process","security"],"time":"2024-12-17T02:16:32.056070324Z"}"}]

Kafka metadata such as topic and partition is added in front, and the data is wrapped in one extra layer. The payload field holds the content that is actually wanted, so the goal is to lift payload to the outermost level, keeping only its value and dropping the key.

Implementation

Configure the Fluent Bit filter rules as follows.

Add the Parsers configuration

- To parse the payload field as standalone JSON content, configure the parsers.conf file.

    [PARSER]
        Name        json_payload
        Format      json
        Time_Key    @timestamp
        Time_Format %s

- Use a Filter to extract the payload field

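Fluent Bit's Modify plugin can rename and replace fields in a record. Use it together with the Parser filter to pull the payload field out of the Kafka input and parse it as standalone JSON.

Continue by adding the following to the example fluent-bit.conf: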

    [FILTER]
        Name     modify
        Match    *
        Rename   payload message_raw

    [FILTER]
        Name     parser
        Match    *
        Key_Name message_raw
        Parser   json_payload
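To make the effect of this filter chain concrete, here is a minimal Python sketch that mimics it on a plain dict. It is an illustration under simplifying assumptions, not Fluent Bit's implementation: the helper names `modify_rename` and `parser_filter` are hypothetical, time-key handling is ignored, and the sample payload is abbreviated. The sketch relies on the parser filter's `Reserve_Data` option being off by default, which is why the Kafka metadata keys (topic, partition, and so on) disappear once the payload has been parsed.

```python
import json


def modify_rename(record, old, new):
    """Mimic `Rename old new`: rename only if `old` exists and `new` does not."""
    if old in record and new not in record:
        record = dict(record)          # work on a copy, leave the input untouched
        record[new] = record.pop(old)
    return record


def parser_filter(record, key_name, reserve_data=False):
    """Mimic the parser filter with a JSON parser applied to `key_name`.

    If the field is missing or cannot be parsed as a JSON object, the record
    is returned unchanged (a simplification of the real behaviour).
    """
    raw = record.get(key_name)
    if not isinstance(raw, str):
        return record
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return record
    if not isinstance(parsed, dict):
        return record
    if reserve_data:
        # Keep the other original fields next to the parsed ones.
        rest = {k: v for k, v in record.items() if k != key_name}
        return {**parsed, **rest}
    # Default: only the parsed fields survive.
    return parsed


# Abbreviated stand-in for one record produced by the Kafka input.
kafka_record = {
    "topic": "Fluentbit",
    "partition": 0,
    "offset": 3,
    "error": None,
    "key": None,
    "payload": '{"hostname":"uos20","priority":"Critical","source":"syscall"}',
}

renamed = modify_rename(kafka_record, "payload", "message_raw")
flattened = parser_filter(renamed, "message_raw")
print(json.dumps(flattened))
```

Passing `reserve_data=True` in the sketch corresponds to turning `Reserve_Data` on, which would keep the Kafka metadata alongside the parsed fields; the configuration above leaves it off because only the payload content is wanted.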

- Output configuration

Send the processed data to stdout (the terminal) to verify it:

    [OUTPUT]
        Name   stdout
        Match  *
        Format json_lines

- Configuration notes

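Kafka Input

Read Kafka messages with Format json so that Fluent Bit can recognize and read the payload field in each message.

Parsers

The json_payload parser is dedicated to parsing the content of the payload field as JSON.

Filters

The modify plugin renames the original payload field to message_raw so that it does not overwrite other fields.

The parser plugin parses message_raw as JSON, which yields the log content you need.

Output

Output goes to stdout for debugging. Once the data is confirmed to be extracted correctly, stdout can be replaced with another output.

json_lines: each log record is written as a separate JSON object, with records separated by a newline (\n).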
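As a rough picture of what json_lines output looks like, the following Python sketch serializes a list of (timestamp, record) pairs one JSON object per line. It is only an approximation for illustration: the function name `to_json_lines` is hypothetical, and the `date` key is modelled on the field that appears in the stdout output of this setup.

```python
import json


def to_json_lines(events, date_key="date"):
    """Serialize (timestamp, record) pairs as newline-delimited JSON objects."""
    lines = []
    for timestamp, record in events:
        # The event timestamp is emitted as an extra leading key.
        obj = {date_key: timestamp, **record}
        lines.append(json.dumps(obj, separators=(",", ":")))
    # One object per line, each terminated by a newline.
    return "".join(line + "\n" for line in lines)


# Abbreviated sample events, not real captured data.
events = [
    (1734428094.976708, {"hostname": "uos20", "priority": "Critical"}),
    (1734428094.978698, {"log": ""}),
]
print(to_json_lines(events), end="")
```

Because every line is a complete JSON document, a consumer can split on newlines and decode each line independently.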

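Result

Records in the following format are accepted normally when output to the customer's HTTP endpoint. As for the @timestamp field: upstream has a parameter for removing it, but it has not been released yet, so it cannot be removed in the standard version.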

{"date":1734428094.976708,"@timestamp":1734428094.549618,"hostname":"uos20","output":"10:16:32.056070324: Critical High-risk command executed outside maintenance window:\nrm -i extract_payload.lua\nbash\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/sbin/sshd\n/usr/lib/systemd/systemd\ncgroups=cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope\nproc_exe_ino_ctime=1686728950587813749\nprocess=rm\npid=2320\nprocexe=rm\nfile=<NA>\naction=execve\nparent_process=bash\nparent_exepath=/usr/bin/rm\nuser=root user_uid=0 user_loginuid=0\nterminal=34817\ncontainer_info=container_id=host container_name=host","output_fields":{"container.id":"host","container.name":"host","evt.time":1734401792056070324,"evt.type":"execve","fd.name":null,"proc.acmdline[0]":"rm -i extract_payload.lua","proc.acmdline[1]":"bash","proc.aexepath[2]":"/usr/sbin/sshd","proc.aexepath[3]":"/usr/sbin/sshd","proc.aexepath[4]":"/usr/sbin/sshd","proc.aexepath[5]":"/usr/lib/systemd/systemd","proc.exe":"rm","proc.exe_ino.ctime":1686728950587813749,"proc.exepath":"/usr/bin/rm","proc.name":"rm","proc.pid":2320,"proc.pname":"bash","proc.tty":34817,"thread.cgroups":"cpuset=/ cpu=/user.slice cpuacct=/user.slice blkio=/user.slice memory=/user.slice/user-0.slice/session-4.scope","user.loginuid":0,"user.name":"root","user.uid":0},"priority":"Critical","rule":"High-Risk Command Executed Outside Maintenance Window","source":"syscall","tags":["attack_detection","host","process","security"],"time":"2024-12-17T02:16:32.056070324Z"}

{"date":1734428094.978698,"@timestamp":1734428094.549646,"log":""}
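Before swapping stdout for the real output, it can help to check the captured lines automatically. The sketch below is one possible check in Python, under stated assumptions: the function name `check_line` is hypothetical, and the set of wrapper keys is a guess based on the Kafka fields shown earlier (topic, partition, offset, payload), so adjust it if your own records legitimately use those names.

```python
import json

# Keys that should no longer appear once the payload has been lifted out.
# Assumption: the actual log records do not use these names themselves.
WRAPPER_KEYS = {"topic", "partition", "offset", "payload"}


def check_line(line):
    """Return a list of problems found in one json_lines output line."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return ["not valid JSON: " + exc.msg]
    if not isinstance(record, dict):
        return ["top-level value is not a JSON object"]
    leftover = WRAPPER_KEYS & record.keys()
    if leftover:
        return ["Kafka wrapper keys still present: " + ", ".join(sorted(leftover))]
    return []


# Abbreviated sample lines, not real captured data.
samples = [
    '{"date":1734428094.976708,"hostname":"uos20","priority":"Critical"}',
    '{"topic":"Fluentbit","partition":0,"payload":"{}"}',
]
for sample in samples:
    print(check_line(sample) or "ok")
```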