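Watermark camera features in mainstream apps usually work like this: you take a photo in real time, choose a watermark style and content, apply it to the photo, and then save. Besides leaving a memorable mark on a photo, watermark cameras are used in scenarios such as patrol inspections and check-ins. There, the key requirement is to capture the time, location, and similar information at the same moment the photo is taken.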
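In inspection and check-in scenarios, the watermark usually combines the capture time with the current coordinates. The sketch below formats those two values into watermark lines. `buildWatermarkLines`, `formatCaptureTime` and `formatCoords` are hypothetical helpers. The latitude and longitude are assumed to have been obtained from a location API at capture time; how they are obtained is outside this sketch.

```typescript
// Hypothetical helpers: turn a capture time and coordinates into watermark lines.
// Assumption: latitude/longitude were read from a location API when the photo was taken.
export interface CaptureCoords {
  latitude: number;
  longitude: number;
}

// Left-pad a number to two digits, e.g. 5 -> "05"
function pad2(n: number): string {
  return String(n).padStart(2, "0");
}

// Format a Date in the device's local time as "YYYY-MM-DD HH:mm"
export function formatCaptureTime(date: Date): string {
  const y = date.getFullYear();
  const m = pad2(date.getMonth() + 1); // getMonth() is zero-based
  const d = pad2(date.getDate());
  const hh = pad2(date.getHours());
  const mm = pad2(date.getMinutes());
  return `${y}-${m}-${d} ${hh}:${mm}`;
}

// Format coordinates with hemisphere letters instead of signs
export function formatCoords({ latitude, longitude }: CaptureCoords, digits = 5): string {
  const lat = `${Math.abs(latitude).toFixed(digits)}°${latitude >= 0 ? "N" : "S"}`;
  const lng = `${Math.abs(longitude).toFixed(digits)}°${longitude >= 0 ? "E" : "W"}`;
  return `${lat} ${lng}`;
}

// Capture time first, then location, then an optional label (e.g. a site name)
export function buildWatermarkLines(date: Date, coords: CaptureCoords, label?: string): string[] {
  const lines = [formatCaptureTime(date), formatCoords(coords)];
  if (label) lines.push(label);
  return lines;
}
```

The returned strings could stand in for the hard-coded `'2025-10-2'` texts used in the watermark code of both approaches.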

Core dependency versions in the development environment

- "react-native": "^0.81.4",

- "expo": "^54.0.12",

- "react-native-canvas": "^0.1.40",

- "expo-image-picker": "~17.0.8",

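Technical approach

Most people building apps with React Native come from a front-end background, so when it comes to watermarks, canvas is the natural first idea:

Approach 1: the react-native-canvas library

Its documentation is not very clear, so you may need to refer to the [GitHub repository](github.com/iddan/react...). The idea is to draw text on the canvas with `fillText`, draw the photo with `drawImage`, and export the result with `toDataURL`. Note that canvas in React Native is actually implemented on top of a WebView (react-native-canvas depends on react-native-webview):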

```tsx
import React, { useState, useRef, type FC } from 'react';
import { View, StyleSheet, TouchableOpacity, useWindowDimensions } from 'react-native';
import { useSafeAreaInsets } from 'react-native-safe-area-context';
import { CameraView, useCameraPermissions, PermissionStatus } from 'expo-camera';
import Canvas, { Image as CanvasImage } from 'react-native-canvas';
import { Ionicons } from '@expo/vector-icons';
import { Image } from 'expo-image';
import Animated, { useSharedValue, useAnimatedStyle, withTiming } from 'react-native-reanimated';
import { readAsStringAsync, EncodingType } from 'expo-file-system/legacy';
import WaterPrintList from './WaterPrintList';
import NoPermission from './NoPermission';

const WaterPrint: FC = () => {
  const [permission, requestPermission] = useCameraPermissions();
  const cameraRef = useRef<CameraView>(null);
  const canvasRef = useRef<Canvas>(null);
  const [facing, setFacing] = useState<'front' | 'back'>('back');
  const [torch, setTorch] = useState<boolean>(false);
  const [zoom, setZoom] = useState<number>(0);
  const [cameraShow, setCameraShow] = useState<boolean>(true);
  const { top, bottom } = useSafeAreaInsets();
  const { width: screenWidth, height: screenHeight } = useWindowDimensions();
  const { View: AnimatedView } = Animated;

  // Animated values used to slide the top bar out of view after a shot
  const topTranslateY = useSharedValue(0);
  const topOpacity = useSharedValue(1);
  const topViewStyle = useAnimatedStyle(() => ({
    transform: [{ translateY: topTranslateY.value }],
    opacity: topOpacity.value,
  }));

  /**
   * Shutter handler
   * 1. take the photo and get its uri
   * 2. pause the camera preview
   * 3. draw the photo and the watermark onto the canvas
   * Pressing the shutter again returns to the live preview.
   */
  const handleTakePhoto = async () => {
    if (cameraShow) {
      setCameraShow(false);
      const cameraInstance = cameraRef.current;
      // Take the photo and get its uri and pixel size
      const photo = await cameraInstance?.takePictureAsync();
      if (!photo?.uri) {
        // Capture failed: show the camera again
        setCameraShow(true);
        return;
      }
      const { uri, width, height } = photo;
      // Pause the camera preview
      cameraInstance?.pausePreview();
      // Slide the top bar up and fade it out
      topTranslateY.value = withTiming(-100, { duration: 500 });
      topOpacity.value = withTiming(0, { duration: 500 });

      const canvas = canvasRef.current;
      if (!canvas) return;
      // Area available for displaying the canvas
      const maxWidth = screenWidth;
      const maxHeight = screenHeight - top - bottom;
      // If the photo is larger than that area, shrink it by the tighter of the two
      // ratios (keeps the aspect ratio, never enlarges)
      let scale = 1;
      if (width > maxWidth || height > maxHeight) {
        scale = Math.min(maxWidth / width, maxHeight / height);
      }
      const displayWidth = width * scale;
      const displayHeight = height * scale;
      // Size the canvas to the scaled photo, then clear it
      canvas.width = displayWidth;
      canvas.height = displayHeight;
      const ctx = canvas.getContext('2d');
      ctx.clearRect(0, 0, displayWidth, displayHeight);

      // CanvasImage cannot load the file uri directly, so read the photo as base64
      // and pass it as a data URL (expo-camera saves JPEG by default)
      const base64 = await readAsStringAsync(uri, { encoding: EncodingType.Base64 });
      const image = new CanvasImage(canvas);
      image.src = 'data:image/jpeg;base64,' + base64;
      image.addEventListener('load', async () => {
        ctx.drawImage(image, 0, 0, displayWidth, displayHeight);
        // Single-line watermark near the bottom-right corner
        ctx.font = '16px Simhei';
        ctx.fillText('2025-10-2', displayWidth - 100, displayHeight - 100);
        // Multi-line watermark in the top-left corner
        const watermarkTexts = ['Watermark text', '2025-10-2'];
        const lineHeight = 20;
        watermarkTexts.forEach((text, index) => {
          ctx.fillText(text, 10, 30 + index * lineHeight);
        });
        // Export the watermarked result as a data URL
        const res = await canvas.toDataURL('image/png');
        console.log(res);
      });
    } else {
      // Back to the live preview
      setCameraShow(true);
      cameraRef.current?.resumePreview();
      topTranslateY.value = withTiming(0, { duration: 500 });
      topOpacity.value = withTiming(1, { duration: 500 });
    }
  };

  if (!permission || permission.status !== PermissionStatus.GRANTED) {
    return (
      <NoPermission
        top={top}
        requestPermission={requestPermission}
        tipConfig={{ tipText: 'Please allow camera access', tipTitle: 'Camera permission' }}
      />
    );
  }

  return (
    <View style={[styles.container, { paddingTop: top, paddingBottom: bottom }]}>
      <AnimatedView style={[styles.top, topViewStyle]}>
        <TouchableOpacity onPress={() => setTorch(!torch)}>
          <Ionicons name={torch ? 'flash-off' : 'flash'} size={24} color="white" />
        </TouchableOpacity>
        <TouchableOpacity>
          <Ionicons name="settings-outline" size={24} color="white" />
        </TouchableOpacity>
      </AnimatedView>
      <CameraView
        ref={cameraRef}
        mode="picture"
        facing={facing}
        enableTorch={torch}
        zoom={zoom}
        responsiveOrientationWhenOrientationLocked
        style={[styles.cameraView, { display: cameraShow ? 'flex' : 'none' }]}
      />
      <Canvas
        ref={canvasRef}
        style={[styles.canvasView, { display: cameraShow ? 'none' : 'flex' }]}
      />
      <View style={[styles.bottom, { paddingBottom: bottom }]}>
        <WaterPrintList />
        <View style={styles.operations}>
          <Image source={require('@/assets/images/singer/singer1.png')} style={styles.picture} />
          <TouchableOpacity style={styles.circle} onPress={handleTakePhoto}>
            <View style={styles.outerCircle} />
          </TouchableOpacity>
          <TouchableOpacity>
            <Ionicons name="refresh-sharp" size={24} color="white" />
          </TouchableOpacity>
        </View>
      </View>
    </View>
  );
};

const { create } = StyleSheet;

const styles = create({
  container: {
    flex: 1,
  },
  top: {
    width: '100%',
    height: 100,
    backgroundColor: 'rgba(0,0,0,.9)',
    paddingHorizontal: 20,
    flexDirection: 'row',
    justifyContent: 'space-between',
    alignItems: 'center',
    borderBottomLeftRadius: 2,
    borderBottomRightRadius: 2,
    position: 'absolute',
    top: 0,
    zIndex: 10,
  },
  cameraView: {
    flex: 1,
    borderRadius: 2,
  },
  cameraContainer: {
    flex: 1,
    backgroundColor: '#ccc',
  },
  bottom: {
    width: '100%',
    height: 150,
    backgroundColor: 'rgba(0,0,0,.9)',
    borderTopLeftRadius: 2,
    borderTopRightRadius: 2,
    paddingHorizontal: 20,
    position: 'absolute',
    bottom: 0,
  },
  canvasView: {
    flex: 1,
    width: '100%',
  },
  operations: {
    flexDirection: 'row',
    justifyContent: 'space-between',
    alignItems: 'center',
    paddingVertical: 10,
  },
  picture: {
    width: 40,
    height: 40,
    borderRadius: 4,
    borderWidth: 1,
    borderColor: '#fff',
  },
  circle: {
    width: 45,
    height: 45,
    borderRadius: '50%',
    borderWidth: 3,
    borderColor: '#fff',
    justifyContent: 'center',
    alignItems: 'center',
  },
  outerCircle: {
    width: 33,
    height: 33,
    borderRadius: '50%',
    borderColor: 'yellow',
    borderWidth: 2,
  },
});

export default WaterPrint;
```

The code above uses expo-camera to drive the camera hardware and draws the captured photo onto a canvas using its uri and size. However, this approach has several drawbacks:

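- Defect 1: the `Image` class built into react-native-canvas (`CanvasImage` in the code) cannot use the `uri` returned by the camera as its `src`. The photo therefore has to be read with `readAsStringAsync` and converted to base64 first. As front-end developers know, base64 encodes every 3 bytes as 4 characters, so the data grows to about 4/3 of its original size (roughly 33% larger). Just drawing the captured photo onto the canvas is already noticeably slow.

- Defect 2: the display scale has to be calculated by hand. A photo taken on a phone usually has a far higher resolution than the area the canvas occupies on screen. Unless the canvas size is explicitly matched to the photo's proportions, the saved image may not fill the canvas and will be left with blank margins.

- Defect 3: the camera is driven by expo-camera, which suits deeply customized camera features. If you do not need that level of customization, you can open the device's built-in camera directly and drop the expo-camera dependency.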
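The first two defects come down to simple arithmetic. Base64 maps every 3 input bytes to 4 output characters. The canvas size is a "contain" fit of the photo into the available screen area. The sketch below isolates both calculations as pure functions. `base64Length` and `fitToBox` are illustrative names, and `fitToBox` mirrors the scale logic in `handleTakePhoto` above: shrink to fit, never enlarge.

```typescript
export interface Size {
  width: number;
  height: number;
}

// Length of the base64 string for `byteLength` bytes of input (with "=" padding):
// every group of up to 3 bytes becomes 4 characters.
export function base64Length(byteLength: number): number {
  return 4 * Math.ceil(byteLength / 3);
}

// Contain-style fit: scale the image down so it fits inside `box`, keeping its
// aspect ratio. Images that already fit keep scale 1 (they are never enlarged).
export function fitToBox(image: Size, box: Size): Size & { scale: number } {
  let scale = 1;
  if (image.width > box.width || image.height > box.height) {
    // The tighter of the two ratios keeps both sides inside the box
    scale = Math.min(box.width / image.width, box.height / image.height);
  }
  return { width: image.width * scale, height: image.height * scale, scale };
}
```

Take a 3000×4000 photo and a 375×700 display area. The scale is min(375/3000, 700/4000) = 0.125, which gives a 375×500 canvas. That canvas keeps the photo's 3:4 proportions, so the drawn image fills it without blank margins.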

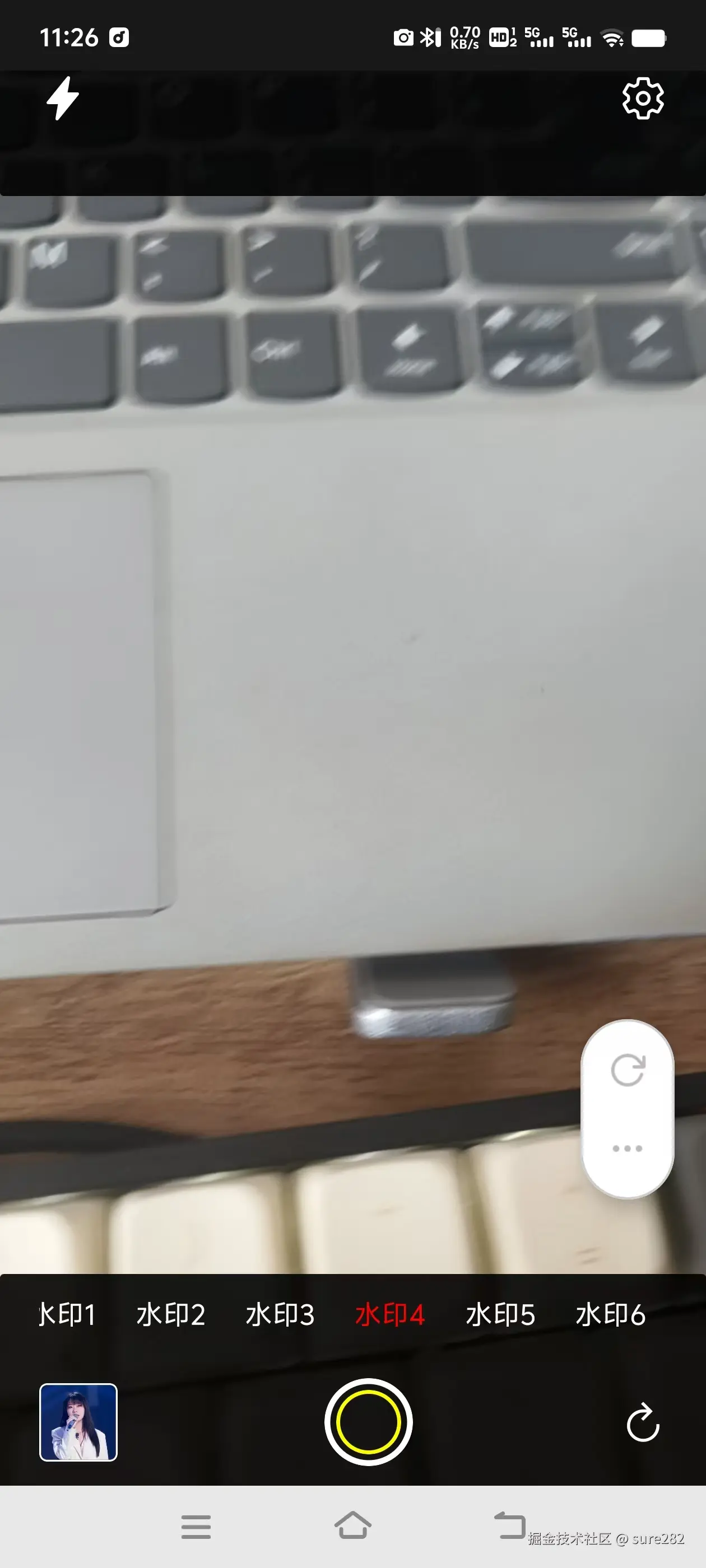

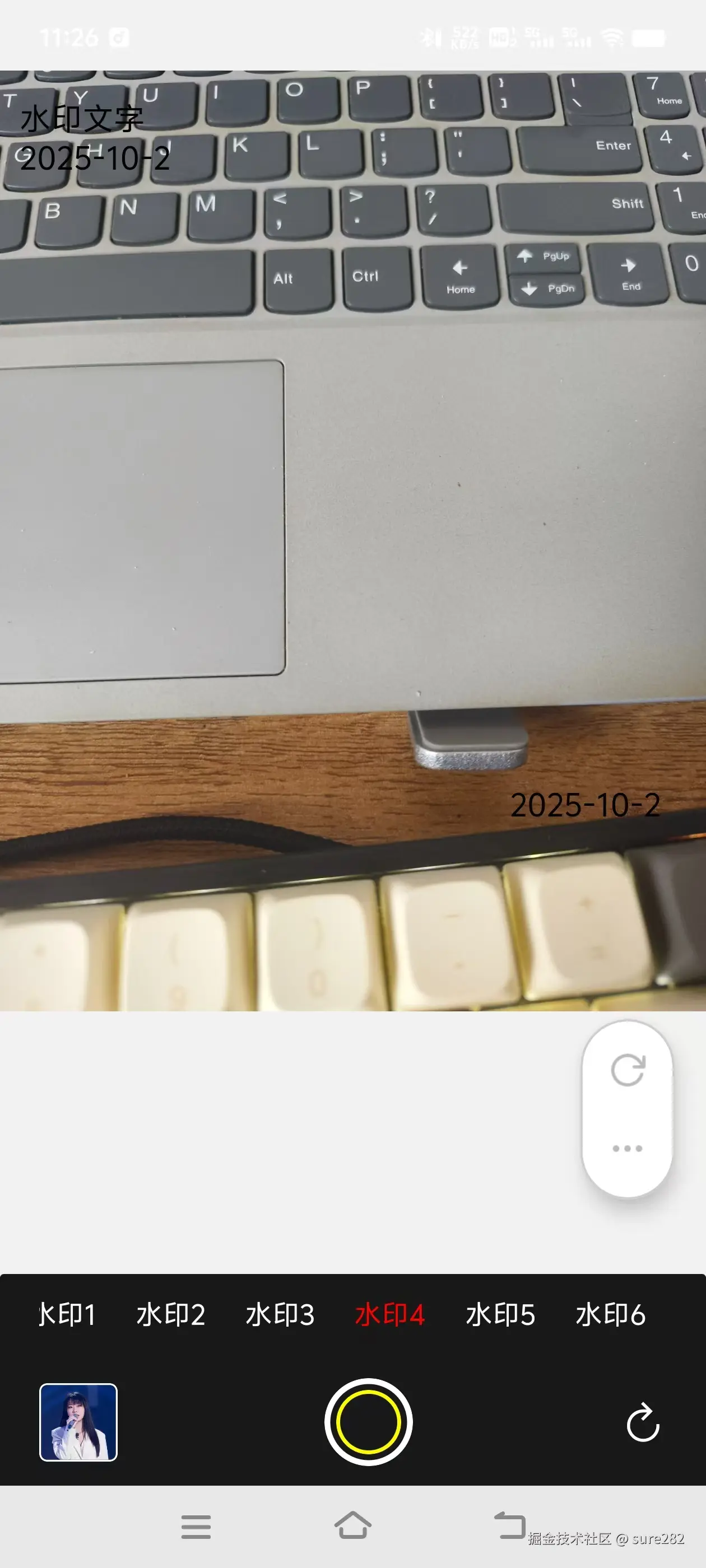

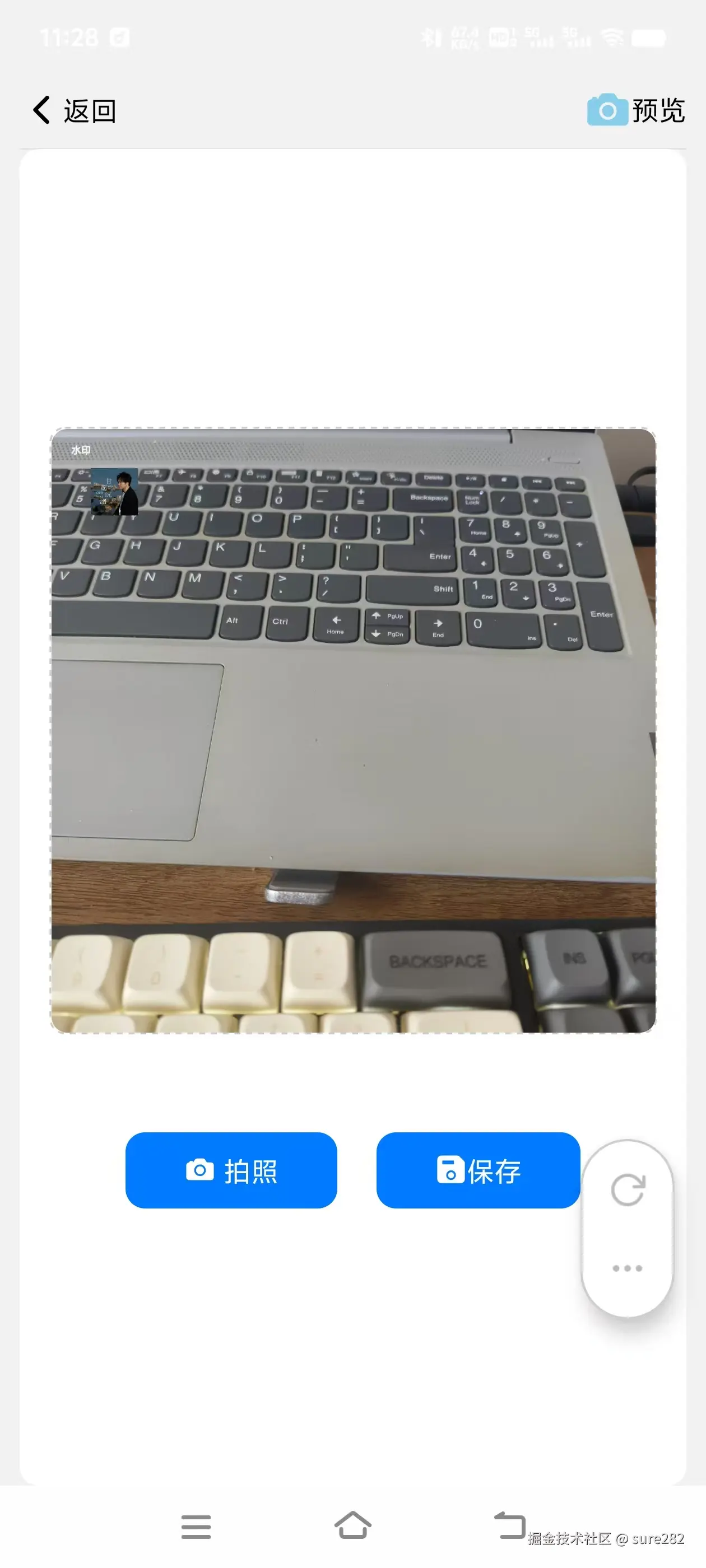

基本效果图:

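Approach 2: the react-native-photo-manipulator library

The project was created with Expo, and this library contains native code, so it cannot run in Expo Go. Debugging requires an [Expo development build](Introduction to development builds - Expo Documentation).

For simplicity, this approach opens the system camera directly with `launchCameraAsync` from expo-image-picker. [react-native-photo-manipulator](github.com/guhungry/re...) mainly provides cropping, image quality adjustment, and text (`text`) and image (`overlay`) watermarks.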

tsx

import React, { useState, type FC } from "react";

import { View, Text, StyleSheet, TouchableOpacity, useWindowDimensions, Alert, type ViewStyle } from "react-native";

import Animated from "react-native-reanimated";

import { Ionicons, AntDesign } from "@expo/vector-icons";

import { useApperance } from "@/components/music/musicplayer/hooks";

import { Image } from 'expo-image';

import RNPhotoManipulator, { type PhotoBatchOperations } from 'react-native-photo-manipulator';

import { requestCameraPermissionsAsync, launchCameraAsync, type ImagePickerAsset } from 'expo-image-picker';

import { type WaterPrintModel } from "./types";

import { saveToLibraryAsync } from 'expo-media-library';

// 引入项目中assets文件夹下图片作为添加到图片上的overlay

const singer4 = require('@/assets/images/singer/singer4.png');

type props = {

style?: ViewStyle

currentModel: WaterPrintModel

};

const TakePhoto: FC<props> = ({ currentModel, style }) => {

const [watermarkedImageUri, setWatermarkedImageUri] = useState('');

const { aniStyle } = useApperance(currentModel, 'takePhoto');

const { width } = useWindowDimensions();

/** 1. 获取相机权限 (expo 会自动处理权限申请)*/

const requestCameraPermission = async () => {

const { status } = await requestCameraPermissionsAsync();

return status === 'granted';

};

/**

* 调用相机进行拍照

*/

const takePhoto = async () => {

/**1.检查是否具有相机的使用权限 */

const hasPermission = await requestCameraPermission();

if (!hasPermission) {

alert('请允许相机权限');

return;

};

/** 2. 调用相机拍照 该方式将调用相机,不能使用相册中选择的图片 */

const { canceled, assets } = await launchCameraAsync({

mediaTypes: 'images',

allowsEditing: false,

// aspect: [4, 3],//宽高比

quality: 1,

base64: false,//是否返回base64编码的图片数据,uri更高效

exif: true,//是否返回exif数据

});

if (canceled) {

return;

};

if (assets && assets.length > 0) {

await addWaterMark(assets[0]);

};

};

/**

* 拍照后添加水印

* @param imageUri 拍照后的图片uri

*/

const addWaterMark = async (imageData: ImagePickerAsset) => {

try {

/**

* 为图片批量添加文字水印

* 可以添加多个文字并指定位置和样式

*/

// const text = [

// { position: { x: 100, y: 50 }, text: "Text 1", textSize: 30, color: "#000000" },

// { position: { x: 100, y: 100 }, text: "Text 1", textSize: 30, color: "#FFFFFF", thickness: 3 }

// ];

// /**创建成功后得到的图片uri */

// const manipulatedResult = await RNPhotoManipulator.printText(imageData.uri, text);

// console.log('manipulatedResult', manipulatedResult);

// setWatermarkedImageUri(manipulatedResult);

/**

* 可以使用catch批量操作

* 比如对图像裁剪,调整大小和执行其他操作,比如添加

* 图片覆盖,水印,文字,等,

* 批量操作裁剪参数必传,目前裁剪为原图大小

*/

const { uri, width, height } = imageData;

const cropRegion = { x: 0, y: 0, width, height };

/**这里添加类型标注避免报错 */

const operations: PhotoBatchOperations[] = [

{ operation: 'text', options: { position: { x: 100, y: 100 }, text: '水印', textSize: 50, color: '#fff', thickness: 3 } },

{ operation: 'overlay', overlay: singer4, position: { x: 200, y: 200 } }

];

const path = await RNPhotoManipulator.batch(uri, operations, cropRegion);

setWatermarkedImageUri(path);

} catch (error) {

alert('添加水印失败');

}

};

/**

* 保存添加水印的图片

* 使用

*/

const saveImage = async () => {

if (!watermarkedImageUri) {

return;

};

try {

Alert.alert('提示', '确定保存到相册吗?', [

{

text: '取消',

style: 'cancel'

},

{

text: '确定',

onPress: async () => {

if (!watermarkedImageUri) {

alert('请先拍照');

return;

}

await saveToLibraryAsync(watermarkedImageUri);

alert('保存成功');

}

}

]);

} catch (error) {

alert('保存失败');

}

};

return (

<Animated.View

style={[styles.container, style, aniStyle]}

>

<View

style={[styles.box, { width: width - 50, height: width - 50 }]}

>

{watermarkedImageUri ? (<Image

source={{ uri: watermarkedImageUri }}

contentFit="contain"

style={styles.image}

/>) : (<AntDesign

name="picture"

size={100}

color="skyblue"

/>)}

</View>

<View style={{ flexDirection: 'row', gap: 20 }}>

<TouchableOpacity

style={styles.btn}

onPress={takePhoto}

>

<Text

style={styles.text}

>

<Ionicons

name="camera"

size={16}

color="white"

/> 拍照</Text>

</TouchableOpacity>

<TouchableOpacity

style={[styles.btn, { display: watermarkedImageUri ? 'flex' : 'none' }]}

onPress={saveImage}

>

<Text

style={styles.text}

>

<Ionicons

name="save"

size={16}

color="white"

/>

保存</Text>

</TouchableOpacity>

</View>

</Animated.View>

)

};

const styles = StyleSheet.create({

container: {

flex: 1,

backgroundColor: '#fff',

alignItems: 'center',

justifyContent: 'center',

gap: 50,

borderRadius: 10,

},

box: {

borderWidth: 1,

borderColor: '#ccc',

borderStyle: 'dashed',

borderRadius: 8,

alignItems: 'center',

justifyContent: 'center',

},

btn: {

paddingVertical: 10,

paddingHorizontal: 30,

backgroundColor: '#007AFF',

borderRadius: 10,

},

text: {

color: '#fff'

},

image: {

borderRadius: 8,

width: '100%',

height: '100%',

/**

* expo-image 的resizeMode 属性 已废弃,

* 使用contentFit 代替,且直接将该属性配置到Image组件上

* */

// resizeMode: 'contain', // 保持图片比例

},

})

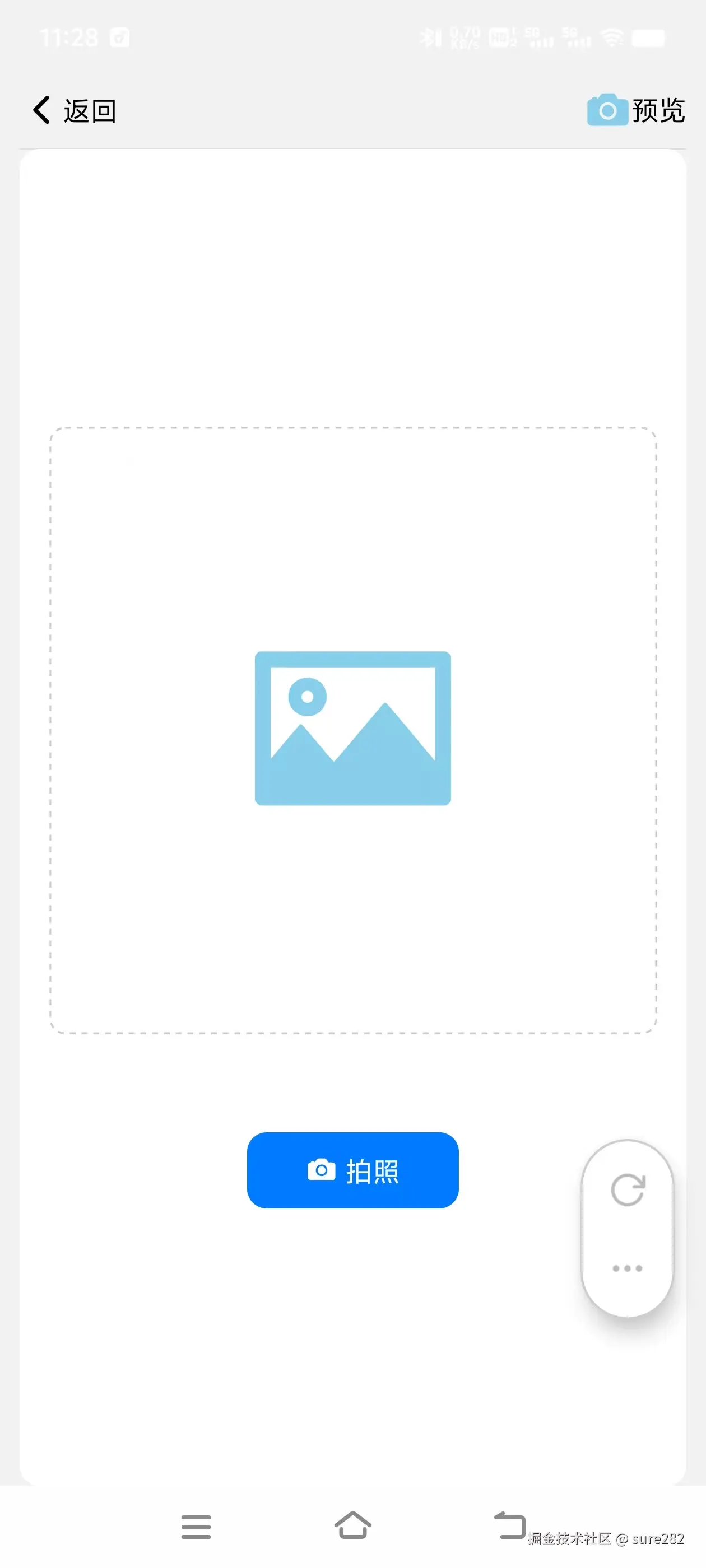

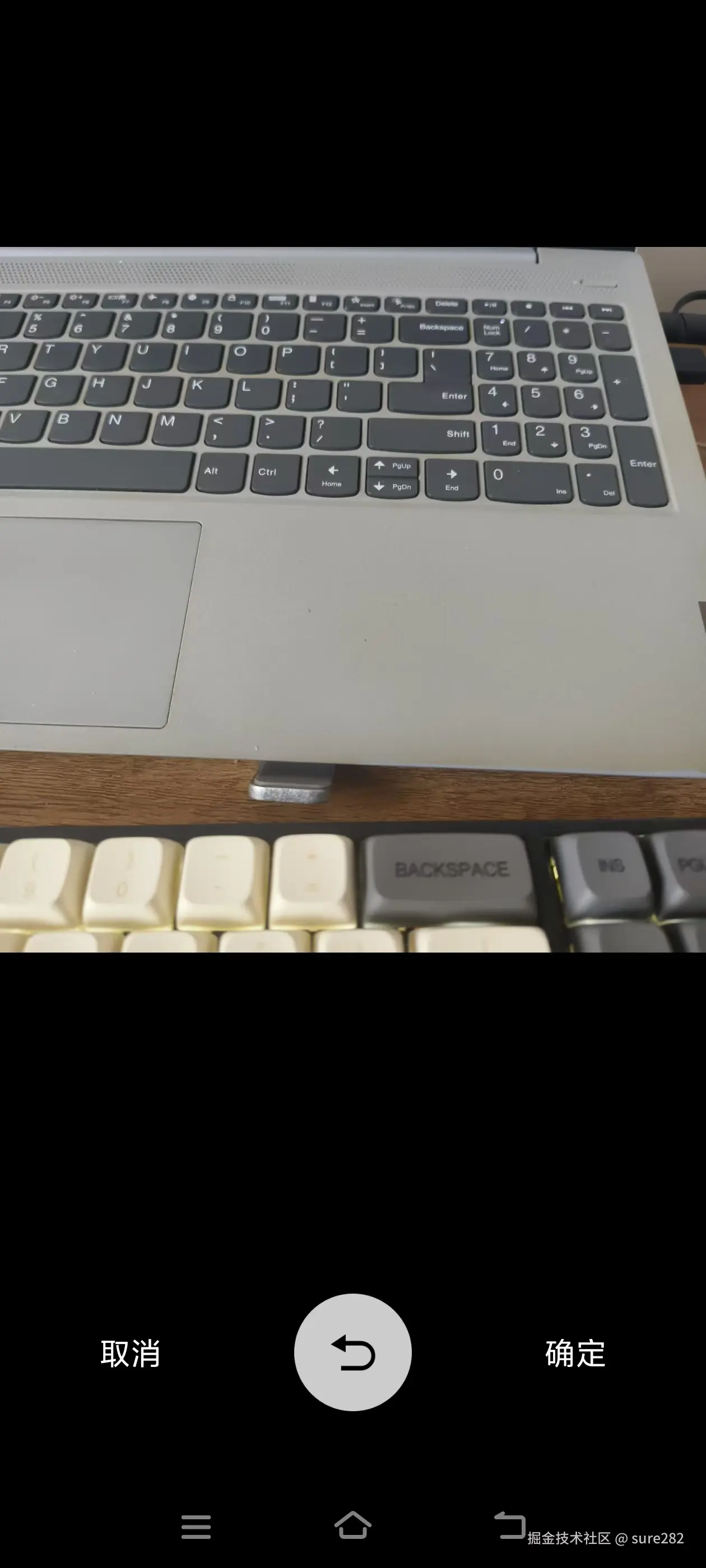

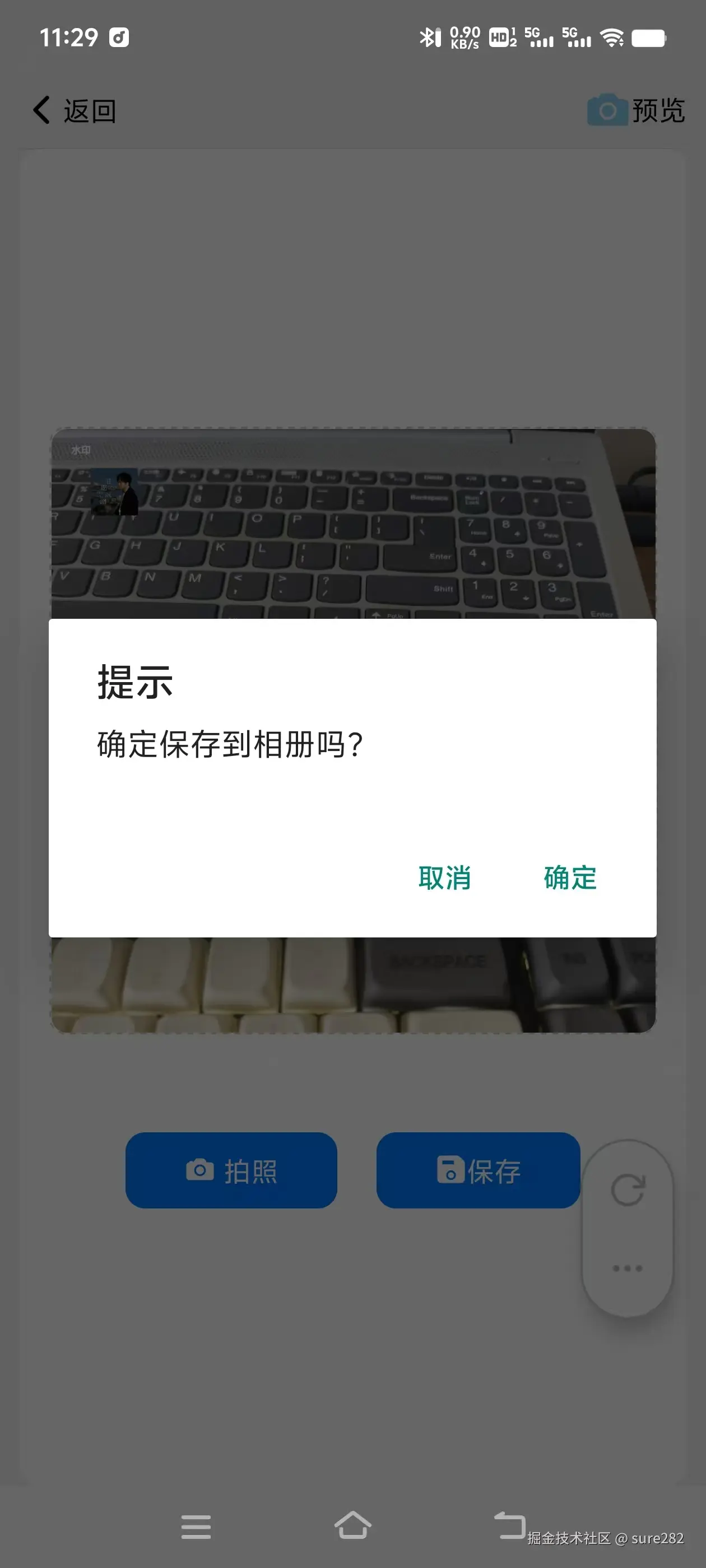

export default TakePhoto;基本效果图:

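- The library works on the photo's `uri` directly, which avoids converting the captured image to base64 before loading it.

- As a result, processing is noticeably faster than in Approach 1.

- When adding content with the `batch` method, give the operations array an explicit TypeScript type (`PhotoBatchOperations[]`). Otherwise, values such as `'text'` are widened to `string` and the array fails to type-check.

- The documentation is fairly detailed.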
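The type annotation on the `operations` array is most likely needed because of TypeScript's literal widening. In an unannotated array literal, `operation: 'text'` is inferred as `string`, which no longer matches a union keyed on the literal values `'text'` and `'overlay'`. The sketch below reproduces this with locally defined stand-in types. `BatchOp`, `TextOp` and `OverlayOp` are hypothetical and only mirror the shape used in the code above; they are not react-native-photo-manipulator's own declarations.

```typescript
type Point = { x: number; y: number };

// Stand-in types mirroring the operation objects used with batch() above
type TextOp = {
  operation: "text";
  options: { position: Point; text: string; textSize: number; color: string; thickness?: number };
};
// In React Native, require() of a bundled image returns a numeric asset id
type OverlayOp = { operation: "overlay"; overlay: number; position: Point };
type BatchOp = TextOp | OverlayOp;

// Stand-in for an API that only accepts the union type
export function countTextOps(ops: BatchOp[]): number {
  return ops.filter((op) => op.operation === "text").length;
}

// Without an annotation, `operation` is inferred as `string`, so this would not compile:
// const untyped = [{ operation: "text", options: { ... } }];
// countTextOps(untyped); // error: type 'string' is not assignable to '"text" | "overlay"'

// Fix 1: annotate the array, so "text" / "overlay" keep their literal types
export const ops: BatchOp[] = [
  { operation: "text", options: { position: { x: 100, y: 100 }, text: "Watermark", textSize: 50, color: "#fff", thickness: 3 } },
  { operation: "overlay", overlay: 1, position: { x: 200, y: 200 } },
];

// Fix 2: mark the discriminant itself with `as const`
export const opsAsConst = [
  { operation: "text" as const, options: { position: { x: 10, y: 10 }, text: "2025-10-2", textSize: 30, color: "#000" } },
];
```

Annotating the whole array, as the article does, is the simpler option when several operations are mixed. `as const` is handy for one-off literals.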

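Summary

Both approaches can produce watermarked photos. The second is clearly the better choice, since watermarking is one of react-native-photo-manipulator's core features. The code above is a basic implementation that you can extend. It has only been tested on Android, not on iOS, so adapt it to each platform as needed.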
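One possible extension concerns the `text` operation in Approach 2. It uses fixed pixel values (`position: { x: 100, y: 100 }`, `textSize: 50`), but the photo's pixel size varies between devices, so the same values look very different on different cameras. Below is a minimal sketch that derives text size and positions from the image dimensions instead. `layoutBottomLeft` is a hypothetical helper, and the 3% size ratio and 1.4 line-height factor are arbitrary defaults. It also assumes `position` marks the top-left corner of the text, which should be checked against how the library actually renders text.

```typescript
type Point = { x: number; y: number };

// Same shape as the options of the 'text' operation used in the code above
export interface TextWatermark {
  position: Point;
  text: string;
  textSize: number;
  color: string;
  thickness?: number;
}

// Stack `lines` in the bottom-left corner, sized relative to the image width.
// Assumption: `position` is the top-left corner of each text line.
export function layoutBottomLeft(
  imageWidth: number,
  imageHeight: number,
  lines: string[],
  sizeRatio = 0.03, // text size as a fraction of image width (arbitrary default)
  color = "#FFFFFF",
): TextWatermark[] {
  const textSize = Math.round(imageWidth * sizeRatio);
  const lineHeight = Math.round(textSize * 1.4);
  const margin = textSize; // padding from the image edges
  const lastLineY = imageHeight - margin - textSize; // top of the bottom-most line
  return lines.map((text, i) => ({
    // Earlier lines sit higher, one lineHeight per remaining line
    position: { x: margin, y: lastLineY - (lines.length - 1 - i) * lineHeight },
    text,
    textSize,
    color,
    thickness: Math.max(1, Math.round(textSize / 20)),
  }));
}
```

Each returned object could be wrapped as `{ operation: 'text', options }` and passed to `batch` together with `imageData.width` and `imageData.height`.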