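LLMs / SLMs: Translation and Commentary on "Small Language Models are the Future of Agentic AI"

Overview: The paper argues that the agentic AI field's current over-reliance on large language models (LLMs) misallocates resources. Its central claim is that small language models (SLMs) are already capable enough to handle a large share of the specialized, repetitive tasks inside agents. SLMs also hold decisive advantages in cost, efficiency, flexibility, and ease of deployment. The paper therefore urges the industry to move away from today's "LLM-centric" status quo. It proposes instead "SLM-first" heterogeneous agent architectures, in which multiple specialized SLMs work alongside general-purpose LLMs. To support this shift, the paper rebuts the main counterarguments, identifies current practical barriers, and outlines a six-step LLM-to-SLM conversion algorithm. It concludes that SLMs are the future of agentic AI (Agentic AI) and represent a more economical, efficient, sustainable, and democratized path forward.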

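>> Background and pain points

● LLM-centric dominance and high costs: Most modern AI agents (Agentic AI) are built around large language models (LLMs). The industry has settled into an operating model that relies on a handful of generalist LLM API endpoints. It has also committed substantial capital, estimated at USD 57bn, to the centralized cloud infrastructure that hosts them. The model is powerful, but running and invoking it is expensive.

● Resource misallocation and inefficiency: The paper observes that most subtasks an agent performs are repetitive, narrowly scoped, and non-conversational. Using a large generalist LLM for these comparatively simple, specialized tasks misallocates compute. This is economically inefficient and, at scale, environmentally unsustainable.

● Industry inertia and limited development: The LLM-centric model is now deeply entrenched and has created strong industry inertia. Innovation and tooling revolve around large, centralized models, while potentially better alternatives are overlooked. This limits the diversity and accessibility of AI agent technology.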

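>> Proposed solution

● Move to SLM-first architectures: The paper's core proposal is to shift agent design from "LLM-centric" to "SLM-first". SLMs are used by default for most routine, specialized tasks. An LLM is invoked only in the minority of cases that genuinely require broad generality, complex reasoning, or open-domain conversation.

● Adopt heterogeneous agentic systems: The paper advocates "heterogeneous" systems built from several different models. In such a modular system, multiple lightweight, efficient, specialized SLMs work together with one or more generalist LLMs. The system routes each request to the most suitable model according to the task's complexity and type. That is either the cheapest capable SLM or the most capable LLM, balancing capability against cost.

● Provide an LLM-to-SLM conversion path: Beyond the concept, the paper outlines a concrete "LLM-to-SLM agent conversion algorithm". It helps developers migrate existing LLM-based agent applications to SLM-based architectures step by step, which lowers the barrier to adoption.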
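The routing idea in the bullets above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation. The class name `SLMFirstRouter`, the task labels, and the `accept` check are hypothetical. The task label is assumed to be supplied by the agent's controller code rather than inferred from the prompt.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Assumption: each model is wrapped as a plain callable prompt -> text.
# In practice these would call a locally served SLM or a remote LLM API.
ModelFn = Callable[[str], str]


@dataclass
class SLMFirstRouter:
    """Hypothetical SLM-first router for a heterogeneous agent.

    Requests whose task label has a registered specialist SLM go to that SLM.
    Everything else, and any SLM output the acceptance check rejects, is
    escalated to the generalist LLM.
    """
    specialists: Dict[str, ModelFn]  # task label -> specialist SLM
    generalist: ModelFn              # fallback generalist LLM
    accept: Callable[[str, str], bool] = lambda task, output: True

    def route(self, task: str, prompt: str) -> Tuple[str, str]:
        slm = self.specialists.get(task)
        if slm is not None:
            output = slm(prompt)
            if self.accept(task, output):
                return f"slm:{task}", output
        # No specialist for this task, or its output was rejected: use the LLM.
        return "llm", self.generalist(prompt)


if __name__ == "__main__":
    router = SLMFirstRouter(
        specialists={"extract_date": lambda p: "2025-06-02"},
        generalist=lambda p: "(answer from the generalist LLM)",
        accept=lambda task, output: output != "",
    )
    print(router.route("extract_date", "When was V1 of the paper posted?"))
    # -> ('slm:extract_date', '2025-06-02')
    print(router.route("open_chat", "What do you think about SLMs?"))
    # -> ('llm', '(answer from the generalist LLM)')
```

Escalating on a failed acceptance check keeps the LLM as a safety net. That lets SLM specialists be introduced one task at a time.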

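>> Core steps: The paper proposes a six-step algorithm for converting an agent from LLMs to SLMs:

● Step 1: Secure usage data collection: Instrument the existing agent system to log all non-HCI (non-human-computer-interaction) model calls. The logs capture input prompts, output responses, and the contents of tool calls, and optionally latency metrics. The logging pipeline should be encrypted and protected by role-based access control. Data should be anonymized with respect to its origin before storage to protect user privacy.

● Step 2: Data curation and filtering: Once enough data has been collected (10k-100k examples is the paper's rule of thumb for fine-tuning small models), clean it. Remove personally identifiable information (PII), protected health information (PHI), and other application-specific sensitive data. The goal is a high-quality, safe training dataset that cannot leak data across user accounts once it is used to train an SLM specialist.

● Step 3: Task clustering: Apply unsupervised clustering to the collected prompts and agent actions to identify recurring request patterns or internal operations. The clusters show which tasks are standardized and repetitive, and therefore candidates for SLM specialization.

● Step 4: SLM selection: For each task cluster, choose one or more candidate SLMs. The selection criteria are the model's capabilities (e.g., instruction following, reasoning, context window size), its benchmark performance for the task type, its license, and its deployment footprint (memory and compute).

● Step 5: Specialized SLM fine-tuning: Fine-tune the selected SLMs on task-specific datasets built from the data curated in Step 2 and clustered in Step 3. Parameter-efficient fine-tuning (PEFT, e.g., LoRA or QLoRA) reduces compute and memory costs, and full fine-tuning is an option when resources permit. Knowledge distillation can also help: the SLM is trained to mimic the LLM's outputs on the task, which transfers some of the LLM's more nuanced capabilities at low cost.

● Step 6: Iteration and refinement: This is a continuous improvement loop. Periodically retrain the SLMs and the router model on newly collected data to maintain performance and adapt to changing usage patterns. Return to Step 2 or Step 4 as appropriate.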
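Step 5 cites LoRA as a way to keep fine-tuning cheap, and the standard LoRA parameterization shows why. The pretrained weight $W_0$ stays frozen, and only a low-rank update $BA$ (scaled by $\alpha / r$) is trained. The dimensions in the second line ($d = k = 4096$, $r = 8$) are illustrative assumptions, not values from the paper.

```latex
\begin{aligned}
h &= W_0 x + \frac{\alpha}{r}\, B A x, \quad
W_0 \in \mathbb{R}^{d \times k},\ B \in \mathbb{R}^{d \times r},\ A \in \mathbb{R}^{r \times k},\ r \ll \min(d, k) \\
\frac{\text{trainable}}{\text{full}} &= \frac{r(d + k)}{dk}
= \frac{8 \cdot (4096 + 4096)}{4096 \cdot 4096}
= \frac{65\,536}{16\,777\,216} = \frac{1}{256} \approx 0.39\%
\end{aligned}
```

Under these assumed sizes, each adapted weight matrix trains about 0.4% of its parameters. This is one reason SLM fine-tuning can fit into a few GPU-hours.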

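>> Advantages

● Sufficiently powerful: The paper cites modern SLMs such as Microsoft's Phi series, NVIDIA Nemotron-H, and SmolLM2. It argues that well-designed, well-trained SLMs already match, and sometimes exceed, previous-generation LLMs many times their size on key capabilities. These include commonsense reasoning, code generation, tool calling, and instruction following.

● More economical: According to the paper, serving a 7B SLM is 10-30x cheaper than serving a 70-175B LLM in latency, energy consumption, and FLOPs. Fine-tuning an SLM takes only a few GPU-hours, so behaviors can be added, fixed, or specialized quickly. SLMs are also easier to deploy at the edge (e.g., running locally on consumer GPUs), which reduces dependence on expensive cloud infrastructure.

● More flexible: Their small size makes SLMs operationally agile. Developers can train and deploy multiple specialized "expert" models for different tasks. They can also adapt quickly to market changes, new output-format requirements, or regional regulations. This flexibility also democratizes AI development, letting more individuals and small organizations take part in building models.

● Better fit for agents:

* Functional fit: Agents typically use only a small fraction of an LLM's broad capabilities, and an SLM fine-tuned for the task is sufficient.

* Behavioral alignment: Agents that interact with code require strict output formats. Fine-tuning lets an SLM follow such formats more easily and reliably, reducing hallucinated formatting errors.

* System compatibility: Agentic systems are naturally modular and heterogeneous, so they can readily integrate several models of different sizes.

* Data flywheel: An agent's interactions with its tools are themselves a source of high-quality fine-tuning data. This gives a natural path to continuously improving existing SLM specialists and creating new ones.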
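The behavioral-alignment point can be made concrete with a format check that controller code might apply to every tool call a model emits. This is a hypothetical sketch. The two-field schema (`tool`, `arguments`) and the function names are assumptions, and a real agent would check each call against its tool's own schema.

```python
import json
from typing import Any, Dict, List, Optional

# Hypothetical tool-call schema: the controller expects exactly these keys
# with these types. Real schemas would be tool-specific.
REQUIRED_FIELDS = {"tool": str, "arguments": dict}


def parse_tool_call(raw: str) -> Optional[Dict[str, Any]]:
    """Return the parsed tool call if `raw` matches the expected format,
    otherwise None (the caller can then retry or escalate to an LLM)."""
    try:
        obj = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(obj, dict) or set(obj) != set(REQUIRED_FIELDS):
        return None
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(obj[key], expected_type):
            return None
    return obj


def format_compliance(outputs: List[str]) -> float:
    """Fraction of model outputs that parse as valid tool calls."""
    if not outputs:
        return 0.0
    return sum(parse_tool_call(o) is not None for o in outputs) / len(outputs)


if __name__ == "__main__":
    samples = [
        '{"tool": "search", "arguments": {"q": "SLM"}}',  # valid
        'Sure! Here is the call: {"tool": "search"}',     # prose around JSON
        '{"tool": "search"}',                             # missing key
        '{"tool": 3, "arguments": {}}',                   # wrong type
    ]
    print(format_compliance(samples))  # -> 0.25
```

One straightforward way to compare a fine-tuned SLM with the LLM it replaces on this dimension is to measure this compliance rate on held-out logged prompts.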

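>> Conclusions and viewpoints (focusing on lessons and recommendations)

● The paradigm shift is necessary: The paper firmly argues that moving from LLM-centric to SLM-first design is not just a technical optimization but an inevitable trend. As the AI industry pays increasing attention to cost and sustainability, adopting SLMs is a key step toward responsible AI deployment.

● Acknowledging and rebutting opposing views: The paper systematically discusses counterarguments. One is the "LLM generality advantage", which holds that LLMs' stronger general language understanding makes them better on any task. Another is the "economies of scale" argument, which holds that centralized LLM inference is cheaper because of scale. The paper rebuts each in turn. For example, it argues that architectural innovation and inference-time compute scaling can offset SLMs' smaller size. It also argues that advances in inference scheduling are eroding the cost advantage of centralized deployment.

● Identifying practical barriers: The paper names three main obstacles to wider SLM adoption:
1) heavy upfront investment in centralized LLM infrastructure has created industry inertia;
2) SLM development and evaluation still rely on generalist benchmarks designed for LLMs, which fail to highlight SLMs' strengths on specialized tasks;
3) public and market awareness of SLMs lags far behind that of LLMs.

● A concrete migration path: Rather than stopping at advocacy, the paper provides a six-step LLM-to-SLM conversion algorithm. It gives companies and developers a clear, actionable guide to migrating their architectures incrementally and with low risk.

● Case studies: The paper studies three open-source agents: MetaGPT, Open Operator, and Cradle. It estimates that roughly 60%, 40%, and 70% of their LLM calls, respectively, could be handled by SLMs. These estimates give concrete evidence of the replacement potential.

● Call for discussion: The paper closes by inviting the AI community to discuss the effective use of AI resources and ways to lower AI costs. It commits to publishing all contributions and critiques it receives, to move the field forward together.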

Contents

Translation and Commentary on "Small Language Models are the Future of Agentic AI"

Figure 1: An illustration of agentic systems with different modes of agency

6 LLM-to-SLM Agent Conversion Algorithm

7 Call for Discussion

Translation and Commentary on "Small Language Models are the Future of Agentic AI"

| Item | Details |
|---|---|
| URL | https://arxiv.org/abs/2506.02153 |
| Date | V1: June 2, 2025; V2: September 15, 2025 |
| Authors | NVIDIA Research |

Abstract

Large language models (LLMs) are often praised for exhibiting near-human performance on a wide range of tasks and valued for their ability to hold a general conversation. The rise of agentic AI systems is, however, ushering in a mass of applications in which language models perform a small number of specialized tasks repetitively and with little variation.

Here we lay out the position that small language models (SLMs) are sufficiently powerful, inherently more suitable, and necessarily more economical for many invocations in agentic systems, and are therefore the future of agentic AI. Our argumentation is grounded in the current level of capabilities exhibited by SLMs, the common architectures of agentic systems, and the economy of LM deployment. We further argue that in situations where general-purpose conversational abilities are essential, heterogeneous agentic systems (i.e., agents invoking multiple different models) are the natural choice. We discuss the potential barriers for the adoption of SLMs in agentic systems and outline a general LLM-to-SLM agent conversion algorithm.

Our position, formulated as a value statement, highlights the significance of the operational and economic impact that even a partial shift from LLMs to SLMs would have on the AI agent industry. We aim to stimulate the discussion on the effective use of AI resources and hope to advance the efforts to lower the costs of AI of the present day. Calling for both contributions to and critique of our position, we commit to publishing all such correspondence at this https URL.

1 Introduction

The deployment of agentic artificial intelligence is on a meteoric rise. Recent surveys show that more than half of large IT enterprises are actively using AI agents, with 21% having adopted them just within the last year [14]. Aside from the users, markets also see substantial economic value in AI agents. As of late 2024, the agentic AI sector had seen more than USD 2bn in startup funding, was valued at USD 5.2bn, and was expected to grow to nearly USD 200bn by 2034 [46, 51]. Put plainly, there is a growing expectation that AI agents will play a substantial role in the modern economy.

The core components powering most modern AI agents are (very) large language models [52, 48]. It is the LLMs that provide the foundational intelligence that enables agents to make strategic decisions about when and how to use available tools and to control the flow of operations needed to complete tasks. If necessary, LLMs also break down complex tasks into manageable subtasks and perform reasoning for action planning and problem-solving [52, 17]. A typical AI agent then simply communicates with a chosen LLM API endpoint by making requests to centralized cloud infrastructure that hosts these models [52].

LLM API endpoints are specifically designed to serve a large volume of diverse requests using one generalist LLM. This operational model is deeply ingrained in the industry. In fact, it forms the foundation of substantial capital investment in the hosting cloud infrastructure, estimated at USD 57bn [16]. It is anticipated that this operational model will remain the cornerstone of the industry. The large initial investment is expected to deliver returns comparable to traditional software and internet solutions within 3-4 years [57].

In this work, we recognize the dominance of the standard operational model but verbally challenge one of its aspects. That aspect is the custom that the agents' requests to access language intelligence are, in spite of their comparative simplicity, handled by singleton choices of generalist LLMs. We state (Section 2), argue (Section 3), and defend (Section 4) the position that small, rather than large, language models are the future of agentic AI. We do, however, recognize the business commitment and the now-legacy praxis that cause the contrary state of the present (Section 5). As a remedy, we provide an outline of a conversion algorithm for the migration of agentic applications from LLMs to SLMs (Section 6), and call for a wider discussion (Section 7). To make our stance concrete, we attach a set of short case studies estimating the potential extent of LLM-to-SLM replacement in selected popular open-source agents (Appendix B).

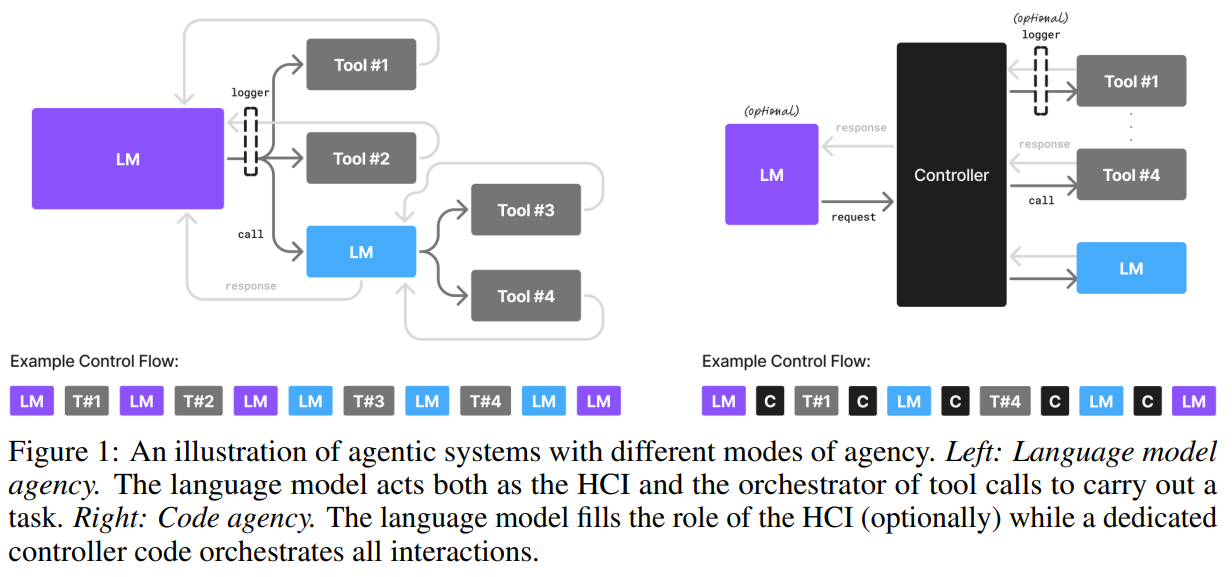

Figure 1: An illustration of agentic systems with different modes of agency. Left: Language model agency. The language model acts both as the HCI and the orchestrator of tool calls to carry out a task. Right: Code agency. The language model fills the role of the HCI (optionally) while a dedicated controller code orchestrates all interactions.
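The code-agency mode on the right of Figure 1 can be sketched as follows, together with the logger that step S1 of Section 6 relies on. Everything here is a hypothetical illustration. `CallLogger`, `controller`, the ticket categories, and the queue names are invented for the example. Encryption and access control are assumed to live in the storage layer. The raw ticket text is still logged unmasked, which is exactly what step S2's curation is meant to address.

```python
import hashlib
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class CallLogger:
    """Hypothetical logger for non-HCI model calls (step S1).

    Assumption: encryption at rest and role-based access control are provided
    by the storage layer; this sketch only pseudonymizes the caller id.
    """
    salt: str
    records: List[dict] = field(default_factory=list)

    def log(self, user_id: str, task: str, prompt: str,
            response: str, latency_s: float) -> None:
        # Salted SHA-256 so stored records are not trivially linked to users.
        pseudo_id = hashlib.sha256((self.salt + user_id).encode()).hexdigest()[:16]
        self.records.append({
            "user": pseudo_id, "task": task, "prompt": prompt,
            "response": response, "latency_s": latency_s,
        })


def controller(user_id: str, ticket: str,
               lm: Callable[[str], str], logger: CallLogger) -> Dict[str, str]:
    """Code agency: plain code fixes the control flow and calls the language
    model only for one narrow subtask (classifying a support ticket)."""
    prompt = f"Classify this ticket as billing or technical: {ticket}"
    start = time.perf_counter()
    category = lm(prompt).strip()
    logger.log(user_id, "classify_ticket", prompt, category,
               time.perf_counter() - start)
    # Tool dispatch is decided by code, not by the model.
    queues = {"billing": "finance-queue", "technical": "support-queue"}
    return {"category": category, "queue": queues.get(category, "triage-queue")}


if __name__ == "__main__":
    log = CallLogger(salt="replace-with-a-secret-salt")
    fake_slm = lambda prompt: "billing"  # stand-in for a real model endpoint
    print(controller("alice", "I was charged twice this month", fake_slm, log))
    # -> {'category': 'billing', 'queue': 'finance-queue'}
    print(log.records[0]["task"], len(log.records))
    # -> classify_ticket 1
```

Because the controller already tags each call with a task name, the logged records arrive partly pre-grouped. That makes later task clustering easier.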

6 LLM-to-SLM Agent Conversion Algorithm

The very nature of agentic applications enables them to eventually switch from using LLM generalists to using SLM specialists at many of their interfaces. In the following steps, we outline an algorithm that describes one possible way to carry out the change of the underlying model painlessly.

S1 Secure usage data collection. The initial step involves deploying instrumentation to log all non-HCI agent calls. The logs capture input prompts, output responses, contents of individual tool calls, and optionally latency metrics for later targeted optimization. In terms of implementation, the recommended practice is to set up encrypted logging pipelines with role-based access controls [55] and to anonymize all data with respect to its origins before storage [74]. See the logger in Figure 1 for an illustration.

S2 Data curation and filtering. Begin collecting data through the pipelines of step S1. As a rule of thumb, 10k-100k examples are sufficient for fine-tuning small models [5, 22]. Once a satisfactory amount of data has been collected, it is necessary to remove any PII, PHI, or other application-specific sensitive data. Such data could cause a leak across user accounts once it is used to produce an SLM specialist. Many typical varieties of sensitive data can be detected and masked or removed using popular automated tools for dataset preparation [64, 62]. Application-specific inputs (e.g., legal or internal documents) can often be automatically paraphrased to obfuscate named entities and numerical details without compromising the general information content [11, 79, 76].

S3 Task clustering. Employ unsupervised clustering techniques on the collected prompts and agent actions to identify recurring patterns of requests or internal agent operations [35, 42, 21]. These clusters help define candidate tasks for SLM specialization. The granularity of tasks will depend on the diversity of operations. Common examples include intent recognition, data extraction, summarization of specific document types, or code generation with respect to tools available to the agent.

S4 SLM selection. For each identified task, select one or more candidate SLMs. Criteria for selection include the SLM's inherent capabilities (e.g., instruction following, reasoning, context window size), its performance on relevant benchmarks for the task type, its licensing, and its deployment footprint (memory, computational requirements). Models of Section 3.2 serve as good starting candidates.

S5 Specialized SLM fine-tuning. For each selected task and corresponding SLM candidate, prepare a task-specific dataset from the curated data collected in steps S2 and S3. Then, fine-tune the chosen SLMs on these specialized datasets. PEFT techniques such as LoRA [34] or QLoRA [20] can be leveraged to reduce the computational costs and memory requirements of fine-tuning, making the process more accessible. Full fine-tuning can also be considered if resources permit and maximal adaptation is required. In some cases, it may be beneficial to use knowledge distillation, where the specialist SLM is trained to mimic the outputs of the more powerful generalist LLM on the task-specific dataset. This can help transfer some of the more nuanced capabilities of the LLM to the SLM.

S6 Iteration and refinement. One may retrain the SLMs and the router model periodically with new data to maintain performance and adapt to evolving usage patterns. This forms a continuous improvement loop, returning to step S2 or step S4 as appropriate.
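Steps S2 and S3 can be illustrated with a small, dependency-free Python sketch. It is not the paper's method. The two regular expressions stand in for the dedicated PII tooling cited in S2. Single-pass "leader" clustering on Jaccard token similarity is a deliberately simple substitute for the unsupervised clustering techniques cited in S3. The function names, the 0.5 threshold, and the sample prompts are hypothetical.

```python
import re
from typing import List, Set

# S2 (sketch): illustrative masking rules only. Production pipelines should
# rely on dedicated PII/PHI detection tools plus application-specific rules.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
NUMBER = re.compile(r"\d+")


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and digit runs with placeholder tokens."""
    text = EMAIL.sub("<EMAIL>", text)  # mask e-mails first (they may contain digits)
    return NUMBER.sub("<NUM>", text)


def tokens(text: str) -> Set[str]:
    """Lower-cased word tokens; placeholders such as <num> are kept whole."""
    return set(re.findall(r"<\w+>|\w+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def leader_cluster(prompts: List[str], threshold: float = 0.5) -> List[List[int]]:
    """S3 (sketch): single-pass 'leader' clustering of masked prompts.

    Each prompt joins the first cluster whose leader (first member) has a
    Jaccard token similarity >= threshold; otherwise it starts a new cluster.
    Returns the prompt indices in each cluster.
    """
    leaders: List[Set[str]] = []
    clusters: List[List[int]] = []
    for i, prompt in enumerate(prompts):
        toks = tokens(mask_pii(prompt))
        for leader, members in zip(leaders, clusters):
            if jaccard(toks, leader) >= threshold:
                members.append(i)
                break
        else:
            leaders.append(toks)
            clusters.append([i])
    return clusters


if __name__ == "__main__":
    logged_prompts = [
        "Extract the invoice total from invoice 1042 sent by ann@corp.com",
        "Extract the invoice total from invoice 77 sent by bob@corp.com",
        "Summarize this legal contract in three bullet points",
        "Summarize this employment contract in three bullet points",
    ]
    print(mask_pii(logged_prompts[0]))
    # -> Extract the invoice total from invoice <NUM> sent by <EMAIL>
    print(leader_cluster(logged_prompts))
    # -> [[0, 1], [2, 3]]
```

Masking before clustering has a side benefit. Prompts that differ only in numbers or addresses collapse onto the same template, which is the kind of recurring pattern S3 looks for. Each resulting cluster is then a candidate task for S4 and S5.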

7 Call for Discussion

The agentic AI industry is showing signs that it could have a transformative effect on white-collar work and beyond. It is the view of the authors that any expense savings or improvements in the sustainability of AI infrastructure would act as a catalyst for this transformation. It is thus eminently desirable to explore all options for achieving them.