Contents

- 1. GM461-E1 Camera
  - 1.1 GM461-E1 Application Scenarios
  - 1.2 GM461-E1 I/O and Data Cable Definitions
    - 1.2.1 I/O Interface Definition
    - 1.2.2 Data Interface Cable
    - 1.2.3 GM461-E1 Front-Facing Mounting Orientation
    - 1.2.4 GM461 Connection Topology
    - 1.2.5 Top and Bottom Threaded Mounting Holes
  - 1.3 GM461-E1 Performance Specifications
- 2. GM461-E1 Parameter Tuning Tips
  - 2.1 Depth Image Filter Settings
  - 2.2 How to Adjust the Left/Right IR Parameters
    - 2.2.1 Manually Adjusting Left/Right IR Exposure
    - 2.2.2 Adjusting Left/Right IR Auto Exposure
  - 2.3 Debugging the Depth Image
    - 2.3.1 Viewing Depth Values
    - 2.3.2 Adjusting the Rendering Range
    - 2.3.3 Tuning the Depth Rendering Effect
  - 2.4 Saving Images
    - 2.4.1 Save Settings
    - 2.4.2 Saving a Single Frame
    - 2.4.3 Continuous Saving
  - 2.5 Saving the GM461-E1 Parameter Configuration
  - 2.6 Additional Notes on GM461-E1 Parameters
- 3. GM461-E1 SDK
  - 3.1 C++ SDK (recommended)
    - 3.1.1 Complete Reference Example
    - 3.1.2 Notes on GM461-E1 Software Triggering
    - 3.1.3 TYSendSoftTrigger Does Not Take Effect
  - 3.2 ROS1 (recommended)
  - 3.3 ROS2 (recommended)
  - 3.4 C# SDK
  - 3.5 Python SDK
- 4. GM461-E1 FAQ
  - 4.1 How to Obtain the GM461-E1 Intrinsics
    - 4.1.1 Method 1
    - 4.1.2 Method 2
  - 4.2 GM461-E1 Intrinsics Explained
    - 4.2.1 Depth Image Intrinsics
    - 4.2.2 Color Image Intrinsics / Distortion Coefficients / Extrinsics
    - 4.2.3 Left/Right IR Intrinsics Before and After Epipolar Rectification
  - 4.3 GM461-E1 Optical Center Position
- 5. GM461-E1 Test Results
  - 5.1 GM461-E1 Frame Rate Tests
    - 5.1.1 GM461-E1 Image Output Latency
    - 5.1.2 GM461-E1 Frame Rate Test
- 6. Other Learning Resources

1.GM461-E1相机

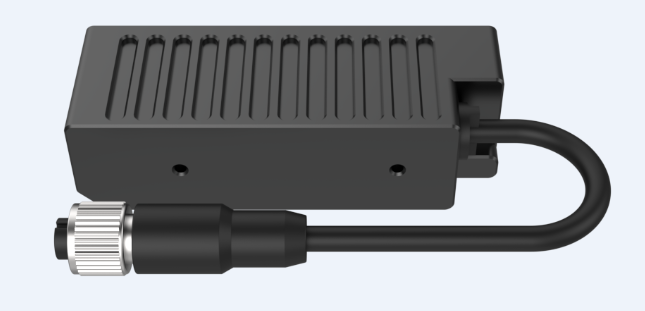

GM461-E1相机外观

1.1 GM461-E1工作场景

GM461是一款高性价比的3D工业相机新品,兼具工业级的稳定性和消费级的价格优势,产品通用性强,可广泛使用于如工业、物流、移动机器人、商业、安全、教育等多种应用场景。

1.2 GM461-E1 IO线和数据线定义

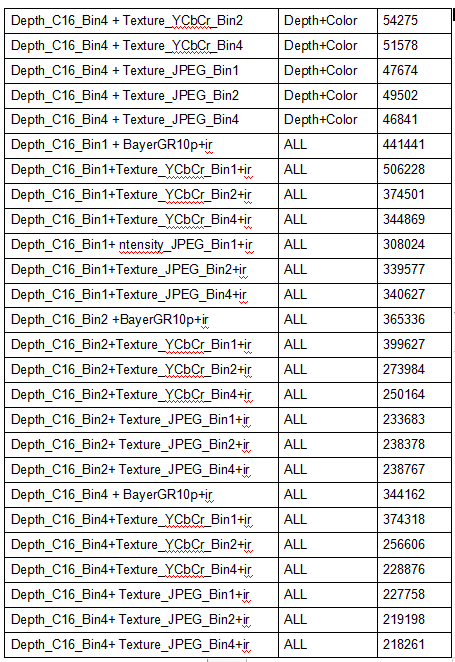

1.2.1 IO接口定义

相机的电源&数据接口线定义如下:

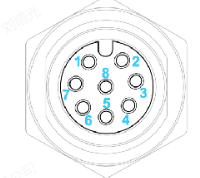

1.2.2 数据接口线

GM461-E1相机数据线如下图:

1.2.3 GM461-E1相机正面安装方向

GM461-E1相机正面安装方向如下:

从左到右依次是右IR镜头 ,散斑投射器 ,RGB相机 ,左IR镜头。

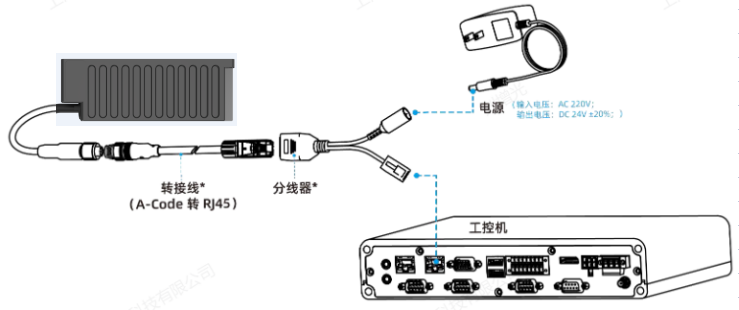

1.2.4 GM461相机连接拓扑网络

GM461相机拓扑网络如下图:

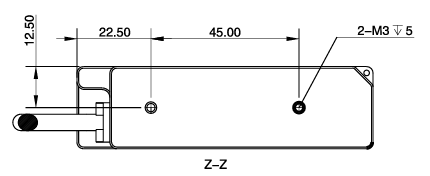

1.2.5 上下面螺纹孔安装

GM461-E1相机上下面有一组M3螺纹孔(螺纹深度5mm)

1.3 GM461-E1相机性能指标

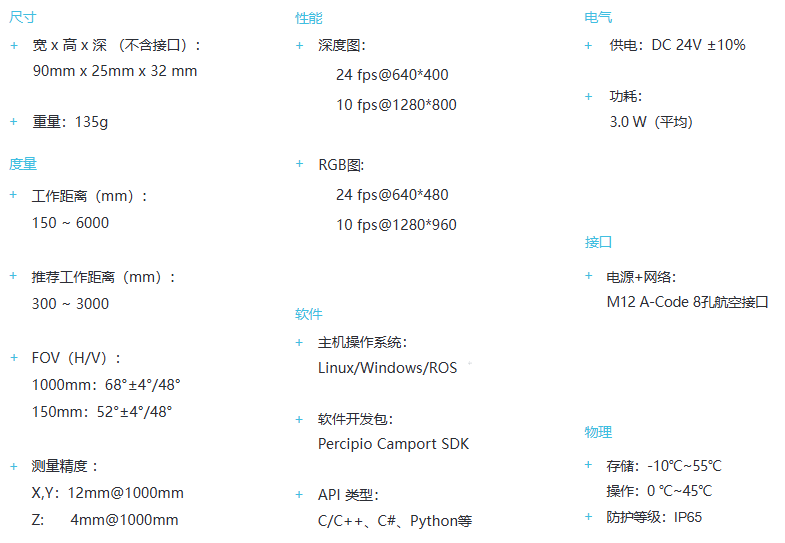

GM461-E1相机的性能指标如下图:

2.GM461-E1相机调参技巧

1.GM461-E1相机,需要搭配图漾新版本的看图软件使用,可联系图漾技术获取。

2.新的看图软件详细操作,可查看此链接:新版看图软件操作手册

2.1 深度图滤波设置

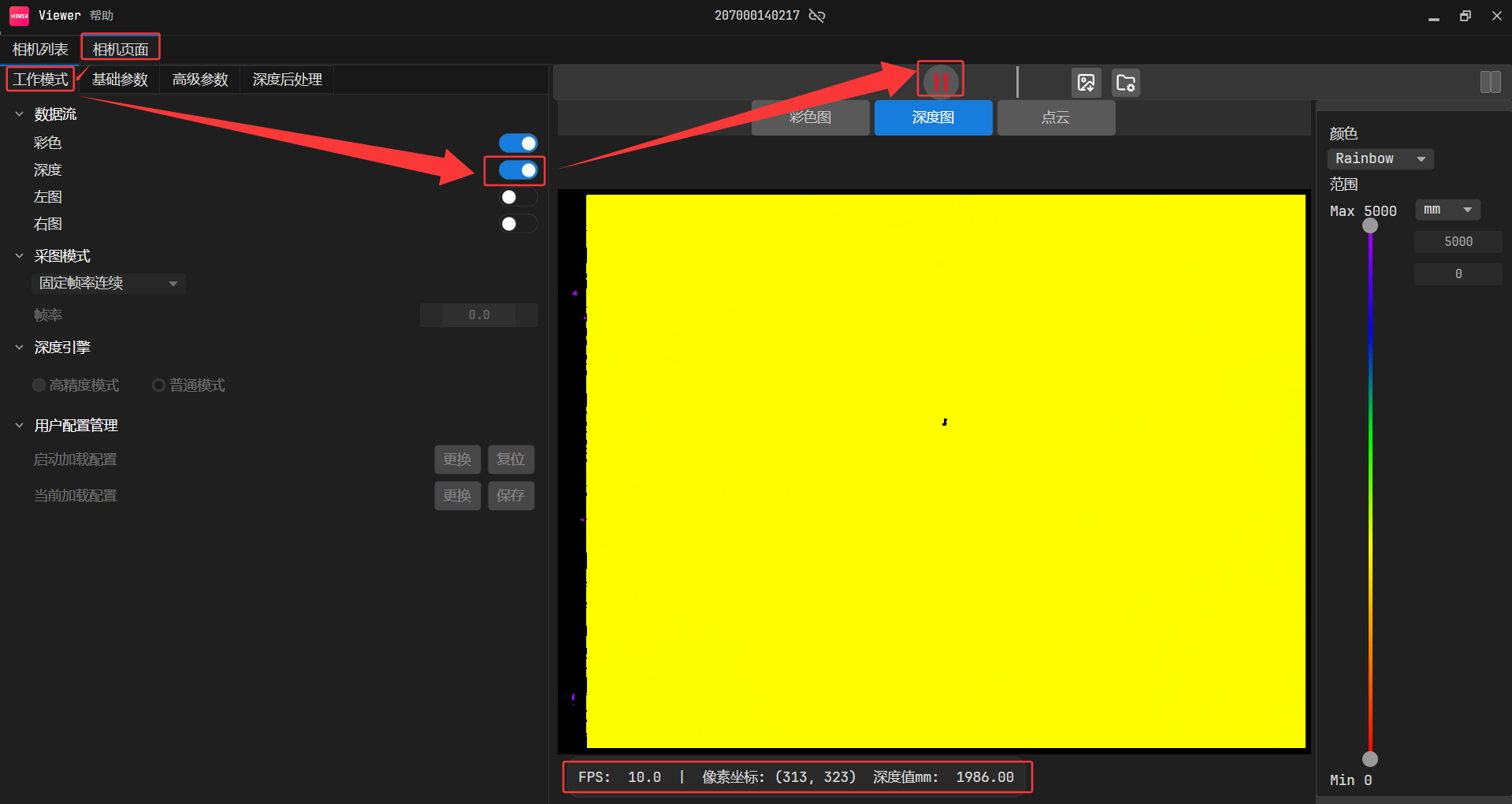

建议在开启相机取流前,打开散斑滤波 设置开关。

2.2 如何调整相机左右IR的参数?

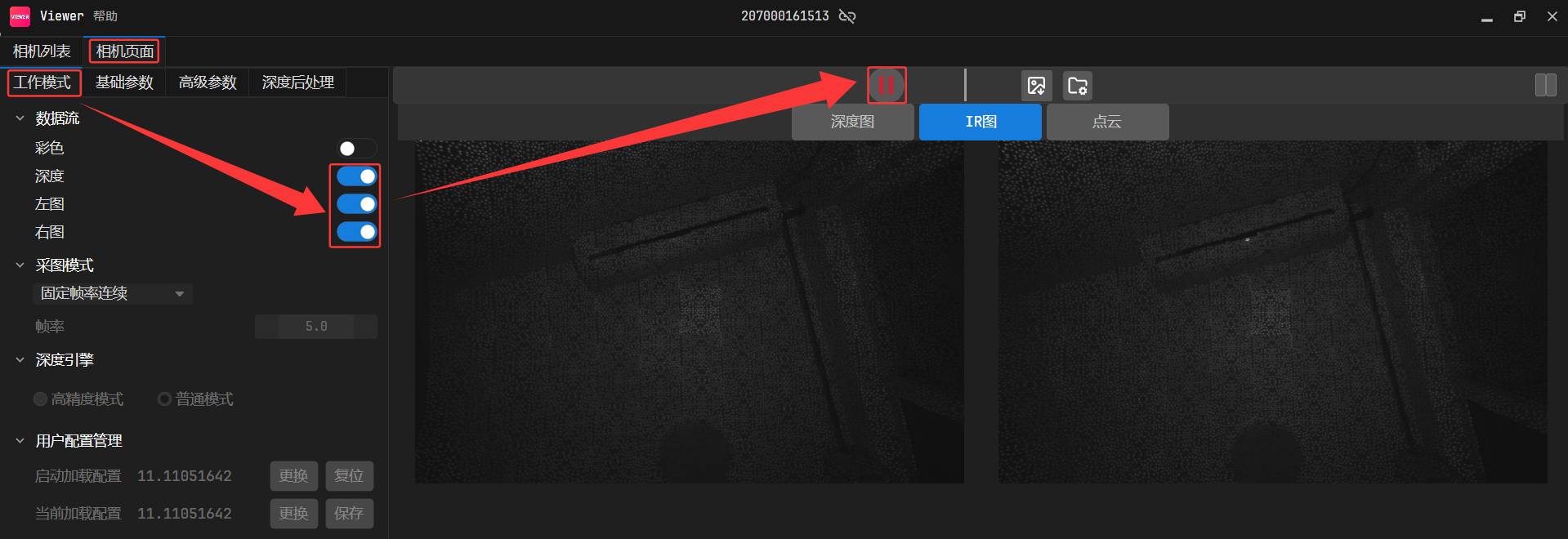

首先需要开启左右取流:

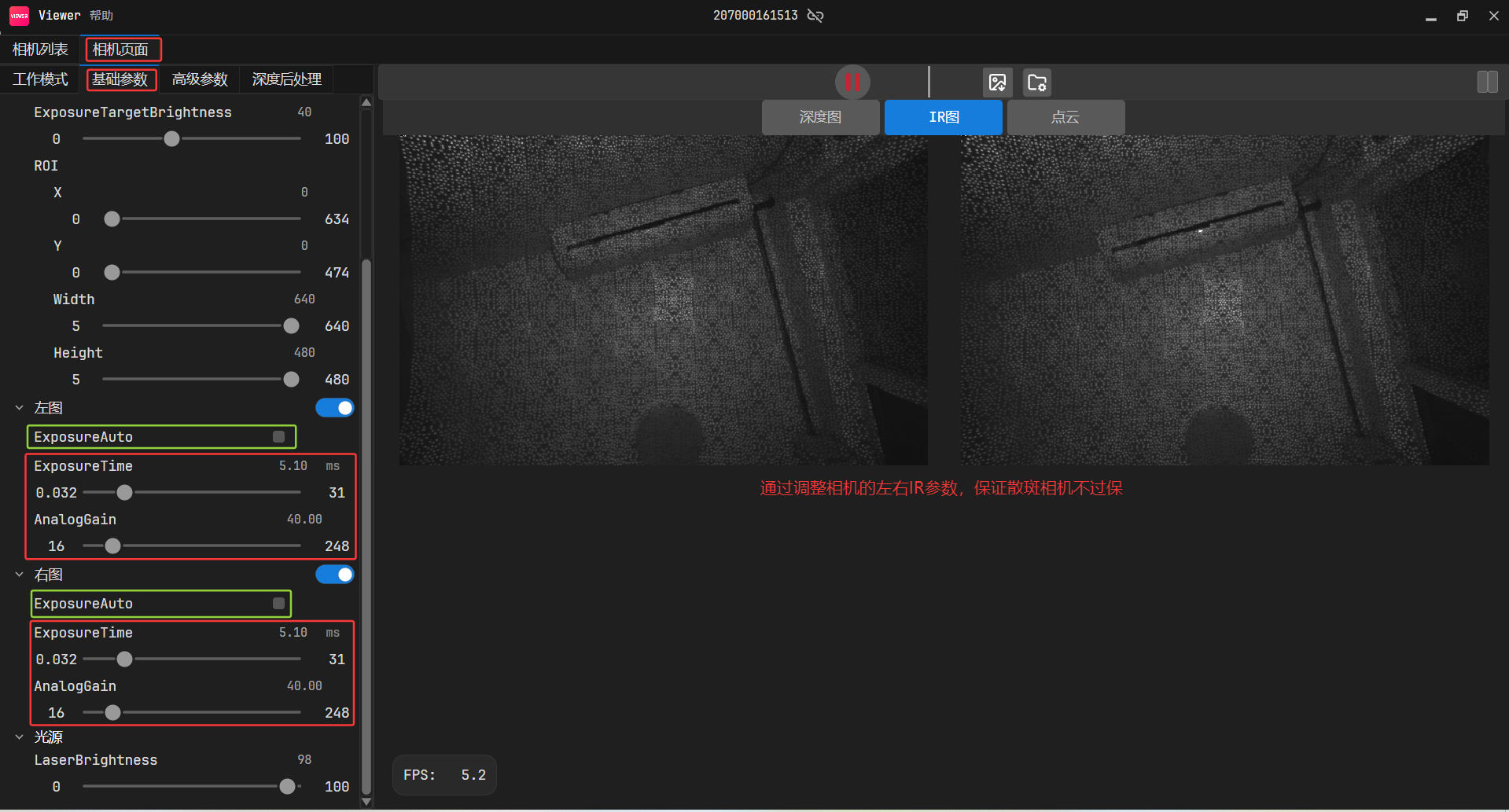

2.2.1 手动调整左右IR曝光

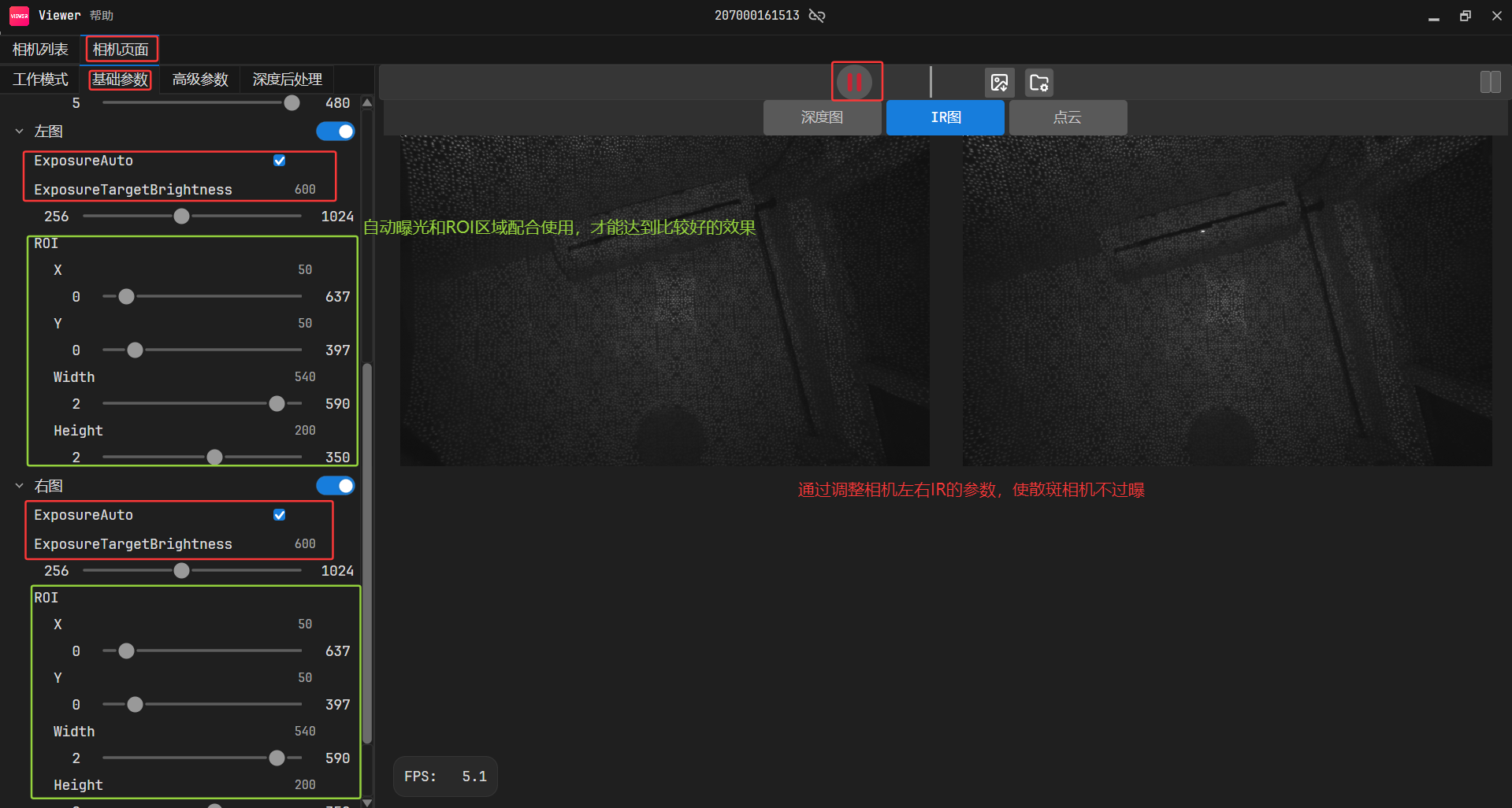

2.2.2 调整相机左右IR自动曝光

根据设置的AOI 区域的图像信息,来调整整个画面的亮度。

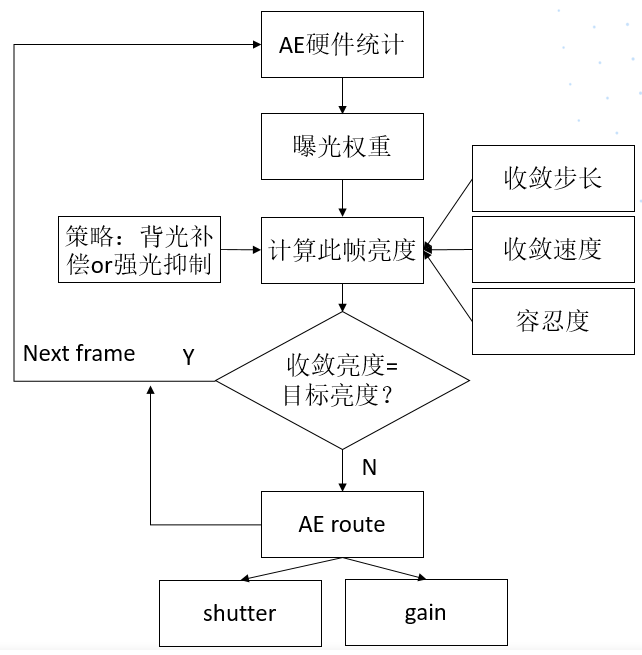

调整逻辑

1. 如果检测到划定区域的亮度较低,算法"觉得"应该增强亮度,于是会调亮图像画面。

2. 如果检测到划定区域的亮度较亮,算法"觉得"应该减弱亮度,于是会调暗图像画面。
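The adjustment logic above can be illustrated with a minimal sketch. This is not the camera's actual auto-exposure algorithm, which the source does not describe; it assumes a simple proportional rule, and `Aoi`, `meanBrightness`, `nextExposure`, and the target brightness are all hypothetical names chosen for illustration.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical AOI rectangle in pixel coordinates (illustrative, not an SDK type).
struct Aoi { int x, y, width, height; };

// Mean brightness of an 8-bit grayscale image inside the AOI.
// `image` is stored row by row, `imageWidth` pixels per row.
double meanBrightness(const std::vector<std::uint8_t>& image, int imageWidth, const Aoi& aoi)
{
    double sum = 0.0;
    for (int r = aoi.y; r < aoi.y + aoi.height; ++r)
        for (int c = aoi.x; c < aoi.x + aoi.width; ++c)
            sum += image[static_cast<std::size_t>(r) * imageWidth + c];
    return sum / (static_cast<double>(aoi.width) * aoi.height);
}

// One step of an assumed proportional auto-exposure rule:
// a dark AOI (mean below target) raises the exposure, a bright AOI lowers it.
double nextExposure(double exposure, double aoiMean, double targetMean,
                    double minExposure, double maxExposure)
{
    if (aoiMean <= 0.0) return maxExposure; // fully dark AOI: use the longest allowed exposure
    double scaled = exposure * (targetMean / aoiMean);
    return std::min(maxExposure, std::max(minExposure, scaled));
}
```

Only pixels inside the AOI influence the result, which is why moving the AOI onto a dark object brightens the entire image even if the rest of the scene is already well exposed.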

2.3 调试深度图

2.3.1 查看深度值

打开深度取图开关,点击 按钮开始采图

按钮开始采图

2.将鼠标放置于深度图上,即可查看当前像素点对应的像素坐标与实际深度值(单位:mm)。

2.3.2 渲染范围调节方法

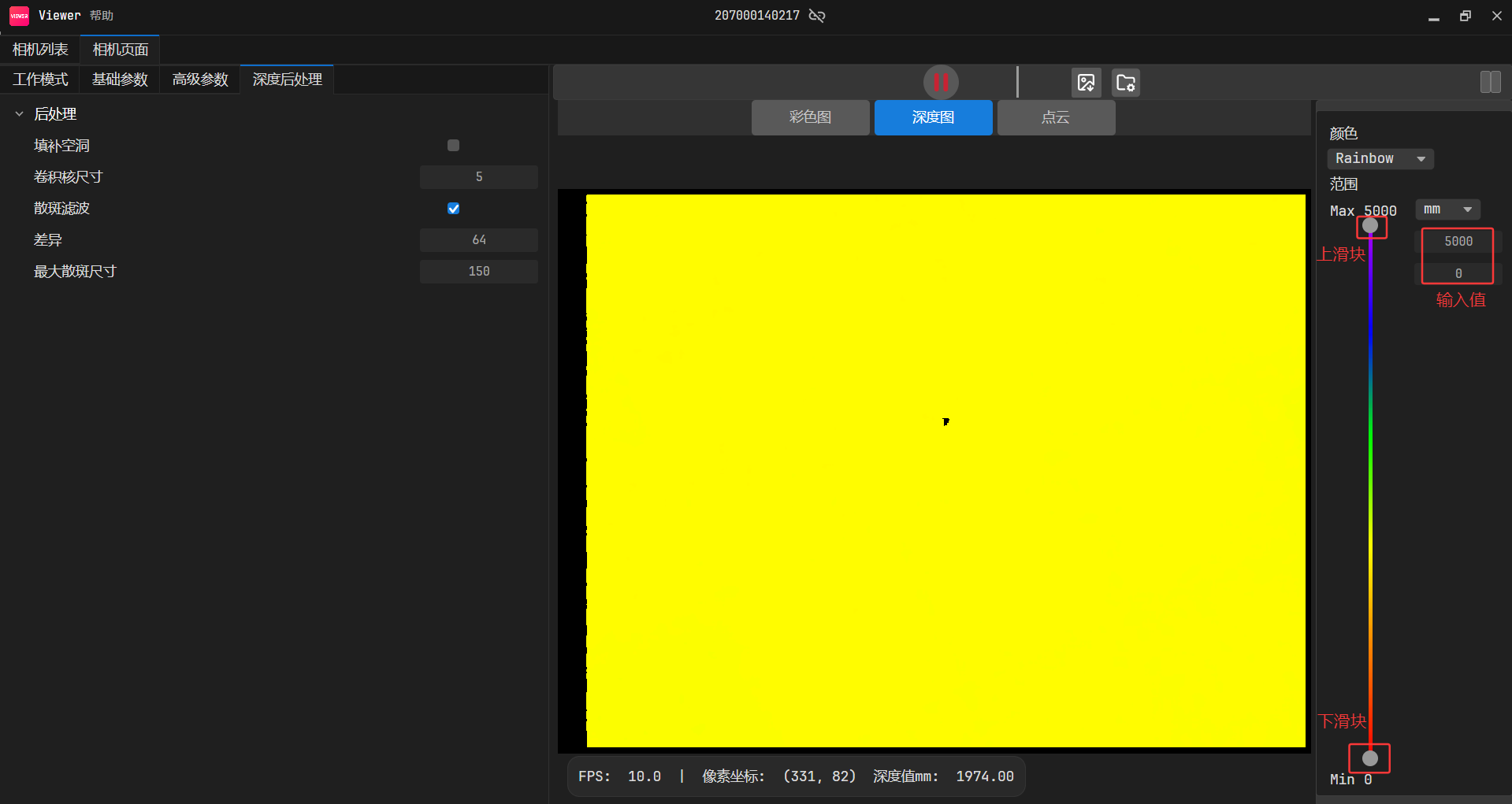

深度图支持动态渲染范围调整。通过优化目标深度区间的色彩映射 ,显著增强深度细节的可辨识度。

1.通过上滑块 调节渲染深度上限,通过下滑块调节渲染深度下限。

2.直接在输入框 键入目标值,或通过箭头微调。

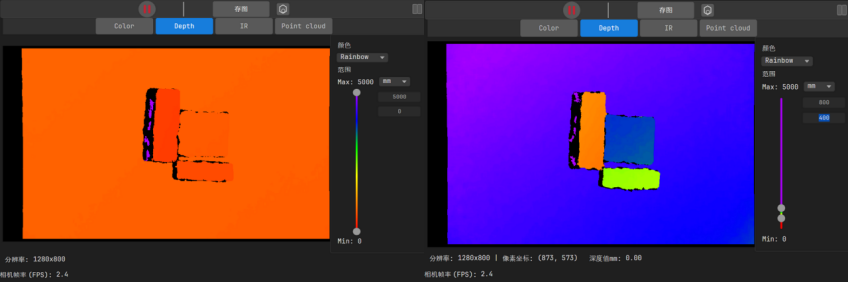

2.3.3 调节深度图渲染效果

1.深度值位于下滑块值和上滑块值之间时,色彩梯度均匀分布 ;

2.深度值小于下滑块值显示为红色,深度值大于上滑块值显示为紫色。

当场景深度集中分布在 400-800 mm 区间时:

使用默认渲染范围(0-5000 mm):如下方左图所示,色彩梯度平缓,细节难以区分**。

缩小范围至400--800mm:如下方右图所示,色彩对比鲜明,可清晰辨识三个物体的深度差异。

使用默认深度渲染范围(左)/调节渲染范围后(右)
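Why narrowing the range increases contrast can be seen from a short sketch of the mapping. It assumes the viewer maps in-range depths linearly onto the color gradient, which the text suggests but does not state; `classifyDepth` and its types are hypothetical names, not part of the viewer or the SDK.

```cpp
#include <cstdint>

// Where a depth value falls relative to the rendering range.
enum class RangeClass { BelowLower, InRange, AboveUpper };

struct RenderSample {
    RangeClass cls;
    double t; // normalized gradient position in [0, 1]; meaningful only when cls == InRange
};

// Classify one depth value (mm) against the lower/upper slider values (mm).
RenderSample classifyDepth(std::uint16_t depthMm, std::uint16_t lowerMm, std::uint16_t upperMm)
{
    if (depthMm < lowerMm) return {RangeClass::BelowLower, 0.0}; // drawn in red by the viewer
    if (depthMm > upperMm) return {RangeClass::AboveUpper, 1.0}; // drawn in purple by the viewer
    if (upperMm == lowerMm) return {RangeClass::InRange, 0.0};   // degenerate range
    double t = static_cast<double>(depthMm - lowerMm) / (upperMm - lowerMm);
    return {RangeClass::InRange, t};
}
```

Under this assumption, two objects at 500 mm and 600 mm sit only 2% of the gradient apart with the 0-5000 mm range, but 25% apart with the 400-800 mm range.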

2.4 保存图像

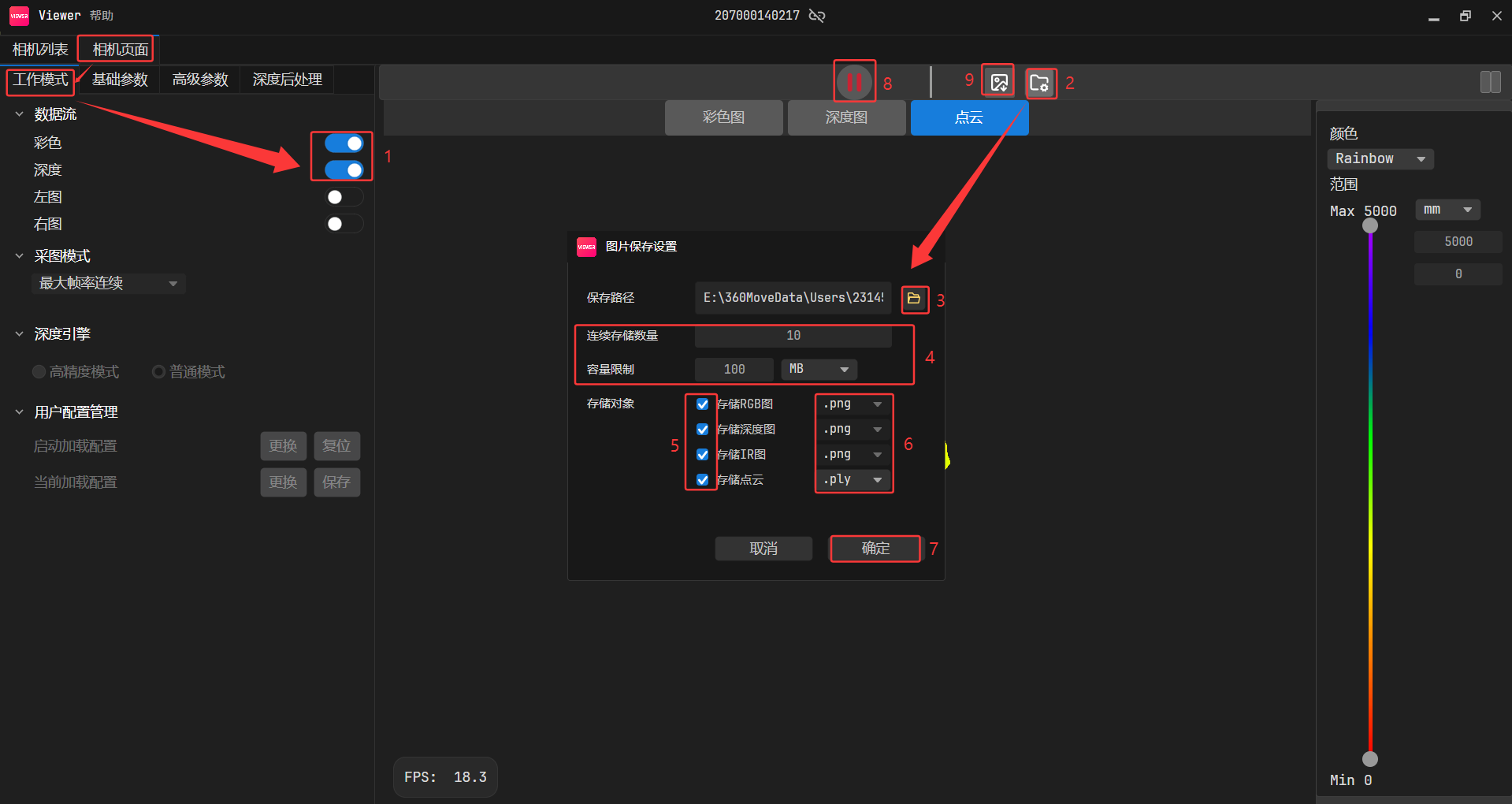

2.4.1 存图设置

新的Percipio Viewer支持保存当前帧图像,可同时保存彩色图、深度图、渲染后的深度图、左/右IR图、点云数据

(.ply/.pcd格式)。

操作步骤:

1.点击 ,弹出保存图片设置对话框。

,弹出保存图片设置对话框。

2.点击 ,选择存图文件夹,并点击 "确定"。

,选择存图文件夹,并点击 "确定"。

2.4.2 单张存图

2.4.3 连续存图

2.5 GM461-E1相机保存参数配置

保存相机参数配置,上电加载参数,具体操作流程如下图:

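2.6 Additional Notes on GM461-E1 Parameters

1. Enabling the two post-processing options DepthSgbmSaturateFilterEnable and DepthSgbmTextureFilterEnable halves the depth image frame rate.

2. The default Userset configuration outputs a fixed frame rate. The configurable frame rate range is [4, 15] or [8, 30].

3. With the same scene and the same parameter configuration, switching the resolution changes the result of depth image processing.

This is because the depth post-processing algorithms (such as the saturation filter and the texture filter) compute in units of pixels. To get similar depth results at different resolutions, use one of the following approaches:

1) Disable depth post-processing: turn the relevant filters off entirely.

2) Adapt the parameters manually: with post-processing enabled, scale the parameter values by hand in proportion to the resolution. For example, if a parameter is set to 7 at 640×400, set it to 28 at 1280×800 (four times the pixel count, so 7 × 4 = 28).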
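The manual adaptation in approach 2 can be written as a small helper. This sketch assumes the parameter scales with the pixel-count ratio, which is inferred from the single 7 to 28 example above; the source does not give a general formula, and `scaleFilterParam` is a hypothetical name.

```cpp
#include <cmath>

// Scale a pixel-based filter parameter by the ratio of pixel counts between
// the source and destination resolutions (assumed rule, inferred from the
// 640x400 -> 1280x800 example: 7 -> 28).
int scaleFilterParam(int param, int srcW, int srcH, int dstW, int dstH)
{
    double ratio = (static_cast<double>(dstW) * dstH) / (static_cast<double>(srcW) * srcH);
    return static_cast<int>(std::lround(param * ratio));
}
```

For instance, `scaleFilterParam(7, 640, 400, 1280, 800)` evaluates to 28. Treat the result as a starting point and confirm it visually in the viewer.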

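3. GM461-E1 SDK

The recommended programming languages and SDK versions for the GM461-E1 are as follows:

3.1 C++ SDK (recommended)

The GM461-E1 only works with SDK 4.X.X. If the camera firmware is newer than version 106, it must be used with the latest SDK (4.1.9 or later) and the latest Viewer.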
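The version rule above can be expressed as a quick compatibility check, for example against the values returned by `TYLibVersion`. This is only a sketch of the rule as stated; `SdkVersion`, `versionAtLeast`, and `sdkSupportsGm461` are hypothetical helpers, not SDK functions.

```cpp
// Hypothetical version triple, e.g. filled from TY_VERSION_INFO (major, minor, patch).
struct SdkVersion { int majorVer, minorVer, patchVer; };

// True when v >= required, compared field by field.
bool versionAtLeast(const SdkVersion& v, const SdkVersion& required)
{
    if (v.majorVer != required.majorVer) return v.majorVer > required.majorVer;
    if (v.minorVer != required.minorVer) return v.minorVer > required.minorVer;
    return v.patchVer >= required.patchVer;
}

// Rule from the text: only SDK 4.X.X is supported, and firmware newer than
// version 106 additionally needs SDK 4.1.9 or later.
bool sdkSupportsGm461(const SdkVersion& sdk, bool firmwareNewerThan106)
{
    if (sdk.majorVer != 4) return false;
    return !firmwareNewerThan106 || versionAtLeast(sdk, SdkVersion{4, 1, 9});
}
```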

For detailed operation, samples, and examples, see the following link:

3.1.1 Complete Reference Example

cpp

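#include <limits>
#include <cassert>
#include <cmath>
#include <cstdio>
#include <cstring>
#include <ctime>
#include <cinttypes>
#include <chrono>
#include <iostream>
#include <string>
#include <vector>
#include "../common/common.hpp"
#include <TYImageProc.h>

// Depth-to-color alignment switch: 1 maps the depth image into the color camera's coordinate frame, 0 leaves it unaligned.
// Mapping color onto depth loses color information wherever depth is missing, so depth-to-color is the default.
#define MAP_DEPTH_TO_COLOR 1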

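// Enabling the rendered depth displays below lowers the frame rate
DepthViewer depthViewer0("OrgDepth");           // rendered original depth image
DepthViewer depthViewer1("FillHoleDepth");      // rendered depth image after hole filling
DepthViewer depthViewer2("SpeckleFilterDepth"); // rendered depth image after speckle filtering
DepthViewer depthViewer3("EnhenceFilterDepth"); // rendered depth image after temporal filtering
DepthViewer depthViewer4("MappedDepth");        // rendered depth image aligned to the color frame

// Parameter-setting switch. By default the parameters saved in the camera are used (they can still be modified afterwards).
// Different camera models expose different parameters; use the PercipioViewer software to check which parameters and value ranges a camera supports.
bool setParameters = false;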

static void use_new_apis()

{

LOGD("This is a new device. We have provided GenICam style API in TYParameter.h to get/set parameters.");

}

static void use_old_apis()

{

LOGD("This is a old device. Please use the API in TYApi.h to get/set parameters");

}

// Event callback
void eventCallback(TY_EVENT_INFO *event_info, void *userdata)
{
    if (event_info->eventId == TY_EVENT_DEVICE_OFFLINE)
    {
        LOGD("=== Event Callback: Device Offline!");
        // Note:
        // Please set the TY_BOOL_KEEP_ALIVE_ONOFF feature to false if you need to debug with breakpoints!

}

else if (event_info->eventId == TY_EVENT_LICENSE_ERROR)

{

LOGD("=== Event Callback: License Error!");

}

}

// Pixel format conversion: OpenCV pixel format to TY_PIXEL_FORMAT

static int cvpf2typf(int cvpf)

{

switch(cvpf)

{

case CV_8U: return TY_PIXEL_FORMAT_MONO;

case CV_8UC3: return TY_PIXEL_FORMAT_RGB;

case CV_16UC1: return TY_PIXEL_FORMAT_DEPTH16;

default: return TY_PIXEL_FORMAT_UNDEFINED;

}

}

// Data conversion: cv::Mat to TY_IMAGE_DATA (the buffer is shared, not copied)

static void mat2TY_IMAGE_DATA(int comp, const cv::Mat& mat, TY_IMAGE_DATA& data)

{

data.status = 0;

data.componentID = comp;

data.size = mat.total() * mat.elemSize();

data.buffer = mat.data;

data.width = mat.cols;

data.height = mat.rows;

data.pixelFormat = cvpf2typf(mat.type());

}

struct CallbackData

{

int index;

TY_DEV_HANDLE hDevice;

TY_CAMERA_CALIB_INFO depth_calib;

TY_CAMERA_CALIB_INFO color_calib;

float f_depth_scale;

bool isTof;

bool saveOneFramePoint3d;

bool exit_main;

int fileIndex;

bool map_depth_to_color;

};

static CallbackData cb_data;

// Convert a depth image to a point cloud using the intrinsics (for reference).
// intr: 3x3 intrinsic matrix in row-major order; scale_unit: converts raw depth values to millimeters.
cv::Mat depthToWorld(float* intr, const cv::Mat &depth, float scale_unit)
{
    cv::Mat world(depth.rows, depth.cols, CV_32FC3);
    float cx = intr[2];
    float cy = intr[5];
    float inv_fx = 1.0f / intr[0];
    float inv_fy = 1.0f / intr[4];
    for (int r = 0; r < depth.rows; r++)
    {
        const uint16_t* pSrc = depth.ptr<uint16_t>(r);
        cv::Vec3f* pDst = world.ptr<cv::Vec3f>(r);
        for (int c = 0; c < depth.cols; c++)
        {
            // Keep z as float so that scaling by scale_unit is not truncated to an integer
            float z = pSrc[c] * scale_unit;
            if (pSrc[c] == 0) {
                // No depth measured at this pixel
                pDst[c][0] = NAN;
                pDst[c][1] = NAN;
                pDst[c][2] = NAN;
            } else {
                pDst[c][0] = (c - cx) * z * inv_fx;
                pDst[c][1] = (r - cy) * z * inv_fy;
                pDst[c][2] = z;
            }
        }
    }
    return world;
}

// Output the undistorted color image and, optionally, align the depth image to the color image

static void doRegister(const TY_CAMERA_CALIB_INFO& depth_calib

, const TY_CAMERA_CALIB_INFO& color_calib

, const cv::Mat& depth

, const float f_scale_unit

, const cv::Mat& color

, cv::Mat& undistort_color

, cv::Mat& out

, bool map_depth_to_color

)

{

    // Undistort the RGB image

TY_IMAGE_DATA src;

src.width = color.cols;

src.height = color.rows;

src.size = color.size().area() * 3;

src.pixelFormat = TYPixelFormatRGB8;

src.buffer = color.data;

undistort_color = cv::Mat(color.size(), CV_8UC3);

TY_IMAGE_DATA dst;

dst.width = color.cols;

dst.height = color.rows;

dst.size = undistort_color.size().area() * 3;

dst.buffer = undistort_color.data;

dst.pixelFormat = TYPixelFormatRGB8;

ASSERT_OK(TYUndistortImage(&color_calib, &src, NULL, &dst));

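    // On the TM265, the RGB camera uses the newer fisheye calibration. For a TM265, query the lens type with
    // TYGetEnum(hDevice, comp_id, TY_ENUM_LENS_OPTICAL_TYPE, &lens_type);
    // TY_LENS_PINHOLE is the older pinhole model, TY_LENS_FISHEYE is the newer fisheye model.
    // ASSERT_OK(TYUndistortImage(&color_calib, &src, NULL, &dst , TY_LENS_FISHEYE));

    // Register depth to color; the final resize uses nearest-neighbor interpolation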

if (map_depth_to_color)

{

int outW = depth.cols;

int outH = depth.cols * undistort_color.rows / undistort_color.cols;

out = cv::Mat::zeros(cv::Size(outW, outH), CV_16U);

ASSERT_OK(

TYMapDepthImageToColorCoordinate(

&depth_calib,

depth.cols, depth.rows, depth.ptr<uint16_t>(),

&color_calib,

out.cols, out.rows, out.ptr<uint16_t>(), f_scale_unit

)

);

cv::Mat temp;

cv::resize(out, temp, undistort_color.size(), 0, 0, cv::INTER_NEAREST);

out = temp;

}

}

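// Per-frame processing
void frameHandler(TY_FRAME_DATA* frame, void* userdata)
{
    CallbackData* pData = (CallbackData*) userdata;
    LOGD("=== Get frame %d", ++pData->index);

    std::vector<TY_VECT_3F> P3dtoColor, P3d; // point clouds
    // Static so that frames accumulate across calls when depthnum > 1 (temporal filter)
    static std::vector<cv::Mat> depths;
    cv::Mat depth, color;

    auto StartParseFrame = std::chrono::steady_clock::now();
    // Parse the frame: extract the depth image and the color image
    parseFrame(*frame, &depth, 0, 0, &color);
    auto ParseFrameFinished = std::chrono::steady_clock::now();
    auto duration2 = std::chrono::duration_cast<std::chrono::microseconds>(ParseFrameFinished - StartParseFrame);
    // Pass the tick count, not the duration object, to the printf-style logger
    LOGI("*******ParseFrame spend Time : %lld us", (long long)duration2.count());

    // Hole-filling switch; enabling it lowers the frame rate
    bool FillHole = 1;
    // Speckle filter switch: removes isolated noise points from the depth image
    bool SpeckleFilter = 0;
    // Temporal filter: reduces per-pixel jitter and improves point cloud flatness
    bool EnhenceFilter = 0;
    // Number of frames fed to the temporal filter, if it is enabled
    int depthnum = 1;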

    // Depth image processing
    if (!depth.empty())
    {
        if (pData->isTof)
        {
            // Undistort the depth image (ToF cameras only)

TY_IMAGE_DATA src;

src.width = depth.cols;

src.height = depth.rows;

src.size = depth.size().area() * 2;

src.pixelFormat = TYPixelFormatCoord3D_C16;

src.buffer = depth.data;

cv::Mat undistort_depth = cv::Mat(depth.size(), CV_16U);

TY_IMAGE_DATA dst;

dst.width = depth.cols;

dst.height = depth.rows;

dst.size = undistort_depth.size().area() * 2;

dst.buffer = undistort_depth.data;

dst.pixelFormat = TYPixelFormatCoord3D_C16;

ASSERT_OK(TYUndistortImage(&cb_data.depth_calib, &src, NULL, &dst));

depth = undistort_depth.clone();

}

if (FillHole)

{

TY_IMAGE_DATA sfFilledDepth;

cv::Mat fillDepth(depth.size(), depth.type());

fillDepth = depth.clone();

mat2TY_IMAGE_DATA(TY_COMPONENT_DEPTH_CAM, fillDepth, sfFilledDepth);

            // Fill holes in the depth image
            struct DepthInpainterParameters inpainter = DepthInpainterParameters_Initializer;
            inpainter.kernel_size = 10;
            inpainter.max_internal_hole = 1800;
            TYDepthImageInpainter(&sfFilledDepth, &inpainter);
            // Render the depth image after hole filling

depthViewer1.show(fillDepth);

depth = fillDepth.clone();

}

if (SpeckleFilter)

{

            // Apply the speckle filter
            TY_IMAGE_DATA sfFilteredDepth;
            cv::Mat filteredDepth(depth.size(), depth.type());
            filteredDepth = depth.clone();
            mat2TY_IMAGE_DATA(TY_COMPONENT_DEPTH_CAM, filteredDepth, sfFilteredDepth);
            struct DepthSpeckleFilterParameters sfparam = DepthSpeckleFilterParameters_Initializer;
            sfparam.max_speckle_size = 300; // blobs with an area smaller than this are removed
            sfparam.max_speckle_diff = 64;  // neighboring pixels whose disparity differs by more than this are treated as noise
            TYDepthSpeckleFilter(&sfFilteredDepth, &sfparam);
            // Render the depth image after speckle filtering

depthViewer2.show(filteredDepth);

depth = filteredDepth.clone();

}

        // Temporal filtering of the depth image
        if (EnhenceFilter)
        {
            depths.push_back(depth.clone());
            LOGD("depths_size %d", (int)depths.size());
            if ((int)depths.size() >= depthnum)
            {
                // filter
                //LOGD("count %d ", ++cnt);
                LOGD("depthnum count %d ", depthnum);
                std::vector<TY_IMAGE_DATA> tyDepth(depthnum);
                for (size_t i = 0; i < (size_t)depthnum; i++)
                {
                    mat2TY_IMAGE_DATA(TY_COMPONENT_DEPTH_CAM, depths[i], tyDepth[i]);
                }
                // Apply the temporal filter
                TY_IMAGE_DATA tyFilteredDepth;
                cv::Mat filteredDepth2(depth.size(), depth.type());
                mat2TY_IMAGE_DATA(TY_COMPONENT_DEPTH_CAM, filteredDepth2, tyFilteredDepth);
                struct DepthEnhenceParameters param = DepthEnhenceParameters_Initializer;
                param.sigma_s = 0;        // spatial filter coefficient
                param.sigma_r = 0;        // depth filter coefficient
                param.outlier_win_sz = 0; // filter window size, in pixels
                param.outlier_rate = 0.f; // noise (outlier) filtering coefficient
                TYDepthEnhenceFilter(&tyDepth[0], depthnum, NULL, &tyFilteredDepth, &param);
                depths.clear();
                // Render the depth image after temporal filtering

depthViewer3.show(filteredDepth2);

}

}

else if (!FillHole && !SpeckleFilter&& !EnhenceFilter)

{

            // Render the original depth image
            depthViewer0.show(depth);
        }
    }

    // Color image processing

cv::Mat color_data_mat,p3dtocolorMat, undistort_color;

uint8_t* color_data = NULL;

if (!color.empty())

{

cv::Mat undistort_color, MappedDepth;

bool hasColorCalib = false;

ASSERT_OK(TYHasFeature(pData->hDevice, TY_COMPONENT_RGB_CAM, TY_STRUCT_CAM_CALIB_DATA, &hasColorCalib));

if (hasColorCalib)

{

cv::Mat tmp;

//cv::resize(out, tmp, undistort_color.size(), 0, 0, cv::INTER_NEAREST);

switch (color.type())

{

case CV_16U:

color.convertTo(tmp, CV_8U, 1.f / 256);

cv::cvtColor(tmp, color, cv::COLOR_GRAY2BGR);

break;

case CV_16UC3:

tmp = color;

tmp.convertTo(color, CV_8UC3, 1.f / 256);

break;

default:

break;

}

if (MAP_DEPTH_TO_COLOR)

{

doRegister(pData->depth_calib, pData->color_calib, depth, pData->f_depth_scale, color, color_data_mat, MappedDepth, MAP_DEPTH_TO_COLOR);

                // Generate the point cloud aligned to the color frame; two options:
                // Option 1: store the point cloud in a TY_VECT_3F vector (P3dtoColor)
                P3dtoColor.resize(MappedDepth.size().area());
                ASSERT_OK(TYMapDepthImageToPoint3d(&pData->color_calib, MappedDepth.cols, MappedDepth.rows, (uint16_t*)MappedDepth.data, &P3dtoColor[0], pData->f_depth_scale));
                // Option 2: store the point cloud in a CV_32FC3 Mat (p3dtocolorMat)
                //p3dtocolorMat = depthToWorld(pData->intri_color->data, MappedDepth, pData->scale_unit);
                // Show the undistorted color image
                imshow("undistort_color", color_data_mat);
                // Show the depth image aligned to the color frame

depthViewer4.show(MappedDepth);

}

else

{

                // Undistort the color image only; the depth image is left unaligned
                doRegister(pData->depth_calib, pData->color_calib, depth, pData->f_depth_scale, color, color_data_mat, MappedDepth, MAP_DEPTH_TO_COLOR);
                // Show the undistorted color image
                imshow("undistort_color", color_data_mat);
                // Option 1: store the point cloud in a TY_VECT_3F vector (P3d).
                // doRegister leaves MappedDepth empty in this branch, so the original depth image is used.
                P3d.resize(depth.size().area());
                ASSERT_OK(TYMapDepthImageToPoint3d(&pData->depth_calib, depth.cols, depth.rows
                    , (uint16_t*)depth.data, &P3d[0], pData->f_depth_scale));
                // Option 2: point cloud in CV_32FC3 format
                //newP3d = depthToWorld(pData->intri_depth->data, depth, pData->scale_unit);

}

}

}

    // Save the point cloud
    if (pData->saveOneFramePoint3d)
    {
        char file[32];
        if (MAP_DEPTH_TO_COLOR)
        {
            LOGD("Save p3dtocolor now!!!");
            // Save the colored point cloud (XYZRGB) aligned to the color frame
            sprintf(file, "pointsToColor-%d.xyz", pData->fileIndex++);
            // Option 1: save from the TY_VECT_3F vector
            writePointCloud((cv::Point3f*)&P3dtoColor[0], (const cv::Vec3b*)color_data_mat.data, P3dtoColor.size(), file, PC_FILE_FORMAT_XYZ);
            // Option 2: save from the CV_32FC3 Mat
            //writePointCloud((cv::Point3f*)p3dtocolorMat.data, (const cv::Vec3b*)color_data_mat.data, p3dtocolorMat.total(), file, PC_FILE_FORMAT_XYZ);
        }
        else
        {
            LOGD("Save point3d now!!!");
            // Save the point cloud in XYZ format
            sprintf(file, "points-%d.xyz", pData->fileIndex++);
            // Option 1: save from the TY_VECT_3F vector
            writePointCloud((cv::Point3f*)&P3d[0], 0, P3d.size(), file, PC_FILE_FORMAT_XYZ);
            // Option 2: save from the CV_32FC3 Mat
            //writePointCloud((cv::Point3f*)newP3d.data, 0, newP3d.total(), file, PC_FILE_FORMAT_XYZ);

}

pData->saveOneFramePoint3d = false;

}

    // Return the buffer to the queue

LOGD("=== Re-enqueue buffer(%p, %d)", frame->userBuffer, frame->bufferSize);

ASSERT_OK( TYEnqueueBuffer(pData->hDevice, frame->userBuffer, frame->bufferSize) );

}

int main(int argc, char* argv[])

{

std::string ID, IP;

TY_INTERFACE_HANDLE hIface = NULL;

TY_DEV_HANDLE hDevice = NULL;

TY_CAMERA_INTRINSIC intri_depth;

TY_CAMERA_INTRINSIC intri_color;

TY_CAMERA_DISTORTION dist;

TY_CAMERA_EXTRINSIC extri;

int32_t resend = 1;

    bool isTof = false; // the GM461-E1 is an active-stereo (speckle) camera, not a ToF camera

for(int i = 1; i < argc; i++)

{

if(strcmp(argv[i], "-id") == 0)

{

ID = argv[++i];

} else if(strcmp(argv[i], "-ip") == 0)

{

IP = argv[++i];

} else if(strcmp(argv[i], "-h") == 0)

{

            LOGI("Usage: SimpleView_Callback [-h] [-id <ID>] [-ip <IP>]");

return 0;

}

}

LOGD("=== Init lib");

ASSERT_OK( TYInitLib() );

TY_VERSION_INFO ver;

ASSERT_OK( TYLibVersion(&ver) );

LOGD("- lib version: %d.%d.%d", ver.major, ver.minor, ver.patch);

std::vector<TY_DEVICE_BASE_INFO> selected;

    // Select the camera
    ASSERT_OK( selectDevice(TY_INTERFACE_ALL, ID, IP, 10, selected) );
    ASSERT(selected.size() > 0);
    // Use the first camera found by default

TY_DEVICE_BASE_INFO& selectedDev = selected[0];

if (TYIsNetworkInterface(selectedDev.iface.type))

{

LOGD(" - device %s:", selectedDev.id);

if (strlen(selectedDev.userDefinedName) != 0)

{

LOGD(" vendor : %s", selectedDev.userDefinedName);

}

else {

LOGD(" vendor : %s", selectedDev.vendorName);

}

LOGD(" model : %s", selectedDev.modelName);

LOGD(" device MAC : %s", selectedDev.netInfo.mac);

LOGD(" device IP : %s", selectedDev.netInfo.ip);

LOGD(" TL version : %s", selectedDev.netInfo.tlversion);

if (strcmp(selectedDev.netInfo.tlversion, "Gige_2_1") == 0)

{

use_new_apis();

}

else

{

use_old_apis();

}

}

else

{

TY_DEV_HANDLE handle;

int32_t ret = TYOpenDevice(hIface, selectedDev.id, &handle);

if (ret == 0)

{

TYGetDeviceInfo(handle, &selectedDev);

TYCloseDevice(handle);

LOGD(" - device %s:", selectedDev.id);

}

else

{

LOGD(" - device %s(open failed, error: %d)", selectedDev.id, ret);

}

if (strlen(selectedDev.userDefinedName) != 0)

{

LOGD(" vendor : %s", selectedDev.userDefinedName);

}

else {

LOGD(" vendor : %s", selectedDev.vendorName);

}

LOGD(" model : %s", selectedDev.modelName);

use_old_apis();

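    }

    // Open the interface and the device
    ASSERT_OK( TYOpenInterface(selectedDev.iface.id, &hIface) );
    ASSERT_OK( TYOpenDevice(hIface, selectedDev.id, &hDevice) );

    // For non-network devices (old API), load the parameters saved in the camera.
    // This runs after TYOpenDevice because it needs a valid device handle.
    if (!setParameters && !TYIsNetworkInterface(selectedDev.iface.type))
    {
        std::string js_data;
        int ret;
        ret = load_parameters_from_storage(hDevice, js_data); // use the parameters saved in the camera
        if (ret == TY_STATUS_ERROR)
        {
            LOGD("no saved parameters in the camera");
            setParameters = true;
        }
        else if (ret != TY_STATUS_OK)
        {
            LOGD("Failed: error %d(%s)", ret, TYErrorString(ret));
            setParameters = true;
        }
    }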

    // Enable camera components: start with everything disabled
    TY_COMPONENT_ID allComps;
    ASSERT_OK(TYGetComponentIDs(hDevice, &allComps));
    ASSERT_OK(TYDisableComponents(hDevice, allComps));

    // Try to enable the color camera

if (allComps & TY_COMPONENT_RGB_CAM )

{

LOGD("Has RGB camera, open RGB cam");

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_RGB_CAM));

}

    // Try to enable the depth camera

if (allComps & TY_COMPONENT_DEPTH_CAM)

{

LOGD("Has Depth camera, open Depth cam");

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_DEPTH_CAM));

}

    // Try to enable the left IR camera

if (allComps & TY_COMPONENT_IR_CAM_LEFT)

{

LOGD("=== Configure components, open ir cam");

ASSERT_OK(TYEnableComponents(hDevice, TY_COMPONENT_IR_CAM_LEFT));

}

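    // Read the color camera calibration data.
    // TY_STRUCT_CAM_CALIB_DATA holds the intrinsics at the factory calibration resolution.
    // TY_STRUCT_CAM_INTRINSIC holds the intrinsics at the current resolution.
    LOGD("=== Get color intrinsic");
    ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_RGB_CAM, TY_STRUCT_CAM_INTRINSIC, &intri_color, sizeof(intri_color)));
    // Print the color intrinsics at the current resolution; fx, fy, cx, cy change with the resolution.
    // <iomanip> with setw() could also be used for this printing.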

LOGD("===%23s%f %f %f", "", intri_color.data[0], intri_color.data[1], intri_color.data[2]);

LOGD("===%23s%f %f %f", "", intri_color.data[3], intri_color.data[4], intri_color.data[5]);

LOGD("===%23s%f %f %f", "", intri_color.data[6], intri_color.data[7], intri_color.data[8]);

LOGD("=== Read color calib data");

ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_RGB_CAM, TY_STRUCT_CAM_CALIB_DATA

, &cb_data.color_calib, sizeof(cb_data.color_calib)));

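    // Read the depth camera intrinsics and calibration data.
    // TY_STRUCT_CAM_CALIB_DATA holds the intrinsics at the factory calibration resolution.
    // TY_STRUCT_CAM_INTRINSIC holds the intrinsics at the current resolution.
    LOGD("=== Get depth intrinsic");
    ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_DEPTH_CAM, TY_STRUCT_CAM_INTRINSIC, &intri_depth, sizeof(intri_depth)));
    // Print the depth intrinsics at the current resolution; fx, fy, cx, cy change with the resolution.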

LOGD("===%23s%f %f %f", "", intri_depth.data[0], intri_depth.data[1], intri_depth.data[2]);

LOGD("===%23s%f %f %f", "", intri_depth.data[3], intri_depth.data[4], intri_depth.data[5]);

LOGD("===%23s%f %f %f", "", intri_depth.data[6], intri_depth.data[7], intri_depth.data[8]);

LOGD("=== Read depth calib data");

ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_DEPTH_CAM, TY_STRUCT_CAM_CALIB_DATA

, &cb_data.depth_calib, sizeof(cb_data.depth_calib)));

    //// Get the color image distortion coefficients.
    //// Disabled for now: this fails on the latest GM46X firmware.

//LOGD("=== Get color distortion");

//ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_RGB_CAM, TY_STRUCT_CAM_DISTORTION, &dist, sizeof(dist)));

//LOGD("===%23s%f %f %f %f", "", dist.data[0], dist.data[1], dist.data[2], dist.data[3]);

//LOGD("===%23s%f %f %f %f", "", dist.data[4], dist.data[5], dist.data[6], dist.data[7]);

//LOGD("===%23s%f %f %f %f", "", dist.data[8], dist.data[9], dist.data[10], dist.data[11]);

    //// Get the extrinsics from the RGB camera to the left IR camera
    //// (TY_STRUCT_EXTRINSIC_TO_IR_LEFT).
    //// Disabled for now: this fails on the latest GM46X firmware.

//

//ASSERT_OK(TYGetStruct(hDevice, TY_COMPONENT_RGB_CAM_LEFT, TY_STRUCT_EXTRINSIC_TO_DEPTH, &extri, sizeof(extri)));

//LOGD("===%23s%f %f %f %f", "", extri.data[0], extri.data[1], extri.data[2], extri.data[3]);

//LOGD("===%23s%f %f %f %f", "", extri.data[4], extri.data[5], extri.data[6], extri.data[7]);

//LOGD("===%23s%f %f %f %f", "", extri.data[8], extri.data[9], extri.data[10], extri.data[11]);

//LOGD("===%23s%f %f %f %f", "", extri.data[12], extri.data[13], extri.data[14], extri.data[15]);

    // Query the required frame buffer size

LOGD("=== Prepare image buffer");

uint32_t frameSize;

ASSERT_OK( TYGetFrameBufferSize(hDevice, &frameSize) );

LOGD(" - Get size of framebuffer, %d", frameSize);

    // Allocate two buffers and push them into the queue

LOGD(" - Allocate & enqueue buffers");

char* frameBuffer[2];

frameBuffer[0] = new char[frameSize];

frameBuffer[1] = new char[frameSize];

LOGD(" - Enqueue buffer (%p, %d)", frameBuffer[0], frameSize);

ASSERT_OK( TYEnqueueBuffer(hDevice, frameBuffer[0], frameSize) );

LOGD(" - Enqueue buffer (%p, %d)", frameBuffer[1], frameSize);

ASSERT_OK( TYEnqueueBuffer(hDevice, frameBuffer[1], frameSize) );

    // Register the event callback
    bool device_offline = false;

LOGD("=== Register event callback");

ASSERT_OK(TYRegisterEventCallback(hDevice, eventCallback, &device_offline));

    //// Trigger mode settings (old API)
    //bool hasTrigger;
    //ASSERT_OK(TYHasFeature(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM, &hasTrigger));
    //if (hasTrigger)
    //{
    //    TY_TRIGGER_PARAM trigger;
    //    LOGD("=== enable trigger mode");
    //    //trigger.mode = TY_TRIGGER_MODE_OFF;
    //    trigger.mode = TY_TRIGGER_MODE_SLAVE; // software and hardware trigger mode
    //    ASSERT_OK(TYSetStruct(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM, &trigger, sizeof(trigger)));
    //}

    ASSERT_OK(TYEnumSetString(hDevice, "AcquisitionMode", "SingleFrame"));
    ASSERT_OK(TYEnumSetString(hDevice, "TriggerSource", "Software")); // trigger source: software trigger

    // SDK versions after 4.0.7 removed the resend switch; resend is enabled by default when the camera supports it.

////for network only

//LOGD("=== resend: %d", resend);

//if (resend)

//{

// bool hasResend;

// ASSERT_OK(TYHasFeature(hDevice, TY_COMPONENT_DEVICE, TY_BOOL_GVSP_RESEND, &hasResend));

// if (hasResend)

// {

// LOGD("=== Open resend");

// ASSERT_OK(TYSetBool(hDevice, TY_COMPONENT_DEVICE, TY_BOOL_GVSP_RESEND, true));

// }

// else

// {

// LOGD("=== Not support feature TY_BOOL_GVSP_RESEND");

// }

//}

    // Start capture
    LOGD("=== Start capture");
    ASSERT_OK( TYStartCapture(hDevice) );

    // Initialize the callback data

cb_data.index = 0;

cb_data.hDevice = hDevice;

cb_data.saveOneFramePoint3d = false;

cb_data.fileIndex = 0;

// cb_data.intri_depth = &intri_depth;

// cb_data.intri_color = &intri_color;

    float scale_unit = 1.;
    TYGetFloat(hDevice, TY_COMPONENT_DEPTH_CAM, TY_FLOAT_SCALE_UNIT, &scale_unit);
    std::cout << "scale_unit:" << scale_unit << std::endl;

cb_data.f_depth_scale = scale_unit;

cb_data.isTof = isTof;

depthViewer0.depth_scale_unit = scale_unit;

depthViewer1.depth_scale_unit = scale_unit;

depthViewer2.depth_scale_unit = scale_unit;

depthViewer3.depth_scale_unit = scale_unit;

depthViewer4.depth_scale_unit = scale_unit;

    // Frame fetch loop

LOGD("=== While loop to fetch frame");

TY_FRAME_DATA frame;

bool exit_main = false;

int index = 0;

uint32_t source = 8;

while(!exit_main)

{

int key = cv::waitKey(1);

switch(key & 0xff)

{

case 0xff:

break;

case 'q':

exit_main = true;

break;

case 's':

            cb_data.saveOneFramePoint3d = true; // press 's' in an image window to save one point cloud

break;

default:

LOGD("Pressed key %d", key);

}

auto timeTrigger = std::chrono::steady_clock::now();

        // Send one software trigger to the GM461

if (source == 8)

{

ASSERT_OK(TYCommandExec(hDevice, "TriggerSoftware"));

std::cout << "triggermode:" << source << std::endl;

}

        // Software trigger command for older cameras
        //while (TY_STATUS_BUSY == TYSendSoftTrigger(hDevice));

        // Fetch a frame; the timeout is 8 s (8000 ms)
        int err = TYFetchFrame(hDevice, &frame, 8000);
        auto timeGetFrame = std::chrono::steady_clock::now();
        auto duration = std::chrono::duration_cast<std::chrono::microseconds>(timeGetFrame - timeTrigger);
        LOGI("*******FetchFrame spend Time : %lld us", (long long)duration.count());
        if( err != TY_STATUS_OK )
        {
            LOGD("... Drop one frame");
            continue;
        }

        // Print the image timestamp; the frame is only valid after a successful fetch
        LOGD("=== Time Stamp (%" PRIu64 ")", frame.image[0].timestamp);
        time_t tick = (time_t)(frame.image[0].timestamp / 1000000);
        struct tm tm;
        char s[100];
        tm = *localtime(&tick);
        strftime(s, sizeof(s), "%Y-%m-%d %H:%M:%S", &tm);
        int milliseconds = (int)((frame.image[0].timestamp % 1000000) / 1000);
        char ms_str[8];
        // Zero-pad so that 5 ms prints as ".005" rather than ".5"
        snprintf(ms_str, sizeof(ms_str), ".%03d", milliseconds);
        strcat(s, ms_str);
        LOGD("===Time Stamp %d:%s\n", (int)tick, s);

        LOGD("Get frame %d", ++index);
        int fps = get_fps();
        if (fps > 0)
        {
            LOGI("***************************fps: %d", fps);
        }

frameHandler(&frame, &cb_data);

}

ASSERT_OK( TYStopCapture(hDevice) );

ASSERT_OK( TYCloseDevice(hDevice) );

ASSERT_OK( TYCloseInterface(hIface) );

ASSERT_OK( TYDeinitLib() );

    // The buffers were allocated with new[], so release them with delete[]
    delete[] frameBuffer[0];
    delete[] frameBuffer[1];

LOGD("=== Main done!");

return 0;

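}

3.1.2 Notes on GM461-E1 Software Triggering

Use the software trigger commands below, in this order: set the trigger (acquisition) mode, select the trigger source, then send the software trigger command.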

```cpp
ASSERT_OK(TYEnumSetString(hDevice, "AcquisitionMode", "SingleFrame")); // trigger (acquisition) mode
ASSERT_OK(TYEnumSetString(hDevice, "TriggerSource", "Software"));      // trigger source: software trigger
ASSERT_OK(TYCommandExec(hDevice, "TriggerSoftware"));                  // this command makes the camera capture a frame
```

3.1.3 TYSendSoftTrigger Does Not Take Effect

The approach provided in the earlier Sample_V1, shown below, does not take effect on the GM461-E1.

```cpp
// Trigger mode settings
bool hasTrigger;
ASSERT_OK(TYHasFeature(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM, &hasTrigger));
if (hasTrigger)
{
    TY_TRIGGER_PARAM trigger;
    //trigger.mode = TY_TRIGGER_MODE_OFF; // continuous capture mode
    LOGD("=== enable trigger mode");
    trigger.mode = TY_TRIGGER_MODE_SLAVE; // software and hardware trigger mode
    ASSERT_OK(TYSetStruct(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_TRIGGER_PARAM, &trigger, sizeof(trigger)));

    //bool hasDI0_WORKMODE;
    //ASSERT_OK(TYHasFeature(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_DI0_WORKMODE, &hasDI0_WORKMODE));
    //if (hasDI0_WORKMODE)
    //{
    //    // Debounce for hardware trigger mode
    //    TY_DI_WORKMODE di_wm;
    //    di_wm.mode = TY_DI_PE_INT;
    //    di_wm.int_act = TY_DI_INT_TRIG_CAP;
    //    uint32_t time_hw = 10;  // in ms; hardware filter: level signals shorter than this are ignored
    //    uint32_t time_sw = 200; // in ms; software filter: with rapid repeated triggers, a trigger arriving sooner than this after the previous one is ignored
    //    di_wm.reserved[0] = time_hw | (time_sw << 16);
    //    ASSERT_OK(TYSetStruct(hDevice, TY_COMPONENT_DEVICE, TY_STRUCT_DI0_WORKMODE, &di_wm, sizeof(di_wm)));
    //}
}
```

Software trigger command supported by older cameras (excluding the GM46X and PMD series):

```cpp
auto timeTrigger = std::chrono::steady_clock::now();
// Send one software trigger
while (TY_STATUS_BUSY == TYSendSoftTrigger(hDevice));
// Fetch a frame; the timeout here is 3 s (3000 ms)
int err = TYFetchFrame(hDevice, &frame, 3000);
auto timeGetFrame = std::chrono::steady_clock::now();
auto duration = std::chrono::duration_cast<std::chrono::microseconds>(timeGetFrame - timeTrigger);
// Pass the tick count, not the duration object, to the printf-style logger
LOGI("*******FetchFrame spend Time : %lld us", (long long)duration.count());
```

3.2 ROS1 (recommended)

GM461-E1相机,只能搭配4.X.X版本的SDK使用,如果相机固件是106版本之后的,需要配合使用最新版本的SDK(4.1.9版本及以上)、最新版本的Viewer。

具体操作和Sample和案例,可打开如下链接:

图漾相机-ROS1_SDK_ubuntu 4.X.X版本编译

3.3 ROS2版本(推荐)

GM461-E1相机,只能搭配4.X.X版本的SDK使用,如果相机固件是106版本之后的,需要配合使用最新版本的SDK(4.1.9版本及以上)、最新版本的Viewer。

具体操作和Sample和案例,可打开如下链接:

图漾相机-ROS2-SDK-Ubuntu 4.X.X版本编译

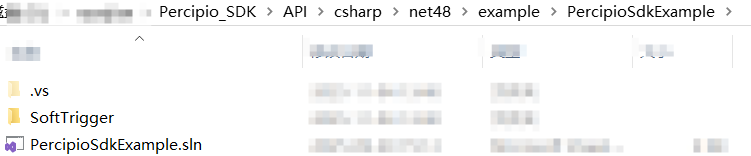

3.4 C#语言SDK

新的看图软件,自带的C#例程比较简陋,暂时不推荐使用。

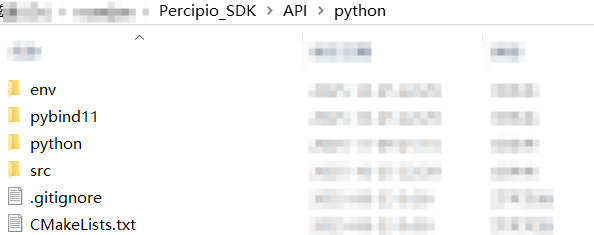

3.5 Python语言SDK

新的看图软件,自带的Python例程比较简陋,暂时不推荐使用。

4.GM461-E1相机常见FAQ

4.1 如何获取GM461-E1相机内参?

4.1.1 方法一

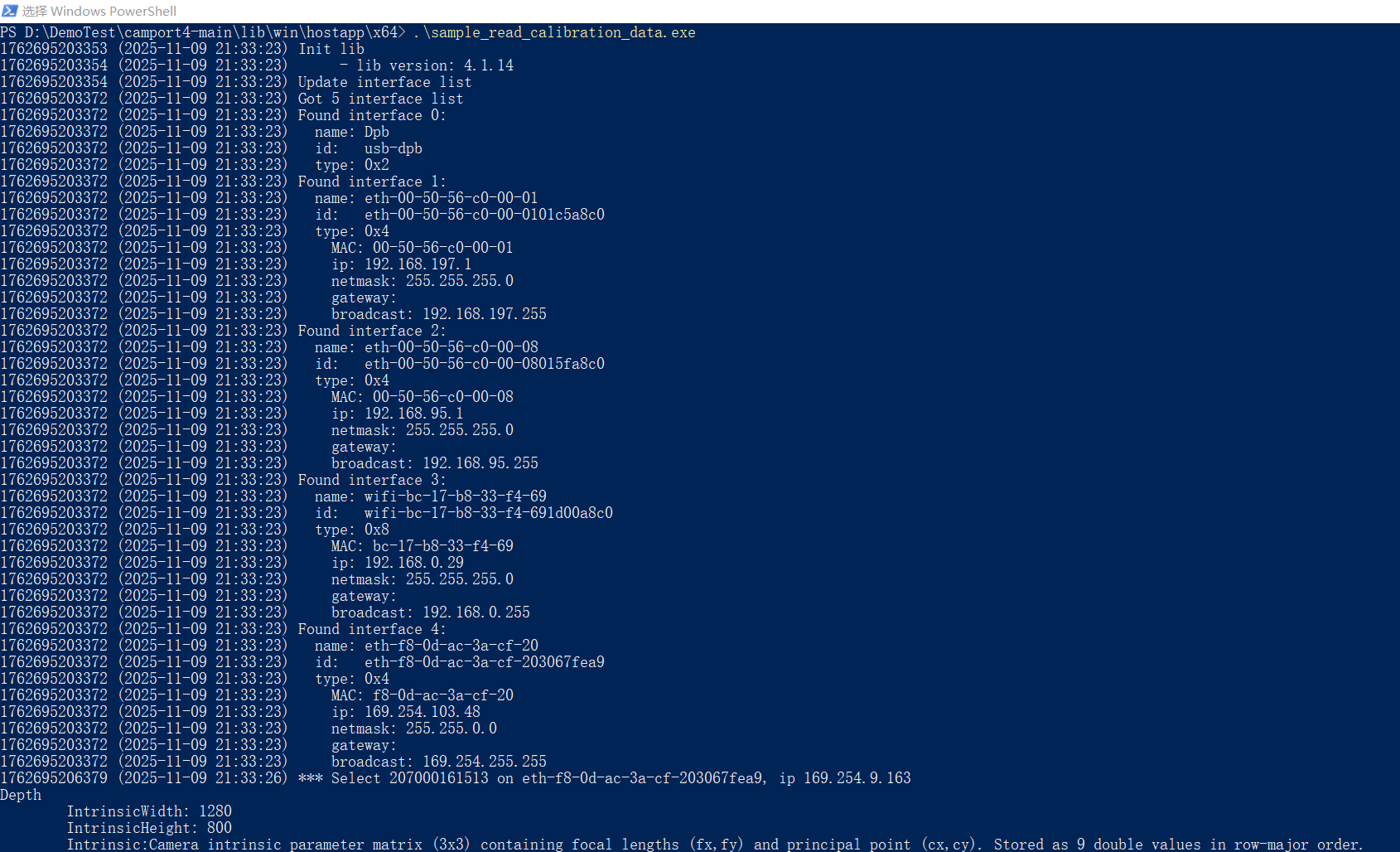

编译C++语言中的sample_read_calibration_data例子,之后运行,会打印GM461相机的内参。

4.1.2 方法二

使用本贴子中的x64 压缩包,通过鼠标右键+shift键 ,进入到PowerShell界面,运行sample_read_calibration_data例子,详细步骤如下:

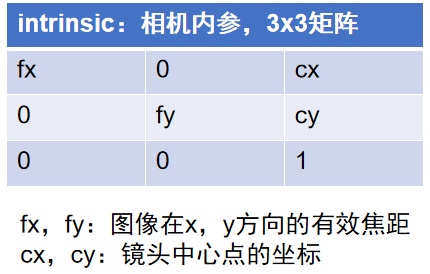

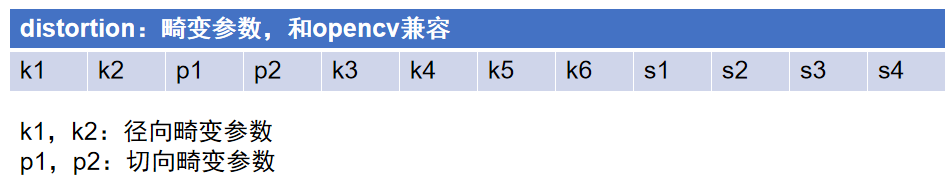

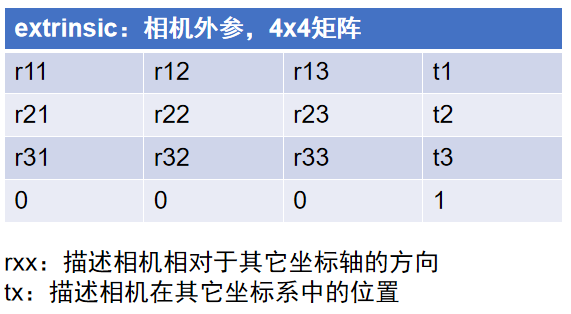

4.2 GM461-E1相机内参说明

运行sample_read_calibration_data例子,打印出来的内参如下:

cpp

Depth

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

No rectifyed intrinsic data.

No rotation data.

No distortion data.

No extrinsic data.

Texture

IntrinsicWidth: 1280

IntrinsicHeight: 960

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

803.841137 0.000000 629.107503

0.000000 803.935828 508.946834

0.000000 0.000000 1.000000

No rectifyed intrinsic data.

No rotation data.

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

0.231750 0.729891 -0.003311 0.002787 0.887200 0.258638 0.633182 0.999489 -0.003283 0.000037 0.002828 0.000965

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

0.999979 0.002164 0.006161 10.500958

-0.002104 0.999951 -0.009630 0.064701

-0.006182 0.009617 0.999935 -0.374639

0.000000 0.000000 0.000000 1.000000

Left

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

846.596971 0.000000 630.892295

0.000000 846.503802 397.161103

0.000000 0.000000 1.000000

Rectifyed intrinsic:Rectified camera intrinsic parameter matrix (3x3) after distortion correction. Contains modified focal lengths and principal point. Stored as 9 double values.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

Rotation:Camera rotation matrix (3x3) representing orientation in 3D space. Orthonormal matrix with determinant +1. Stored as 9 double values in row-major order.

0.999997 -0.002445 -0.000869

0.002448 0.999990 0.003720

0.000860 -0.003722 0.999993

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

-0.065113 0.009064 -0.000904 -0.000084 0.598195 -0.126516 0.127444 0.540535 -0.000395 0.000427 -0.000143 0.000617

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

1.000000 0.000000 0.000000 0.000000

0.000000 1.000000 0.000000 0.000000

0.000000 0.000000 1.000000 0.000000

0.000000 0.000000 0.000000 1.000000

Right

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

845.633045 0.000000 631.451000

0.000000 845.635807 395.112098

0.000000 0.000000 1.000000

Rectifyed intrinsic:Rectified camera intrinsic parameter matrix (3x3) after distortion correction. Contains modified focal lengths and principal point. Stored as 9 double values.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

Rotation:Camera rotation matrix (3x3) representing orientation in 3D space. Orthonormal matrix with determinant +1. Stored as 9 double values in row-major order.

0.999985 -0.005487 -0.000876

0.005484 0.999978 -0.003723

0.000896 0.003718 0.999993

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

-0.060512 0.028435 -0.001911 -0.000879 0.599802 -0.119581 0.137869 0.548687 0.000119 0.000392 0.002222 0.000146

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

0.999995 0.003036 0.000048 -65.960244

-0.003036 0.999968 0.007443 0.361959

-0.000025 -0.007443 0.999972 0.057769

0.000000 0.000000 0.000000 1.000000

4.2.1 Depth Map Intrinsics

cpp

Depth

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

No rectifyed intrinsic data.

No rotation data.

No distortion data.

No extrinsic data.

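1. cx and cy are usually about half of the image width W and height H (here cx ≈ 631.8 and cy ≈ 395.6), which is consistent with a factory calibration resolution of 1280x800 for the GM461 depth map.

2. Because the depth camera is a virtual camera, its distortion parameters and extrinsics are all zero matrices (the dump above reports no data for them).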
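The depth intrinsics above are enough to turn a depth pixel into a 3D point with the pinhole model. The following is a minimal sketch, not SDK code: the struct and function names are hypothetical, and it assumes that intrinsics scale proportionally when the depth map is output at a lower resolution than the 1280x800 calibration resolution (for example 640x400).

```cpp
#include <cassert>
#include <cmath>

// Pinhole intrinsics together with the resolution they were calibrated at.
struct Intrinsic {
    double fx, fy, cx, cy;
    int width, height;
};

struct Point3 {
    double x, y, z;
};

// Depth intrinsics copied from the dump above (calibrated at 1280x800).
const Intrinsic kDepthCalib = {849.295362, 849.295362, 631.821602, 395.606600, 1280, 800};

// Assumption: fx, fy, cx, cy scale proportionally with the image size.
Intrinsic scaleIntrinsic(const Intrinsic& k, int width, int height) {
    assert(width > 0 && height > 0);
    const double sx = static_cast<double>(width) / k.width;
    const double sy = static_cast<double>(height) / k.height;
    return {k.fx * sx, k.fy * sy, k.cx * sx, k.cy * sy, width, height};
}

// Back-project pixel (u, v) with depth z into the depth camera coordinate system.
// The result uses the same unit as z. No undistortion step is applied because
// the depth map has no distortion data.
Point3 backProject(const Intrinsic& k, double u, double v, double z) {
    return {(u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z};
}
```

For a 640x400 depth map, `scaleIntrinsic(kDepthCalib, 640, 400)` would be called once and the scaled intrinsics passed to `backProject` for every valid pixel.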

4.2.2 Color Image Intrinsics / Distortion Coefficients / Extrinsics

cpp

Texture

IntrinsicWidth: 1280

IntrinsicHeight: 960

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

803.841137 0.000000 629.107503

0.000000 803.935828 508.946834

0.000000 0.000000 1.000000

No rectifyed intrinsic data.

No rotation data.

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

0.231750 0.729891 -0.003311 0.002787 0.887200 0.258638 0.633182 0.999489 -0.003283 0.000037 0.002828 0.000965

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

0.999979 0.002164 0.006161 10.500958

-0.002104 0.999951 -0.009630 0.064701

-0.006182 0.009617 0.999935 -0.374639

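0.000000 0.000000 0.000000 1.000000

1. cx and cy are usually about half of the image width W and height H (here cx ≈ 629.1 and cy ≈ 508.9), which is consistent with a factory calibration resolution of 1280x960 for the GM461 color image.

2. The distortion coefficients of the color camera are ordered as follows: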

cpp

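// Use TYGetEnum(hDevice, comp_id, TY_ENUM_LENS_OPTICAL_TYPE, &lens_type) to distinguish new cameras from old ones.

// TY_LENS_PINHOLE is the pinhole model; the RGB distortion coefficients are, in order, k1,k2,p1,p2,k3,k4,k5,k6,s1,s2,s3,s4

// TY_LENS_FISHEYE is the new fisheye calibration; the RGB distortion coefficients are, in order, k1,k2,k3,k4

3. The extrinsics of the color camera are as follows: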

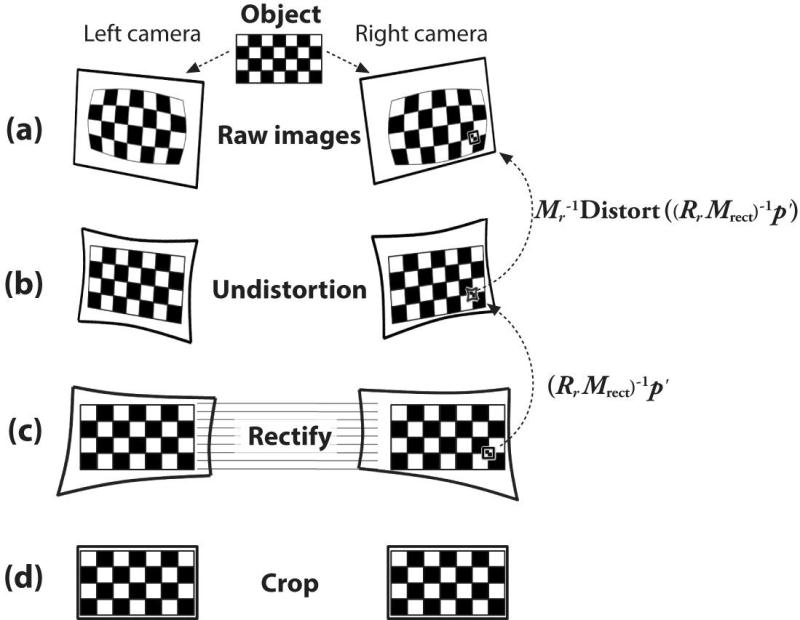

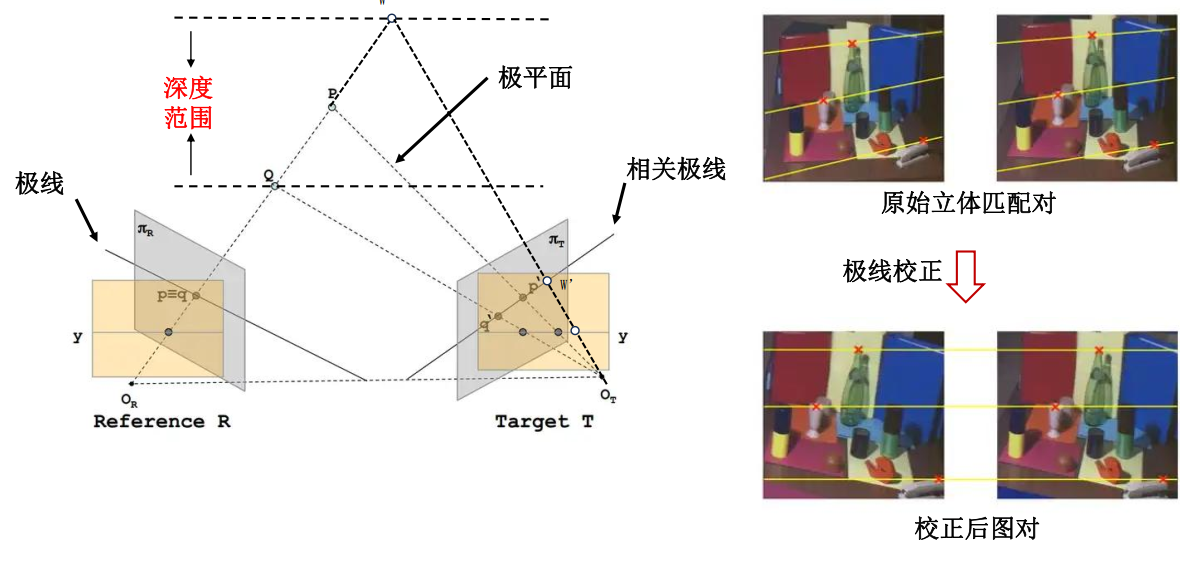
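To show how the color intrinsics, the 12 distortion coefficients, and the 4x4 extrinsic fit together, here is a hedged sketch that moves a 3D point between camera frames and projects it to a color pixel. Two assumptions are made that the text above does not confirm: the TY_LENS_PINHOLE coefficients follow the OpenCV rational plus thin-prism model (which uses the same k1,k2,p1,p2,k3,k4,k5,k6,s1,s2,s3,s4 order), and the extrinsic is a rigid transform in row-major order. Which direction the extrinsic maps (depth to color, or color to depth) should be checked against the SDK, so the sketch provides both the transform and its inverse. All type and function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Pixel { double u, v; };
using Mat4 = std::array<double, 16>;    // row-major 4x4, as printed in the dump
using Dist12 = std::array<double, 12>;  // k1,k2,p1,p2,k3,k4,k5,k6,s1,s2,s3,s4

// Apply a rigid transform [R|t] stored as a row-major 4x4 matrix.
Vec3 applyRigid(const Mat4& m, const Vec3& p) {
    return {m[0] * p.x + m[1] * p.y + m[2] * p.z + m[3],
            m[4] * p.x + m[5] * p.y + m[6] * p.z + m[7],
            m[8] * p.x + m[9] * p.y + m[10] * p.z + m[11]};
}

// Invert a rigid transform: R' = R^T, t' = -R^T * t.
Mat4 invertRigid(const Mat4& m) {
    Mat4 r = {m[0], m[4], m[8],  0.0,
              m[1], m[5], m[9],  0.0,
              m[2], m[6], m[10], 0.0,
              0.0,  0.0,  0.0,   1.0};
    r[3]  = -(r[0] * m[3] + r[1] * m[7] + r[2] * m[11]);
    r[7]  = -(r[4] * m[3] + r[5] * m[7] + r[6] * m[11]);
    r[11] = -(r[8] * m[3] + r[9] * m[7] + r[10] * m[11]);
    return r;
}

// Project a point given in the color camera frame to a distorted pixel.
// Assumed model: OpenCV rational radial + tangential + thin-prism distortion.
Pixel projectPinhole(double fx, double fy, double cx, double cy,
                     const Dist12& d, const Vec3& p) {
    assert(p.z > 0.0);
    const double x = p.x / p.z, y = p.y / p.z;
    const double r2 = x * x + y * y, r4 = r2 * r2, r6 = r4 * r2;
    const double radial = (1.0 + d[0] * r2 + d[1] * r4 + d[4] * r6) /
                          (1.0 + d[5] * r2 + d[6] * r4 + d[7] * r6);
    const double xd = x * radial + 2.0 * d[2] * x * y + d[3] * (r2 + 2.0 * x * x)
                    + d[8] * r2 + d[9] * r4;
    const double yd = y * radial + d[2] * (r2 + 2.0 * y * y) + 2.0 * d[3] * x * y
                    + d[10] * r2 + d[11] * r4;
    return {fx * xd + cx, fy * yd + cy};
}
```

A point from the depth frame would first go through `applyRigid` with either the Texture extrinsic or its `invertRigid` result, depending on the direction the SDK defines, and then through `projectPinhole` with the Texture intrinsics and distortion values listed above. The TY_LENS_FISHEYE case uses a different model and is not covered by this sketch.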

4.2.3 Left/Right IR Intrinsics Before Epipolar Constraint / After Epipolar Rectification

Binocular stereo matching model
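For a rectified stereo pair, depth follows from disparity as Z = f · B / d, where f is the rectified focal length in pixels, B is the baseline, and d is the disparity in pixels. The sketch below illustrates this relation with hypothetical helper names. It assumes that the translation column of the Right extrinsic in the dump below is in millimetres and that its norm (about 65.96 mm) can be used as the baseline; the rectified focal length is the 849.295362 value shared by the Left and Right "Rectifyed intrinsic" matrices and by the depth intrinsics.

```cpp
#include <cassert>
#include <cmath>

// Rectified focal length in pixels at 1280x800, from the dumps in this section.
const double kRectifiedFocalPx = 849.295362;

// Baseline taken as the norm of the translation column of the Right extrinsic.
// Assumption: the translation values are in millimetres.
double baselineMm(double tx, double ty, double tz) {
    return std::sqrt(tx * tx + ty * ty + tz * tz);
}

// Depth from disparity for a rectified pair: Z = f * B / d.
// The result has the same unit as the baseline.
double depthFromDisparity(double focalPx, double baseline, double disparityPx) {
    assert(disparityPx > 0.0);
    return focalPx * baseline / disparityPx;
}
```

Calling `baselineMm(-65.960244, 0.361959, 0.057769)` and passing the result with `kRectifiedFocalPx` to `depthFromDisparity` gives the depth in millimetres for a given disparity, under the unit assumption stated above.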

cpp

Left

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

846.596971 0.000000 630.892295

0.000000 846.503802 397.161103

0.000000 0.000000 1.000000

Rectifyed intrinsic:Rectified camera intrinsic parameter matrix (3x3) after distortion correction. Contains modified focal lengths and principal point. Stored as 9 double values.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

Rotation:Camera rotation matrix (3x3) representing orientation in 3D space. Orthonormal matrix with determinant +1. Stored as 9 double values in row-major order.

0.999997 -0.002445 -0.000869

0.002448 0.999990 0.003720

0.000860 -0.003722 0.999993

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

-0.065113 0.009064 -0.000904 -0.000084 0.598195 -0.126516 0.127444 0.540535 -0.000395 0.000427 -0.000143 0.000617

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

1.000000 0.000000 0.000000 0.000000

0.000000 1.000000 0.000000 0.000000

0.000000 0.000000 1.000000 0.000000

0.000000 0.000000 0.000000 1.000000

cpp

Right

IntrinsicWidth: 1280

IntrinsicHeight: 800

Intrinsic:Camera intrinsic parameter matrix (3x3) containing focal lengths (fx,fy) and principal point (cx,cy). Stored as 9 double values in row-major order.

845.633045 0.000000 631.451000

0.000000 845.635807 395.112098

0.000000 0.000000 1.000000

Rectifyed intrinsic:Rectified camera intrinsic parameter matrix (3x3) after distortion correction. Contains modified focal lengths and principal point. Stored as 9 double values.

849.295362 0.000000 631.821602

0.000000 849.295362 395.606600

0.000000 0.000000 1.000000

Rotation:Camera rotation matrix (3x3) representing orientation in 3D space. Orthonormal matrix with determinant +1. Stored as 9 double values in row-major order.

0.999985 -0.005487 -0.000876

0.005484 0.999978 -0.003723

0.000896 0.003718 0.999993

Distortion:Camera distortion coefficients (12x1 vector) including radial (k1-k6), tangential (p1-p2), and prism (s1-s4) distortions. Stored as 12 double values.

-0.060512 0.028435 -0.001911 -0.000879 0.599802 -0.119581 0.137869 0.548687 0.000119 0.000392 0.002222 0.000146

Extrinsic:Camera extrinsic matrix (4x4) containing rotation and translation relative to world coordinate system. Stored as 16 double values in row-major order.

0.999995 0.003036 0.000048 -65.960244

-0.003036 0.999968 0.007443 0.361959

-0.000025 -0.007443 0.999972 0.057769

0.000000 0.000000 0.000000 1.000000

4.3 GM461-E1 Camera Optical Center Position

To be added.

5.GM461-E1 Camera Test Results

5.1 GM461-E1 Frame Rate Test

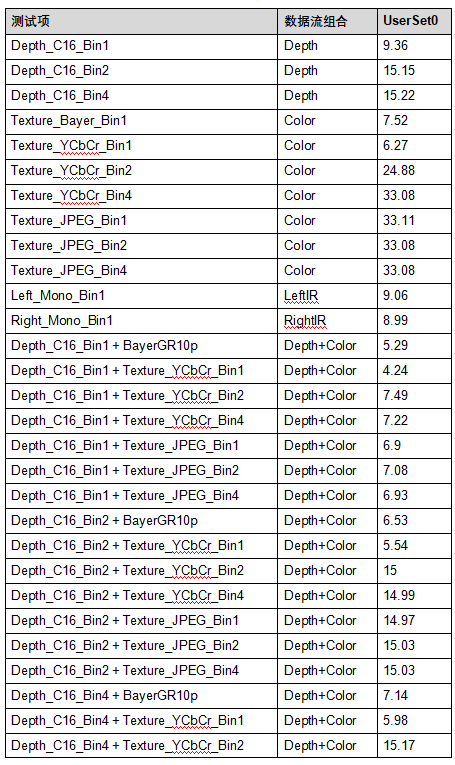

5.1.1 GM461-E1 Image Output Latency

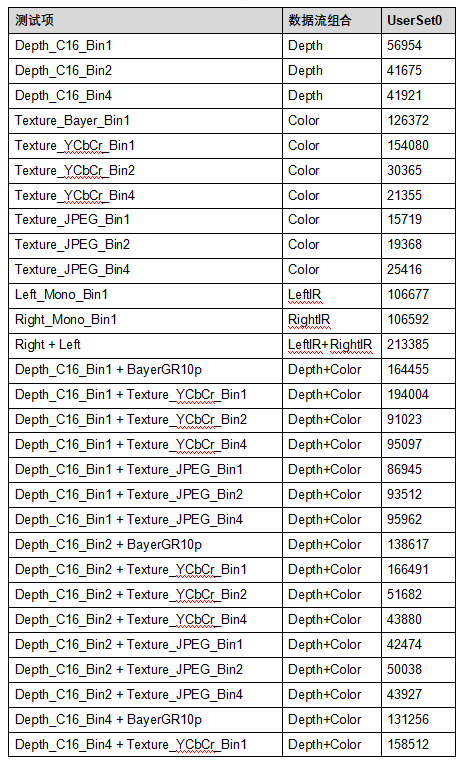

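Image output latency: with the camera using the default UserSet in continuous acquisition mode, the total time from the start of exposure until the system has fully processed the image, in microseconds.

Notes:

Depth: Bin1 - 1280x800, Bin2 - 640x400, Bin4 - 320x200.

Texture: Bin1 - 1280x960, Bin2 - 640x480, Bin4 - 320x240.

The GM461 camera image output latency test results are shown below:

GM461-E1 camera image output latency results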

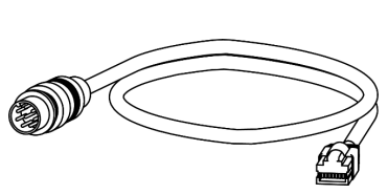

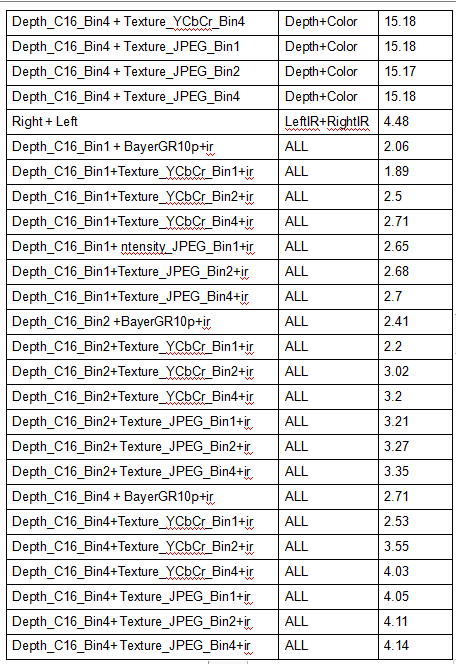

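5.1.2 GM461-E1 Frame Rate Test

FPS (frame rate): the number of image frames the host computer acquires per second with the camera set to free-run acquisition mode.

Notes:

Depth: Bin1 - 1280x800, Bin2 - 640x400, Bin4 - 320x200.

Texture: Bin1 - 1280x960, Bin2 - 640x480, Bin4 - 320x240.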
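To reproduce an FPS figure on the host side, one possible approach is to record the arrival time of each frame and divide the number of frame intervals by the elapsed time. The helper below is a minimal sketch with a hypothetical name; it assumes the timestamps are host-side arrival times in microseconds in ascending order, and it is not necessarily how the results in this section were measured.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Average frame rate over a run of host-side arrival timestamps (microseconds).
double averageFps(const std::vector<std::uint64_t>& arrivalUs) {
    assert(arrivalUs.size() >= 2);
    assert(arrivalUs.back() > arrivalUs.front());
    const double spanUs = static_cast<double>(arrivalUs.back() - arrivalUs.front());
    // N timestamps span N - 1 frame intervals.
    return static_cast<double>(arrivalUs.size() - 1) * 1e6 / spanUs;
}
```

Averaging over a few hundred frames rather than a handful reduces the influence of scheduling jitter on the result.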

The GM461 camera frame rate test results are shown below:

GM461-E1 camera frame rate results

6.Other Learning Resources

1. Camera specification sheets on the Percipio (图漾) official website