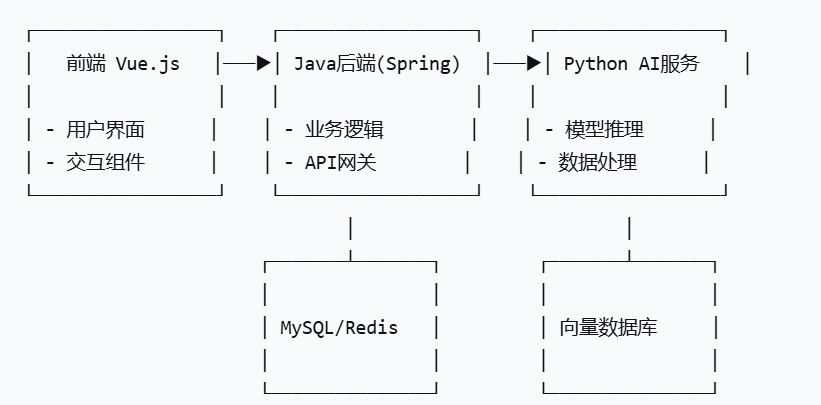

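Backend: Java is the main backend language and handles business logic, user authentication, data persistence, and similar concerns.

- Integrates calls to the Python service

- The Java backend invokes the Python service

LLM service: written in Python and exposed as an HTTP API through a framework such as Flask or FastAPI.

- Provides the API endpoints

Frontend: built with a framework such as Vue.js or React; this project uses Vue.js.

- Implements the user interface

Communication: the frontend talks to the Java backend over HTTP. Whenever the Java backend needs the large language model, it calls the Python service through an HTTP client.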
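
The two services talk only through HTTP and JSON, so the Java side can be tested against a stand-in for the Python service. The sketch below is a hypothetical stub that uses only the Python standard library. The names `StubAIHandler`, `start_stub` and `call_json` are assumptions for illustration, and so are the echo reply and the 4-element placeholder vectors. Only the paths (`/health`, `/chat`, `/embeddings`) and the snake_case JSON field names mirror the FastAPI service in this article.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Optional


class StubAIHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the Python LLM service: same paths and
    snake_case JSON fields, canned answers, no model loading."""

    def _send_json(self, status: int, payload: dict) -> None:
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_GET(self) -> None:
        if self.path == "/health":
            self._send_json(200, {"status": "healthy", "models_loaded": False})
        else:
            self._send_json(404, {"detail": "Not Found"})

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        if self.path == "/chat":
            # Echo the message back in the ChatResponse shape
            self._send_json(200, {
                "response": "echo: " + request.get("message", ""),
                "conversation_id": request.get("conversation_id") or "default",
                "tokens_used": 0,
                "processing_time": 0.0,
            })
        elif self.path == "/embeddings":
            # One placeholder vector per input text, in the EmbeddingResponse shape
            texts = request.get("texts", [])
            self._send_json(200, {"embeddings": [[0.0] * 4 for _ in texts], "model": "stub"})
        else:
            self._send_json(404, {"detail": "Not Found"})

    def log_message(self, format, *args) -> None:
        pass  # silence the default per-request logging


def start_stub(port: int = 0) -> ThreadingHTTPServer:
    """Serve the stub on a daemon thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), StubAIHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_json(url: str, payload: Optional[dict] = None) -> dict:
    """GET when payload is None, otherwise POST it as JSON, as AIServiceClient does."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())
```

Call `start_stub()`, then read the chosen port from `server.server_address[1]`. Pointing `ai.service.url` at that address lets the backend be exercised without loading any model. Call `server.shutdown()` when done.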

Project architecture

```text
User interface (Vue.js)
        |
        | HTTP (RESTful API)
        |
Java backend (Spring Boot)
        |
        | Internal HTTP call
        |
Python LLM service (Flask/FastAPI)
```

AI core code
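
The FastAPI handlers below are declared `async`, but `generate_response` and `get_embeddings` call blocking PyTorch and sentence-transformers code directly. While one request is generating, the event loop therefore cannot serve any other request, including `/health`. One common remedy is to push the blocking call onto a worker thread with `asyncio.to_thread` (Python 3.9+, matching the `python:3.9-slim` image used later). This is a minimal sketch. `fake_generate` is a hypothetical stand-in for `model.generate` that just sleeps and reverses the prompt.

```python
import asyncio
import time


def fake_generate(prompt: str, delay: float = 0.2) -> str:
    """Hypothetical stand-in for a blocking model.generate() call."""
    time.sleep(delay)  # blocks the calling thread, like real inference would
    return prompt[::-1]


async def generate_offloaded(prompt: str) -> str:
    # Run the blocking call in the default thread pool so the event loop stays free
    return await asyncio.to_thread(fake_generate, prompt)


async def run_concurrently(prompts):
    """Start several offloaded calls at once and time them together."""
    start = time.perf_counter()
    results = await asyncio.gather(*(generate_offloaded(p) for p in prompts))
    return results, time.perf_counter() - start
```

Inside `AIModelManager.generate_response`, the analogous change would be something like `outputs = await asyncio.to_thread(self.model.generate, inputs, ...)`. Another option is to declare the path functions with plain `def`, since FastAPI then runs them in its own thread pool. On a single GPU neither option necessarily raises generation throughput, but both keep lightweight endpoints responsive.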

```python
# ai-service/app/main.py
import asyncio
import logging
import time
from typing import List, Optional

import torch
from fastapi import FastAPI, HTTPException
from pydantic import BaseModel
from sentence_transformers import SentenceTransformer
from transformers import AutoModelForCausalLM, AutoTokenizer

# Configure logging
logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

app = FastAPI(title="AI Model Service", version="1.0.0")


class ChatRequest(BaseModel):
    message: str
    conversation_id: Optional[str] = None
    max_length: int = 512
    temperature: float = 0.7


class ChatResponse(BaseModel):
    response: str
    conversation_id: str
    tokens_used: int
    processing_time: float


class EmbeddingRequest(BaseModel):
    # Raw strings to embed (the Java client sends a List<String>)
    texts: List[str]
    # Optional: the Java client sends only "texts"; this service always uses all-MiniLM-L6-v2
    model: Optional[str] = None


class EmbeddingResponse(BaseModel):
    embeddings: List[List[float]]
    model: str


class AIModelManager:
    def __init__(self):
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
        self.tokenizer = None
        self.model = None
        self.embedding_model = None
        self.load_models()

    def load_models(self):
        """Load the AI models."""
        try:
            logger.info("Loading language model...")
            # A small example model; replace it with your own model in a real project
            model_name = "microsoft/DialoGPT-medium"
            self.tokenizer = AutoTokenizer.from_pretrained(model_name)
            self.model = AutoModelForCausalLM.from_pretrained(model_name)
            self.model.to(self.device)

            logger.info("Loading embedding model...")
            self.embedding_model = SentenceTransformer("all-MiniLM-L6-v2")
            logger.info("All models loaded successfully")
        except Exception as e:
            logger.error(f"Error loading models: {e}")
            raise

    async def generate_response(self, request: ChatRequest) -> ChatResponse:
        """Generate a chat reply (note: generate() blocks the event loop while it runs)."""
        start_time = time.time()
        try:
            # Encode the input, terminated by EOS as DialoGPT expects
            inputs = self.tokenizer.encode(
                request.message + self.tokenizer.eos_token, return_tensors="pt"
            ).to(self.device)

            # Generate the reply; max_length counts prompt + reply tokens
            with torch.no_grad():
                outputs = self.model.generate(
                    inputs,
                    max_length=request.max_length,
                    temperature=request.temperature,
                    pad_token_id=self.tokenizer.eos_token_id,
                    do_sample=True,
                )

            # Decode only the newly generated tokens, skipping the echoed prompt
            response = self.tokenizer.decode(
                outputs[:, inputs.shape[-1]:][0], skip_special_tokens=True
            )
            return ChatResponse(
                response=response,
                conversation_id=request.conversation_id or "default",
                tokens_used=len(outputs[0]),  # prompt + reply tokens
                processing_time=time.time() - start_time,
            )
        except Exception as e:
            logger.error(f"Error generating response: {e}")
            raise HTTPException(status_code=500, detail=str(e))

    async def get_embeddings(self, request: EmbeddingRequest) -> EmbeddingResponse:
        """Compute text embedding vectors."""
        try:
            embeddings = self.embedding_model.encode(request.texts)
            return EmbeddingResponse(
                embeddings=embeddings.tolist(),
                model="all-MiniLM-L6-v2",
            )
        except Exception as e:
            logger.error(f"Error generating embeddings: {e}")
            raise HTTPException(status_code=500, detail=str(e))


# Global model manager; the models are loaded when this module is imported
model_manager = AIModelManager()


@app.on_event("startup")
async def startup_event():
    """Startup hook (the models themselves are loaded by model_manager above)."""
    await asyncio.sleep(1)  # give other services time to start


@app.post("/chat", response_model=ChatResponse)
async def chat_endpoint(request: ChatRequest):
    """Chat endpoint."""
    return await model_manager.generate_response(request)


@app.post("/embeddings", response_model=EmbeddingResponse)
async def embeddings_endpoint(request: EmbeddingRequest):
    """Embedding endpoint."""
    return await model_manager.get_embeddings(request)


@app.get("/health")
async def health_check():
    """Health check."""
    return {"status": "healthy", "models_loaded": model_manager.model is not None}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8000)
```

AI service dependencies

```text
# ai-service/requirements.txt
fastapi==0.104.1
uvicorn==0.24.0
torch==2.1.0
transformers==4.35.0
sentence-transformers==2.2.2
numpy==1.24.3
pydantic==2.4.2
python-multipart==0.0.6
```

AI service Dockerfile

```dockerfile
# ai-service/Dockerfile
FROM python:3.9-slim

WORKDIR /app

# Install system dependencies
RUN apt-get update && apt-get install -y \
    gcc \
    g++ \
    && rm -rf /var/lib/apt/lists/*

# Copy the dependency list first so the pip layer can be cached
COPY requirements.txt .

# Install Python dependencies
RUN pip install --no-cache-dir -r requirements.txt

# Copy the application code
COPY . .

# Expose the service port
EXPOSE 8000

# Start command
CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8000"]
```

Java backend implementation

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- backend/pom.xml -->
<project xmlns="http://maven.apache.org/POM/4.0.0">
    <modelVersion>4.0.0</modelVersion>

    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.1.5</version>
    </parent>

    <groupId>com.example</groupId>
    <artifactId>ai-backend</artifactId>
    <version>1.0.0</version>

    <properties>
        <java.version>17</java.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>

        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-jpa</artifactId>
        </dependency>

        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-validation</artifactId>
        </dependency>

        <!-- MySQL JDBC driver (mysql:mysql-connector-java has been relocated to these coordinates) -->
        <dependency>
            <groupId>com.mysql</groupId>
            <artifactId>mysql-connector-j</artifactId>
            <version>8.0.33</version>
        </dependency>

        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-redis</artifactId>
        </dependency>

        <dependency>
            <groupId>org.springdoc</groupId>
            <artifactId>springdoc-openapi-starter-webmvc-ui</artifactId>
            <version>2.2.0</version>
        </dependency>

        <!-- Lombok, used by the @Data model classes; version managed by the Spring Boot parent -->
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <optional>true</optional>
        </dependency>
    </dependencies>
</project>
```

```yaml
# backend/src/main/resources/application.yml
server:
  port: 8080
  servlet:
    context-path: /

spring:
  datasource:
    url: jdbc:mysql://mysql:3306/ai_db?useSSL=false&serverTimezone=UTC
    username: root
    password: password
    driver-class-name: com.mysql.cj.jdbc.Driver
  jpa:
    hibernate:
      ddl-auto: update
    show-sql: true
    properties:
      hibernate:
        dialect: org.hibernate.dialect.MySQLDialect
  # Spring Boot 3.x reads Redis settings from spring.data.redis (formerly spring.redis)
  data:
    redis:
      host: redis
      port: 6379
      timeout: 2000

ai:
  service:
    url: http://ai-service:8000

logging:
  level:
    com.example.ai: DEBUG
```

AI service client
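
`healthCheck()` below reports the Python service as down whenever the call throws. This section does not show how the `RestTemplate` is configured, and a probe without a timeout can block for a long time on an unresponsive host. The sketch below is the same probe written in Python, for example for a script or container health check, with an explicit timeout added. The function name `is_healthy` and the 2-second default are assumptions, not part of the original project.

```python
import http.client
import urllib.request


def is_healthy(base_url: str, timeout: float = 2.0) -> bool:
    """Return True only if GET {base_url}/health answers 2xx within `timeout` seconds."""
    try:
        with urllib.request.urlopen(base_url + "/health", timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException, ValueError):
        # OSError covers URLError/HTTPError (refused, DNS, non-2xx) and socket timeouts;
        # HTTPException covers malformed responses; ValueError covers a malformed URL
        return False
```

Like the Java version, it collapses every failure into `False`. If the cause matters for monitoring, it should be logged before returning.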

```java
// backend/src/main/java/com/example/ai/service/AIServiceClient.java
package com.example.ai.service;

import com.example.ai.model.ChatRequest;
import com.example.ai.model.ChatResponse;
import com.example.ai.model.EmbeddingRequest;
import com.example.ai.model.EmbeddingResponse;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.http.HttpEntity;
import org.springframework.http.HttpHeaders;
import org.springframework.http.MediaType;
import org.springframework.http.ResponseEntity;
import org.springframework.stereotype.Service;
import org.springframework.web.client.RestTemplate;

import java.util.List;

@Service
public class AIServiceClient {

    private final RestTemplate restTemplate;

    // Base URL of the Python service; defaults to the Docker service name
    @Value("${ai.service.url:http://ai-service:8000}")
    private String aiServiceUrl;

    // Requires a RestTemplate bean: Spring Boot auto-configures only a RestTemplateBuilder
    public AIServiceClient(RestTemplate restTemplate) {
        this.restTemplate = restTemplate;
    }

    // POST /chat
    public ChatResponse chat(ChatRequest request) {
        String url = aiServiceUrl + "/chat";

        HttpHeaders headers = new HttpHeaders();
        headers.setContentType(MediaType.APPLICATION_JSON);
        HttpEntity<ChatRequest> entity = new HttpEntity<>(request, headers);

        ResponseEntity<ChatResponse> response = restTemplate.postForEntity(
                url, entity, ChatResponse.class);
        return response.getBody();
    }

    // POST /embeddings
    public EmbeddingResponse getEmbeddings(List<String> texts) {
        String url = aiServiceUrl + "/embeddings";

        HttpHeaders headers = new HttpHeaders();
        headers.setContentType(MediaType.APPLICATION_JSON);
        EmbeddingRequest request = new EmbeddingRequest(texts);
        HttpEntity<EmbeddingRequest> entity = new HttpEntity<>(request, headers);

        ResponseEntity<EmbeddingResponse> response = restTemplate.postForEntity(
                url, entity, EmbeddingResponse.class);
        return response.getBody();
    }

    // GET /health; any failure (connection error, timeout, non-2xx status) counts as unhealthy
    public boolean healthCheck() {
        try {
            String url = aiServiceUrl + "/health";
            ResponseEntity<String> response = restTemplate.getForEntity(url, String.class);
            return response.getStatusCode().is2xxSuccessful();
        } catch (Exception e) {
            return false;
        }
    }
}
```

Data models
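
The Java models use camelCase field names (`conversationId`, `maxLength`, `tokensUsed`), and Jackson writes them that way unless a naming strategy is configured. The FastAPI models expect snake_case (`conversation_id`, `max_length`, `tokens_used`). Fields that differ only in case style are silently ignored on the Python side and left `null` on the Java side. The rename that bridges the two can be sketched in Python as follows. This is a simplified version of what Jackson's `SnakeCaseStrategy` does, and it does not try to reproduce Jackson's handling of acronyms. The helper names are hypothetical.

```python
import re

# Zero-width position between a lowercase letter/digit and an uppercase letter
_BOUNDARY = re.compile(r"(?<=[a-z0-9])(?=[A-Z])")


def camel_to_snake(name: str) -> str:
    """conversationId -> conversation_id (simple camelCase only)."""
    return _BOUNDARY.sub("_", name).lower()


def snake_to_camel(name: str) -> str:
    """processing_time -> processingTime."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def rename_keys(payload: dict, convert) -> dict:
    """Apply `convert` to the top-level keys of a JSON object (nested objects untouched)."""
    return {convert(key): value for key, value in payload.items()}
```

Applied to a `ChatRequest` payload, `rename_keys(payload, camel_to_snake)` produces the keys the FastAPI model reads. In the Java code the same effect comes from annotating the DTOs rather than converting maps by hand.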

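```java
// backend/src/main/java/com/example/ai/model/ChatRequest.java
package com.example.ai.model;

import com.fasterxml.jackson.databind.PropertyNamingStrategies;
import com.fasterxml.jackson.databind.annotation.JsonNaming;
import jakarta.validation.constraints.NotBlank;
import lombok.Data;

// Serialize as snake_case (conversation_id, max_length) to match the FastAPI ChatRequest;
// this also makes any controller that accepts this class expect snake_case JSON
@Data
@JsonNaming(PropertyNamingStrategies.SnakeCaseStrategy.class)
public class ChatRequest {

    @NotBlank(message = "Message must not be blank")
    private String message;

    private String conversationId;

    private Integer maxLength = 512;

    private Double temperature = 0.7;
}

// backend/src/main/java/com/example/ai/model/ChatResponse.java
package com.example.ai.model;

import com.fasterxml.jackson.databind.PropertyNamingStrategies;
import com.fasterxml.jackson.databind.annotation.JsonNaming;
import lombok.Data;

import java.time.LocalDateTime;

// Read the snake_case fields (conversation_id, tokens_used, processing_time) sent by FastAPI
@Data
@JsonNaming(PropertyNamingStrategies.SnakeCaseStrategy.class)
public class ChatResponse {

    private String response;

    private String conversationId;

    private Integer tokensUsed;

    private Double processingTime;

    // Not sent by the Python service; left for the backend to fill in
    private LocalDateTime timestamp;
}

// backend/src/main/java/com/example/ai/model/EmbeddingRequest.java
package com.example.ai.model;

import lombok.Data;

import java.util.List;

@Data
public class EmbeddingRequest {

    private List<String> texts;

    public EmbeddingRequest(List<String> texts) {
        this.texts = texts;
    }
}

// backend/src/main/java/com/example/ai/model/EmbeddingResponse.java
package com.example.ai.model;

import lombok.Data;

import java.util.List;

@Data
public class EmbeddingResponse {

    private List<List<Double>> embeddings;

    private String model;
}
```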