Title

Multi-contrast low-field MRI acceleration with k-space progressive learning and image-space hybrid attention fusion

01

Introduction

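Magnetic resonance imaging (MRI) is a non-invasive, standard method for medical examination and diagnosis. In an MRI system, signals are acquired in k-space (i.e., Fourier space) and then reconstructed into images (Weishaupt et al., 2006). In recent years, low-field MRI systems (<1 T) have seen growing use because of their portability and their suitability for pediatric imaging and for image guidance during interventional procedures (Lyu et al., 2023b). However, low field strength reduces the signal-to-noise ratio (SNR), which limits the image quality of low-field systems. In addition, owing to the physics of MRI, scans can be time-consuming. To speed up scanning, k-space data are often acquired with under-sampling, which degrades image quality further (Tsao and Kozerke, 2012). Reconstructing high-quality MRI images from the under-sampled, noisy k-space data of low-field systems is therefore important.

In clinical practice, multi-contrast MRI images carrying complementary structural information are usually acquired together for diagnosis and treatment planning. For example, T1-weighted images (T1WI) provide detailed morphological and structural information, whereas T2-weighted images (T2WI) highlight edema and inflammation. Because of the physics of the imaging system, T2WI is harder to acquire than T1WI: it requires longer repetition and echo times (Xiang et al., 2018). Recent studies show that using an easily acquired modality (e.g., T1WI) as guidance to accelerate and enhance the reconstruction of a target modality (e.g., T2WI) is a promising approach, particularly for modalities with long scan times (Feng et al., 2021).

Deep learning has been widely applied to multi-contrast MRI reconstruction in recent years (Xiang et al., 2018; Feng et al., 2021, 2022; Han et al., 2019; Ding and Zhang, 2022; Huang et al., 2023b; Pan et al., 2023; Zhou and Zhou, 2020; Lyu et al., 2022; Liu et al., 2022). These methods fall broadly into three categories: image-domain reconstruction, k-space reconstruction, and dual-domain reconstruction.

Image-domain methods first convert the corrupted k-space data into low-quality images and then denoise them in the image domain (Xiang et al., 2018; Feng et al., 2021, 2022). Under-sampling, however, violates the Nyquist-Shannon sampling theorem, so aliasing artifacts and loss of image detail are introduced before any image-domain regularizer can act.

The second category works in k-space (Han et al., 2019; Ding and Zhang, 2022; Huang et al., 2023b; Pan et al., 2023): high-quality k-space signals are recovered by interpolation and denoising in the Fourier domain, and the refined k-space signals are then reconstructed into images. The frequency-domain characteristics of k-space data are complex, however, which makes the data hard to interpret and manipulate, and the data are highly sensitive to noise and artifacts. Small errors in k-space can cause widespread artifacts in the reconstructed image, so accurate denoising and error correction are essential (Singh et al., 2023).

Dual-domain methods (Zhou and Zhou, 2020; Lyu et al., 2022; Liu et al., 2022) first interpolate and denoise in the Fourier domain to recover high-quality k-space data, then transform the result into the image domain for further denoising and refinement. By using information from both domains, these methods improve image quality, reduce artifacts, and achieve more accurate MRI reconstruction.

Although existing methods show promise for multi-contrast MRI acceleration, the low-field setting, which requires de-aliasing and denoising at the same time, has not been fully explored. Most existing methods (Zhou and Zhou, 2020; Lyu et al., 2022; Liu et al., 2022) use data consistency layers to force the model's prediction to agree with the acquired k-space data and thereby improve reconstruction accuracy. In low-field systems the acquired signal has a lower SNR, so applying a data consistency constraint directly poses additional challenges. Using data consistency layers effectively in this setting requires extra constraints or regularization terms to suppress noise and improve signal fidelity, which complicates model design. Moreover, existing methods usually apply the same network structure to k-space and image space (for example, convolutional networks in DuDoRNet (Zhou and Zhou, 2020) and Transformers in DuDoCAF (Lyu et al., 2022)); they neither account for domain-specific characteristics nor tailor the model to each domain. A method that adapts to domain-specific characteristics and extracts features effectively from both k-space and the image domain is therefore needed for high-quality reconstruction at low field strength.

In k-space learning, low-frequency (LF) components correspond to the overall appearance and basic structure of the image, while high-frequency (HF) components determine its sharpness and fine detail. Accurately recovering both is key to reconstructing high-quality images. The magnitudes of the two are, however, markedly imbalanced: LF components are far larger than HF components (Pan et al., 2023). During training, the high-magnitude LF components therefore dominate and overshadow the recovery of HF components, leading to poor reconstruction. In addition, low-magnitude HF signals are more susceptible to low-field noise than LF components, have a lower SNR, and are harder to recover. LF and HF components should therefore not be treated equally; focusing on HF recovery is essential to improving the k-space learning network.

For image-domain denoising, complementary features exist across modalities and across image regions, so fully exploiting the complementary information in multi-contrast images is particularly important. Existing studies show that Transformers (Feng et al., 2022; Lyu et al., 2023a) integrate long-range correlated features better than convolutional networks (Feng et al., 2021; Li et al., 2023) and improve reconstruction performance. Computing self-attention over the whole feature map, however, incurs quadratic computational complexity. To mitigate this, some methods compute feature correlations within local windows (Liu et al., 2021; Liang et al., 2021), at the cost of the ability to capture long-range dependencies. In the low-field setting, computing correlations between noisy features becomes harder still, so a network that is computationally efficient and captures long-range dependencies comprehensively and accurately is needed.

To address the specific challenges of low-field multi-contrast MRI acceleration, this paper proposes a dual-domain learning framework consisting of a k-space Low-to-High Frequency Progressive (LHFP) learning network and an image-space Hybrid Attention Fusion Network (HAFNet). The k-space LHFP network has two stages: the first recovers LF components by giving all frequency components equal weight; the second emphasizes HF learning by reducing the loss weight of the LF components. A mask predictor module adaptively estimates the image-specific low-high frequency boundary and is optimized jointly with the k-space learning network. The refined k-space data output by the LHFP network are reconstructed into images and fed to the image-domain HAFNet for further denoising. HAFNet extracts multi-scale features from each modality with convolutional blocks and then uses shallow-level and deep-level Hybrid Window-based Attention Fusion (HWAF) modules to integrate multi-contrast features efficiently at the corresponding levels. By alternating self-attention within dense windows and dilated windows, HAFNet captures long-range dependencies efficiently and comprehensively. To reduce complexity and stabilize training, the framework uses a sequential pipeline rather than end-to-end joint training: the k-space network and the image-domain network are each trained once, separately. This design lets the k-space network refine the frequency components while the image-domain network enhances detail and suppresses artifacts, improving the final reconstruction quality.

The main contributions of this paper are as follows:

1. To address the insufficient recovery of HF components in k-space learning, a new LHFP learning network is proposed that recovers LF and HF components progressively and adaptively optimizes the low-high frequency boundary for each image.
2. HAFNet is proposed, which efficiently integrates long-range dependent features of multi-contrast images by alternating self-attention within dense windows and dilated windows.
3. Experiments on the BraTs dataset and the low-field M4Raw dataset show that the proposed method outperforms state-of-the-art multi-contrast MRI reconstruction methods.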
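The magnitude imbalance between low- and high-frequency k-space components described above can be checked numerically. The following is a minimal sketch on a synthetic disc phantom, not on MRI data; the function names, the phantom, and the 10% radius used to separate "low" from "high" frequencies are all illustrative assumptions rather than anything taken from the paper.

```python
import numpy as np

def low_high_mean_magnitude(image, radius_fraction=0.1):
    """Mean |k| inside and outside a centered disc in k-space.

    radius_fraction is an assumed cut-off, expressed as a fraction of the
    half-width of the k-space grid; it is not the paper's learned boundary.
    """
    h, w = image.shape
    # Centered 2D Fourier transform (DC component moved to the grid center).
    k = np.fft.fftshift(np.fft.fft2(image, norm="ortho"))
    yy, xx = np.mgrid[:h, :w]
    r = np.hypot(yy - h // 2, xx - w // 2)
    low = r <= radius_fraction * min(h, w) / 2
    mag = np.abs(k)
    return mag[low].mean(), mag[~low].mean()

def disc_phantom(size=64, radius=20):
    """Hypothetical test image: a bright disc on a dark background."""
    yy, xx = np.mgrid[:size, :size]
    return (np.hypot(yy - size // 2, xx - size // 2) < radius).astype(float)

if __name__ == "__main__":
    lf, hf = low_high_mean_magnitude(disc_phantom())
    print(f"mean |k| low: {lf:.3f}  high: {hf:.3f}  ratio: {lf / hf:.1f}")
```

With an unweighted loss over all k-space entries, the few large central coefficients contribute most of the error, which is the behavior the second LHFP stage is designed to counteract.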

Abstract

Multi-contrast MRI provides complementary tissue information for diagnosis and treatment planning but is limited by long acquisition time and system noise, both of which worsen at low field strength. To jointly accelerate and denoise multi-contrast MRI acquired at low field strength, we present a novel dual-domain framework designed to reconstruct high-quality multi-contrast MR images from k-space data corrupted by under-sampling and system noise. Our dual-domain framework first enhances k-space data quality through a k-space Low-to-High Frequency Progressive (LHFP) learning network, and then further refines the k-space outputs with an image-space Hybrid Attention Fusion Network (HAFNet). In k-space learning, the magnitude imbalance between the low- and high-frequency components may cause the network to be dominated by low-frequency components, leading to sub-optimal recovery of high-frequency components. To tackle this challenge, the two-stage LHFP learning network first recovers low-frequency components and then emphasizes high-frequency learning through patient-specific adaptive prediction of the low-high frequency boundary. In image domain learning, the challenge of efficiently capturing long-range dependencies across the multi-contrast images is resolved through Hybrid Window-based Attention Fusion (HWAF) modules, which integrate features by alternately computing self-attention within dense and dilated windows. Extensive experiments on the BraTs MRI and M4Raw low-field MRI datasets demonstrate the superiority of our method over state-of-the-art MRI reconstruction methods. Our source code will be made publicly available upon acceptance.

Method

3. Method

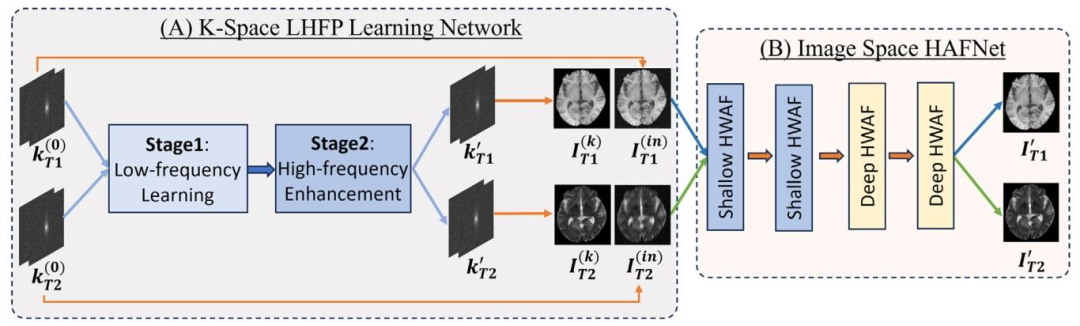

In MRI systems, high-quality T1-weighted (T1WI) and T2-weighted (T2WI) images, along with fully sampled k-space signals, can be represented as $I_{T1}, I_{T2} \in \mathbb{R}^{h \times w}$ and $k_{T1}, k_{T2} \in \mathbb{R}^{h \times w \times 2}$, where $h$ and $w$ denote the image height and width in pixels, and the k-space data comprise two channels (real and imaginary). Due to system noise and under-sampling, the k-space data are corrupted, denoted as $k^{(0)}_{T1} = k_{T1} + \epsilon$ and $k^{(0)}_{T2} = \mathbf{M} \odot (k_{T2} + \epsilon)$, where $\epsilon$ represents low-field noise and $\mathbf{M}$ is the under-sampling mask. The T1WI data are affected only by low-field noise, whereas the T2WI signal is both noisy and under-sampled for acceleration. In this work, we jointly reconstruct high-quality images $I'_{T1}$ and $I'_{T2}$ from the corrupted k-space signals $k^{(0)}_{T1}$ and $k^{(0)}_{T2}$ by sequentially performing k-space and image domain learning.

As illustrated in Fig. 1, the corrupted k-space data $k^{(0)}_{T1}$ and $k^{(0)}_{T2}$ are refined by a Low-to-High Frequency Progressive (LHFP) learning network to generate higher-quality $k'_{T1}$ and $k'_{T2}$. The LHFP network includes a low-frequency learning stage and a high-frequency enhancement stage. To further refine image details and exploit multi-modal complementary features, the refined k-space data $k'_{T1}$ and $k'_{T2}$ are reconstructed into images $I^{(k)}_{T1}$ and $I^{(k)}_{T2}$, and then jointly denoised by the image-space Hybrid Attention Fusion Network (HAFNet). Both the k-space LHFP network and the image-space HAFNet are supervised by high-quality MRI images ($I_{T1}$, $I_{T2}$) and k-space signals ($k_{T1}$, $k_{T2}$).
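The corruption model above, with T1WI k-space receiving additive noise only and T2WI k-space receiving noise followed by masking, can be simulated in a few lines. This is a minimal sketch under stated assumptions: the noise is taken to be zero-mean Gaussian with independent draws for the two contrasts, the mask is a hypothetical 1D Cartesian pattern with a fully sampled central band, and all function names and default values are illustrative. The text does not specify the noise distribution or the mask design.

```python
import numpy as np

def to_kspace(image):
    """Centered 2D FFT of a real image as an (h, w, 2) real/imaginary array."""
    k = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image), norm="ortho"))
    return np.stack([k.real, k.imag], axis=-1)

def cartesian_mask(h, w, accel=4, center_fraction=0.08, rng=None):
    """Assumed mask M: random columns at rate 1/accel plus a central band."""
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(w) < 1.0 / accel
    n_center = max(1, int(round(w * center_fraction)))
    start = w // 2 - n_center // 2          # band covers the DC column w // 2
    keep[start:start + n_center] = True
    # Shape (h, w, 1) so it broadcasts over the real/imaginary channels.
    return np.broadcast_to(keep[None, :, None], (h, w, 1)).astype(np.float32)

def corrupt(k_t1, k_t2, mask, noise_std, rng=None):
    """k0_T1 = k_T1 + eps ;  k0_T2 = M * (k_T2 + eps), eps ~ N(0, noise_std^2)."""
    rng = np.random.default_rng() if rng is None else rng
    k0_t1 = k_t1 + rng.normal(0.0, noise_std, k_t1.shape)
    k0_t2 = mask * (k_t2 + rng.normal(0.0, noise_std, k_t2.shape))
    return k0_t1, k0_t2

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t1, t2 = rng.random((64, 64)), rng.random((64, 64))   # stand-in images
    mask = cartesian_mask(64, 64, accel=4, rng=rng)
    k0_t1, k0_t2 = corrupt(to_kspace(t1), to_kspace(t2), mask, 0.05, rng=rng)
    print(k0_t1.shape, k0_t2.shape, float(mask.mean()))
```

Noise is added before masking, matching the order in the formula, so unsampled T2WI entries are exactly zero rather than noise-only.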

Conclusion

In this work, we address multi-contrast low-field MRI acceleration with a novel dual-domain framework comprising a k-space LHFP learning network and an image-space HAFNet. In k-space learning, our proposed LHFP network first recovers low-frequency components and then emphasizes high-frequency learning in the second stage. This progressive learning strategy effectively mitigates the challenge posed by the magnitude imbalance between low- and high-frequency components, leading to superior recovery outcomes. In image-space learning, our HAFNet integrates long-range dependent features from multi-contrast images through shallow-level and deep-level HWAF modules in their respective layers. Experimental results on the BraTs dataset (with simulated low-field noise) and the M4Raw dataset (acquired from low-field MRI systems) demonstrate significant performance gains over state-of-the-art methods, both qualitatively and quantitatively. These findings highlight the potential of our approach for clinical applications.

Figure

Fig. 1. Illustration of our proposed multi-contrast MRI reconstruction framework. The low-quality k-space data $k^{(0)}_{T1}$ and $k^{(0)}_{T2}$ are first refined using the (A) k-space Low-to-High Frequency Progressive (LHFP) learning network, resulting in $k'_{T1}$ and $k'_{T2}$. The refined k-space data are then transformed into images $I^{(k)}_{T1}$ and $I^{(k)}_{T2}$, while the original k-space data are converted into images $I^{(in)}_{T1}$ and $I^{(in)}_{T2}$. These multi-contrast images $I^{(k)}_{T1}$, $I^{(k)}_{T2}$, $I^{(in)}_{T1}$, and $I^{(in)}_{T2}$ are refined by the (B) image-space Hybrid Attention Fusion Network (HAFNet) to produce high-quality images $I'_{T1}$ and $I'_{T2}$.

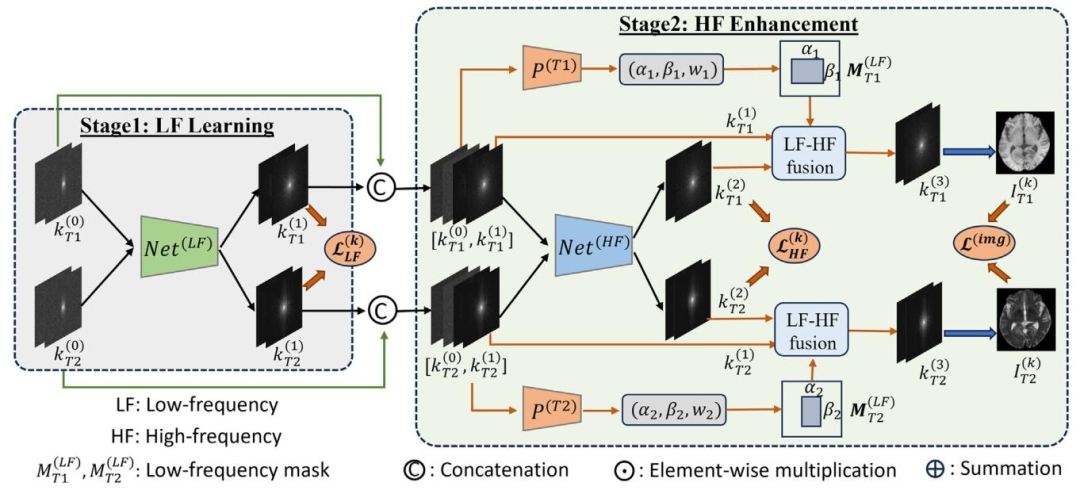

Fig. 2. Illustration of our proposed Low-to-High Frequency Progressive (LHFP) learning network, which consists of a Low-Frequency (LF) learning stage and a High-Frequency (HF) enhancement stage. In the LF learning stage, equal loss weights are applied to all frequency components. In the HF enhancement stage, the focus shifts to enhancing the learning of HF components, guided by the estimated low-high frequency boundaries $\mathbf{M}^{(LF)}_{T1}$ and $\mathbf{M}^{(LF)}_{T2}$. The refined k-space data $k^{(3)}_{T1}$ and $k^{(3)}_{T2}$ are reconstructed into MR images $I^{(k)}_{T1}$ and $I^{(k)}_{T2}$.
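The two-stage weighting described in this caption can be illustrated with a frequency-weighted k-space loss. This is a minimal sketch, assuming an L1 error, a fixed centered disc standing in for the learned low-frequency mask, and a low-frequency weight of 0.1 in the second stage; none of these specifics is given in the text, and in the paper the boundary is predicted per image by a mask predictor trained jointly with the network.

```python
import numpy as np

def centered_disc_mask(h, w, radius):
    """Hypothetical stand-in for the predicted low-frequency mask, shape (h, w, 1)."""
    yy, xx = np.mgrid[:h, :w]
    r = np.hypot(yy - h // 2, xx - w // 2)
    return (r <= radius).astype(np.float32)[..., None]

def weighted_kspace_l1(k_pred, k_true, lf_mask=None, lf_weight=1.0):
    """Mean absolute k-space error with low-frequency entries scaled by lf_weight.

    lf_mask=None or lf_weight=1.0 gives equal weights (LF learning stage);
    lf_weight < 1 down-weights the low-frequency region (HF enhancement stage).
    """
    err = np.abs(k_pred - k_true)
    if lf_mask is None:
        return float(err.mean())
    weights = np.where(lf_mask > 0.5, lf_weight, 1.0)
    return float((weights * err).mean())

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    k_true = rng.normal(size=(64, 64, 2))
    k_pred = k_true + rng.normal(scale=0.1, size=k_true.shape)
    mask = centered_disc_mask(64, 64, radius=8)
    stage1 = weighted_kspace_l1(k_pred, k_true)                    # equal weights
    stage2 = weighted_kspace_l1(k_pred, k_true, mask, lf_weight=0.1)
    print(f"stage 1 loss: {stage1:.4f}  stage 2 loss: {stage2:.4f}")
```

Because the weights only rescale per-entry errors, the second-stage gradient with respect to high-frequency entries is unchanged while the low-frequency contribution shrinks, which shifts the optimization toward the high-frequency region.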

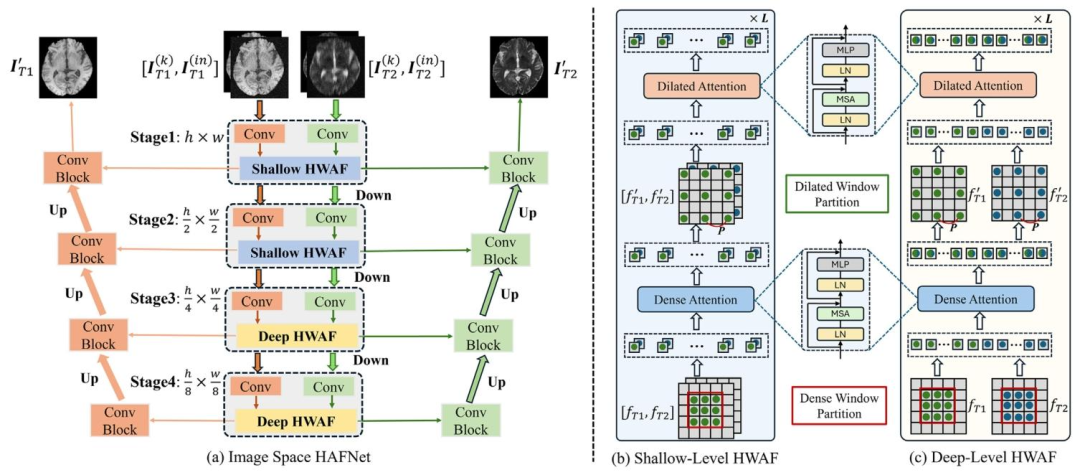

Fig. 3. Illustration of (a) the image-space HAFNet, which integrates multi-contrast features using (b) the shallow-level HWAF modules in the first two stages and (c) the deep-level HWAF modules in the last two stages.

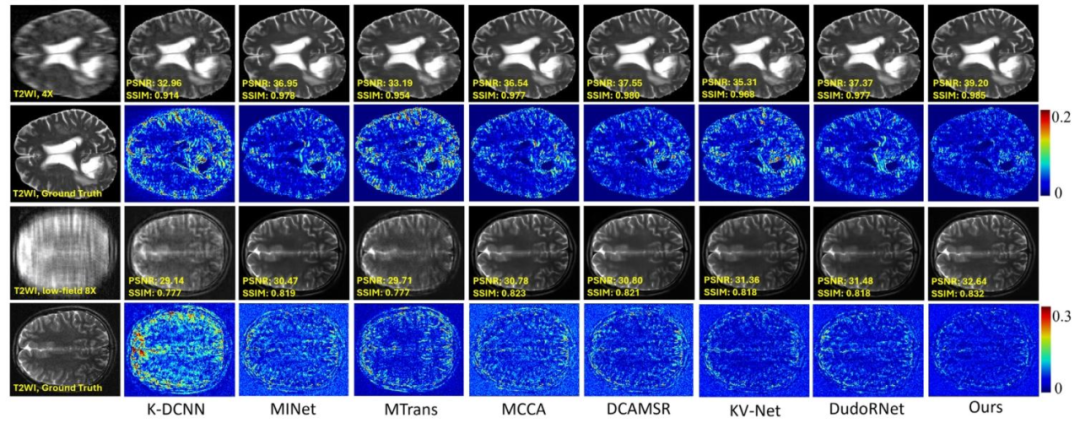

Fig. 4. Visualization of the images reconstructed with our method and state-of-the-art methods, including K-DCNN (Han et al., 2019), MINet (Feng et al., 2021), MTrans (Feng et al., 2022), MCCA (Li et al., 2023), DCAMSR (Huang et al., 2023a), KV-Net (Liu et al., 2022), and DuDoRNet (Zhou and Zhou, 2020). The first two rows show a T2WI image from the BraTs dataset reconstructed at 4× acceleration. The last two rows show a T2WI image from the low-field M4Raw dataset reconstructed at 8× acceleration.

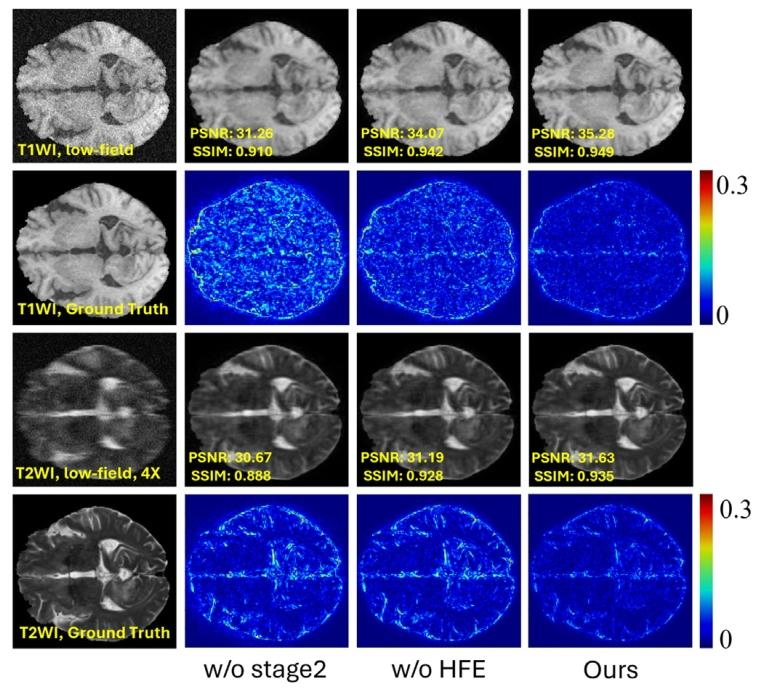

Fig. 5. Reconstructed images and error maps obtained from different versions of the k-space network, for an example of the joint reconstruction of low-field T1WI MRI and 4× accelerated low-field T2WI MRI in the BraTs dataset.

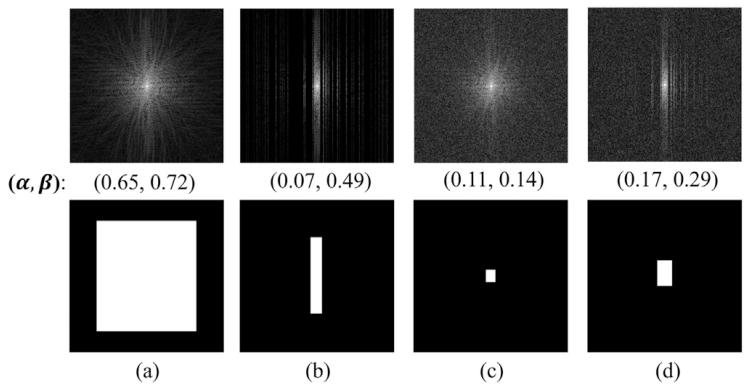

Fig. 6. Visualization of k-space data and predicted low-frequency masks for: (a) high-quality T1WI MRI, (b) under-sampled T2WI MRI, (c) low-field T1WI MRI, and (d) low-field under-sampled T2WI MRI.

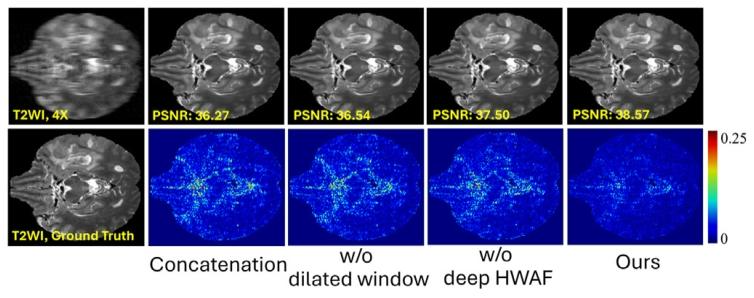

Fig. 7. Reconstructed images and error maps generated by different multi-modal fusion methods in the image domain network, for a 4× accelerated T2WI MRI in the BraTs dataset.

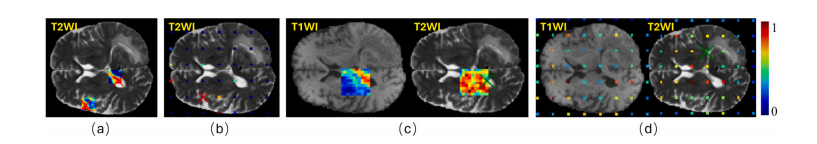

Fig. 8. Visualization of attention matrices in the (a) dense windows and (b) dilated windows of the shallow-level HWAF module, and in the (c) dense windows and (d) dilated windows of the deep-level HWAF module. The anchor points in the shallow and deep HWAF modules are indicated by red and green crosses, respectively.
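The difference between dense and dilated windows can be made concrete with a small partitioning sketch. The text does not give the exact HWAF design, so the code below assumes one common formulation: a dense window is a contiguous patch of `ws × ws` tokens, and a dilated window collects `ws × ws` tokens sampled at a regular stride across the whole feature map. The attention function omits learned query/key/value projections and multi-contrast fusion; all names are hypothetical.

```python
import numpy as np

def dense_windows(x, ws):
    """(H, W, C) -> (num_windows, ws*ws, C); each window is a contiguous patch."""
    H, W, C = x.shape
    x = x.reshape(H // ws, ws, W // ws, ws, C)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, ws * ws, C)

def dilated_windows(x, ws):
    """(H, W, C) -> (num_windows, ws*ws, C); tokens sampled at stride H//ws, W//ws."""
    H, W, C = x.shape
    sh, sw = H // ws, W // ws
    # Row index y = i * sh + a: a selects the window, i the token inside it.
    x = x.reshape(ws, sh, ws, sw, C)
    return x.transpose(1, 3, 0, 2, 4).reshape(-1, ws * ws, C)

def window_self_attention(windows):
    """Softmax self-attention inside each window, without learned projections."""
    d = windows.shape[-1]
    scores = windows @ windows.transpose(0, 2, 1) / np.sqrt(d)
    scores = scores - scores.max(axis=-1, keepdims=True)   # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=-1, keepdims=True)
    return attn @ windows

if __name__ == "__main__":
    H = W = 8
    yy, xx = np.mgrid[:H, :W]
    coords = np.stack([yy, xx], axis=-1).astype(float)      # channel = (row, col)
    print("dense rows:  ", np.unique(dense_windows(coords, 4)[0][:, 0]))
    print("dilated rows:", np.unique(dilated_windows(coords, 4)[0][:, 0]))
```

In both cases each token attends to `ws * ws` others, so the cost grows linearly with the number of tokens instead of quadratically; alternating the two partitions lets information travel both between neighbors and across distant regions.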

Table

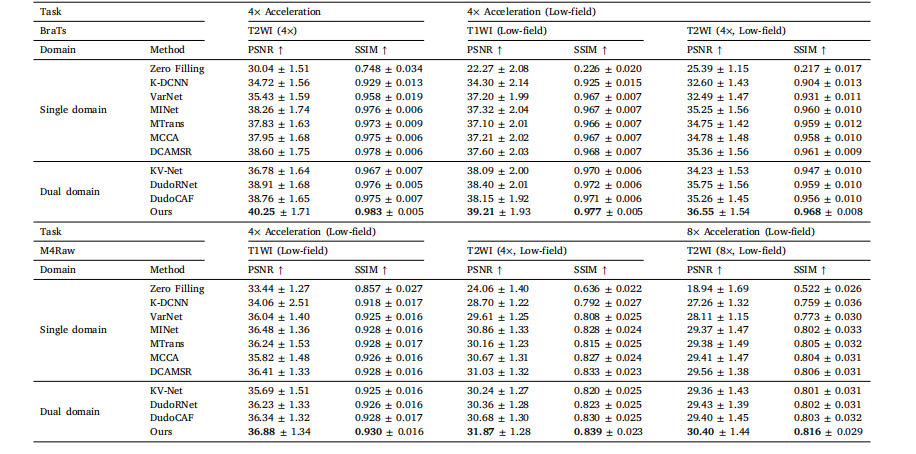

Table 1. Comparison of our method with state-of-the-art methods on the BraTs and M4Raw datasets, including Zero Filling, K-DCNN (Han et al., 2019), VarNet (Sriram et al., 2020), MINet (Feng et al., 2021), MTrans (Feng et al., 2022), MCCA (Li et al., 2023), DCAMSR (Huang et al., 2023a), KV-Net (Liu et al., 2022), DuDoRNet (Zhou and Zhou, 2020), and DuDoCAF (Lyu et al., 2022). Mean ± standard deviation is reported for each method, and the best metrics are highlighted in bold.

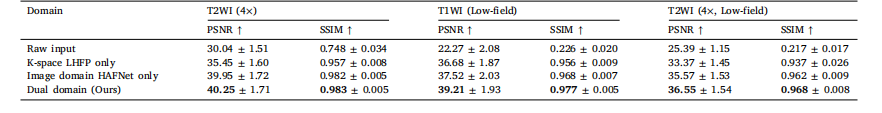

Table 2. Ablation of the k-space LHFP network and image domain HAFNet on the BraTs dataset.

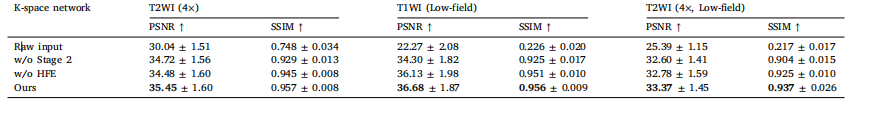

Table 3. Ablation of the key components in the k-space progressive learning network on the BraTs dataset.

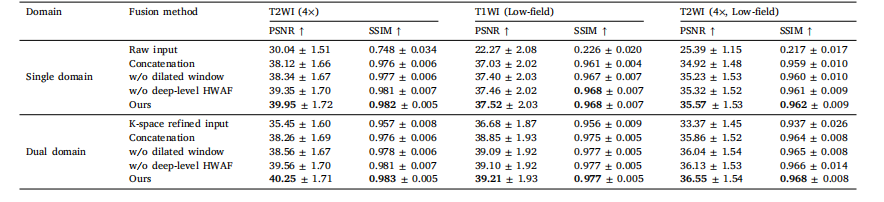

Table 4. Comparison of different multi-modal fusion methods in the image domain on the BraTs dataset.

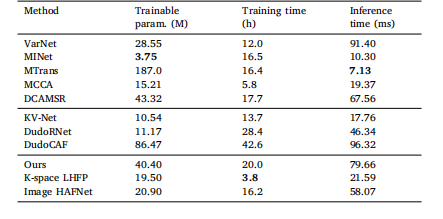

Table 5. Computational efficiency of different methods.