- 🍨 本文为🔗365天深度学习训练营 中的学习记录博客

- 🍖 原作者:K同学啊

Word2Vec是Google在2013年开源的将词表征为实数值向量的高效工具。简单说,它能把"苹果"、"香蕉"这样的词变成100维的数字向量,让计算机能"理解"词语之间的语义关系。

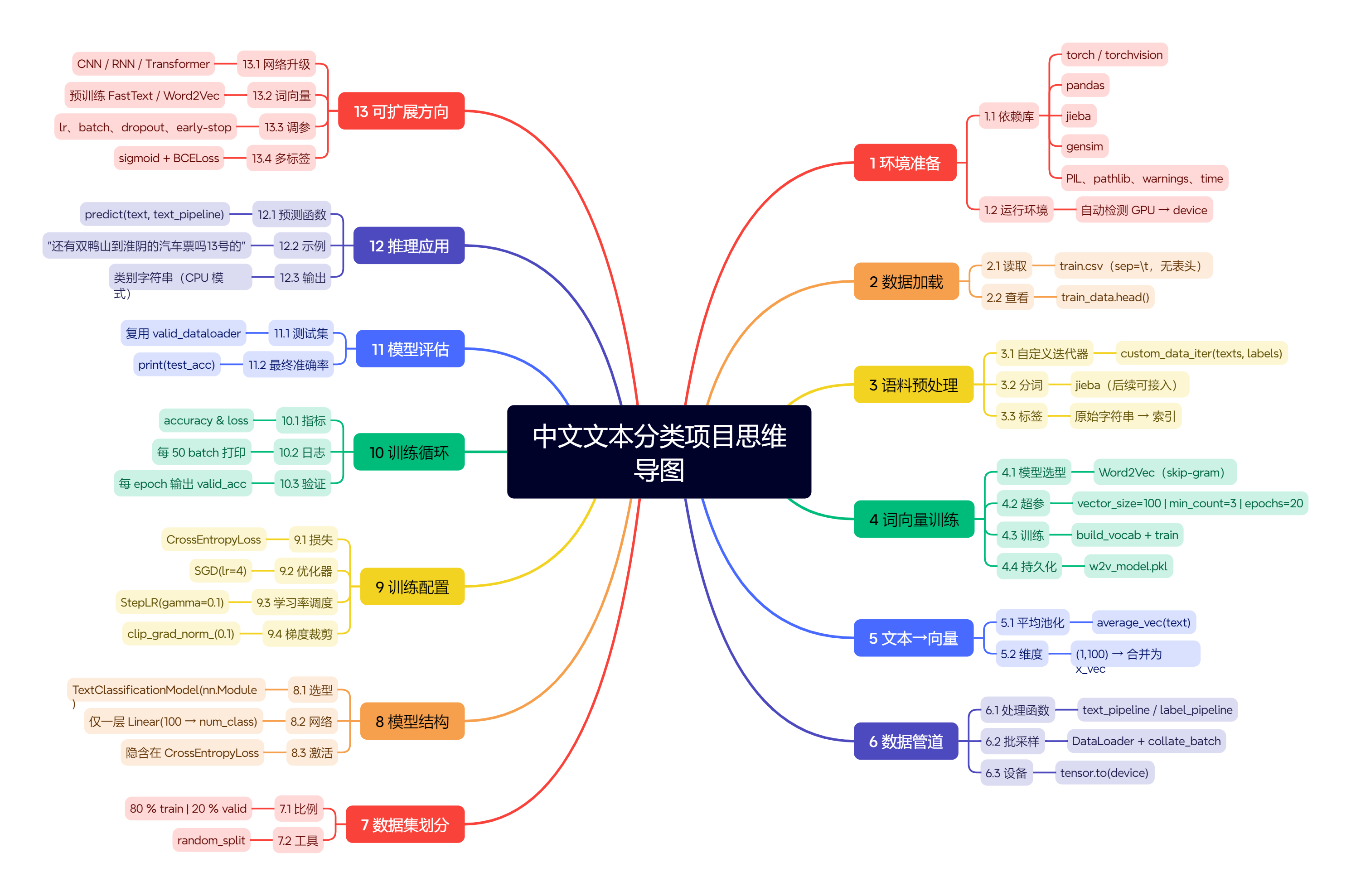

思维导图如下:

python

import torch

import os,PIL,pathlib,warnings

from torch import nn

import time

import pandas as pd

from torchvision import transforms, datasets

import jieba

# 🚫 关闭烦人的警告(就像关掉手机通知免得被消息轰炸)

warnings.filterwarnings("ignore")

# 🖥️ 检查是用电脑还是GPU干活(就像选坐地铁还是高铁)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device) # 输出:cuda 或 cpu

# 📥 1.2 加载自定义中文数据

train_data = pd.read_csv('./train.csv', sep='\t', header=None)

print(train_data.head()) # 打印前5行数据(就像打开Excel看第一眼数据)

# 🧩 1.3 构造数据集迭代器

def custom_data_iter(texts, labels):

for x, y in zip(texts, labels):

yield x, y # 这就像快递员把包裹(文本)和地址(标签)配对打包

x = train_data[0].values[:] # 文本内容(快递包裹)

y = train_data[1].values[:] # 类别标签(包裹地址)

bash

0 1

0 还有双鸭山到淮阴的汽车票吗13号的 Travel-Query

1 从这里怎么回家 Travel-Query

2 随便播放一首专辑阁楼里的佛里的歌 Music-Play

3 给看一下墓王之王嘛 FilmTele-Play

4 我想看挑战两把s686打突变团竞的游戏视频 Video-Play

bash

# 🌱 1.4 构建词典(Word2Vec训练)

from gensim.models.word2vec import Word2Vec

import numpy as np

# 像教小朋友认字:100维向量=100个特征标签(身高/发型/颜色)

w2v = Word2Vec(vector_size=100, min_count=3) # 词频低于3的字不教

w2v.build_vocab(x) # 建立字典(教小朋友认字)

w2v.train(x, total_examples=w2v.corpus_count, epochs=20) # 训练20遍(就像反复读课文)输出如下

bash

(2732848, 3663560)

bash

# 📌 将文本转为向量(平均词向量法)

def average_vec(text):

vec = np.zeros(100).reshape((1, 100)) # 初始化100维向量(就像空盒子)

for word in text:

try:

vec += w2v.wv[word].reshape((1, 100)) # 每个字加进盒子(像积木拼图)

except KeyError:

continue # 读不懂的字跳过(就像跳过不认识的生词)

return vec

# 📦 将所有文本转为向量矩阵

x_vec = np.concatenate([average_vec(z) for z in x]) # 拼接成大矩阵(像把所有积木拼成大楼)

w2v.save('w2v_model.pkl') # 保存字典模型(像存下字典书)

train_iter = custom_data_iter(x_vec, y) # 数据迭代器(像快递分拣员)

print(len(x), len(x_vec)) # 原始数据量 vs 向量数据量(就像100个包裹变成100个向量盒子)

label_name = list(set(train_data[1].values[:])) # 类别名称(就像快递地址的分类:北京/上海/广州)

# 📦 生成数据批次和迭代器

text_pipeline = lambda x: average_vec(x) # 文本转向量(快递打包)

label_pipeline = lambda x: label_name.index(x) # 类别转数字(地址转编码)

print(text_pipeline("你在干嘛")) # 打印向量(像把"你在干嘛"变成100个数字)

print(label_pipeline("Travel-Query")) # 打印类别编码("Travel-Query"对应哪个数字)输出如下

bash

12100

['Audio-Play', 'Other', 'Calendar-Query', 'Alarm-Update', 'Weather-Query', 'HomeAppliance-Control', 'Travel-Query', 'TVProgram-Play', 'FilmTele-Play', 'Radio-Listen', 'Video-Play', 'Music-Play']

bash

# 📦 数据加载器(像快递分拣流水线)

def collate_batch(batch):

label_list, text_list = [], []

for (_text, _label) in batch:

label_list.append(label_pipeline(_label)) # 收集类别编码

processed_text = torch.tensor(text_pipeline(_text), dtype=torch.float32) # 文本转张量

text_list.append(processed_text)

label_list = torch.tensor(label_list, dtype=torch.int64)

text_list = torch.cat(text_list) # 合并成大矩阵(像把快递盒拼成大箱子)

return text_list.to(device), label_list.to(device) # 转移到GPU/CPU

dataloader = DataLoader(train_iter, batch_size=8, shuffle=False, collate_fn=collate_batch)

# 8个样本一打包(就像每8个快递装成一车)

# 🧱 2.1 搭建模型(文本分类神经网络)

class TextClassificationModel(nn.Module):

def __init__(self, num_class):

super(TextClassificationModel, self).__init__()

# 100维向量 → 类别数的分类器(就像100个特征输入到分类器)

self.fc = nn.Linear(100, num_class)

def forward(self, text):

return self.fc(text) # 通过全连接层输出

# 🧪 2.2 初始化模型

num_class = len(label_name) # 类别数量(比如5种快递类型)

vocab_size = 100000 # 词典大小(就像10万本字典)

em_size = 12 # 词向量维度(这里实际用100,但代码写12,可能是笔误)

model = TextClassificationModel(num_class).to(device) # 模型搬上GPU

# 📈 2.3 定义训练和评估函数

def train(dataloader):

model.train() # 切换训练模式(像打开学习模式)

total_acc, train_loss, total_count = 0, 0, 0

for idx, (text, label) in enumerate(dataloader):

predicted_label = model(text) # 模型预测

optimizer.zero_grad() # 梯度清零(像擦掉草稿纸)

loss = criterion(predicted_label, label) # 计算误差

loss.backward() # 反向传播(像倒着看解题步骤)

torch.nn.utils.clip_grad_norm_(model.parameters(), 0.1) # 梯度裁剪(防止梯度爆炸)

optimizer.step() # 更新参数(像修改学习策略)

def evaluate(dataloader):

model.eval() # 切换评估模式(像考试模式)

total_acc, train_loss, total_count = 0, 0, 0

with torch.no_grad(): # 关闭梯度计算(像考试不写草稿)

for idx, (text, label) in enumerate(dataloader):

predicted_label = model(text)

loss = criterion(predicted_label, label)

total_acc += (predicted_label.argmax(1) == label).sum().item() # 计算准确率

# 📊 数据集拆分(80%训练+20%验证)

from torch.utils.data.dataset import random_split

train_iter = custom_data_iter(train_data[0].values[:], train_data[1].values[:])

train_dataset = list(train_iter) # 转成列表(像把快递单子排成队列)

train_size = int(len(train_dataset) * 0.8) # 80%训练集

valid_size = len(train_dataset) - train_size # 20%验证集

# 分割数据集(像把快递单分成训练区和测试区)

split_train_, split_valid_ = random_split(train_dataset, [train_size, valid_size])

# 创建数据加载器(像设置快递分拣流水线)

train_dataloader = DataLoader(split_train_, batch_size=64, shuffle=True, collate_fn=collate_batch)

valid_dataloader = DataLoader(split_valid_, batch_size=64, shuffle=True, collate_fn=collate_batch)

# 🔥 3.2 正式训练(像马拉松比赛)

for epoch in range(1, EPOCHS + 1):

train(train_dataloader) # 每轮训练

val_acc, val_loss = evaluate(valid_dataloader) # 验证准确率

# 学习率调整(像比赛时调整配速)

if total_accu is not None and total_accu > val_acc:

scheduler.step() # 降速(学习率衰减)

else:

total_accu = val_acc输出如下

bash

|epoch1| 50/ 152 batches|train_acc0.746 train_loss0.02132

|epoch1| 100/ 152 batches|train_acc0.825 train_loss0.01539

|epoch1| 150/ 152 batches|train_acc0.835 train_loss0.01567

---------------------------------------------------------------------

| epoch 1 | time:1.46s | valid_acc 0.726 valid_loss 0.038 | lr 4.000000

---------------------------------------------------------------------

|epoch2| 50/ 152 batches|train_acc0.841 train_loss0.01451

|epoch2| 100/ 152 batches|train_acc0.835 train_loss0.01556

|epoch2| 150/ 152 batches|train_acc0.842 train_loss0.01566

---------------------------------------------------------------------

| epoch 2 | time:1.30s | valid_acc 0.851 valid_loss 0.014 | lr 4.000000

---------------------------------------------------------------------

|epoch3| 50/ 152 batches|train_acc0.843 train_loss0.01494

|epoch3| 100/ 152 batches|train_acc0.851 train_loss0.01482

|epoch3| 150/ 152 batches|train_acc0.850 train_loss0.01337

---------------------------------------------------------------------

| epoch 3 | time:1.46s | valid_acc 0.848 valid_loss 0.015 | lr 4.000000

---------------------------------------------------------------------

|epoch4| 50/ 152 batches|train_acc0.881 train_loss0.00891

|epoch4| 100/ 152 batches|train_acc0.885 train_loss0.00821

|epoch4| 150/ 152 batches|train_acc0.897 train_loss0.00704

---------------------------------------------------------------------

| epoch 4 | time:1.47s | valid_acc 0.895 valid_loss 0.008 | lr 0.400000

---------------------------------------------------------------------

|epoch5| 50/ 152 batches|train_acc0.901 train_loss0.00628

|epoch5| 100/ 152 batches|train_acc0.891 train_loss0.00674

|epoch5| 150/ 152 batches|train_acc0.898 train_loss0.00653

---------------------------------------------------------------------

| epoch 5 | time:1.59s | valid_acc 0.887 valid_loss 0.007 | lr 0.400000

---------------------------------------------------------------------

|epoch6| 50/ 152 batches|train_acc0.900 train_loss0.00581

|epoch6| 100/ 152 batches|train_acc0.904 train_loss0.00586

|epoch6| 150/ 152 batches|train_acc0.904 train_loss0.00558

---------------------------------------------------------------------

| epoch 6 | time:1.64s | valid_acc 0.893 valid_loss 0.007 | lr 0.040000

---------------------------------------------------------------------

|epoch7| 50/ 152 batches|train_acc0.908 train_loss0.00543

|epoch7| 100/ 152 batches|train_acc0.902 train_loss0.00546

|epoch7| 150/ 152 batches|train_acc0.903 train_loss0.00591

---------------------------------------------------------------------

| epoch 7 | time:1.50s | valid_acc 0.894 valid_loss 0.007 | lr 0.004000

---------------------------------------------------------------------

|epoch8| 50/ 152 batches|train_acc0.903 train_loss0.00609

|epoch8| 100/ 152 batches|train_acc0.905 train_loss0.00517

|epoch8| 150/ 152 batches|train_acc0.906 train_loss0.00552

---------------------------------------------------------------------

| epoch 8 | time:1.47s | valid_acc 0.894 valid_loss 0.007 | lr 0.000400

---------------------------------------------------------------------

|epoch9| 50/ 152 batches|train_acc0.897 train_loss0.00593

|epoch9| 100/ 152 batches|train_acc0.907 train_loss0.00526

|epoch9| 150/ 152 batches|train_acc0.910 train_loss0.00556

---------------------------------------------------------------------

| epoch 9 | time:1.53s | valid_acc 0.894 valid_loss 0.007 | lr 0.000040

---------------------------------------------------------------------

|epoch10| 50/ 152 batches|train_acc0.912 train_loss0.00542

|epoch10| 100/ 152 batches|train_acc0.901 train_loss0.00574

|epoch10| 150/ 152 batches|train_acc0.901 train_loss0.00564

---------------------------------------------------------------------

| epoch 10 | time:1.49s | valid_acc 0.894 valid_loss 0.007 | lr 0.000004

---------------------------------------------------------------------

模型准确率为:0.8938

python

# 🎯 测试指定数据

def predict(text, text_pipeline):

with torch.no_grad():

text = torch.tensor(text_pipeline(text), dtype=torch.float32) # 文本转向量

output = model(text) # 模型预测

return output.argmax(1).item() # 返回最高概率的类别

ex_text_str = "还有双鸭山到淮阴的汽车票吗13号的"

print("该文本的类别是: %s" % label_name[predict(ex_text_str, text_pipeline)])输出如下:

bash

torch.Size([1, 100])

该文本的类别是: Travel-Query