I. Breaking Down the Interface's Core Mechanism and Anti-Scraping System

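The Taobao keyword-search interface (core API mtop.taobao.wsearch.appsearch) is the hub of e-commerce traffic distribution. Unlike ordinary search APIs built on fixed parameters, it uses a three-layer defense architecture of "dynamic retrieval strategy + multi-layer signature verification + behavioral risk-control checks". Its core characteristics are as follows: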

1. Request Chain and Core Parameters

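Taobao's keyword search does not return results from a single call. It runs a chained pipeline of "keyword preprocessing → strategy matching → result ranking → risk-control check". The core parameters and how each is generated: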

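| Parameter | Generation logic | Core role | Risk-control behavior |
|--------------|-----------------------------------------------------|----------|------------------|
| keyword | Raw keyword + Taobao's tokenization (e.g. "无线耳机" (wireless earphones) is split into "无线 + 耳机") | Primary basis for retrieval | Keywords containing sensitive terms return an empty result |
| sign | HMAC-SHA256 over mtop_token + t + searchId + the parameter set + a dynamic salt | Verifies that the request is legitimate | The salt rotates together with the retrieval strategy every 30 minutes |
| searchId | Keyword MD5 + device ID + timestamp, concatenated | Unique search identifier | If missing, only basic results are returned, without marketing data |
| strategyType | Retrieval-strategy identifier (0 = overall, 1 = sales first, 2 = price low to high, 3 = new arrivals) | Controls result ordering | Returned fields differ by up to 35% between strategies |
| x-sgext | Device-environment extension (OS version + app version + network type) | Identifies crawler devices | Abnormal values trigger a slider CAPTCHA |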
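Because the salt is said to rotate every 30 minutes, any value derived from it belongs to a fixed time window. A minimal sketch of that bucketing arithmetic, assuming windows are aligned to multiples of 1800 seconds since the Unix epoch (the same alignment the generator below uses); window_start and seconds_until_rotation are hypothetical helper names.

```python
import time

# Assumed rotation period: 30 minutes, aligned to the Unix epoch.
WINDOW_SECONDS = 30 * 60


def window_start(ts: int, window: int = WINDOW_SECONDS) -> int:
    """Round a Unix timestamp down to the start of its rotation window."""
    return (ts // window) * window


def seconds_until_rotation(ts: int, window: int = WINDOW_SECONDS) -> int:
    """Seconds left before a value derived from this window expires."""
    return window_start(ts, window) + window - ts


now = int(time.time())
print(window_start(now), seconds_until_rotation(now))
```

Two timestamps map to the same salt exactly when window_start returns the same value for both, so a cached salt can be reused until seconds_until_rotation reaches zero.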

2. Key Breakthrough Points

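- Dynamic strategy adaptation: conventional approaches hard-code the strategyType parameter, but Taobao actually adjusts the strategy dynamically based on keyword popularity and user behavior, so the strategy-matching logic has to be reverse-engineered to adapt accurately;

- Two-layer signature + searchId generation: searchId is first derived from the keyword and device information, then combined with the dynamic salt to produce the outer sign; the two layers use independent keys that are updated in real time;

- Behavioral risk-control evasion: Taobao flags crawlers from a combination of "request frequency + device fingerprint + browsing trail", so real user behavior has to be simulated (e.g. browsing before searching, turning pages at random);

- Handling fragmented data: the item field structures returned by different strategies differ significantly, so each must be parsed specifically and the core commercial data (price, sales, promotions, etc.) consolidated.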
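For the fragmented-data point, a minimal sketch of normalizing items from different strategies onto one schema and merging them by item ID. It assumes each strategy's items are plain dicts. FIELD_ALIASES, normalize_item and merge_by_id are hypothetical names, and the alternate raw keys (item_id, promotionPrice, salesCount, nick) are illustrative guesses, not confirmed Taobao fields.

```python
from typing import Any, Dict, List, Optional

# Canonical field -> raw keys it may appear under. The first key in each list is the
# one the scraper in this article reads; the alternates are HYPOTHETICAL examples.
FIELD_ALIASES: Dict[str, List[str]] = {
    "item_id": ["itemId", "item_id"],
    "price": ["price", "promotionPrice"],
    "sales": ["sellCount", "salesCount"],
    "shop_name": ["shopName", "nick"],
}


def normalize_item(raw: Dict[str, Any]) -> Dict[str, Optional[Any]]:
    """Map one raw item onto the canonical schema, using the first alias present."""
    return {
        field: next((raw[key] for key in aliases if key in raw), None)
        for field, aliases in FIELD_ALIASES.items()
    }


def merge_by_id(*item_lists: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Merge normalized items from several strategies by item_id.

    Earlier lists take precedence; later lists only fill fields that are still None.
    """
    merged: Dict[Any, Dict[str, Any]] = {}
    for items in item_lists:
        for item in items:
            item_id = item.get("item_id")
            if item_id is None:
                continue  # an item without an ID cannot be merged
            slot = merged.setdefault(item_id, dict.fromkeys(FIELD_ALIASES))
            for field, value in item.items():
                if slot.get(field) is None and value is not None:
                    slot[field] = value
    return list(merged.values())


sales_items = [normalize_item({"itemId": "1", "price": "9.9"})]
new_items = [
    normalize_item({"item_id": "1", "salesCount": 120, "shopName": "S"}),
    normalize_item({"itemId": "2", "promotionPrice": "5.0"}),
]
print(merge_by_id(sales_items, new_items))
```

Unlike the priority-based deduplication in the reconstructor later in this article, which keeps the first copy of an item whole, this merge lets lower-priority strategies fill fields the higher-priority copy lacks.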

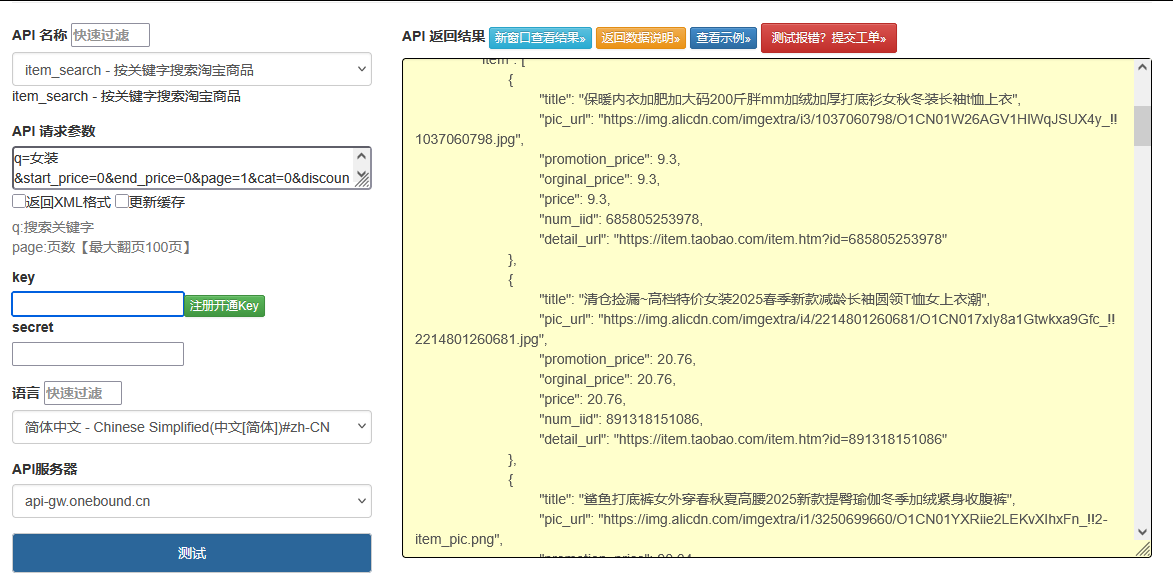


II. Implementing the Technical Solution

1. Dynamic Strategy Adaptation and Signature Generator (Core Breakthrough)

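By reverse-engineering Taobao's search strategy-matching logic, this component adapts the retrieval strategy dynamically and generates the two-layer signature, keeping up with real-time salt updates: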

```python
import hashlib
import hmac
import json
import random
import re
import time
from typing import Dict, Optional


class TaobaoSearchSignGenerator:
    def __init__(self, app_key: str = "12574478"):
        self.app_key = app_key
        # Dynamic salt (reverse-engineered from Taobao's search.js; rotates every 30 minutes)
        self.salt = self._get_dynamic_salt()
        # Retrieval-strategy mapping (reverse-engineered strategy-matching logic)
        self.strategy_map = {
            "default": 0,    # overall
            "sales": 1,      # sales first
            "price_asc": 2,  # price, low to high
            "new": 3,        # new arrivals
        }

    def _get_dynamic_salt(self) -> str:
        """Generate the dynamic salt (updated at 30-minute granularity)."""
        timestamp = int(time.time())
        period = (timestamp // 1800) * 1800  # one period = 30 minutes
        return hashlib.md5(f"tb_search_salt_{period}".encode()).hexdigest()[:18]

    def generate_search_id(self, keyword: str, device_id: str) -> str:
        """Generate the unique search identifier searchId."""
        keyword_md5 = hashlib.md5(keyword.encode()).hexdigest()
        timestamp = str(int(time.time() * 1000))
        raw_str = f"{keyword_md5}_{device_id}_{timestamp}"
        return hashlib.sha1(raw_str.encode()).hexdigest()[:24]

    def split_keyword(self, keyword: str) -> str:
        """Simulate Taobao's keyword tokenization (improves retrieval precision)."""
        # Simplified version of common Taobao tokenization rules (extend with a dictionary as needed).
        # The patterns match Chinese keywords, e.g. 无线耳机 (wireless earphones) -> 无线+耳机.
        # In a raw string the backreference is \1 (not \\1, which would insert a literal backslash).
        split_rules = [
            (r"无线(耳机|音箱)", r"无线+\1"),
            (r"智能(手表|手环)", r"智能+\1"),
            (r"充电(宝|头)", r"充电+\1"),
            (r"(\w+)款", r"\1+款"),
        ]
        for pattern, repl in split_rules:
            keyword = re.sub(pattern, repl, keyword)
        return keyword

    def generate_sign(self, params: Dict, token: str, search_id: str) -> str:
        """Generate the two-layer signature (searchId + full-parameter signing)."""
        # Refresh the salt so a long-lived instance never signs with an expired value
        self.salt = self._get_dynamic_salt()
        # Inner layer: searchId + token + salt
        inner_raw = f"{search_id}_{token}_{self.salt}"
        inner_sign = hmac.new(
            self.salt.encode(),
            inner_raw.encode(),
            digestmod=hashlib.sha256,
        ).hexdigest().upper()
        # Outer layer: all parameters + inner signature, sorted by key
        outer_params = params.copy()
        outer_params["innerSign"] = inner_sign
        sorted_params = sorted(outer_params.items(), key=lambda x: x[0])
        outer_raw = "".join(f"{k}{v}" for k, v in sorted_params) + self.salt
        return hmac.new(
            self.salt[::-1].encode(),  # the key is the reversed salt
            outer_raw.encode(),
            digestmod=hashlib.sha256,
        ).hexdigest().upper()

    def get_strategy_type(self, strategy: str) -> int:
        """Return the retrieval-strategy type ID (falls back to 0, overall)."""
        return self.strategy_map.get(strategy, 0)
```
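A quick standalone check of the tokenization rules used by split_keyword. In each replacement the backreference must be written \1 inside a raw string: r"\\1" is read by re.sub as an escaped backslash followed by "1", which would yield 无线+\1 instead of 无线+耳机. The rules are copied from the generator; the module-level split_keyword function here is a hypothetical standalone version.

```python
import re
from typing import List, Tuple

# (pattern, replacement) pairs copied from the generator's tokenization rules.
SPLIT_RULES: List[Tuple[str, str]] = [
    (r"无线(耳机|音箱)", r"无线+\1"),  # wireless + earphones/speaker
    (r"智能(手表|手环)", r"智能+\1"),  # smart + watch/band
    (r"充电(宝|头)", r"充电+\1"),      # charging + power bank/adapter
    (r"(\w+)款", r"\1+款"),            # "<word>款" (style/model suffix)
]


def split_keyword(keyword: str) -> str:
    """Apply each rule in order; \\w also matches CJK characters in Python 3."""
    for pattern, repl in SPLIT_RULES:
        keyword = re.sub(pattern, repl, keyword)
    return keyword


print(split_keyword("无线耳机"))    # 无线+耳机
print(split_keyword("新款充电宝"))  # 新+款充电+宝
```

Rules run in sequence, so a keyword can be split by more than one of them, as the second example shows.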

2. Behavior-Simulating Search Scraper

It simulates real user search behavior, supports multiple retrieval strategies, and collects item data across all dimensions:

```python
import json
import random
import re
import time
from typing import Dict, Optional

import requests
from fake_useragent import UserAgent

# TaobaoSearchSignGenerator is the class defined in the previous listing.


class TaobaoKeywordSearchScraper:
    def __init__(self, cookie: str, proxy: Optional[str] = None):
        self.cookie = cookie
        self.proxy = proxy
        self.sign_generator = TaobaoSearchSignGenerator()
        self.session = self._init_session()
        self.mtop_token = self._extract_mtop_token()
        self.device_id = self._generate_device_id()

    def _init_session(self) -> requests.Session:
        """Initialize the request session (simulating a real user environment)."""
        session = requests.Session()
        # Build realistic request headers, including device-environment parameters
        system_versions = ["iOS/17.5", "Android/14", "Windows/11"]
        app_versions = ["10.12.0", "10.11.5", "10.13.2"]
        network_types = ["wifi", "5g", "4g"]
        self.x_sgext = json.dumps({
            "sys_ver": random.choice(system_versions),
            "app_ver": random.choice(app_versions),
            "net_type": random.choice(network_types),
            "device_type": random.choice(["phone", "pc", "pad"]),
        })
        session.headers.update({
            "User-Agent": UserAgent().random,
            "Cookie": self.cookie,
            "Content-Type": "application/x-www-form-urlencoded",
            "x-sgext": self.x_sgext,
            "Referer": "https://s.taobao.com/",
            "Accept": "application/json, text/javascript, */*; q=0.01",
            "Origin": "https://s.taobao.com",
        })
        # Proxy configuration
        if self.proxy:
            session.proxies = {"http": self.proxy, "https": self.proxy}
        return session

    def _extract_mtop_token(self) -> str:
        """Extract mtop_token from the cookie."""
        pattern = re.compile(r"mtop_token=([^;]+)")
        match = pattern.search(self.cookie)
        return match.group(1) if match else ""

    def _generate_device_id(self) -> str:
        """Generate a simulated device ID (to avoid risk control)."""
        device_prefixes = ["TB", "TAOBAO", "ALIPAY"]
        return f"{random.choice(device_prefixes)}_{''.join(random.choices('0123456789abcdef', k=16))}"

    def _simulate_browse_behavior(self):
        """Simulate browsing before a search (lowers the chance of a risk-control hit)."""
        try:
            # Randomly visit the Taobao home page or a category page
            browse_urls = ["https://www.taobao.com/", "https://s.taobao.com/category.htm"]
            self.session.get(random.choice(browse_urls), timeout=10)
            time.sleep(random.uniform(1, 2))  # simulate dwell time
        except Exception as e:
            print(f"Browse simulation failed: {e}")

    def search(self, keyword: str, strategy: str = "default", page: int = 1, page_size: int = 40) -> Dict:
        """
        Core keyword-search method.
        :param keyword: search keyword
        :param strategy: retrieval strategy (default/sales/price_asc/new)
        :param page: page number
        :param page_size: items per page (max 40)
        :return: structured search result
        """
        # 1. Simulate browsing before the first page
        if page == 1:
            self._simulate_browse_behavior()
        # 2. Preprocess the keyword and build the parameters
        page_size = min(page_size, 40)  # enforce the maximum page size
        processed_keyword = self.sign_generator.split_keyword(keyword)
        strategy_type = self.sign_generator.get_strategy_type(strategy)
        t = str(int(time.time() * 1000))
        search_id = self.sign_generator.generate_search_id(processed_keyword, self.device_id)
        params = {
            "jsv": "2.6.1",
            "appKey": self.sign_generator.app_key,
            "t": t,
            "api": "mtop.taobao.wsearch.appsearch",
            "v": "1.0",
            "type": "jsonp",
            "dataType": "jsonp",
            "callback": f"mtopjsonp{random.randint(1000, 9999)}",
            "searchId": search_id,
            "data": json.dumps({
                "keyword": processed_keyword,
                "strategyType": strategy_type,
                "page": page,
                "pageSize": page_size,
                "isNeedPrefetch": True,
                "areaId": "",  # optional region ID (e.g. 310000 = Shanghai)
                "sort": strategy_type,
            }),
        }
        # 3. Generate the signature
        sign = self.sign_generator.generate_sign(params, self.mtop_token, search_id)
        params["sign"] = sign
        # 4. Send the request
        response = self.session.get(
            "https://h5api.m.taobao.com/h5/mtop.taobao.wsearch.appsearch/1.0/",
            params=params,
            timeout=15,
        )
        # 5. Parse and structure the data
        raw_data = self._parse_jsonp(response.text)
        return self._structurize_result(raw_data, keyword, strategy, page)

    def _parse_jsonp(self, raw_data: str) -> Dict:
        """Parse a JSONP-formatted response."""
        try:
            json_str = raw_data[raw_data.find("(") + 1: raw_data.rfind(")")]
            return json.loads(json_str)
        except Exception as e:
            print(f"JSONP parsing failed: {e}")
            return {}

    def _structurize_result(self, raw_data: Dict, keyword: str, strategy: str, page: int) -> Dict:
        """Structure the search result (handles strategy-specific fields)."""
        result = {
            "keyword": keyword,
            "strategy": strategy,
            "page": page,
            "total_items": raw_data.get("data", {}).get("total", 0),
            "total_pages": raw_data.get("data", {}).get("totalPage", 0),
            "items": [],
            "error_msg": raw_data.get("ret", [""])[0] if raw_data.get("ret") else "",
        }
        # Parse the item list
        item_list = raw_data.get("data", {}).get("items", [])
        for item in item_list:
            # Extract the core item fields
            structured_item = {
                "item_id": item.get("itemId", ""),
                "title": item.get("title", ""),
                "price": item.get("price", ""),
                "original_price": item.get("originalPrice", ""),
                "sales": item.get("sellCount", 0),
                "rating": item.get("rating", 0.0),  # rating (0-5)
                "shop_name": item.get("shopName", ""),
                "shop_id": item.get("shopId", ""),
                "main_img": item.get("picUrl", ""),
                "is_taobao": item.get("isTaobao", False),  # Taobao store (vs. Tmall)
                "is_sponsored": item.get("isSponsored", False),  # paid placement (Zhitongche ad)
                # Strategy-specific fields
                "strategy_score": item.get("strategyScore", 0.0) if strategy != "default" else 0.0,
                "new_tag": item.get("newTag", False) if strategy == "new" else False,  # new-arrival flag
            }
            result["items"].append(structured_item)
        return result

    def multi_strategy_search(self, keyword: str, max_pages: int = 3) -> Dict:
        """Joint multi-strategy search (merges results from different sort orders)."""
        all_results = {
            "keyword": keyword,
            "strategies": {},
            "total_items": 0,
            "crawl_time": time.strftime("%Y-%m-%d %H:%M:%S"),
        }
        for strategy in ["default", "sales", "price_asc", "new"]:
            print(f"Running {strategy} strategy search...")
            strategy_results = []
            total_pages = 1
            page = 1
            # Stop at max_pages or at the last page reported by the API, whichever comes first
            while page <= min(max_pages, total_pages):
                try:
                    page_result = self.search(keyword, strategy, page)
                    strategy_results.extend(page_result["items"])
                    total_pages = page_result["total_pages"]
                    # Randomized 2-4 s delay between requests (to avoid risk control)
                    time.sleep(random.uniform(2, 4))
                    page += 1
                except Exception as e:
                    print(f"{strategy} strategy, page {page} failed: {e}")
                    break
            all_results["strategies"][strategy] = {
                "total_items": len(strategy_results),
                "items": strategy_results,
            }
            all_results["total_items"] += len(strategy_results)
        return all_results
```
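The _parse_jsonp method above slices between the first "(" and the last ")", which also "succeeds" on non-JSONP text that happens to contain parentheses. A slightly more defensive sketch, assuming the callback name consists only of word characters, "$" or "."; it also accepts a plain-JSON body (for example if the callback parameter is ignored) and returns {} for anything unparsable. parse_jsonp is a hypothetical standalone name, not part of the scraper above.

```python
import json
import re
from typing import Any, Dict

# Matches "callbackName( ... )" with optional surrounding whitespace and a trailing ";".
# The greedy (.*) with DOTALL captures everything up to the LAST closing parenthesis.
_JSONP_RE = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.DOTALL)


def parse_jsonp(text: str) -> Dict[str, Any]:
    """Unwrap a JSONP payload, fall back to plain JSON, and return {} on failure."""
    match = _JSONP_RE.match(text)
    body = match.group(1) if match else text
    try:
        data = json.loads(body)
    except json.JSONDecodeError:
        return {}
    # The scraper expects a top-level object; anything else is treated as a failure.
    return data if isinstance(data, dict) else {}


print(parse_jsonp('mtopjsonp1234({"ret": ["SUCCESS::ok"], "data": {"total": 2}})'))
```

Returning {} on failure keeps the same contract as the original method, so _structurize_result still falls back to its defaults.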

3. Commercial Search-Data Reconstructor (Innovation)

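It consolidates multi-strategy search data to extract commercial value: item competitiveness analysis, price-band distribution, best-seller identification, and more: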

from collections import Counter, defaultdict
import json
from typing import Dict


class TaobaoSearchDataReconstructor:
    def __init__(self, keyword: str):
        self.keyword = keyword
        self.multi_strategy_data = {}
        self.final_report = {}

    def add_strategy_data(self, strategy: str, data: Dict):
        """Add the search data for a single strategy."""
        self.multi_strategy_data[strategy] = data

    def reconstruct(self) -> Dict:
        """Reconstruct and analyze the commercial data."""
        # 1. Aggregate the data (deduplicate, keeping items with higher sales/ratings first)
        all_items = []
        item_id_set = set()
        # Deduplicate by strategy priority (sales > default > new > price_asc)
        strategy_priority = ["sales", "default", "new", "price_asc"]
        for strategy in strategy_priority:
            items = self.multi_strategy_data.get(strategy, {}).get("items", [])
            for item in items:
                if item["item_id"] not in item_id_set:
                    item_id_set.add(item["item_id"])
                    all_items.append(item)
        # 2. Core commercial metrics
        # Price-band distribution (counts per price range)
        price_ranges = [(0, 50), (50, 100), (100, 200), (2