Write an MCP service that returns a weather forecast for a given latitude and longitude, and weather alerts for a given city name

Code

mcp 1.24.0

```python
import functools
import json
import random
import time
from datetime import datetime, timedelta
from typing import Any, Callable, List

from mcp.server.fastmcp import FastMCP


def log_tool_call(func: Callable):
    """Print each tool call's name, input, output, and duration."""

    @functools.wraps(func)
    async def wrapper(*args, **kwargs):
        start = time.time()
        print("\n================ MCP TOOL CALL ================")
        print(f"Tool: {func.__name__}")
        print("Input:")
        print(json.dumps(kwargs, ensure_ascii=False, indent=2))
        try:
            result = await func(*args, **kwargs)
            duration = round((time.time() - start) * 1000, 2)
            print("Output:")
            print(result)
            print(f"Duration: {duration} ms")
            print("================================================\n")
            return result
        except Exception as e:
            print("ERROR:")
            print(str(e))
            print("================================================\n")
            raise

    return wrapper


# Initialize FastMCP server
mcp = FastMCP("weather")


# =========================
# Mock Data Generators
# =========================
def mock_alerts(state: str) -> List[dict[str, Any]]:
    """Generate fake weather alerts."""
    possible_events = [
        "Severe Thunderstorm Warning",
        "Heat Advisory",
        "Flood Watch",
        "Winter Storm Warning",
    ]

    # Fixed response for "CA" so there is one deterministic case
    if state.upper() == "CA":
        return [
            {
                "event": "Heat Advisory",
                "area": "Central Valley, CA",
                "severity": "Moderate",
                "description": "High temperatures are expected to reach dangerous levels.",
                "instruction": "Stay hydrated and avoid outdoor activity during peak heat.",
            }
        ]

    # Randomly decide whether there are alerts
    if random.random() < 0.4:
        return []

    return [
        {
            "event": random.choice(possible_events),
            "area": f"{state.upper()} Statewide",
            "severity": random.choice(["Minor", "Moderate", "Severe"]),
            "description": "This is a simulated weather alert for testing purposes.",
            "instruction": "Follow standard safety procedures.",
        }
    ]


def format_alert(alert: dict) -> str:
    """Format an alert into a readable string."""
    return f"""
Event: {alert.get("event", "Unknown")}
Area: {alert.get("area", "Unknown")}
Severity: {alert.get("severity", "Unknown")}
Description: {alert.get("description", "No description available")}
Instructions: {alert.get("instruction", "No specific instructions provided")}
""".strip()


def mock_forecast(latitude: float, longitude: float) -> List[dict[str, Any]]:
    """Generate fake forecast data (the coordinates are accepted but not used)."""
    periods = []
    base_temp = random.randint(10, 30)
    for i in range(5):
        day = datetime.now() + timedelta(days=i)
        periods.append(
            {
                "name": day.strftime("%A"),
                "temperature": base_temp + random.randint(-3, 3),
                "temperatureUnit": "C",
                "windSpeed": f"{random.randint(5, 20)} km/h",
                "windDirection": random.choice(["N", "E", "S", "W"]),
                "detailedForecast": "This is a simulated forecast with generally stable weather conditions.",
            }
        )
    return periods


# =========================
# MCP Tools
# =========================
@mcp.tool()
@log_tool_call
async def get_alerts(state: str) -> str:
    """Get simulated weather alerts for a city.

    Args:
        state: City name (e.g. Beijing, Shanghai, Guangzhou, Shenzhen)
    """
    alerts = mock_alerts(state)
    if not alerts:
        return "No active alerts for this state (simulated data)."
    formatted = [format_alert(alert) for alert in alerts]
    return "\n---\n".join(formatted)


@mcp.tool()
@log_tool_call
async def get_forecast(latitude: float, longitude: float) -> str:
    """Get simulated weather forecast for a location.

    Args:
        latitude: Latitude of the location
        longitude: Longitude of the location
    """
    periods = mock_forecast(latitude, longitude)
    forecasts = []
    for period in periods:
        forecast = f"""
{period["name"]}:
Temperature: {period["temperature"]}°{period["temperatureUnit"]}
Wind: {period["windSpeed"]} {period["windDirection"]}
Forecast: {period["detailedForecast"]}
""".strip()
        forecasts.append(forecast)
    return "\n---\n".join(forecasts)


def main():
    # Serve over the SSE transport
    mcp.run(transport="sse")


if __name__ == "__main__":
    main()
```

After starting the server:

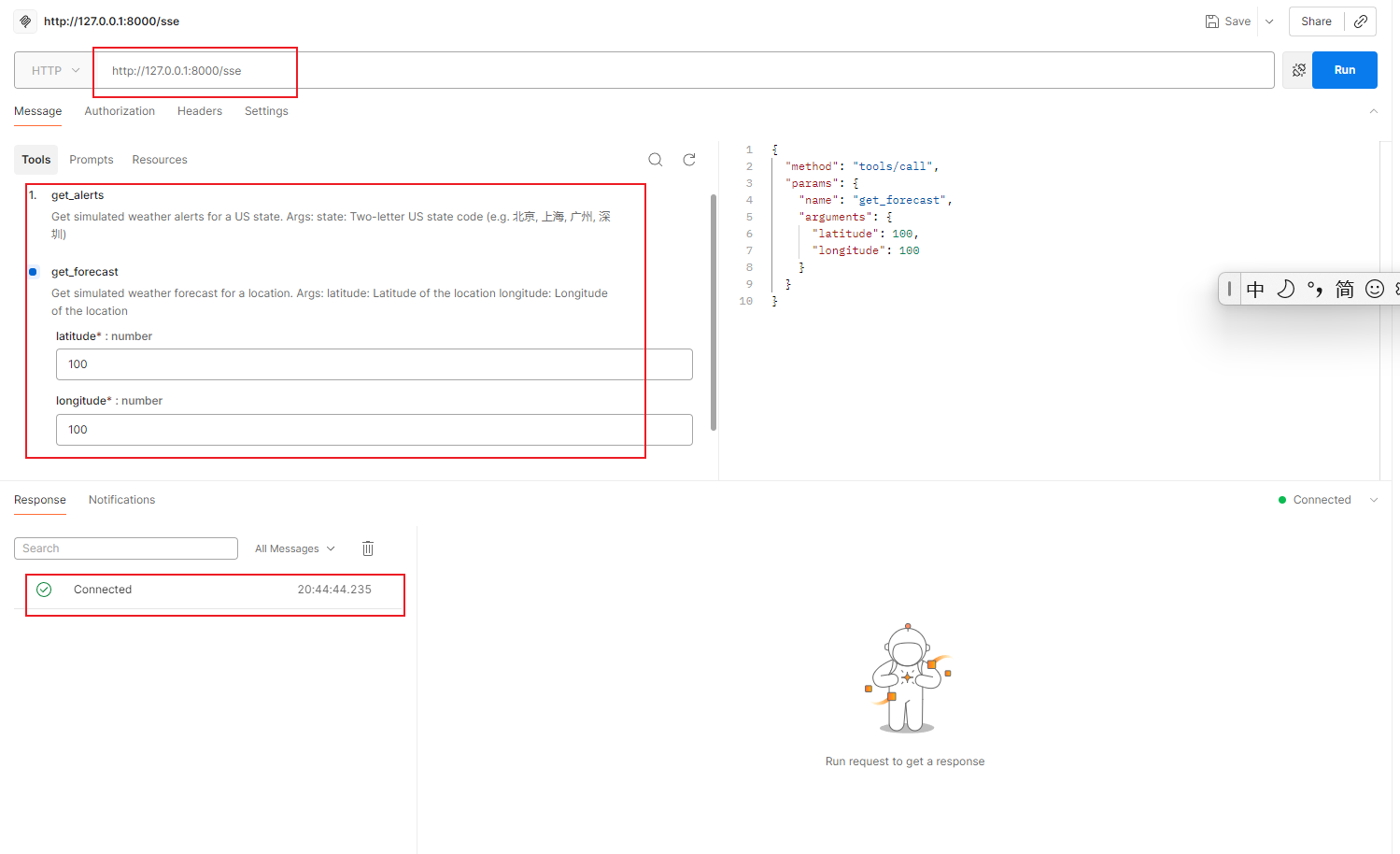

Test it with a POST request:

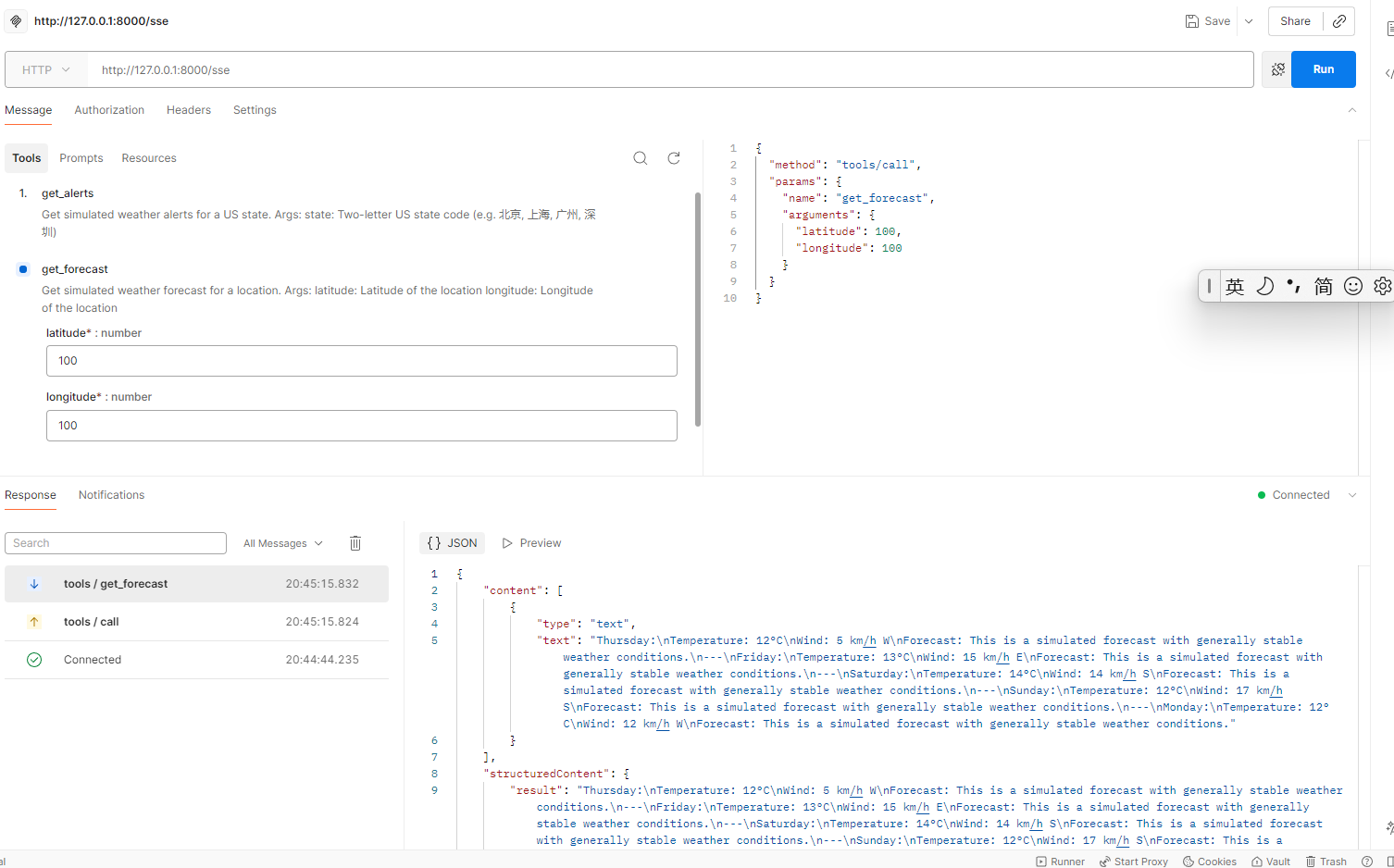

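The server exposes two tools, and the test call for the weather forecast succeeds.

You can also see that the client first sends a GET to open a long-lived connection, and all later messages are sent as POST requests against that session.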

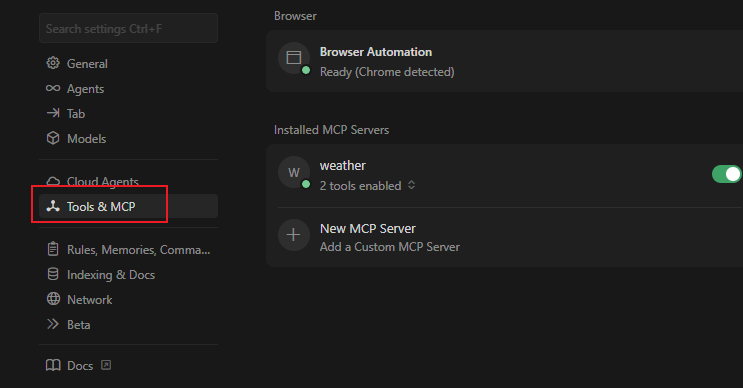

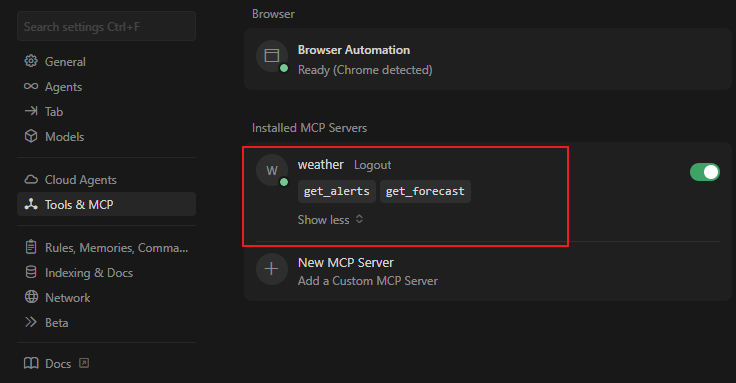
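The GET-then-POST flow can be sketched without a running server. In the SSE transport the server's first event on the GET stream is an `endpoint` event whose data is the URL the client should POST its JSON-RPC messages to. The snippet below is only an illustration under stated assumptions: the sample stream, the `/messages/?session_id=...` path shape, and the session id `abc123` are made up, and `parse_sse_events` and `build_post` are hypothetical helpers, not part of the `mcp` package. A real client also has to send `initialize` before `tools/list`.

```python
import json
from urllib.parse import urljoin


def parse_sse_events(raw: str) -> list[dict[str, str]]:
    """Split raw SSE text into events with 'event' and 'data' fields."""
    events = []
    for block in raw.replace("\r\n", "\n").split("\n\n"):
        name = "message"  # default event type when no 'event:' line is present
        data_lines = []
        for line in block.split("\n"):
            if not line or line.startswith(":"):
                continue  # skip blank lines and SSE comments
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # one leading space after the colon is dropped
            if field == "event":
                name = value
            elif field == "data":
                data_lines.append(value)
        if data_lines:
            events.append({"event": name, "data": "\n".join(data_lines)})
    return events


def build_post(base_url: str, raw_stream: str, method: str, request_id: int = 1):
    """Return (post_url, json_body) for one JSON-RPC request on this session."""
    endpoints = [e["data"] for e in parse_sse_events(raw_stream) if e["event"] == "endpoint"]
    if not endpoints:
        raise ValueError("no endpoint event received yet")
    post_url = urljoin(base_url, endpoints[0])
    body = json.dumps({"jsonrpc": "2.0", "id": request_id, "method": method})
    return post_url, body


# Made-up sample of what the GET /sse stream might start with
sample_stream = "event: endpoint\ndata: /messages/?session_id=abc123\n\n"
post_url, body = build_post("http://127.0.0.1:8000/sse", sample_stream, "tools/list")
print(post_url)
print(body)
```

The response to the POST does not come back in the POST's own body; it arrives as a later `message` event on the GET stream, which is why the connection has to stay open.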

Testing in Cursor

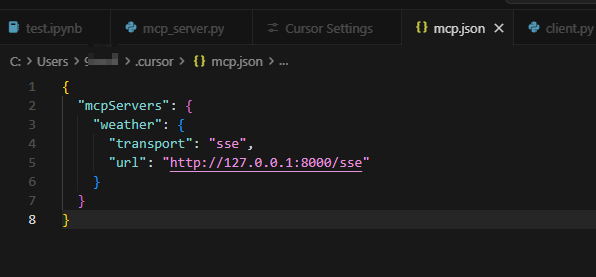

Configure it in Settings:

```json
{
  "mcpServers": {
    "weather": {
      "transport": "sse",
      "url": "http://127.0.0.1:8000/sse"
    }
  }
}
```

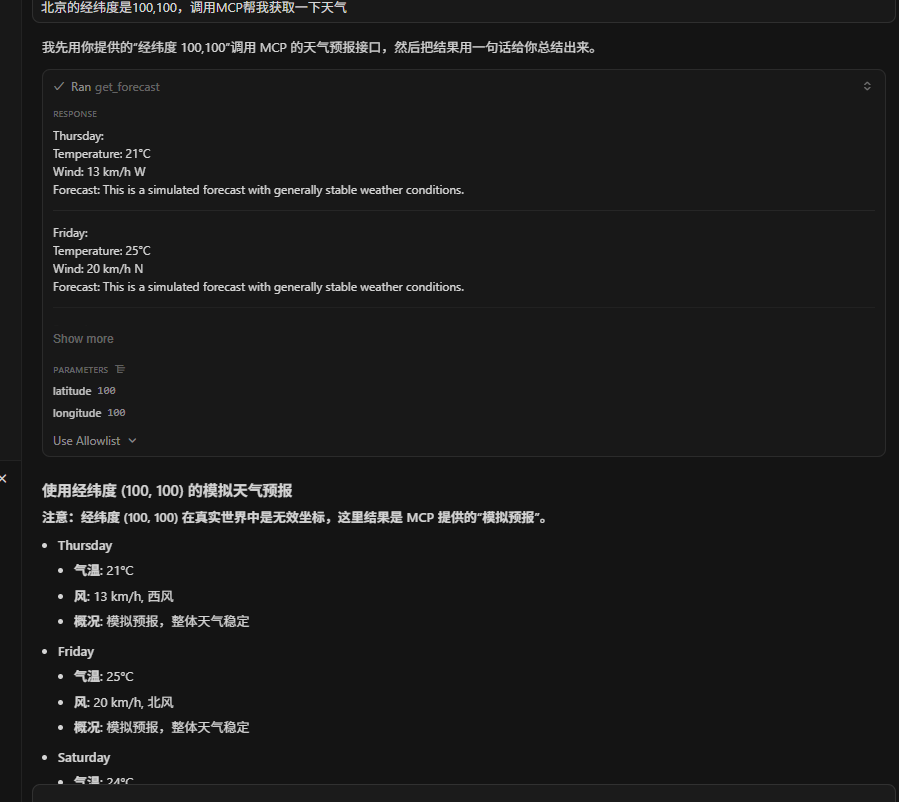

Open the chat and try it:

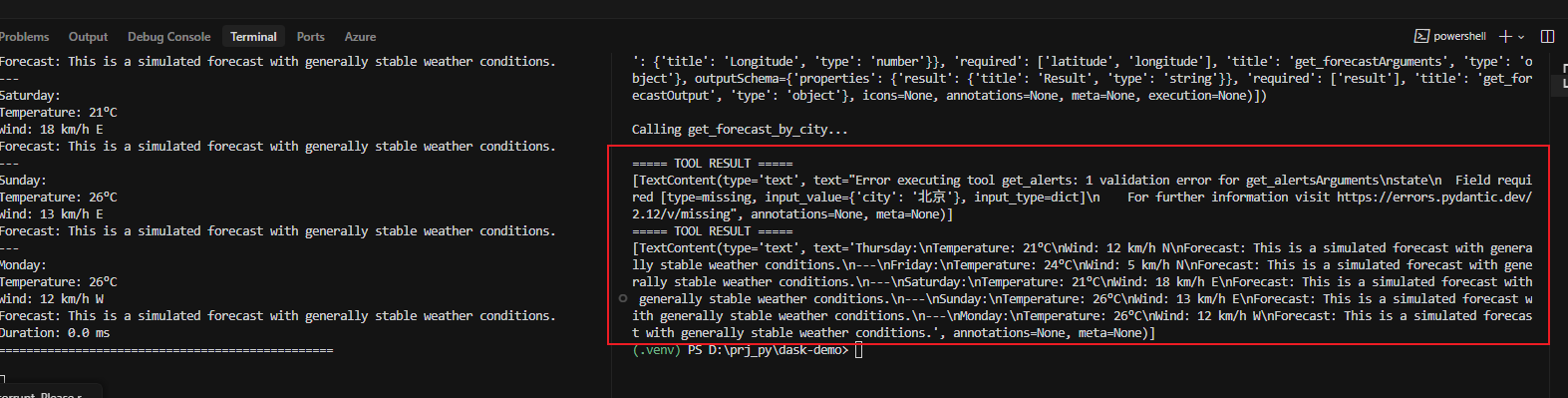

Testing from code

Calling the MCP server directly

```python
import asyncio

from mcp import ClientSession
from mcp.client.sse import sse_client

MCP_SSE_URL = "http://127.0.0.1:8000/sse"


async def main():
    # 1. Open the SSE connection
    async with sse_client(MCP_SSE_URL) as (read, write):
        # 2. Create the MCP session
        async with ClientSession(read, write) as session:
            # 3. Initialize (required)
            await session.initialize()

            # 4. See which tools the server exposes
            #    (list_tools() returns a result object; the tools are in .tools)
            tools = await session.list_tools()
            print("Available tools:")
            for t in tools.tools:
                print(t)

            # 5. Call a tool directly
            print("\nCalling get_alerts...\n")
            result = await session.call_tool(
                name="get_alerts", arguments={"state": "Beijing"}
            )

            # 6. Print the result
            print("===== TOOL RESULT =====")
            print(result.content)

            # 7. Call the second tool (approximate coordinates of Beijing)
            result = await session.call_tool(
                name="get_forecast", arguments={"latitude": 39.9, "longitude": 116.4}
            )

            # 8. Print the result
            print("===== TOOL RESULT =====")
            print(result.content)


if __name__ == "__main__":
    asyncio.run(main())
```

In the end it is just opening a connection and making the call.

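So all the LLM does here is work out which tool to call and which arguments to pass. Both can be extracted from what the user typed to the model. At its core this is just function calling.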

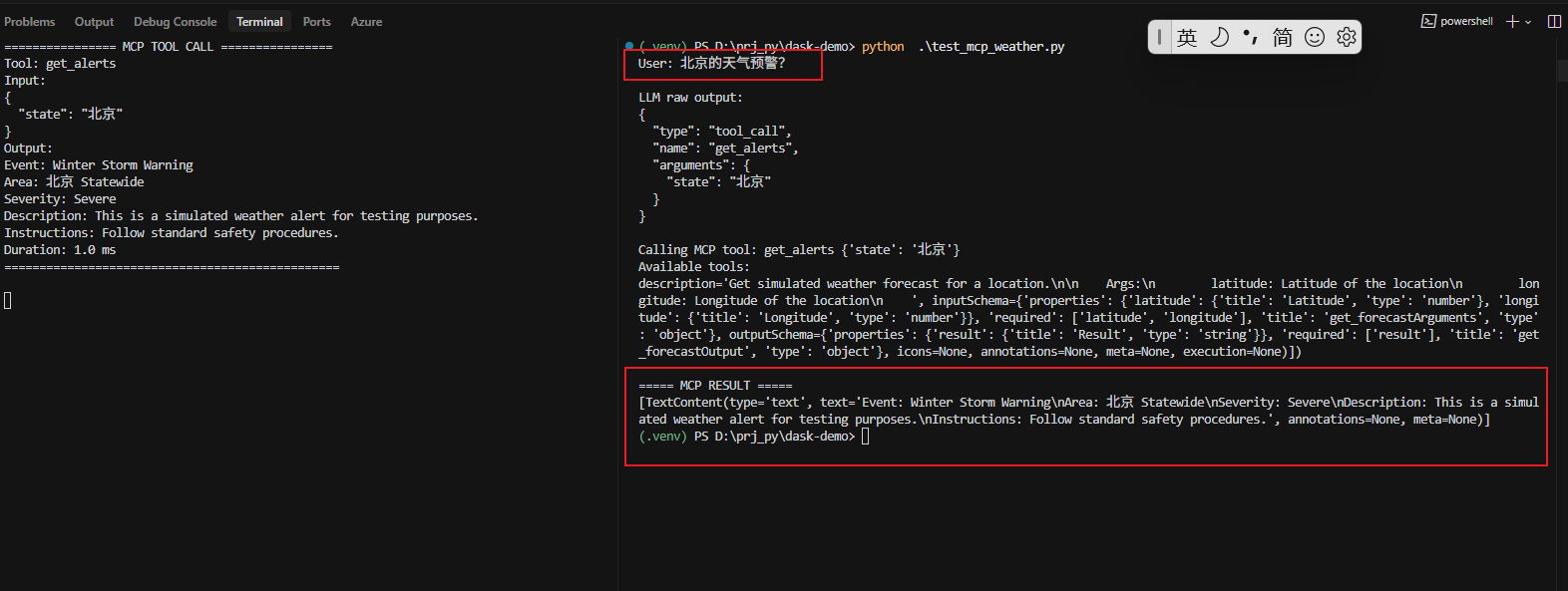
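Put differently, the model's only output is a small structured decision, and the client has to check that decision before acting on it. Here is a minimal sketch of that check, assuming the model replies with a JSON object of the form `{"type": "tool_call", "name": ..., "arguments": ...}`. `KNOWN_TOOLS` and `parse_decision` are hypothetical names used for illustration. Stripping a `<think>` block and markdown fences is also an assumption about how some models wrap their output, not something every model does.

```python
import json
import re

# Hypothetical registry: tool name -> required argument names
KNOWN_TOOLS = {
    "get_alerts": {"state"},
    "get_forecast": {"latitude", "longitude"},
}


def parse_decision(raw: str) -> dict:
    """Turn raw model output into a validated decision dict."""
    # Assumption: a reasoning model may prepend an inline <think>...</think> block
    text = re.sub(r"<think>.*?</think>", "", raw, flags=re.DOTALL).strip()
    # Assumption: the JSON may be wrapped in a markdown code fence
    text = re.sub(r"^`{3}(?:json)?\s*|\s*`{3}$", "", text).strip()

    decision = json.loads(text)
    if decision.get("type") == "tool_call":
        name = decision.get("name")
        if name not in KNOWN_TOOLS:
            raise ValueError(f"unknown tool: {name}")
        missing = KNOWN_TOOLS[name] - set(decision.get("arguments", {}))
        if missing:
            raise ValueError(f"missing arguments for {name}: {sorted(missing)}")
    return decision


fence = "`" * 3
raw_output = (
    "<think>The user wants alerts.</think>\n"
    f"{fence}json\n"
    '{"type": "tool_call", "name": "get_alerts", "arguments": {"state": "Beijing"}}\n'
    f"{fence}"
)
decision = parse_decision(raw_output)
print(decision["name"], decision["arguments"])
```

Rejecting unknown tool names and missing arguments on the client side means a malformed model reply fails early with a clear error, before the request ever reaches the MCP server.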

Adding an LLM

```python
import asyncio
import json

import httpx
from mcp import ClientSession
from mcp.client.sse import sse_client

MCP_SSE_URL = "http://127.0.0.1:8000/sse"
OLLAMA_URL = "http://127.0.0.1:11434/api/chat"
MODEL = "qwen3:8b"

SYSTEM_PROMPT = """
You are a tool-dispatch assistant.

You may only answer in JSON, in exactly one of these two forms:

1. If a tool call is needed:
{
  "type": "tool_call",
  "name": "<tool_name>",
  "arguments": { ... }
}

2. If no tool is needed:
{
  "type": "final",
  "content": "<answer>"
}

Available tools:
- get_alerts(state: string) Look up weather alerts for a city

Example:
User: Are there any weather alerts for Beijing?
You should return a tool_call.
"""


async def call_mcp_tool(tool_name: str, arguments: dict) -> list:
    """Open an MCP session, call one tool, and return its content blocks."""
    async with sse_client(MCP_SSE_URL) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()

            # See which tools the server exposes
            tools = await session.list_tools()
            print("Available tools:")
            for t in tools.tools:
                print(t)

            # e.g. name="get_alerts", arguments={"state": "Beijing"}
            result = await session.call_tool(name=tool_name, arguments=arguments)
            return result.content


def ask_llm(user_input: str) -> str:
    """Send the prompt to Ollama and return the model's raw text reply."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_input},
        ],
        "stream": False,
        "options": {"temperature": 0},
    }
    resp = httpx.post(OLLAMA_URL, json=payload, timeout=60)
    resp.raise_for_status()
    return resp.json()["message"]["content"]


async def main():
    user_input = "Are there any weather alerts for Beijing?"
    print("User:", user_input)

    # 1. Ask the LLM
    raw = ask_llm(user_input)
    print("\nLLM raw output:")
    print(raw)

    # 2. Parse the JSON
    try:
        decision = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise RuntimeError("LLM did not return valid JSON") from exc

    # 3. Decide whether a tool call is needed
    if decision["type"] == "tool_call":
        tool_name = decision["name"]
        arguments = decision["arguments"]
        print(f"\nCalling MCP tool: {tool_name} {arguments}")
        tool_result = await call_mcp_tool(tool_name, arguments)
        print("\n===== MCP RESULT =====")
        print(tool_result)
    else:
        print("\n===== FINAL ANSWER =====")
        print(decision["content"])


if __name__ == "__main__":
    asyncio.run(main())
```

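That is roughly how the whole thing works. Everything else is refinement: for example, the list of available tools should not be hard-coded in the prompt. LangChain simply abstracts these steps into a standard flow that you fill in.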
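As one example of such a refinement, the "Available tools" part of the prompt could be generated from the tool descriptors instead of being typed by hand. The sketch below works on plain dicts with `name`, `description`, and `inputSchema` keys, which is the shape an MCP tool listing carries. `render_tools_for_prompt` and `sample_tools` are hypothetical. In a real client the dicts would presumably come from the result of `session.list_tools()`, which is not shown here.

```python
def render_tools_for_prompt(tools: list[dict]) -> str:
    """Render tool descriptors as one '- name(params) summary' line each."""
    lines = []
    for tool in tools:
        props = tool.get("inputSchema", {}).get("properties", {})
        params = ", ".join(
            f"{name}: {spec.get('type', 'any')}" for name, spec in props.items()
        )
        # Use only the first line of the description as the summary
        description = (tool.get("description") or "").strip().splitlines()
        summary = description[0] if description else ""
        lines.append(f"- {tool['name']}({params}) {summary}".rstrip())
    return "\n".join(lines)


# Hand-written sample descriptors mirroring the two tools of the weather server
sample_tools = [
    {
        "name": "get_alerts",
        "description": "Get simulated weather alerts for a city.\n\nArgs: ...",
        "inputSchema": {
            "type": "object",
            "properties": {"state": {"type": "string"}},
        },
    },
    {
        "name": "get_forecast",
        "description": "Get simulated weather forecast for a location.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "latitude": {"type": "number"},
                "longitude": {"type": "number"},
            },
        },
    },
]

tool_section = render_tools_for_prompt(sample_tools)
print(tool_section)
```

The rendered text can then be substituted into the system prompt, so adding a tool on the server does not require editing the client.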