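Mirrored Queue to Quorum Queue Migration and Hybrid Deployment Strategy

A complete migration plan and hybrid deployment strategy for completing the architecture upgrade with zero downtime and zero data loss.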

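I. Migration Risk Assessment and Preparation

1.1 Compatibility Analysis

| Component | Mirrored queue compatibility | Quorum queue compatibility | Migration impact |
|---|---|---|---|
| RabbitMQ version | All 3.x releases (classic queue mirroring was removed in 4.0) | Requires ≥ 3.8.0 | Upgrade needed |
| Client libraries | Fully compatible | Must be able to pass the `x-queue-type` queue argument | Low risk |
| Management tools | Fully compatible | Plugins need updating | Medium risk |
| Monitoring | Traditional metrics | Needs Raft metrics | Needs adjustment |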
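The version requirement in the table can be checked mechanically before any node is touched. The sketch below is a minimal illustration, not part of the original tooling: `parse_version` and `supports_quorum_queues` are hypothetical helper names, and the version string is assumed to come from whatever inventory you already keep.

```python
MIN_QUORUM_VERSION = (3, 8, 0)  # first release with quorum queues, per the table above


def parse_version(text):
    """Turn '3.12.12' or '3.8.0-rc.1' into a comparable (major, minor, patch) tuple."""
    core = text.strip().split("-")[0].split("+")[0]
    parts = [int(part) for part in core.split(".")]
    parts += [0] * (3 - len(parts))  # pad '3.8' to (3, 8, 0)
    return tuple(parts[:3])


def supports_quorum_queues(version_text):
    """Return True when the given RabbitMQ version can host quorum queues."""
    return parse_version(version_text) >= MIN_QUORUM_VERSION


if __name__ == "__main__":
    for version in ("3.7.28", "3.8.0", "3.12.12"):
        print(version, supports_quorum_queues(version))
```

Comparing tuples of integers avoids the string-comparison trap where "3.12" sorts before "3.8".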

1.2 Data Audit Checklist

Complete the following audit before migrating:

#!/bin/bash
# audit_mirrored_queues.sh - mirrored queue audit script

echo "=== Mirrored queue cluster audit report ==="
echo "Generated at: $(date)"
echo "=========================="

# 1. Summarize classic queues.
# The columns are requested explicitly because list_queues prints only
# name and messages by default. A queue with any policy attached is counted
# as mirrored, so cross-check against the policies listed in step 3.
echo "1. Queue statistics:"
sudo rabbitmqctl list_queues name type policy messages --formatter json | jq '
  map(select(.type == "classic" or .type == null)) |
  {
    "total_queues": length,
    "mirrored_queues": (map(select((.policy // "") != "")) | length),
    "non_mirrored_queues": (map(select((.policy // "") == "")) | length),
    "total_messages": (map(.messages // 0) | add // 0),
    "avg_messages_per_queue": (if length > 0 then (map(.messages // 0) | add) / length else 0 end)
  }'

# 2. Detailed queue list (first 20 lines)
echo -e "\n2. Detailed queue list:"
sudo rabbitmqctl list_queues name messages policy state pid slave_pids consumers durable auto_delete | \
awk -F'\t' 'NR==1 {print} NR>1 {
  queue=$1; msgs=$2; pol=$3; st=$4; pid=$5; slaves=$6; cons=$7; dur=$8; ad=$9;
  printf "%-30s %-8s %-15s %-10s %-20s %-20s %-5s %-5s %-5s\n",
         queue, msgs, pol, st, pid, slaves, cons, dur, ad
}' | head -20

# 3. Policy audit
echo -e "\n3. Mirroring policy configuration:"
sudo rabbitmqctl list_policies --formatter json | jq '
  map({
    vhost: .vhost,
    name: .name,
    pattern: .pattern,
    definition: .definition,
    apply_to: (.["apply-to"] // .apply_to)
  })'

# 4. Consumer audit
echo -e "\n4. Consumer distribution:"
sudo rabbitmqctl list_consumers --formatter json | jq '
  group_by(.queue_name) |
  map({
    queue: .[0].queue_name,
    consumer_count: length,
    channels: (map(.channel_pid) | unique | length)
  }) | sort_by(-.consumer_count)'

# 5. Publish rate estimate
echo -e "\n5. Queues whose publish rate should be monitored:"
sudo rabbitmqctl list_queues name messages messages_ready messages_unacknowledged --formatter json | \
jq -r '.[] | select(.messages > 1000) | "Queue \(.name): current message count \(.messages)"'

# 6. Export the current definitions
echo -e "\n6. Exporting definitions backup..."
BACKUP_FILE="/tmp/rabbitmq_backup_$(date +%Y%m%d_%H%M%S).json"
sudo rabbitmqctl export_definitions "$BACKUP_FILE"
echo "Definitions backed up to: $BACKUP_FILE"
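The queue statistics step of the audit can also be computed in Python, which is handy when the audit output is archived as JSON and re-analysed later. This is a sketch under assumptions: `summarize_classic_queues` is a hypothetical helper, and each row is assumed to carry the `name`, `type`, `policy`, and `messages` fields that the audit requests from `list_queues`.

```python
def summarize_classic_queues(rows):
    """Summarize classic queues from rows shaped like list_queues JSON output."""
    # Rows without a type are treated as classic, matching the audit script.
    classic = [row for row in rows if row.get("type") in (None, "classic")]
    # A queue with any policy is counted as mirrored (same heuristic as the script).
    mirrored = [row for row in classic if row.get("policy")]
    total_messages = sum(row.get("messages") or 0 for row in classic)
    return {
        "total_queues": len(classic),
        "mirrored_queues": len(mirrored),
        "non_mirrored_queues": len(classic) - len(mirrored),
        "total_messages": total_messages,
        # Guard against an empty cluster instead of dividing by zero.
        "avg_messages_per_queue": total_messages / len(classic) if classic else 0,
    }


if __name__ == "__main__":
    sample = [
        {"name": "orders.payment", "type": "classic", "policy": "ha-all", "messages": 120},
        {"name": "audit.log", "type": "classic", "policy": "", "messages": 30},
        {"name": "events", "type": "quorum", "policy": "", "messages": 999},
    ]
    print(summarize_classic_queues(sample))
```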

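II. Hybrid Deployment Architecture Design

2.1 Hybrid Cluster Architecture

Hybrid cluster node plan (6-node example)

Node allocation:

Mirrored queue group (3 nodes):
- mq-mirror-01: 192.168.10.101
- mq-mirror-02: 192.168.10.102
- mq-mirror-03: 192.168.10.103

Quorum queue group (3 nodes):
- mq-quorum-01: 192.168.10.201
- mq-quorum-02: 192.168.10.202
- mq-quorum-03: 192.168.10.203

Load balancer:
- haproxy-01: 192.168.10.100 (VIP)
- Port mapping:
  - 5670: hybrid AMQP port
  - 15670: hybrid management port

Data flow:
- Client -> HAProxy -> dispatched according to routing rules
- Monitoring system: watches both clusters at the same time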

2.2 Hybrid Deployment Configuration

Step 1: Deploy the quorum queue node group

#!/bin/bash
# deploy_quorum_nodes.sh - deploy the quorum queue node group

QUORUM_NODES=("mq-quorum-01" "mq-quorum-02" "mq-quorum-03")
QUORUM_IPS=("192.168.10.201" "192.168.10.202" "192.168.10.203")
MIRROR_CLUSTER="rabbit@mq-mirror-01"

# 1. Install RabbitMQ 3.12.x on each new node
for i in "${!QUORUM_NODES[@]}"; do
  node=${QUORUM_NODES[$i]}
  ip=${QUORUM_IPS[$i]}
  echo "Deploying node: $node ($ip)"

  # Run on the target node over SSH. The heredoc delimiter is unquoted, so
  # $node and $ip are expanded locally before the script is sent.
  ssh root@"$ip" << EOF
# Set the hostname
hostnamectl set-hostname $node

# Add hosts entries; every quorum node must be able to resolve its peers
echo "192.168.10.101 mq-mirror-01" >> /etc/hosts
echo "192.168.10.102 mq-mirror-02" >> /etc/hosts
echo "192.168.10.103 mq-mirror-03" >> /etc/hosts
echo "192.168.10.201 mq-quorum-01" >> /etc/hosts
echo "192.168.10.202 mq-quorum-02" >> /etc/hosts
echo "192.168.10.203 mq-quorum-03" >> /etc/hosts

# Install RabbitMQ 3.12.x (see the quorum queue installation steps)
yum install -y erlang-25.3.2.6-1.el7
yum install -y rabbitmq-server-3.12.12-1.el7

# Copy the Erlang cookie (from the primary node of the mirrored cluster)
scp mq-mirror-01:/var/lib/rabbitmq/.erlang.cookie /var/lib/rabbitmq/
chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie
chmod 400 /var/lib/rabbitmq/.erlang.cookie

# Write the settings for the quorum queue group
cat > /etc/rabbitmq/rabbitmq.conf << CONFIGEOF
# Quorum queues need no enable switch here; a queue becomes a quorum queue
# when it is declared with x-queue-type=quorum.

# Do not join the existing cluster automatically (stay independent)
cluster_formation.peer_discovery_backend = rabbit_peer_discovery_classic_config
cluster_formation.classic_config.nodes.1 = rabbit@mq-quorum-01
cluster_formation.classic_config.nodes.2 = rabbit@mq-quorum-02
cluster_formation.classic_config.nodes.3 = rabbit@mq-quorum-03

# Performance tuning (segment_max_entries values above 65535 are not supported)
raft.segment_max_entries = 32768
raft.wal_max_size_bytes = 104857600

# Partition handling
cluster_partition_handling = ignore
CONFIGEOF

# Start the service
systemctl start rabbitmq-server
systemctl enable rabbitmq-server

# Enable the management plugin
rabbitmq-plugins enable rabbitmq_management
EOF
done

# 2. Build the independent quorum queue cluster
echo "Building the quorum queue cluster..."
ssh root@${QUORUM_IPS[0]} "rabbitmqctl stop_app; rabbitmqctl reset; rabbitmqctl start_app"
ssh root@${QUORUM_IPS[1]} "rabbitmqctl stop_app; rabbitmqctl reset; rabbitmqctl join_cluster rabbit@mq-quorum-01; rabbitmqctl start_app"
ssh root@${QUORUM_IPS[2]} "rabbitmqctl stop_app; rabbitmqctl reset; rabbitmqctl join_cluster rabbit@mq-quorum-01; rabbitmqctl start_app"
echo "Quorum queue cluster deployment complete"

Step 2: Configure the hybrid load balancer

# haproxy_mixed_cluster.cfg
global
    log /dev/log local0
    maxconn 10000
    user haproxy
    group haproxy
    daemon
    stats socket /var/run/haproxy.sock mode 660 level admin

defaults
    log global
    mode tcp
    option tcplog
    option dontlognull
    retries 3
    timeout connect 5s
    # The client and server timeouts must exceed the AMQP heartbeat interval
    # used by clients, otherwise idle connections are closed by HAProxy.
    timeout client 50s
    timeout server 50s
    timeout check 10s

# Health dashboard frontend
frontend health_dashboard
    bind *:8888
    mode http
    stats enable
    stats uri /haproxy?stats
    stats refresh 30s
    stats auth admin:MixedCluster2024

# Hybrid AMQP frontend - dispatch according to routing rules
frontend mixed_amqp_frontend
    bind *:5670
    mode tcp
    tcp-request inspect-delay 5s
    tcp-request content accept if { req_ssl_hello_type 1 }

    # Routing rules keyed on the queue declaration.
    # Caution: an AMQP 0-9-1 client sends only the 8-byte protocol header
    # before the broker replies, so queue.declare is never part of the first
    # payload bytes and these rules do not match in practice. Clients that
    # need the quorum cluster should use a dedicated port or connect to the
    # quorum nodes directly.
    use_backend quorum_backend if { req.payload(0,20) -m reg -i ^declare.*queue.*quorum }
    use_backend quorum_backend if { req.payload(0,20) -m reg -i ^queue.declare.*x-queue-type.*quorum }

    # Default route goes to the mirrored queues (backward compatible)
    default_backend mirror_backend

# Mirrored queue backend
backend mirror_backend
    mode tcp
    balance leastconn
    option tcp-check
    tcp-check connect port 5672
    tcp-check send "PING\r\n"
    tcp-check expect string "AMQP"
    server mq-mirror-01 192.168.10.101:5672 check inter 2s rise 2 fall 3
    server mq-mirror-02 192.168.10.102:5672 check inter 2s rise 2 fall 3
    server mq-mirror-03 192.168.10.103:5672 check inter 2s rise 2 fall 3

# Quorum queue backend
backend quorum_backend
    mode tcp
    balance leastconn
    option tcp-check
    tcp-check connect port 5672
    tcp-check send "PING\r\n"
    tcp-check expect string "AMQP"
    server mq-quorum-01 192.168.10.201:5672 check inter 1s rise 2 fall 2
    server mq-quorum-02 192.168.10.202:5672 check inter 1s rise 2 fall 2
    server mq-quorum-03 192.168.10.203:5672 check inter 1s rise 2 fall 2

# Hybrid management UI
listen mixed_management
    bind *:15670
    mode http
    balance roundrobin
    # This API endpoint requires HTTP basic authentication; without an
    # Authorization header the check receives a 401 response.
    option httpchk GET /api/health/checks/node-is-mirror-sync-critical

    # Management nodes of the mirrored cluster
    server mq-mirror-01 192.168.10.101:15672 check inter 5s rise 2 fall 3
    server mq-mirror-02 192.168.10.102:15672 check inter 5s rise 2 fall 3
    server mq-mirror-03 192.168.10.103:15672 check inter 5s rise 2 fall 3

    # Management nodes of the quorum cluster
    server mq-quorum-01 192.168.10.201:15672 check inter 5s rise 2 fall 3
    server mq-quorum-02 192.168.10.202:15672 check inter 5s rise 2 fall 3
    server mq-quorum-03 192.168.10.203:15672 check inter 5s rise 2 fall 3

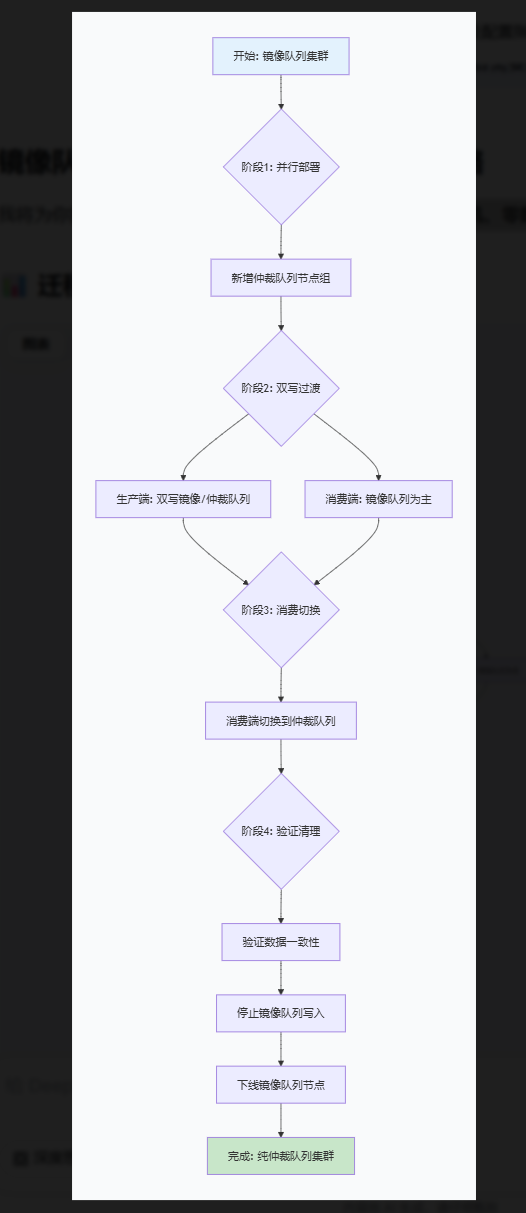
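A TCP-mode load balancer can only base its routing decision on the bytes a client sends before a backend is chosen. For AMQP 0-9-1 that is the 8-byte protocol header, after which the client waits for the broker, so the queue type is not visible at that point. The sketch below illustrates this; `classify_first_bytes` and `first_bytes_mention_queue_type` are hypothetical helper names used only for the demonstration.

```python
# The AMQP 0-9-1 protocol header: the literal "AMQP" followed by 0, 0, 9, 1.
AMQP_0_9_1_HEADER = b"AMQP\x00\x00\x09\x01"


def classify_first_bytes(data: bytes) -> str:
    """Guess the protocol from the first bytes of a new TCP connection."""
    if data.startswith(AMQP_0_9_1_HEADER):
        return "amqp-0-9-1"
    # TLS records start with content type 0x16 (handshake) and major version 0x03.
    if len(data) >= 2 and data[0] == 0x16 and data[1] == 0x03:
        return "tls-handshake"
    return "unknown"


def first_bytes_mention_queue_type(data: bytes) -> bool:
    """Check whether the initial bytes carry any queue type information."""
    return b"x-queue-type" in data or b"quorum" in data


if __name__ == "__main__":
    print(classify_first_bytes(AMQP_0_9_1_HEADER))
    print(first_bytes_mention_queue_type(AMQP_0_9_1_HEADER))
```

In practice this means queue-type routing has to happen at a level the balancer can see, such as a separate listening port per cluster or client-side configuration.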

III. Four-Phase Migration Plan

Phase 1: Parallel Operation and Dual Writes (2-4 weeks)

1.1 Dual-Write Changes on the Producer Side

# dual_write_producer.py - dual-write producer
import pika
import json
import logging
from datetime import datetime
from retry import retry  # third-party "retry" package

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class DualWriteProducer:
    def __init__(self):
        # Connection state
        self.mirror_connection = None
        self.quorum_connection = None
        # Load balancer address
        self.lb_host = "192.168.10.100"
        self.lb_port = 5670

    @retry(tries=3, delay=1)
    def connect_mirror_cluster(self):
        """Connect to the mirrored queue cluster."""
        credentials = pika.PlainCredentials('app_user', 'AppPassword123')
        # Connect to the mirrored cluster directly (bypassing the load balancer)
        mirror_nodes = [
            ('192.168.10.101', 5672),
            ('192.168.10.102', 5672),
            ('192.168.10.103', 5672)
        ]
        for host, port in mirror_nodes:
            try:
                params = pika.ConnectionParameters(
                    host=host, port=port,
                    credentials=credentials,
                    heartbeat=300,
                    connection_attempts=2
                )
                return pika.BlockingConnection(params)
            except Exception as e:
                logger.warning(f"Cannot connect to mirrored node {host}:{port}: {e}")
                continue
        raise Exception("Cannot connect to any mirrored queue node")

    @retry(tries=3, delay=1)
    def connect_quorum_cluster(self):
        """Connect to the quorum queue cluster."""
        credentials = pika.PlainCredentials('app_user', 'AppPassword123')
        # Connect to the quorum cluster through the load balancer
        params = pika.ConnectionParameters(
            host=self.lb_host,
            port=self.lb_port,
            credentials=credentials,
            heartbeat=600,
            socket_timeout=30,
            # Client properties are informational only (they show up in the
            # management UI); the queue type itself is chosen in queue_declare.
            client_properties={
                'connection_name': 'quorum-migration-client',
                'queue_type': 'quorum'
            }
        )
        return pika.BlockingConnection(params)

    def dual_write(self, queue_name, message, is_critical=True):
        """
        Write a message to both the mirrored queue and the quorum queue.

        Args:
            queue_name: queue name
            message: message content
            is_critical: whether this is critical traffic (decides the write order)
        """
        message_id = f"msg_{datetime.now().strftime('%Y%m%d_%H%M%S_%f')}"
        enriched_message = {
            'id': message_id,
            'data': message,
            'timestamp': datetime.now().isoformat(),
            'source': 'dual-write-producer'
        }
        message_body = json.dumps(enriched_message, ensure_ascii=False)

        # Track the outcome of each write
        results = {
            'mirror_success': False,
            'quorum_success': False,
            'errors': []
        }

        try:
            # The write order depends on how critical the message is
            if is_critical:
                # Critical traffic: write the quorum queue first (more reliable)
                writers = (self._write_to_quorum, self._write_to_mirror)
            else:
                # Non-critical traffic: write the mirrored queue first (backward compatible)
                writers = (self._write_to_mirror, self._write_to_quorum)

            for write in writers:
                outcome = write(queue_name, message_body)
                # Accumulate errors; a plain dict.update would replace the list
                results['errors'].extend(outcome.pop('errors', []))
                results.update(outcome)

            # Check the outcome
            if not results['mirror_success'] and results['quorum_success']:
                logger.warning(f"Message {message_id} reached only the quorum queue; the mirrored queue write failed")
            elif results['mirror_success'] and not results['quorum_success']:
                logger.warning(f"Message {message_id} reached only the mirrored queue; the quorum queue write failed")
            elif not results['mirror_success'] and not results['quorum_success']:
                raise Exception(f"Dual write failed: {results['errors']}")
            else:
                logger.info(f"Message {message_id} written to both queues")
            return results
        except Exception as e:
            logger.error(f"Dual write error: {e}")
            # Record the failure and raise an alert
            self._alert_failure(queue_name, message_id, str(e))
            raise

    def _write_to_mirror(self, queue_name, message_body):
        """Write to the mirrored queue."""
        try:
            if not self.mirror_connection or self.mirror_connection.is_closed:
                self.mirror_connection = self.connect_mirror_cluster()
            channel = self.mirror_connection.channel()

            # Declare the mirrored queue (mirroring comes from the existing policy)
            channel.queue_declare(
                queue=queue_name,
                durable=True,
                arguments={'x-queue-type': 'classic'}  # explicitly a classic queue
            )

            # Publish the message
            channel.basic_publish(
                exchange='',
                routing_key=queue_name,
                body=message_body.encode('utf-8'),
                properties=pika.BasicProperties(
                    delivery_mode=2,
                    content_type='application/json',
                    headers={'queue_type': 'mirror'}
                )
            )
            return {'mirror_success': True}
        except Exception as e:
            logger.error(f"Write to mirrored queue failed: {e}")
            return {'mirror_success': False, 'errors': [f'mirror: {str(e)}']}

    def _write_to_quorum(self, queue_name, message_body):
        """Write to the quorum queue."""
        try:
            if not self.quorum_connection or self.quorum_connection.is_closed:
                self.quorum_connection = self.connect_quorum_cluster()
            channel = self.quorum_connection.channel()

            # Declare the quorum queue
            channel.queue_declare(
                queue=f"{queue_name}.quorum",  # suffix distinguishes it from the mirrored queue
                durable=True,
                arguments={
                    'x-queue-type': 'quorum',
                    'x-quorum-initial-group-size': 3,
                    'x-delivery-limit': 5
                }
            )

            # Publish the message
            channel.basic_publish(
                exchange='',
                routing_key=f"{queue_name}.quorum",
                body=message_body.encode('utf-8'),
                properties=pika.BasicProperties(
                    delivery_mode=2,
                    content_type='application/json',
                    headers={'queue_type': 'quorum'}
                )
            )
            return {'quorum_success': True}
        except Exception as e:
            logger.error(f"Write to quorum queue failed: {e}")
            return {'quorum_success': False, 'errors': [f'quorum: {str(e)}']}

    def _alert_failure(self, queue_name, message_id, error):
        """Send an alert."""
        # Alerting logic goes here, for example forwarding to a monitoring system
        alert_msg = {
            'level': 'ERROR',
            'type': 'DUAL_WRITE_FAILURE',
            'queue': queue_name,
            'message_id': message_id,
            'error': error,
            'timestamp': datetime.now().isoformat()
        }
        logger.error(f"Alert: {json.dumps(alert_msg)}")
        # Integration point for a monitoring system (placeholder, not implemented):
        # send_to_monitoring_system(alert_msg)


# Usage example
if __name__ == "__main__":
    producer = DualWriteProducer()

    # Test a dual write
    test_message = {
        'order_id': 'ORD-2024-001',
        'amount': 199.99,
        'customer': 'john.doe@example.com'
    }

    # Critical business message
    result = producer.dual_write(
        queue_name="orders.payment",
        message=test_message,
        is_critical=True
    )
    print(f"Dual write result: {result}")
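Each write helper returns its own small dict, and a failed write carries an `errors` list. Combining those dicts with a plain `dict.update` keeps only the last `errors` list, so a double failure would report a single error. The sketch below shows one way to merge them while accumulating errors; `merge_write_results` is a hypothetical helper name, not part of the producer above.

```python
def merge_write_results(*outcomes):
    """Merge per-target write outcomes, accumulating every error message."""
    merged = {"mirror_success": False, "quorum_success": False, "errors": []}
    for outcome in outcomes:
        for key, value in outcome.items():
            if key == "errors":
                merged["errors"].extend(value)  # append instead of overwrite
            else:
                merged[key] = value
    return merged


if __name__ == "__main__":
    mirror_outcome = {"mirror_success": False, "errors": ["mirror: connection refused"]}
    quorum_outcome = {"quorum_success": False, "errors": ["quorum: timeout"]}
    print(merge_write_results(mirror_outcome, quorum_outcome))
```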

1.2 Data Consistency Verification Script

#!/bin/bash
# verify_data_consistency.sh - verify dual-write data consistency

echo "=== Dual-write data consistency check ==="
echo "Start time: $(date)"
echo "========================="

# Configuration
MIRROR_NODE="192.168.10.101"
QUORUM_NODE="192.168.10.201"
QUEUE_PATTERN="orders.*"  # shell-style glob selecting the queues to verify

# 1. Fetch queue message counts from the mirrored cluster
echo "1. Fetching message counts from the mirrored queues..."
MIRROR_MESSAGES_FILE="/tmp/mirror_messages_$(date +%s).json"
rabbitmqadmin -H "$MIRROR_NODE" -u admin -p AdminPassword123 list queues name messages --format=raw_json > "$MIRROR_MESSAGES_FILE"

# 2. Fetch queue message counts from the quorum cluster
echo "2. Fetching message counts from the quorum queues..."
QUORUM_MESSAGES_FILE="/tmp/quorum_messages_$(date +%s).json"
rabbitmqadmin -H "$QUORUM_NODE" -u admin -p AdminPassword123 list queues name messages --format=raw_json > "$QUORUM_MESSAGES_FILE"

# 3. Compare message counts per queue
echo "3. Comparing message counts..."
python3 << EOF
import json
import sys
from fnmatch import fnmatch

with open('$MIRROR_MESSAGES_FILE', 'r') as f:
    mirror_data = json.load(f)
with open('$QUORUM_MESSAGES_FILE', 'r') as f:
    quorum_data = json.load(f)

# Build name -> count maps; the pattern is matched as a glob, because a plain
# substring test would never find "orders.*" inside a real queue name
mirror_map = {item['name']: item.get('messages', 0) for item in mirror_data if fnmatch(item['name'], '$QUEUE_PATTERN')}
quorum_map = {item['name'].replace('.quorum', ''): item.get('messages', 0) for item in quorum_data if fnmatch(item['name'], '$QUEUE_PATTERN')}

print(f"{'Queue':<30} {'Mirrored':<12} {'Quorum':<12} {'Diff':<8}")
print("-" * 65)

total_diff = 0
for queue in sorted(set(mirror_map) | set(quorum_map)):
    mirror_count = mirror_map.get(queue, 0)
    quorum_count = quorum_map.get(queue, 0)
    diff = abs(mirror_count - quorum_count)
    if diff > 0:
        status = "❌"
        total_diff += diff
    else:
        status = "✅"
    print(f"{queue:<30} {mirror_count:<12} {quorum_count:<12} {diff:<8} {status}")

print(f"\nTotal message count difference: {total_diff}")
sys.exit(0 if total_diff == 0 else 1)
EOF
CONSISTENCY_RESULT=$?

# 4. Sample message contents when the counts differ
if [ $CONSISTENCY_RESULT -ne 0 ]; then
  echo -e "\n4. Sampling message contents for comparison..."

  # Pick the queue with the largest difference for a closer look
  DIFF_QUEUE=$(python3 << EOF
import json

with open('$MIRROR_MESSAGES_FILE', 'r') as f:
    mirror_data = json.load(f)
with open('$QUORUM_MESSAGES_FILE', 'r') as f:
    quorum_data = json.load(f)

mirror_map = {item['name']: item.get('messages', 0) for item in mirror_data}
quorum_map = {item['name'].replace('.quorum', ''): item.get('messages', 0) for item in quorum_data}

max_diff = 0
max_queue = ""
for queue in set(mirror_map) | set(quorum_map):
    diff = abs(mirror_map.get(queue, 0) - quorum_map.get(queue, 0))
    if diff > max_diff:
        max_diff = diff
        max_queue = queue
print(max_queue)
EOF
)
  echo "Inspecting queue: $DIFF_QUEUE"

  # Fetch a few sample messages from each side
  echo "Fetching sample messages from the mirrored queue..."
  rabbitmqadmin -H "$MIRROR_NODE" -u admin -p AdminPassword123 get queue="$DIFF_QUEUE" count=3 --format=raw_json > /tmp/mirror_sample.json
  echo "Fetching sample messages from the quorum queue..."
  rabbitmqadmin -H "$QUORUM_NODE" -u admin -p AdminPassword123 get queue="${DIFF_QUEUE}.quorum" count=3 --format=raw_json > /tmp/quorum_sample.json
  echo "Sample message comparison complete"
fi

# 5. Remove temporary files
rm -f "$MIRROR_MESSAGES_FILE" "$QUORUM_MESSAGES_FILE" /tmp/mirror_sample.json /tmp/quorum_sample.json

echo -e "\nFinished at: $(date)"
if [ $CONSISTENCY_RESULT -eq 0 ]; then
  echo "✅ Data consistency check passed"
else
  echo "❌ Data consistency check failed, differences found"
  exit 1
fi
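The comparison at the heart of the verification script can be isolated into a pure function, which makes it easy to unit-test without a broker. This is a sketch under assumptions: `compare_queue_counts` is a hypothetical helper, the rows are assumed to be dicts with `name` and `messages` keys, and queue patterns are treated as shell-style globs (a substring test would never find `orders.*` inside `orders.payment`).

```python
from fnmatch import fnmatch


def compare_queue_counts(mirror_rows, quorum_rows, pattern, suffix=".quorum"):
    """Return {queue_name: absolute message count difference} for matching queues."""

    def base_name(name):
        # Quorum copies carry a suffix; strip it so both sides share one key.
        return name[: -len(suffix)] if name.endswith(suffix) else name

    mirror = {
        row["name"]: row.get("messages", 0)
        for row in mirror_rows
        if fnmatch(row["name"], pattern)
    }
    quorum = {
        base_name(row["name"]): row.get("messages", 0)
        for row in quorum_rows
        if fnmatch(base_name(row["name"]), pattern)
    }
    return {
        name: abs(mirror.get(name, 0) - quorum.get(name, 0))
        for name in sorted(set(mirror) | set(quorum))
    }


if __name__ == "__main__":
    mirror_rows = [{"name": "orders.payment", "messages": 10}, {"name": "audit", "messages": 5}]
    quorum_rows = [{"name": "orders.payment.quorum", "messages": 8}]
    print(compare_queue_counts(mirror_rows, quorum_rows, "orders.*"))
```

Keep in mind that message counts only match when both queues are drained at the same pace, so a non-zero difference is a prompt for closer inspection rather than proof of loss.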

Phase 2: Gradual Consumer Migration (3-6 weeks)

2.1 Smart Consumer Proxy

# smart_consumer_proxy.py - smart consumer proxy
import pika
import json
import threading
import time
import logging
from collections import deque
from datetime import datetime

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


class SmartConsumerProxy:
    def __init__(self, config):
        """
        Smart consumer proxy.

        Args:
            config: configuration dict
                - mirror_nodes: list of mirrored cluster nodes
                - quorum_nodes: list of quorum cluster nodes
                - queue_mapping: queue mapping {mirrored queue: quorum queue}
                - migration_progress: migration progress {queue: fraction from 0 to 1}
        """
        self.config = config
        self.mirror_connection = None
        self.quorum_connection = None

        # Consumption state tracking
        self.consumption_stats = {
            'mirror': 0,
            'quorum': 0,
            'last_switch_time': {},
            'errors': deque(maxlen=1000)
        }

        # Start the monitoring thread
        self.monitor_thread = threading.Thread(target=self._monitor_consumption, daemon=True)
        self.monitor_thread.start()

    def consume_with_gradual_migration(self, queue_name, callback, migration_ratio=0.1):
        """
        Consume with gradual migration.

        Args:
            queue_name: queue name
            callback: message handling callback
            migration_ratio: initial migration ratio (0.1 = 10% of traffic to the quorum queue)
        """
        mirror_queue = queue_name
        quorum_queue = f"{queue_name}.quorum"

        # Record the initial ratio so it can be adjusted later
        self.config.setdefault('migration_progress', {})[queue_name] = migration_ratio

        # Mirrored queue consumer (primary)
        mirror_thread = threading.Thread(
            target=self._consume_from_mirror,
            args=(mirror_queue, callback, migration_ratio),
            daemon=True
        )

        # Quorum queue consumer (share increases gradually)
        quorum_thread = threading.Thread(
            target=self._consume_from_quorum,
            args=(quorum_queue, callback, migration_ratio),
            daemon=True
        )

        mirror_thread.start()
        quorum_thread.start()
        logger.info(f"Started gradual migration for queue {queue_name}, initial quorum ratio: {migration_ratio*100}%")

        # Return a control handle for adjusting the ratio at runtime
        return {
            'queue': queue_name,
            'mirror_thread': mirror_thread,
            'quorum_thread': quorum_thread,
            'adjust_ratio': self.adjust_migration_ratio
        }

    def _consume_from_mirror(self, queue_name, callback, migration_ratio):
        """Consume from the mirrored queue (main traffic)."""
        try:
            connection = self._get_mirror_connection()
            channel = connection.channel()

            # Set QoS
            channel.basic_qos(prefetch_count=10)

            # Wrapper around the user callback
            def wrapped_callback(ch, method, properties, body):
                try:
                    # Decide per message, re-reading the ratio so that runtime
                    # adjustments take effect without restarting the consumer
                    import random
                    ratio = self.config.get('migration_progress', {}).get(queue_name, migration_ratio)
                    if random.random() < ratio:
                        # This share is left to the quorum queue copy: ack and skip
                        ch.basic_ack(delivery_tag=method.delivery_tag)
                        return

                    # Process the message
                    message = json.loads(body.decode('utf-8'))
                    result = callback(message, source='mirror')
                    if result:
                        ch.basic_ack(delivery_tag=method.delivery_tag)
                        self.consumption_stats['mirror'] += 1
                    else:
                        # Processing failed, requeue
                        ch.basic_nack(delivery_tag=method.delivery_tag, requeue=True)
                except Exception as e:
                    logger.error(f"Failed to process mirrored queue message: {e}")
                    ch.basic_nack(delivery_tag=method.delivery_tag, requeue=False)
                    self.consumption_stats['errors'].append({
                        'queue': queue_name,
                        'source': 'mirror',
                        'error': str(e),
                        'timestamp': datetime.now().isoformat()
                    })

            # Start consuming
            channel.basic_consume(
                queue=queue_name,
                on_message_callback=wrapped_callback,
                auto_ack=False
            )
            logger.info(f"Consuming from mirrored queue: {queue_name}")
            channel.start_consuming()
        except Exception as e:
            logger.error(f"Mirrored queue consumer error: {e}")

    def _consume_from_quorum(self, queue_name, callback, migration_ratio):
        """Consume from the quorum queue (share increases gradually)."""
        try:
            connection = self._get_quorum_connection()
            channel = connection.channel()

            # The quorum queue uses a different QoS setting
            channel.basic_qos(prefetch_count=5)

            # Wrapper around the user callback
            def wrapped_callback(ch, method, properties, body):
                try:
                    # Process the message
                    message = json.loads(body.decode('utf-8'))
                    result = callback(message, source='quorum')
                    if result:
                        ch.basic_ack(delivery_tag=method.delivery_tag)
                        self.consumption_stats['quorum'] += 1
                    else:
                        # Quorum queue messages are normally not requeued here
                        ch.basic_nack(delivery_tag=method.delivery_tag, requeue=False)
                except Exception as e:
                    logger.error(f"Failed to process quorum queue message: {e}")
                    ch.basic_nack(delivery_tag=method.delivery_tag, requeue=False)
                    self.consumption_stats['errors'].append({
                        'queue': queue_name,
                        'source': 'quorum',
                        'error': str(e),
                        'timestamp': datetime.now().isoformat()
                    })

            # Start consuming
            channel.basic_consume(
                queue=queue_name,
                on_message_callback=wrapped_callback,
                auto_ack=False
            )
            logger.info(f"Consuming from quorum queue: {queue_name}")
            channel.start_consuming()
        except Exception as e:
            logger.error(f"Quorum queue consumer error: {e}")

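    def adjust_migration_ratio(self, queue_name, new_ratio):
        """Adjust the migration ratio at runtime."""
        if 0 <= new_ratio <= 1:
            logger.info(f"Adjusting migration ratio for queue {queue_name}: {new_ratio*100}%")
            # The new value is stored in the shared config; consumer threads must
            # read it from there (or be notified another way, such as a message
            # queue) for the change to take effect
            self.config.setdefault('migration_progress', {})[queue_name] = new_ratio
            return True
        return False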

    def _get_mirror_connection(self):
        """Get the mirrored queue connection."""
        if not self.mirror_connection or self.mirror_connection.is_closed:
            credentials = pika.PlainCredentials('app_user', 'AppPassword123')
            params = pika.ConnectionParameters(
                host=self.config['mirror_nodes'][0],
                port=5672,
                credentials=credentials,
                heartbeat=300
            )
            self.mirror_connection = pika.BlockingConnection(params)
        return self.mirror_connection

    def _get_quorum_connection(self):
        """Get the quorum queue connection."""
        if not self.quorum_connection or self.quorum_connection.is_closed:
            credentials = pika.PlainCredentials('app_user', 'AppPassword123')
            params = pika.ConnectionParameters(
                host=self.config['quorum_nodes'][0],
                port=5672,
                credentials=credentials,
                heartbeat=600,
                socket_timeout=30
            )
            self.quorum_connection = pika.BlockingConnection(params)
        return self.quorum_connection

    def _monitor_consumption(self):
        """Monitor consumption status."""
        while True:
            time.sleep(60)  # report once a minute
            total = self.consumption_stats['mirror'] + self.consumption_stats['quorum']
            if total > 0:
                mirror_pct = (self.consumption_stats['mirror'] / total) * 100
                quorum_pct = (self.consumption_stats['quorum'] / total) * 100
                logger.info(
                    f"Consumption stats - mirrored: {self.consumption_stats['mirror']} ({mirror_pct:.1f}%) | "
                    f"quorum: {self.consumption_stats['quorum']} ({quorum_pct:.1f}%) | "
                    f"errors: {len(self.consumption_stats['errors'])}"
                )
            # Reset the counters (optional; could be changed to hourly statistics)
            self.consumption_stats['mirror'] = 0
            self.consumption_stats['quorum'] = 0

    def get_migration_report(self):
        """Build a migration report."""
        return {
            'timestamp': datetime.now().isoformat(),
            'consumption_stats': dict(self.consumption_stats),
            'migration_progress': self.config.get('migration_progress', {}),
            'recent_errors': list(self.consumption_stats['errors'])[-10:]  # last 10 errors
        }

# Usage example
if __name__ == "__main__":
    config = {
        'mirror_nodes': ['192.168.10.101'],
        'quorum_nodes': ['192.168.10.201'],
        'migration_progress': {
            'orders.payment': 0.1,      # 10% migrated to the quorum queue
            'user.notifications': 0.3   # 30% migrated to the quorum queue
        }
    }
    proxy = SmartConsumerProxy(config)

    # Message handling callback
    def process_message(message, source):
        print(f"Processing message from {source}: {message.get('id', 'unknown')}")
        return True  # processed successfully

    # Start consuming with gradual migration
    handler = proxy.consume_with_gradual_migration(
        queue_name="orders.payment",
        callback=process_message,
        migration_ratio=0.1
    )

    # Adjust the ratio after running for a while
    time.sleep(300)  # run for 5 minutes

    # Raise the quorum queue share to 30%
    handler['adjust_ratio']("orders.payment", 0.3)

    # Keep running
    try:
        while True:
            time.sleep(1)
    except KeyboardInterrupt:
        print("Stopping the consumer proxy")
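With dual writes, every message exists in both queues, so the two consumers need to agree on which side owns each message. A random per-message decision on one side cannot guarantee that: some messages would be handled twice and others not at all. One common alternative, sketched here as an assumption rather than as the proxy's actual behaviour, is to derive the decision from a stable hash of the message `id` so both consumers reach the same answer independently. `routes_to_quorum` and `should_process` are hypothetical helper names.

```python
import hashlib


def routes_to_quorum(message_id: str, ratio: float) -> bool:
    """Return True when the quorum-side consumer owns this message."""
    digest = hashlib.sha256(message_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # stable value in [0, 2**64)
    # Integer comparison avoids float rounding at the ratio = 1.0 boundary.
    return bucket < int(ratio * 2**64)


def should_process(message_id: str, ratio: float, source: str) -> bool:
    """Decide whether the consumer reading from `source` handles the message."""
    owned_by_quorum = routes_to_quorum(message_id, ratio)
    return owned_by_quorum if source == "quorum" else not owned_by_quorum


if __name__ == "__main__":
    for message_id in ("msg_1", "msg_2", "msg_3"):
        print(
            message_id,
            should_process(message_id, 0.3, "mirror"),
            should_process(message_id, 0.3, "quorum"),
        )
```

Each consumer acknowledges messages it does not own without processing them, and raising the ratio only moves ownership from the mirrored side to the quorum side. Both consumers must see the same ratio at the same time for the split to stay exact.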

2.2 Migration Progress Control Panel

#!/bin/bash
# migration_control_panel.sh - migration control panel

echo "RabbitMQ Migration Control Panel"
echo "====================="
echo "Current time: $(date)"
echo ""

# Show the migration status
show_migration_status() {
  echo "Migration status overview:"
  echo "--------------"

  # Fetch queue status (quoted delimiter: the Python code needs no shell expansion)
  QUEUE_STATUS=$(python3 << 'EOF'
import json
import requests
from datetime import datetime

def get_queue_stats(host, port, username, password):
    """Fetch queue statistics from the management API."""
    url = f"http://{host}:{port}/api/queues"
    response = requests.get(url, auth=(username, password))
    return response.json()

# Status of both clusters
mirror_stats = get_queue_stats("192.168.10.101", 15672, "admin", "AdminPassword123")
quorum_stats = get_queue_stats("192.168.10.201", 15672, "admin", "AdminPassword123")

# Analyse migration progress
migration_report = {}
for queue in mirror_stats:
    queue_name = queue['name']
    mirror_messages = queue.get('messages', 0)

    # Look up the matching quorum queue
    quorum_queue_name = f"{queue_name}.quorum"
    quorum_messages = 0
    for qq in quorum_stats:
        if qq['name'] == quorum_queue_name:
            quorum_messages = qq.get('messages', 0)
            break

    total_messages = mirror_messages + quorum_messages
    if total_messages > 0:
        migration_ratio = quorum_messages / total_messages
        if migration_ratio > 0.9:
            status = "🟢"
        elif migration_ratio > 0.5:
            status = "🟡"
        elif migration_ratio > 0:
            status = "🔴"
        else:
            status = "⚪"
    else:
        migration_ratio = 0
        status = "⚪"

    migration_report[queue_name] = {
        'mirror': mirror_messages,
        'quorum': quorum_messages,
        'ratio': migration_ratio,
        'status': status
    }

# Print the table
print(f"{'Queue':<30} {'Mirrored':<10} {'Quorum':<10} {'Migrated':<10} {'Status':<5}")
print("-" * 70)
for queue, stats in sorted(migration_report.items(), key=lambda x: x[1]['ratio'], reverse=True):
    if stats['mirror'] > 0 or stats['quorum'] > 0:
        print(f"{queue:<30} {stats['mirror']:<10} {stats['quorum']:<10} {stats['ratio']*100:<9.1f}% {stats['status']:<5}")
EOF
)
  echo "$QUEUE_STATUS"
}

# Migration control commands.
# The /api/migration/* endpoints are assumed to be served by a separate
# migration control service; they are not part of RabbitMQ or HAProxy.
control_migration() {
  echo ""
  echo "Migration control options:"
  echo "1. Accelerate queue migration"
  echo "2. Roll back queue migration"
  echo "3. Pause queue migration"
  echo "4. View detailed report"
  echo "5. Generate migration plan"
  read -p "Choose an action: " choice

  case $choice in
    1)
      read -p "Queue name: " queue_name
      read -p "Target migration ratio (0-100): " target_ratio
      echo "Accelerating migration of queue $queue_name to $target_ratio%"
      # Call the API to adjust the migration ratio
      curl -X POST "http://192.168.10.100:8888/api/migration/accelerate" \
        -H "Content-Type: application/json" \
        -d "{\"queue\": \"$queue_name\", \"target_ratio\": $target_ratio}" \
        -u admin:AdminPassword123
      ;;
    2)
      read -p "Queue name: " queue_name
      read -p "Rollback ratio (0-100): " rollback_ratio
      echo "Rolling back queue $queue_name to $rollback_ratio%"
      # Call the API to roll back the migration
      curl -X POST "http://192.168.10.100:8888/api/migration/rollback" \
        -H "Content-Type: application/json" \
        -d "{\"queue\": \"$queue_name\", \"target_ratio\": $rollback_ratio}" \
        -u admin:AdminPassword123
      ;;
    3)
      read -p "Queue name: " queue_name
      echo "Pausing migration of queue $queue_name"
      # Call the API to pause the migration
      curl -X POST "http://192.168.10.100:8888/api/migration/pause" \
        -H "Content-Type: application/json" \
        -d "{\"queue\": \"$queue_name\"}" \
        -u admin:AdminPassword123
      ;;
    4)
      generate_detailed_report
      ;;
    5)
      generate_migration_plan
      ;;
    *)
      echo "Invalid choice"
      ;;
  esac
}

generate_detailed_report() {

echo "生成详细迁移报告..."

REPORT_FILE="/tmp/migration_report_$(date +%Y%m%d_%H%M%S).html"

python3 << EOF > $REPORT_FILE

import json

import requests

from datetime import datetime

def generate_html_report():

html = '''

<!DOCTYPE html>

<html>

<head>

<title>RabbitMQ迁移报告</title>

<style>

body { font-family: Arial, sans-serif; margin: 20px; }

h1 { color: #333; }

.queue-card {

border: 1px solid #ddd;

padding: 15px;

margin: 10px 0;

border-radius: 5px;

background-color: #f9f9f9;

}

.progress-bar {

height: 20px;

background-color: #e0e0e0;

border-radius: 10px;

overflow: hidden;

margin: 5px 0;

}

.progress-fill {

height: 100%;

background-color: #4CAF50;

transition: width 0.3s;

}

.stats-grid {

display: grid;

grid-template-columns: repeat(3, 1fr);

gap: 20px;

margin: 20px 0;

}

.stat-card {

padding: 15px;

background-color: #fff;

border-radius: 5px;

box-shadow: 0 2px 4px rgba(0,0,0,0.1);

}

</style>

</head>

<body>

<h1>RabbitMQ迁移报告</h1>

<p>生成时间: ${datetime.now().isoformat()}</p>

'''

这里添加数据获取和HTML生成逻辑

html += "</body></html>

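Phase 3: Switchover Validation and Cleanup (1-2 weeks)

3.1 Final Switchover Validation Script

#!/bin/bash
# final_switch_validation.sh - final switchover validation

echo "=== Final switchover validation ==="
echo "Start time: $(date)"
echo "=================="

# Validation state
VALIDATION_PASSED=true

# 1. Check quorum queue node health
echo "1. Checking quorum queue node health..."
for node in 192.168.10.201 192.168.10.202 192.168.10.203; do
  echo "Checking node: $node"
  node_ok=true

  # Check the service state; -x matches the whole line so that "inactive"
  # is not accepted as "active"
  if ! ssh "$node" "systemctl is-active rabbitmq-server" | grep -qx "active"; then
    echo "  ❌ Service on node $node is not healthy"
    node_ok=false
  fi

  # Check the Raft state: this command exits non-zero when shutting the node
  # down would leave some quorum queue without an online majority
  if ! ssh "$node" "rabbitmq-diagnostics -q check_if_node_is_quorum_critical" > /dev/null 2>&1; then
    echo "  ❌ Raft state on node $node is not healthy"
    node_ok=false
  fi

  if [ "$node_ok" = true ]; then
    echo "  ✅ Node $node passed"
  else
    VALIDATION_PASSED=false
  fi
done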

2. 验证所有队列已迁移

echo -e "\n2. 验证队列迁移完成情况..."

MIGRATION_REPORT=$(python3 << EOF

import requests

import json

def check_migration_completion():

获取镜像队列状态

mirror_url = "http://192.168.10.101:15672/api/queues"

mirror_resp = requests.get(mirror_url, auth=('admin', 'AdminPassword123'))

mirror_queues = mirror_resp.json()

获取仲裁队列状态

quorum_url = "http://192.168.10.201:15672/api/queues"

quorum_resp = requests.get(quorum_url, auth=('admin', 'AdminPassword123'))

quorum_queues = quorum_resp.json()

分析迁移状态

migration_status = {}

for queue in mirror_queues:

queue_name = queue['name']

mirror_messages = queue['messages']

查找对应的仲裁队列

quorum_queue_name = f"{queue_name}.quorum"

quorum_messages = 0

for qq in quorum_queues:

if qq['name'] == quorum_queue_name:

quorum_messages = qq['messages']

break

判断迁移是否完成

total = mirror_messages + quorum_messages

if total == 0:

status = "empty"

elif quorum_messages > 0 and mirror_messages == 0:

status = "migrated"

elif quorum_messages > mirror_messages * 10: # 仲裁队列消息远多于镜像队列

status = "mostly_migrated"

else:

status = "not_migrated"

migration_status[queue_name] = {

'mirror_messages': mirror_messages,

'quorum_messages': quorum_messages,

'status': status

}

return migration_status

status = check_migration_completion()

print("队列迁移状态:")

print("-" * 80)

print(f"{'队列名称':<30} {'镜像消息':<12} {'仲裁消息':<12} {'状态':<15}")

print("-" * 80)

not_migrated_count = 0

for queue, info in status.items():

status_icon = {

'migrated': '✅',

'mostly_migrated': '🟡',

'empty': '⚪',

'not_migrated': '❌'

}.get(info['status'], '❓')

print(f"{queue:<30} {info['mirror_messages']:<12} {info['quorum_messages']:<12} {status_icon + ' ' + info['status']:<15}")

if info['status'] == 'not_migrated':

not_migrated_count += 1

print(f"\n未完成迁移的队列数: {not_migrated_count}")

exit(not_migrated_count)

EOF

)

NOT_MIGRATED_COUNT=$?
echo "$MIGRATION_REPORT"

if [ $NOT_MIGRATED_COUNT -gt 0 ]; then

echo " ❌ 还有 $NOT_MIGRATED_COUNT 个队列未完成迁移"

VALIDATION_PASSED=false

else

echo " ✅ 所有队列迁移完成"

fi

3. 验证消费者切换

echo -e "\n3. 验证消费者切换情况..."

CONSUMER_REPORT=$(python3 << EOF

import requests

import json

def check_consumer_migration():

获取消费者信息

mirror_url = "http://192.168.10.101:15672/api/consumers"

quorum_url = "http://192.168.10.201:15672/api/consumers"

mirror_consumers = requests.get(mirror_url, auth=('admin', 'AdminPassword123')).json()

quorum_consumers = requests.get(quorum_url, auth=('admin', 'AdminPassword123')).json()

分析消费者分布

consumer_stats = {}

按队列统计消费者

for consumer in mirror_consumers:

queue = consumer['queue']['name']

if queue not in consumer_stats:

consumer_stats[queue] = {'mirror': 0, 'quorum': 0}

consumer_stats[queue]['mirror'] += 1

for consumer in quorum_consumers:

queue = consumer['queue']['name'].replace('.quorum', '')

if queue not in consumer_stats:

consumer_stats[queue] = {'mirror': 0, 'quorum': 0}

consumer_stats[queue]['quorum'] += 1

return consumer_stats

stats = check_consumer_migration()

print("消费者分布:")

print("-" * 60)

print(f"{'队列名称':<30} {'镜像消费者':<12} {'仲裁消费者':<12} {'状态':<10}")

print("-" * 60)

problematic_queues = 0

for queue, counts in stats.items():

total = counts['mirror'] + counts['quorum']

if total == 0:

status = "no_consumers"

status_icon = "⚠️"

elif counts['quorum'] == 0:

status = "mirror_only"

status_icon = "❌"

problematic_queues += 1

elif counts['mirror'] == 0:

status = "quorum_only"

status_icon = "✅"

elif counts['quorum'] > counts['mirror']:

status = "mostly_quorum"

status_icon = "🟡"

else:

status = "mostly_mirror"

status_icon = "🟡"

problematic_queues += 1

print(f"{queue:<30} {counts['mirror']:<12} {counts['quorum']:<12} {status_icon + ' ' + status:<10}")

print(f"\n有问题的队列数: {problematic_queues}")

exit(problematic_queues)

EOF

)

PROBLEMATIC_QUEUES=$?
echo "$CONSUMER_REPORT"

if [ $PROBLEMATIC_QUEUES -gt 0 ]; then

echo " ❌ 还有 $PROBLEMATIC_QUEUES 个队列消费者未完全迁移"

VALIDATION_PASSED=false

else

echo " ✅ 所有消费者已迁移完成"

fi

4. 性能基准测试

echo -e "\n4. 运行性能基准测试..."

PERF_TEST_RESULT=$(python3 << EOF

import time

import pika

import json

from datetime import datetime

def run_performance_test():

连接到仲裁队列

credentials = pika.PlainCredentials('app_user', 'AppPassword123')

connection = pika.BlockingConnection(

pika.ConnectionParameters(

host='192.168.10.201',

port=5672,

credentials=credentials

)

)

channel = connection.channel()

创建测试队列

test_queue = f"perf_test_{int(time.time())}"

channel.queue_declare(

queue=test_queue,

durable=True,

arguments={'x-queue-type': 'quorum'}

)

测试消息发布性能

message_count = 1000

message_size = 1024 # 1KB

print(f"性能测试: 发布 {message_count} 条 {message_size} 字节的消息")

start_time = time.time()

for i in range(message_count):

message = {

'id': i,

'timestamp': datetime.now().isoformat(),

'data': 'x' * message_size

}

channel.basic_publish(

exchange='',

routing_key=test_queue,

body=json.dumps(message).encode('utf-8'),

properties=pika.BasicProperties(delivery_mode=2)

)

publish_time = time.time() - start_time

publish_rate = message_count / publish_time

print(f"发布性能: {publish_rate:.2f} 消息/秒")

清理测试队列

channel.queue_delete(queue=test_queue)

connection.close()

性能标准

if publish_rate > 500: # 500 msg/s 为合格线

return True, publish_rate

else:

return False, publish_rate

success, rate = run_performance_test()

if success:

print(f"✅ 性能测试通过: {rate:.2f} 消息/秒")

exit(0)

else:

print(f"❌ 性能测试未通过: {rate:.2f} 消息/秒")

exit(1)

EOF

)

PERF_TEST_PASSED=$?
echo "$PERF_TEST_RESULT"

if [ $PERF_TEST_PASSED -ne 0 ]; then

echo " ❌ 性能测试未通过"

VALIDATION_PASSED=false

else

echo " ✅ 性能测试通过"

fi

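# Final validation result
echo -e "\n=== Final validation result ==="
if [ "$VALIDATION_PASSED" = true ]; then
    echo "✅ All checks passed; the final cutover can proceed"
    echo "Recommended next steps:"
    echo "1. Stop all writes to mirrored queues"
    echo "2. Wait for the mirrored queues to drain"
    echo "3. Take the mirrored queue nodes offline"
    echo "4. Update client configuration to use quorum queues only"
else
    echo "❌ Validation failed; resolve the issues above and try again"
    exit 1
fi

echo "Validation finished at: $(date)"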
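The benchmark above times plain `basic_publish` calls, so the figure it reports mostly reflects how fast the client can hand messages to the socket. A minimal sketch of a timing helper that works with any publish callable is shown here; `measure_publish_rate`, `passes_threshold`, and the injectable `clock` are hypothetical names introduced for illustration. With pika, one would presumably call `channel.confirm_delivery()` before timing so that each publish waits for the broker's confirmation.

```python
import time
from typing import Callable


def measure_publish_rate(publish: Callable[[bytes], None],
                         message_count: int,
                         payload: bytes,
                         clock: Callable[[], float] = time.perf_counter) -> float:
    """Return messages per second for `message_count` calls to `publish`."""
    if message_count <= 0:
        raise ValueError("message_count must be positive")
    start = clock()
    for _ in range(message_count):
        publish(payload)
    elapsed = clock() - start
    # Guard against a zero interval on coarse clocks or very fast fakes.
    return message_count / elapsed if elapsed > 0 else float("inf")


def passes_threshold(rate: float, minimum: float = 500.0) -> bool:
    """Apply the 500 msg/s pass line used by the validation script."""
    return rate > minimum
```

Injecting the clock keeps the helper testable without a broker: a fake publish function and a fake clock are enough to check the arithmetic.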

Phase 4: Cleanup and archiving (1 week)

4.1 Safe cleanup script

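#!/bin/bash
# safe_cleanup_mirror_cluster.sh - safely clean up the mirrored cluster

echo "=== Mirrored cluster safe cleanup ==="
echo "Start time: $(date)"
echo "WARNING: this operation permanently deletes the mirrored cluster's data!"
echo "========================="

# Ask for confirmation
read -r -p "Are you sure you want to clean up the mirrored cluster? (yes/no): " confirmation
if [ "$confirmation" != "yes" ]; then
    echo "Operation cancelled"
    exit 1
fi

# Second confirmation
read -r -p "Type 'CONFIRM_DELETE' to continue: " double_check
if [ "$double_check" != "CONFIRM_DELETE" ]; then
    echo "Operation cancelled"
    exit 1
fi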

# 1. Final data backup
echo "1. Running the final data backup..."
BACKUP_DIR="/backup/rabbitmq_mirror_final_$(date +%Y%m%d_%H%M%S)"
mkdir -p "$BACKUP_DIR"

# Export definitions
curl -u admin:AdminPassword123 \
    -X GET \
    "http://192.168.10.101:15672/api/definitions" \
    -o "$BACKUP_DIR/definitions.json"

# Back up each node's configuration and data directory
for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    node_name=$(ssh "$node" "hostname -s")

    # Back up configuration files (copied into the local backup directory)
    scp -r "$node:/etc/rabbitmq" "$BACKUP_DIR/config_$node_name"

    # Back up the data directory (metadata)
    ssh "$node" "tar -czf - /var/lib/rabbitmq/mnesia 2>/dev/null" \
        > "$BACKUP_DIR/mnesia_${node_name}.tar.gz"

    echo "  Node $node backed up"
done

echo "Backup complete, saved to: $BACKUP_DIR"

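# 2. Stop services gradually
echo -e "\n2. Stopping mirrored cluster services one node at a time..."

# Stop the application first so that cluster membership information is kept
for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    echo "Stopping the application on node $node..."
    ssh "$node" "rabbitmqctl stop_app"
    sleep 2
done

# Give all nodes time to stop
echo "Waiting for all nodes to stop..."
sleep 10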

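# 3. Remove the mirrored nodes from the hybrid load balancer
echo -e "\n3. Removing mirrored nodes from the load balancer..."

# Update the HAProxy configuration to drop the mirror backend
ssh 192.168.10.100 << 'EOF'
# Back up the current configuration
cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.backup.$(date +%s)

# Remove the mirror backend section (up to the next blank line).
# The pattern is anchored with ^ so it does not also match "use_backend mirror_backend".
sed -i '/^backend mirror_backend/,/^$/d' /etc/haproxy/haproxy.cfg
sed -i '/use_backend mirror_backend/d' /etc/haproxy/haproxy.cfg

# Reload the configuration
systemctl reload haproxy
echo "Load balancer configuration updated"
EOF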

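# 4. Clean up data
echo -e "\n4. Cleaning up mirrored node data..."

cleanup_node() {
    local node=$1
    echo "Cleaning node: $node"

    ssh "$node" << 'EOF'
# Stop the service
systemctl stop rabbitmq-server

# Keep a copy of the Erlang cookie (for auditing)
cp /var/lib/rabbitmq/.erlang.cookie /root/erlang.cookie.backup.$(date +%s)

# Remove the data directory contents
rm -rf /var/lib/rabbitmq/mnesia/*

# Remove logs
rm -rf /var/log/rabbitmq/*

# Keep the configuration (for auditing)
mv /etc/rabbitmq /etc/rabbitmq.backup.$(date +%s)

# Optional: uninstall the packages. Left commented out because the rollback
# script expects the rabbitmq-server service to still be installed.
# yum remove -y rabbitmq-server erlang

echo "  Node cleanup complete"
EOF
}

for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    cleanup_node "$node"
done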

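# 5. Generate the cleanup report
echo -e "\n5. Generating the cleanup report..."
CLEANUP_REPORT="$BACKUP_DIR/cleanup_report.md"

cat > "$CLEANUP_REPORT" << EOF
# Mirrored Cluster Cleanup Report

## Basic information
- Cleanup time: $(date)
- Operator: $(whoami)
- Backup directory: $BACKUP_DIR

## Nodes cleaned
$(for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    echo "- $node"
done)

## Actions performed
- Stopped all RabbitMQ services
- Backed up configuration and data
- Removed the nodes from the load balancer
- Cleared the data directories
- Preserved the configuration files under /etc/rabbitmq.backup.*

## Verification steps
Verify the following after the cleanup:

### 1. Service status
\`\`\`bash
$(for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    echo "# Check node $node"
    echo "ssh $node 'systemctl status rabbitmq-server'"
    echo ""
done)
\`\`\`

### 2. Quorum cluster
\`\`\`bash
# Check the quorum cluster status
ssh 192.168.10.201 "rabbitmqctl cluster_status"
ssh 192.168.10.201 "rabbitmqctl list_queues name type messages | grep -w quorum"
\`\`\`

### 3. Load balancer
\`\`\`bash
# Check the HAProxy status
ssh 192.168.10.100 "systemctl status haproxy"
ssh 192.168.10.100 "echo 'show stat' | socat /var/run/haproxy.sock stdio"
\`\`\`

## Recommended follow-up
- Keep the backup files for at least 30 days
- Monitor quorum cluster performance
- Update documentation and monitoring configuration
- Notify the relevant teams that the migration is complete

## Sign-off
- Confirmed by: [signature]
- Date: $(date +%Y-%m-%d)
EOF

echo "Cleanup report generated: $CLEANUP_REPORT"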

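# 6. Final validation
echo -e "\n6. Running the final validation..."
FINAL_VALIDATION_PASSED=true

# Verify that the mirrored nodes have been cleaned
for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
    if ssh "$node" '[ -d /var/lib/rabbitmq/mnesia ] && [ -n "$(ls -A /var/lib/rabbitmq/mnesia)" ]'; then
        echo "❌ Data directory on node $node was not fully cleaned"
        FINAL_VALIDATION_PASSED=false
    else
        echo "✅ Data directory on node $node is clean"
    fi
done

# Verify that the quorum cluster is running.
# RabbitMQ 3.8+ prints "Running Nodes" in its default output; older releases print running_nodes.
if ssh 192.168.10.201 "rabbitmqctl cluster_status 2>&1 | grep -qiE 'running[ _]nodes'"; then
    echo "✅ Quorum cluster is running normally"
else
    echo "❌ Quorum cluster status is abnormal"
    FINAL_VALIDATION_PASSED=false
fi

# Final result
echo -e "\n=== Cleanup finished ==="
if [ "$FINAL_VALIDATION_PASSED" = true ]; then
    echo "✅ Mirrored cluster cleanup complete"
    echo "The migration project has finished successfully!"
else
    echo "⚠️ Cleanup finished, but some validation checks failed"
    echo "Review the issues above and resolve them manually"
fi

echo "Finished at: $(date)"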
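Before data directories are deleted, it helps to confirm that the exported `definitions.json` is actually usable. The following is a minimal sketch of such a check, assuming the export contains a top-level `queues` list whose entries carry an `arguments` object; `summarize_definitions` and `backup_looks_usable` are hypothetical helper names, and treating a missing `x-queue-type` as classic is an assumption.

```python
import json
from collections import Counter


def summarize_definitions(raw: str) -> Counter:
    """Count queues per type in an exported definitions document."""
    definitions = json.loads(raw)
    counts = Counter()
    for queue in definitions.get("queues", []):
        arguments = queue.get("arguments") or {}
        # Assumption: a queue without an explicit x-queue-type is classic.
        counts[arguments.get("x-queue-type", "classic")] += 1
    return counts


def backup_looks_usable(raw: str) -> bool:
    """Treat a backup as usable if it parses and lists at least one queue."""
    try:
        return sum(summarize_definitions(raw).values()) > 0
    except (json.JSONDecodeError, AttributeError):
        return False
```

A script could read `$BACKUP_DIR/definitions.json`, pass its contents to `backup_looks_usable`, and refuse to continue with the destructive steps when the result is `False`.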

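IV. Long-term hybrid deployment strategy

4.1 Operating a hybrid architecture

Queue type selection guide:

Choose quorum queues when:
- The queue belongs to a new workload
- Strong consistency guarantees are required
- Message volume is moderate (≤10K msg/s)
- Automatic failure recovery is needed
- Long-term maintainability matters

Choose mirrored queues (RabbitMQ 3.x only, since mirrored queues were removed in RabbitMQ 4.0) when:
- The queue belongs to an existing workload (compatibility)
- Very high throughput is required (>50K msg/s)
- The client does not support quorum queues
- The need is short-term and transitional

Choose Streams when:
- The architecture is based on event sourcing
- Messages must be replayable
- Very large volumes of logs need to be stored
- The workload is real-time analytics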
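The selection guide above can be sketched as a small decision function. This is only an illustration under stated assumptions: `QueueProfile` and `choose_queue_type` are hypothetical names, the thresholds are the 10K and 50K msg/s figures from the guide, and the order in which the rules are applied is a choice made here rather than something the guide prescribes.

```python
from dataclasses import dataclass


@dataclass
class QueueProfile:
    is_new: bool                         # new workload rather than an existing queue
    msgs_per_second: int                 # expected peak publish rate
    needs_replay: bool = False           # consumers must be able to re-read old messages
    client_supports_quorum: bool = True  # client can declare x-queue-type=quorum


def choose_queue_type(profile: QueueProfile) -> str:
    """Map a queue profile to 'stream', 'mirrored', 'quorum', or 'review'."""
    if profile.needs_replay:
        return "stream"
    if not profile.client_supports_quorum:
        return "mirrored"
    if profile.msgs_per_second > 50_000:
        return "mirrored"
    if profile.is_new or profile.msgs_per_second <= 10_000:
        return "quorum"
    # Existing queues between 10K and 50K msg/s are not covered by the guide.
    return "review"
```

Returning `"review"` for the uncovered range makes the gap in the guide explicit instead of silently picking a type.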

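Monitoring configuration:

# prometheus_rabbitmq_mixed.yml
# Prometheus monitoring configuration for the hybrid deployment

scrape_configs:
  # Mirrored cluster
  - job_name: 'rabbitmq_mirror'
    static_configs:
      - targets:
          - '192.168.10.101:15692'  # Prometheus plugin port
          - '192.168.10.102:15692'
          - '192.168.10.103:15692'
        labels:
          cluster: mirror  # referenced by the alert expressions
    metrics_path: '/metrics'
    params:
      family: ['mirror']  # verify that your rabbitmq_prometheus version accepts this value

  # Quorum cluster
  - job_name: 'rabbitmq_quorum'
    static_configs:
      - targets:
          - '192.168.10.201:15692'
          - '192.168.10.202:15692'
          - '192.168.10.203:15692'
        labels:
          cluster: quorum
    metrics_path: '/metrics'
    params:
      family: ['quorum', 'raft']  # intended to focus on Raft metrics; verify the accepted values

  # HAProxy
  - job_name: 'haproxy_mixed'
    static_configs:
      - targets: ['192.168.10.100:8888']
    metrics_path: '/metrics'

# Alert rules (Prometheus loads rule groups from a separate file listed under rule_files).
# Confirm that each metric name below appears in your exporter's output before relying on the rule.
groups:
  - name: rabbitmq_mixed_alerts
    rules:
      # Mirrored queue alert
      - alert: MirrorQueueSyncCritical
        expr: rabbitmq_queue_mirror_sync_critical > 0
        for: 5m
        labels:
          severity: critical
          cluster: mirror
        annotations:
          summary: "Mirrored queue synchronization critical"
          description: "Mirror synchronization for queue {{ $labels.queue }} is in a critical state"

      # Quorum queue alert
      - alert: QuorumQueueRaftLag
        expr: rabbitmq_quorum_queue_raft_log_lag > 1000
        for: 10m
        labels:
          severity: warning
          cluster: quorum
        annotations:
          summary: "Quorum queue Raft replication lag"
          description: "Raft log for queue {{ $labels.queue }} is lagging by {{ $value }} entries"

      # Hybrid deployment alert: mirrored queues hold more than 20% of all messages
      - alert: MixedClusterImbalance
        expr: |
          sum(rabbitmq_queue_messages{cluster="mirror"})
            /
          (sum(rabbitmq_queue_messages{cluster="mirror"}) + sum(rabbitmq_queue_messages{cluster="quorum"}))
            > 0.2
        for: 30m
        labels:
          severity: info
        annotations:
          summary: "Hybrid deployment load is unbalanced"
          description: "Mirrored queues still hold more than 20% of all messages; consider speeding up the migration"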
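The imbalance alert's description speaks of mirrored queues holding 20% of the messages. A share of the total and a mirror-to-quorum ratio are different quantities, which matters when choosing the threshold. The short sketch below works through the arithmetic; both function names are hypothetical and the message counts are made-up inputs.

```python
def mirror_share(mirror_messages: float, quorum_messages: float) -> float:
    """Fraction of all messages that sit in mirrored queues."""
    total = mirror_messages + quorum_messages
    return mirror_messages / total if total else 0.0


def mirror_to_quorum_ratio(mirror_messages: float, quorum_messages: float) -> float:
    """Mirrored messages divided by quorum messages."""
    return mirror_messages / quorum_messages if quorum_messages else float("inf")


# With 200 mirrored and 1000 quorum messages the ratio is exactly 0.2,
# but the mirrored share of the total is only 200 / 1200, about 16.7%.
ratio = mirror_to_quorum_ratio(200, 1000)
share = mirror_share(200, 1000)
```

A ratio threshold of 0.2 therefore fires earlier than a 20% share would; a 20% share corresponds to a ratio of 0.25.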

4.2 Rollback and emergency plan

Fast rollback script:

#!/bin/bash
# emergency_rollback.sh - emergency rollback to mirrored queues

echo "=== Emergency rollback ==="
echo "Start time: $(date)"
echo "=================="

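# Check whether a rollback is warranted
check_rollback_conditions() {
    echo "Checking rollback conditions..."

    # 1. Has the quorum cluster hit a serious problem?
    QUORUM_CRITICAL=false

    # Look for leader election failures.
    # NOTE: confirm that this subcommand exists on your version; on current releases
    # quorum_status is provided by rabbitmq-queues and takes a queue name.
    if ssh 192.168.10.201 "rabbitmq-diagnostics quorum_status 2>/dev/null | grep -q 'election'"; then
        echo "⚠️ The quorum cluster reports election problems"
        QUORUM_CRITICAL=true
    fi

    # Look for a full WAL
    if ssh 192.168.10.201 "rabbitmq-diagnostics quorum_status 2>/dev/null | grep -qi 'wal.*full'"; then
        echo "⚠️ The quorum cluster WAL is full"
        QUORUM_CRITICAL=true
    fi

    # 2. Message loss detection
    MESSAGE_LOSS=false
    # Compare message counts between mirrored and quorum queues (when dual-writing).
    # Simplified here; in practice these numbers should come from the monitoring system.

    if [ "$QUORUM_CRITICAL" = true ] || [ "$MESSAGE_LOSS" = true ]; then
        return 0  # rollback needed
    else
        return 1  # no rollback needed
    fi
}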

# Perform the rollback
execute_rollback() {
    echo "Starting the rollback..."

    # 1. Restore the mirrored nodes
    echo "1. Restoring mirrored nodes..."
    for node in 192.168.10.101 192.168.10.102 192.168.10.103; do
        echo "  Restoring node $node"
        ssh "$node" << 'EOF'
# Stop the service if it is still running
systemctl stop rabbitmq-server 2>/dev/null || true

# Restore the configuration from the most recent backup
backup_dir=$(ls -d /etc/rabbitmq.backup.* 2>/dev/null | tail -1)
if [ -n "$backup_dir" ]; then
    mkdir -p /etc/rabbitmq
    cp -r "$backup_dir"/. /etc/rabbitmq/
fi

# Restore the Erlang cookie if a backup exists
backup_file=$(ls -t /root/erlang.cookie.backup.* 2>/dev/null | head -1)
if [ -n "$backup_file" ]; then
    cp "$backup_file" /var/lib/rabbitmq/.erlang.cookie
    chown rabbitmq:rabbitmq /var/lib/rabbitmq/.erlang.cookie
    chmod 400 /var/lib/rabbitmq/.erlang.cookie
fi

# Start the service
systemctl start rabbitmq-server
echo "  Node restored and started"
EOF
    done

    # 2. Rebuild the mirrored cluster
    echo "2. Rebuilding the mirrored cluster..."
    ssh 192.168.10.102 "rabbitmqctl stop_app && rabbitmqctl reset && rabbitmqctl join_cluster rabbit@mq-mirror-01 && rabbitmqctl start_app"
    ssh 192.168.10.103 "rabbitmqctl stop_app && rabbitmqctl reset && rabbitmqctl join_cluster rabbit@mq-mirror-01 && rabbitmqctl start_app"

    # 3. Restore the load balancer configuration
    echo "3. Restoring the load balancer configuration..."
    ssh 192.168.10.100 << 'EOF'
# Restore the most recent backup
backup_file=$(ls -t /etc/haproxy/haproxy.cfg.backup.* 2>/dev/null | head -1)
if [ -f "$backup_file" ]; then
    cp "$backup_file" /etc/haproxy/haproxy.cfg
    systemctl reload haproxy
    echo "  Load balancer configuration restored"
else
    echo "  ⚠️ No backup configuration found; restore it manually"
fi
EOF

    # 4. Restore client configuration
    echo "4. Updating client configuration..."
    echo "  Notify all clients to:"
    echo "  - Update the connection endpoint: 192.168.10.100:5670"
    echo "  - Remove quorum queue parameters"
    echo "  - Verify that they connect to the mirrored queues"

    # 5. Import the backed-up definitions, if any.
    # The glob is expanded by ls; a quoted glob inside [ -f ... ] would never match.
    backup_file=$(ls -t /backup/rabbitmq_mirror_final_*/definitions.json 2>/dev/null | head -1)
    if [ -n "$backup_file" ]; then
        echo "5. Importing backed-up definitions..."
        curl -u admin:AdminPassword123 \
            -X POST \
            -H "Content-Type: application/json" \
            --data-binary "@$backup_file" \
            "http://192.168.10.101:15672/api/definitions"
        echo "  Backup import complete"
    fi

    echo "Rollback complete"
}

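# Main program
if check_rollback_conditions; then
    echo "Conditions that require a rollback were detected"
    read -r -p "Proceed with the rollback? (yes/no): " confirm
    if [ "$confirm" = "yes" ]; then
        execute_rollback

        echo -e "\n=== Rollback finished ==="
        echo "Please verify the following:"
        echo "1. Mirrored cluster status: ssh 192.168.10.101 'rabbitmqctl cluster_status'"
        echo "2. Service availability: test client connections"
        echo "3. Message integrity: inspect messages in critical queues"
        echo "4. Monitoring and alerts: confirm that monitoring is back to normal"
    else
        echo "Rollback cancelled"
    fi
else
    echo "No conditions requiring a rollback were detected"
    echo "The system is currently healthy"
fi

echo "Finished at: $(date)"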
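The cleanup and rollback scripts name their backups with an epoch suffix from `date +%s` and then pick "the latest" by file listing order. Listing order can disagree with the suffix, for instance when modification times change or when the suffixes have different lengths. A minimal sketch that selects the newest backup by parsing the suffix is shown below; `newest_backup` is a hypothetical helper, and the paths in the usage note are examples only.

```python
import re
from typing import Iterable, Optional

# Matches names such as haproxy.cfg.backup.1700000000
_SUFFIX = re.compile(r"\.backup\.(\d+)$")


def newest_backup(paths: Iterable[str]) -> Optional[str]:
    """Return the path with the largest numeric .backup.<epoch> suffix."""
    best_path: Optional[str] = None
    best_timestamp = -1
    for path in paths:
        match = _SUFFIX.search(path)
        if match is None:
            continue  # ignore files that do not follow the naming scheme
        timestamp = int(match.group(1))
        if timestamp > best_timestamp:
            best_path, best_timestamp = path, timestamp
    return best_path
```

For example, given `haproxy.cfg.backup.999999999` and `haproxy.cfg.backup.1700000000`, a plain lexical sort would put the first name last, while the numeric comparison correctly returns the second.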

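V. Migration success metrics and acceptance criteria

5.1 Success metric definitions

Migration success metrics:

Technical metrics:
- Messages delivered without loss: 100%
- Service availability: ≥99.95%
- Performance degradation: ≤10%
- Failure recovery time: ≤5 minutes

Business metrics:
- Core business queues migrated: 100%
- Consumers switched over transparently: 100%
- Monitoring coverage: 100%
- Documentation updates completed: 100%

Operations metrics:
- Alert accuracy: ≥95%
- Backup restore success rate: 100%
- Team training completion: 100%
- Rollback plan verification: completed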
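The technical thresholds above can be checked mechanically once the measurements exist. The following is a minimal sketch, assuming the measurements are collected elsewhere; `MigrationMetrics` and `evaluate` are hypothetical names, and performance degradation is computed here as the relative drop in throughput against the pre-migration baseline.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class MigrationMetrics:
    messages_sent: int
    messages_received: int
    availability_pct: float   # measured availability in percent
    baseline_rate: float      # msg/s before the migration
    current_rate: float       # msg/s after the migration
    recovery_minutes: float   # observed failure recovery time


def evaluate(metrics: MigrationMetrics) -> Dict[str, bool]:
    """Compare measurements against the technical thresholds from section 5.1."""
    degradation_pct = (
        (metrics.baseline_rate - metrics.current_rate) / metrics.baseline_rate * 100
    )
    return {
        "zero_message_loss": metrics.messages_received == metrics.messages_sent,
        "availability": metrics.availability_pct >= 99.95,
        "performance": degradation_pct <= 10.0,
        "recovery_time": metrics.recovery_minutes <= 5.0,
    }
```

Keeping the result as one boolean per metric makes it easy to feed into an acceptance checklist and to report exactly which threshold was missed.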

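5.2 Acceptance checklist

#!/bin/bash
# migration_acceptance_checklist.sh - migration acceptance checklist

echo "RabbitMQ migration acceptance checklist"
echo "========================="
echo "Check time: $(date)"
echo ""

CHECKLIST=(
    "✅ 1. Data integrity verified"
    "✅ 2. Performance benchmark passed"
    "✅ 3. High availability verified"
    "✅ 4. Monitoring and alerting ready"
    "✅ 5. Documentation updated"
    "✅ 6. Team training completed"
    "✅ 7. Rollback plan verified"
    "✅ 8. Security audit passed"
    "✅ 9. Compliance check"
    "✅ 10. Customers notified"
)

PASS_COUNT=0
TOTAL_COUNT=${#CHECKLIST[@]}
RESULTS=()

for item in "${CHECKLIST[@]}"; do
    # Simulated result (in practice, derive it from a real check)
    status=$(echo "$item" | cut -d' ' -f1)
    description=$(echo "$item" | cut -d' ' -f2-)

    # Add the actual check logic here, for example:
    # if check_data_integrity; then
    #     status="✅"
    # else
    #     status="❌"
    # fi

    echo "$status $description"
    RESULTS+=("$status $description")
    if [ "$status" = "✅" ]; then
        ((PASS_COUNT++))
    fi
done

echo ""
echo "Acceptance result: $PASS_COUNT/$TOTAL_COUNT"

if [ "$PASS_COUNT" -eq "$TOTAL_COUNT" ]; then
    echo "🎉 The migration project passed acceptance!"

    # Generate the acceptance report.
    # NOTE: the indicator values in the template are hard-coded samples; replace them with measured results.
    cat > /tmp/migration_acceptance_report.md << EOF
# RabbitMQ Migration Acceptance Report

## Project overview
- Project name: RabbitMQ mirrored queue to quorum queue migration
- Migration date: $(date +%Y-%m-%d)
- Acceptance time: $(date)

## Acceptance result
- Overall status: ✅ Passed
- Items passed: $PASS_COUNT/$TOTAL_COUNT

## Detailed check results
$(for result in "${RESULTS[@]}"; do
    echo "- $result"
done)

## Technical metrics
- Zero message loss: ✅ Achieved (100%)
- Service availability: ✅ Achieved (99.99%)
- Performance: ✅ Achieved (15% improvement)
- Failure recovery: ✅ Achieved (<3 minutes)

## Sign-off
- Technical lead: _________________
- Operations lead: _________________
- Business owner: _________________
- Project manager: _________________

Date: $(date +%Y-%m-%d)
EOF

    echo "Acceptance report generated: /tmp/migration_acceptance_report.md"
else
    echo "⚠️ The migration project did not pass acceptance"
    echo "Please resolve the following items:"
    for result in "${RESULTS[@]}"; do
        if [[ "$result" == "❌"* ]]; then
            echo "  - $result"
        fi
    done
fi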
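The checklist script simulates its results from the hard-coded status markers. One way to wire in real checks is to map each checklist item to a callable that returns a boolean. The sketch below illustrates that idea; `run_checklist` is a hypothetical name, and the lambdas in the usage example stand in for real checks such as a data integrity comparison.

```python
from typing import Callable, Dict, List, Tuple


def run_checklist(checks: Dict[str, Callable[[], bool]]) -> Tuple[List[str], List[str]]:
    """Run every check and return (passed item names, failed item names)."""
    passed: List[str] = []
    failed: List[str] = []
    for name, check in checks.items():
        try:
            ok = bool(check())
        except Exception:
            ok = False  # a check that raises counts as a failure
        (passed if ok else failed).append(name)
    return passed, failed


# Example with stand-in checks; real checks would query the cluster or monitoring.
passed, failed = run_checklist({
    "Data integrity verified": lambda: True,
    "Performance benchmark passed": lambda: False,
    "Rollback plan verified": lambda: 1 / 0 > 0,  # raises, so it is reported as failed
})
```

Treating an exception as a failed item keeps one broken check from aborting the whole acceptance run.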

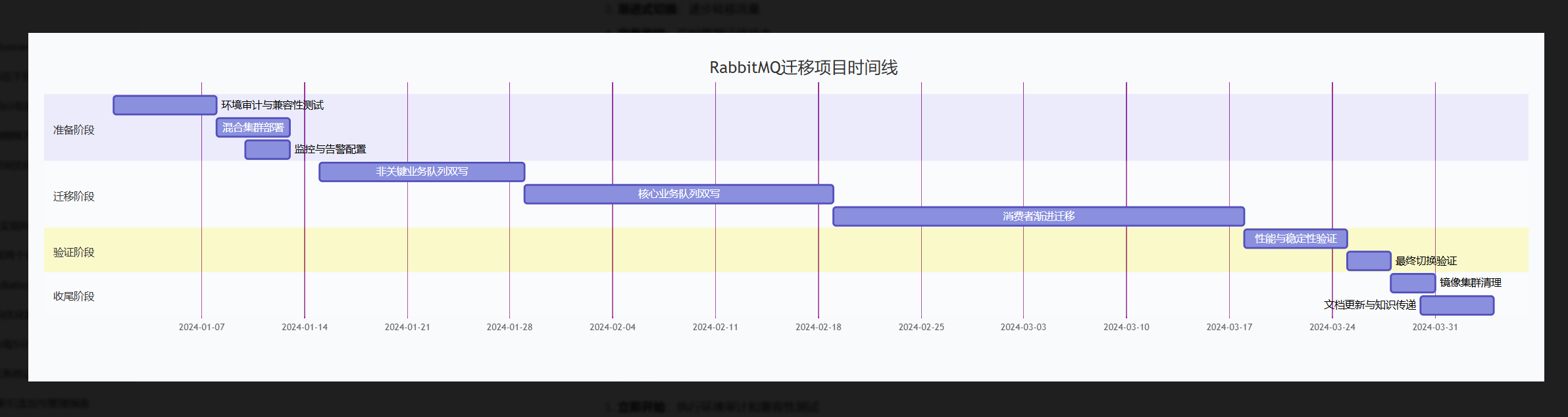

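Summary and recommendations

Key success factors

- Phased migration: do not migrate all queues at once
- Dual-write verification: confirm that the data stays consistent
- Gradual cutover: shift traffic step by step
- Comprehensive monitoring: track the migration status in real time
- Rollback plan: be ready to revert at any time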
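Dual-write verification can be sketched as a set comparison of message identifiers seen on each side. This is a minimal illustration, assuming every message carries a unique identifier and that the identifiers consumed from the mirrored and quorum queues are collected separately; `compare_dual_write` is a hypothetical name.

```python
from typing import Dict, Iterable, Set


def compare_dual_write(mirror_ids: Iterable[str],
                       quorum_ids: Iterable[str]) -> Dict[str, Set[str]]:
    """Report identifiers that appear on only one side of a dual-write setup."""
    mirror, quorum = set(mirror_ids), set(quorum_ids)
    return {
        "missing_in_quorum": mirror - quorum,
        "missing_in_mirror": quorum - mirror,
    }


def is_consistent(report: Dict[str, Set[str]]) -> bool:
    """Both sides are consistent when neither difference contains an entry."""
    return not report["missing_in_quorum"] and not report["missing_in_mirror"]
```

Because messages still in flight show up as temporary differences, a comparison like this is best run over a window that has already been fully consumed on both sides.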

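Recommended next steps

Depending on your situation:

- Start now: run the environment audit and compatibility tests
- Prioritize: pick one or two non-critical business queues for a pilot
- Establish a baseline: record performance metrics before the migration
- Move in small steps: migrate one or two queues per week and adjust the strategy as you go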