部署建议

https://github.com/Wan-Video/Wan2.2/blob/main/README.md

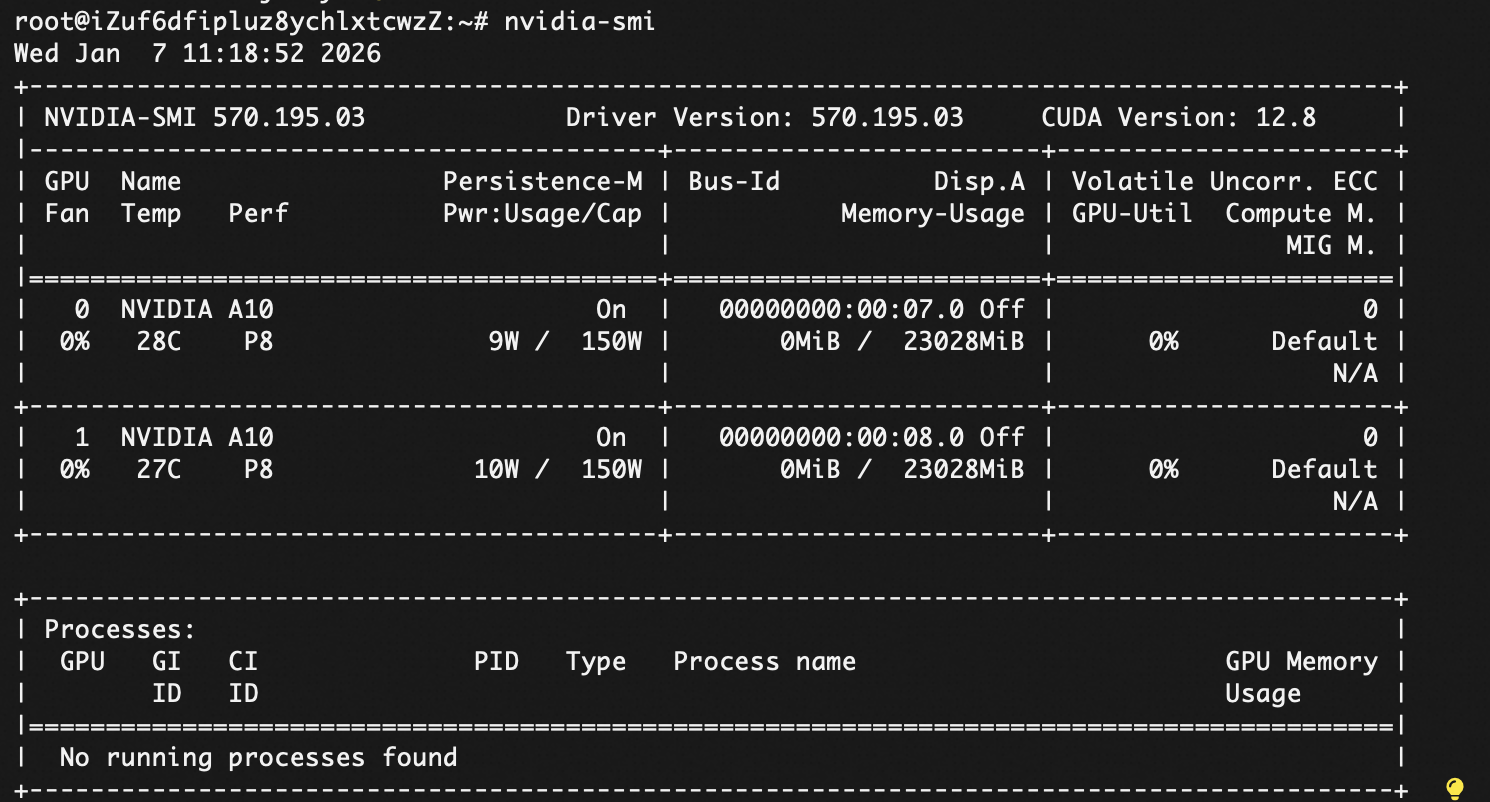

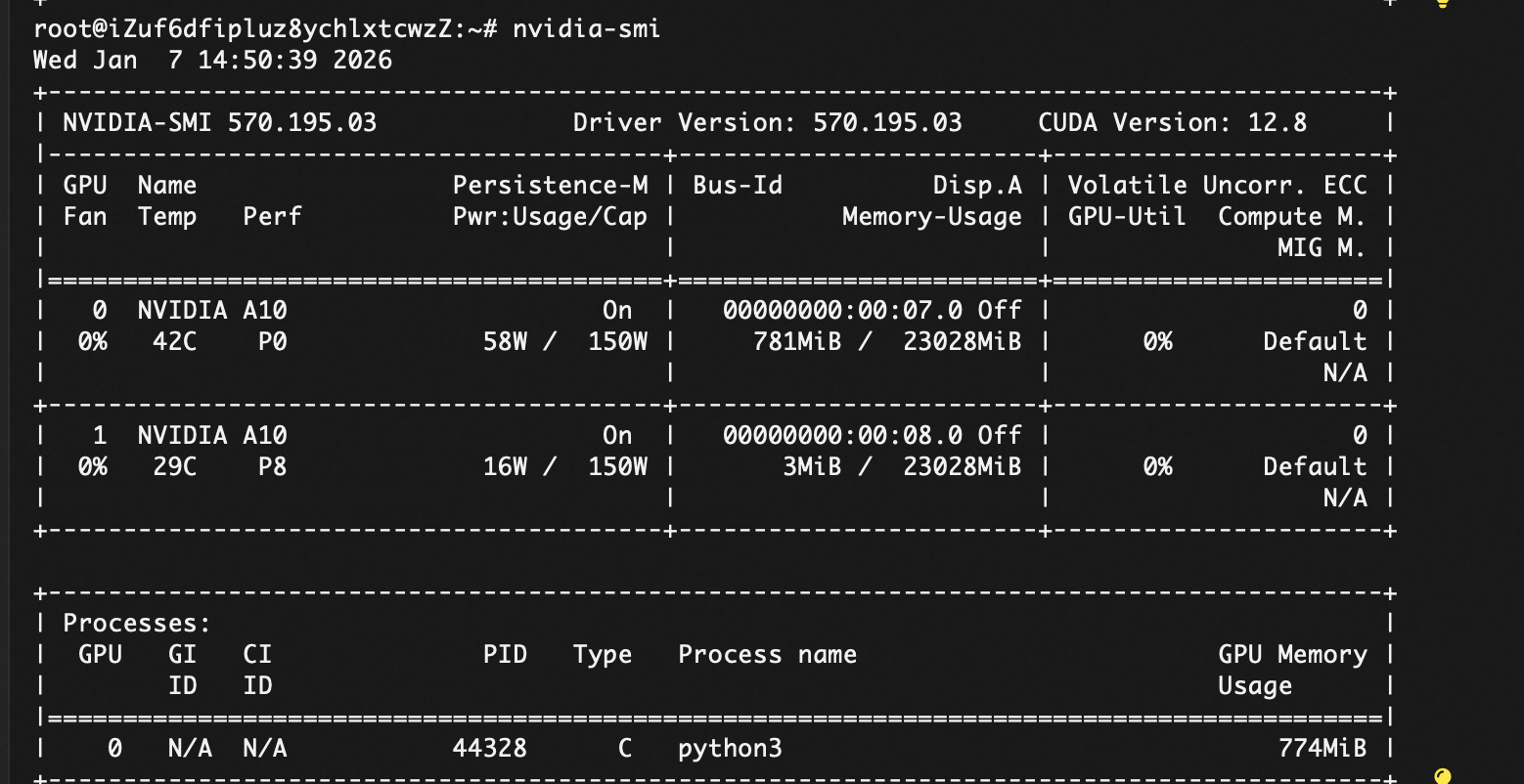

建议最低选用80G显存的GPU部署 。否则会OOM报错,解决也比较麻烦。

服务器带宽越高越好,方便下载模型。

资源开通

选用A100 ecs.gn7e-c16g1.4xlarge, 显存80G。

OS要选择Ubuntu 22.04 ,不建议用ubutun24.04,是否部署的时候会遇到很多包的兼容问题。

开通机器后,系统会执行/root/auto_install/auto_install_v4.0.sh脚本去安装CUDA等。

-----------------------------------------------------------------------------------分隔符------------------

系统是较新的 Ubuntu(24.04 或 23.10),Python 是 3.12,并且你直接在系统环境下运行 pip install,触发了 PEP 668 安全机制。

需要创建虚拟环境:

sudo apt update

sudo apt install -y python3.12-venv

python3 -m venv wan2-env

安装torch和

pip install torch2.4.0 torchvision0.19.0 torchaudio==2.4.0 --index-url https://download.pytorch.org/whl/cu121

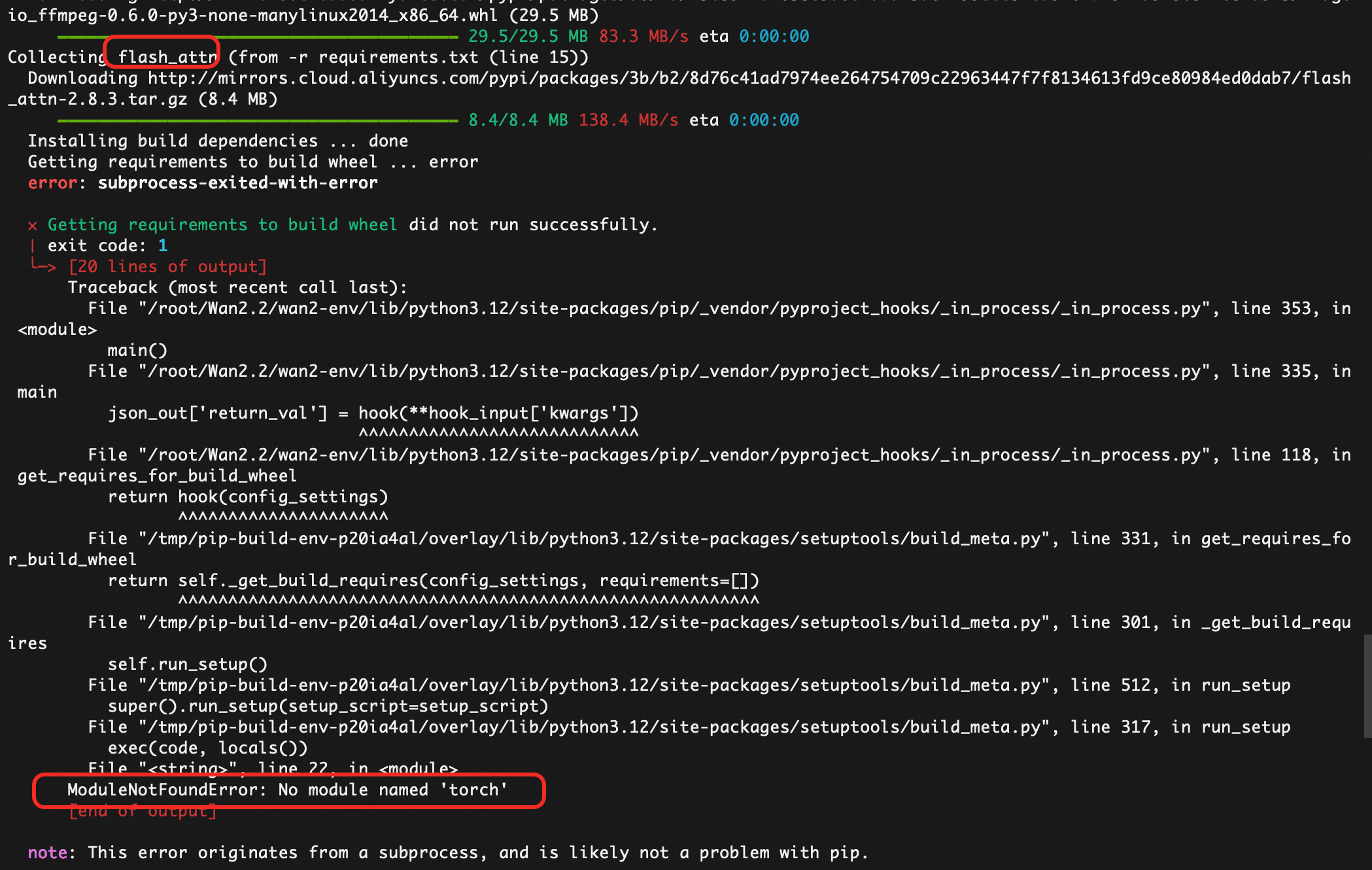

执行pip install -r requirements.txt 前需要安装torch,是否会遇到报错

然后修改requirements.txt,注视flash_attn后执行:

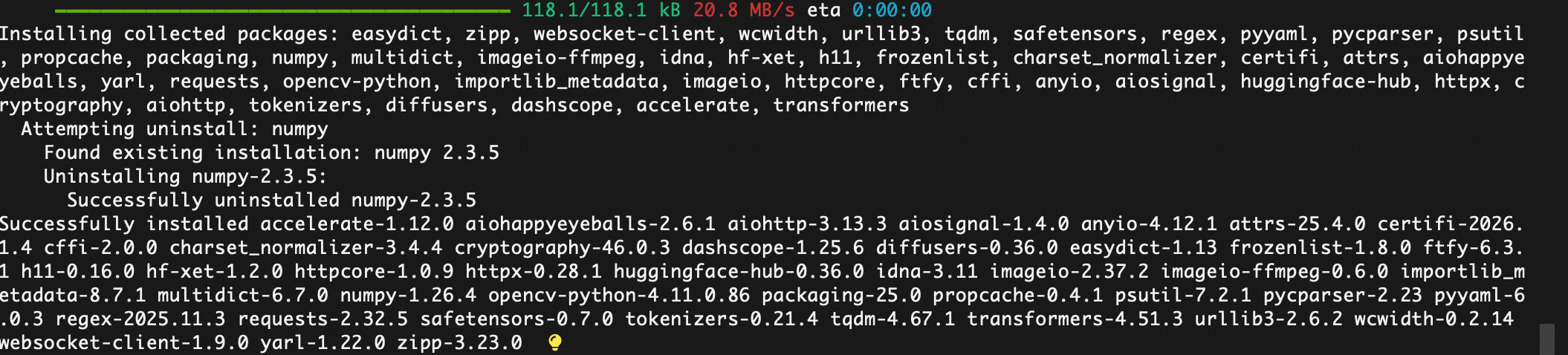

pip install -r requirements.txt

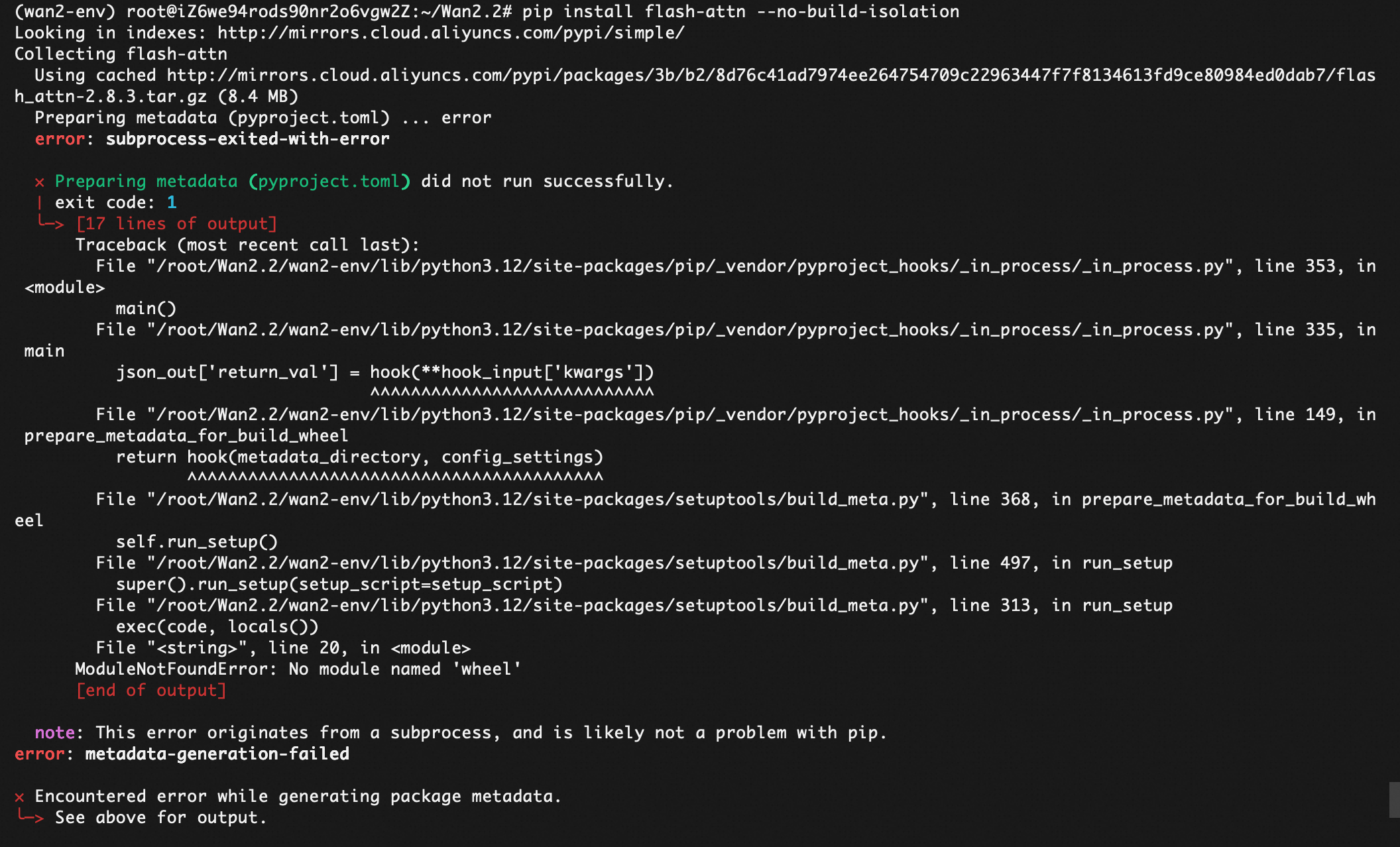

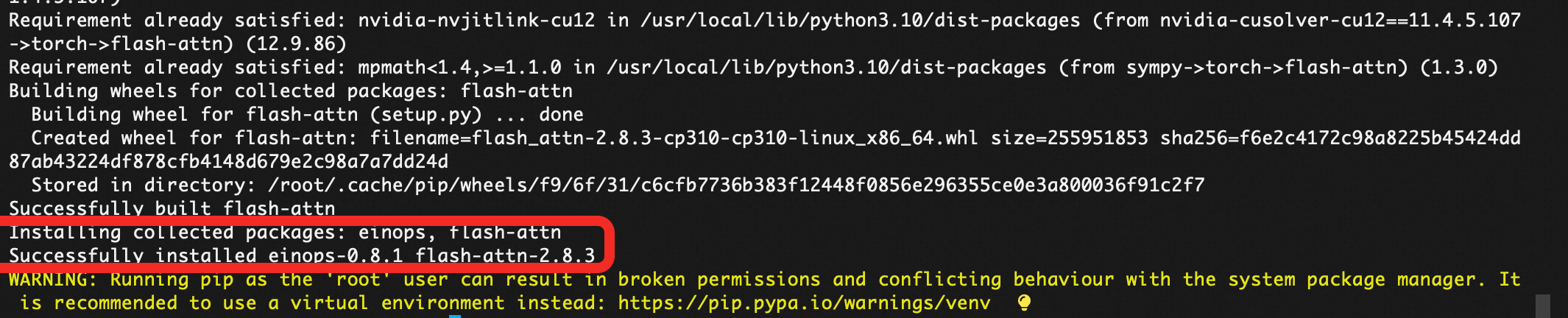

单独安装flash_attn

1. 安装构建依赖(关键!)

pip install psutil ninja packaging -i https://pypi.tuna.tsinghua.edu.cn/simple

2. 安装 flash-attn

pip install flash-attn --no-build-isolation -i https://pypi.tuna.tsinghua.edu.cn/simple

如果不指定路径,阿里云的镜像会摆错:

最后再执行pip install -r requirements.txt

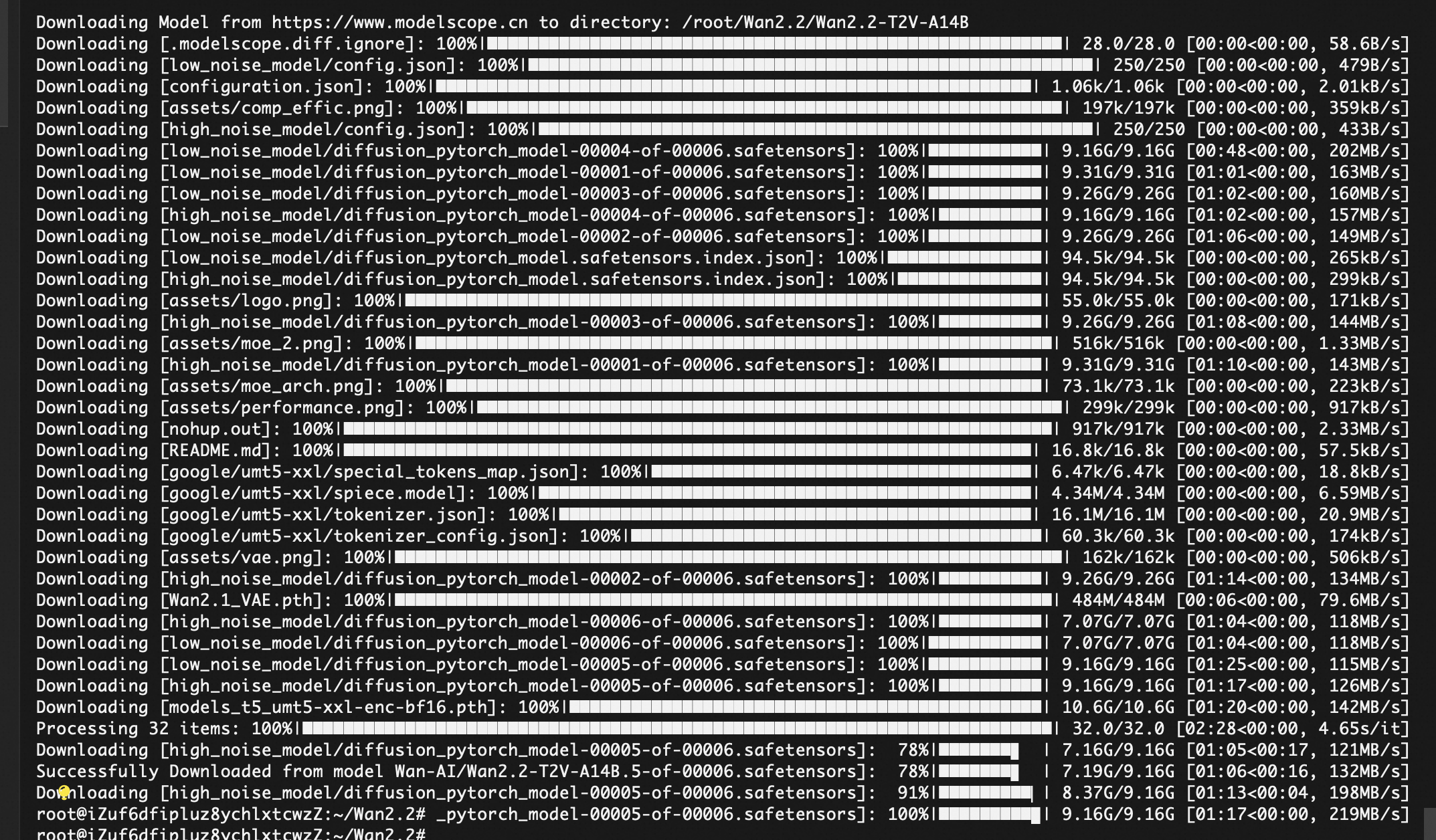

下载模型,需要加载100G+文件,大概需要一小时。

国内机器执行:

pip install modelscope

modelscope download Wan-AI/Wan2.2-T2V-A14B --local_dir ./Wan2.2-T2V-A14B

modelscope download Wan-AI/Wan2.2-T2V-A14B --local_dir ./Wan2.2-T2V-A14B_1

中途觉得速度慢把带宽100M按量带宽该成了200M固定带宽。

也可以从海外huggingface下载。

pip install "huggingface_hub[cli]"

hf download Wan-AI/Wan2.2-T2V-A14B --local-dir ./Wan2.2-T2V-A14B

nohup hf download Wan-AI/Wan2.2-T2V-A14B --local-dir ./Wan2.2-T2V-A14B > wan2_download.log 2>&1 &

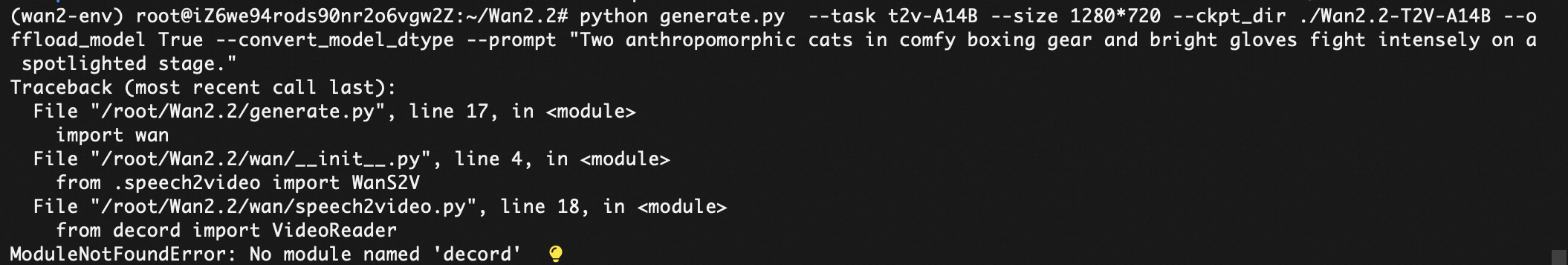

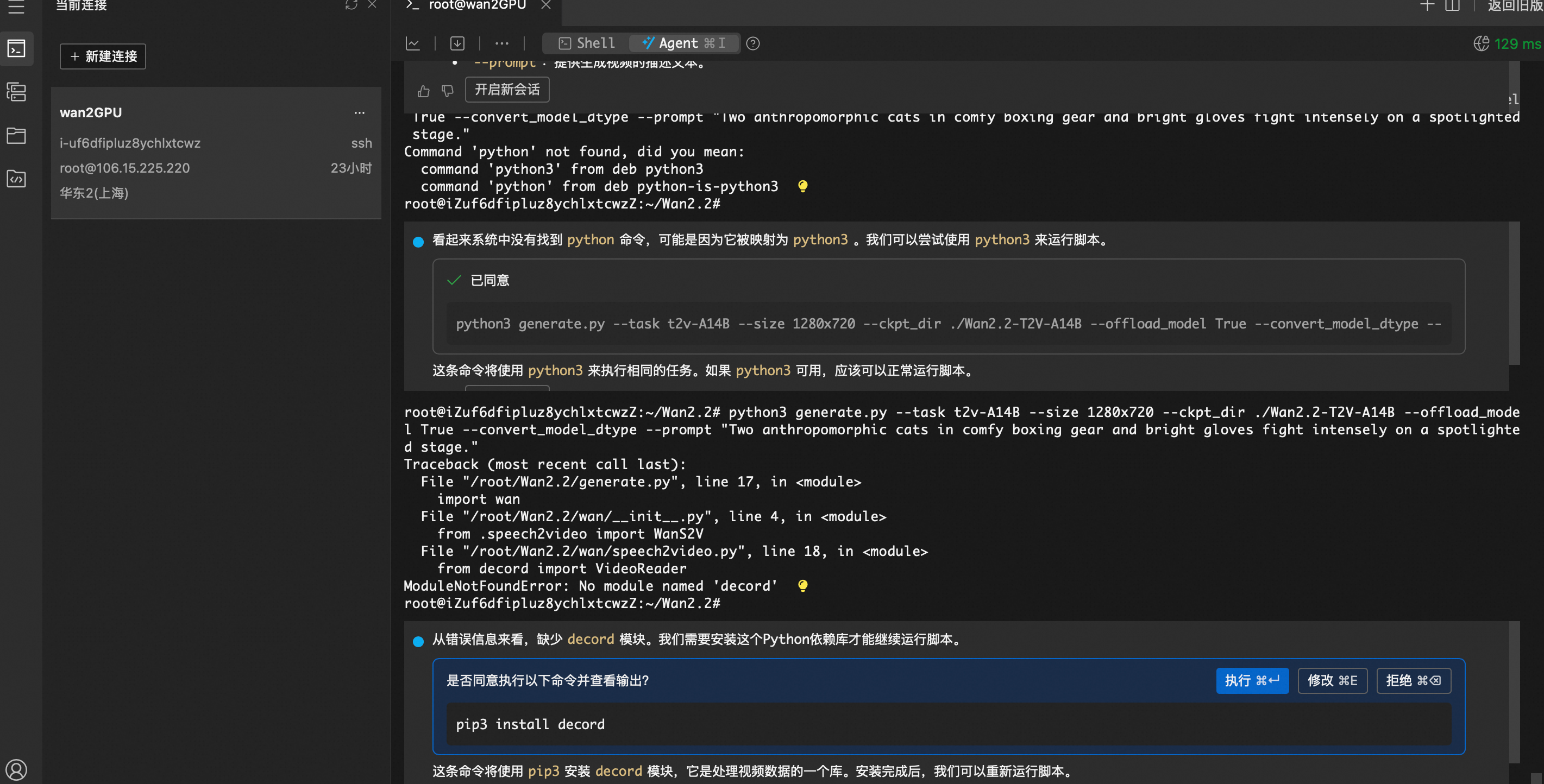

pip install peft decord librosa 后在执行

pip install --no-cache-dir --force-reinstall

"peft==0.18.0"

🔍 真相:transformers.modeling_layers 是 v4.40.0 引入,但在 v4.48+ 被移除或重构!

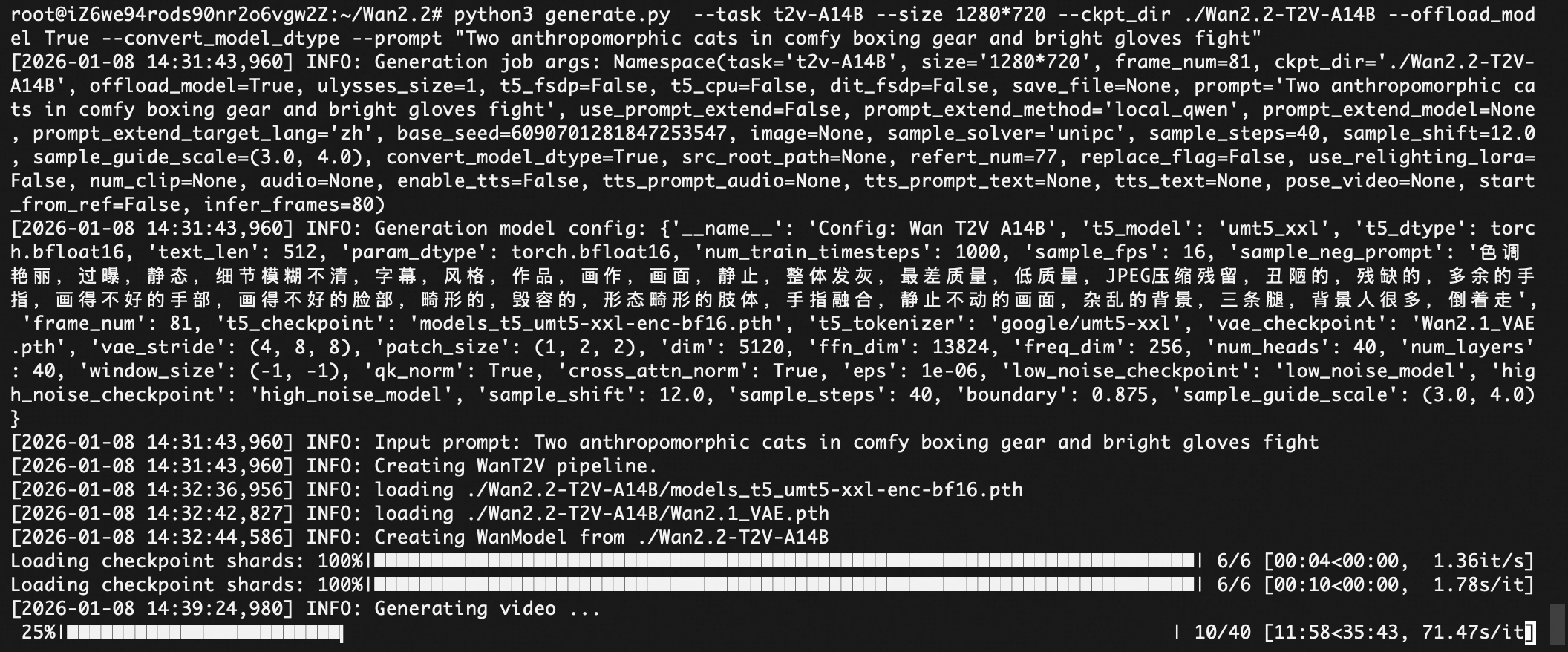

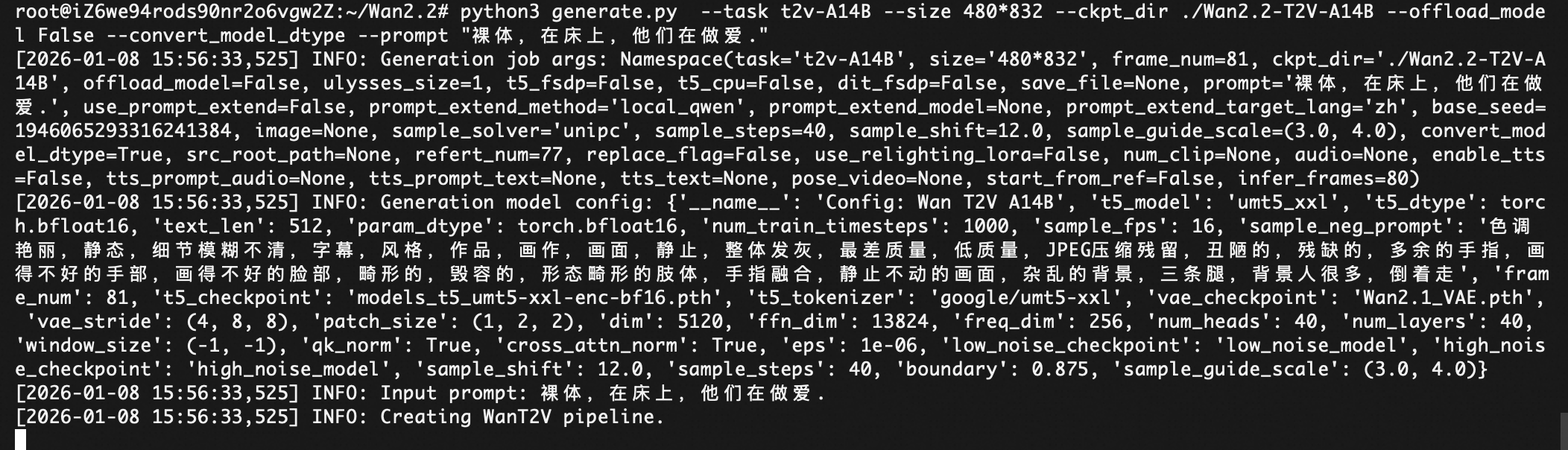

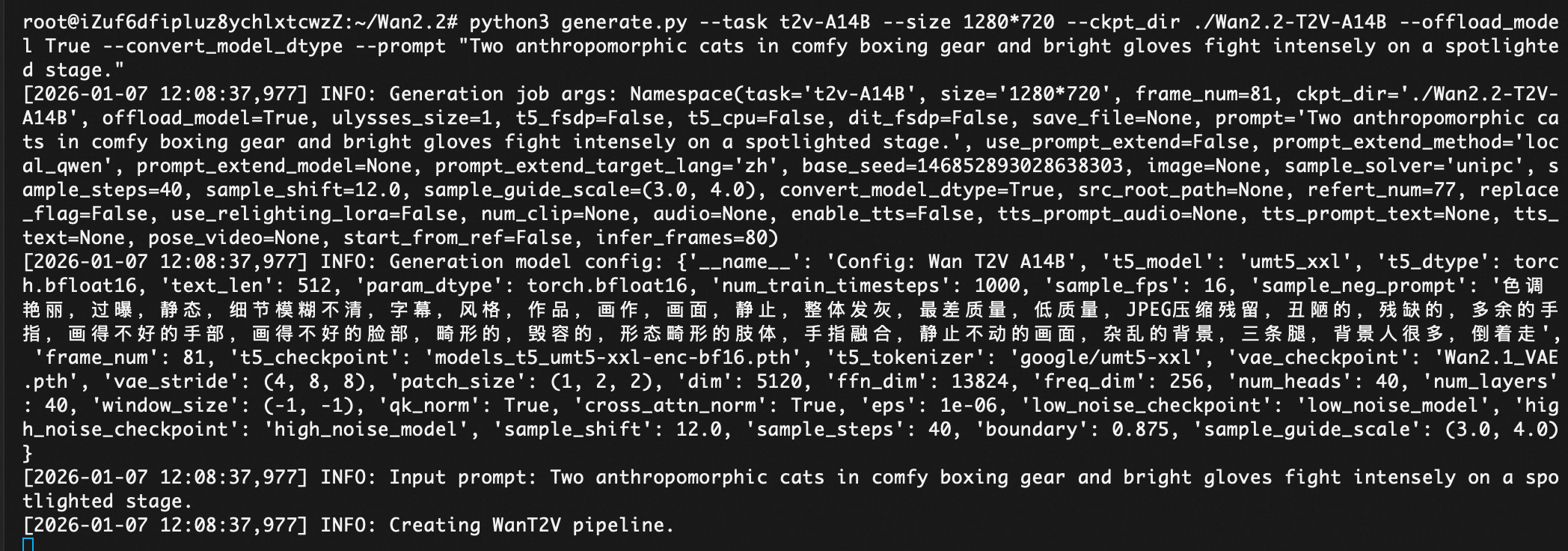

python3 generate.py --task t2v-A14B --size 1280720 --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

下载到本地MAC去播放

en@Ethan-s-Super-Mac ~ % scp root@8.216.13.88:/root/Wan2.2/'t2v-A14B_1280720_1_Two_anthropomorphic_cats_in_comfy_boxing_gear_and__20260108_152755.mp4' ~/Downloads/

更快的命令:

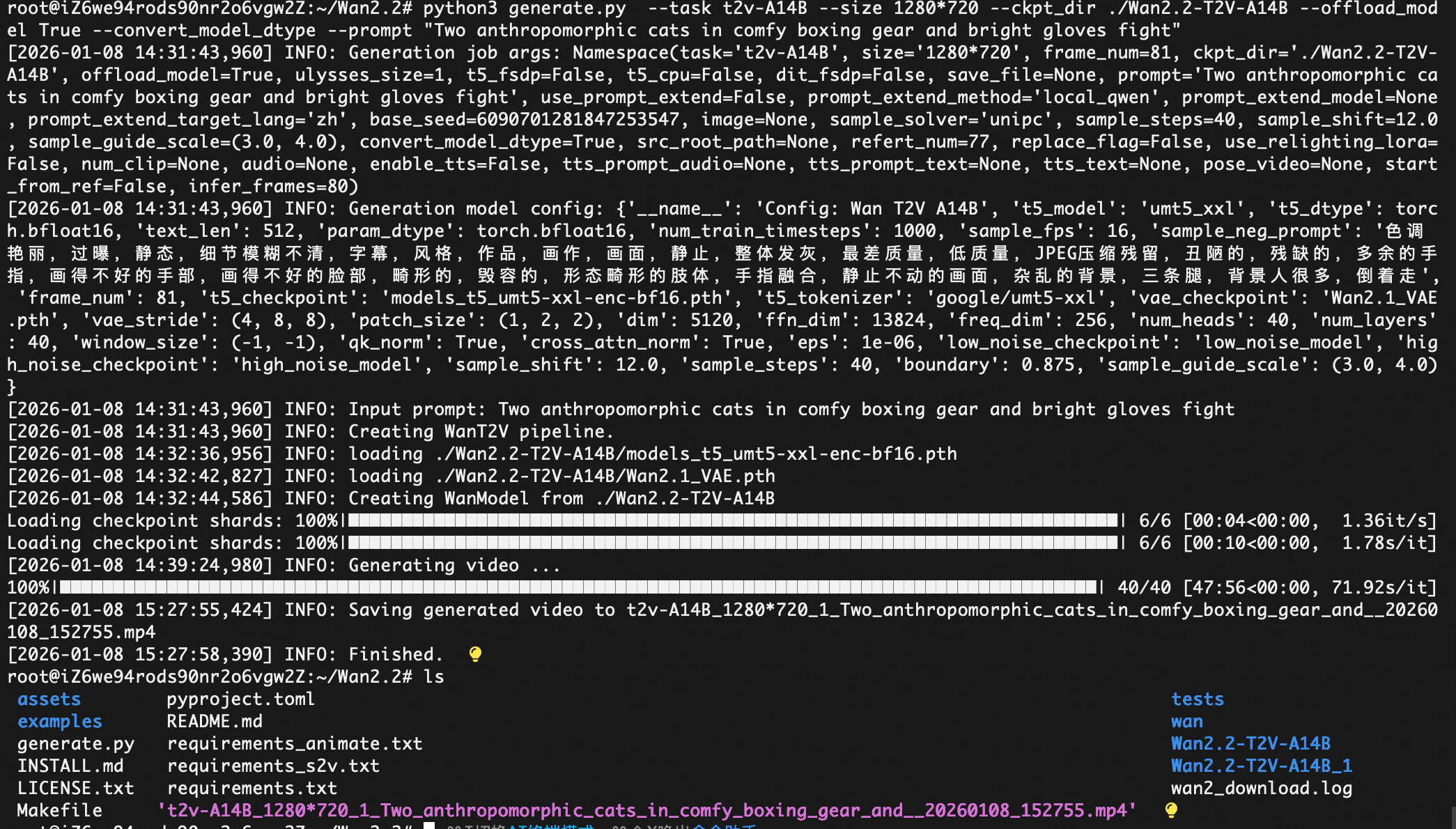

python3 generate.py --task t2v-A14B --size 480*832 --ckpt_dir ./Wan2.2-T2V-A14B --offload_model False --convert_model_dtype --prompt "裸体,在床上,他们在做爱."

测试一下这个开源模型是否控制内容的合规。ps:Wan2.2/wan/configs# vi shared_config.py 里面sample_neg_prompt里面去掉"过曝"。

注意:上述测试是为了测试模型对于有毒prompt的检测能力,请弘扬社会主义价值观,遵纪守法。

选项 作用 性能影响

--offload_model True 每次模型 forward 后,把 DiT/T5 权重从 GPU 卸载到 CPU,释放显存 ⚠️ 频繁 CPU↔GPU 数据传输,I/O 瓶颈严重

--convert_model_dtype 在推理时动态转换模型参数 dtype(如 float32 ↔ bfloat16) ⚠️ 增加额外计算和内存拷贝

检查环境:

root@iZuf6dfipluz8ychlxtcwzZ:~# lsb_release -a

LSB Version: core-11.1.0ubuntu4-noarch:security-11.1.0ubuntu4-noarch

Distributor ID: Ubuntu

Description: Ubuntu 22.04.5 LTS

Release: 22.04

Codename: jammy

git clone https://github.com/Wan-Video/Wan2.2.git

cd Wan2.2

/** Ensure torch >= 2.4.0

If the installation of flash_attn fails, try installing the other packages first and install flash_attn last *** /

pip install -r requirements.txt

后报错

pip install torch2.4.0 torchvision0.19.0 torchaudio2.4.0

--index-url https://pypi.tuna.tsinghua.edu.cn/simple

--extra-index-url https://pypi.tuna.tsinghua.edu.cn/pytorch-wheels/cu121

如果执行下面,会因为墙的问题很慢很慢。

pip install torch2.4.0 torchvision0.19.0 torchaudio2.4.0 --index-url https://download.pytorch.org/whl/cu121

部署成功:

Installing collected packages: mpmath, typing-extensions, sympy, pillow, nvidia-nvtx-cu12, nvidia-nvjitlink-cu12, nvidia-nccl-cu12, nvidia-curand-cu12, nvidia-cufft-cu12, nvidia-cuda-runtime-cu12, nvidia-cuda-nvrtc-cu12, nvidia-cuda-cupti-cu12, nvidia-cublas-cu12, numpy, networkx, fsspec, filelock, triton, nvidia-cusparse-cu12, nvidia-cudnn-cu12, nvidia-cusolver-cu12, torch, torchvision, torchaudio

Successfully installed filelock-3.20.2 fsspec-2025.12.0 mpmath-1.3.0 networkx-3.4.2 numpy-2.2.6 nvidia-cublas-cu12-12.1.3.1 nvidia-cuda-cupti-cu12-12.1.105 nvidia-cuda-nvrtc-cu12-12.1.105 nvidia-cuda-runtime-cu12-12.1.105 nvidia-cudnn-cu12-9.1.0.70 nvidia-cufft-cu12-11.0.2.54 nvidia-curand-cu12-10.3.2.106 nvidia-cusolver-cu12-11.4.5.107 nvidia-cusparse-cu12-12.1.0.106 nvidia-nccl-cu12-2.20.5 nvidia-nvjitlink-cu12-12.9.86 nvidia-nvtx-cu12-12.1.105 pillow-12.1.0 sympy-1.14.0 torch-2.4.0 torchaudio-2.4.0 torchvision-0.19.0 triton-3.0.0 typing-extensions-4.15.0

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv

torch>=2.4.0

torchvision>=0.19.0

torchaudio

opencv-python>=4.9.0.80

diffusers>=0.31.0

transformers>=4.49.0,<=4.51.3

tokenizers>=0.20.3

accelerate>=1.1.1

tqdm

imageio[ffmpeg]

easydict

ftfy

dashscope

imageio-ffmpeg

##备注掉# flash_attn

numpy>=1.23.5,<2

如果在大陆的服务器可以执行:

1. 安装构建依赖(关键!)

pip install ninja packaging setuptools wheel -i https://pypi.tuna.tsinghua.edu.cn/simple

2. 安装 flash-attn

pip install flash-attn --no-build-isolation -i https://pypi.tuna.tsinghua.edu.cn/simple

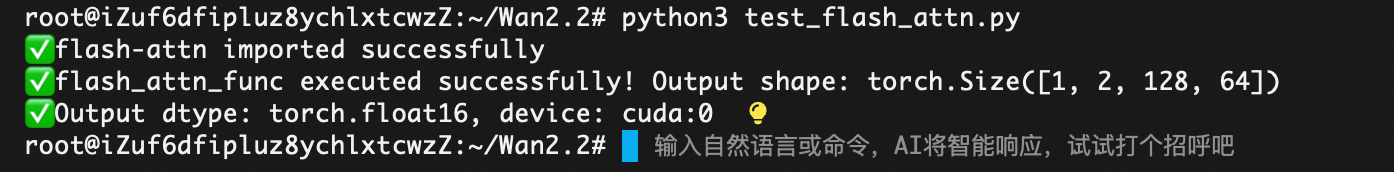

验证flash_attn是否如预期安装:

powershell

```python

# test_flash_attn.py

import torch

from flash_attn import flash_attn_func

# 检查是否能导入

print("✅ flash-attn imported successfully")

# 创建测试张量 (batch=1, heads=2, seqlen=128, dim=64)

B, H, N, D = 1, 2, 128, 64

q = torch.randn(B, H, N, D, dtype=torch.float16, device="cuda")

k = torch.randn(B, H, N, D, dtype=torch.float16, device="cuda")

v = torch.randn(B, H, N, D, dtype=torch.float16, device="cuda")

# 调用 flash attention

try:

out = flash_attn_func(q, k, v, dropout_p=0.0, softmax_scale=None, causal=False)

print(f"✅ flash_attn_func executed successfully! Output shape: {out.shape}")

print(f"✅ Output dtype: {out.dtype}, device: {out.device}")

except Exception as e:

print(f"❌ flash_attn_func failed: {e}")

raise

下载模型文件

pip install modelscope

modelscope download Wan-AI/Wan2.2-T2V-A14B --local_dir ./Wan2.2-T2V-A14B

python generate.py --task t2v-A14B --size 1280*720 --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

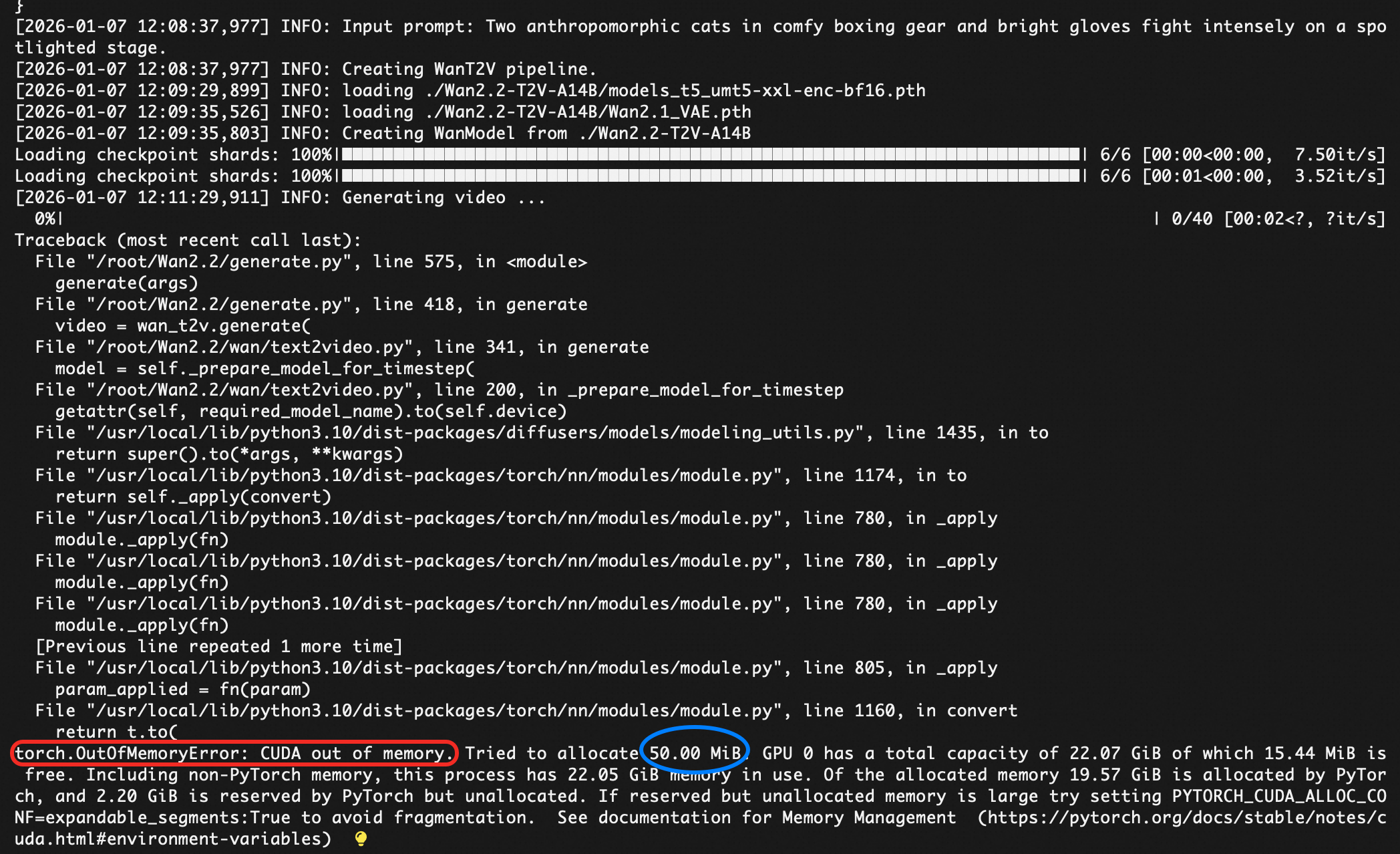

显示显存不够。

python generate.py --task t2v-A14B --size 1280720 --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

改为

python generate.py --task t2v-A14B --size 640360 --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --t5_cpu --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

python generate.py --task t2v-A14B --size "640*360" --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --t5_cpu --prompt "cats boxing"

官网sample语句:

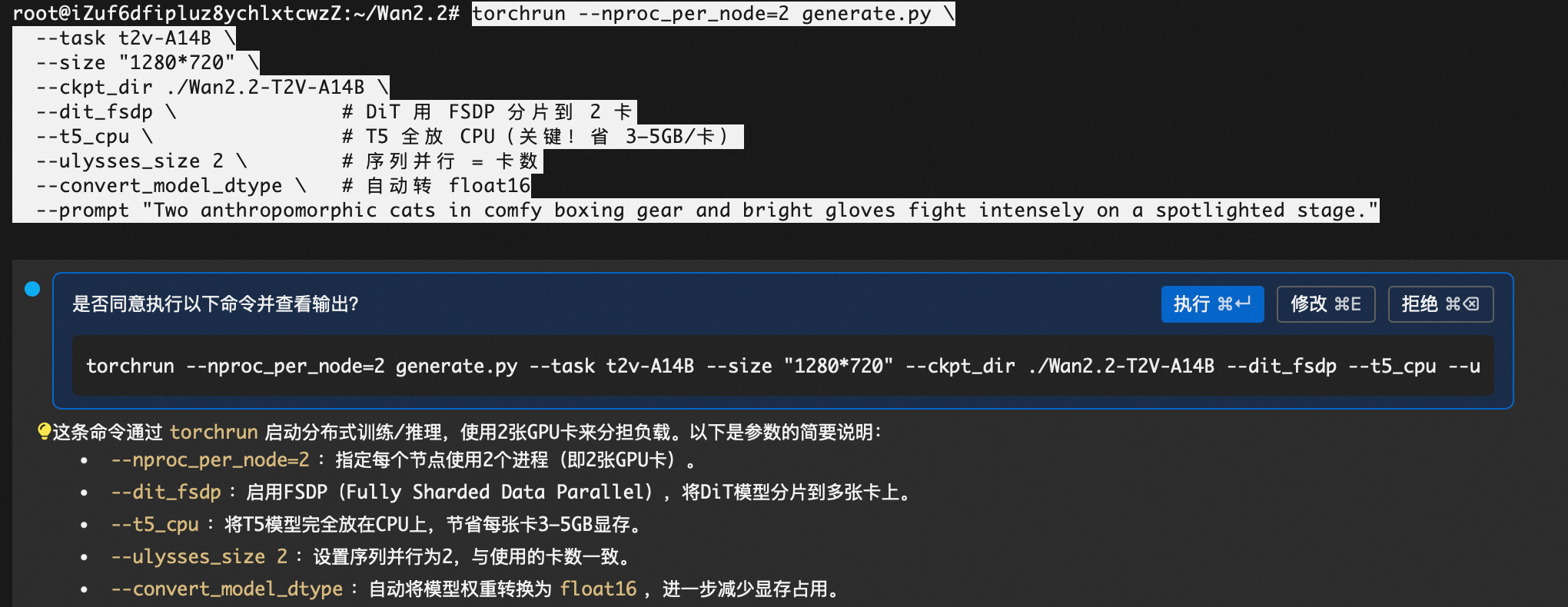

torchrun --nproc_per_node=8 generate.py --task t2v-A14B --size 1280*720 --ckpt_dir ./Wan2.2-T2V-A14B --dit_fsdp --t5_fsdp --ulysses_size 8 --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

因为是2卡,修改如下:

torchrun --nproc_per_node=2 generate.py

--task t2v-A14B

--size "480*832"

--ckpt_dir ./Wan2.2-T2V-A14B

--dit_fsdp --t5_cpu --ulysses_size 2 --convert_model_dtype

--prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

generate.py用法:

比如--size需要在里面选项之一。不能YY。

powershell

usage: generate.py [-h] [--task {t2v-A14B,i2v-A14B,ti2v-5B,animate-14B,s2v-14B}]

[--size {720*1280,1280*720,480*832,832*480,704*1280,1280*704,1024*704,704*1024}] [--frame_num FRAME_NUM]

[--ckpt_dir CKPT_DIR] [--offload_model OFFLOAD_MODEL] [--ulysses_size ULYSSES_SIZE] [--t5_fsdp] [--t5_cpu]

[--dit_fsdp] [--save_file SAVE_FILE] [--prompt PROMPT] [--use_prompt_extend]

[--prompt_extend_method {dashscope,local_qwen}] [--prompt_extend_model PROMPT_EXTEND_MODEL]

[--prompt_extend_target_lang {zh,en}] [--base_seed BASE_SEED] [--image IMAGE] [--sample_solver {unipc,dpm++}]

[--sample_steps SAMPLE_STEPS] [--sample_shift SAMPLE_SHIFT] [--sample_guide_scale SAMPLE_GUIDE_SCALE]

[--convert_model_dtype] [--src_root_path SRC_ROOT_PATH] [--refert_num REFERT_NUM] [--replace_flag]

[--use_relighting_lora] [--num_clip NUM_CLIP] [--audio AUDIO] [--enable_tts]

[--tts_prompt_audio TTS_PROMPT_AUDIO] [--tts_prompt_text TTS_PROMPT_TEXT] [--tts_text TTS_TEXT]

[--pose_video POSE_VIDEO] [--start_from_ref] [--infer_frames INFER_FRAMES]torchrun --nproc_per_node=2 generate.py --task t2v-A14B --size "480*832" --ckpt_dir ./Wan2.2-T2V-A14B --dit_fsdp --t5_cpu --ulysses_size 2 --frame_num 17 --convert_model_dtype --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

设置帧的参数在Wan2.2/wan/configs/shared_config.py 里面做了默认配置。

torchrun --nproc_per_node=2 generate.py --task t2v-A14B --size "120*208" --ckpt_dir ./Wan2.2-T2V-A14B --dit_fsdp --t5_cpu --ulysses_size 2 --frame_num 17 --convert_model_dtype --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage."

Wan2.2/wan/configs/init.py 里面修改SUPPORTED_SIZES ,把120*208选项加进去。

python3 generate.py

--task t2v-A14B

--size "128*208"

--frame_num 17

--ckpt_dir ./Wan2.2-T2V-A14B

--offload_model True \ # 关键!

--t5_cpu

--convert_model_dtype

--prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage"

python3 generate.py --task t2v-A14B --size "120*208" --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --frame_num 17 --t5_cpu --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage"

加上--t5_cpu

T5-XXL 模型(~10B 参数)在 float16 下占 ~20GB 显存,即使 offload_model,T5 编码阶段仍会全量加载到 GPU!

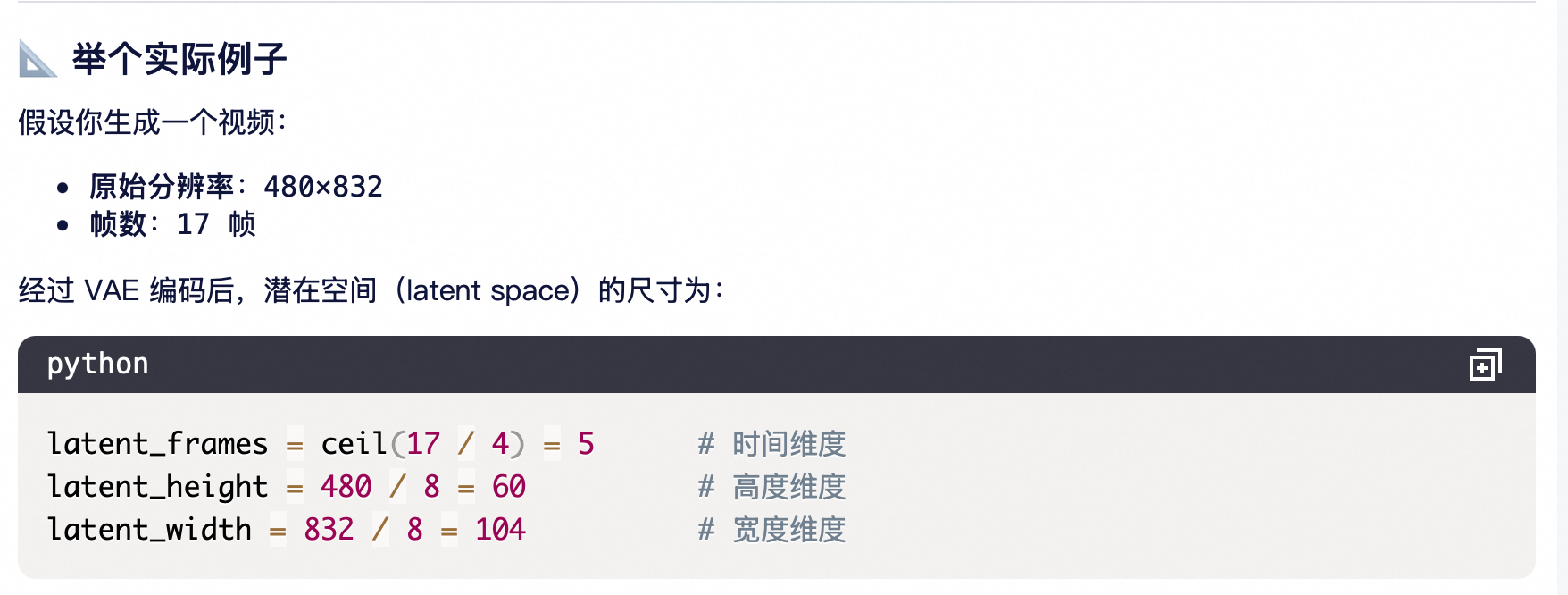

t2v_A14B.vae_stride = (4, 8, 8) 是 视频变分自编码器(Video VAE) 的关键参数,它定义了 输入视频如何被压缩成潜在表示(latent representation)。

为什么需要 VAE?

降低计算复杂度

原始视频:17×480×832 ≈ 680 万像素/帧 × 17帧

Latent 空间:5×60×104 ≈ 3.1 万 tokens

计算量减少 >200 倍!

学习语义压缩

VAE 不是简单降采样,而是学习 保留语义信息的紧凑表示(类似 JPEG 但可逆)

适配 DiT 架构

DiT(Diffusion Transformer)设计用于处理 token 序列,而非原始像素

root@iZuf6dfipluz8ychlxtcwzZ:~/Wan2.2# python3 generate.py --task t2v-A14B --size "120*208" --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --frame_num 17 --t5_cpu --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage"

2026-01-07 14:46:14,021\] INFO: Generation job args: Namespace(task='t2v-A14B', size='120* 208', frame_num=17, ckpt_dir='./Wan2.2-T2V-A14B', offload_model=True, ulysses_size=1, t5_fsdp=False, t5_cpu=True, dit_fsdp=False, save_file=None, prompt='Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage', use_prompt_extend=False, prompt_extend_method='local_qwen', prompt_extend_model=None, prompt_extend_target_lang='zh', base_seed=8391047073834277617, image=None, sample_solver='unipc', sample_steps=40, sample_shift=12.0, sample_guide_scale=(3.0, 4.0), convert_model_dtype=True, src_root_path=None, refert_num=77, replace_flag=False, use_relighting_lora=False, num_clip=None, audio=None, enable_tts=False, tts_prompt_audio=None, tts_prompt_text=None, tts_text=None, pose_video=None, start_from_ref=False, infer_frames=80) \[2026-01-07 14:46:14,021\] INFO: Generation model config: {'**name** ': 'Config: Wan T2V A14B', 't5_model': 'umt5_xxl', 't5_dtype': torch.float16, 'text_len': 512, 'param_dtype': torch.float16, 'num_train_timesteps': 1000, 'sample_fps': 16, 'sample_neg_prompt': '色调艳丽,过曝,静态,细节模糊不清,字幕,风格,作品,画作,画面,静止,整体发灰,最差质量,低质量,JPEG压缩残留,丑陋的,残缺的,多余的手指,画得不好的手部,画得不好的脸部,畸形的,毁容的,形态畸形的肢体,手指融合,静止不动的画面,杂乱的背景,三条腿,背景人很多,倒着走', 'frame_num': 17, 't5_checkpoint': 'models_t5_umt5-xxl-enc-bf16.pth', 't5_tokenizer': 'google/umt5-xxl', 'vae_checkpoint': 'Wan2.1_VAE.pth', 'vae_stride': (4, 8, 8), 'patch_size': (1, 2, 2), 'dim': 5120, 'ffn_dim': 13824, 'freq_dim': 256, 'num_heads': 40, 'num_layers': 40, 'window_size': (-1, -1), 'qk_norm': True, 'cross_attn_norm': True, 'eps': 1e-06, 'low_noise_checkpoint': 'low_noise_model', 'high_noise_checkpoint': 'high_noise_model', 'sample_shift': 12.0, 'sample_steps': 40, 'boundary': 0.875, 'sample_guide_scale': (3.0, 4.0)} \[2026-01-07 14:46:14,021\] INFO: Input prompt: Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage \[2026-01-07 14:46:14,021\] INFO: Creating WanT2V pipeline. \[2026-01-07 14:47:05,891\] INFO: loading ./Wan2.2-T2V-A14B/models_t5_umt5-xxl-enc-bf16.pth \[2026-01-07 14:47:20,685\] INFO: loading ./Wan2.2-T2V-A14B/Wan2.1_VAE.pth \[2026-01-07 14:47:20,990\] INFO: Creating WanModel from ./Wan2.2-T2V-A14B Loading checkpoint shards: 100%\|████████████████████████████████████████████████████████████████████████\| 6/6 \[00:00\<00:00, 8.44it/s

Loading checkpoint shards: 100%|████████████████████████████████████████████████████████████████████████| 6/6 [00:00<00:00, 6.43it/s]

2026-01-07 14:49:21,708\] INFO: Generating video ...

python3 generate.py --task t2v-A14B --size "120*208" --ckpt_dir ./Wan2.2-T2V-A14B --offload_model True --convert_model_dtype --frame_num 17 --t5_cpu --sample_steps 20 --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage"

python3 generate.py --task t2v-A14B --size "120*208" --ckpt_dir ./Wan2.2-T2V-A14B --convert_model_dtype --frame_num 17 --t5_cpu --sample_steps 20 --prompt "Two anthropomorphic cats in comfy boxing gear and bright gloves fight intensely on a spotlighted stage"