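Product reviews are a core data asset for Amazon operations: they reveal real user needs, product defects, and competitors' weaknesses. Yet most technical posts online stop at "call the Reviews API to get a list of reviews" and treat data collection as the finish line, so the data never turns into business value. This article proposes an end-to-end pipeline of "compliant bulk retrieval + sentiment polarity analysis + core demand extraction", built on the latest version of Amazon SP-API, that turns scattered reviews into actionable operating strategy. The code is written to be reused directly.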

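I. Core Insight: The Review API Is Really a "User Demand Mining Tool"

Many developers think of the Amazon review API as a way to "get ratings and text". Its real value lies elsewhere: using review data to infer what users care about most (for example "long battery life" or "easy to operate"), to locate product defects (for example "slow charging" or "poor quality"), and to optimize Listing keywords. The review interface this article builds on is the SP-API Reviews API (v2021-08-01). Compared with scraping, it offers three advantages: the data is obtained legitimately, the fields are complete (including review images and vote counts), and it is stable. Two difficulties remain, namely the limits on bulk retrieval and putting sentiment analysis into practice, and solving them is what sets this approach apart.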

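II. Implementation: Three Core Modules

The solution is built on the Amazon SP-API Reviews API and consists of three modules: a compliant bulk review fetcher, a review sentiment analysis engine, and a core demand extractor. Together they close the loop from data collection to business insight.

1. Compliant bulk review fetcher: go beyond the single-page limit and reliably retrieve all reviews

A typical implementation fetches only one page of reviews (at most 10) and tends to fail once rate limiting kicks in. This module adds automatic pagination, rate limiting, and resumable progress, so it can retrieve every review for a single ASIN while staying within the SP-API call limit (QPS=5):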

    import json
    import os
    import time
    from datetime import datetime
    from typing import Dict, List, Optional

    from dotenv import load_dotenv
    # Reviews client as used in this article; confirm your installed sp_api version provides it
    from sp_api.api import Reviews
    from sp_api.base import SellingApiException, Marketplaces

    # Load environment variables (avoids hard-coding credentials)
    load_dotenv()


    class ComplianceReviewFetcher:
        """Compliant bulk review fetcher: automatic pagination, rate limiting, resumable progress."""

        def __init__(self, marketplace=Marketplaces.US):
            self.credentials = {
                "refresh_token": os.getenv("AMAZON_REFRESH_TOKEN"),
                "lwa_app_id": os.getenv("AMAZON_CLIENT_ID"),
                "lwa_client_secret": os.getenv("AMAZON_CLIENT_SECRET"),
                "aws_access_key": os.getenv("AWS_ACCESS_KEY"),
                "aws_secret_key": os.getenv("AWS_SECRET_KEY"),
            }
            self.marketplace = marketplace
            self.api = Reviews(credentials=self.credentials, marketplace=marketplace)
            self.qps_limit = 5  # QPS limit stated in this article
            self.last_request_time = datetime.min
            self.retry_limit = 3  # maximum number of retries
            self.retry_delay = 2  # base retry delay in seconds
            self.page_size = 10  # maximum reviews per page (API limit)

        def _control_rate(self):
            """Rate limiting: keep calls at or below the QPS limit."""
            interval = 1 / self.qps_limit
            elapsed = (datetime.now() - self.last_request_time).total_seconds()
            if elapsed < interval:
                time.sleep(interval - elapsed)
            # Record the time after any sleep so the next interval is measured correctly
            self.last_request_time = datetime.now()

        def _fetch_single_page(self, asin: str, next_token: Optional[str] = None) -> Dict:
            """Fetch one page of reviews; pass next_token to continue pagination."""
            retry_count = 0
            error_msg = ""
            while retry_count < self.retry_limit:
                try:
                    self._control_rate()
                    params = {
                        "asin": asin,
                        "pageSize": self.page_size,
                        "sortBy": "MOST_RECENT",  # newest first (MOST_HELPFUL / MOST_RECENT)
                    }
                    if next_token:
                        params["nextToken"] = next_token
                    response = self.api.get_reviews(**params)
                    return response.payload
                except SellingApiException as e:
                    retry_count += 1
                    error_msg = str(e)
                    # Special cases: throttling (429) and expired authorization (401)
                    if "429" in error_msg:
                        delay = self.retry_delay * (retry_count + 1)
                        print(f"Throttled, retrying in {delay} seconds...")
                        time.sleep(delay)
                    elif "401" in error_msg:
                        print("Authorization expired, re-initializing the API client...")
                        self.api = Reviews(credentials=self.credentials, marketplace=self.marketplace)
                        time.sleep(self.retry_delay)
                    else:
                        print(f"Failed to fetch page: {error_msg}")
                        break
            raise Exception(f"Failed to fetch page (no retries left): {error_msg}")

        def _save(self, save_path, asin, reviews, page_count, next_token, completed, error=None):
            """Write progress (or the final result) to a JSON file."""
            data = {
                "asin": asin,
                "page_count": page_count,
                "next_token": next_token,
                "completed": completed,
                "review_count": len(reviews),
                "fetch_time": datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
                "reviews": reviews,
            }
            if error:
                data["error"] = error
            with open(save_path, "w", encoding="utf-8") as f:
                json.dump(data, f, ensure_ascii=False, indent=2)

        def fetch_all_reviews(self, asin: str, save_path: Optional[str] = None, resume: bool = False) -> List[Dict]:
            """Fetch all reviews for an ASIN, optionally resuming from saved progress."""
            all_reviews: List[Dict] = []
            next_token = None
            page_count = 1

            # Resume: read progress from a previously saved file
            if resume and save_path and os.path.exists(save_path):
                with open(save_path, "r", encoding="utf-8") as f:
                    data = json.load(f)
                if data.get("completed"):
                    print("Saved file is already complete, returning its reviews")
                    return data.get("reviews", [])
                # Resume only when a page token was saved; otherwise restart to avoid duplicates
                if data.get("next_token"):
                    all_reviews = data.get("reviews", [])
                    next_token = data["next_token"]
                    page_count = data.get("page_count", 1)
                    print(f"Resuming: {len(all_reviews)} reviews already fetched, continuing from page {page_count}...")

            try:
                while True:
                    print(f"Fetching page {page_count}...")
                    result = self._fetch_single_page(asin, next_token)
                    reviews = result.get("reviews", [])
                    if not reviews:
                        break
                    all_reviews.extend(reviews)
                    next_token = result.get("nextToken")
                    if not next_token:
                        break
                    page_count += 1
                    # Save progress every 5 pages to limit data loss
                    if save_path and page_count % 5 == 0:
                        self._save(save_path, asin, all_reviews, page_count, next_token, completed=False)
                        print(f"Progress saved: {len(all_reviews)} reviews fetched")
                print("All reviews fetched")
                if save_path:
                    self._save(save_path, asin, all_reviews, page_count, None, completed=True)
                    print(f"Done: {len(all_reviews)} reviews saved to {save_path}")
                return all_reviews
            except Exception as e:
                print(f"Bulk fetch failed: {e}")
                # Keep whatever was fetched so a later run can resume
                if save_path:
                    self._save(save_path, asin, all_reviews, page_count, next_token, completed=False, error=str(e))
                    print(f"Partial progress saved to {save_path}")
                return all_reviews


    # Example: create a fetcher and retrieve all reviews for ASIN B07ZPV9F9G, with resume enabled
    fetcher = ComplianceReviewFetcher(marketplace=Marketplaces.US)
    reviews = fetcher.fetch_all_reviews(asin="B07ZPV9F9G", save_path="./reviews_B07ZPV9F9G.json", resume=True)
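The pagination and checkpoint logic can be tried out without any network access. The following is a minimal sketch, not the fetcher itself: `fake_fetch_page`, `FAKE_REVIEWS`, and the integer-as-string page token are hypothetical stand-ins for the remote API, chosen only to make the resume behavior observable.

```python
import json
import os
import tempfile
from typing import Dict, List, Optional

# Hypothetical in-memory data source standing in for the remote API.
FAKE_REVIEWS = [{"reviewId": f"R{i}"} for i in range(25)]
PAGE_SIZE = 10


def fake_fetch_page(next_token: Optional[str]) -> Dict:
    """Return one page plus the token of the following page (None when done)."""
    start = int(next_token) if next_token else 0
    end = start + PAGE_SIZE
    token = str(end) if end < len(FAKE_REVIEWS) else None
    return {"reviews": FAKE_REVIEWS[start:end], "nextToken": token}


def fetch_with_checkpoint(path: str, max_pages: Optional[int] = None) -> List[Dict]:
    """Fetch pages until exhausted (or max_pages), checkpointing after every page."""
    reviews: List[Dict] = []
    token: Optional[str] = None
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            state = json.load(f)
        if state["done"]:
            return state["reviews"]  # nothing left to fetch
        reviews, token = state["reviews"], state["next_token"]

    pages = 0
    done = False
    while not done and (max_pages is None or pages < max_pages):
        page = fake_fetch_page(token)
        reviews.extend(page["reviews"])
        token = page["nextToken"]
        done = token is None
        pages += 1
        with open(path, "w", encoding="utf-8") as f:
            json.dump({"reviews": reviews, "next_token": token, "done": done}, f)
    return reviews


checkpoint = os.path.join(tempfile.mkdtemp(), "progress.json")
partial = fetch_with_checkpoint(checkpoint, max_pages=1)  # simulated interruption
complete = fetch_with_checkpoint(checkpoint)              # resumes from the saved token
print(len(partial), len(complete))
```

Storing a `done` flag next to the token matters: without it, a finished file and a file that was never started look the same (both have no token), and a second run would fetch everything again and duplicate the reviews.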

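2. Review sentiment analysis engine: quantify sentiment and pin down the reviewer's attitude

This is the main innovation of the approach. A typical implementation judges sentiment from the star rating alone and misses cases such as a low rating with positive text or a high rating with negative text. This module uses a lightweight sentiment model to classify each review as positive, negative, or neutral and to output a sentiment score on a 0-100 scale, which feeds the later business analysis: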

    import re
    from typing import Dict, List, Tuple

    from textblob import TextBlob


    class ReviewSentimentAnalyzer:
        """Review sentiment engine: classifies polarity and outputs a numeric score."""

        def __init__(self):
            # Sentiment lexicon (extend per product category to improve accuracy)
            self.positive_words = {"excellent", "great", "perfect", "amazing", "durable",
                                   "easy to use", "long lasting", "worth it"}
            self.negative_words = {"terrible", "bad", "broken", "poor", "slow",
                                   "difficult", "short battery", "not working"}
            # Score mapping: polarity in [-1, 1] -> score in [0, 100]
            self.score_map = lambda polarity: round((polarity + 1) * 50, 2)

        def _clean_review_text(self, text: str) -> str:
            """Clean review text: strip HTML tags, special characters, extra whitespace."""
            if not text:
                return ""
            text = re.sub(r"<.*?>", "", text)
            text = re.sub(r"[^a-zA-Z0-9\s\.,!?-]", "", text)
            text = re.sub(r"\s+", " ", text).strip()
            return text

        def _judge_sentiment_by_words(self, text: str) -> Tuple[float, str]:
            """Lexicon-based adjustment that supplements the model."""
            text_lower = text.lower()
            positive_count = sum(1 for word in self.positive_words if word in text_lower)
            negative_count = sum(1 for word in self.negative_words if word in text_lower)
            if positive_count > negative_count:
                return 0.3, "positive"  # lexicon says positive: add
            elif negative_count > positive_count:
                return -0.3, "negative"  # lexicon says negative: subtract
            return 0.0, "neutral"

        def analyze_sentiment(self, review: Dict) -> Dict:
            """Analyze one review by combining TextBlob, the lexicon, and the star rating."""
            review_text = review.get("reviewText", "")
            review_rating = review.get("starRating", 3)  # star rating (1-5)
            cleaned_text = self._clean_review_text(review_text)

            # 1. Base polarity from TextBlob, in [-1, 1]; reviews without text count as neutral
            base_polarity = TextBlob(cleaned_text).sentiment.polarity if cleaned_text else 0.0

            # 2. Lexicon adjustment
            word_polarity, _ = self._judge_sentiment_by_words(cleaned_text)

            # 3. Blend with the rating (rating weight 0.4; text weight 0.6 = 0.3 TextBlob + 0.3 lexicon)
            rating_polarity = (review_rating - 3) / 2  # rating mapped to [-1, 1]
            final_polarity = (base_polarity * 0.3) + (word_polarity * 0.3) + (rating_polarity * 0.4)
            final_score = self.score_map(final_polarity)

            # 4. Assign the label from the final score
            if final_score >= 65:
                sentiment_label = "positive"
            elif final_score <= 35:
                sentiment_label = "negative"
            else:
                sentiment_label = "neutral"

            return {
                "review_id": review.get("reviewId"),
                "star_rating": review_rating,
                "sentiment_label": sentiment_label,
                "sentiment_score": final_score,
                "cleaned_review_text": cleaned_text,
            }

        def batch_analyze_sentiments(self, reviews: List[Dict]) -> Tuple[List[Dict], Dict]:
            """Analyze a batch of reviews and return per-review results plus overall statistics."""
            analyzed_reviews = []
            sentiment_stats = {"positive": 0, "negative": 0, "neutral": 0, "total": len(reviews)}
            for review in reviews:
                analyzed = self.analyze_sentiment(review)
                analyzed_reviews.append({**review, **analyzed})
                sentiment_stats[analyzed["sentiment_label"]] += 1

            # Share of each label, in percent
            total = sentiment_stats["total"]
            for label in ("positive", "negative", "neutral"):
                sentiment_stats[f"{label}_ratio"] = round(sentiment_stats[label] / total * 100, 2) if total > 0 else 0.0
            return analyzed_reviews, sentiment_stats


    # Example: sentiment analysis
    analyzer = ReviewSentimentAnalyzer()
    analyzed_reviews, sentiment_stats = analyzer.batch_analyze_sentiments(reviews)
    print("Review sentiment statistics:", sentiment_stats)
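To make the weighting concrete, the blend can be worked through by hand. The sketch below reproduces only the arithmetic from `analyze_sentiment` (weights 0.3 / 0.3 / 0.4 and the 65 / 35 thresholds), with the TextBlob polarity supplied as a plain number; `blended_score` and `label_for` are illustrative helper names, and the input polarities are made-up values rather than real model output.

```python
def blended_score(text_polarity: float, lexicon_adjust: float, star_rating: int) -> float:
    """Combine text polarity, lexicon adjustment, and star rating into a 0-100 score."""
    rating_polarity = (star_rating - 3) / 2            # 1..5 stars -> -1..1
    final_polarity = 0.3 * text_polarity + 0.3 * lexicon_adjust + 0.4 * rating_polarity
    return round((final_polarity + 1) * 50, 2)


def label_for(score: float) -> str:
    """Apply the same thresholds as the analyzer."""
    if score >= 65:
        return "positive"
    if score <= 35:
        return "negative"
    return "neutral"


# A 5-star review whose text reads negatively (assumed polarity -0.6, lexicon -0.3)
high_rating_bad_text = blended_score(-0.6, -0.3, 5)
# A consistent 5-star review (assumed polarity 0.8, lexicon +0.3)
consistent_positive = blended_score(0.8, 0.3, 5)
# A consistent 1-star review (assumed polarity -0.8, lexicon -0.3)
consistent_negative = blended_score(-0.8, -0.3, 1)

for score in (high_rating_bad_text, consistent_positive, consistent_negative):
    print(score, label_for(score))
```

Because the lexicon term is capped at ±0.3 before weighting, the blended polarity can only range from -0.79 to 0.79, so scores fall between 10.5 and 89.5 rather than spanning the full 0-100 scale. A 5-star review with clearly negative text lands in the neutral band, which is how the engine flags rating and text that disagree.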

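3. Core demand extractor: mine real user needs from reviews

This is where the business value comes from. Keyword extraction and grouping distill the main user demands (such as battery life, sound quality, and ease of use) and the product defects from a large volume of reviews, which directly guides Listing optimization and product iteration: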

    import re
    from collections import Counter
    from typing import Dict, List, Tuple


    class CoreDemandExtractor:
        """Core demand extractor: mines user needs and product defects from reviews."""

        def __init__(self):
            # Demand keyword library (consumer electronics example; extend for your own category).
            # The Chinese entries only match if text cleaning is changed to keep non-ASCII characters.
            self.demand_keywords = {
                "battery": ["battery", "battery life", "续航", "电量", "充电"],
                "sound": ["sound quality", "音质", "voice", "audio", "声音"],
                "usage": ["easy to use", "操作简单", "setup", "安装", "使用"],
                "durability": ["durable", "sturdy", "quality", "耐用", "质量"],
                "connection": ["connection", "bluetooth", "连接", "蓝牙"],
                "price": ["price", "cost", "worth", "价格", "性价比"],
            }
            # Stop words (filter out words that carry no meaning)
            self.stop_words = {"the", "a", "an", "and", "or", "but", "in", "on", "at",
                               "to", "for", "is", "are", "was", "were"}

        def _extract_keywords(self, text: str) -> List[str]:
            """Extract keywords: lowercase, split into words, drop stop words and short words."""
            if not text:
                return []
            words = re.findall(r"\b[a-zA-Z]+\b", text.lower())
            return [word for word in words if word not in self.stop_words and len(word) >= 3]

        def _match_demand_category(self, keywords: List[str]) -> List[str]:
            """Match demand categories (battery, sound, ...) against the extracted keywords."""
            joined = " ".join(keywords)
            matched_categories = []
            for category, category_keywords in self.demand_keywords.items():
                for kw in category_keywords:
                    # Normalize the phrase like the review text, so multi-word phrases such as
                    # "easy to use" still match after stop-word removal
                    phrase = " ".join(self._extract_keywords(kw))
                    if phrase and phrase in joined:
                        matched_categories.append(category)
                        break
            return matched_categories if matched_categories else ["other"]

        def _count_demands(self, reviews: List[Dict]) -> Dict:
            """Count demand categories across reviews and compute each category's share."""
            demand_count = Counter()
            for review in reviews:
                keywords = self._extract_keywords(review["cleaned_review_text"])
                for category in self._match_demand_category(keywords):
                    demand_count[category] += 1
            total = sum(demand_count.values())
            return {
                "count": dict(demand_count),
                "ratio": {k: round(v / total * 100, 2) for k, v in demand_count.items()} if total > 0 else {},
            }

        def extract_core_demands(self, analyzed_reviews: List[Dict]) -> Dict:
            """Extract core demands by sentiment to locate strengths and weaknesses."""
            positive_reviews = [r for r in analyzed_reviews if r["sentiment_label"] == "positive"]
            negative_reviews = [r for r in analyzed_reviews if r["sentiment_label"] == "negative"]
            positive_demands = self._count_demands(positive_reviews)
            negative_demands = self._count_demands(negative_reviews)

            # "count" is a plain dict, so wrap it in a Counter before calling most_common
            top_positive = Counter(positive_demands["count"]).most_common(3)
            top_negative = Counter(negative_demands["count"]).most_common(3)
            core_insights = {
                "strengths": [k for k, _ in top_positive],    # top 3 strengths
                "weaknesses": [k for k, _ in top_negative],   # top 3 weaknesses
                "improvement_suggestions": self._generate_suggestions(top_negative[:2]),
            }
            return {
                "positive_demands": positive_demands,
                "negative_demands": negative_demands,
                "core_insights": core_insights,
            }

        def _generate_suggestions(self, top_weaknesses: List[Tuple[str, int]]) -> List[str]:
            """Generate improvement suggestions from the top weaknesses."""
            weakness_suggestions = {
                "battery": "Increase battery capacity and battery life; emphasize battery life in the Listing",
                "sound": "Improve audio tuning and the audio chip; add sound-quality keywords to the Listing",
                "usage": "Simplify operation and improve the manual; produce a setup tutorial video",
                "durability": "Improve materials and quality control; extend the warranty period",
                "connection": "Improve Bluetooth stability and reduce dropouts; state the connection range",
                "price": "Adjust pricing to improve value for money; offer discounted bundles",
            }
            return [
                weakness_suggestions.get(weakness, f"Improve the product or Listing to address {weakness}")
                for weakness, _ in top_weaknesses
            ]


    # Example: extract core demands
    extractor = CoreDemandExtractor()
    core_demands = extractor.extract_core_demands(analyzed_reviews)
    print("Core demand insights:", core_demands["core_insights"])
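The counting step is easier to follow on a handful of sample sentences. This is a small sketch with made-up negative review snippets and a hypothetical two-category subset of the keyword library; unlike the extractor above it matches whole words only, which is an assumption made here to keep the example short.

```python
import re
from collections import Counter
from typing import Dict, List

# Hypothetical subset of the keyword library, for illustration only.
CATEGORIES: Dict[str, List[str]] = {
    "battery": ["battery", "charge"],
    "connection": ["bluetooth", "connection"],
}


def categories_for(text: str) -> List[str]:
    """Return every category with at least one keyword present as a whole word."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    hits = [name for name, kws in CATEGORIES.items() if any(kw in words for kw in kws)]
    return hits or ["other"]


# Made-up snippets standing in for reviews already labeled negative.
negative_texts = [
    "Battery died after two days",
    "Bluetooth connection keeps dropping",
    "Battery will not charge",
    "Arrived late",
]

counts = Counter(cat for text in negative_texts for cat in categories_for(text))
top_weaknesses = [name for name, _ in counts.most_common(2)]
print(counts, top_weaknesses)
```

`Counter.most_common` orders ties by first occurrence, so with small samples the ranking below first place can depend on review order. It is worth checking the raw counts before acting on the second or third "weakness".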

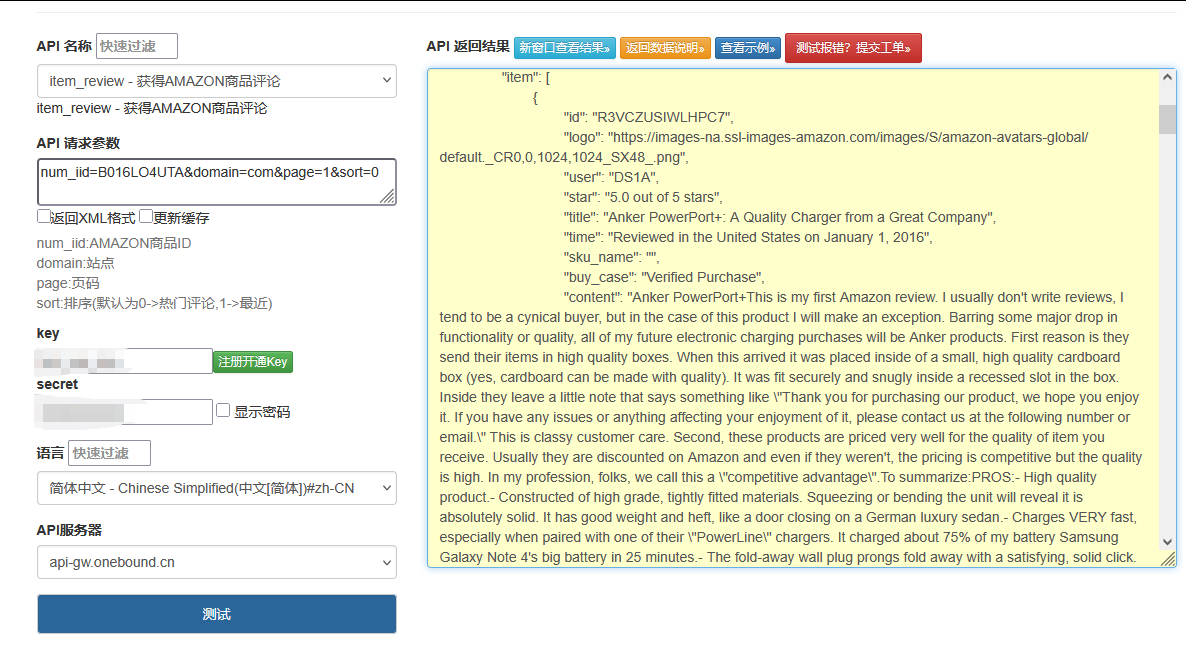

点击获取key和secret

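III. End-to-End Walkthrough: From Fetching Reviews to Actionable Insight

The three modules are combined into a single flow that fetches reviews in bulk, analyzes sentiment, extracts core demands, and outputs an operating strategy that can be acted on directly: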

    def main():
        # Configuration (adjust to your needs)
        TARGET_ASIN = "B07ZPV9F9G"  # target product ASIN
        MARKETPLACE = Marketplaces.US  # target marketplace (Amazon US)
        SAVE_PATH = f"./reviews_analysis_{TARGET_ASIN}.json"  # where the result is saved

        try:
            # 1. Initialize the modules
            review_fetcher = ComplianceReviewFetcher(marketplace=MARKETPLACE)
            sentiment_analyzer = ReviewSentimentAnalyzer()
            demand_extractor = CoreDemandExtractor()

            # 2. Fetch all reviews (with resume enabled)
            print("Fetching product reviews...")
            all_reviews = review_fetcher.fetch_all_reviews(
                asin=TARGET_ASIN,
                save_path=SAVE_PATH.replace(".json", "_raw.json"),
                resume=True,
            )
            if not all_reviews:
                print("No review data retrieved")
                return

            # 3. Batch sentiment analysis
            print("\nRunning sentiment analysis...")
            analyzed_reviews, sentiment_stats = sentiment_analyzer.batch_analyze_sentiments(all_reviews)
            print(f"Sentiment analysis done: {sentiment_stats['positive_ratio']}% positive, "
                  f"{sentiment_stats['negative_ratio']}% negative")

            # 4. Core demand extraction and business insight
            print("\nExtracting core demands...")
            core_demands = demand_extractor.extract_core_demands(analyzed_reviews)
            insights = core_demands["core_insights"]
            print("Core insights:")
            print(f"- Strengths: {insights['strengths']}")
            print(f"- Weaknesses: {insights['weaknesses']}")
            print(f"- Suggestions: {insights['improvement_suggestions']}")

            # 5. Save the full analysis result
            final_result = {
                "config": {
                    "asin": TARGET_ASIN,
                    "marketplace": MARKETPLACE.name,  # enum member name, e.g. "US"
                    "analysis_time": datetime.now().strftime("%Y-%m-%d %H:%M:%S"),
                    "total_review_count": len(all_reviews),
                },
                "sentiment_stats": sentiment_stats,
                "core_demands": core_demands,
                "analyzed_reviews": analyzed_reviews[:10],  # first 10 detailed results; drop the slice to keep all
            }
            with open(SAVE_PATH, "w", encoding="utf-8") as f:
                json.dump(final_result, f, ensure_ascii=False, indent=2)
            print(f"\nAnalysis complete. Full result saved to {SAVE_PATH}")
        except Exception as e:
            print(f"Run failed: {e}")


    if __name__ == "__main__":
        main()

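IV. Pitfalls and Extensions

1. Pitfalls to avoid (compliance and accuracy)

- Call limits: the Reviews API defaults to QPS=5. When fetching reviews for many ASINs, throttle strictly to avoid restrictions on your account.

- Sentiment tuning: sentiment lexicons differ considerably between product categories, so extend the lexicon for your own category (for example, add "fit" and "size" for apparel).

- Resumable progress: when fetching a large number of reviews (a thousand or more), always enable resume so that a network interruption does not lose data.

- Data use: use review data only for your own operational analysis. Do not leak it or use it for unfair competition, and follow Amazon's data use policies.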
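For the call-limit point, one throttle shared by all ASINs keeps the combined request rate under the limit. The following is a minimal sketch of a minimum-interval throttle, assuming the QPS=5 figure quoted above; `MinIntervalThrottle` is an illustrative name, and the clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time
from typing import Callable


class MinIntervalThrottle:
    """Space calls at least 1/qps seconds apart, regardless of which ASIN they serve."""

    def __init__(
        self,
        qps: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ):
        self.interval = 1.0 / qps
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = 0.0  # earliest time the next call may start

    def wait(self) -> float:
        """Block until the next call is allowed; return how long we waited."""
        now = self._clock()
        delay = max(0.0, self._next_allowed - now)
        if delay:
            self._sleep(delay)
        self._next_allowed = max(now, self._next_allowed) + self.interval
        return delay


# Demonstration with a fake clock: three back-to-back calls at QPS=5.
fake_now = [0.0]


def fake_sleep(seconds: float) -> None:
    fake_now[0] += seconds


throttle = MinIntervalThrottle(qps=5, clock=lambda: fake_now[0], sleep=fake_sleep)
delays = [throttle.wait() for _ in range(3)]
print(delays)
```

In real use, create one `MinIntervalThrottle(qps=5)` with the default clock and call `wait()` before every request, passing the same instance to every per-ASIN loop. A throttle per ASIN would allow the combined rate to exceed the limit.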

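2. Extensions

- Multi-ASIN comparison: compare sentiment and demand distributions across several ASINs to locate competitors' strengths and weaknesses.

- Review image analysis: connect an image recognition tool to analyze product photos in reviews and infer usage scenarios and defects.

- Trend tracking: fetch reviews on a schedule and analyze how sentiment and demands change over time, to anticipate shifts in product reputation.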
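Trend tracking can start as simply as grouping analyzed reviews by month. The sketch below assumes each analyzed review carries an ISO-formatted date under a hypothetical `review_date` key (the field name is not taken from the API) alongside the `sentiment_label` produced earlier; the sample records are invented for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def monthly_negative_ratio(reviews: List[Dict]) -> Dict[str, float]:
    """Return the percentage of negative reviews per month (YYYY-MM), in date order."""
    totals: Dict[str, int] = defaultdict(int)
    negatives: Dict[str, int] = defaultdict(int)
    for review in reviews:
        month = review["review_date"][:7]  # "2024-01-15" -> "2024-01"
        totals[month] += 1
        if review["sentiment_label"] == "negative":
            negatives[month] += 1
    return {m: round(negatives[m] / totals[m] * 100, 2) for m in sorted(totals)}


# Invented sample data: one of four reviews is negative in January, two of four in February.
sample = [
    {"review_date": "2024-01-03", "sentiment_label": "positive"},
    {"review_date": "2024-01-11", "sentiment_label": "negative"},
    {"review_date": "2024-01-20", "sentiment_label": "positive"},
    {"review_date": "2024-01-28", "sentiment_label": "neutral"},
    {"review_date": "2024-02-02", "sentiment_label": "negative"},
    {"review_date": "2024-02-09", "sentiment_label": "positive"},
    {"review_date": "2024-02-17", "sentiment_label": "negative"},
    {"review_date": "2024-02-25", "sentiment_label": "positive"},
]

trend = monthly_negative_ratio(sample)
print(trend)
```

The same grouping works for multi-ASIN comparison by keying on the ASIN instead of the month. With only a few reviews per bucket the percentages swing widely, so a minimum sample size per bucket is a sensible guard before reading anything into a change.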

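The core value of this approach is turning review data into business insight. Unlike the usual "collect but never analyze" solutions found online, it quantifies sentiment and extracts demands so that Listing optimization and product iteration are backed by data. It also balances compliance and stability by building on the official SP-API, which makes it suitable for long-term use by small and medium-sized teams.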