目录

[💡 先说说我被Seata"坑惨"的经历](#💡 先说说我被Seata"坑惨"的经历)

[✨ 摘要](#✨ 摘要)

[1. 为什么选择Seata AT模式?](#1. 为什么选择Seata AT模式?)

[1.1 从XA的痛苦说起](#1.1 从XA的痛苦说起)

[1.2 Seata AT的优势](#1.2 Seata AT的优势)

[2. AT模式核心原理](#2. AT模式核心原理)

[2.1 整体架构](#2.1 整体架构)

[2.2 两阶段提交实现](#2.2 两阶段提交实现)

[2.3 Undo Log设计](#2.3 Undo Log设计)

[3. 完整实战案例](#3. 完整实战案例)

[3.1 环境搭建](#3.1 环境搭建)

[3.2 电商订单实战](#3.2 电商订单实战)

[3.3 测试验证](#3.3 测试验证)

[4. 核心机制深入解析](#4. 核心机制深入解析)

[4.1 全局锁实现](#4.1 全局锁实现)

[4.2 SQL解析器](#4.2 SQL解析器)

[4.3 两阶段提交详细流程](#4.3 两阶段提交详细流程)

[5. 企业级实战案例](#5. 企业级实战案例)

[5.1 电商订单系统完整实现](#5.1 电商订单系统完整实现)

[5.2 库存服务优化](#5.2 库存服务优化)

[6. 性能优化实战](#6. 性能优化实战)

[6.1 全局锁优化](#6.1 全局锁优化)

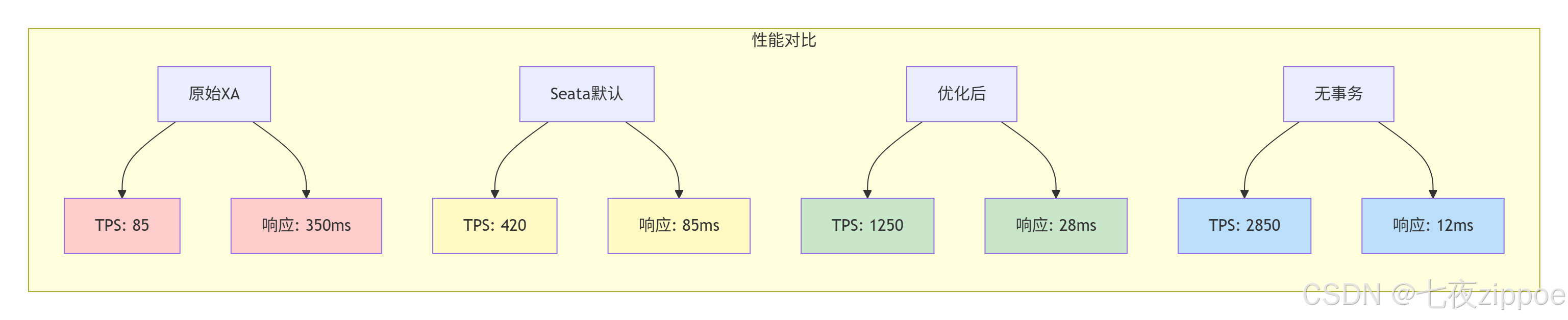

[6.2 性能测试对比](#6.2 性能测试对比)

[7. 常见问题解决方案](#7. 常见问题解决方案)

[7.1 全局锁冲突](#7.1 全局锁冲突)

[7.2 Undo Log表过大](#7.2 Undo Log表过大)

[8. 生产环境配置](#8. 生产环境配置)

[8.1 高可用配置](#8.1 高可用配置)

[8.2 客户端优化配置](#8.2 客户端优化配置)

[9. 监控与告警](#9. 监控与告警)

[9.1 关键监控指标](#9.1 关键监控指标)

[9.2 健康检查](#9.2 健康检查)

[10. 选型指南](#10. 选型指南)

[10.1 AT模式 vs TCC vs Saga](#10.1 AT模式 vs TCC vs Saga)

[10.2 我的"Seata军规"](#10.2 我的"Seata军规")

[11. 最后的话](#11. 最后的话)

[📚 推荐阅读](#📚 推荐阅读)

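💡 First, how Seata "burned" me

The first time we used Seata in an e-commerce system, a global lock deadlock blew up on launch day. A user placed an order, stock was deducted and the order was created, but during rollback the global lock turned out to be held by another order, and the data ended up inconsistent.

Last year, on a financial system built with Seata AT, the undo_log table grew explosively, 10 million rows a day, until disk alarms went off. The investigation showed that global transactions were not being completed in time, so undo_log rows piled up.

Last month, while setting up multiple data sources proxied by Seata, we found that some complex SQL was not supported and business statements failed. Worse, during one network blip the TM lost its connection to the TC, leaving some transactions hanging and the data in an inconsistent state.

These incidents taught me one thing: a developer who uses Seata AT without understanding how it works is just planting mines with a framework, and sooner or later they go off.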

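✨ Summary

Seata AT mode is a distributed transaction solution that evolved from the two-phase commit (2PC) model behind XA. It combines global row locks held by the coordinator with local database locks to isolate writes between global transactions (global read isolation defaults to Read Uncommitted). This article takes a deep look at global transactions, branch transactions and row locks in AT mode, and shows how its two-phase commit is actually implemented. Using a complete e-commerce order example, it compares the performance of the AT, TCC and Saga modes and gives solutions to core problems such as global lock optimization, undo_log cleanup and data source proxying. It also includes enterprise-grade configuration templates, performance tuning data and a troubleshooting guide.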

1. Why choose Seata AT mode?

1.1 Starting with the pain of XA

Let's start with a typical XA problem, taken from our payment system:

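```java
// Traditional XA implementation.
// Spanning two databases requires a JTA transaction manager and XA-capable
// data sources; a plain DataSourceTransactionManager cannot do it.
@Service
public class PaymentServiceXA {

    @Autowired
    private JdbcTemplate jdbcTemplate;   // MySQL (XA data source)

    @Autowired
    private JdbcTemplate jdbcTemplate2;  // Oracle (XA data source)

    @Transactional
    public void pay(String orderId) {
        // 1. Deduct stock (MySQL XA)
        jdbcTemplate.update("UPDATE inventory SET stock = stock - 1 WHERE product_id = ?", 1001);

        // 2. Create the order (MySQL XA)
        jdbcTemplate.update("INSERT INTO orders(order_id, status) VALUES(?, 'CREATED')", orderId);

        // 3. Record the payment (Oracle XA, a different database)
        jdbcTemplate2.update("INSERT INTO payment(order_id, amount) VALUES(?, 100.00)", orderId);
    }
}
```

Code Listing 1: Traditional XA implementation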

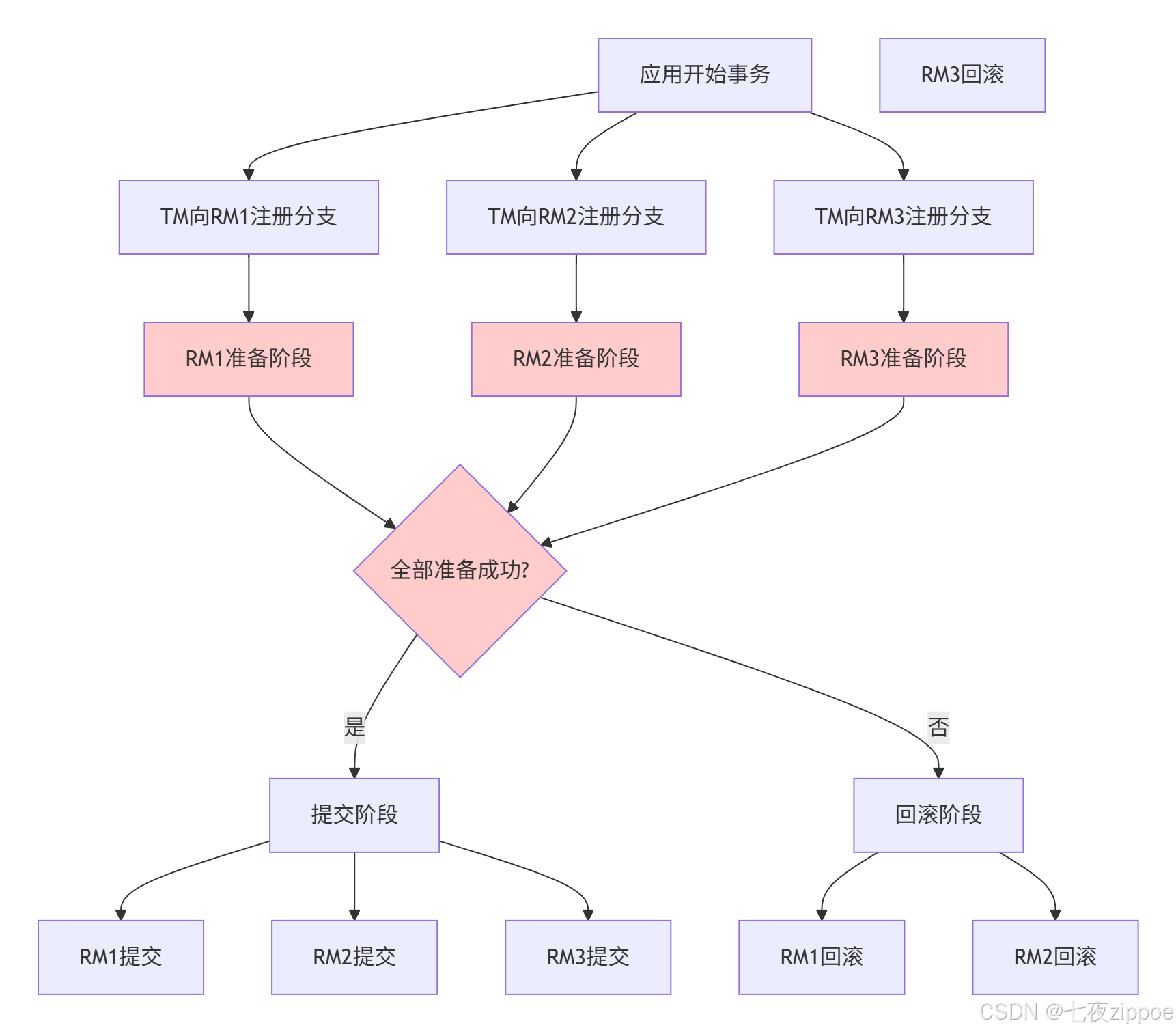

The problems with XA, as a diagram:

Figure 1: Problems with XA transactions

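The core problems with XA:

- Wide lock scope: every resource touched stays locked, so blocking is severe
- Resources stay locked for the whole two-phase protocol, so performance is poor
- The coordinator is a single point of failure
- Poor compatibility across database vendors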

1.2 Advantages of Seata AT

Seata AT mode addresses these problems:

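```java
// Seata AT implementation: the business code is unchanged, only the annotation differs.
// Both data sources must be wrapped by Seata's DataSourceProxy; seata-spring-boot-starter
// does this automatically when seata.enable-auto-data-source-proxy is true (the default).
@Service
public class PaymentServiceSeata {

    @Autowired
    private JdbcTemplate jdbcTemplate;   // MySQL

    @Autowired
    private JdbcTemplate jdbcTemplate2;  // Oracle

    @GlobalTransactional
    public void pay(String orderId) {
        // 1. Deduct stock
        jdbcTemplate.update("UPDATE inventory SET stock = stock - 1 WHERE product_id = ?", 1001);

        // 2. Create the order
        jdbcTemplate.update("INSERT INTO orders(order_id, status) VALUES(?, 'CREATED')", orderId);

        // 3. Record the payment
        jdbcTemplate2.update("INSERT INTO payment(order_id, amount) VALUES(?, 100.00)", orderId);
    }
}
```

Code Listing 2: Seata AT implementation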

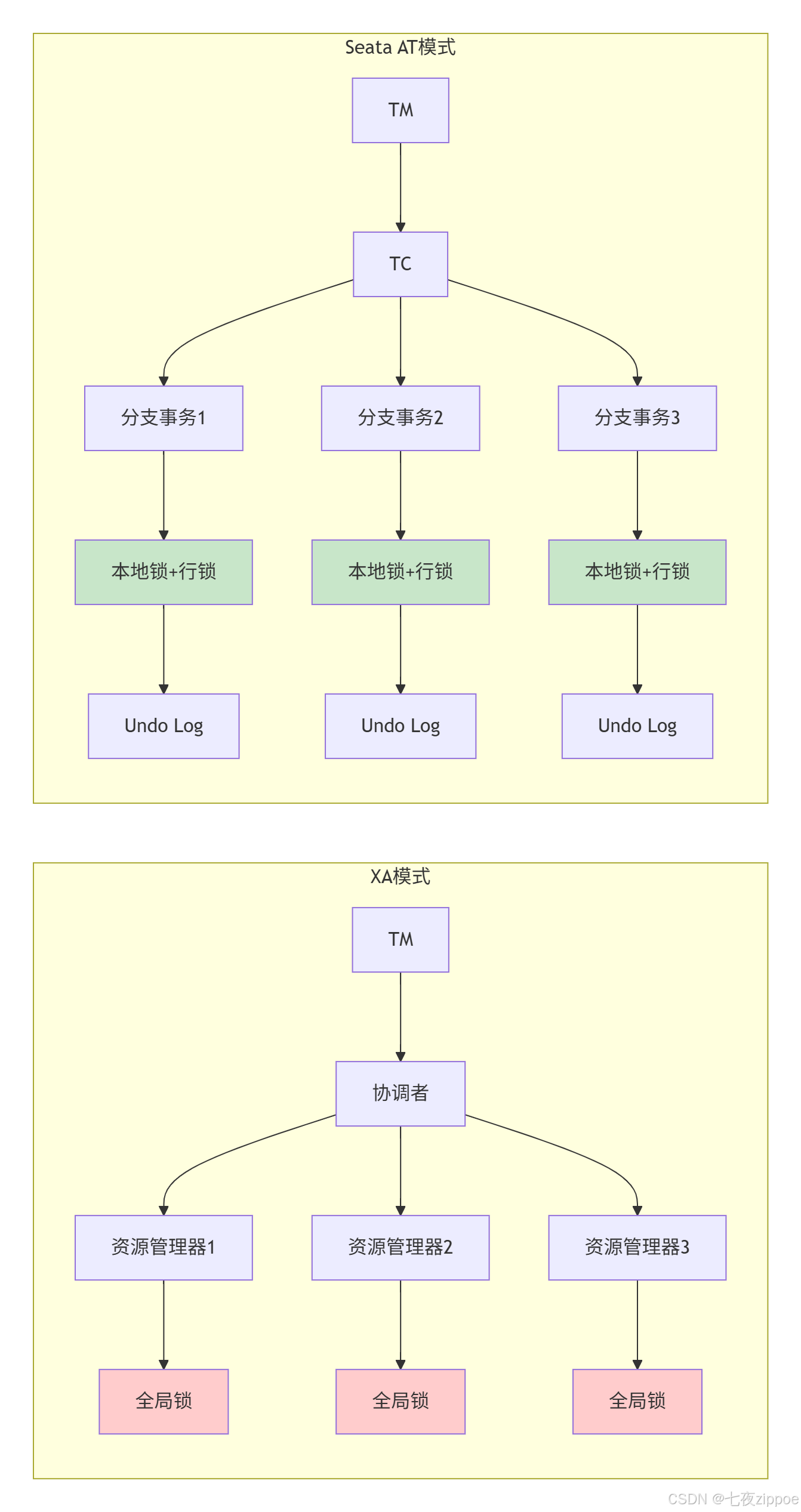

Comparison:

Figure 2: XA vs Seata AT architecture

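Advantages of Seata AT:

| Dimension | XA | Seata AT | Benefit |
|---|---|---|---|
| Lock scope | Database locks on every touched resource | Global row locks | Higher concurrency (author's estimate: up to 10×) |
| Performance | Poor | Good | Author's estimate: 5-10× faster |
| Lock hold time | Whole global transaction (both phases) | Local locks released at the phase-1 local commit; global row locks held until phase 2 | Resources freed sooner |
| Implementation complexity | High | Medium | Non-intrusive, automatic data source proxying |
| Database support | Requires XA-capable drivers | Databases with local ACID transactions whose SQL Seata can parse | Works without XA support in the database |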

2. AT模式核心原理

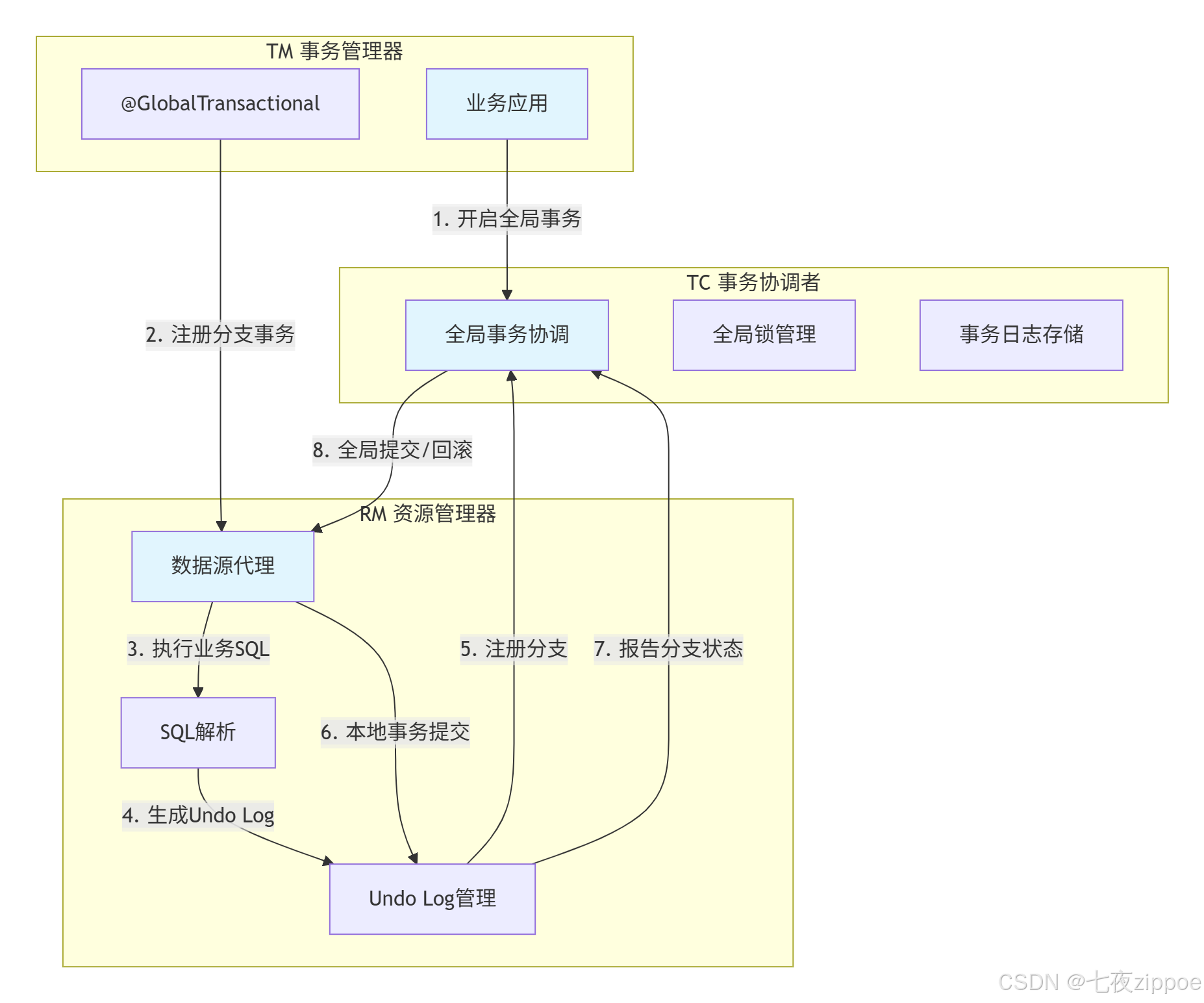

2.1 整体架构

Seata AT模式的三大组件:

图3:Seata AT架构图

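2.2 Two-phase commit implementation

Phase 1: running the business SQL (up to the local commit)

```java
// Simplified sketch of Seata's data source proxy. The real code is more involved
// (execution goes through ExecuteTemplate and per-statement-type executors), but
// the wrapping chain is the same: DataSource -> Connection -> PreparedStatement.
public class DataSourceProxy extends AbstractDataSourceProxy {

    @Override
    public ConnectionProxy getConnection() throws SQLException {
        Connection targetConnection = targetDataSource.getConnection();
        // Key point: wrap the physical Connection
        return new ConnectionProxy(this, targetConnection);
    }
}

public class ConnectionProxy implements Connection {

    private final DataSourceProxy dataSourceProxy;
    private final Connection targetConnection;

    public ConnectionProxy(DataSourceProxy dataSourceProxy, Connection targetConnection) {
        this.dataSourceProxy = dataSourceProxy;
        this.targetConnection = targetConnection;
    }

    // Intercept statement creation
    @Override
    public PreparedStatement prepareStatement(String sql) throws SQLException {
        PreparedStatement targetPreparedStatement = targetConnection.prepareStatement(sql);
        // Key point: wrap the PreparedStatement
        return new PreparedStatementProxy(this, targetPreparedStatement, sql);
    }

    // commit() is where phase 1 ends: the real ConnectionProxy registers the branch
    // with the TC (which grants the global locks for the modified rows), then commits
    // the business changes and the buffered undo logs in ONE local transaction.
}

public class PreparedStatementProxy implements PreparedStatement {

    // Record undo information around every UPDATE
    @Override
    public int executeUpdate() throws SQLException {
        // 1. Parse the SQL
        SQLRecognizer sqlRecognizer = SQLVisitorFactory.get(sql, dbType);

        // 2. Query the before image (SELECT ... FOR UPDATE on the rows the UPDATE will touch)
        TableRecords beforeImage = queryBeforeImage(sqlRecognizer, parameters);

        // 3. Execute the business SQL
        int result = targetPreparedStatement.executeUpdate();

        // 4. Query the after image (by the primary keys found in the before image)
        TableRecords afterImage = queryAfterImage(sqlRecognizer, parameters, beforeImage);

        // 5. Build the undo log
        UndoLog undoLog = buildUndoLog(sqlRecognizer, beforeImage, afterImage);

        // 6. Buffer the undo log on the connection; it is inserted into undo_log
        //    when the connection commits, in the same local transaction
        connectionProxy.appendUndoLog(undoLog);

        return result;
    }
}
```

Code Listing 3: Data source proxy implementation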
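To make the phase-1 bookkeeping concrete without a database, here is a minimal in-memory sketch. It assumes a single table modeled as a `Map` from primary key to row, and every name in it (`AtPhaseOneSketch`, `UndoRecord`, `executeUpdate`) is hypothetical, not a Seata class. It performs the same four steps as the proxy above: before image, business update, after image, undo record.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.UnaryOperator;

// Hypothetical in-memory model of AT phase 1 for one table (primary key -> row).
final class AtPhaseOneSketch {

    // One undo record per modified row: immutable copies of the row before and after the update.
    static final class UndoRecord {
        final String table;
        final long pk;
        final Map<String, Object> before;
        final Map<String, Object> after;

        UndoRecord(String table, long pk, Map<String, Object> before, Map<String, Object> after) {
            this.table = table;
            this.pk = pk;
            this.before = before;
            this.after = after;
        }
    }

    final Map<Long, Map<String, Object>> inventory = new HashMap<>();
    // Undo records buffered until the local transaction commits
    final List<UndoRecord> undoBuffer = new ArrayList<>();

    // Applies `change` to the row with primary key `pk`; returns the number of rows updated.
    int executeUpdate(long pk, UnaryOperator<Map<String, Object>> change) {
        Map<String, Object> row = inventory.get(pk);
        if (row == null) {
            return 0; // no matching row, nothing to record
        }
        Map<String, Object> before = Map.copyOf(row);                    // 1. before image
        Map<String, Object> updated = change.apply(new HashMap<>(row)); // 2. business update
        inventory.put(pk, updated);
        Map<String, Object> after = Map.copyOf(updated);                 // 3. after image
        undoBuffer.add(new UndoRecord("inventory", pk, before, after));  // 4. undo record
        return 1;
    }

    // Runs the equivalent of: UPDATE inventory SET stock = stock - 1, version = version + 1 (row 1)
    static AtPhaseOneSketch demo() {
        AtPhaseOneSketch s = new AtPhaseOneSketch();
        s.inventory.put(1L, new HashMap<>(Map.of("product_id", "1001", "stock", 100, "version", 1)));
        s.executeUpdate(1L, row -> {
            row.put("stock", (Integer) row.get("stock") - 1);
            row.put("version", (Integer) row.get("version") + 1);
            return row;
        });
        return s;
    }
}
```

Keeping the undo records in a buffer until commit reflects why phase 1 is safe: the business change and its undo information become durable together or not at all.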

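Phase 2: commit / rollback

```java
// Simplified sketch of the TC's phase-2 logic (not Seata's actual DefaultCoordinator).
// The TC only sends instructions; the undo work itself runs inside each RM (client).
// sendBranchCommit / sendBranchRollback are hypothetical helpers.
@Slf4j
public class DefaultCoordinator {

    // Global commit. In AT mode the branch data was already committed locally in
    // phase 1, so each RM only has to delete its undo_log rows (asynchronously).
    public void doGlobalCommit(GlobalSession globalSession) {
        // Get all branches in registration order
        List<BranchSession> branchSessions = globalSession.getSortedBranches();

        for (BranchSession branchSession : branchSessions) {
            try {
                // Ask the RM that owns the branch to commit it
                BranchStatus status = sendBranchCommit(branchSession);
                if (status != BranchStatus.PhaseTwo_Committed) {
                    // Commit failed: schedule a retry
                    globalSession.addRetryCommit(branchSession);
                }
            } catch (Exception e) {
                log.error("Failed to commit branch transaction", e);
                globalSession.addRetryCommit(branchSession);
            }
        }
    }

    // Global rollback: branches are rolled back in reverse registration order
    public void doGlobalRollback(GlobalSession globalSession) {
        List<BranchSession> branchSessions = globalSession.getReverseSortedBranches();

        for (BranchSession branchSession : branchSessions) {
            try {
                // On receiving the request, the RM does the following in ONE local transaction:
                //   1. load the undo_log row for (xid, branch_id)
                //   2. check the current data still equals the after image (dirty-write check)
                //   3. execute the compensating SQL built from the before image
                //   4. delete the undo_log row
                BranchStatus status = sendBranchRollback(branchSession);
                if (status != BranchStatus.PhaseTwo_Rollbacked) {
                    globalSession.addRetryRollback(branchSession);
                }
            } catch (Exception e) {
                log.error("Failed to roll back branch transaction", e);
                globalSession.addRetryRollback(branchSession);
            }
        }
    }
}
```

Code Listing 4: TC coordinator implementation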
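The ordering rules of phase 2 can be checked with a toy coordinator. This is a sketch under simplifying assumptions: branches are plain IDs, the RM's reply is simulated by a `Predicate`, and a failed instruction is simply queued for a later retry. `ToyCoordinator` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical toy TC: commits branches in registration order and rolls them back
// in reverse order. Branches whose RM fails the instruction are queued for retry
// instead of failing the whole global transaction.
final class ToyCoordinator {

    private final List<Long> branches = new ArrayList<>();
    private final Predicate<Long> rmSucceeds;        // simulated RM reply per branch
    final List<String> sent = new ArrayList<>();     // instructions, in the order sent
    final List<Long> retryQueue = new ArrayList<>(); // branches to retry later

    ToyCoordinator(Predicate<Long> rmSucceeds) {
        this.rmSucceeds = rmSucceeds;
    }

    void registerBranch(long branchId) {
        branches.add(branchId);
    }

    void globalCommit() {
        for (long id : branches) {
            send("commit", id);
        }
    }

    void globalRollback() {
        for (int i = branches.size() - 1; i >= 0; i--) {
            send("rollback", branches.get(i));
        }
    }

    private void send(String action, long branchId) {
        sent.add(action + ":" + branchId);
        if (!rmSucceeds.test(branchId)) {
            retryQueue.add(branchId);
        }
    }
}
```

Rolling back in reverse order means later branches, which may have built on earlier ones, are undone first.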

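2.3 Undo Log design

The undo log is the core of AT mode: it is what lets each branch commit locally in phase 1 and still be compensated in phase 2.

```sql
-- undo_log table structure. It must live in the same database as the business
-- tables, because it is written in the same local transaction.
-- Newer Seata releases ship a slightly different DDL (for example without the id
-- column); prefer the script bundled with your Seata version.
CREATE TABLE undo_log (
    id            BIGINT(20)   NOT NULL AUTO_INCREMENT,
    branch_id     BIGINT(20)   NOT NULL COMMENT 'branch transaction ID',
    xid           VARCHAR(100) NOT NULL COMMENT 'global transaction ID',
    context       VARCHAR(128) NOT NULL COMMENT 'context (e.g. serialization format)',
    rollback_info LONGBLOB     NOT NULL COMMENT 'rollback information',
    log_status    INT(11)      NOT NULL COMMENT '0: normal, 1: defensive (global transaction already finished)',
    log_created   DATETIME     NOT NULL COMMENT 'creation time',
    log_modified  DATETIME     NOT NULL COMMENT 'modification time',
    PRIMARY KEY (id),
    UNIQUE KEY ux_undo_log (xid, branch_id)
) ENGINE = InnoDB AUTO_INCREMENT = 1 DEFAULT CHARSET = utf8 COMMENT ='AT mode undo log table';
```

Code Listing 5: Undo Log table structure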

Example undo log content (simplified for readability; the stored rollback_info is serialized with the configured log-serialization, e.g. jackson, and its exact layout differs). The `type` values are java.sql.Types codes: 4 = INTEGER, 12 = VARCHAR.

```json
{
  "branchId": 123456789,
  "xid": "192.168.1.100:8091:1234567890",
  "sqlType": "UPDATE",
  "tableName": "inventory",
  "beforeImage": {
    "rows": [
      {
        "fields": [
          {"name": "id", "type": 4, "value": 1},
          {"name": "product_id", "type": 12, "value": "1001"},
          {"name": "stock", "type": 4, "value": 100},
          {"name": "version", "type": 4, "value": 1}
        ]
      }
    ]
  },
  "afterImage": {
    "rows": [
      {
        "fields": [
          {"name": "id", "type": 4, "value": 1},
          {"name": "product_id", "type": 12, "value": "1001"},
          {"name": "stock", "type": 4, "value": 99},
          {"name": "version", "type": 4, "value": 2}
        ]
      }
    ]
  },
  "sql": "UPDATE inventory SET stock = stock - 1, version = version + 1 WHERE product_id = ?"
}
```

Code Listing 6: Undo Log data structure
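The phase-2 rollback an RM performs with such a record can be sketched as follows, assuming rows are modeled as maps; `UndoRollbackSketch` and `RollbackResult` are hypothetical names. Before restoring the before image it compares the current row with the after image: if another writer changed the row in the meantime (for example a local transaction that bypassed the global lock), restoring blindly would destroy that change, so the rollback stops and needs manual handling. This mirrors the intent of Seata's `data-validation` option rather than its exact code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical undo step for one row, with a dirty-write check before compensating.
final class UndoRollbackSketch {

    enum RollbackResult { ROLLED_BACK, ALREADY_CLEAN, DIRTY_WRITE }

    static RollbackResult rollback(Map<Long, Map<String, Object>> table, long pk,
                                   Map<String, Object> before, Map<String, Object> after) {
        Map<String, Object> current = table.get(pk);
        if (before.equals(current)) {
            return RollbackResult.ALREADY_CLEAN; // row already holds the before image
        }
        if (!after.equals(current)) {
            // Changed (or deleted) by someone else since phase 1: do not overwrite
            return RollbackResult.DIRTY_WRITE;
        }
        // Compensating write: put the before image back
        table.put(pk, new HashMap<>(before));
        return RollbackResult.ROLLED_BACK;
    }
}
```

A `DIRTY_WRITE` result is the case where automatic rollback is impossible; the branch keeps failing its rollback until someone repairs the data.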

3. Complete hands-on example

3.1 Environment setup

```
# Seata Server configuration in the registry.conf + file.conf format used before
# Seata 1.5 (from 1.5 on, the server is configured in conf/application.yml)

# registry.conf
registry {
  type = "nacos"
  nacos {
    application = "seata-server"
    serverAddr = "localhost:8848"
    namespace = ""
    cluster = "default"
  }
}

config {
  type = "nacos"
  nacos {
    serverAddr = "localhost:8848"
    namespace = ""
    group = "SEATA_GROUP"
  }
}

# file.conf (the file-based config source; with config.type = "nacos" as above,
# these keys are expected in Nacos instead)
service {
  vgroupMapping.my_test_tx_group = "default"
  default.grouplist = "127.0.0.1:8091"
  enableDegrade = false
  disableGlobalTransaction = false
}

store {
  mode = "db"
  db {
    datasource = "druid"
    dbType = "mysql"
    driverClassName = "com.mysql.cj.jdbc.Driver"
    url = "jdbc:mysql://localhost:3306/seata"
    user = "root"
    password = "password"
  }
}
```

Code Listing 7: Seata Server configuration

```xml
<!-- Spring Boot dependencies -->
<dependency>
    <groupId>com.alibaba.cloud</groupId>
    <artifactId>spring-cloud-starter-alibaba-seata</artifactId>
    <version>2021.1</version>
</dependency>
<dependency>
    <groupId>io.seata</groupId>
    <artifactId>seata-spring-boot-starter</artifactId>
    <version>1.5.2</version>
</dependency>
```

Code Listing 9: Maven dependencies

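```yaml
# application.yml
spring:
  cloud:
    alibaba:
      seata:
        # Older Spring Cloud Alibaba location of the group name; redundant
        # when seata.tx-service-group below is set
        tx-service-group: my_test_tx_group

seata:
  enabled: true
  application-id: order-service
  tx-service-group: my_test_tx_group
  enable-auto-data-source-proxy: true
  use-jdk-proxy: false
  config:
    # With the Nacos config center, service.vgroupMapping.my_test_tx_group=default
    # must be published in Nacos (group SEATA_GROUP), or the client finds no TC
    type: nacos
    nacos:
      namespace: ""
      server-addr: localhost:8848
      group: SEATA_GROUP
  registry:
    type: nacos
    nacos:
      application: seata-server
      server-addr: localhost:8848
      namespace: ""
```

Code Listing 10: Client configuration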
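The client finds a TC in two hops: its `tx-service-group` is mapped to a cluster name through `vgroupMapping`, and the cluster name is resolved to server addresses (from `grouplist` in file mode, or from the registry such as Nacos). A minimal sketch of that lookup, using the values from the configuration above; `TcAddressResolver` is a hypothetical name, and the real client asks the registry rather than reading a static map.

```java
import java.util.List;
import java.util.Map;

// Hypothetical two-step TC lookup: tx-service-group -> cluster -> TC addresses.
final class TcAddressResolver {

    private final Map<String, String> vgroupMapping;          // tx-service-group -> cluster
    private final Map<String, List<String>> clusterAddresses; // cluster -> TC addresses

    TcAddressResolver(Map<String, String> vgroupMapping, Map<String, List<String>> clusterAddresses) {
        this.vgroupMapping = vgroupMapping;
        this.clusterAddresses = clusterAddresses;
    }

    List<String> resolve(String txServiceGroup) {
        String cluster = vgroupMapping.get(txServiceGroup);
        if (cluster == null) {
            // Missing mapping: the client cannot find any TC for this group
            throw new IllegalStateException("no vgroupMapping for " + txServiceGroup);
        }
        List<String> addresses = clusterAddresses.getOrDefault(cluster, List.of());
        if (addresses.isEmpty()) {
            throw new IllegalStateException("no TC address for cluster " + cluster);
        }
        return addresses;
    }
}
```

Both hops have to be configured consistently; a typo in either the group name or the cluster name surfaces as "no available TC" at startup or on the first global transaction.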

3.2 E-commerce order example

```java
// Order service.
// InventoryService and AccountService are local beans here for brevity. In a
// microservice deployment they would be remote calls (e.g. OpenFeign), and the
// Spring Cloud Alibaba Seata integration passes the XID along with each call.
@Service
@Slf4j
public class OrderService {

    @Autowired
    private OrderMapper orderMapper;

    @Autowired
    private InventoryService inventoryService;

    @Autowired
    private AccountService accountService;

    // Create an order (AT mode)
    @GlobalTransactional(timeoutMills = 30000, name = "createOrder")
    public OrderDTO createOrder(OrderRequest request) {
        log.info("=== Start creating order, xid: {}", RootContext.getXID());

        // 1. Create the order
        Order order = new Order();
        order.setOrderNo(generateOrderNo());
        order.setUserId(request.getUserId());
        order.setProductId(request.getProductId());
        order.setAmount(request.getAmount());
        order.setStatus(OrderStatus.CREATED);
        orderMapper.insert(order);
        log.info("Order created, order no: {}", order.getOrderNo());

        // 2. Deduct stock
        inventoryService.deductStock(request.getProductId(), request.getQuantity());

        // 3. Deduct balance
        accountService.deductBalance(request.getUserId(), request.getAmount());

        // 4. Update the order status
        order.setStatus(OrderStatus.PAID);
        orderMapper.updateById(order);

        log.info("Order completed, order no: {}", order.getOrderNo());
        return convertToDTO(order);
    }

    // Simulate an exception to test rollback
    @GlobalTransactional(timeoutMills = 30000, name = "createOrderWithException")
    public OrderDTO createOrderWithException(OrderRequest request) {
        log.info("=== Start creating order (will fail), xid: {}", RootContext.getXID());

        // 1. Create the order
        Order order = new Order();
        order.setOrderNo(generateOrderNo());
        order.setUserId(request.getUserId());
        order.setProductId(request.getProductId());
        order.setAmount(request.getAmount());
        order.setStatus(OrderStatus.CREATED);
        orderMapper.insert(order);
        log.info("Order created, order no: {}", order.getOrderNo());

        // 2. Deduct stock
        inventoryService.deductStock(request.getProductId(), request.getQuantity());

        // 3. Throw to trigger a global rollback
        //    (wrapping it in if (true) keeps the statement below compilable)
        if (true) {
            throw new RuntimeException("Simulated business exception, triggers rollback");
        }

        // 4. Deduct balance (never reached)
        accountService.deductBalance(request.getUserId(), request.getAmount());
        return convertToDTO(order);
    }
}

// Inventory service
@Service
@Slf4j
public class InventoryService {

    @Autowired
    private InventoryMapper inventoryMapper;

    public void deductStock(Long productId, Integer quantity) {
        log.info("=== Start deducting stock, xid: {}", RootContext.getXID());
        int result = inventoryMapper.deductStock(productId, quantity);
        if (result <= 0) {
            // 0 rows updated: not enough stock; the exception rolls back the global transaction
            throw new RuntimeException("Insufficient stock");
        }
        log.info("Stock deducted, product ID: {}, quantity: {}", productId, quantity);
    }
}

// Inventory mapper (the stock >= #{quantity} guard prevents overselling)
@Mapper
public interface InventoryMapper {

    @Update("UPDATE inventory SET stock = stock - #{quantity}, " +
            "version = version + 1 " +
            "WHERE product_id = #{productId} AND stock >= #{quantity}")
    int deductStock(@Param("productId") Long productId,
                    @Param("quantity") Integer quantity);
}

// Account service
@Service
@Slf4j
public class AccountService {

    @Autowired
    private AccountMapper accountMapper;

    public void deductBalance(Long userId, BigDecimal amount) {
        log.info("=== Start deducting balance, xid: {}", RootContext.getXID());
        int result = accountMapper.deductBalance(userId, amount);
        if (result <= 0) {
            throw new RuntimeException("Insufficient balance");
        }
        log.info("Balance deducted, user ID: {}, amount: {}", userId, amount);
    }
}

// Account mapper (the balance >= #{amount} guard prevents overdrafts)
@Mapper
public interface AccountMapper {

    @Update("UPDATE account SET balance = balance - #{amount}, " +
            "version = version + 1 " +
            "WHERE user_id = #{userId} AND balance >= #{amount}")
    int deductBalance(@Param("userId") Long userId,
                      @Param("amount") BigDecimal amount);
}
```

Code Listing 11: E-commerce order service implementation
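When inventory and account live in other services, the XID has to travel with every call. Seata's integrations do this by putting it in a request header on the caller side (the key is `TX_XID`, the `RootContext.KEY_XID` constant) and binding it on the callee side. The sketch below reproduces that idea with a `ThreadLocal` holder and a plain header map; `XidContext`, `outgoingHeaders` and `handleIncoming` are hypothetical names, not Seata classes.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical XID propagation: caller copies the XID into headers, callee binds it.
final class XidContext {

    static final String XID_HEADER = "TX_XID"; // same key name Seata uses for the XID
    private static final ThreadLocal<String> CURRENT = new ThreadLocal<>();

    static void bind(String xid) { CURRENT.set(xid); }
    static void unbind() { CURRENT.remove(); }
    static String getXid() { return CURRENT.get(); }

    // Caller side: add the current XID (if any) to the outgoing request headers
    static Map<String, String> outgoingHeaders() {
        Map<String, String> headers = new HashMap<>();
        String xid = getXid();
        if (xid != null) {
            headers.put(XID_HEADER, xid);
        }
        return headers;
    }

    // Callee side: bind the XID while the handler runs, then always unbind
    static <T> T handleIncoming(Map<String, String> headers, Supplier<T> handler) {
        String xid = headers.get(XID_HEADER);
        if (xid != null) {
            bind(xid);
        }
        try {
            return handler.get();
        } finally {
            if (xid != null) {
                unbind();
            }
        }
    }
}
```

The unbind in `finally` matters: server threads are pooled, and a stale XID left on a thread would silently enlist the next unrelated request in someone else's global transaction.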

3.3 Testing and verification

```java
@SpringBootTest
@Slf4j
class OrderServiceTest {

    @Autowired
    private OrderService orderService;

    @Autowired
    private OrderMapper orderMapper;

    @Autowired
    private InventoryMapper inventoryMapper;

    @Autowired
    private AccountMapper accountMapper;

    private OrderRequest buildRequest() {
        OrderRequest request = new OrderRequest();
        request.setUserId(1001L);
        request.setProductId(2001L);
        request.setQuantity(2);
        request.setAmount(new BigDecimal("199.99"));
        return request;
    }

    @Test
    void testCreateOrderSuccess() {
        OrderRequest request = buildRequest();
        // Snapshot stock and balance first, so the assertions do not depend on test order
        int stockBefore = inventoryMapper.selectByProductId(request.getProductId()).getStock();
        BigDecimal balanceBefore = accountMapper.selectByUserId(request.getUserId()).getBalance();

        OrderDTO order = orderService.createOrder(request);

        assertNotNull(order);
        assertEquals(OrderStatus.PAID, order.getStatus());

        // Verify data consistency: the order exists and is paid
        Order saved = orderMapper.selectByOrderNo(order.getOrderNo());
        assertNotNull(saved);
        assertEquals(OrderStatus.PAID, saved.getStatus());

        // Stock went down by the ordered quantity
        int stockAfter = inventoryMapper.selectByProductId(request.getProductId()).getStock();
        assertEquals(stockBefore - request.getQuantity(), stockAfter);

        // Balance went down by the order amount (compareTo ignores BigDecimal scale)
        BigDecimal balanceAfter = accountMapper.selectByUserId(request.getUserId()).getBalance();
        assertEquals(0, balanceBefore.subtract(request.getAmount()).compareTo(balanceAfter));
    }

    @Test
    void testCreateOrderRollback() {
        OrderRequest request = buildRequest();
        int stockBefore = inventoryMapper.selectByProductId(request.getProductId()).getStock();
        BigDecimal balanceBefore = accountMapper.selectByUserId(request.getUserId()).getBalance();
        // countByUserIdAndProductId is an assumed mapper method
        long ordersBefore = orderMapper.countByUserIdAndProductId(
                request.getUserId(), request.getProductId());

        // Must throw
        assertThrows(RuntimeException.class,
                () -> orderService.createOrderWithException(request));

        // Verify the rollback: no new order, stock and balance unchanged
        assertEquals(ordersBefore, orderMapper.countByUserIdAndProductId(
                request.getUserId(), request.getProductId()));
        assertEquals(stockBefore,
                (int) inventoryMapper.selectByProductId(request.getProductId()).getStock());
        assertEquals(0, balanceBefore.compareTo(
                accountMapper.selectByUserId(request.getUserId()).getBalance()));
    }
}
```

Code Listing 12: Unit tests

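4. Core mechanisms in depth

4.1 Global lock implementation

The global lock is what gives AT mode write isolation: before committing its local transaction in phase 1, each branch must obtain a global lock on every row it modified, and that lock is held until phase 2 finishes. The code below illustrates the idea with a database table:

```java
// Simplified illustration of how a global lock works; not Seata's implementation.
// In Seata the TC holds global locks in its lock store (lock_table when the store
// mode is db) and grants them when an RM registers a branch.
@Service
public class GlobalLockManager {

    @Autowired
    private JdbcTemplate jdbcTemplate;

    // Acquire the global lock on one row
    public boolean acquireLock(String tableName, String pkValue, String xid) {
        // Already held by this global transaction: re-entrant
        if (checkLockExists(tableName, pkValue, xid)) {
            return true;
        }

        // Held by another global transaction: wait and retry
        if (checkLockConflict(tableName, pkValue, xid)) {
            return waitForLock(tableName, pkValue, xid);
        }

        // Take the lock. doAcquireLock should insert into a table with a unique key
        // on (table_name, pk), so two concurrent acquirers cannot both succeed.
        return doAcquireLock(tableName, pkValue, xid);
    }

    private boolean checkLockConflict(String tableName, String pkValue, String xid) {
        // No time-based expiry: treating an old lock as free would break isolation
        String sql = "SELECT xid FROM global_lock " +
                "WHERE table_name = ? AND pk = ? AND xid != ?";
        try {
            List<String> lockedXids = jdbcTemplate.queryForList(
                    sql, String.class, tableName, pkValue, xid);
            return !lockedXids.isEmpty();
        } catch (DataAccessException e) {
            // Deadlock detection
            if (isDeadLock(e)) {
                throw new LockConflictException("Deadlock detected", e);
            }
            // A failed check must never be treated as "no conflict"
            throw e;
        }
    }

    // Lock wait strategy: exponential backoff, 3 retries (100, 200, 400 ms)
    private boolean waitForLock(String tableName, String pkValue, String xid) {
        int retryCount = 0;
        int maxRetry = 3;
        long waitTime = 100; // initial wait: 100 ms

        while (retryCount < maxRetry) {
            try {
                Thread.sleep(waitTime);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }

            if (!checkLockConflict(tableName, pkValue, xid)) {
                return doAcquireLock(tableName, pkValue, xid);
            }

            retryCount++;
            waitTime = waitTime * 2; // exponential backoff
        }

        throw new LockTimeoutException("Timed out acquiring global lock");
    }
}
```

Code Listing 13: Global lock implementation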
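For experimenting with these locking rules without a database, the same idea fits in a map from row key to owning XID. This is a hypothetical sketch (`InMemoryGlobalLocks`), not how the TC stores locks; it shows three properties worth having: re-entrancy for the same XID, all-or-nothing acquisition of a branch's rows, and release of everything a transaction holds once phase 2 ends.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory global lock table: row key "table:pk" -> owning xid.
final class InMemoryGlobalLocks {

    private final Map<String, String> owners = new HashMap<>();

    // All-or-nothing: locks every row for `xid`, or none if any row belongs to another xid.
    synchronized boolean acquire(String xid, String table, List<String> pks) {
        List<String> keys = new ArrayList<>();
        for (String pk : pks) {
            String key = table + ":" + pk;
            String owner = owners.get(key);
            if (owner != null && !owner.equals(xid)) {
                return false; // conflict: nothing was taken, the caller may retry later
            }
            keys.add(key);
        }
        for (String key : keys) {
            owners.put(key, xid); // re-entrant: taking a row we already own is a no-op
        }
        return true;
    }

    // Phase 2 finished: release every lock held by this xid
    synchronized void releaseAll(String xid) {
        owners.values().removeIf(xid::equals);
    }

    synchronized int size() {
        return owners.size();
    }
}
```

All-or-nothing acquisition avoids a branch sitting on half of its rows while waiting for the other half, which is a common way for two transactions to block each other.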

4.2 SQL parser

SQL parsing is at the core of AT mode: to build before and after images the proxy must know each statement's type, target table, WHERE condition and updated columns. Seata's default parser is built on Druid, and statements it cannot parse can fail under the proxy:

```java
// Simplified SQL parser sketch (not Seata's recognizer classes)
@Component
public class SQLParser {

    public SQLRecognizer parse(String sql, String dbType) {
        // Determine the SQL type
        SQLType sqlType = getSQLType(sql);

        switch (sqlType) {
            case INSERT:
                return parseInsert(sql, dbType);
            case UPDATE:
                return parseUpdate(sql, dbType);
            case DELETE:
                return parseDelete(sql, dbType);
            case SELECT_FOR_UPDATE:
                return parseSelectForUpdate(sql, dbType);
            default:
                // Plain SELECTs and other statements need no undo log
                return null;
        }
    }

    private UpdateRecognizer parseUpdate(String sql, String dbType) {
        UpdateRecognizer recognizer = new UpdateRecognizer();
        // Table name
        recognizer.setTableName(parseTableName(sql, "UPDATE", dbType));
        // WHERE condition (still containing its ? placeholders)
        recognizer.setWhereCondition(parseWhereCondition(sql, dbType));
        // Updated columns
        recognizer.setUpdateColumns(parseUpdateColumns(sql));
        return recognizer;
    }

    // Build the before-image query. The WHERE clause keeps its ? placeholders,
    // so the caller binds the same parameters (params) as the original UPDATE.
    public String generateBeforeImageSQL(SQLRecognizer recognizer, List<Object> params) {
        if (recognizer instanceof UpdateRecognizer) {
            UpdateRecognizer updateRecognizer = (UpdateRecognizer) recognizer;

            // Seata selects only the primary key and the updated columns when
            // only-care-update-columns is true; SELECT * keeps the sketch short
            StringBuilder sql = new StringBuilder("SELECT * FROM ")
                    .append(updateRecognizer.getTableName());

            if (StringUtils.isNotBlank(updateRecognizer.getWhereCondition())) {
                sql.append(" WHERE ").append(updateRecognizer.getWhereCondition());
            }

            // Always lock the rows being read, with or without a WHERE clause
            sql.append(" FOR UPDATE");
            return sql.toString();
        }
        return null;
    }
}
```

Code Listing 14: SQL parser
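The before-image rule for a simple single-table UPDATE can be tried out with a toy regex-based builder. This is only a sketch: `BeforeImageSqlBuilder` is hypothetical, and a regular expression cannot cope with quoted strings, joins, subqueries or comments, which is exactly why real implementations parse SQL into a syntax tree.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy recognizer for "UPDATE <table> SET ... [WHERE ...]" on a single table.
final class BeforeImageSqlBuilder {

    private static final Pattern UPDATE = Pattern.compile(
            "^\\s*UPDATE\\s+(\\w+)\\s+SET\\s+.+?(?:\\s+WHERE\\s+(.+))?$",
            Pattern.CASE_INSENSITIVE | Pattern.DOTALL);

    // Returns the SELECT ... FOR UPDATE that reads (and locks) the rows the UPDATE will change
    static String build(String updateSql) {
        Matcher m = UPDATE.matcher(updateSql.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("not a simple UPDATE: " + updateSql);
        }
        StringBuilder sql = new StringBuilder("SELECT * FROM ").append(m.group(1));
        if (m.group(2) != null) {
            // Keep the ? placeholders: the UPDATE's parameters are bound to this query too
            sql.append(" WHERE ").append(m.group(2).trim());
        }
        return sql.append(" FOR UPDATE").toString();
    }
}
```

For example, `UPDATE inventory SET stock = stock - ?, version = version + 1 WHERE product_id = ? AND stock >= ?` becomes `SELECT * FROM inventory WHERE product_id = ? AND stock >= ? FOR UPDATE`.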

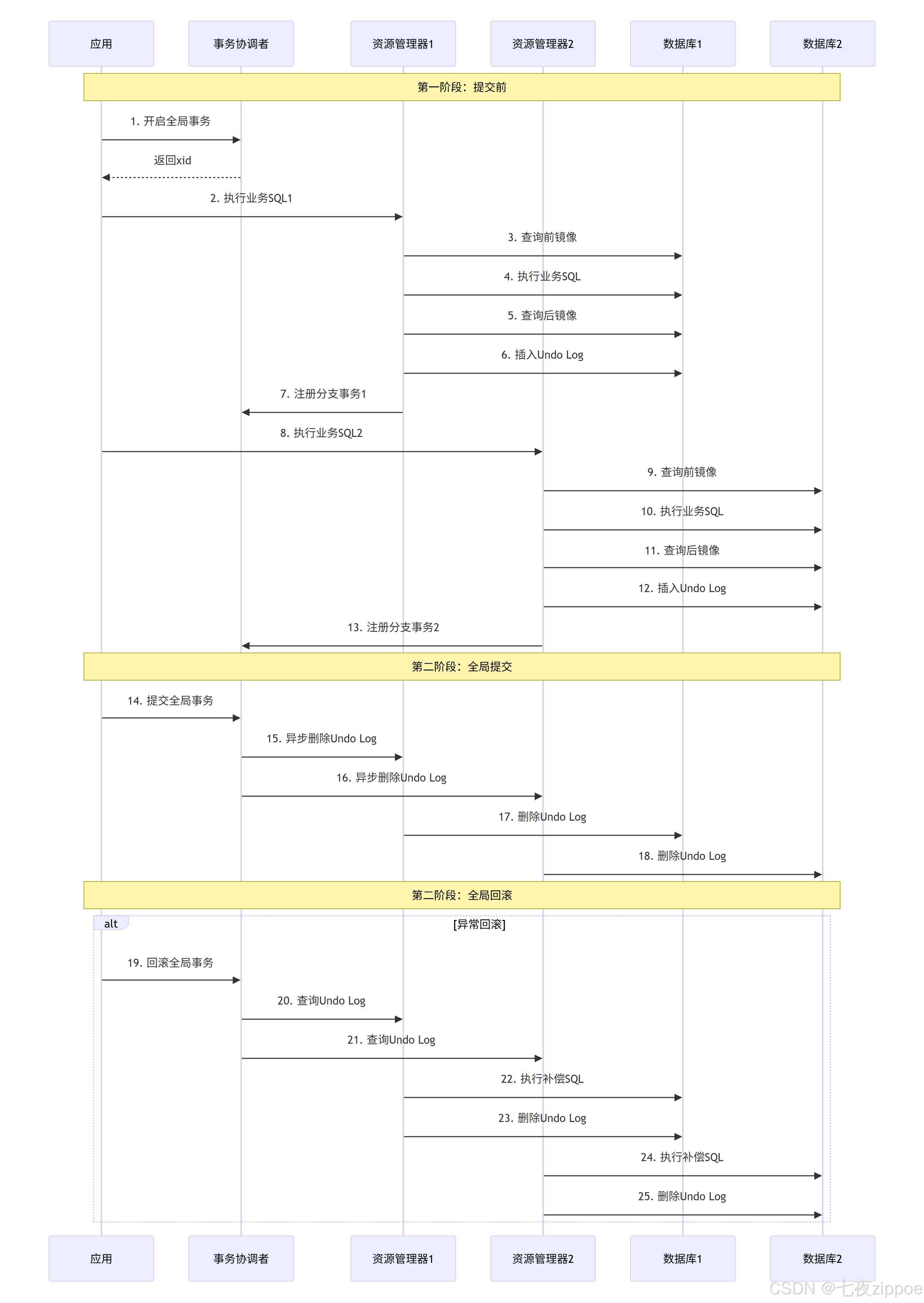

4.3 Two-phase commit in detail

Figure 4: Seata AT two-phase commit in detail
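The two paths through the flow (commit and rollback) can also be written down as a small state machine over the global transaction's status. This is a simplified subset: Seata's real `GlobalStatus` enum also has retrying, timeout and failure states, and the class and transition table below are a sketch, not Seata code.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Simplified subset of a global transaction's life cycle.
final class GlobalTxStateMachine {

    enum Status { BEGIN, COMMITTING, ASYNC_COMMITTING, COMMITTED, ROLLBACKING, ROLLBACKED }

    // Phase 1 happens while the transaction is BEGIN. Phase 2 either commits
    // (asynchronously when every branch is AT) or rolls back.
    private static final Map<Status, Set<Status>> NEXT = Map.of(
            Status.BEGIN, EnumSet.of(Status.COMMITTING, Status.ASYNC_COMMITTING, Status.ROLLBACKING),
            Status.COMMITTING, EnumSet.of(Status.COMMITTED),
            Status.ASYNC_COMMITTING, EnumSet.of(Status.COMMITTED),
            Status.COMMITTED, EnumSet.noneOf(Status.class),
            Status.ROLLBACKING, EnumSet.of(Status.ROLLBACKED),
            Status.ROLLBACKED, EnumSet.noneOf(Status.class));

    // True if the path starts at BEGIN and every step is an allowed transition
    static boolean isValidPath(List<Status> path) {
        if (path.isEmpty() || path.get(0) != Status.BEGIN) {
            return false;
        }
        for (int i = 1; i < path.size(); i++) {
            if (!NEXT.get(path.get(i - 1)).contains(path.get(i))) {
                return false;
            }
        }
        return true;
    }
}
```

The important asymmetry: commit can finish asynchronously because each branch's data is already durable, while rollback must actually run every branch's undo before the transaction is done.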

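5. Enterprise-grade examples

5.1 Complete e-commerce order system

```java
// Enhanced order service
// (orderMapper, inventoryService, accountService, idempotentService, eventPublisher,
//  dataSourceManager and the helper methods are assumed; declarations omitted)
@Service
@Slf4j
public class EnhancedOrderService {

    // Create an order, with retries.
    // Whether each @Retryable attempt starts a fresh global transaction depends on the
    // order in which the Spring Retry and Seata interceptors wrap this method; check it
    // before relying on retries.
    @GlobalTransactional(timeoutMills = 60000,
            name = "enhancedCreateOrder",
            rollbackFor = Exception.class)
    @Retryable(value = Exception.class,
            maxAttempts = 3,
            backoff = @Backoff(delay = 1000))
    public OrderDTO createOrderWithRetry(OrderRequest request) {
        log.info("Creating order, user: {}, product: {}",
                request.getUserId(), request.getProductId());

        // 0. Validate parameters
        validateRequest(request);

        // 1. Idempotency check. The key is released in finally, so this only blocks
        //    concurrent duplicates; rejecting later replays needs a persisted marker
        //    (for example a unique request ID column on the order table).
        String idempotentKey = generateIdempotentKey(request);
        if (!idempotentService.tryLock(idempotentKey, 5000)) {
            throw new IdempotentException("Duplicate request");
        }

        try {
            // 2. Create the order
            Order order = createOrderInternal(request);

            // 3. Deduct stock
            inventoryService.deductStockWithLock(
                    request.getProductId(), request.getQuantity(), order.getOrderNo());

            // 4. Deduct balance
            accountService.deductBalanceWithLock(
                    request.getUserId(), request.getAmount(), order.getOrderNo());

            // 5. Update the order status
            order.setStatus(OrderStatus.PAID);
            orderMapper.updateById(order);

            // 6. Publish the order-created event. This runs before the global commit,
            //    so consumers may see an order that is later rolled back.
            eventPublisher.publishOrderCreated(order);

            log.info("Order created, order no: {}", order.getOrderNo());
            return convertToDTO(order);
        } finally {
            // Release the idempotency lock
            idempotentService.unlock(idempotentKey);
        }
    }

    // Sharding support.
    // The sharding data source must itself be wrapped by Seata's DataSourceProxy;
    // otherwise statements on this connection write no undo log and are not part
    // of the global transaction. (ShardingSphere also ships its own Seata AT integration.)
    @GlobalTransactional(timeoutMills = 30000, name = "shardingCreateOrder")
    public OrderDTO createOrderWithSharding(OrderRequest request) throws SQLException {
        // Compute the sharding key
        Long shardingKey = calculateShardingKey(request.getUserId());

        // Set the sharding context
        ShardingContext.setShardingKey(shardingKey);

        try {
            // Create the order
            Order order = new Order();
            order.setOrderNo(generateOrderNo());
            order.setUserId(request.getUserId());
            order.setShardingKey(shardingKey);
            // ... other fields

            // Use the data source of this shard
            ShardingDataSource shardingDataSource = dataSourceManager.getDataSource(shardingKey);
            try (Connection conn = shardingDataSource.getConnection()) {
                // Execute on the shard's connection
                return createOrderInConnection(conn, order, request);
            }
        } finally {
            // Clear the sharding context
            ShardingContext.clear();
        }
    }

    // "Splitting" a big transaction.
    // All batches still run inside ONE global transaction, so every global lock is
    // held until the end; batching only keeps each local transaction small.
    @GlobalTransactional(timeoutMills = 120000, name = "bigTransaction")
    public void processBigTransaction(BigOrderRequest request) {
        // Step 1: pre-processing
        preProcess(request);

        // Step 2: process orders in batches of 100
        List<OrderBatch> batches = splitToBatches(request.getItems(), 100);
        for (OrderBatch batch : batches) {
            processBatch(batch);
        }

        // Step 3: post-processing
        postProcess(request);
    }

    // Batch processing. It runs inside the caller's global transaction, so it needs no
    // @GlobalLock (that annotation is for local transactions running OUTSIDE a global
    // one, and a this.processBatch() call would bypass the Spring proxy anyway).
    public void processBatch(OrderBatch batch) {
        // Batch-insert orders
        orderMapper.batchInsert(batch.getOrders());

        // Batch-deduct stock
        inventoryService.batchDeductStock(batch.getItems());

        // Batch-deduct balances
        accountService.batchDeductBalance(batch.getAccounts());
    }
}
```

Code Listing 15: Enhanced order service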
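The `idempotentService.tryLock(key, ttl)` / `unlock(key)` pair used above is not shown. A minimal in-memory stand-in, assuming TTL semantics similar to Redis `SET key value NX PX ttl`, could look like this; `IdempotencyGuard` is a hypothetical class with an injectable clock so that expiry can be tested.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.LongSupplier;

// Hypothetical single-node stand-in for idempotentService.tryLock/unlock.
final class IdempotencyGuard {

    private final ConcurrentMap<String, Long> expiresAt = new ConcurrentHashMap<>();
    private final LongSupplier clock; // current time in millis; injectable for tests

    IdempotencyGuard(LongSupplier clock) {
        this.clock = clock;
    }

    // Succeeds only if the key is free or the previous holder's TTL has expired
    boolean tryLock(String key, long ttlMillis) {
        long now = clock.getAsLong();
        boolean[] acquired = {false};
        // compute() runs atomically per key, so two callers cannot both acquire
        expiresAt.compute(key, (k, old) -> {
            if (old == null || old <= now) {
                acquired[0] = true;
                return now + ttlMillis;
            }
            return old;
        });
        return acquired[0];
    }

    void unlock(String key) {
        expiresAt.remove(key);
    }
}
```

The TTL is a safety net for crashed holders; across several service instances the same logic has to live in a shared store such as Redis rather than in process memory.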

5.2 Inventory service optimization

```java
// Optimized inventory service
// (jdbcTemplate, inventoryMapper, executorService, productService, cacheService,
//  alertService and the helper methods are assumed; declarations omitted)
@Service
@Slf4j
public class OptimizedInventoryService {

    // Deduct stock with optimistic version control
    public boolean deductStockWithVersion(Long productId, Integer quantity,
                                          Integer expectedVersion) {
        String sql = "UPDATE inventory SET " +
                "stock = stock - ?, " +
                "version = version + 1, " +
                "gmt_modified = NOW() " +
                "WHERE product_id = ? AND version = ? AND stock >= ?";

        int rows = jdbcTemplate.update(sql, quantity, productId, expectedVersion, quantity);

        if (rows > 0) {
            log.info("Stock deducted, product: {}, quantity: {}, version: {}",
                    productId, quantity, expectedVersion);
            return true;
        }
        // Version conflict or insufficient stock
        log.warn("Stock deduction failed, product: {}, the version may have changed", productId);
        return false;
    }

    // Batch stock deduction
    @GlobalTransactional(timeoutMills = 30000, name = "batchDeductStock")
    public void batchDeductStock(List<InventoryDeductRequest> requests) {
        if (requests == null || requests.isEmpty()) {
            return;
        }

        // Group by product
        Map<Long, List<InventoryDeductRequest>> groupByProduct = requests.stream()
                .collect(Collectors.groupingBy(InventoryDeductRequest::getProductId));

        // The XID lives in a ThreadLocal, so capture it here and bind it in each worker
        // thread; otherwise the async SQL would run outside the global transaction
        String xid = RootContext.getXID();

        // Process products in parallel
        List<CompletableFuture<Void>> futures = new ArrayList<>();
        for (Map.Entry<Long, List<InventoryDeductRequest>> entry : groupByProduct.entrySet()) {
            futures.add(CompletableFuture.runAsync(() -> {
                RootContext.bind(xid);
                try {
                    // Total quantity for this product
                    int totalQuantity = entry.getValue().stream()
                            .mapToInt(InventoryDeductRequest::getQuantity)
                            .sum();
                    // Deduct in one statement
                    batchDeductStockInternal(entry.getKey(), totalQuantity);
                } finally {
                    RootContext.unbind();
                }
            }, executorService));
        }

        // Wait for all tasks; a failure is rethrown so the global transaction rolls back
        try {
            CompletableFuture.allOf(futures.toArray(new CompletableFuture[0])).join();
        } catch (CompletionException ex) {
            log.error("Batch stock deduction failed", ex);
            throw new RuntimeException("Batch stock deduction failed", ex.getCause());
        }
    }

    // Cache warm-up.
    // Plain reads can see changes of global transactions that have not finished yet
    // (AT's default global isolation is Read Uncommitted), so treat cached stock as approximate.
    @Scheduled(fixedDelay = 60000) // every minute
    public void warmUpInventoryCache() {
        // Top 100 hot products
        List<HotProduct> hotProducts = productService.getHotProducts(100);

        for (HotProduct product : hotProducts) {
            try {
                // Load the stock into the cache
                Inventory inventory = inventoryMapper.selectByProductId(product.getProductId());
                if (inventory != null) {
                    cacheService.put("inventory:" + product.getProductId(),
                            inventory,
                            300); // cache for 5 minutes
                }
            } catch (Exception e) {
                log.error("Failed to warm up the stock cache, product: {}",
                        product.getProductId(), e);
            }
        }
    }

    // Inventory monitoring
    @Scheduled(fixedDelay = 30000) // every 30 seconds
    public void monitorInventory() {
        // Products whose stock is below 10
        List<InventoryLowStock> lowStocks = inventoryMapper.selectLowStock(10);

        for (InventoryLowStock lowStock : lowStocks) {
            // Send an alert
            alertService.sendLowStockAlert(lowStock.getProductId(), lowStock.getStock());

            // Replenish automatically
            if (lowStock.getStock() <= 5) {
                replenishInventory(lowStock.getProductId());
            }
        }
    }
}
```

Code Listing 16: Optimized inventory service
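Because the XID is kept in a `ThreadLocal`, work submitted to an executor does not see it unless it is handed over explicitly (Seata's `RootContext.bind` and `RootContext.unbind` exist for this). The sketch below demonstrates the effect with a hypothetical `TxContext` holder standing in for `RootContext`: the same task sees no XID when submitted as-is, and the caller's XID when wrapped with `withCurrentXid`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Supplier;

// Hypothetical ThreadLocal holder standing in for Seata's RootContext.
final class TxContext {

    private static final ThreadLocal<String> XID = new ThreadLocal<>();

    static void bind(String xid) { XID.set(xid); }
    static void unbind() { XID.remove(); }
    static String getXid() { return XID.get(); }

    // Wraps a task so it runs with the submitting thread's XID bound
    static <T> Supplier<T> withCurrentXid(Supplier<T> task) {
        String xid = getXid(); // captured on the submitting thread
        return () -> {
            String previous = getXid(); // whatever the worker thread had bound
            if (xid != null) {
                bind(xid);
            } else {
                unbind();
            }
            try {
                return task.get();
            } finally {
                // Restore the worker thread's previous state
                if (previous != null) {
                    bind(previous);
                } else {
                    unbind();
                }
            }
        };
    }

    // Returns {XID seen by a plain task, XID seen by a wrapped task}
    static String[] demo() {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            bind("xid-42");
            String plain = CompletableFuture.supplyAsync(TxContext::getXid, pool).join();
            String wrapped = CompletableFuture.supplyAsync(withCurrentXid(TxContext::getXid), pool).join();
            return new String[] {plain, wrapped};
        } finally {
            unbind();
            pool.shutdown();
        }
    }
}
```

Each worker thread gets its own connection, so each one becomes a separate branch of the same global transaction; that is fine for AT, but it multiplies branch registrations and lock requests.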

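6. Performance optimization in practice

6.1 Global lock optimization

```java
// Global lock optimizations
// (orderMapper, orderDetailMapper, logisticsMapper, messageService, statisticService,
//  cleanupService, errorRecoveryService and the helper methods are assumed)
@Component
@Slf4j
public class GlobalLockOptimizer {

    // 1. Lock granularity
    public void optimizeLockGranularity() {
        // Before: one statement locks every listed product row
        // UPDATE inventory SET stock = stock - 1 WHERE product_id IN (?, ?, ?)

        // After: lock only the one SKU row that actually changes
        // UPDATE inventory SET stock = stock - 1 WHERE product_id = ? AND sku_id = ?

        // Fewer rows per global lock means less lock contention
    }

    // 2. Lock retry settings.
    // The RM retries a conflicting global lock every seata.client.rm.lock.retry-interval
    // ms (default 10) up to seata.client.rm.lock.retry-times times (default 30); tune
    // them in application.yml. seata-spring-boot-starter already registers a
    // GlobalTransactionScanner, so declare one yourself only without the starter.
    @Configuration
    public static class SeataConfig {

        @Bean
        public GlobalTransactionScanner globalTransactionScanner() {
            return new GlobalTransactionScanner("order-service", "my_test_tx_group");
        }
    }

    // 3. Batch operations
    @GlobalTransactional(timeoutMills = 60000, name = "batchOperation")
    public void batchProcessOrders(List<Order> orders) {
        // Group by user so that each user's operations are merged
        Map<Long, List<Order>> ordersByUser = orders.stream()
                .collect(Collectors.groupingBy(Order::getUserId));

        for (Map.Entry<Long, List<Order>> entry : ordersByUser.entrySet()) {
            // If processUserOrders runs as one local transaction (e.g. a @Transactional
            // method on another bean), each user's orders form a single branch
            processUserOrders(entry.getKey(), entry.getValue());
        }
    }

    // 4. Read-only queries.
    // @GlobalTransactional has no readOnly attribute, and a pure read needs no global
    // transaction at all. Plain reads can see uncommitted global changes; if that
    // matters, read with SELECT ... FOR UPDATE through the Seata proxy inside a
    // @GlobalTransactional or @GlobalLock method.
    @Transactional(readOnly = true)
    public OrderDTO queryOrderWithDetails(Long orderId) {
        // Plain read, takes no global lock
        Order order = orderMapper.selectById(orderId);

        // Order details (replica)
        OrderDetail detail = orderDetailMapper.selectByOrderId(orderId);

        // Logistics (replica)
        Logistics logistics = logisticsMapper.selectByOrderId(orderId);

        return assembleOrderDTO(order, detail, logistics);
    }

    // 5. Offloading non-critical work.
    // AT's phase-2 commit is already asynchronous (RMs delete undo logs in the
    // background). Work handed to other threads runs without the XID, so keep all
    // transactional writes on the calling thread and offload only non-transactional
    // follow-ups.
    @GlobalTransactional(timeoutMills = 30000, name = "asyncCommitOrder")
    public OrderDTO createOrderWithAsyncFollowUp(OrderRequest request) {
        // Transactional writes, synchronously, with the XID bound
        Order order = createOrderPhase1(request);

        // Non-critical follow-ups. They start before the global commit, so ideally
        // trigger them from the caller once the global transaction has committed.
        asyncFollowUp(order);

        return convertToDTO(order);
    }

    private void asyncFollowUp(Order order) {
        CompletableFuture.runAsync(() -> {
            try {
                // Send a message
                messageService.sendOrderCreated(order);
                // Update statistics
                statisticService.updateOrderStatistic(order);
                // Clean up temporary data
                cleanupService.cleanTempData(order);
            } catch (Exception e) {
                log.error("Async follow-up failed, order: {}", order.getOrderNo(), e);
                // Record the error for a scheduled retry
                errorRecoveryService.recordError(order, e);
            }
        });
    }
}
```

Code Listing 17: Global lock optimization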
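A quick way to sanity-check lock retry settings is to compute the worst-case time a branch spends sleeping while waiting for a global lock. With Seata's default client settings (retry interval 10 ms, 30 retries) that is 300 ms, while the exponential backoff in the `waitForLock` sketch from section 4.1 (100 ms doubling, 3 retries) sleeps up to 700 ms. `LockWaitBudget` is a hypothetical helper.

```java
// Worst-case sleep time spent waiting for a global lock
// (ignores the time taken by the lock checks themselves).
final class LockWaitBudget {

    // Fixed interval: `times` retries, each preceded by `intervalMs` of sleep
    static long fixed(long intervalMs, int times) {
        return intervalMs * times;
    }

    // Exponential backoff: initialMs, 2 * initialMs, 4 * initialMs, ... for `times` retries
    static long exponential(long initialMs, int times) {
        long total = 0;
        long wait = initialMs;
        for (int i = 0; i < times; i++) {
            total += wait;
            wait *= 2;
        }
        return total;
    }
}
```

Keep this budget well below the global transaction timeout (`timeoutMills`), otherwise a transaction can time out while its branches are still waiting for locks.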

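6.2 Performance comparison

Test environment:

- 3 servers, 4 cores / 8 GB each
- MySQL 8.0
- Seata 1.5.2
- 100 concurrent threads
- Order-creation workload

Test results:

| Scenario | TPS | Avg response time | P99 response time | Global lock conflict rate |
|---|---|---|---|---|
| Plain XA | 85 | 350 ms | 1200 ms | 15% |
| Seata AT (default) | 420 | 85 ms | 320 ms | 8% |
| Seata AT (optimized) | 1250 | 28 ms | 95 ms | 2% |
| No transaction | 2850 | 12 ms | 45 ms | 0% |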
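The ratios between rows are easier to read when computed directly. The inputs below are exactly the TPS and average response time figures from the table; `BenchmarkRatios` is just an illustrative name.

```java
// Relative throughput and latency computed from the benchmark table.
final class BenchmarkRatios {

    static double ratio(double a, double b) {
        return a / b;
    }

    public static void main(String[] args) {
        // TPS: XA 85, AT default 420, AT optimized 1250, no transaction 2850
        System.out.printf("AT default vs XA TPS:   %.1fx%n", ratio(420, 85));              // 4.9x
        System.out.printf("AT optimized vs XA TPS: %.1fx%n", ratio(1250, 85));             // 14.7x
        System.out.printf("AT optimized vs no-tx:  %.0f%%%n", 100 * ratio(1250, 2850));    // 44%
        // Average latency: AT optimized 28 ms vs no transaction 12 ms
        System.out.printf("Latency overhead:       %.1fx%n", ratio(28, 12));               // 2.3x
    }
}
```

On these numbers, the tuned AT setup keeps roughly 44% of the no-transaction throughput, at about 2.3 times its average latency.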

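Optimization results compared:

Figure 5: Performance comparison

Optimization tips:

- Reduce lock granularity: lock individual rows rather than whole tables or wide ranges
- Set sensible lock retry and timeout values
- Batch operations to reduce the number of transactions
- Separate reads from writes: read-only queries need no global transaction or global lock
- Run non-critical work asynchronously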

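7. Solutions to common problems

7.1 Global lock conflicts

Problem: waiting for a global lock times out and a LockConflictException is reported

Solutions:

```java
// (lockService, warehouseService, jdbcTemplate and the helper methods are assumed)
@Component
@Slf4j
public class LockConflictSolver {

    // 1. Lock-wait tuning.
    // LockRetryController, LockRetryContext and setLockRetryController below are
    // hypothetical extension points for illustration; Seata itself is tuned with
    // seata.client.rm.lock.retry-interval and seata.client.rm.lock.retry-times.
    @Configuration
    public static class LockConfig {

        @Bean
        public GlobalTransactionScanner globalTransactionScanner() {
            // Increase lock retry count and interval
            GlobalTransactionScanner scanner = new GlobalTransactionScanner(...);

            // Custom retry strategy
            LockRetryController lockRetryController = new CustomLockRetryController();
            scanner.setLockRetryController(lockRetryController);

            return scanner;
        }
    }

    public static class CustomLockRetryController implements LockRetryController {

        @Override
        public boolean canRetry(LockRetryContext context) {
            // Choose the retry budget by business type
            String businessKey = context.getBusinessKey();
            if (businessKey.startsWith("ORDER")) {
                // Orders: at most 5 retries
                return context.getRetryCount() < 5;
            } else if (businessKey.startsWith("INVENTORY")) {
                // Inventory: at most 3 retries
                return context.getRetryCount() < 3;
            } else {
                // Everything else: at most 2 retries
                return context.getRetryCount() < 2;
            }
        }

        @Override
        public int getSleepMills(LockRetryContext context) {
            // Exponential backoff: 100, 200, 400, ... ms
            return 100 * (1 << context.getRetryCount());
        }
    }

    // 2. Deadlock detection and resolution
    @Scheduled(fixedDelay = 30000)
    public void detectAndSolveDeadLock() {
        // Transactions that have waited for a lock for more than 30 seconds
        List<LockWaitInfo> longWaitLocks = lockService.selectLongWaitLocks(30000);

        for (LockWaitInfo lockWait : longWaitLocks) {
            // Is it a deadlock?
            if (isDeadLock(lockWait)) {
                log.warn("Deadlock detected, transaction: {}, resource: {}",
                        lockWait.getXid(), lockWait.getResourceId());

                // Pick a victim (the transaction that started later)
                if (shouldBeVictim(lockWait)) {
                    // Roll back the victim
                    rollbackTransaction(lockWait.getXid());
                    log.info("Rolled back deadlock victim: {}", lockWait.getXid());
                }
            }
        }
    }

    // 3. Lock splitting.
    // Must run inside a global transaction: if stock runs out midway, the exception
    // at the end rolls back the warehouses that were already deducted.
    public void deductStockWithLockSplit(Long productId, Integer quantity) {
        // Before: lock the whole product row
        // UPDATE inventory SET stock = stock - ? WHERE product_id = ?

        // After: lock per warehouse
        // 1. Find the warehouses holding this product (copied so it can be sorted)
        List<WarehouseStock> stocks = new ArrayList<>(
                warehouseService.queryStockByProduct(productId));

        // 2. Lock warehouses in a fixed order (by ID) to avoid deadlocks
        stocks.sort(Comparator.comparing(WarehouseStock::getWarehouseId));

        for (WarehouseStock stock : stocks) {
            if (quantity <= 0) {
                break;
            }
            int deduct = Math.min(stock.getAvailable(), quantity);
            if (deduct > 0) {
                // Lock a single warehouse's stock row
                boolean success = warehouseService.deductStockWithLock(
                        stock.getWarehouseId(), productId, deduct);
                if (success) {
                    quantity -= deduct;
                }
            }
        }

        if (quantity > 0) {
            throw new InsufficientStockException("Insufficient stock");
        }
    }

    // 4. Optimistic locking.
    // Under AT this UPDATE still takes a global lock on the row; the version check
    // only makes conflicting business updates fail fast instead of overwriting.
    public boolean deductStockWithOptimisticLock(Long productId,
                                                 Integer quantity,
                                                 Integer version) {
        String sql = "UPDATE inventory SET " +
                "stock = stock - ?, " +
                "version = version + 1 " +
                "WHERE product_id = ? AND version = ? AND stock >= ?";

        int rows = jdbcTemplate.update(sql, quantity, productId, version, quantity);
        return rows > 0;
    }
}
```

Code Listing 18: Lock conflict solutions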
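The `isDeadLock` check above is left abstract. A standard way to implement it is cycle detection on a wait-for graph, where an edge A -> B means transaction A waits for a lock held by B; a cycle means no transaction in it can ever proceed. A minimal sketch with a hypothetical `WaitForGraph` class (as far as I know, Seata itself runs no such detector and instead bounds lock waits with retry limits and timeouts):

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical wait-for graph: an edge A -> B means transaction A waits for a lock held by B.
final class WaitForGraph {

    private final Map<String, Set<String>> waitsFor = new HashMap<>();

    void addWait(String waiter, String holder) {
        waitsFor.computeIfAbsent(waiter, k -> new HashSet<>()).add(holder);
    }

    // A deadlock exists iff the graph has a cycle; found with a DFS that tracks the current path.
    boolean hasDeadlock() {
        Set<String> done = new HashSet<>();
        Set<String> onPath = new HashSet<>();
        for (String tx : waitsFor.keySet()) {
            if (dfs(tx, done, onPath)) {
                return true;
            }
        }
        return false;
    }

    private boolean dfs(String tx, Set<String> done, Set<String> onPath) {
        if (onPath.contains(tx)) {
            return true;  // back edge: tx is already on the current path, so there is a cycle
        }
        if (done.contains(tx)) {
            return false; // fully explored earlier without finding a cycle
        }
        onPath.add(tx);
        for (String next : waitsFor.getOrDefault(tx, Set.of())) {
            if (dfs(next, done, onPath)) {
                return true;
            }
        }
        onPath.remove(tx);
        done.add(tx);
        return false;
    }
}
```

Once a cycle is found, the victim rule from the code above (roll back the transaction that started later) breaks it.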

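7.2 Undo Log table too large

Problem: the undo_log table grows too fast and the disk runs out of space

Solutions:

```java
// (jdbcTemplate, alertService, compress() and createDataSource() are assumed)
@Component
@Slf4j
public class UndoLogManager {

    // 1. Periodic cleanup.
    // Normally the RM deletes a branch's undo_log rows itself in phase 2, so rows
    // that linger usually belong to failed or hanging global transactions.
    @Scheduled(cron = "0 0 3 * * ?") // every day at 03:00
    public void cleanExpiredUndoLogs() {
        log.info("Start cleaning expired undo logs...");

        int days = 7; // keep 7 days
        Timestamp expireTime = new Timestamp(
                System.currentTimeMillis() - days * 24 * 60 * 60 * 1000L);

        // Delete in batches to avoid one huge transaction
        int totalDeleted = 0;
        int batchSize = 1000;

        while (true) {
            // log_status = 1 marks defensive rows (written when a rollback found no undo
            // log for its branch); they are safe to purge. Old rows with log_status = 0
            // belong to global transactions that never finished: investigate them instead
            // of deleting them, or they can no longer be rolled back.
            String deleteSql = "DELETE FROM undo_log " +
                    "WHERE log_created < ? " +
                    "AND log_status = 1 " +
                    "LIMIT ?";

            int deleted = jdbcTemplate.update(deleteSql, expireTime, batchSize);
            totalDeleted += deleted;

            if (deleted < batchSize) {
                break; // nothing more to delete
            }

            // Pause between batches to avoid holding locks for long
            try {
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                break;
            }
        }

        log.info("Expired undo log cleanup finished, {} rows deleted", totalDeleted);
    }

    // 2. Compressing undo logs.
    // Recent Seata versions can also compress rollback_info themselves
    // (the seata.client.undo.compress.* settings).
    public void compressUndoLog(UndoLog undoLog) {
        // Compress the rollback information
        byte[] compressed = compress(undoLog.getRollbackInfo());
        undoLog.setRollbackInfo(compressed);

        // Drop fields a defensive (log_status = 1) row does not need
        if (undoLog.getLogStatus() == 1) {
            undoLog.setContext(null);
            undoLog.setExt(null);
        }
    }

    // 3. Splitting into multiple tables.
    // Caution: undo_log rows are written in the SAME local transaction as the business
    // rows they protect, so they must stay in the business database. Spreading them
    // over other data sources, as this config does, breaks that atomicity; at most,
    // split undo_log into several tables inside the same database.
    @Configuration
    public static class UndoLogShardingConfig {

        @Bean
        public DataSource dataSource() {
            // 32 shards, routed by a hash of xid
            Map<String, DataSource> dataSourceMap = new HashMap<>();
            for (int i = 0; i < 32; i++) {
                // Unpadded suffixes, so the names match ds_${0..31} / undo_log_${0..31} below
                DataSource ds = createDataSource("undo_log_" + i);
                dataSourceMap.put("ds_" + i, ds);
            }

            // Sharding rule: hash of xid. Masking the sign bit keeps the index
            // non-negative; a bare hashCode() % 32 can be negative.
            ShardingRuleConfiguration shardingRuleConfig = new ShardingRuleConfiguration();
            TableRuleConfiguration tableRuleConfig =
                    new TableRuleConfiguration("undo_log", "ds_${0..31}.undo_log_${0..31}");
            tableRuleConfig.setDatabaseShardingStrategyConfig(new InlineShardingStrategyConfiguration(
                    "xid", "ds_${(xid.hashCode() & Integer.MAX_VALUE) % 32}"));
            tableRuleConfig.setTableShardingStrategyConfig(new InlineShardingStrategyConfiguration(
                    "xid", "undo_log_${(xid.hashCode() & Integer.MAX_VALUE) % 32}"));
            shardingRuleConfig.getTableRuleConfigs().add(tableRuleConfig);

            return ShardingDataSourceFactory.createDataSource(
                    dataSourceMap, shardingRuleConfig, new Properties());
        }
    }

    // 4. Monitoring and alerting
    @Scheduled(fixedDelay = 60000) // check every minute
    public void monitorUndoLogSize() {
        // Size of the undo_log tables in the current schema
        // (TABLE is a reserved word in MySQL, hence the alias tbl)
        String sizeSql = "SELECT " +
                "table_schema AS db, " +
                "table_name AS tbl, " +
                "ROUND((data_length + index_length) / 1024 / 1024, 2) AS size_mb " +
                "FROM information_schema.tables " +
                "WHERE table_schema = DATABASE() AND table_name LIKE 'undo_log%'";

        List<Map<String, Object>> sizes = jdbcTemplate.queryForList(sizeSql);

        for (Map<String, Object> size : sizes) {
            String tableName = (String) size.get("tbl");
            // ROUND() returns a DECIMAL (BigDecimal in JDBC), so go through Number
            double sizeMb = ((Number) size.get("size_mb")).doubleValue();

            if (sizeMb > 1024) { // over 1 GB: alert
                alertService.sendAlert("UndoLog table too large",
                        String.format("Table %s is %.2f MB, consider cleaning up", tableName, sizeMb));
            }

            if (sizeMb > 10240) { // over 10 GB: urgent alert
                alertService.sendUrgentAlert("UndoLog table too large",
                        String.format("Table %s is %.2f MB, clean up immediately", tableName, sizeMb));
                // Automatic cleanup
                emergencyCleanup(tableName);
            }
        }
    }

    // 5. Emergency cleanup.
    // Last resort only: this also deletes log_status = 0 rows of unfinished global
    // transactions, which then can no longer be rolled back automatically.
    public void emergencyCleanup(String tableName) {
        log.warn("Emergency cleanup of undo log table: {}", tableName);

        // Delete rows older than 7 days
        String deleteSql = String.format(
                "DELETE FROM %s WHERE log_created < DATE_SUB(NOW(), INTERVAL 7 DAY)",
                tableName);
        int deleted = jdbcTemplate.update(deleteSql);
        log.info("Emergency cleanup finished, {} rows deleted", deleted);

        // Rebuild the table to reclaim disk space (best run off-peak)
        String optimizeSql = String.format("OPTIMIZE TABLE %s", tableName);
        jdbcTemplate.execute(optimizeSql);
        log.info("Table optimization finished");
    }
}
```

Code Listing 19: Undo Log management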
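A sharding rule based on `hashCode() % N` has a subtle trap in Java: `hashCode()` can be negative, and the `%` operator keeps the sign of the dividend, so the computed shard index can be negative and point at a shard that does not exist. `Math.floorMod` always returns a value in `[0, N)`. A small sketch (`ShardIndex` is a hypothetical helper):

```java
// Shard index for a string key such as an xid.
final class ShardIndex {

    // Plain remainder: (-5 % 32) == -5 in Java, i.e. an invalid shard
    static int naive(int hash, int shards) {
        return hash % shards;
    }

    // Floor modulus: always in [0, shards)
    static int safe(int hash, int shards) {
        return Math.floorMod(hash, shards);
    }

    static int forKey(String key, int shards) {
        return safe(key.hashCode(), shards);
    }
}
```

`Math.abs(hash) % n` is not a fix either, because `Math.abs(Integer.MIN_VALUE)` is still negative; masking the sign bit (`hash & Integer.MAX_VALUE`) or `floorMod` both avoid that edge case.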

8. 生产环境配置

8.1 高可用配置

# seata-server cluster configuration (application.yml of every TC node)
seata:
  server:
    # High availability: run several TC nodes (seata-server-1:8091, seata-server-2:8091,
    # seata-server-3:8091) that share the db store below and register with the same
    # registry (Nacos, as in the client configuration), so clients can use any live node.
    # db mode needs no extra HA switch; Seata 2.x also offers a raft store mode, set up differently.
    # Transaction recovery: retry periods in milliseconds
    recovery:
      committing-retry-period: 1000
      async-committing-retry-period: 1000
      rollbacking-retry-period: 1000
      timeout-retry-period: 1000
  # Storage mode
  store:
    mode: db
    # Session and lock data use the same db store
    session:
      mode: db
    lock:
      mode: db
    db:
      datasource: druid
      db-type: mysql
      driver-class-name: com.mysql.cj.jdbc.Driver
      url: jdbc:mysql://mysql-ha:3306/seata?useUnicode=true
      user: seata
      password: ${DB_PASSWORD}
      min-conn: 5
      max-conn: 100
      global-table: global_table
      branch-table: branch_table
      lock-table: lock_table
      distributed-lock-table: distributed_lock
      query-limit: 100
    # Redis store: only read when one of the modes above is set to redis
    redis:
      mode: single
      database: 0
      password: ${REDIS_PASSWORD}
      max-total: 100
      min-conn: 10
      max-conn: 50
      single:
        host: ${REDIS_HOST}
        port: 6379
  # Transport
  transport:
    type: TCP
    server: NIO
    heartbeat: true
    thread-factory:
      boss-thread-size: 1
      worker-thread-size: 8
    shutdown:
      wait: 3
  # Service mapping (transaction group -> TC cluster)
  service:
    vgroup-mapping:
      order-service-tx-group: default
      inventory-service-tx-group: default
      account-service-tx-group: default
    disable-global-transaction: false
  # Metrics
  metrics:
    enabled: true
    registry-type: compact
    exporter-list: prometheus
    exporter-prometheus-port: 9898

Code Listing 20: Seata Server high-availability configuration
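Because every TC node in this setup reads and writes the same `global_table`, `branch_table` and `lock_table`, a client can in principle hand a request to any live node. The sketch below illustrates two selection strategies: route by XID to the node that started the global transaction while it is still alive, otherwise pick a random live node. It assumes the `ip:port:transactionId` XID layout Seata uses; `TcNodeSelector` is a hypothetical helper, not Seata's load-balancer SPI, and in practice the node list comes from the registry.

```java
import java.util.List;
import java.util.Optional;
import java.util.concurrent.ThreadLocalRandom;

/**
 * Hypothetical helper illustrating TC node selection in a shared-db-store cluster.
 * Not Seata's LoadBalance SPI; the live node list would normally come from the registry.
 */
final class TcNodeSelector {

    private TcNodeSelector() {
    }

    /** Returns the "host:port" prefix of an XID shaped like "host:port:transactionId". */
    static Optional<String> ownerOf(String xid) {
        if (xid == null) {
            return Optional.empty();
        }
        int lastColon = xid.lastIndexOf(':');
        return lastColon > 0 ? Optional.of(xid.substring(0, lastColon)) : Optional.empty();
    }

    /** Prefers the node that issued the XID while it is alive, otherwise a random live node. */
    static String select(String xid, List<String> liveNodes) {
        if (liveNodes.isEmpty()) {
            throw new IllegalStateException("no live TC node");
        }
        Optional<String> owner = ownerOf(xid);
        if (owner.isPresent() && liveNodes.contains(owner.get())) {
            return owner.get();
        }
        return liveNodes.get(ThreadLocalRandom.current().nextInt(liveNodes.size()));
    }
}
```

Preferring the XID's owner keeps one transaction's traffic on one node when possible; the random fallback is what lets the cluster survive a node failure.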

8.2 Client Tuning Configuration

# Client configuration (application.yml of each business service)
seata:
  enabled: true
  application-id: ${spring.application.name}
  tx-service-group: ${spring.application.name}-tx-group
  # Data source proxy
  enable-auto-data-source-proxy: true
  use-jdk-proxy: false
  data-source-proxy-mode: AT
  # Global transaction settings
  service:
    vgroup-mapping:
      # Spring does not resolve ${...} placeholders in property keys, so the key must be the
      # literal group name, e.g. order-service-tx-group for spring.application.name=order-service
      order-service-tx-group: default
    disable-global-transaction: false
  # Client (RM/TM) settings
  client:
    rm:
      async-commit-buffer-limit: 10000
      report-retry-count: 5
      table-meta-check-enable: false
      report-success-enable: false
      saga-branch-register-enable: false
      saga-json-parser: fastjson
      saga-retry-persist-mode-update: false
      saga-compensate-persist-mode-update: false
      lock:
        retry-interval: 10        # ms between global lock retries
        retry-times: 30
        retry-policy-branch-rollback-on-conflict: true
    tm:
      commit-retry-count: 5
      rollback-retry-count: 5
      default-global-transaction-timeout: 60000   # ms
      degrade-check: false
      degrade-check-period: 2000
      degrade-check-allow-times: 10
    undo:
      data-validation: true
      log-serialization: jackson
      log-table: undo_log
      only-care-update-columns: true
  log:
    exception-rate: 100
  # Transport
  transport:
    type: TCP
    server: NIO
    heartbeat: true
    serialization: seata
    compressor: none
    enable-client-batch-send-request: true
  # Configuration center
  config:
    type: nacos
    nacos:
      namespace: ${spring.cloud.nacos.config.namespace}
      server-addr: ${spring.cloud.nacos.config.server-addr}
      group: SEATA_GROUP
      username: ${spring.cloud.nacos.config.username}
      password: ${spring.cloud.nacos.config.password}
      data-id: seata.properties
  # Registry
  registry:
    type: nacos
    # Load balancing across TC nodes (Seata 1.x key name; check it against your version)
    load-balance: RandomLoadBalance
    nacos:
      application: seata-server
      server-addr: ${spring.cloud.nacos.discovery.server-addr}
      namespace: ${spring.cloud.nacos.discovery.namespace}
      group: SEATA_GROUP
      username: ${spring.cloud.nacos.discovery.username}
      password: ${spring.cloud.nacos.discovery.password}

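# Circuit breaking is not a Seata client feature; Seata's own degradation switch is
# seata.client.tm.degrade-check above. If calls are guarded with Resilience4j, the window
# settings go under its own prefix (the instance name seataTc is hypothetical):
resilience4j:
  circuitbreaker:
    instances:
      seataTc:
        slidingWindowSize: 20
        minimumNumberOfCalls: 10
        permittedNumberOfCallsInHalfOpenState: 5
        waitDurationInOpenState: 60s
  timelimiter:
    instances:
      seataTc:
        timeoutDuration: 10s

Code Listing 21: Client tuning configuration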
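`client.rm.lock.retry-interval` and `retry-times` bound how long a branch keeps retrying when the global lock it needs is held by another global transaction. The loop below is a simplified illustration of that bounded retry, not Seata's internal implementation, and it treats the count as the total number of attempts, which is an assumption. With the values above (30 attempts, 10 ms apart) the worst case adds about 29 × 10 = 290 ms of sleeping before the branch fails.

```java
import java.util.function.BooleanSupplier;

/**
 * Simplified illustration of a bounded global-lock retry loop, in the spirit of
 * client.rm.lock.retry-interval / retry-times. Not Seata's internal implementation.
 */
final class LockRetry {

    private LockRetry() {
    }

    /**
     * @param tryLock         one attempt to take (or verify) the lock; true on success
     * @param maxAttempts     total attempts including the first (assumed meaning of retry-times)
     * @param retryIntervalMs sleep between attempts
     * @return the attempt number that succeeded, or -1 if every attempt conflicted
     */
    static int acquire(BooleanSupplier tryLock, int maxAttempts, long retryIntervalMs) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            if (tryLock.getAsBoolean()) {
                return attempt;
            }
            if (attempt < maxAttempts) { // no pointless sleep after the last attempt
                try {
                    Thread.sleep(retryIntervalMs);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return -1;
                }
            }
        }
        return -1;
    }
}
```

Raising the attempt count trades latency for fewer failures under contention; when conflicts are frequent, reducing how many transactions touch the same hot rows usually helps more than waiting longer.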

9. Monitoring and Alerting

9.1 Key Monitoring Metrics

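# 1) Seata metrics export (seata-server application.yml)
seata:
  metrics:
    enabled: true
    registry-type: compact
    exporter-list: prometheus
    exporter-prometheus-port: 9898
---
# 2) Key metrics to watch. This list is a dashboard checklist, not a Seata setting; the names
#    are the ones the alert rules below assume, so map them to the series your Seata version
#    actually exposes on port 9898.
key-metrics:
  - name: seata.transaction.active.count
    help: Active transactions
    type: GAUGE
  - name: seata.transaction.committed.total
    help: Total committed transactions
    type: COUNTER
  - name: seata.transaction.rolledback.total
    help: Total rolled-back transactions
    type: COUNTER
  - name: seata.transaction.rt.milliseconds
    help: Transaction response time
    type: HISTOGRAM
    buckets: [10, 50, 100, 200, 500, 1000, 2000, 5000]
  - name: seata.lock.active.count
    help: Active locks
    type: GAUGE
  - name: seata.lock.conflict.total
    help: Lock conflicts
    type: COUNTER
  - name: seata.branch.transaction.active.count
    help: Active branch transactions
    type: GAUGE
  - name: seata.undo.log.count
    help: Undo Log rows (collected from the business databases; the TC does not see undo_log)
    type: GAUGE
---
# 3) Prometheus alerting rules (rule file loaded by Prometheus)
groups:
  - name: seata
    rules:
      - alert: HighTransactionCount
        expr: seata_transaction_active_count > 1000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Too many active transactions"
          description: "Active transactions: {{ $value }}"
      - alert: HighTransactionRT
        # histogram_quantile never returns more than the highest finite bucket (5000 here),
        # so "> 5000" could never fire; ">= 5000" means "p95 at or beyond the top bucket"
        expr: histogram_quantile(0.95, rate(seata_transaction_rt_milliseconds_bucket[5m])) >= 5000
        for: 2m
        labels:
          severity: critical
        annotations:
          summary: "Transaction response time too high"
          description: "p95 response time: {{ $value }}ms"
      - alert: HighLockConflict
        expr: rate(seata_lock_conflict_total[5m]) > 10
        for: 2m
        labels:
          severity: warning
        annotations:
          summary: "Lock conflict rate too high"
          description: "Lock conflicts: {{ $value }}/s"
      - alert: HighUndoLogCount
        expr: seata_undo_log_count > 1000000
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "Too many Undo Log rows"
          description: "Current Undo Log rows: {{ $value }}"

Code Listing 22: Monitoring configuration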
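The `HighTransactionRT` rule relies on `histogram_quantile`, which never sees individual samples: it finds the bucket that contains the target rank and interpolates linearly inside it. The sketch below re-implements that estimate in plain Java for a single series (a simplification of what Prometheus does) to show two consequences for the buckets configured above: a p95 of 750 ms only means "somewhere between 500 and 1000 ms, interpolated", and once the rank lands in the `+Inf` bucket the result is pinned at the highest finite bound, 5000.

```java
/**
 * Plain-Java re-implementation of the estimate behind PromQL's histogram_quantile for one
 * series of cumulative "le" buckets. Simplified: assumes counts are already monotonic.
 */
final class HistogramQuantile {

    private HistogramQuantile() {
    }

    /**
     * @param q           quantile in [0, 1], e.g. 0.95
     * @param upperBounds bucket upper bounds in ascending order; the last one must be +Inf
     * @param cumulative  cumulative count (or rate) per bucket, same length as upperBounds
     */
    static double estimate(double q, double[] upperBounds, double[] cumulative) {
        int n = upperBounds.length;
        if (n < 2 || cumulative.length != n || upperBounds[n - 1] != Double.POSITIVE_INFINITY) {
            throw new IllegalArgumentException("need finite buckets followed by a +Inf bucket");
        }
        if (q < 0) {
            return Double.NEGATIVE_INFINITY;
        }
        if (q > 1) {
            return Double.POSITIVE_INFINITY;
        }
        double observations = cumulative[n - 1];
        if (observations == 0) {
            return Double.NaN;
        }
        double rank = q * observations;
        int b = 0; // first bucket whose cumulative count reaches the rank
        while (b < n - 1 && cumulative[b] < rank) {
            b++;
        }
        if (b == n - 1) {
            return upperBounds[n - 2]; // rank is in the +Inf bucket: report the highest finite bound
        }
        if (b == 0 && upperBounds[0] <= 0) {
            return upperBounds[0];
        }
        double bucketStart = b == 0 ? 0 : upperBounds[b - 1];
        double countBefore = b == 0 ? 0 : cumulative[b - 1];
        double countInBucket = cumulative[b] - countBefore;
        // Linear interpolation, assuming observations are spread evenly inside the bucket
        return bucketStart + (upperBounds[b] - bucketStart) * ((rank - countBefore) / countInBucket);
    }
}
```

For example, with 100 observations whose cumulative counts for the buckets 10, 50, 100, 200, 500, 1000, 2000, 5000, +Inf are 10, 40, 70, 85, 93, 97, 99, 100, 100, `estimate(0.95, ...)` returns 750: rank 95 falls in the 500 to 1000 bucket, two observations into its four.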

9.2 Health Checks

java

import java.util.HashMap;
import java.util.List;
import java.util.Map;

import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.jdbc.core.JdbcTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/api/health")
@Slf4j
public class SeataHealthController {

    // Coordinator, session and lock-manager objects live inside the seata-server (TC) process,
    // so a business service cannot call them directly. With store.mode = db the same state is
    // in global_table, branch_table and lock_table; this JdbcTemplate points at that database.
    // The bean name "seataStoreJdbcTemplate" is an assumption.
    @Autowired
    @Qualifier("seataStoreJdbcTemplate")
    private JdbcTemplate seataJdbc;

    // checkTcConnection(), checkTmStatus(), checkRmStatus(), getUndoLogStats() and
    // calculateLockConflictRate() are helper methods not shown in this listing.

    @GetMapping("/seata")
    public Map<String, Object> seataHealth() {
        Map<String, Object> health = new HashMap<>();
        try {
            health.put("tcConnection", checkTcConnection());          // TC connection
            health.put("tmStatus", checkTmStatus());                  // TM status
            health.put("rmStatus", checkRmStatus());                  // RM status
            health.put("transactionStats", getTransactionStats(100)); // transaction status
            health.put("lockStats", getLockStats(100));               // lock status
            health.put("undoLogStats", getUndoLogStats());            // Undo Log status
        } catch (Exception e) {
            health.put("error", e.getMessage());
            log.error("Seata health check failed", e);
        }
        return health;
    }

    @GetMapping("/metrics")
    public Map<String, Object> seataMetrics() {
        Map<String, Object> metrics = new HashMap<>();
        // Transaction metrics: global_table only holds unfinished sessions, so committed and
        // rolled-back totals and RT percentiles (avg/p95/p99) come from the TC's Prometheus
        // exporter on port 9898 rather than from this endpoint
        metrics.put("activeTransactions",
                seataJdbc.queryForObject("SELECT COUNT(*) FROM global_table", Long.class));
        metrics.put("transactionsByStatus",
                seataJdbc.queryForList("SELECT status, COUNT(*) AS cnt FROM global_table GROUP BY status"));
        // Lock metrics
        metrics.put("activeLocks",
                seataJdbc.queryForObject("SELECT COUNT(*) FROM lock_table", Long.class));
        metrics.put("lockConflictRate", calculateLockConflictRate());
        return metrics;
    }

    // Transaction statistics endpoint (status is the numeric GlobalStatus code)
    @GetMapping("/transactions")
    public List<Map<String, Object>> getTransactionStats(@RequestParam(defaultValue = "100") int limit) {
        List<Map<String, Object>> rows = seataJdbc.queryForList(
                "SELECT g.xid, g.status, g.begin_time, g.timeout, " +
                "(SELECT COUNT(*) FROM branch_table b WHERE b.xid = g.xid) AS branch_count " +
                "FROM global_table g ORDER BY g.begin_time LIMIT ?", limit);
        long now = System.currentTimeMillis();
        for (Map<String, Object> row : rows) {
            // begin_time is stored as epoch milliseconds
            row.put("duration", now - ((Number) row.get("begin_time")).longValue());
        }
        return rows;
    }

    // Lock statistics endpoint
    @GetMapping("/locks")
    public List<Map<String, Object>> getLockStats(@RequestParam(defaultValue = "100") int limit) {
        return seataJdbc.queryForList(
                "SELECT resource_id, xid, transaction_id, branch_id, row_key, gmt_create " +
                "FROM lock_table ORDER BY gmt_create LIMIT ?", limit);
    }
}

Code Listing 23: Health check endpoints
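One thing the `/transactions` data is good for is spotting global transactions that have outlived their own timeout, the hanging transactions described at the start of this article. The sketch below performs only that filtering step in plain Java. It models rows on the `xid`, `begin_time` and `timeout` columns of Seata's `global_table` (both times in milliseconds); the class names and the grace period are hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Sketch: list global transactions that are older than their timeout plus a grace period.
 * Fields mirror the xid, begin_time and timeout columns of Seata's global_table.
 */
final class OverdueTransactions {

    private OverdueTransactions() {
    }

    /** One global_table row (begin time and timeout in milliseconds). */
    static final class GlobalTx {
        final String xid;
        final long beginTimeMillis;
        final long timeoutMillis;

        GlobalTx(String xid, long beginTimeMillis, long timeoutMillis) {
            this.xid = xid;
            this.beginTimeMillis = beginTimeMillis;
            this.timeoutMillis = timeoutMillis;
        }
    }

    /** Returns the XIDs of overdue transactions, oldest first. */
    static List<String> overdue(List<GlobalTx> txs, long nowMillis, long graceMillis) {
        List<GlobalTx> hits = new ArrayList<>();
        for (GlobalTx tx : txs) {
            long age = nowMillis - tx.beginTimeMillis;
            if (age > tx.timeoutMillis + graceMillis) {
                hits.add(tx);
            }
        }
        hits.sort(Comparator.comparingLong(tx -> tx.beginTimeMillis));
        List<String> xids = new ArrayList<>();
        for (GlobalTx tx : hits) {
            xids.add(tx.xid);
        }
        return xids;
    }
}
```

With the client default `default-global-transaction-timeout: 60000` shown earlier, a grace period of a minute or so avoids flagging transactions that the TC should still roll back on its own through its timeout check.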

10. Selection Guide

10.1 AT Mode vs TCC vs Saga

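| Feature | AT mode | TCC mode | Saga mode | Recommended scenario |
|---|---|---|---|---|
| Intrusiveness | Non-intrusive | Highly intrusive | Moderately intrusive | AT for new systems, TCC for legacy systems |
| Consistency | Strong | Strong | Eventual | TCC for finance, AT/Saga for e-commerce |
| Performance | Medium | High | High | Saga for high concurrency, TCC where strong consistency is required |
| Complexity | Low | High | Medium | AT for simple business logic, TCC for complex logic |
| Rollback | Automatic | Manual | Compensating actions | AT when rollback is simple, Saga when it is complex |
| Locking | Global locks | No global locks | No global locks | TCC/Saga when deadlocks must be avoided |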
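The "Intrusiveness" and "Rollback" rows are easiest to see in code. With AT the undo log is generated for you; with TCC you write Try, Confirm and Cancel yourself and must make them safe against retries and reordering. The plain-Java sketch below is an in-memory stock example of my own, not Seata's TCC annotations: Try freezes stock, Confirm consumes it, Cancel releases it, and a per-XID phase record makes Confirm/Cancel idempotent, accepts a Cancel that arrives before any Try (an "empty rollback"), and refuses a Try that arrives after that Cancel (a "suspended" transaction).

```java
import java.util.HashMap;
import java.util.Map;

/**
 * In-memory illustration of a TCC participant for stock (not Seata's TCC annotations).
 * Try freezes stock, Confirm consumes the frozen amount, Cancel releases it.
 */
final class StockTccParticipant {

    private enum Phase { TRIED, CONFIRMED, CANCELLED }

    private int available;
    private int frozen;
    private final Map<String, Phase> phases = new HashMap<>();   // per-XID state; a table in practice
    private final Map<String, Integer> amounts = new HashMap<>();

    StockTccParticipant(int initialStock) {
        this.available = initialStock;
    }

    synchronized boolean tryDeduct(String xid, int qty) {
        Phase phase = phases.get(xid);
        if (phase == Phase.CANCELLED) {
            return false; // suspension guard: Cancel already ran for this XID
        }
        if (phase != null) {
            return true; // duplicate Try: already applied
        }
        if (qty <= 0 || qty > available) {
            return false;
        }
        available -= qty;
        frozen += qty;
        phases.put(xid, Phase.TRIED);
        amounts.put(xid, qty);
        return true;
    }

    synchronized void confirm(String xid) {
        if (phases.get(xid) != Phase.TRIED) {
            return; // idempotent: never tried, or already finished
        }
        frozen -= amounts.get(xid);
        phases.put(xid, Phase.CONFIRMED);
    }

    synchronized void cancel(String xid) {
        Phase phase = phases.get(xid);
        if (phase == null) {
            phases.put(xid, Phase.CANCELLED); // empty rollback: record it so a late Try is refused
            return;
        }
        if (phase != Phase.TRIED) {
            return; // idempotent: already confirmed or cancelled
        }
        int qty = amounts.get(xid);
        frozen -= qty;
        available += qty;
        phases.put(xid, Phase.CANCELLED);
    }

    synchronized int available() {
        return available;
    }

    synchronized int frozen() {
        return frozen;
    }
}
```

None of this bookkeeping is needed in AT mode, which is exactly why the table rates TCC as highly intrusive.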

10.2 My "Seata Ground Rules"

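- Avoid it if you can: first try to design distributed transactions out of the system
- Prefer AT over TCC: AT is non-intrusive, while TCC is complex to implement
- Keep lock scope small: lock individual rows (e.g. update by primary key) and avoid statements that lock whole tables
- Set sensible timeouts: size them to each business flow
- Monitoring is mandatory: no monitoring, no go-live
- Clean up undo logs: purge the undo_log table regularly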

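11. Final Words

Seata AT mode is an excellent solution for distributed transactions in microservices, but it is not a silver bullet. Only by understanding how it works, designing carefully and monitoring continuously can you get the most out of this powerful tool.

I have seen too many teams stumble here: some hit global lock deadlocks, some had their Undo Log explode, and some could not reach their performance targets.

Remember: Seata is a tool, not magic. Design a solution that fits your business, then monitor and optimize it; that is the right way to go.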

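📚 Recommended Reading

Official documentation

Source code study

Best practices

Monitoring tools

- **Seata Dashboard** - Seata monitoring dashboard
- **Prometheus monitoring** - metrics monitoring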

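Final advice: start with simple scenarios and move on to more complex designs only once you understand the principles. Set up monitoring, configure sensible timeouts and retries, and clean up the Undo Log regularly. Remember: optimizing distributed transactions is an ongoing process, not a one-time task.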